Chapter 7. Monitoring and Feedback Loop

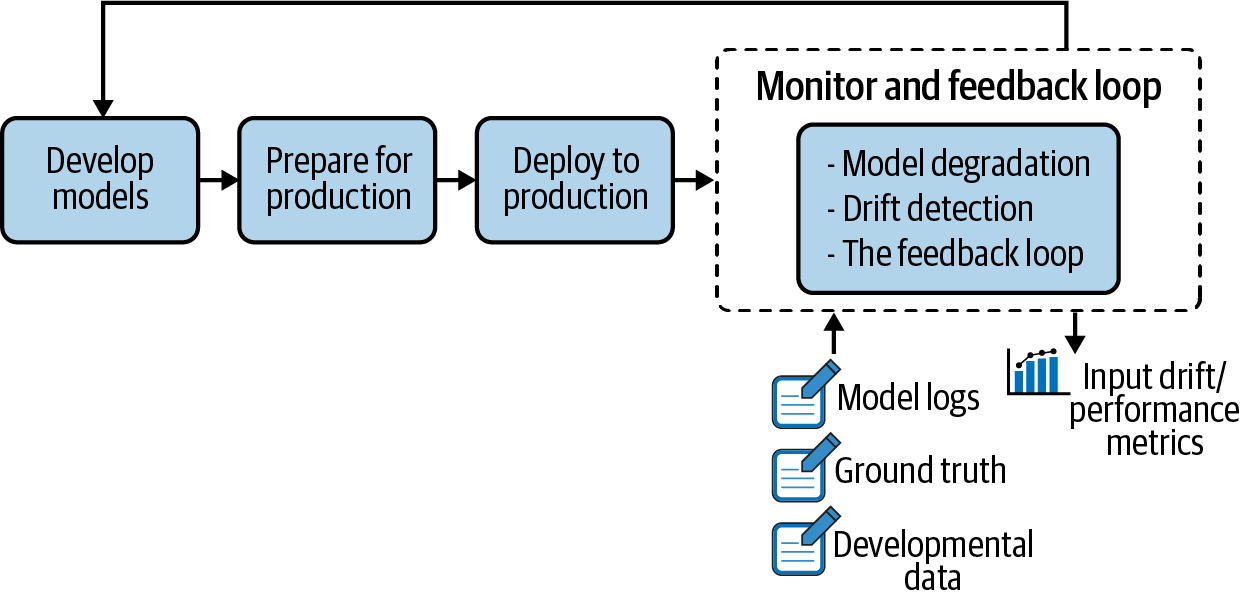

When a machine learning model is deployed in production, it can start degrading in quality fast—and without warning—until it’s too late (i.e., it’s had a potentially negative impact on the business). That’s why model monitoring is a crucial step in the ML model life cycle and a critical piece of MLOps (illustrated in Figure 7-1 as a part of the overall life cycle).

Figure 7-1. Monitoring and feedback loop highlighted in the larger context of the ML project life cycle

Machine learning models need to be monitored at two levels:

- At the resource level, which includes ensuring the model is running correctly in the production environment. Key questions include: Is the system alive? Are the CPU, RAM, network usage, and disk space as expected? Are requests being processed at the expected rate?

- At the performance level, that is, monitoring whether the model remains pertinent over time. Key questions include: Is the model still an accurate representation of the pattern of new incoming data? Is it performing as well as it did during the design phase?
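The resource-level questions above map naturally onto threshold checks over a periodic snapshot of host and service metrics. The sketch below is illustrative only: the `ResourceSnapshot` schema, the `check_resources` function, and every threshold value are hypothetical assumptions, not part of any particular monitoring tool. In practice these readings would come from whatever metrics collector the deployment already uses.

```python
from dataclasses import dataclass


@dataclass
class ResourceSnapshot:
    """One reading of the serving host's health (hypothetical schema)."""
    alive: bool              # did the service answer its health check?
    cpu_percent: float
    ram_percent: float
    disk_percent: float
    network_mbps: float
    requests_per_sec: float


# Hypothetical upper limits; real values depend on the hardware and the SLA.
UPPER_LIMITS = {
    "cpu_percent": 85.0,
    "ram_percent": 90.0,
    "disk_percent": 80.0,
    "network_mbps": 800.0,
}
# Hypothetical band for "requests processed at the expected rate".
EXPECTED_RPS_RANGE = (5.0, 500.0)


def check_resources(snap: ResourceSnapshot) -> list[str]:
    """Return human-readable alerts; an empty list means all checks passed."""
    if not snap.alive:
        # Nothing else is meaningful if the service is down.
        return ["service is not responding"]
    alerts = []
    for metric, limit in UPPER_LIMITS.items():
        value = getattr(snap, metric)
        if value > limit:
            alerts.append(f"{metric}={value:.1f} exceeds {limit:.1f}")
    low, high = EXPECTED_RPS_RANGE
    if not low <= snap.requests_per_sec <= high:
        alerts.append(
            f"request rate {snap.requests_per_sec:.1f}/s outside [{low}, {high}]"
        )
    return alerts


if __name__ == "__main__":
    snap = ResourceSnapshot(alive=True, cpu_percent=92.0, ram_percent=40.0,
                            disk_percent=50.0, network_mbps=10.0,
                            requests_per_sec=120.0)
    print(check_resources(snap))  # ['cpu_percent=92.0 exceeds 85.0']
```

Keeping the check as "snapshot in, alerts out" separates the monitoring logic from how metrics are gathered and how alerts are delivered.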

The first level is a traditional DevOps topic that has been extensively addressed in the literature (and was covered in Chapter 6). The second level, however, is more complicated. Why? Because how well a model performs is a reflection of the data used to train it; in particular, how representative that training ...
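One way to make the question "is the training data still representative?" concrete is to compare a feature's distribution in the training data with its distribution in recent production inputs. The sketch below uses the Population Stability Index (PSI) as one possible drift measure; it is a technique chosen here for illustration, not necessarily the one this chapter goes on to recommend. The function names, the quantile-based binning, and the `eps` smoothing are illustrative assumptions.

```python
import bisect
import math


def bin_fractions(values, cut_points):
    """Share of `values` in each bin; the outer bins are open-ended."""
    counts = [0] * (len(cut_points) + 1)
    for v in values:
        # bisect_right gives the index of the bin that v falls into.
        counts[bisect.bisect_right(cut_points, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI of `current` relative to `reference` (e.g., training vs. production)."""
    if not reference or not current:
        raise ValueError("both samples must be non-empty")
    ref_sorted = sorted(reference)
    # Interior cut points at reference quantiles: ~1/n_bins of training data per bin.
    cut_points = [ref_sorted[len(ref_sorted) * k // n_bins] for k in range(1, n_bins)]
    expected = bin_fractions(reference, cut_points)
    actual = bin_fractions(current, cut_points)
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp empty bins to eps so the logarithm stays finite.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


if __name__ == "__main__":
    training = [i / 1000 for i in range(1000)]           # roughly uniform on [0, 1)
    production = [0.5 + i / 2000 for i in range(1000)]   # shifted to [0.5, 1)
    print(f"no shift: {population_stability_index(training, training):.3f}")
    print(f"shifted:  {population_stability_index(training, production):.3f}")
```

A commonly cited rule of thumb treats a PSI below about 0.1 as stable and above about 0.25 as a significant shift, but these cutoffs are conventions that should be tuned per feature. Input drift is also only a proxy: it signals that the training data may no longer be representative, while confirming an actual drop in accuracy requires ground-truth labels for recent predictions.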
