Chapter 4. Securing Communication Within Istio

A key requirement for many cloud native applications is the ability to provide secure communication paths between services. Traditionally, applications would deploy a “secure at the edge” architecture. Although this approach was sufficient in a monolithic architecture, it has security exposures in a distributed, microservices architecture.

In the previous chapter, we explored including services into a mesh; however, our installation of Istio from Chapter 2 configured a permissive security mode. Recall that the Istio permissive security setting is useful when you have services that are being moved into the service mesh incrementally by allowing both plain text and mTLS traffic. A strict security setting would force all communication to be secure, which can cause endless headaches if you must incrementally move services of your application into the mesh. In this chapter, we explore how Istio manages secure communication between services, and we investigate enabling strict security communication between some of the services in our sample application.

Istio Security

It is always imperative to secure communication to your application by ensuring that only trusted identities can call your services. In traditional applications, communication to services is often secured at the edge of the application. More explicitly, a network gateway (appliance or software) is configured on the network in which the application is deployed. In these topologies the first line of defense, and often the only line of defense, is at the edge of the network before traffic reaches the application. Such a topology breaks down in a highly distributed, cloud native solution, where services call each other across the network behind that edge.

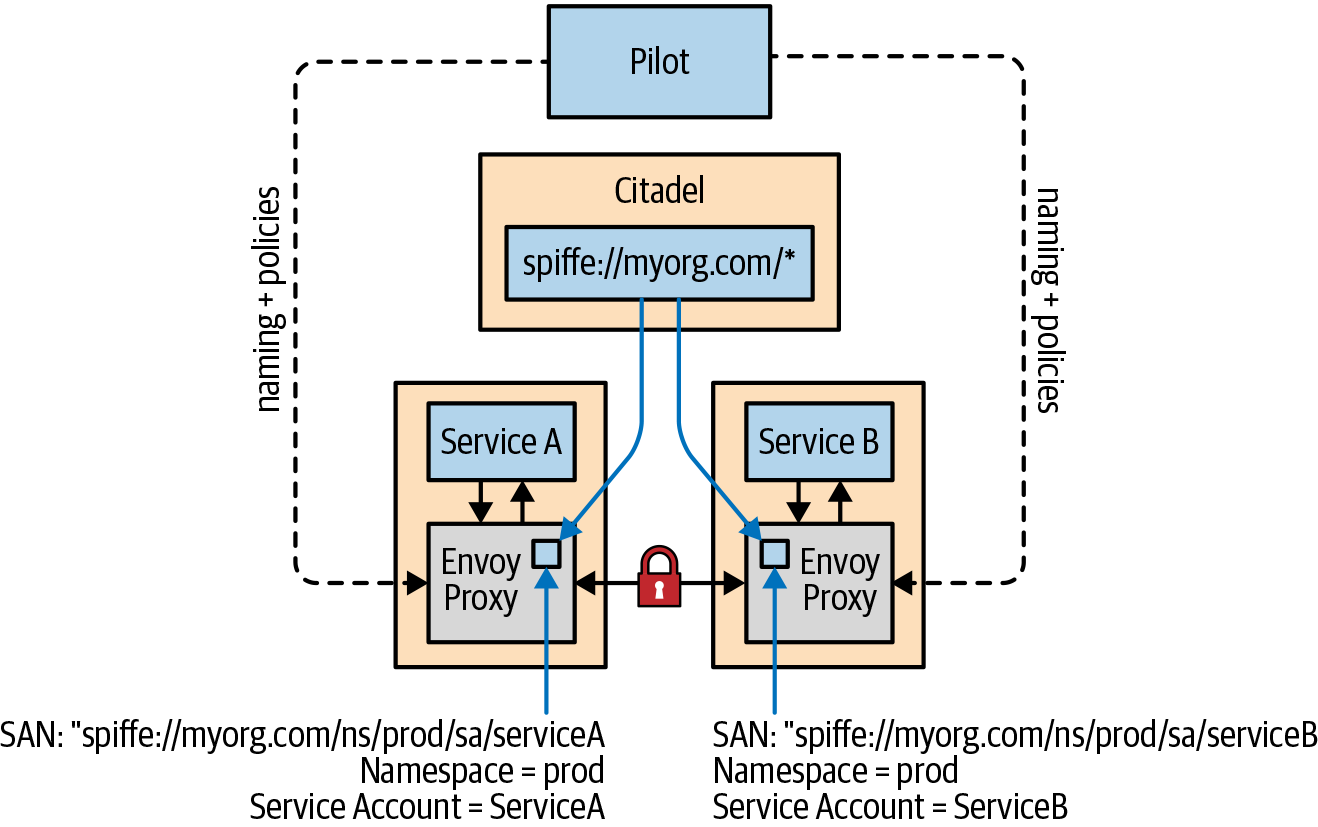

Istio aims to provide security in depth so that an application can be secured even on an untrusted network. Security in depth places security controls at every endpoint of the mesh, not simply at the edge. Placing controls at each endpoint defends against man-in-the-middle attacks by enabling mTLS: encrypted traffic between workloads that authenticate each other with secure service identities. Traditionally, application code would be modified using common libraries and approaches to establish TLS communication to other services in the application. This approach is complex, varies between languages, and relies on developers to follow development guidelines to enable TLS communication between services. It is also error-prone, and a single failure to secure a connection can compromise the entire application. Istio, on the other hand, establishes and manages mTLS connections within the mesh itself and not within the application code. Thus mTLS communication can be enabled without changing code, and with a high degree of consistency and control that leaves far less opportunity for error. Figure 4-1 shows the key Istio components involved in providing mTLS communication between services in the mesh, including the following:

- Citadel: manages keys and certificates, including generation and rotation.
- Istio (Envoy) proxy: implements secure communication between clients and servers.
- Pilot: distributes secure naming mappings and authentication policies to the proxies.

Figure 4-1. Istio secure identity architecture

Istio Identities

A critical aspect of securing communication between services is a consistent approach to defining service identities. For mutual authentication between two services, the services must exchange credentials encoded with their identities. In Kubernetes, service accounts provide service identities. On the client side of a service invocation, Istio uses secure naming information to verify that the server's identity is authorized to run the service being called. On the server side, authorization policies determine how the client can access the service and what information it can access.

Along with service identities being encoded in certificates, secure naming in Istio maps the service identities to the service names that have been discovered. In simple Kubernetes terms, a mapping of service account (i.e., the service identity) X to a service named Z indicates that "service account X is authorized to run service Z." In practice, when a client attempts to call service Z, Istio checks whether the identity running the service is actually authorized to run the service before allowing the client to use it. As you learned earlier, Istio Pilot is responsible for configuring the Envoy proxies. In a Kubernetes environment, Pilot watches the Kubernetes api-server for services being added or removed and generates the secure naming information, which is then securely distributed to all of the Envoy proxies in the mesh. By mapping service identities (service accounts) to service names, secure naming prevents DNS spoofing attacks: even if a client is redirected to an impostor, the impostor cannot present a certificate for an identity that is authorized to run the service.
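The client-side secure naming check can be pictured as a table lookup. The following is a minimal sketch, not Pilot's implementation: the table contents, the `cluster.local` trust domain, and the service account names `portfolio` and `trader` are hypothetical, and the identities use the SPIFFE URI form covered in the Citadel section of this chapter.

```sh
#!/bin/sh
# Hypothetical secure-naming table: one "<service-name> <identity>" pair per line.
# Pilot derives the real mapping from the Kubernetes api-server; the trust domain
# (cluster.local) and service account names here are illustrative assumptions.
SECURE_NAMING='portfolio-service.stock-trader.svc.cluster.local spiffe://cluster.local/ns/stock-trader/sa/portfolio
trader-service.stock-trader.svc.cluster.local spiffe://cluster.local/ns/stock-trader/sa/trader'

# secure_naming_allows <service-name> <server-identity>
# Succeeds only if the identity presented in the server's certificate is
# mapped to the service the client meant to call.
secure_naming_allows() {
  printf '%s\n' "$SECURE_NAMING" | grep -Fqx -- "$1 $2"
}

# A spoofed DNS answer that sends portfolio traffic to a pod running as the
# trader service account is rejected.
if secure_naming_allows portfolio-service.stock-trader.svc.cluster.local \
     spiffe://cluster.local/ns/stock-trader/sa/trader; then
  echo "allowed"
else
  echo "rejected"
fi
```

The point of the lookup is that DNS only decides where the connection goes; the certificate decides whether the client keeps talking.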

Authorization policies are modeled after Kubernetes Role-Based Access Control (RBAC), which defines roles (sets of permitted actions within a Kubernetes cluster) and role bindings that associate roles with identities, either users or services. Authorization policies are defined using a ServiceRole and a ServiceRoleBinding. A ServiceRole defines permissions for accessing services, and a ServiceRoleBinding grants a ServiceRole to subjects, which can be a user, a group, or a service. This combination defines who is allowed to do what under which conditions. Here is a simple example of a ServiceRole in the trader namespace that grants read (GET) access to any service for request paths ending in /quotes:

```yaml
apiVersion: "rbac.istio.io/v1alpha1"
kind: ServiceRole
metadata:
  name: quotes-viewer
  namespace: trader
spec:
  rules:
  - services: ["*"]
    paths: ["*/quotes"]
    methods: ["GET"]
```

A ServiceRole uses a combination of namespace, services, paths, and methods to define how a service or services are accessed.

A ServiceRoleBinding has two parts: a roleRef that refers to a ServiceRole in the same namespace, and a list of subjects to be assigned to the role. For example, the following ServiceRoleBinding allows only authenticated users and services to view quotes:

```yaml
apiVersion: "rbac.istio.io/v1alpha1"
kind: ServiceRoleBinding
metadata:
  name: binding-view-quotes-all-authenticated
  namespace: trader
spec:
  subjects:
  - properties:
      source.principal: "*"
  roleRef:
    kind: ServiceRole
    name: "quotes-viewer"
```
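To make the matching semantics of the quotes-viewer rule concrete, here is a simplified shell model of a single ServiceRole access rule. It is an illustration, not how Istio evaluates rules internally: shell `case` globs stand in for Istio's exact, prefix, and suffix path forms, and the service name `quote-service.trader.svc.cluster.local` is hypothetical.

```sh
#!/bin/sh
# rule_matches <services> <paths> <method> <req-service> <req-path> <req-method>
# Simplified model of one ServiceRole access rule with a single value per field.
# The unquoted $1 and $2 in the case patterns let "*" act as a wildcard; shell
# globs are a superset of Istio's exact, prefix ("/x*"), and suffix ("*/x") forms.
rule_matches() {
  case "$4" in $1) ;; *) return 1 ;; esac   # services
  case "$5" in $2) ;; *) return 1 ;; esac   # paths
  [ "$3" = "$6" ]                           # methods (exact match)
}

# quotes-viewer: services ["*"], paths ["*/quotes"], methods ["GET"].
SVC=quote-service.trader.svc.cluster.local  # hypothetical service name

check() {
  if rule_matches '*' '*/quotes' GET "$SVC" "$1" "$2"; then
    echo "$2 $1: allowed"
  else
    echo "$2 $1: denied"
  fi
}

check /api/quotes GET        # → GET /api/quotes: allowed
check /api/quotes POST       # → POST /api/quotes: denied (method)
check /api/quotes/IBM GET    # → GET /api/quotes/IBM: denied (suffix match)
```

Note that `*/quotes` is a suffix match, so a path such as /api/quotes/IBM is not covered; a prefix form such as `/api/quotes*` would be needed for that.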

Citadel

To provide secure communication between services it is necessary to have a public key infrastructure (PKI). Istio’s PKI is built on top of a component named Citadel. A PKI is responsible for securing communication between a client and a server by using public and private cryptographic keys. The PKI creates, distributes, and revokes digital certificates as well as manages public key encryption. Thus, Citadel is responsible for managing keys and certificates across the mesh. A PKI will bind public keys with an identity of a given entity. In the case of Citadel, the entities are the services within the mesh.

Citadel uses the SPIFFE format to construct strong identities for every service, encoded in X.509 certificates. SPIFFE (Secure Production Identity Framework for Everyone) is an open source project that removes the need for application-level authentication and network ACLs by encoding workload identities in specially crafted X.509 certificates. Istio uses SPIFFE Verifiable Identity Documents (SVIDs) as its identity documents. In a Kubernetes environment, the URI field of the SVID certificate uses the following format:

```
spiffe://<domain>/ns/<namespace>/sa/<serviceaccount>
```

Figure 4-1 shows an example of an encoded service identity using SPIFFE in the Subject Alternative Name (SAN) extension field. Using SVID allows Istio to accept connections with other SPIFFE-compliant systems.
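Because the SVID URI is a fixed template, building one or pulling the namespace and service account back out is plain string manipulation. A minimal POSIX sh sketch; the trust domain `cluster.local` and the service account name `trader` in the usage lines are assumptions for illustration.

```sh
#!/bin/sh
# spiffe_id <trust-domain> <namespace> <service-account>
# Builds a URI in the spiffe://<domain>/ns/<namespace>/sa/<serviceaccount> form.
spiffe_id() {
  printf 'spiffe://%s/ns/%s/sa/%s\n' "$1" "$2" "$3"
}

# spiffe_field <spiffe-id> <ns|sa>
# Prints the namespace or service account encoded in a Kubernetes-style SVID URI.
spiffe_field() {
  id=$1
  case "$id" in
    spiffe://*/ns/*/sa/*) ;;
    *) return 1 ;;              # not in the expected Kubernetes SVID form
  esac
  ns=${id#*/ns/}                # "<namespace>/sa/<serviceaccount>"
  sa=${ns#*/sa/}                # "<serviceaccount>"
  ns=${ns%%/sa/*}               # "<namespace>"
  case "$2" in
    ns) printf '%s\n' "$ns" ;;
    sa) printf '%s\n' "$sa" ;;
    *) return 1 ;;
  esac
}

ID=$(spiffe_id cluster.local stock-trader trader)
echo "$ID"                      # → spiffe://cluster.local/ns/stock-trader/sa/trader
spiffe_field "$ID" ns           # → stock-trader
spiffe_field "$ID" sa           # → trader
```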

Enable mTLS Communication Between Services

Now that you have a basic understanding of how secure communication works in Istio, let's get hands-on to explore enabling mTLS communication between services in our Stock Trader reference application. We start by enabling mTLS communication between the trader service and the portfolio service. Before getting started, you can validate that the cluster is installed with the global PERMISSIVE mesh policy, which allows both plain-text and mTLS connections, using the following command:

$ kubectl describe meshpolicy default

Confirm that the mTLS mode is PERMISSIVE:

```
Spec:
  Peers:
    Mtls:
      Mode:  PERMISSIVE
```

You can use the istioctl authn command to validate the existing TLS settings both client side and server side for accessing the portfolio service from the point of view of a trader service pod. Use these commands to check the TLS settings for a trader pod (the client) using the portfolio service (the server):

$ TRADER_POD=$(kubectl get pod -l app=trader -o jsonpath={.items..metadata.name} -n stock-trader)

$ istioctl authn tls-check ${TRADER_POD}.stock-trader portfolio-service.stock-trader.svc.cluster.local

You should see output stating that portfolio-service supports both plain-text and mTLS connections (the SERVER column shows HTTP/mTLS), as defined by the global mesh policy (the AUTHN POLICY column shows default/), and that no destination rule is defined (the DESTINATION RULE column shows -):

```
HOST:PORT                                              STATUS  SERVER     CLIENT  AUTHN POLICY  DESTINATION RULE
portfolio-service.stock-trader.svc.cluster.local:9080  OK      HTTP/mTLS  HTTP    default/      -
```

You can now begin enabling mTLS communication between the services for the Stock Trader application.

Using Kiali

For information about using Kiali, refer to Chapter 3.

We use Kiali to show how communication is visualized between Istio services. Open the Kiali console using the following command, and log in with admin/admin as the default credentials for the Istio demo profile:

$ istioctl dashboard kiali

You will change some settings to enable security visualization. Start by switching to the Graph tab on the left sidebar. Change the namespace selection to include stock-trader and stock-trader-data at a minimum, as shown in Figure 4-2.

Figure 4-2. Kiali namespace selection

Adjust the Kiali display settings to show Traffic Animation and Security as illustrated in Figure 4-3. You can adjust the type of graph shown to see more or less detail.

At this point Kiali will only show information based on the Istio mesh registration. You will now generate a little load so that you can see how Kiali captures and visualizes traffic between services. In a terminal window, enter the following commands:

```
$ ibmcloud ks workers --cluster $CLUSTER_NAME
$ export STOCK_TRADER_IP=<public IP of one of the worker nodes>
```

Remember to Set the STOCK_TRADER_IP Variable

Recall from Chapter 3 that the STOCK_TRADER_IP environment variable is set using these commands.

Figure 4-3. Kiali display settings

First, log in to the Stock Trader application so that you can generate load against it. Use the following cURL command to log in from a terminal and save the authentication cookie:

```
$ curl -X POST \
  http://$STOCK_TRADER_IP:32388/trader/login \
  -H 'Content-Type: application/x-www-form-urlencoded' \
  -H "Referer: http://$STOCK_TRADER_IP:32388/trader/login" \
  -H 'cache-control: no-cache' \
  -d 'id=admin&password=admin&submit=Submit' \
  --insecure --cookie-jar stock-trader-cookie
```

Then, you can generate load against the summary page using the cached cookie:

$ while sleep 2.0; do curl -L http://$STOCK_TRADER_IP:32388/trader/summary --insecure --cookie stock-trader-cookie; done

After a couple of minutes, return to the Kiali console, where you should see traffic flowing between the services as shown in Figure 4-4. A green line indicates that traffic is successfully flowing between the services.

Figure 4-4. Kiali traffic flow

As you can see in both the TLS settings and the Kiali console, you do not have secure communication between the services in the mesh. This means that the clients are sending data in plain text and the servers are accepting it. Next, you will configure the stock-trader namespace so that mesh clients calling its services use mTLS (client-side mTLS), while the services still tolerate plain text, which is useful for incrementally onboarding services into the mesh. You can accomplish this by executing the following command to create a default DestinationRule with a TLS traffic policy of ISTIO_MUTUAL for the stock-trader namespace:

```
$ kubectl apply -f - <<EOF
apiVersion: "networking.istio.io/v1alpha3"
kind: "DestinationRule"
metadata:
  name: "default"
  namespace: "stock-trader"
spec:
  host: "*.stock-trader.svc.cluster.local"
  trafficPolicy:
    tls:
      mode: ISTIO_MUTUAL
EOF
```

Default DestinationRule

There can be only one default DestinationRule in a given namespace, and it must be named “default.” The default DestinationRule will override the global setting and provide a default setting for all services defined in the namespace.

Run the tls-check command again:

$ TRADER_POD=$(kubectl get pod -l app=trader -o jsonpath={.items..metadata.name} -n stock-trader)

$ istioctl authn tls-check ${TRADER_POD}.stock-trader portfolio-service.stock-trader.svc.cluster.local

You will see that you now have a default namespace-scoped DestinationRule (default/stock-trader) that applies to the pod being examined. Notice that the results show that client-side authentication requires mTLS, as defined in the default DestinationRule in the stock-trader namespace, meaning that mesh clients will send encrypted messages. Server-side authentication remains PERMISSIVE, accepting both plain text and mTLS from the client, due to the mesh-wide default policy:

```
HOST:PORT                                              STATUS  SERVER     CLIENT  AUTHN POLICY  DESTINATION RULE
portfolio-service.stock-trader.svc.cluster.local:9080  OK      HTTP/mTLS  mTLS    default/      default/stock-trader
```

You should still be generating load in a terminal window from earlier. Switching back to the Kiali console, you should notice that a padlock icon now appears on the traffic being sent between the services, indicating that the messages are encrypted (see Figure 4-5).

You can further lock down the secure access to require both mTLS from the client and server in the stock-trader namespace using an authentication Policy to remove the PERMISSIVE support. Execute this command to define a default policy in the stock-trader namespace that updates all of the servers to accept only mTLS traffic:

```
$ kubectl apply -f - <<EOF
apiVersion: "authentication.istio.io/v1alpha1"
kind: "Policy"
metadata:
  name: "default"
  namespace: "stock-trader"
spec:
  peers:
  - mtls: {}
EOF
```

Running the tls-check once again, you see that both client-side and server-side authentication now require mTLS:

$ TRADER_POD=$(kubectl get pod -l app=trader -o jsonpath={.items..metadata.name} -n stock-trader)

$ istioctl authn tls-check ${TRADER_POD}.stock-trader portfolio-service.stock-trader.svc.cluster.local

```
HOST:PORT                                              STATUS  SERVER  CLIENT  AUTHN POLICY          DESTINATION RULE
portfolio-service.stock-trader.svc.cluster.local:9080  OK      mTLS    mTLS    default/stock-trader  default/stock-trader
```
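The three tls-check runs step through different client and server settings. As a simplified sketch (a model of the compatibility rules, not an Istio API), the possible combinations can be tabulated; the mode names mirror the SERVER and CLIENT columns of tls-check, and labeling incompatible pairs CONFLICT is intended to mirror the status tls-check reports for mismatched settings.

```sh
#!/bin/sh
# Simplified model (not an Istio API) of whether a client and server can talk.
#   client mode: HTTP (plain text) or mTLS (DestinationRule with ISTIO_MUTUAL)
#   server mode: HTTP (mTLS disabled), HTTP/mTLS (PERMISSIVE), or mTLS (strict)
tls_outcome() {
  case "$1 $2" in
    "HTTP HTTP" | "HTTP HTTP/mTLS" | "mTLS HTTP/mTLS" | "mTLS mTLS")
      echo OK ;;
    "HTTP mTLS" | "mTLS HTTP")
      echo CONFLICT ;;
    *)
      echo "unknown modes: $1 $2" >&2
      return 1 ;;
  esac
}

# Print the full matrix of client/server combinations.
for client in HTTP mTLS; do
  for server in HTTP HTTP/mTLS mTLS; do
    printf '%-4s -> %-9s %s\n' "$client" "$server" "$(tls_outcome "$client" "$server")"
  done
done
```

The first tls-check in this section is the HTTP -> HTTP/mTLS case, the second is mTLS -> HTTP/mTLS, and the third is mTLS -> mTLS. A client outside the mesh, such as cURL, always sends plain text, so against the strict servers it falls into the HTTP -> mTLS row.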

Figure 4-5. Kiali with mTLS communication

Managing HTTP Health Checks

The trader and stock-quote deployments both have Kubernetes HTTP probes configured to check the health of their containers. Because the servers now accept only mTLS traffic, the HTTP probes sent by the kubelet on each node would fail: the kubelet is not part of the mesh and does not use mTLS to communicate with the server. Failing probes would cause Kubernetes to restart the containers or take the pods out of service. This problem was avoided because the sidecar.istio.io/rewriteAppHTTPProbers: "true" pod annotation was already defined in the corresponding deploy.yaml files. This annotation rewrites the HTTP probes so that they are handled by the sidecar, without requiring changes to the services. We expect this feature will be enabled by default in a future Istio release.
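The annotation has to sit on the pod template, because the sidecar injector reads it when each new pod is created. As a sketch for a deployment that lacks it, assuming a deployment named `trader` (a hypothetical name; list the real ones with `kubectl get deployments -n stock-trader`), you could add and then verify it like this:

```sh
# Assumed deployment name; verify with: kubectl get deployments -n stock-trader
DEPLOYMENT=trader

# Put the annotation on the pod template (spec.template.metadata). Changing the
# template triggers a rollout, so the replacement pods are injected with
# rewritten probes.
kubectl patch deployment "$DEPLOYMENT" -n stock-trader --type merge -p \
  '{"spec":{"template":{"metadata":{"annotations":{"sidecar.istio.io/rewriteAppHTTPProbers":"true"}}}}}'

# Confirm the annotation is present on the pod template (dots in the key are escaped).
kubectl get deployment "$DEPLOYMENT" -n stock-trader \
  -o jsonpath='{.spec.template.metadata.annotations.sidecar\.istio\.io/rewriteAppHTTPProbers}'
```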

Your cURL calls from earlier will now fail, because the servers require clients to send mTLS traffic, and cURL, running outside the mesh, sends plain text. To remove these errors, you also need to secure inbound traffic to the service mesh:

curl: (56) Recv failure: Connection reset by peer

Securing Inbound Traffic

Now that you have walked through the configuration of secure mTLS communication within the mesh, we want to turn your attention to securing communication into the mesh from clients outside it, for example, a web browser accessing a service from the public internet. As you saw in the last section, setting the default Policy in the stock-trader namespace to enforce mTLS caused client requests from the internet (i.e., from a web browser) to fail the TLS handshake. This is because PERMISSIVE support was removed from the microservices within the Istio mesh. To solve this problem, use an Istio Gateway configured with TLS.

Inbound and outbound traffic for the mesh is controlled with Istio gateways. These gateways are implemented as Envoy proxies, which allow or block traffic from entering or leaving the mesh. A mesh can have multiple gateway configurations; for example, you may want one set of gateways for public internet inbound and outbound traffic, and a separate set of gateways for private network traffic. Istio gateways are primarily used to provide secure inbound access to the mesh, but the Istio egress (outbound) gateway also provides critical control over outbound traffic. For example, you can configure an Istio egress gateway with policies that restrict which destinations specific services within the mesh can reach. This level of traffic control is quite difficult to achieve with Kubernetes itself. Let's start by securing inbound communication: configure an Istio ingress (inbound) gateway for secure TLS communication to the trader service within the mesh.

You can see that a default Istio ingress gateway has already been deployed into our Kubernetes cluster during installation. Using the following command, you can see that the ingress gateway is deployed as a LoadBalancer service with an external public IP address:

```
$ kubectl get svc -n istio-system -l app=istio-ingressgateway
NAME                   TYPE           CLUSTER-IP     EXTERNAL-IP      PORT(S)                                                                                                                                    AGE
istio-ingressgateway   LoadBalancer   172.21.39.61   169.63.159.157   15020:31382/TCP,80:31380/TCP,443:31390/TCP,31400:31400/TCP,15029:31133/TCP,15030:30832/TCP,15031:31732/TCP,15032:32263/TCP,15443:31348/TCP   15d
```

To configure secure communication via the gateway, you will need a signed certificate. For this example, we’ll use a DNS entry with a signed wildcard certificate from IBM Cloud Kubernetes Service (IKS). Refer to your cloud provider’s documentation to determine how to configure a DNS entry for your Istio ingress gateway service.

You can use IKS to generate a DNS entry and certificate for the Istio ingress gateway used in the example Stock Trader application. Using the ibmcloud CLI, you will register a new DNS entry for the Istio ingress gateway using the external IP of the istio-ingressgateway service. You need to set the name of your cluster in an environment variable to be used in later commands. In the following command, make sure that you replace <YOUR_CLUSTER_NAME> with the name of the cluster that you created in IKS:

$ export CLUSTER_NAME=<YOUR_CLUSTER_NAME>

If you do not recall the name of your cluster, you can use the following command to list all the clusters that belong to you:

$ ibmcloud ks clusters

Now you can register a DNS entry using the IP address of the Istio ingress gateway using these commands:

$ export INGRESS_IP=$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')

$ ibmcloud ks nlb-dns-create --cluster $CLUSTER_NAME --ip $INGRESS_IP

You should see a result similar to the following:

```
OK
Hostname subdomain is created as <YOUR_CLUSTER_NAME>-f0a5715bb2873122b708ede2bf765701-0001.us-east.containers.appdomain.cloud
```

Check the status of the DNS entry using the IKS nlb-dns command, which will have a result similar to this:

```
$ ibmcloud ks nlb-dns ls --cluster $CLUSTER_NAME
Retrieving hostnames, certificates, IPs, and health check monitors for network load balancer (NLB) pods in cluster <YOUR_CLUSTER_NAME>...
OK
Hostname                                                                             IP(s)            Health Monitor   SSL Cert Status   SSL Cert Secret Name
istio-book-f0a5715bb2873122b708ede2bf765701-0001.us-east.containers.appdomain.cloud  169.63.159.157   None             created           istio-book-f0a5715bb2873122b708ede2bf765701-0001
```

The SSL certificate is stored in the Kubernetes secret listed under SSL Cert Secret Name, in the default namespace. You will need to copy the secret into the istio-system namespace, where the gateway service is deployed, and name it istio-ingressgateway-certs. This is a reserved name: when a secret named istio-ingressgateway-certs exists, the ingress gateway loads it automatically.

Copy the SSL secret generated by your cloud provider into the istio-ingressgateway-certs secret. To do this you’ll need to export the secret name using this command where you change <YOUR_SSL_SECRET_NAME> to the name generated by your cloud provider:

$ export SSL_SECRET_NAME=<YOUR_SSL_SECRET_NAME>

Now you can create the istio-ingressgateway-certs secret with this command:

$ kubectl get secret -n default $SSL_SECRET_NAME --export -o json | jq '.metadata.name |= "istio-ingressgateway-certs"' | kubectl -n istio-system create -f -
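The `--export` flag used above was deprecated in Kubernetes 1.14 and has since been removed from kubectl, so the command may fail with newer kubectl releases. A sketch of an equivalent that builds a fresh Secret with jq instead, keeping only the fields a new Secret needs and assigning the reserved name:

```sh
# Copy the cloud-provided secret into istio-system without --export.
# The jq filter keeps apiVersion, kind, type, and data, and replaces metadata
# entirely, dropping the source namespace, uid, and resourceVersion.
kubectl get secret -n default "$SSL_SECRET_NAME" -o json \
  | jq '{apiVersion, kind, type, data, metadata: {name: "istio-ingressgateway-certs"}}' \
  | kubectl -n istio-system create -f -
```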

Validate that the secret was created using the following command:

```
$ kubectl get secret istio-ingressgateway-certs -n istio-system
NAME                         TYPE     DATA   AGE
istio-ingressgateway-certs   Opaque   2      3h5m
```

You will need to force the gateway pod(s) to be restarted to pick up the certificates. This is done by deleting the istio-ingressgateway pods with this kubectl command.

$ kubectl delete pod -n istio-system -l istio=ingressgateway

You’re now ready to configure the gateway using the generated domain name and the signed certificate stored in the secret. You will need to use the generated hostname from the nlb-dns when configuring the ingress gateway. The following command provides you with the generated domain name within IKS:

$ ibmcloud ks nlb-dns ls --cluster $CLUSTER_NAME

The certificate that IKS generated is a signed wildcard certificate, which means you can prepend one additional label to the generated hostname (for example, trader.<YOUR_DNS_NLB_HOSTNAME>) if you want. You will use the following YAML to configure the gateway with TLS. The tls section configures the gateway in SIMPLE mode (standard, server-side TLS), and the certificate and private key must be at exactly the paths specified. These files are mounted automatically from the istio-ingressgateway-certs secret that you created earlier. Apply your gateway configuration with this command:
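Standard TLS wildcard matching lets `*.<base>` cover exactly one extra leftmost label, not the bare base name and not deeper names. The following simplified, case-sensitive sketch illustrates that rule; it assumes, as the text describes, that the certificate is issued for a wildcard of the generated hostname.

```sh
#!/bin/sh
# wildcard_matches <certificate-name> <hostname>
# Simplified, case-sensitive sketch of TLS wildcard matching: "*.<base>" covers
# exactly one extra leftmost label, never the bare <base> or deeper names.
wildcard_matches() {
  pattern=$1
  host=$2
  case "$pattern" in
    '*.'*)
      base=${pattern#??}      # drop the leading "*."
      first=${host%%.*}       # leftmost label of the hostname
      rest=${host#*.}         # everything after the leftmost label
      [ "$host" != "$first" ] && [ -n "$first" ] && [ "$rest" = "$base" ]
      ;;
    *)
      [ "$pattern" = "$host" ]
      ;;
  esac
}

BASE=istio-book-f0a5715bb2873122b708ede2bf765701-0001.us-east.containers.appdomain.cloud
wildcard_matches "*.$BASE" "trader.$BASE" && echo "trader.<base>: covered"
wildcard_matches "*.$BASE" "a.b.$BASE" || echo "a.b.<base>: not covered"
```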

```
$ kubectl apply -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: trader-gateway
  namespace: stock-trader
spec:
  selector:
    istio: ingressgateway # use istio default ingress gateway
  servers:
  - port:
      number: 443
      name: https
      protocol: HTTPS
    tls:
      mode: SIMPLE
      serverCertificate: /etc/istio/ingressgateway-certs/tls.crt
      privateKey: /etc/istio/ingressgateway-certs/tls.key
    hosts:
    - "*"
EOF
```

At this point you have secured the ingress gateway, but you haven’t defined any services to be accessed via the gateway. Exposing a service outside the mesh requires that a service is bound to the gateway using an Istio VirtualService resource. The VirtualService is bound to the gateway using the gateways section. In this case the VirtualService is bound to the newly configured trader-gateway. Using this command, you will apply your virtual service configuration:

```
$ kubectl apply -f - <<EOF
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: virtual-service-trader
  namespace: stock-trader   # same namespace as trader-gateway and trader-service
spec:
  hosts:
  - '*'
  gateways:
  - trader-gateway
  http:
  - match:
    - uri:
        prefix: /trader
    route:
    - destination:
        host: trader-service
        port:
          number: 9080
EOF
```
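The match block routes by URI prefix. A simplified model of how virtual-service-trader treats incoming paths (an illustration, not Envoy's code): Envoy's prefix match is a plain string prefix, so /traderX would also match, and a request that matches no route gets a 404 from the gateway.

```sh
#!/bin/sh
# route_for <request-path>
# Simplified model of virtual-service-trader: a path starting with "/trader"
# goes to trader-service on port 9080; anything else has no route (the gateway
# would answer 404).
route_for() {
  case "$1" in
    /trader*) echo "trader-service:9080" ;;   # plain string prefix, like Envoy
    *) return 1 ;;
  esac
}

route_for /trader/summary             # → trader-service:9080
route_for /portfolio || echo "no route (404)"
```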

Specifying a Hostname

You can specify a hostname instead of using “*” for a given gateway resource and virtual service resource. For example, you can use <YOUR_DNS_NLB_HOSTNAME> as the hostname.

You should now be able to access the Stock Trader application in your web browser with a secure connection and no handshake failures. Enter https://<YOUR_DNS_NLB_HOSTNAME>/trader in your web browser (see Figure 4-6).

Figure 4-6. Secure access via web browser

When you go back to the Kiali dashboard, you can see that the gateway is now shown and there is a secure connection between the gateway and the trader, as shown in Figure 4-7. The gateway itself is configured with TLS ensuring that traffic is encrypted from the client outside the mesh all the way to the target service.

Using RBAC with Secure Communication

Istio also supports authorization policies that use ServiceRoles and ServiceRoleBindings to describe who is allowed to do what under which conditions. A ServiceRole describes a set of permissions, such as methods and paths, for accessing a service. A ServiceRoleBinding grants a ServiceRole to a set of subjects, which can be users or services. Together, ServiceRoles and ServiceRoleBindings provide fine-grained access control to services.
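RBAC pairs naturally with mTLS because the source.principal in a binding is taken from the peer identity proven during the mTLS handshake, so a plain-text request carries no principal at all. A simplified sketch of subject matching (an illustration, not Istio's implementation), assuming Istio's principal format of `<trust-domain>/ns/<namespace>/sa/<serviceaccount>` and a hypothetical `trader` service account:

```sh
#!/bin/sh
# subject_matches <binding-principal> <request-principal>
# Simplified model of a ServiceRoleBinding subject on source.principal.
# The request principal comes from the peer's mTLS certificate, so it is empty
# for plain-text requests.
subject_matches() {
  [ -n "$2" ] || return 1              # no mTLS identity: nothing to match
  [ "$1" = "*" ] || [ "$1" = "$2" ]    # "*" = any authenticated principal
}

TRADER=cluster.local/ns/stock-trader/sa/trader   # hypothetical service account
for principal in "$TRADER" ""; do
  if subject_matches '*' "$principal"; then
    echo "principal '${principal}': matches binding-view-quotes-all-authenticated"
  else
    echo "principal '${principal}': no match (request denied)"
  fi
done
```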

Conclusion

In this chapter, you learned that Istio provides a simple yet powerful mechanism to manage mTLS communication between services using strong identities defined with the SPIFFE format. Citadel is the key Istio component responsible for generating and rotating the keys and certificates used for secure communication between services within the mesh. You learned that Istio uses a declarative model to set security policies, which lets you incrementally onboard secure services into the mesh. With the permissive mode, services can support both plain text and mTLS communication, which makes it easier to move services into the mesh incrementally. Istio gateways ensure that communication from clients outside the mesh to services exposed from the mesh is secure and encrypted. Now that you have discovered how to secure services in the mesh, we turn our attention toward controlling traffic within the mesh.

Figure 4-7. Secure gateway communication
