Chapter 1. Juniper MX Architecture

Back in 1998, Juniper Networks released its first router, the M40. Leveraging Application-Specific Integrated Circuits (ASICs), the M40 was able to outperform any other router architecture. The M40 was also the first router to have a true separation of the control and data planes, and the M Series was born. Originally, the model name M40 referred to its ability to process 40 million packets per second (Mpps). As the product portfolio expanded, the “M” now refers to the multiple services available on the router, such as MPLS with a wide variety of VPNs. The primary use case for the M Series was to allow Service Providers to deliver services based on IP while at the same time supporting legacy frame relay and ATM networks.

Fast-forward 10 years and the number of customers that Service Providers have to support has increased exponentially. Frame relay and ATM have been decimated, as customers are demanding high-speed Layer 2 and Layer 3 Ethernet-based services. Large Enterprise companies are becoming more Service Provider-like and are offering IP services to departments and subsidiaries.

Nearly all networking equipment connects via Ethernet. It’s one of the most well understood and deployed networking technologies used today. Companies have challenging requirements to reduce operating costs and at the same time provide more services. Ethernet enables the simplification in network operations, administration, and maintenance.

The MX Series was introduced in 2007 to solve these new challenges. It is optimized for delivering high-density and high-speed Layer 2 and Layer 3 Ethernet services. The “M” still refers to the multiple services heritage, while the “X” refers to the new switching capability and focus on 10G interfaces and beyond; it’s also interesting to note that the Roman numeral for the number 10 is “X.”

It’s no easy task to create a platform that’s able to solve these new challenges. The MX Series has a strong pedigree: although mechanically different, it leverages technology from both the M and T Series for chassis management, switching fabric, and the Routing Engine.

Features that you have come to know and love on the M and T Series are certainly present on the MX Series, as it runs on the same image of Junos. In addition to the “oldies, but goodies,” is an entire feature set focused on Service Provider switching and broadband network gateway (BNG). Here’s just a sample of what is available on the MX:

- High availability

Non-Stop Routing (NSR), Non-Stop Bridging (NSB), Graceful Routing Engine Switchover (GRES), Graceful Restart (GR), and In-Service Software Upgrade (ISSU)

- Routing

RIP, OSPF, IS-IS, BGP, and Multicast

- Switching

Full suite of Spanning Tree Protocols (STP), Service Provider VLAN tag manipulation, QinQ, and the ability to scale beyond 4,094 bridge domains by leveraging virtual switches

- Inline services

Network Address Translation (NAT), IP Flow Information Export (IPFIX), Tunnel Services, and Port Mirroring

- MPLS

L3VPN, L2VPNs, and VPLS

- Broadband services

PPPoX, DHCP, Hierarchical QoS, and IP address tracking

- Virtualization

Multi-Chassis Link Aggregation, Virtual Chassis, Logical Systems, and Virtual Switches

With such a large feature set, the use case of the MX Series is very broad. It’s common to see it in the core of a Service Provider network, providing BNG, or in the Enterprise providing edge routing or core switching.

This chapter introduces the MX platform, features, and architecture. We’ll review the hardware, components, and redundancy in detail.

Junos OS

The Junos OS is a purpose-built networking operating system based on one of the most stable and secure operating systems in the world: FreeBSD. Junos software was designed as a monolithic kernel architecture that places all of the operating system services in the kernel space. Major components of Junos are written as daemons that provide complete process and memory separation. Junos 14.x introduced a big change: modularity. Although Junos is still based on FreeBSD, it is becoming independent of the “guest OS” and offers a separation between the Core OS and the hardware drivers. Many more improvements are planned over the next few years.

Indeed, the Junos OS is starting its great modernization as this Second Edition of the book is being written. For scaling purposes, it will be more modular and faster, and it will more easily support all the new virtual functionality coming on the heels of SDN. Junos is already migrating to recent software architectures such as Kernel SMP and multi-core OS.

One Junos

Creating a single network operating system that’s able to be leveraged across routers, switches, and firewalls simplifies network operations, administration, and maintenance. Network operators need only learn Junos once and become instantly effective across other Juniper products. An added benefit of a single Junos instance is that there’s no need to reinvent the wheel and have 10 different implementations of BGP or OSPF. Being able to write these core protocols once and then reuse them across all products provides a high level of stability, as the code is very mature and field-tested.

Software Releases

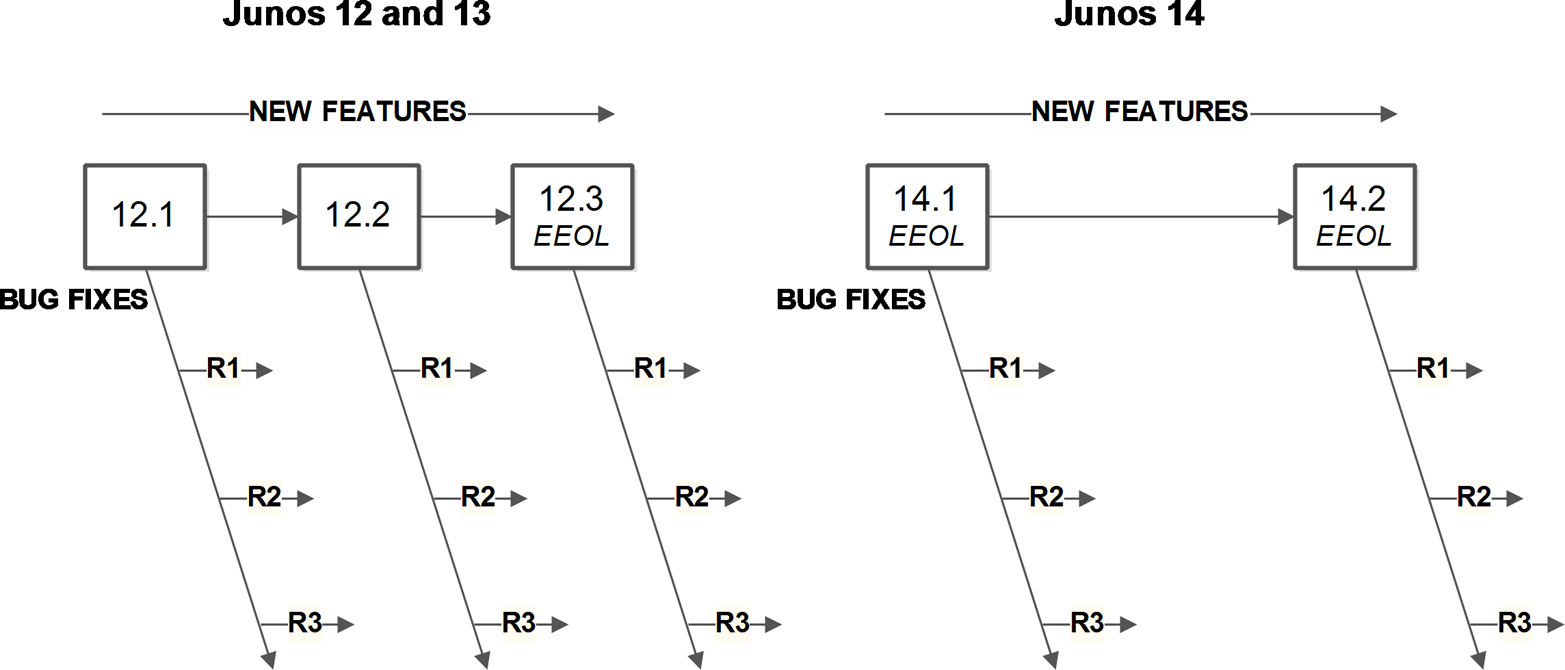

For a long time (nearly 15 years) there has been a consistent and predictable release of Junos every calendar quarter. Recently, Juniper has changed its release strategy, starting with Junos 12.x and 13.x, which each offered three major releases, and then Junos 14.x, which offered two major releases. The development of the core operating system is now a single release train allowing developers to create new features or fix bugs once and share them across multiple platforms. Each Junos software release is built for both 32-bit and 64-bit Routing Engines.

The release numbers are now in a major and minor format. The major number is the version of Junos for a particular calendar year and the minor number indicates in which half of that year the software was released. Together, the major and minor numbers identify a major release, for example 14.1 or 14.2.

Since Junos 14.x, each release of the Junos OS (the two majors per year) is supported for 36 months. In other words, every Junos release has a known Extended End of Life (EEOL), as shown in Figure 1-1.

Figure 1-1. Junos release model and cadence

There are a couple of different types of Junos that are released more frequently to resolve issues: maintenance and service releases. Maintenance releases are released about every eight weeks to fix a collection of issues and they are prefixed with “R.” For example, Junos 14.2R2 would be the second maintenance release for Junos 14.2. Service releases are released on demand to specifically fix a critical issue that has yet to be addressed by a maintenance release. These releases are prefixed with an “S.” An example would be Junos 14.2R3-S2.

The general rule of thumb is that new features are added every minor release and bug fixes are added every maintenance release. For example, Junos 14.1 to 14.2 would introduce new features, whereas Junos 14.1R1 to 14.1R2 would introduce bug fixes.

The next Junos release, “15,” introduces the concept of “Innovation” releases, prefixed with “F.” Each major release will offer two innovation releases that should help customers quickly implement innovative features. The innovative features will then be included in the next major release. For example, the major release 15.1 will have two “F” releases: 15.1F1 and 15.1F2. The same will be true for the second major release, 15.2. The innovations developed in 15.1F1 will then be natively included in the first maintenance release of the next major release: 15.2R1.
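The naming scheme described above is regular enough to parse mechanically. The following Python sketch is only an illustration: the names `JunosRelease`, `parse_release`, and `adds_features` are hypothetical, and the pattern covers only the forms shown in this section (major.minor, an “R” or “F” number, and an optional “-S” number), not every release string Juniper has shipped.

```python
import re
from typing import NamedTuple, Optional


class JunosRelease(NamedTuple):
    major: int              # version for the calendar year, e.g. 14
    minor: int              # release within that year, e.g. 2
    kind: str               # "R" = maintenance, "F" = innovation
    build: int              # number following R or F
    service: Optional[int]  # number following "-S", if present


# Assumed pattern, derived only from the examples in the text.
_RELEASE_RE = re.compile(r"^(\d+)\.(\d+)([RF])(\d+)(?:-S(\d+))?$")


def parse_release(text: str) -> JunosRelease:
    """Split a string such as '14.2R3-S2' into its components."""
    match = _RELEASE_RE.match(text.strip())
    if match is None:
        raise ValueError(f"unrecognized release string: {text!r}")
    major, minor, kind, build, service = match.groups()
    return JunosRelease(
        int(major),
        int(minor),
        kind,
        int(build),
        int(service) if service is not None else None,
    )


def adds_features(old: JunosRelease, new: JunosRelease) -> bool:
    """Rule of thumb from the text: a new major.minor brings new features,
    while a new maintenance number within the same major.minor brings fixes."""
    return (old.major, old.minor) != (new.major, new.minor)
```

For instance, `parse_release("14.2R3-S2")` yields major 14, minor 2, maintenance release 3, and service release 2, and comparing 14.1R1 with 14.1R2 through `adds_features` reports a bug-fix-only step.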

Junos Continuity—JAM

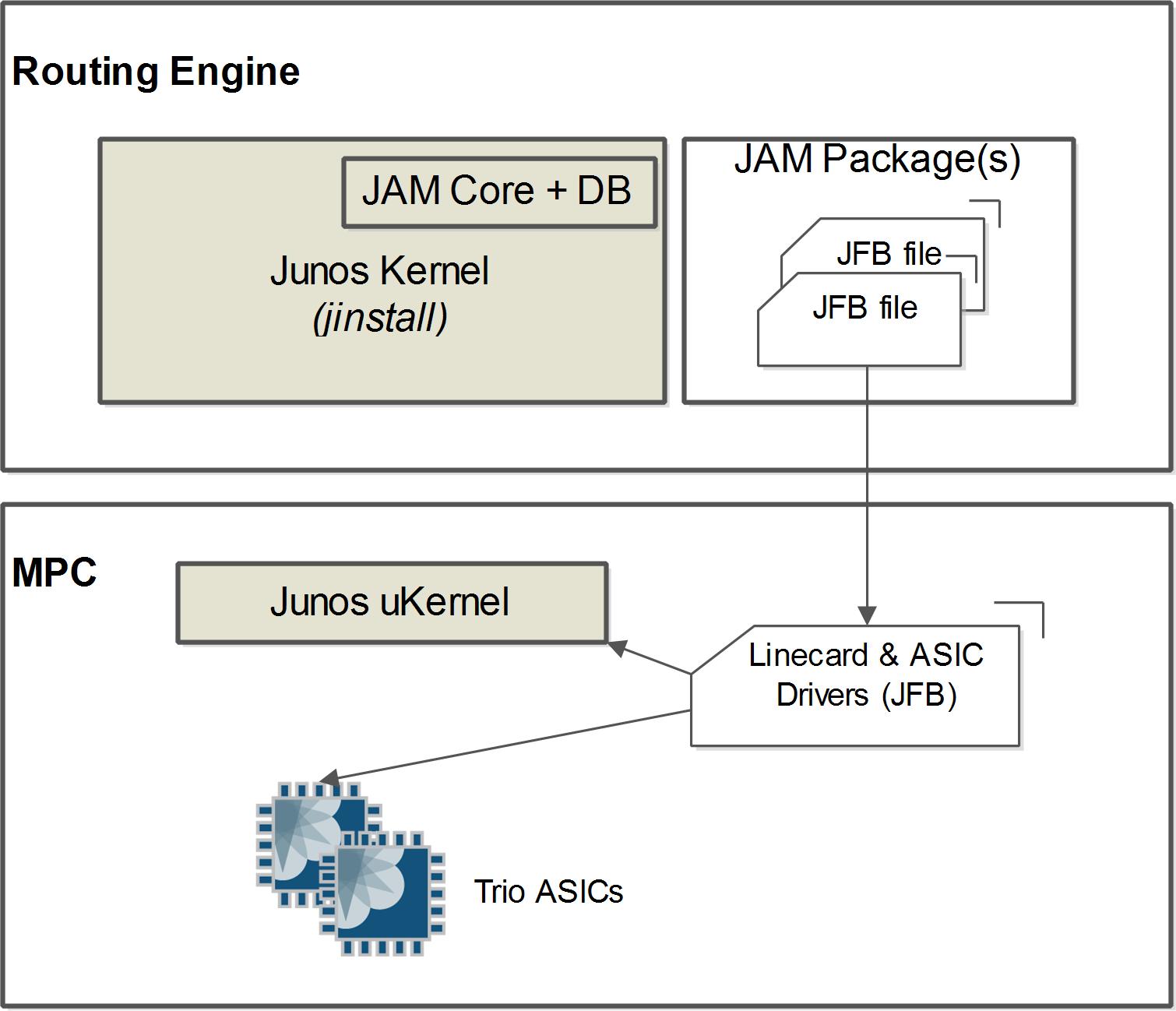

JAM stands for Junos Agile deployment Methodology, a new concept also known by its marketing name, Junos Continuity.

The JAM feature is one of the new Junos modularity enhancements. In releases prior to 14.x, the hardware drivers were embedded into the larger Junos software build, so installing new line card models (for example, those not yet available when a given Junos release shipped) required a complete new Junos installation.

Since Junos 14.x, the Junos core and the hardware drivers have been separated, allowing an operator to deploy new hardware onto existing Junos releases, as shown in Figure 1-2. It’s a significant advancement in terms of the time spent testing, validating, and upgrading a large network. Indeed, customers request new hardware more often than new software features, usually to upgrade their bandwidth capacity, which grows very quickly in Internet Service or Content Provider networks. In that case, you only need more 10G or 100G interfaces per slot, with feature parity. The ability to install newer, faster, and denser hardware while keeping the current stable Junos release you have configured is a great asset. JAM also prevents downtime because installing new hardware with JAM doesn’t require a reboot of the router. Awesome, isn’t it?

The JAM model is made of two major components:

- The JAM database

Included in the Junos OS itself (in other words, in a JAM-aware Junos release) so the OS maintains platform-specific parameters and attributes.

- The JAM package

A set of line card and chipset drivers (JFB file).

Figure 1-2. The JAM model

There are two methods for implementing JAM and getting the most benefit from it:

With a standalone JAM package available for any existing elected release (a release that officially supports the JAM model). The first elected releases for JAM are 14.1R4 and 14.2R3. A standalone JAM package is different from a jinstall package; its name is prefixed with “jam-xxxx.”

Through an integrated JAM release. In this configuration, JAM packages are directly integrated into the jinstall package.

Let’s take the example of the first JAM package already available (jam-mpc-2e-3e-ng64): JAM for NG-MPC2 and NG-MPC3 cards. This single JAM package includes hardware drivers for the following new cards:

MPC2E-3D-NG

MPC2E-3D-NG-Q

MPC3E-3D-NG

MPC3E-3D-NG-Q

The elected releases for this package are 14.1R4 and 14.2R3, as mentioned before. Customers who use these releases could install the above next-generation of MPC cards without any new Junos installation. They could follow the typical installation procedure:

Insert new MPC (MPC stays offline because it is not supported).

Install the standalone JAM package for the given FRU.

Bring the MPC online.

MPC retrieves its driver from the JAM database (on the RE).

MPC then boots and is fully operational.

Users on older releases should use the integrated mode by installing a Junos 14.1 or 14.2 release that includes a JAM package for these cards. Finally, another choice might be to use the native release, which provides built-in support for these new MPCs; for NG-MPC2 and NG-MPC3 cards, the native release is 15.1R1.

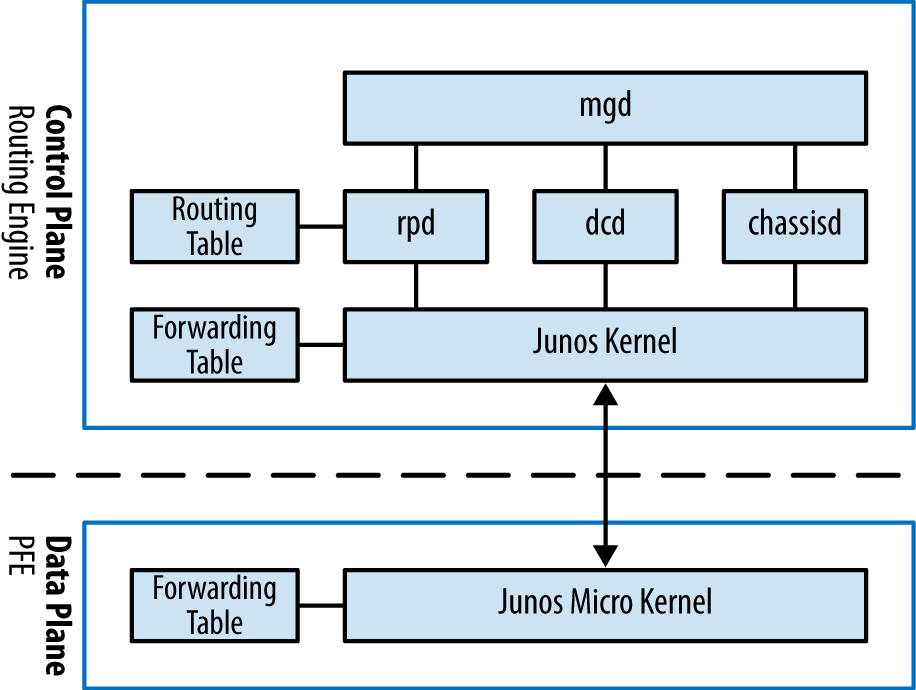

Software Architecture

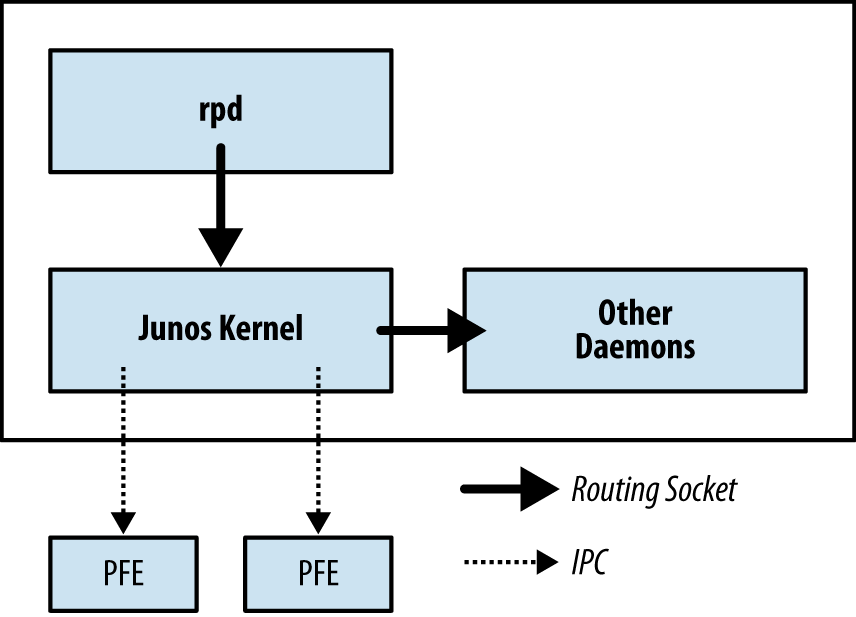

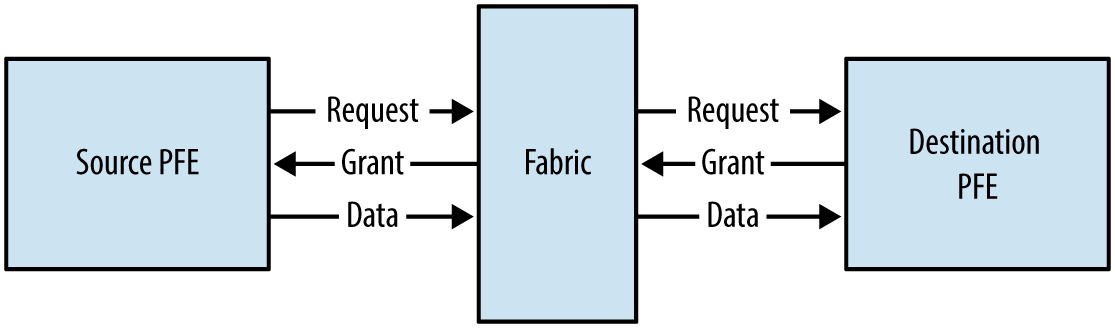

Junos was designed from the beginning to support a separation of control and forwarding plane. This is true for the MX Series, where all of the control plane functions are performed by the Routing Engine while all of the forwarding is performed by the packet forwarding engine (PFE). PFEs are hosted on the line card, which also has a dedicated CPU to communicate with the RE and handle some specific inline features.

Providing this level of separation ensures that one plane doesn’t impact the other. For example, the forwarding plane could be routing traffic at line rate and performing many different services while the Routing Engine sits idle and unaffected. Control plane functions come in many shapes and sizes. There’s a common misconception that the control plane only handles routing protocol updates. In fact, there are many more control plane functions. Some examples include:

Updating the routing table

Answering SNMP queries

Processing SSH or HTTP traffic to administer the router

Changing fan speed

Controlling the craft interface

Providing a Junos micro kernel to the PFEs

Updating the forwarding table on the PFEs

Figure 1-3. Junos software architecture

At a high level, the control plane is implemented within the Routing Engine while the forwarding plane is implemented within each PFE using a small, purpose-built kernel that contains only the required functions to route and switch traffic. Some control plane tasks are delegated to the CPU of the Trio line cards in order to scale better. This is the case for the ppmd process, detailed shortly.

The benefit of control and forwarding separation is that any traffic that is being routed or switched through the router will always be processed at line rate on the PFEs and switch fabric; for example, if a router was processing traffic between web servers and the Internet, all of the processing would be performed by the forwarding plane.

The Junos kernel has five major daemons; each of these daemons plays a critical role within the MX, and they work together via Interprocess Communication (IPC) and routing sockets to communicate with the Junos kernel and other daemons. The following daemons take center stage and are required for the operation of Junos:

Management daemon (mgd)

Routing protocol daemon (rpd)

Periodic packet management daemon (ppmd)

Device control daemon (dcd)

Chassis daemon (chassisd)

There are many more daemons for tasks such as NTP, VRRP, DHCP, and other technologies, but they play a smaller and more specific role in the software architecture.

Management daemon

The Junos User Interface (UI) keeps everything in a centralized database. This allows Junos to handle data in interesting ways and opens the door to advanced features such as configuration rollback, apply groups, and activating and deactivating entire portions of the configuration.

The UI has four major components: the configuration database, database schema, management daemon (mgd), and the command-line interface (cli).

The management daemon (mgd) is the glue that holds the entire Junos User Interface (UI) together. At a high level, mgd provides a mechanism to process information for both network operators and daemons.

The interactive component of mgd is the Junos cli; this is a terminal-based application that allows the network operator an interface into Junos. The other side of mgd is the extensible markup language (XML) remote procedure call (RPC) interface. This provides an API through Junoscript and Netconf to allow for the development of automation applications.

The cli responsibilities are:

Command-line editing

Terminal emulation

Terminal paging

Displaying command and variable completions

Monitoring log files and interfaces

Executing child processes such as ping, traceroute, and ssh

mgd responsibilities include:

Passing commands from the cli to the appropriate daemon

Finding command and variable completions

Parsing commands

It’s interesting to note that the majority of the Junos operational commands use XML to pass data. To see an example of this, simply add the pipe command display xml to any command. Let’s take a look at a simple command such as show isis adjacency:

{master}

dhanks@R1-RE0> show isis adjacency

Interface System L State Hold (secs) SNPA

ae0.1 R2-RE0 2 Up 23

So far everything looks normal. Let’s add the display xml to take a closer look:

{master}

dhanks@R1-RE0> show isis adjacency | display xml

<rpc-reply xmlns:junos="http://xml.juniper.net/junos/11.4R1/junos">

<isis-adjacency-information xmlns="http://xml.juniper.net/junos/11.4R1/junos-routing" junos:style="brief">

<isis-adjacency>

<interface-name>ae0.1</interface-name>

<system-name>R2-RE0</system-name>

<level>2</level>

<adjacency-state>Up</adjacency-state>

<holdtime>22</holdtime>

</isis-adjacency>

</isis-adjacency-information>

<cli>

<banner>{master}</banner>

</cli>

</rpc-reply>

As you can see, the data is formatted in XML and received from mgd via RPC.
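Because the reply is plain XML, an automation script can consume it with any XML parser. The following Python sketch uses only the standard library and the reply shown above pasted in as a string; the helper names `local_name` and `isis_adjacencies` are hypothetical, and how the reply is fetched from the router (for example over NETCONF) is deliberately left out.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

# The reply from the listing above, pasted in as a string.
REPLY = """\
<rpc-reply xmlns:junos="http://xml.juniper.net/junos/11.4R1/junos">
  <isis-adjacency-information xmlns="http://xml.juniper.net/junos/11.4R1/junos-routing" junos:style="brief">
    <isis-adjacency>
      <interface-name>ae0.1</interface-name>
      <system-name>R2-RE0</system-name>
      <level>2</level>
      <adjacency-state>Up</adjacency-state>
      <holdtime>22</holdtime>
    </isis-adjacency>
  </isis-adjacency-information>
  <cli>
    <banner>{master}</banner>
  </cli>
</rpc-reply>
"""


def local_name(tag: str) -> str:
    """Drop the '{namespace}' prefix that ElementTree puts in front of tags."""
    return tag.rsplit("}", 1)[-1]


def isis_adjacencies(xml_text: str) -> List[Dict[str, str]]:
    """Return one dict per <isis-adjacency> element, keyed by child tag name."""
    root = ET.fromstring(xml_text)
    adjacencies = []
    for element in root.iter():
        if local_name(element.tag) == "isis-adjacency":
            adjacencies.append(
                {local_name(child.tag): (child.text or "").strip() for child in element}
            )
    return adjacencies


for adjacency in isis_adjacencies(REPLY):
    print(adjacency["interface-name"], adjacency["system-name"], adjacency["adjacency-state"])
```

Matching on the local tag name rather than the full namespace is a design choice made here because the namespace URI embeds the Junos version (11.4R1 in this reply), so a hard-coded namespace would stop matching after an upgrade.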

This feature (available since the beginning of Junos) is a very clever mechanism of separation between the data model and the data processing, and it turns out to be a great asset in our newly found network automation era: together with the NETCONF protocol, it gives Junos the ability to remotely manage and configure the MX in an efficient manner.

Routing protocol daemon

The routing protocol daemon (rpd) handles all of the routing protocols configured within Junos. At a high level, its responsibilities are receiving routing advertisements and updates, maintaining the routing table, and installing active routes into the forwarding table. In order to maintain process separation, each routing protocol configured on the system runs as a separate task within rpd. The other responsibility of rpd is to exchange information with the Junos kernel to receive interface modifications, send route information, and send interface changes.

Let’s take a peek into rpd and see what’s going on. The hidden command set task accounting toggles CPU accounting on and off:

{master}

dhanks@R1-RE0> set task accounting on

Task accounting enabled.

Now we’re good to go. Junos is currently profiling daemons and tasks to get a better idea of what’s using the Routing Engine CPU. Let’s wait a few minutes for it to collect some data.

We can now use show task accounting to see the results:

{master}

dhanks@R1-RE0> show task accounting

Task accounting is enabled.

Task Started User Time System Time Longest Run

Scheduler 265 0.003 0.000 0.000

Memory 2 0.000 0.000 0.000

hakr 1 0.000 0 0.000

ES-IS I/O./var/run/ppmd_c 6 0.000 0 0.000

IS-IS I/O./var/run/ppmd_c 46 0.000 0.000 0.000

PIM I/O./var/run/ppmd_con 9 0.000 0.000 0.000

IS-IS 90 0.001 0.000 0.000

BFD I/O./var/run/bfdd_con 9 0.000 0 0.000

Mirror Task.128.0.0.6+598 33 0.000 0.000 0.000

KRT 25 0.000 0.000 0.000

Redirect 1 0.000 0.000 0.000

MGMT_Listen./var/run/rpd_ 7 0.000 0.000 0.000

SNMP Subagent./var/run/sn 15 0.000 0.000 0.000

Not too much going on here, but you get the idea. The daemons and tasks currently running within rpd are present and accounted for.

Once you’ve finished debugging, make sure to turn off accounting:

{master}

dhanks@R1-RE0> set task accounting off

Task accounting disabled.

Warning

The set task accounting command is hidden for a reason. It’s possible to put additional load on the Junos kernel while accounting is turned on. It isn’t recommended to run this command on a production network unless instructed by JTAC. Again, after your debugging is finished, don’t forget to turn it back off with set task accounting off.

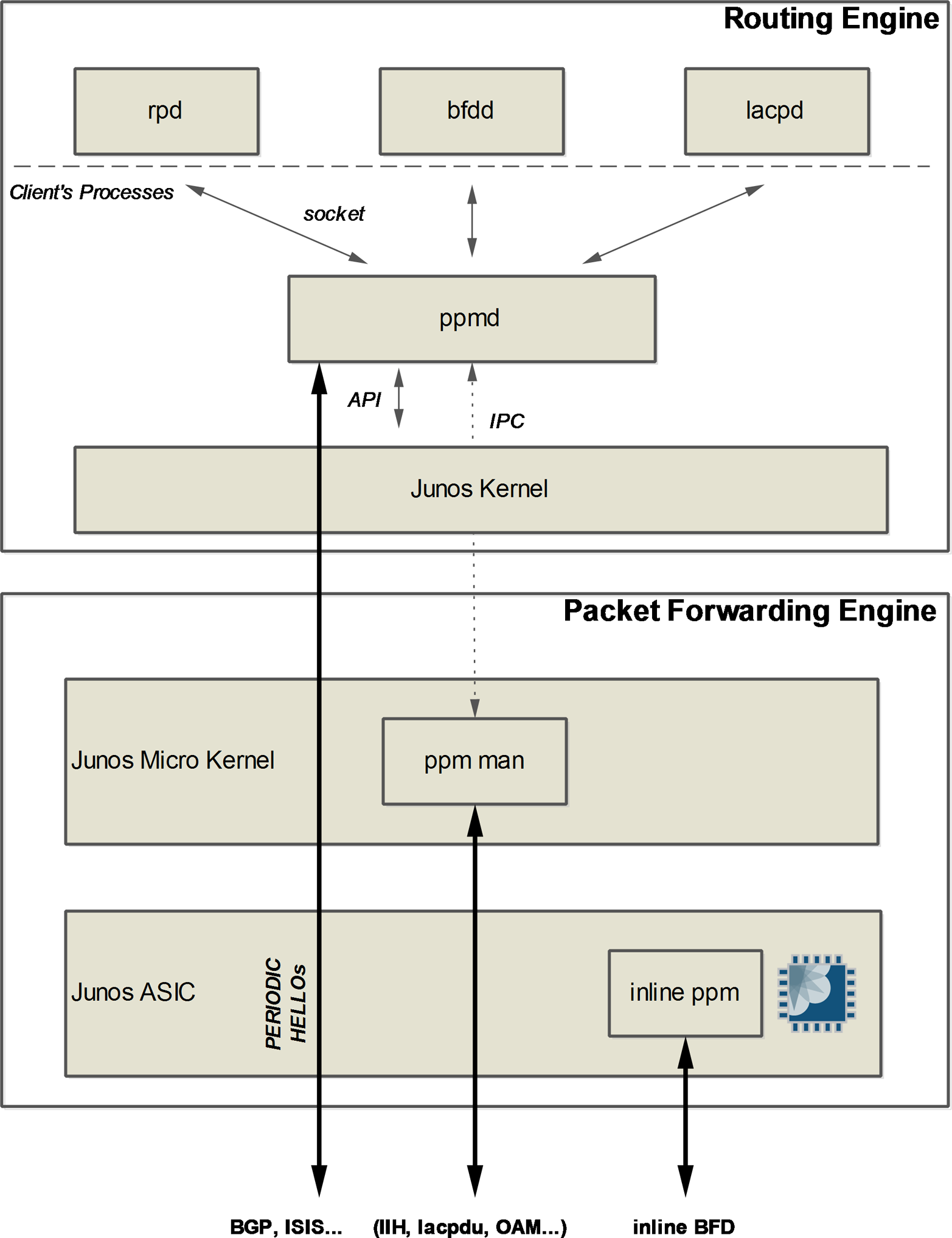

Periodic packet management daemon

Periodic packet management (ppmd) is a specific process dedicated to handling and managing Hello packets from several protocols. In the first Junos releases, RPD managed the adjacency state. Each task, such as OSPF and IS-IS, was in charge of receiving and sending periodic packets and maintaining the timers of each adjacency. In some configurations, in large-scale environments with aggressive timers (close to one second), RPD could experience scheduler slip events, which broke the real-time behavior required by the periodic hellos.

Juniper decided to put the management of Hello packets outside RPD in order to improve stability and reliability in scaled environments. Another goal was to provide subsecond failure detection by allowing new protocols like BFD to propose millisecond holding times.

ppmd was first developed for the IS-IS and OSPF protocols, as part of the routing daemon process. You can use the following command to check which RPD tasks have delegated their hello management to ppmd:

jnpr@R1> show task | match ppmd_control

39 ES-IS I/O./var/run/ppmd_control 40 <>

39 IS-IS I/O./var/run/ppmd_control 39 <>

40 PIM I/O./var/run/ppmd_control 41 <>

40 LDP I/O./var/run/ppmd_control 16 <>

ppmd was later extended to support other protocols, including LACP, BFD, VRRP, and OAM LFM. These last protocols are not coded within RPD but have a dedicated, correspondingly named process: lacpd, bfdd, vrrpd, lfmd, and so on.

The design goal of ppmd is to be as simple as possible toward its clients (RPD, LACP, BFD...). In other words, it notifies the client processes only when there is an adjacency change, or to send back gathered statistics.

For several years now, ppmd has no longer been a single process hosted on the Routing Engine; it has been developed to work in a distributed manner. ppmd runs on the Routing Engine but also on the CPU of each Trio line card, where it is called PPM Manager, also known as ppm man. The following PFE command shows the ppm man thread on a line card CPU:

NPC11(R1 vty)# show threads

[...]

54 M asleep PPM Manager 4664/8200 0/0/2441 ms 0%

The motivation for delegating some control processing to the line card CPU originated with the emergence of subsecond protocols like BFD. More recently, the Trio line card gained a third, enhanced version of ppm, also driven by the BFD protocol in scaled environments, which is called inline ppm. In this case, the Junos OS pushes the session management out to the packet forwarding engines themselves.

To check which adjacencies are delegated to hardware, you can use the following hidden commands:

/* all adjacencies managed by ppmd */

jnpr@R1> show ppm adjacencies

Protocol Hold time (msec)

VRRP 9609

LDP 15000

LDP 15000

ISIS 9000

ISIS 27000

PIM 105000

PIM 105000

LACP 3000

LACP 3000

LACP 3000

LACP 3000

Adjacencies: 11, Remote adjacencies: 4

/* all adjacencies managed by remote ppmd (ppm man or inline ppmd) */

jnpr@R1> show ppm adjacencies remote

Protocol Hold time (msec)

LACP 3000

LACP 3000

LACP 3000

LACP 3000

Adjacencies: 4, Remote adjacencies: 4
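When the adjacency list grows, it can help to summarize this output per protocol. The Python sketch below is a minimal illustration that works on captured text; the function name `count_adjacencies` is hypothetical, and the two-column layout (protocol, then hold time in milliseconds) is assumed from the listing above rather than taken from any documented format.

```python
from collections import Counter

# Captured output of "show ppm adjacencies", as shown in the listing above.
SAMPLE = """\
Protocol   Hold time (msec)
VRRP       9609
LDP        15000
LDP        15000
ISIS       9000
ISIS       27000
PIM        105000
PIM        105000
LACP       3000
LACP       3000
LACP       3000
LACP       3000

Adjacencies: 11, Remote adjacencies: 4
"""


def count_adjacencies(output: str) -> Counter:
    """Count adjacencies per protocol in 'show ppm adjacencies' text."""
    counts = Counter()
    for line in output.splitlines():
        fields = line.split()
        # Data rows are assumed to be exactly "<protocol> <hold time>";
        # the header and the summary line have more fields and are skipped.
        if len(fields) == 2 and fields[1].isdigit():
            counts[fields[0]] += 1
    return counts


for protocol, total in sorted(count_adjacencies(SAMPLE).items()):
    print(f"{protocol}: {total}")
```

Running the same function on the output of the remote variant of the command and comparing the two counters shows which protocols are handled by ppm man or inline ppm; in the sample above, that is the four LACP adjacencies.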

The ppm delegation and inline ppm features are enabled by default, but can be turned off. In the following configuration, only the ppmd instance of the Routing Engine will work.

set routing-options ppm no-delegate-processing

set routing-options ppm no-inline-processing

Note

Why disable the ppm delegation features?

Protocol delegation is not compatible with the embedded tcpdump tool (monitor traffic interface). You cannot capture control plane packets that are managed by ppm man or inline ppmd. So for lab testing or during a maintenance window, it can be helpful to temporarily disable the delegation/inline modes in order to capture packets via the monitor traffic interface command.

Figure 1-4 illustrates the relationship of ppmd instances with other Junos processes.

Figure 1-4. PPM architecture

Device control daemon

The device control daemon (dcd) is responsible for configuring interfaces based on the current configuration and available hardware. One feature of Junos is being able to configure nonexistent hardware, as the assumption is that the hardware can be added at a later date and “just work.” An example is the expectation that you can configure set interfaces ge-1/0/0.0 family inet address 192.168.1.1/24 and commit. Assuming there’s no hardware in FPC1, this configuration will not do anything. As soon as hardware is installed into FPC1, the first port will be configured immediately with the address 192.168.1.1/24.

Chassis daemon (and friends)

The chassis daemon (chassisd) supports all chassis, alarm, and environmental processes. At a high level, this includes monitoring the health of hardware, managing a real-time database of hardware inventory, and coordinating with the alarm daemon (alarmd) and the craft daemon (craftd) to manage alarms and LEDs.

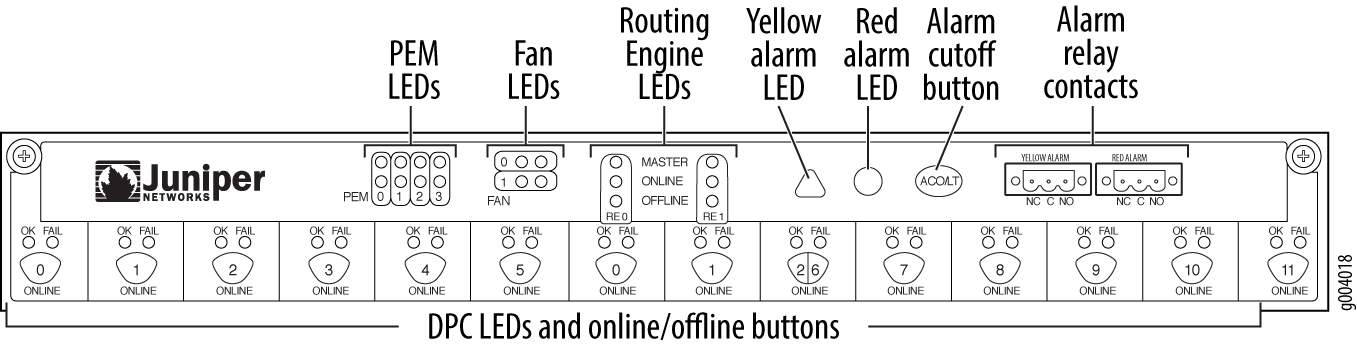

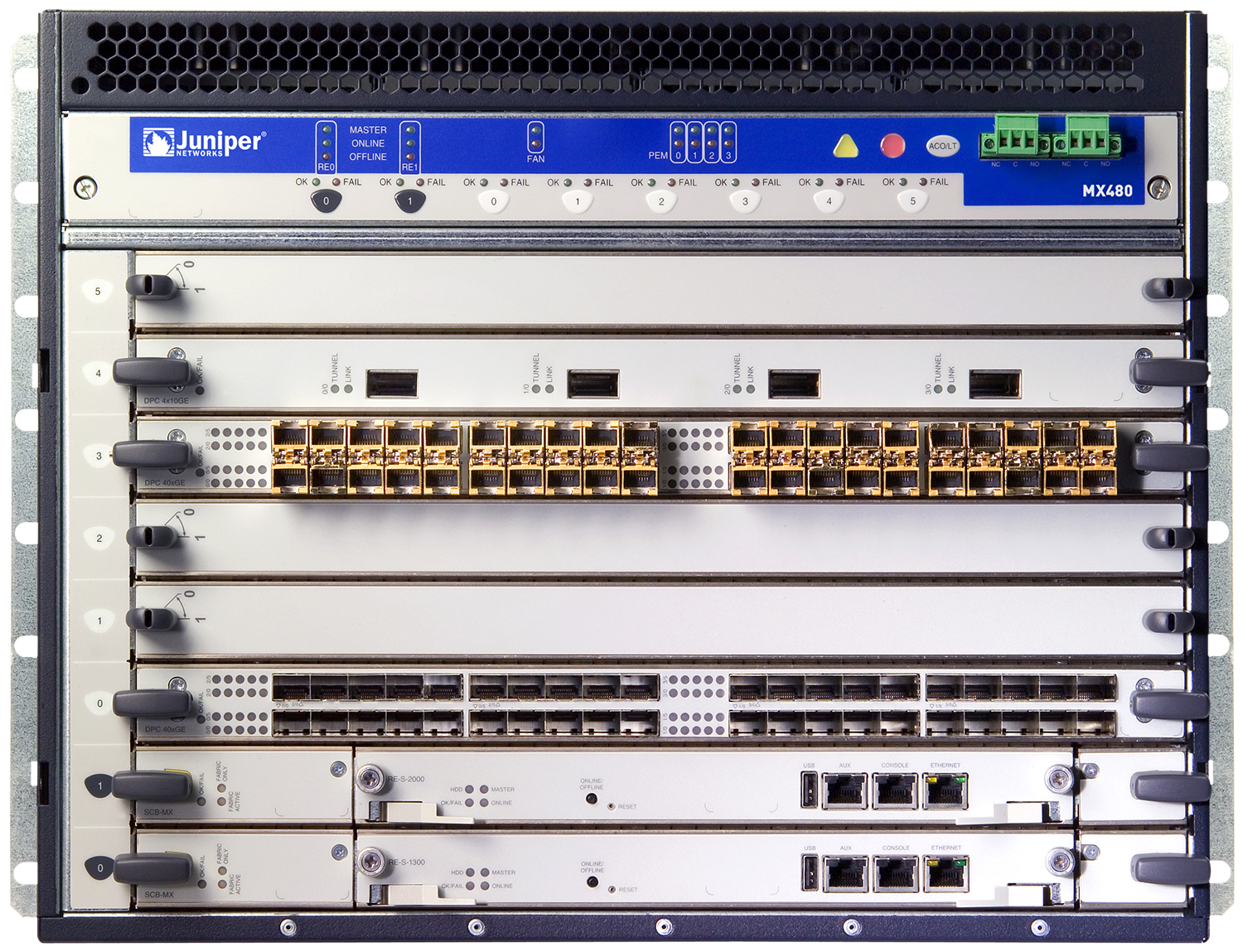

It should all seem self-explanatory except for craftd; the craft interface is the front panel of the device, as shown in Figure 1-5. Let’s take a closer look at the MX960 craft interface.

Figure 1-5. Juniper MX960 craft interface

The craft interface is a collection of buttons and LED lights that display the current status of the hardware and alarms. The same information can also be obtained from the command line:

dhanks@R1-RE0> show chassis craft-interface

Front Panel System LEDs:

Routing Engine 0 1

--------------------------

OK * *

Fail . .

Master * .

Front Panel Alarm Indicators:

-----------------------------

Red LED .

Yellow LED .

Major relay .

Minor relay .

Front Panel FPC LEDs:

FPC 0 1 2

------------------

Red . . .

Green . * *

CB LEDs:

CB 0 1

--------------

Amber . .

Green * *

PS LEDs:

PS 0 1 2 3

--------------------

Red . . . .

Green * . . .

Fan Tray LEDs:

FT 0

----------

Red .

Green *

One final responsibility of chassisd is monitoring the power and cooling environmentals. chassisd constantly monitors the voltages of all components within the chassis and will send alerts if the voltage crosses any thresholds. The same is true for the cooling. The chassis daemon constantly monitors the temperature on all of the different components and chips, as well as fan speeds. If anything is out of the ordinary, chassisd will create alerts. Under extreme temperature conditions, chassisd may also shut down components to avoid damage.

Routing Sockets

Routing sockets are a UNIX mechanism for controlling the routing table. The Junos kernel takes this same mechanism and extends it with the additional attributes needed to create a carrier-class network operating system.

Figure 1-6. Routing socket architecture

At a high level, there are two actors when using routing sockets: state producer and state consumer. The rpd daemon is responsible for processing routing updates and thus is the state producer. Other daemons are considered state consumers because they process information received from the routing sockets.

Let’s take a peek into the routing sockets and see what happens when we configure ge-1/0/0.0 with an IP address of 192.168.1.1/24. Using the rtsockmon command from the shell will allow us to see the commands being pushed to the kernel from the Junos daemons:

{master}

dhanks@R1-RE0> start shell

dhanks@R1-RE0% rtsockmon -st

sender flag type op

[16:37:52] dcd P iflogical add ge-1/0/0.0 flags=0x8000

[16:37:52] dcd P ifdev change ge-1/0/0 mtu=1514 dflags=0x3

[16:37:52] dcd P iffamily add inet mtu=1500 flags=0x8000000200000000

[16:37:52] dcd P nexthop add inet 192.168.1.255 nh=bcst

[16:37:52] dcd P nexthop add inet 192.168.1.0 nh=recv

[16:37:52] dcd P route add inet 192.168.1.255

[16:37:52] dcd P route add inet 192.168.1.0

[16:37:52] dcd P route add inet 192.168.1.1

[16:37:52] dcd P nexthop add inet 192.168.1.1 nh=locl

[16:37:52] dcd P ifaddr add inet local=192.168.1.1

[16:37:52] dcd P route add inet 192.168.1.1 tid=0

[16:37:52] dcd P nexthop add inet nh=rslv flags=0x0

[16:37:52] dcd P route add inet 192.168.1.0 tid=0

[16:37:52] dcd P nexthop change inet nh=rslv

[16:37:52] dcd P ifaddr add inet local=192.168.1.1 dest=192.168.1.0

[16:37:52] rpd P ifdest change ge-1/0/0.0, af 2, up, pfx 192.168.1.0/24

Note

We configured the interface ge-1/0/0 in a different terminal window and committed the change while the rtsockmon command was running.

The command rtsockmon is a Junos shell command that gives the user visibility into the messages being passed by the routing socket. Each routing socket message is broken into four major components: sender, type, operation, and arguments. The sender field identifies which daemon is writing into the routing socket. The type identifies which attribute is being modified. The operation field shows what is actually being performed. There are three basic operations: add, change, and delete. The last field is the arguments passed to the Junos kernel. These are sets of key and value pairs that are being changed.

In the previous example, you can see how dcd interacts with the routing socket to configure ge-1/0/0.0 and assign an IPv4 address:

dcd creates a new logical interface (IFL).

dcd changes the interface device (IFD) to set the proper MTU.

dcd adds a new interface family (IFF) to support IPv4.

dcd sets the nexthop, broadcast, and other attributes that are needed for the RIB and FIB.

dcd adds the interface address (IFA) of 192.168.1.1.

rpd finally adds a route for 192.168.1.1 and brings it up.
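To make the four components concrete, here is a small Python sketch that splits captured rtsockmon lines into their fields. It is an illustration only: `RtsockMessage` and `parse_rtsockmon_line` are hypothetical names, and the whitespace-separated layout (timestamp, sender, flag, type, operation, then free-form arguments) is assumed from the listing earlier in this section.

```python
from typing import NamedTuple


class RtsockMessage(NamedTuple):
    timestamp: str   # e.g. "16:37:52"
    sender: str      # daemon writing into the routing socket
    flag: str        # the "flag" column from the rtsockmon header
    msg_type: str    # attribute being modified, e.g. "iflogical"
    operation: str   # add, change, or delete
    arguments: str   # remaining text, passed to the kernel


def parse_rtsockmon_line(line: str) -> RtsockMessage:
    """Split one line of 'rtsockmon -st' output into its components."""
    parts = line.split(None, 5)  # at most six fields; arguments stay intact
    if len(parts) < 5 or not parts[0].startswith("["):
        raise ValueError(f"not an rtsockmon message line: {line!r}")
    timestamp, sender, flag, msg_type, operation = parts[:5]
    arguments = parts[5] if len(parts) > 5 else ""
    return RtsockMessage(
        timestamp.strip("[]"), sender, flag, msg_type, operation, arguments
    )


# Two lines copied from the capture shown earlier.
CAPTURE = [
    "[16:37:52] dcd P iflogical add ge-1/0/0.0 flags=0x8000",
    "[16:37:52] rpd P ifdest change ge-1/0/0.0, af 2, up, pfx 192.168.1.0/24",
]

for raw in CAPTURE:
    message = parse_rtsockmon_line(raw)
    print(message.sender, message.msg_type, message.operation)
```

Grouping the parsed messages by sender reproduces the walk-through above: every message except the last comes from dcd, and the final ifdest change comes from rpd.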

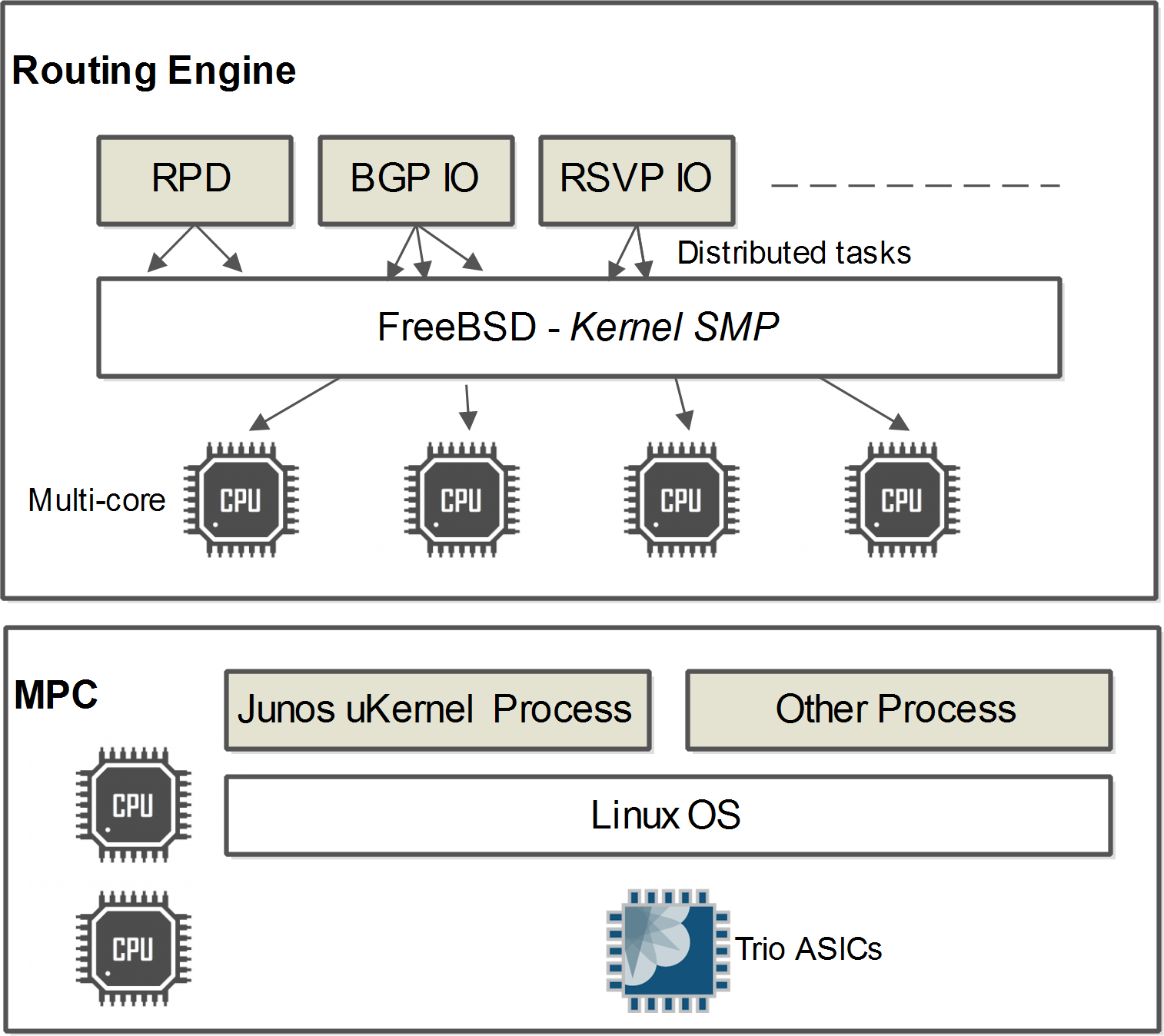

Junos OS Modernization

Starting with Junos 14.2, Juniper has launched its Junos OS modernization program. The aim is to provide more scalability, faster boots and commits, convergence improvements, and so on.

This huge project has been phased and the key steps are:

RPD 64 bits: even though 64-bit Junos has been available since the introduction of Routing Engines with 64-bit processors, the RPD daemon was still a 32-bit process, which cannot address more than 4 GB of memory. Starting with Junos 14.1, you can explicitly turn on the RPD 64-bit mode, allowing RPD to address more memory on a 64-bit RE. It is very useful for environments that require a large number of routes in the RIB:

{master}[edit system]

jnpr@R1# set processes routing force-64-bit

FreeBSD upgrade and Junos independence: As of release 15.1, Junos becomes totally autonomous with respect to the FreeBSD operating system. In addition, FreeBSD has also been upgraded to version 10 to support recent OS enhancements (like Kernel SMP). Junos and FreeBSD can be upgraded independently, allowing smarter installation packaging and a quicker reaction to FreeBSD updates (security patches, new OS features, etc.).

Kernel SMP (Symmetric Multi-Processing) support: recently introduced in Junos 15.1.

RPD modularity: RPD will no longer be a monolithic process and instead will be split into several processes to introduce a clean separation between I/O modules and the protocols themselves. This separation will begin with the BGP and RSVP protocols at Junos 16.x.

RPD multi-core: The complete multi-core system infrastructure is scheduled after Junos 16.x.

Note

Note that starting with release 15.x, the performance of the Junos OS is dramatically improved, especially in terms of convergence.

The micro-kernel of the MPC is also targeted by the Junos modernization program. The new MPCs, starting with NG-MPC2 and NG-MPC3, support a new multi-core processor with a customized Linux operating system, as shown in Figure 1-7. (The previous micro-kernel becomes a process running on top of the Linux OS.) This new system configuration of the MPC allows more modularity, and will allow future processes, such as the telemetry process, to be implemented in the MPC.

Figure 1-7. Junos modernization

Juniper MX Chassis

Figure 1-8. Juniper MX family

Ranging from virtual MX (vMX) to 45U, the MX comes in many shapes and configurations. From left to right: vMX, MX5/10/40/80, MX104, MX240, MX480, MX960, MX2010, and MX2020. The MX240 and higher models have chassis that house all components such as line cards, Routing Engines, and switching fabrics. The MX104 and below are considered midrange and only accept interface modules.

| Model | DPC capacity | MPC capacity |
|---|---|---|
| vMX | N/A | 160 Gbps |
| MX5 | N/A | 20 Gbps |
| MX10 | N/A | 40 Gbps |
| MX40 | N/A | 60 Gbps |
| MX80 | N/A | 80 Gbps |
| MX104 | N/A | 80 Gbps |
| MX240 | 240 Gbps | 1.92 Tbps |
| MX480 | 480 Gbps | 5.12 Tbps |
| MX960 | 960 Gbps | Scale up to 9.92 Tbps |
| MX2010 | N/A | Scale up to 40 Tbps |
| MX2020 | N/A | 80 Tbps |

Note

Note that the DPC and MPC capacity is based on current hardware—4x10GE DPC and MPC5e or MPC6e—and is subject to change in the future as new hardware is released. This information only serves as an example. Always check online at www.juniper.net for the latest specifications.

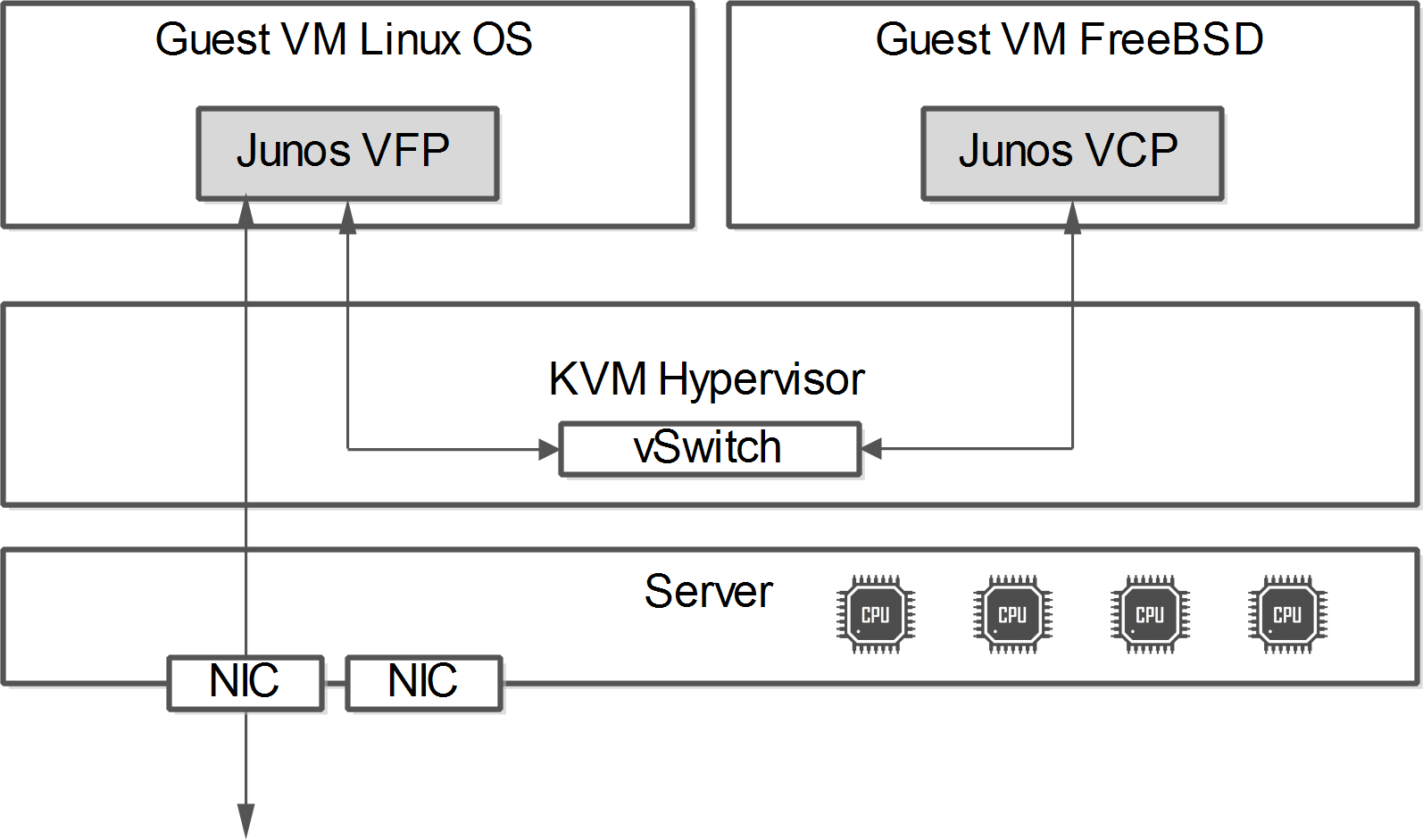

vMX

The book’s MX journey begins with the virtual MX or vMX. While vMX has a dedicated chapter in this book, it’s important to note here that vMX is not just a clone of the control plane of classical Junos. vMX is a complete software router with, like its hardware “father,” a complete separation of the control plane and the forwarding plane. vMX is made of two virtual machines (VMs):

VCP VM: Virtual Control Plane

VFP VM: Virtual Forwarding Plane

Both VMs run on top of a KVM hypervisor; the guest OS of the VCP virtual machine is FreeBSD, and the guest OS of the VFP virtual machine is Linux. The two VMs communicate with each other through the virtual switch of the KVM host OS. Figure 1-9 illustrates the system architecture of the vMX. Chapter 11 describes each component and how the entity operates.

Figure 1-9. The vMX basic system architecture

MX80

The MX80 is a small, compact 2U router that comes in two models: the MX80 and MX80-48T. The MX80 supports two Modular Interface Cards (MICs), whereas the MX80-48T supports 48 10/100/1000BASE-T ports. Because of the small size of the MX80, all of the forwarding is handled by a single Trio chip and there’s no need for a switch fabric. The added bonus is that in lieu of a switch fabric, each MX80 comes with four fixed 10GE ports.

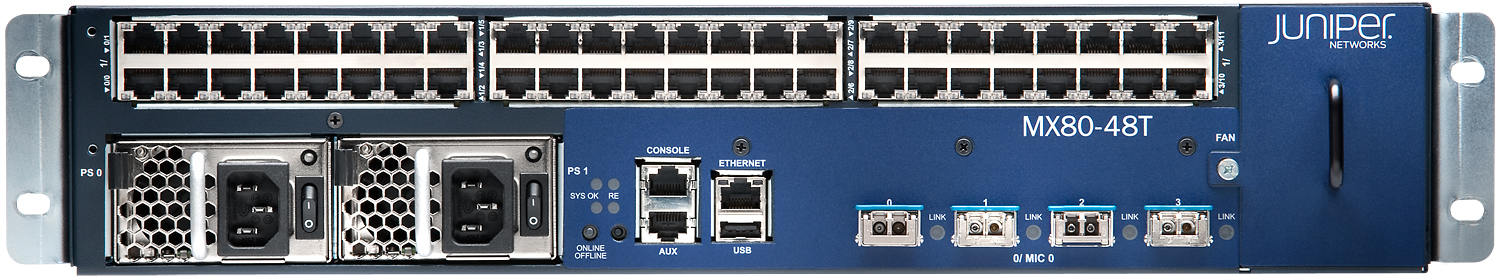

Figure 1-10. The Juniper MX80-48T supports 48x1000BASE-T and 4x10GE ports

Each MX80 comes with field-replaceable, redundant power supplies and fan trays. The power supplies come in both AC and DC. Because the MX80 is so compact, it doesn’t support slots for Routing Engines, Switch Control Boards (SCBs), or FPCs. The Routing Engine is built into the chassis and isn’t replaceable. The MX80 only supports MICs.

Note

The MX80 has a single Routing Engine and currently doesn’t support features such as NSR, NSB, and ISSU.

But don’t let the small size of the MX80 fool you. This is a true hardware-based router based on the Juniper Trio chipset. Here are some of the performance and scaling characteristics at a glance:

55 Mpps

1,000,000 IPv4 prefixes in the Forwarding Information Base (FIB)

4,000,000 IPv4 prefixes in the Routing Information Base (RIB)

16,000 logical interfaces (IFLs)

512,000 MAC addresses

MX80 interface numbering

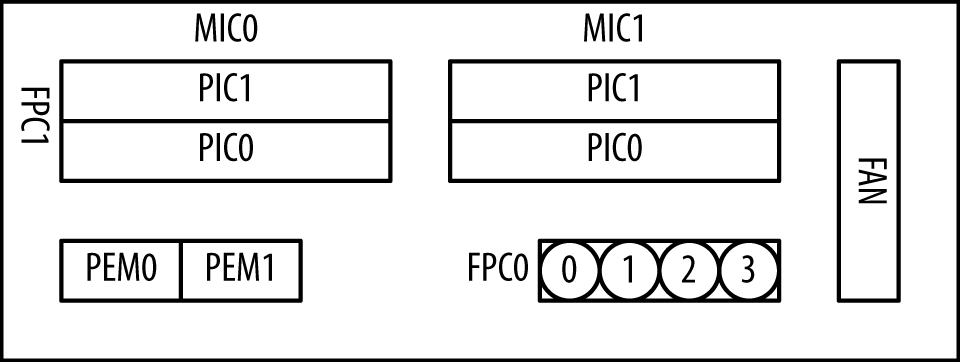

The MX80 has two FPCs: FPC0 and FPC1. FPC0 will always be the four fixed 10GE ports located on the bottom right. The FPC0 ports are numbered from left to right, starting with xe-0/0/0 and ending with xe-0/0/3.

Figure 1-11. Juniper MX80 FPC and PIC locations

Note

The dual power supplies are referred to as a Power Entry Module (PEM): PEM0 and PEM1.

FPC1 is where the MICs are installed. MIC0 is installed on the left side and MIC1 is installed on the right side. Each MIC has two Physical Interface Cards (PICs). Depending on the MIC, such as a 20x1GE or 2x10GE, the total number of ports will vary. Regardless of the number of ports, the port numbering is left to right and always begins with 0.

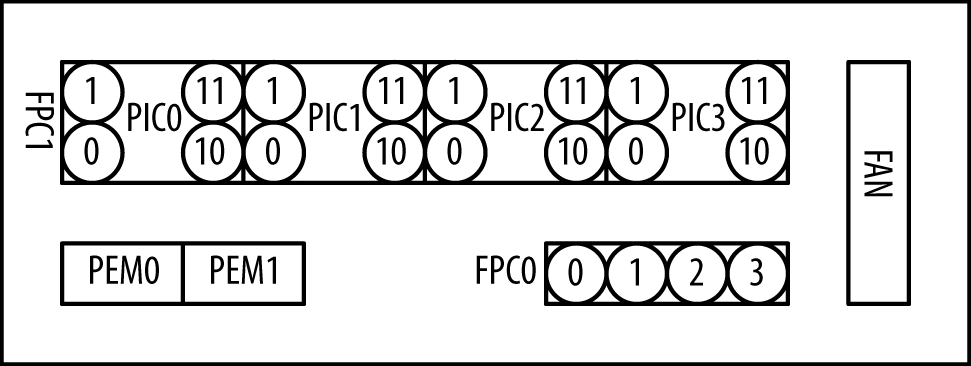

MX80-48T interface numbering

The MX80-48T interface numbering is very similar to the MX80. FPC0 remains the same and refers to the four fixed 10GE ports. The only difference is that FPC1 refers to the 48x1GE ports. FPC1 contains four PICs; the numbering begins at the bottom left, works its way up, and then shifts to the right starting at the bottom again. Each PIC contains 12x1GE ports numbered 0 through 11.

Figure 1-12. Juniper MX80-48T FPC and PIC locations

| FPC | PIC | Interface names |
|---|---|---|
| FPC0 | PIC0 | xe-0/0/0 through xe-0/0/3 |
| FPC1 | PIC0 | ge-1/0/0 through ge-1/0/11 |
| FPC1 | PIC1 | ge-1/1/0 through ge-1/1/11 |
| FPC1 | PIC2 | ge-1/2/0 through ge-1/2/11 |
| FPC1 | PIC3 | ge-1/3/0 through ge-1/3/11 |

With each PIC within FPC1 having 12x1GE ports and a total of four PICs, this brings the total to 48x1GE ports.
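The numbering scheme in the table can be sketched in a few lines of Python. This is only an illustration of the FPC/PIC/port naming described above; the function name `mx80_48t_interfaces` is made up for the example and is not part of any Juniper tool.

```python
def mx80_48t_interfaces():
    """Return the MX80-48T interface names in FPC/PIC/port order."""
    names = []
    # FPC0, PIC0: the four fixed 10GE ports.
    for port in range(4):
        names.append(f"xe-0/0/{port}")
    # FPC1: four PICs, each with 12x1GE ports numbered 0 through 11.
    for pic in range(4):
        for port in range(12):
            names.append(f"ge-1/{pic}/{port}")
    return names


names = mx80_48t_interfaces()
print(len(names))            # 4x10GE + 48x1GE ports
print(names[0], names[-1])   # first and last interface name
```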

The MX80-48T has fixed 48x1GE and 4x10GE ports and doesn’t support MICs. These ports are tied directly to a single Trio chip as there is no switch fabric.

Midrange

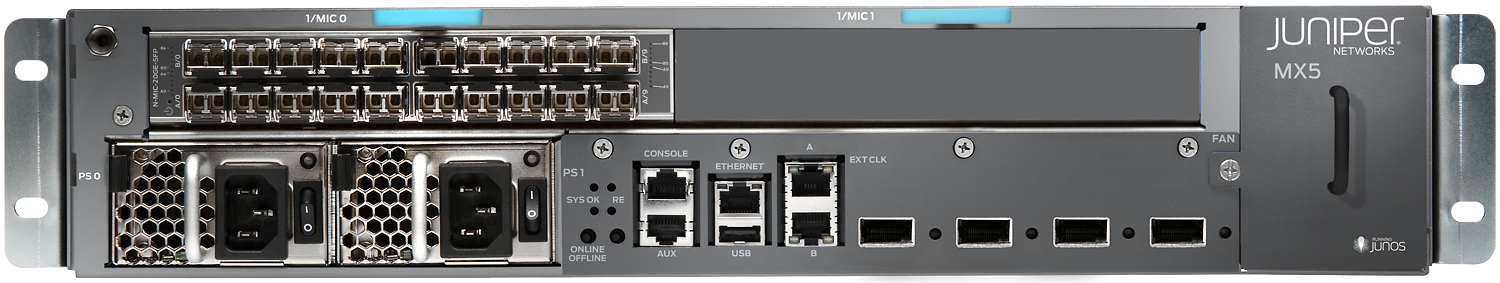

Figure 1-13. Juniper MX5

If the MX80 is still too big of a router, there are licensing options to restrict the number of ports on the MX80. The benefit is that you get all of the performance and scaling of the MX80, but at a fraction of the cost. These licensing options are known as the MX Midrange: the MX5, MX10, MX40, and MX80.

| Model | MIC slot 0 | MIC slot 1 | Fixed 10GE ports | Services MIC |
|---|---|---|---|---|
| MX5 | Available | Restricted | Restricted | Available |
| MX10 | Available | Available | Restricted | Available |
| MX40 | Available | Available | Two ports available | Available |
| MX80 | Available | Available | All four ports available | Available |

Each router is software upgradable via a license. For example, the MX5 can be upgraded to the MX10 or directly to the MX40 or MX80.

When terminating a small number of circuits or Ethernet handoffs, the MX5 through the MX40 are the perfect choice. Although you’re limited in the number of ports, all of the performance and scaling numbers are identical to the MX80. For example, given that the current size of a full Internet routing table is about 420,000 IPv4 prefixes, the MX5 would be able to hold over nine full Internet routing tables in its RIB.
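The arithmetic behind that claim can be checked quickly. The sketch below uses only the figures quoted in this chapter (4,000,000 RIB prefixes, 1,000,000 FIB prefixes, and a full table of roughly 420,000 prefixes); the helper name `full_tables` is invented for the example.

```python
RIB_IPV4_PREFIXES = 4_000_000   # MX80 RIB scale quoted earlier
FIB_IPV4_PREFIXES = 1_000_000   # MX80 FIB scale quoted earlier
FULL_TABLE = 420_000            # approximate full Internet table used in the text


def full_tables(capacity, table_size=FULL_TABLE):
    """Number of complete copies of the table that fit in the capacity."""
    return capacity // table_size


print(full_tables(RIB_IPV4_PREFIXES))  # 9
print(full_tables(FIB_IPV4_PREFIXES))  # 2
```

The "over nine" figure therefore relates to the RIB scale; with the same inputs, the FIB holds a little more than two full tables.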

Keep in mind that the MX5, MX10, and MX40 are really just an MX80. There is no difference in hardware, scaling, or performance. The only caveat is that the MX5, MX10, and MX40 have a different front panel on the router for branding.

The only restriction on the MX5, MX10, and MX40 is which ports are allowed to be configured. The software doesn’t place any sort of bandwidth restrictions on the ports at all. There’s a common misconception that the MX5 is a “5-gig router,” but this isn’t the case. For example, the MX5 comes with a 20x1GE MIC and is fully capable of running each port at line rate.

MX104

The MX104 is a high-density router for pre-aggregation and access. It was designed to be compact and compatible with floor-to-ceiling racks. Although it was optimized for aggregating mobile traffic, the MX104 is also useful as a PE for Enterprise and residential access networks.

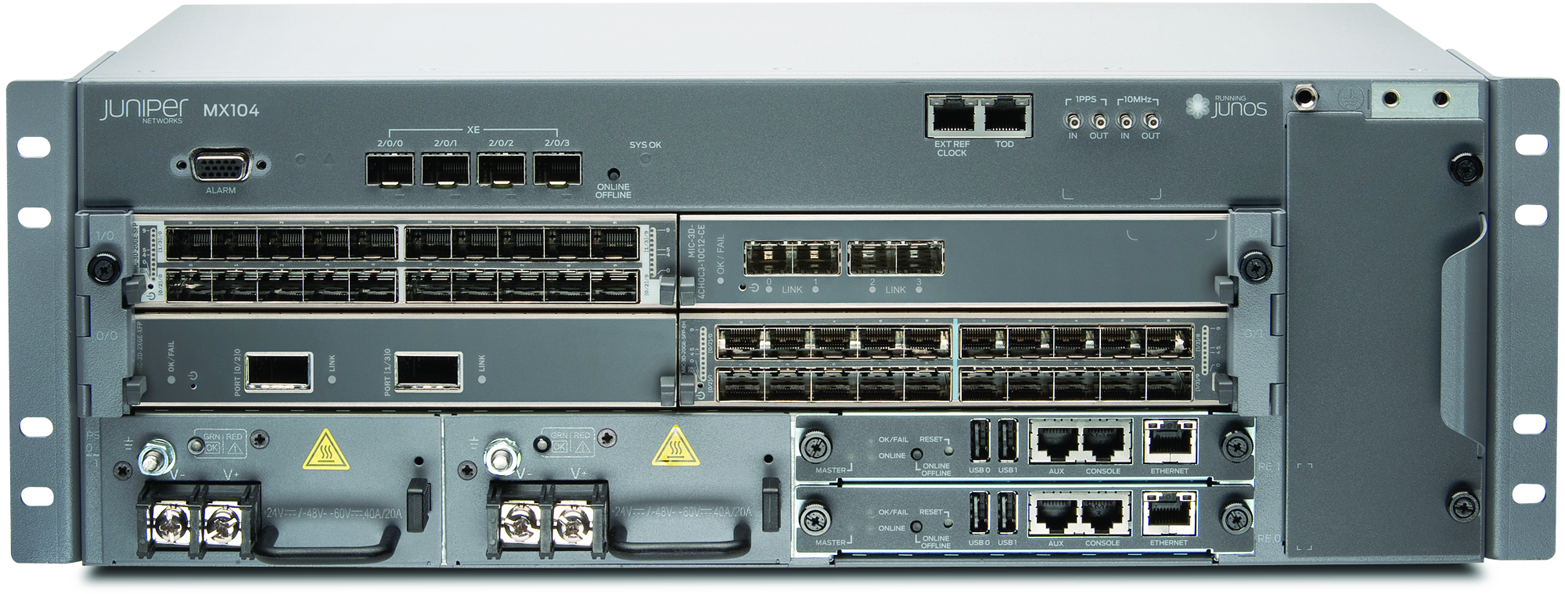

Figure 1-14. Juniper MX104

The MX104 provides redundancy for power and the Routing Engine. The chassis offers four slots to host MICs—these MICs are compatible with those available for the MX5/MX10/MX40 and MX80 routers. The MX104 also provides four built-in 10-Gigabit Ethernet SFP+ ports.

Interface numbering

Each MX104 router has three built-in MPCs, which are represented in the CLI as FPC 0 through FPC 2. The numbering of the MPCs is from bottom to top. MPC 0 and 1 can both host two MICs. MPC 2 hosts a built-in MIC with four 10GE ports. Figure 1-15 illustrates interface numbering on the MX104.

Note

Each MIC can number ports differently, and Figure 1-15 illustrates two types of MICs as examples.

Figure 1-15. Juniper MX104 interface numbering

MX240

The MX240 (see Figure 1-16) is the first router in the MX Series lineup that has a chassis supporting modular Routing Engines, SCBs, and FPCs. The MX240 is 5U tall and supports four horizontal slots. There’s support for one Routing Engine, or optional support for two Routing Engines. Depending on the number of Routing Engines, the MX240 supports either two or three FPCs.

Note

The Routing Engine is installed into an SCB and will be described in more detail later in the chapter.

Figure 1-16. Juniper MX240

To support full redundancy, the MX240 requires two SCBs and Routing Engines. If a single SCB fails, there is enough switch fabric capacity on the other SCB to support the entire router at line rate. This is referred to as 1 + 1 SCB redundancy. In this configuration, only two FPCs are supported.

Alternatively, if redundancy isn’t required, the MX240 can be configured to use a single SCB and Routing Engine. This configuration allows for three FPCs instead of two.

Interface numbering

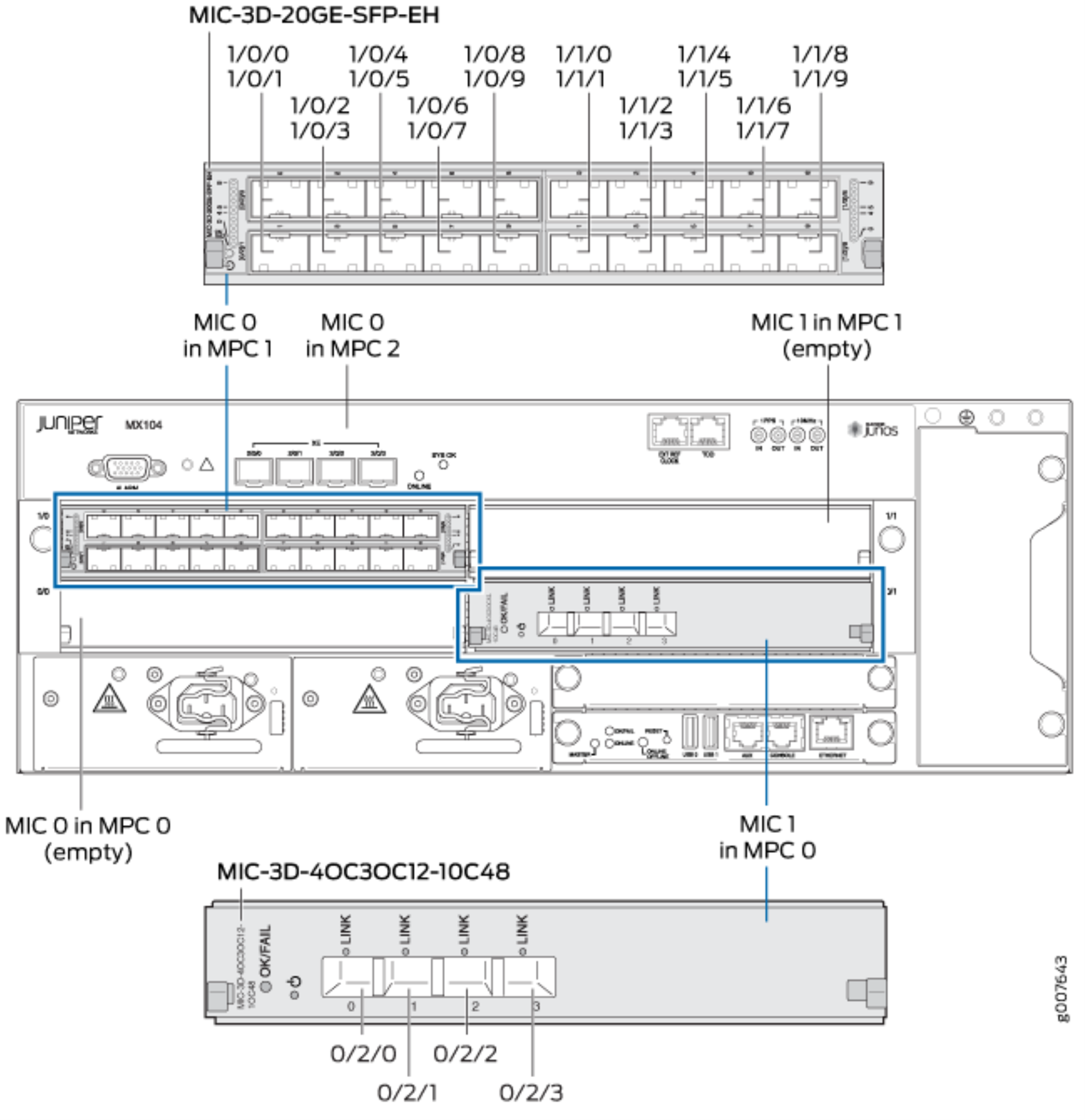

The MX240 is numbered from the bottom up starting with the SCB. The first SCB must be installed into the very bottom slot. The next slot up is a special slot that supports either an SCB or an FPC, and thus begins the FPC numbering at 0. From there, you may install two additional FPCs as FPC1 and FPC2.

Full redundancy

The SCBs must be installed into the very bottom slots to support 1 + 1 SCB redundancy (see Figure 1-17). These slots are referred to as SCB0 and SCB1. When two SCBs are installed, the MX240 supports only two FPCs: FPC1 and FPC2.

Figure 1-17. Juniper MX240 interface numbering with SCB redundancy

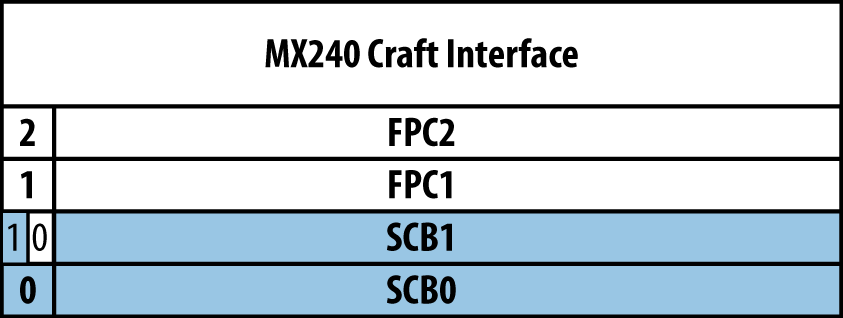

No redundancy

When a single SCB is used, it must be installed into the very bottom slot and obviously doesn’t provide any redundancy; however, three FPCs are supported. In this configuration, the FPC numbering begins at FPC0 and ends at FPC2, as shown in Figure 1-18.

Figure 1-18. Juniper MX240 interface numbering without SCB redundancy
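The two MX240 slot layouts can be summarized in a small Python sketch. It encodes only what the text states (one SCB gives FPC0 through FPC2, two SCBs give FPC1 and FPC2); the function name `mx240_fpc_slots` is hypothetical.

```python
def mx240_fpc_slots(scb_count):
    """Return the usable FPC slot numbers on an MX240 for one or two SCBs."""
    if scb_count == 1:
        # The shared slot above SCB0 is free, so it holds FPC0.
        return [0, 1, 2]
    if scb_count == 2:
        # The shared slot holds SCB1, so FPC0 is not available.
        return [1, 2]
    raise ValueError("the MX240 takes one or two SCBs")


print(mx240_fpc_slots(1))  # no SCB redundancy
print(mx240_fpc_slots(2))  # 1 + 1 SCB redundancy
```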

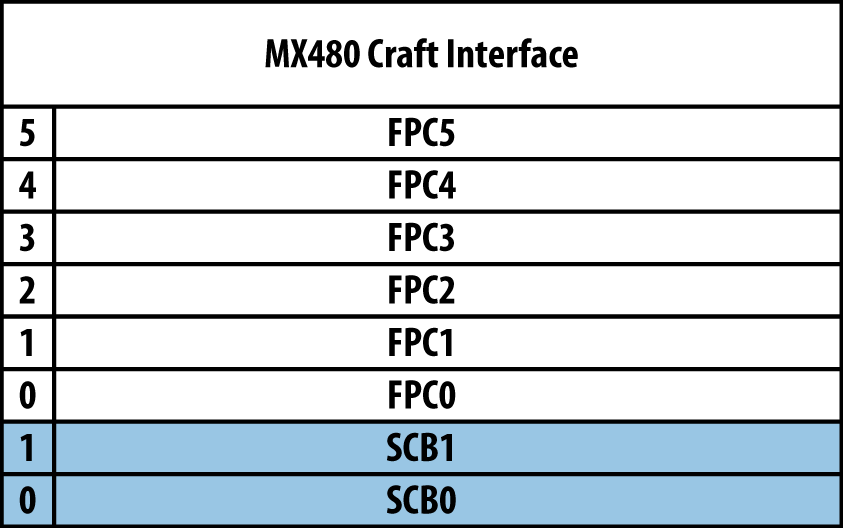

MX480

The MX480 is the big brother to the MX240. There are eight horizontal slots total. It supports two SCBs and Routing Engines as well as six FPCs in only 8U of space. The MX480 tends to be the most popular MX Series router in the Enterprise because six FPCs tends to be the “sweet spot” for the number of slots (see Figure 1-19).

Figure 1-19. Juniper MX480

Like its little brother, the MX480 requires two SCBs and Routing Engines for full redundancy. If a single SCB were to fail, the other SCB would be able to support all six FPCs at line rate.

All components between the MX240 and MX480 are interchangeable. This makes the sparing strategy cost effective and provides FPC investment protection.

Note

There is custom keying on the SCB and FPC slots so that an SCB cannot be installed into an FPC slot and vice versa. In the case where the chassis supports either an SCB or FPC in the same slot, such as the MX240 or MX960, the keying will allow for both.

The MX480 is a bit different from the MX240 and MX960, as it has two dedicated SCB slots that aren’t able to be shared with FPCs.

Interface numbering

The MX480 is numbered from the bottom up (see Figure 1-20). The SCBs are installed into the very bottom of the chassis into SCB0 and SCB1. From there, the FPCs may be installed and are numbered from the bottom up as well.

Figure 1-20. Juniper MX480 interface numbering with SCB redundancy

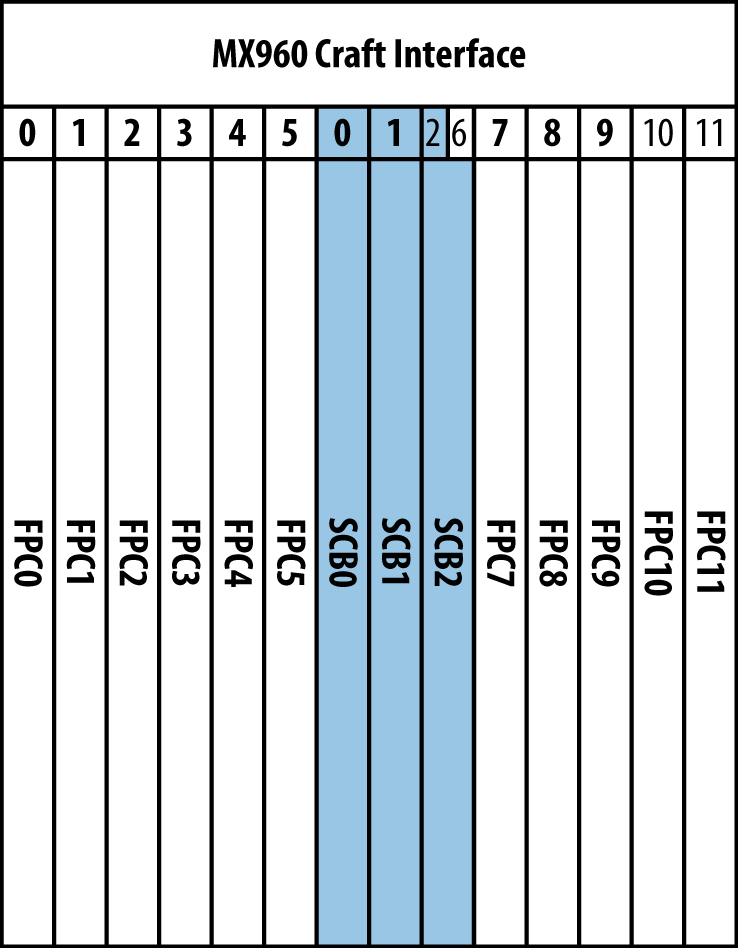

MX960

Some types of traffic require a big hammer. The MX960, the sledgehammer of the MX Series, fills this need. The MX960 is all about scale and performance. It stands at 16U and weighs in at 334 lbs. The SCBs and FPCs are installed vertically into the chassis so that it can support 14 slots side to side.

Figure 1-21. Juniper MX960

Because of the large scale, three SCBs are required for full redundancy. This is referred to as 2 + 1 SCB redundancy. If any SCB fails, the other two SCBs are able to support all 11 FPCs at line rate.

If you like living life on the edge and don’t need redundancy, the MX960 requires at least two SCBs to switch the available 12 FPCs.

Note

The MX960 requires special power supplies that are not interchangeable with the MX240 or MX480.

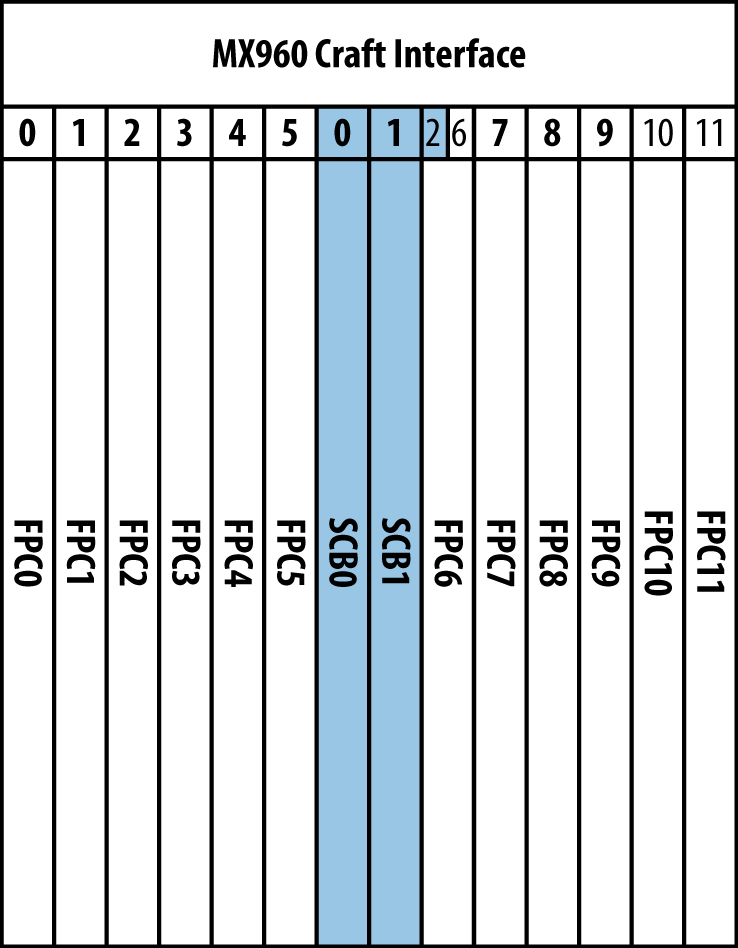

Interface numbering

The MX960 is numbered from the left to the right. The SCBs are installed in the middle, whereas the FPCs are installed on either side. Depending on whether or not you require SCB redundancy, the MX960 is able to support 11 or 12 FPCs.

Full redundancy

The first six slots are reserved for FPCs and are numbered from left to right beginning at 0 and ending with 5, as shown in Figure 1-22. The next two slots are reserved and keyed for SCBs. The next slot is keyed for either an SCB or FPC. In the case of full redundancy, SCB2 needs to be installed into this slot. The next five slots are reserved for FPCs and begin numbering at 7 and end at 11.

Figure 1-22. Juniper MX960 interface numbering with full 2 + 1 SCB redundancy

No redundancy

Running with two SCBs gives you the benefit of being able to switch 12 FPCs at line rate. The only downside is that there’s no SCB redundancy. Just like before, the first six slots are reserved for FPC0 through FPC5. The difference now is that SCB0 and SCB1 are to be installed into the next two slots. Instead of having SCB2, you install FPC6 into this slot. The remaining five slots are reserved for FPC7 through FPC11.

Figure 1-23. Juniper MX960 interface numbering without SCB redundancy
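As a rough sketch of the two MX960 layouts just described, the following Python function lists the 14 slots from left to right. It assumes nothing beyond the slot order given in the text, and the name `mx960_slot_layout` is made up for the example.

```python
def mx960_slot_layout(scb_count):
    """Return the MX960 cards from left to right for two or three SCBs."""
    if scb_count not in (2, 3):
        raise ValueError("this sketch covers only two or three SCBs")
    left = [f"FPC{n}" for n in range(6)]        # FPC0 through FPC5
    scbs = ["SCB0", "SCB1"]                     # slots keyed for SCBs only
    # The next slot is keyed for either an SCB or an FPC.
    shared = "SCB2" if scb_count == 3 else "FPC6"
    right = [f"FPC{n}" for n in range(7, 12)]   # FPC7 through FPC11
    return left + scbs + [shared] + right


for count in (3, 2):
    layout = mx960_slot_layout(count)
    fpcs = [card for card in layout if card.startswith("FPC")]
    print(count, "SCBs:", len(fpcs), "FPCs")
```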

MX2010 and MX2020

The MX2K family (MX2010 and MX2020) is a router family in the MX Series that’s designed to solve the 10G and 100G high port density needs of Content Service Providers (CSP), Multisystem Operators (MSO), and traditional Service Providers. At a glance, the MX2010 supports ten line cards, eight switch fabric boards, and two Routing Engines, and its big brother the MX2020 supports twenty line cards, eight switch fabric boards, and two Routing Engines as well.

The chassis occupies 34RU for MX2010 and 45RU for MX2020 and has front-to-back cooling.

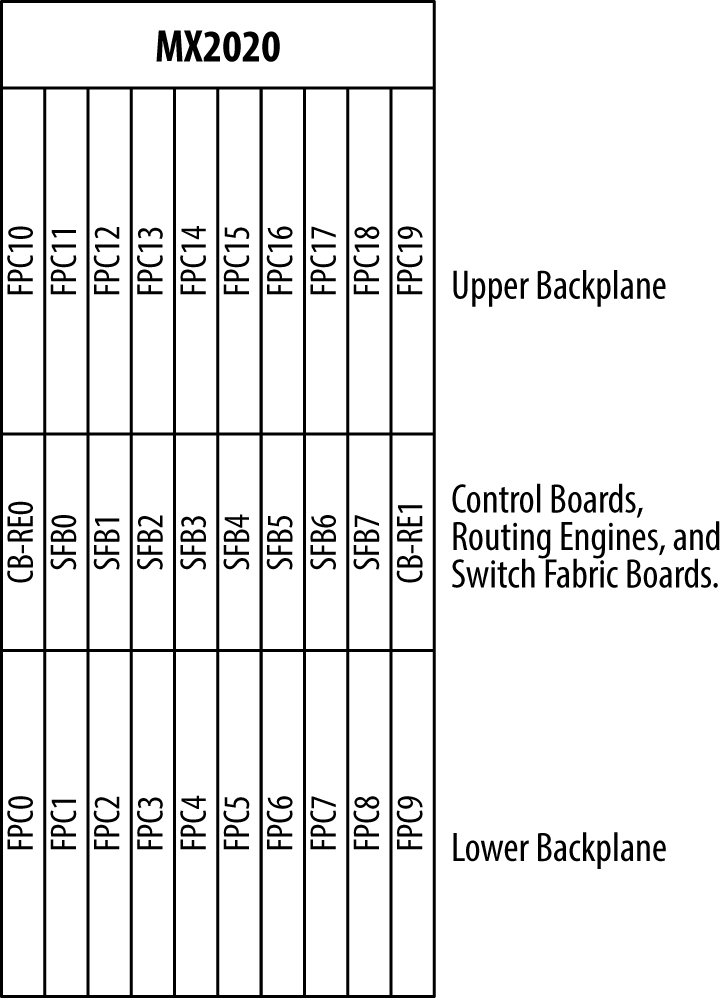

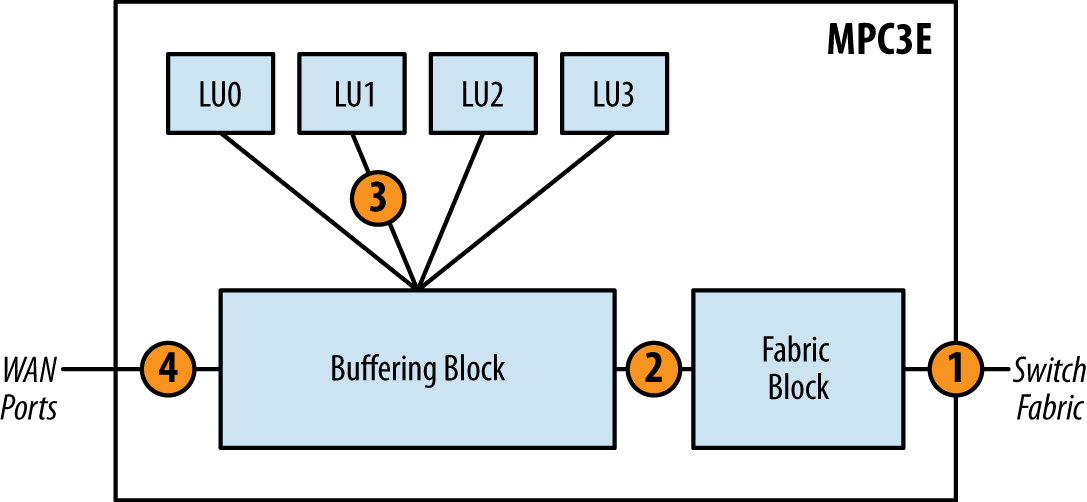

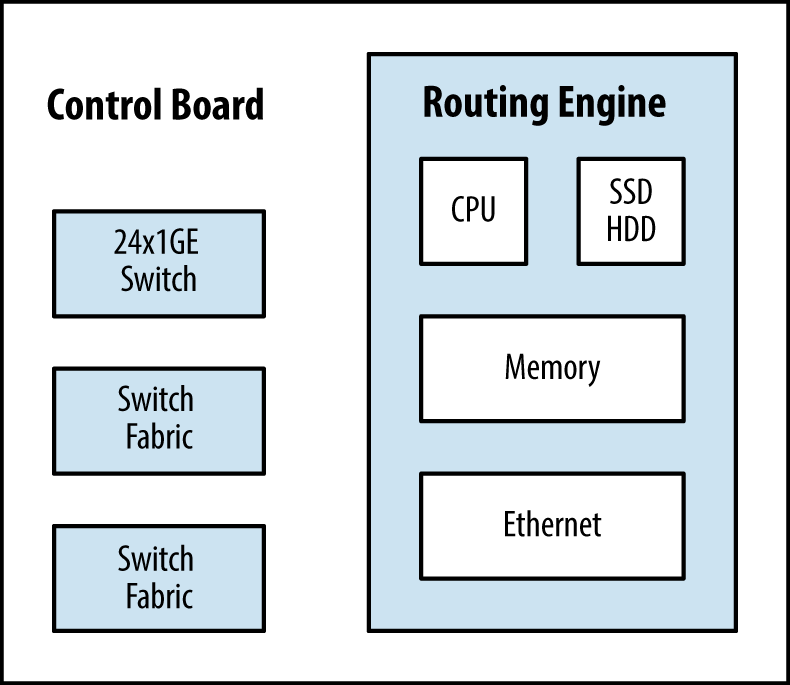

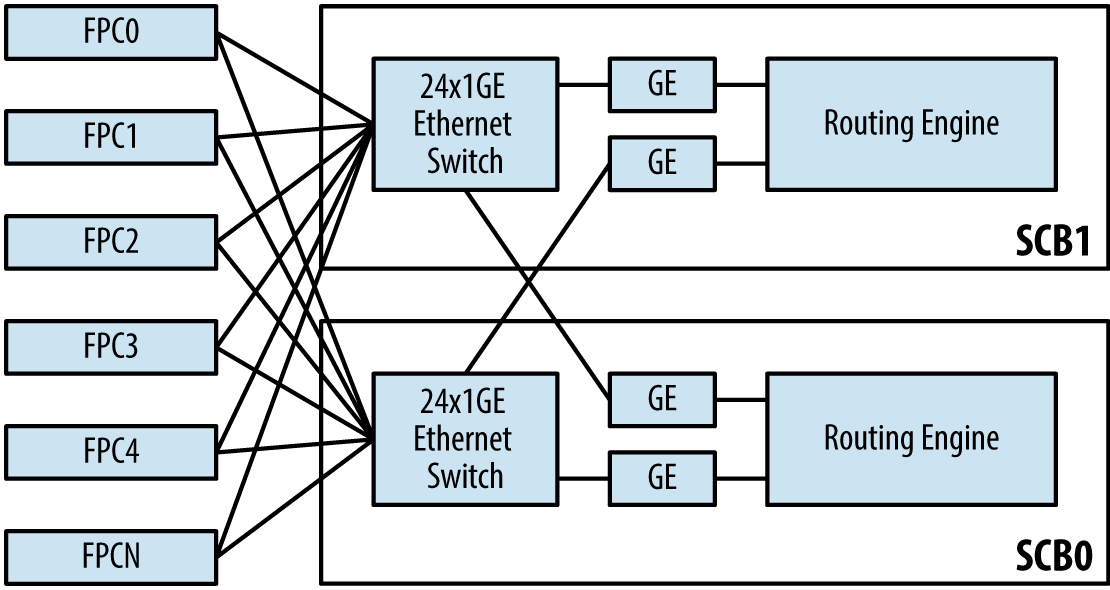

MX2020 architecture

The MX2020 is a standard backplane-based system, albeit at a large scale. There are two backplanes connected together with centralized switch fabric boards (SFB). The Routing Engine and control board is a single unit that consumes a single slot, as illustrated in Figure 1-24 on the far left and right.

Figure 1-24. Illustration of MX2020 architecture

The FPC numbering is the standard Juniper method of starting at the bottom and moving left to right as you work your way up. The SFBs are named similarly, with zero starting on the left and going all the way to seven on the far right. The Routing Engine and control boards are located in the middle of the chassis on the far left and far right.

Switch fabric board

Each backplane has 10 slots that are tied into eight SFBs in the middle of the chassis. Because of the high number of line cards and PFEs the switch fabric must support, a new SFB was created specifically for the MX2020. The SFB is able to support more PFEs and has a much higher throughput compared to the previous SCBs. Recall that the SCB and SCBE present each of their chipsets to Junos as a fabric plane, which can be seen with the show chassis fabric summary command. The new SFB has multiple chipsets as well, but presents them to Junos as an aggregate fabric plane. In other words, each SFB will appear as a single fabric plane within Junos. Each SFB will be in an Active state by default. Let’s take a look at the installed SFBs first:

dhanks@MX2020> show chassis hardware | match SFB

SFB 0 REV 01 711-032385 ZE5866 Switch Fabric Board

SFB 1 REV 01 711-032385 ZE5853 Switch Fabric Board

SFB 2 REV 01 711-032385 ZB7642 Switch Fabric Board

SFB 3 REV 01 711-032385 ZJ3555 Switch Fabric Board

SFB 4 REV 01 711-032385 ZE5850 Switch Fabric Board

SFB 5 REV 01 711-032385 ZE5870 Switch Fabric Board

SFB 6 REV 04 711-032385 ZV4182 Switch Fabric Board

SFB 7 REV 01 711-032385 ZE5858 Switch Fabric Board

There are eight SFBs installed; now let’s take a look at the switch fabric status:

dhanks@MX2020> show chassis fabric summary

Plane State Uptime

0 Online 1 hour, 25 minutes, 59 seconds

1 Online 1 hour, 25 minutes, 59 seconds

2 Online 1 hour, 25 minutes, 59 seconds

3 Online 1 hour, 25 minutes, 59 seconds

4 Online 1 hour, 25 minutes, 59 seconds

5 Online 1 hour, 25 minutes, 59 seconds

6 Online 1 hour, 25 minutes, 59 seconds

7 Online 1 hour, 25 minutes, 59 seconds

Depending on which line cards are being used, only a subset of the eight SFBs need to be present in order to provide a line-rate switch fabric, but this is subject to change with line cards.
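If you collect this output with a script, checking the state of every plane is straightforward. The following Python sketch parses the text of the show chassis fabric summary output shown above; the sample string is copied from that output, the function name `parse_fabric_summary` is hypothetical, and how you retrieve the text from the router is left out.

```python
import re

SAMPLE = """\
Plane   State    Uptime
 0      Online   1 hour, 25 minutes, 59 seconds
 1      Online   1 hour, 25 minutes, 59 seconds
 2      Online   1 hour, 25 minutes, 59 seconds
 3      Online   1 hour, 25 minutes, 59 seconds
 4      Online   1 hour, 25 minutes, 59 seconds
 5      Online   1 hour, 25 minutes, 59 seconds
 6      Online   1 hour, 25 minutes, 59 seconds
 7      Online   1 hour, 25 minutes, 59 seconds
"""


def parse_fabric_summary(text):
    """Map each fabric plane number to its state string."""
    planes = {}
    for line in text.splitlines():
        # Data rows start with the plane number; the header row does not.
        match = re.match(r"\s*(\d+)\s+(\S+)", line)
        if match:
            planes[int(match.group(1))] = match.group(2)
    return planes


planes = parse_fabric_summary(SAMPLE)
not_online = [p for p, state in planes.items() if state != "Online"]
print(len(planes), "planes,", len(not_online), "not online")
```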

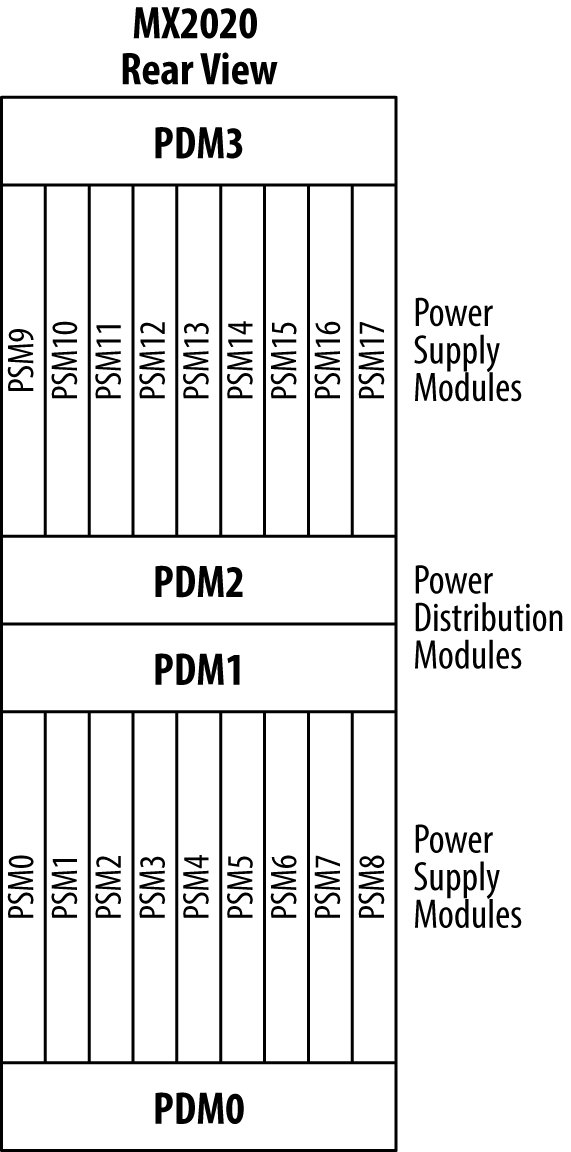

Power supply

The power supply on the MX2020 is a bit different than the previous MX models, as shown in Figure 1-25. The MX2020 power system is split into two sections: top and bottom. The bottom power supplies provide power to the lower backplane line cards, lower fan trays, SFBs, and CB-REs. The top power supplies provide power to the upper backplane line cards and fan trays. The MX2020 provides N + 1 power supply redundancy and N + N feed redundancy. There are two major power components that supply power to the MX2K:

- Power Supply Module

The Power Supply Modules (PSMs) are the actual power supplies that provide power to a given backplane. There are nine PSMs per backplane, but only eight are required to fully power the backplane. Each backplane has 8 + 1 PSM redundancy.

- Power Distribution Module

There are two Power Distribution Modules (PDM) per backplane, providing 1 + 1 PDM redundancy for each backplane. Each PDM contains nine PSMs to provide 8 + 1 PSM redundancy for each backplane.
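The 8 + 1 PSM redundancy can be expressed as a simple check. This is a minimal sketch that assumes a backplane needing all eight PSMs, as stated above; the function name `backplane_power_status` and its return strings are invented for the illustration.

```python
PSMS_INSTALLED = 9   # PSMs per backplane, per the text
PSMS_REQUIRED = 8    # PSMs needed to fully power the backplane


def backplane_power_status(working_psms):
    """Classify a backplane by the number of PSMs still working."""
    if not 0 <= working_psms <= PSMS_INSTALLED:
        raise ValueError("a backplane has between 0 and 9 PSMs")
    if working_psms > PSMS_REQUIRED:
        return "redundant"        # 8 + 1: one PSM can fail
    if working_psms == PSMS_REQUIRED:
        return "no redundancy"    # fully powered, but no spare PSM
    return "insufficient"         # fewer than the eight required


for count in (9, 8, 7):
    print(count, backplane_power_status(count))
```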

Figure 1-25. Illustration of MX2020 power supply architecture

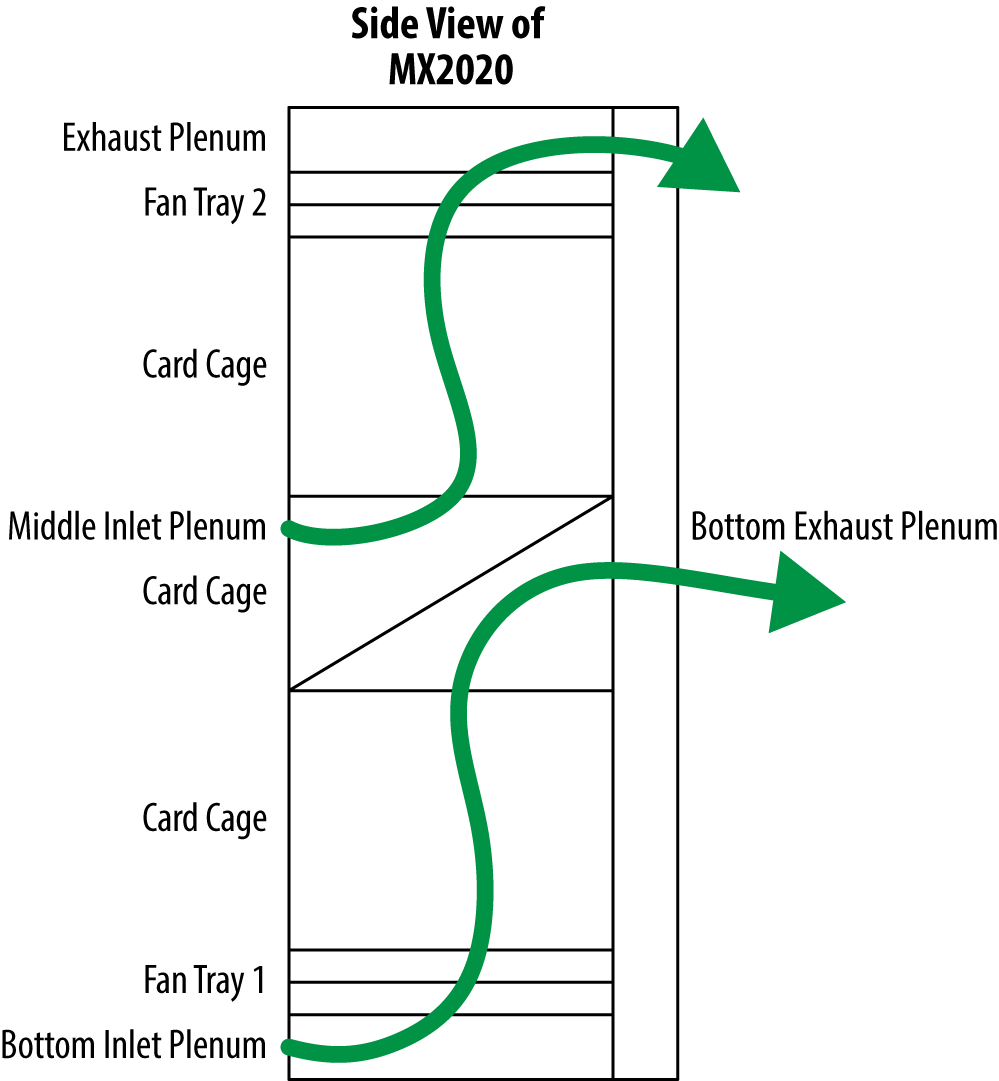

Air flow

The majority of data centers support hot and cold aisles, which require equipment with front-to-back cooling to take advantage of the airflow. The MX2020 does support front-to-back cooling and does so in two parts, as illustrated in Figure 1-26. The bottom inlet plenum supplies cool air from the front of the chassis and the bottom fan trays force the cool air through the bottom line cards; the air is then directed out of the back of the chassis by a diagonal airflow divider in the middle card cage. The same principle applies to the upper section. The middle inlet plenum supplies cool air from the front of the chassis and the upper fan trays push the cool air through the upper card cage; the air is then directed out the back of the chassis.

Figure 1-26. Illustration of MX2020 front-to-back air flow

Line card compatibility

The MX2020 is compatible with all Trio-based MPC line cards; however, there will be no backwards compatibility with the first-generation DPC line cards. The caveat is that the MPC1E, MPC2E, MPC3E, MPC4E, MPC5E, and MPC7E line cards will require a special MX2020 Line Card Adapter. The MX2020 can support up to 20 Adapter Cards (ADC) to accommodate 20 MPC1E through MPC7E line cards. Because the MX2020 uses a newer-generation SFB with faster bandwidth, line cards that were designed to work with the SCB and SCBE must use the ADC in the MX2020.

The ADC is merely a shell that accepts MPC1E through MPC7E line cards in the front and converts power and switch fabric in the rear. Future line cards built specifically for the MX2020 will not require the ADC. Let’s take a look at the ADC status with the show chassis adc command:

dhanks@MX2020> show chassis adc

Slot State Uptime

3 Online 6 hours, 2 minutes, 52 seconds

4 Online 6 hours, 2 minutes, 46 seconds

8 Online 6 hours, 2 minutes, 39 seconds

9 Online 6 hours, 2 minutes, 32 seconds

11 Online 6 hours, 2 minutes, 26 seconds

16 Online 6 hours, 2 minutes, 19 seconds

17 Online 6 hours, 2 minutes, 12 seconds

18 Online 6 hours, 2 minutes, 5 seconds

In this example, there are eight ADC cards in the MX2020. Let’s take a closer look at FPC3 and see what type of line card is installed:

dhanks@MX2020> show chassis hardware | find "FPC 3"

FPC 3 REV 22 750-028467 YE2679 MPC 3D 16x 10GE

CPU REV 09 711-029089 YE2832 AMPC PMB

PIC 0 BUILTIN BUILTIN 4x 10GE(LAN) SFP+

Xcvr 0 REV 01 740-031980 B10M00015 SFP+-10G-SR

Xcvr 1 REV 01 740-021308 19T511101037 SFP+-10G-SR

Xcvr 2 REV 01 740-031980 AHK01AS SFP+-10G-SR

PIC 1 BUILTIN BUILTIN 4x 10GE(LAN) SFP+

PIC 2 BUILTIN BUILTIN 4x 10GE(LAN) SFP+

Xcvr 0 REV 01 740-021308 19T511100867 SFP+-10G-SR

PIC 3 BUILTIN BUILTIN 4x 10GE(LAN) SFP+

The MPC-3D-16X10GE-SFPP is installed into FPC3 using the ADC for compatibility. Let’s check the environmental status of the ADC installed into FPC3:

dhanks@MX2020> show chassis environment adc | find "ADC 3"

ADC 3 status:

State Online

Intake Temperature 34 degrees C / 93 degrees F

Exhaust Temperature 46 degrees C / 114 degrees F

ADC-XF1 Temperature 51 degrees C / 123 degrees F

ADC-XF0 Temperature 61 degrees C / 141 degrees F

Each ADC has two chipsets, as shown in the example output: ADC-XF0 and ADC-XF1. These chipsets convert the switch fabric between the MX2020 SFB and the MPC1E through MPC7E line cards.

Aside from the simple ADC carrier to convert power and switch fabric, the MPC-3D-16X10GE-SFPP line card installed into FPC3 works just like a regular line card with no restrictions. Let’s just double-check the interface names to be sure:

dhanks@MX2020> show interfaces terse | match xe-3

Interface Admin Link Proto Local Remote

xe-3/0/0 up down

xe-3/0/1 up down

xe-3/0/2 up down

xe-3/0/3 up down

xe-3/1/0 up down

xe-3/1/1 up down

xe-3/1/2 up down

xe-3/1/3 up down

xe-3/2/0 up down

xe-3/2/1 up down

xe-3/2/2 up down

xe-3/2/3 up down

xe-3/3/0 up down

xe-3/3/1 up down

xe-3/3/2 up down

xe-3/3/3 up down

Just as expected: the MPC-3D-16X10GE-SFPP line card has 16 ports of 10GE interfaces grouped into four PICs with four interfaces each.
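The same grouping can be verified programmatically. The sketch below splits physical interface names of the form type-fpc/pic/port and groups the ports by PIC; it deliberately ignores logical units and channelized names, and the helper `group_by_pic` is a hypothetical name.

```python
import re
from collections import defaultdict

# Matches physical interface names such as xe-3/0/0 (no unit, no channel).
IFNAME = re.compile(r"^([a-z]+)-(\d+)/(\d+)/(\d+)$")


def group_by_pic(names):
    """Group port numbers by (FPC, PIC) for the given interface names."""
    pics = defaultdict(list)
    for name in names:
        match = IFNAME.match(name)
        if not match:
            continue  # skip anything that is not a plain physical name
        _media, fpc, pic, port = match.groups()
        pics[(int(fpc), int(pic))].append(int(port))
    return dict(pics)


# The 16 interface names listed in the output above.
names = [f"xe-3/{pic}/{port}" for pic in range(4) for port in range(4)]
for (fpc, pic), ports in sorted(group_by_pic(names).items()):
    print(f"FPC{fpc} PIC{pic}: ports {ports}")
```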

The MPC6e is the first MX2K MPC that does not require an ADC card. The MPC6e is a modular MPC that can host two high-density MICs. Each MIC slot has a 240 Gbps full-duplex bandwidth capacity.

Trio

Juniper Networks prides itself on creating custom silicon and making history with silicon firsts. Trio is the latest milestone:

1998: First separation of control and data plane

1998: First implementation of IPv4, IPv6, and MPLS in silicon

2000: First line-rate 10 Gbps forwarding engine

2004: First multi-chassis router

2005: First line-rate 40 Gbps forwarding engine

2007: First 160 Gbps firewall

2009: Next generation silicon: Trio

2010: First 130 Gbps PFE; next generation Trio

2013: New generation of the lookup ASIC; the XL chip upgrades the PFE to 260 Gbps

2015: First “all-in-one” 480Gbps PFE ASIC: EAGLE (3rd generation of Trio)

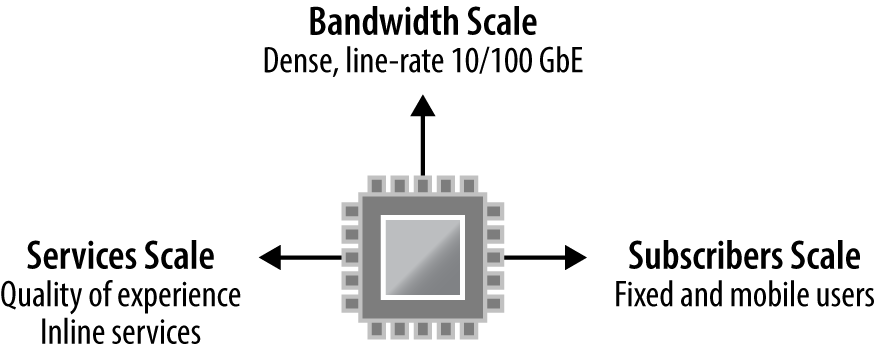

Trio is a fundamental technology asset for Juniper that combines three major components: bandwidth scale, services scale, and subscriber scale (see Figure 1-27). Trio was designed from the ground up to support high-density, line-rate 10G and 100G ports. Inline services such as IPFIX, NAT, GRE, and BFD offer a higher level of quality of experience without requiring an additional services card. Trio offers massive subscriber scale in terms of logical interfaces, IPv4 and IPv6 routes, and hierarchical queuing.

Figure 1-27. Juniper Trio scale: Services, bandwidth, and subscribers

Trio is built upon a Network Instruction Set Processor (NISP). The key differentiator is that Trio has the performance of a traditional ASIC, but the flexibility of a field-programmable gate array (FPGA) by allowing the installation of new features via software. Here is just an example of the inline services available with the Trio chipset:

Tunnel encapsulation and decapsulation

IP Flow Information Export

Network Address Translation

Bidirectional Forwarding Detection

Ethernet operations, administration, and management

Instantaneous Link Aggregation Group convergence

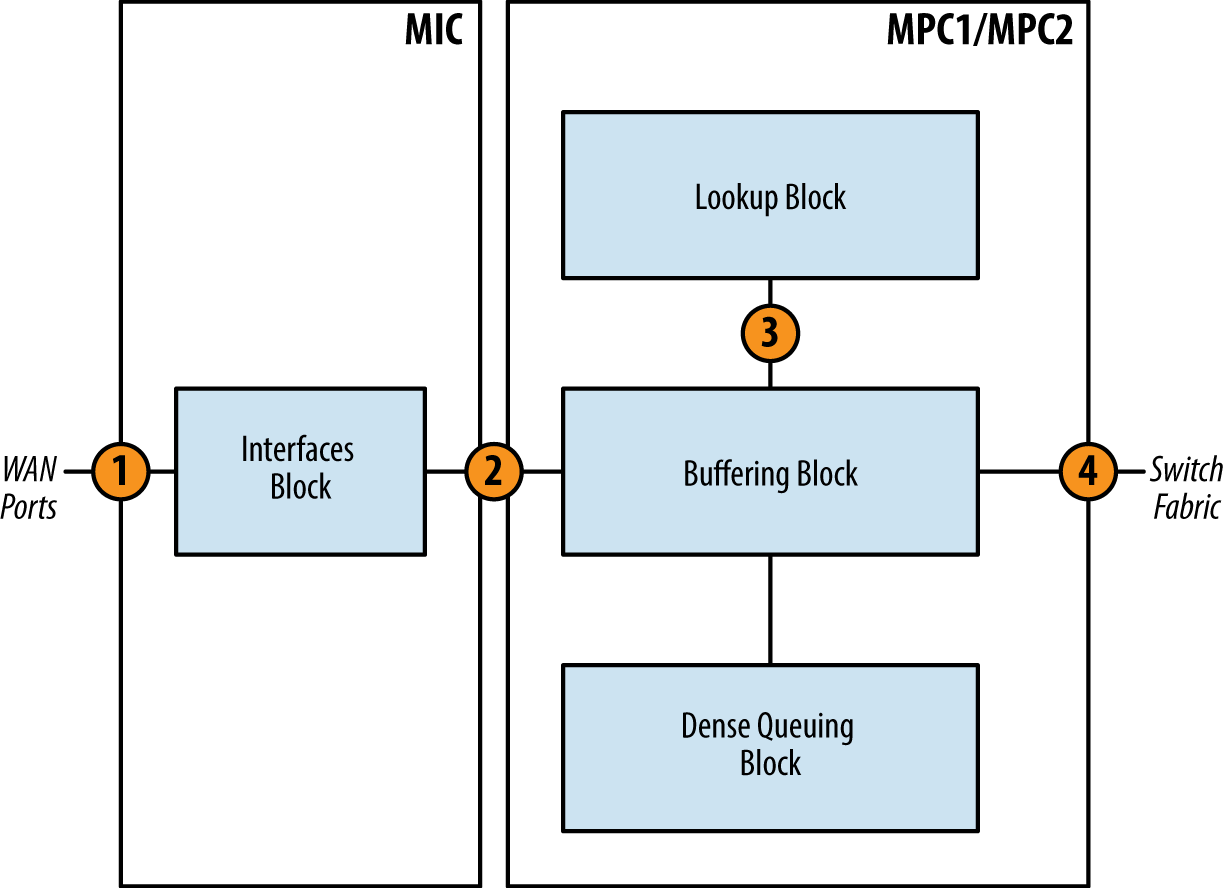

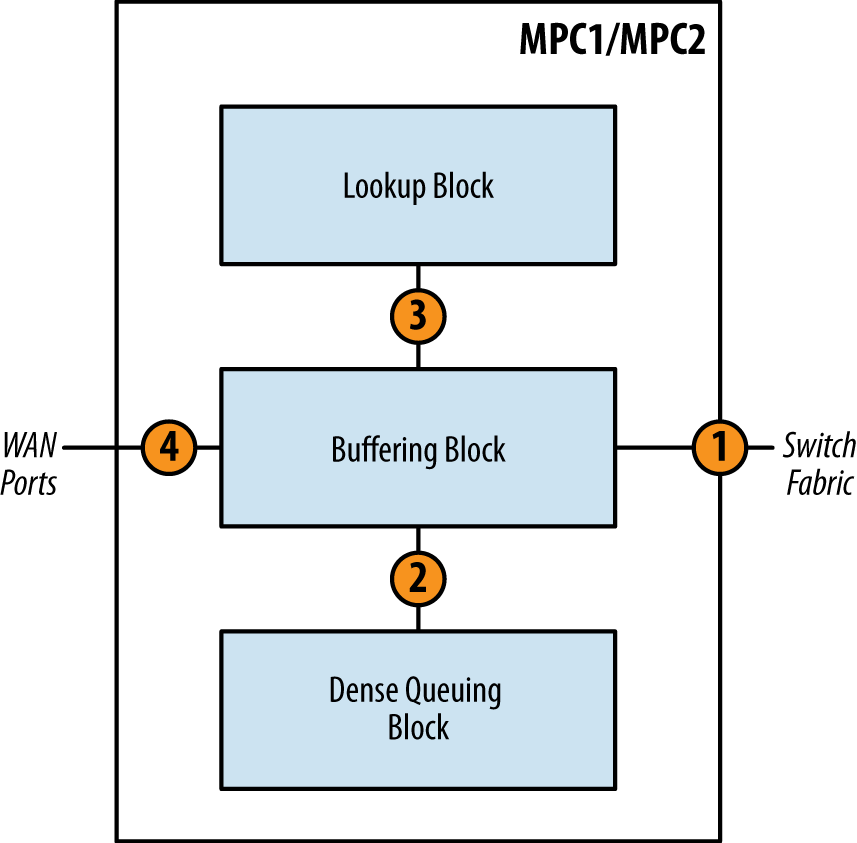

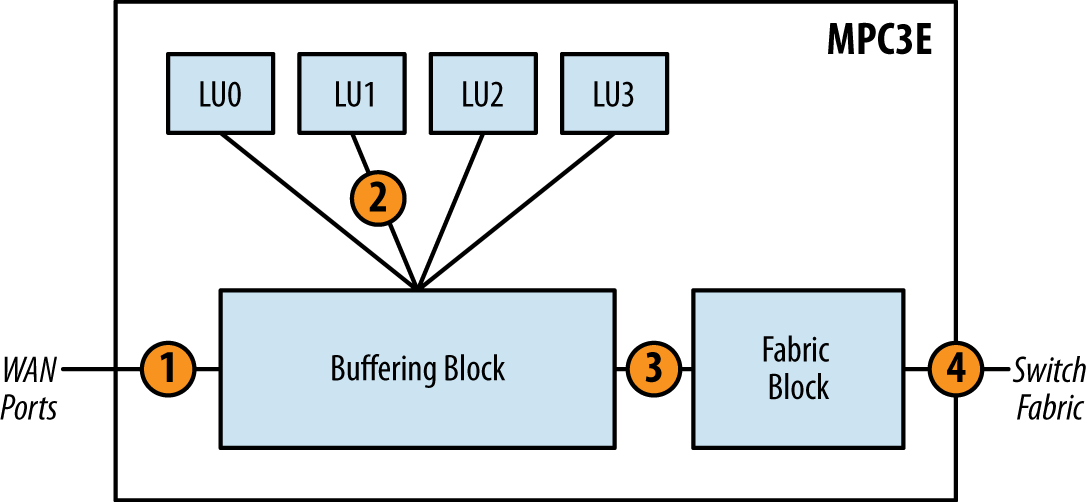

Trio Architecture

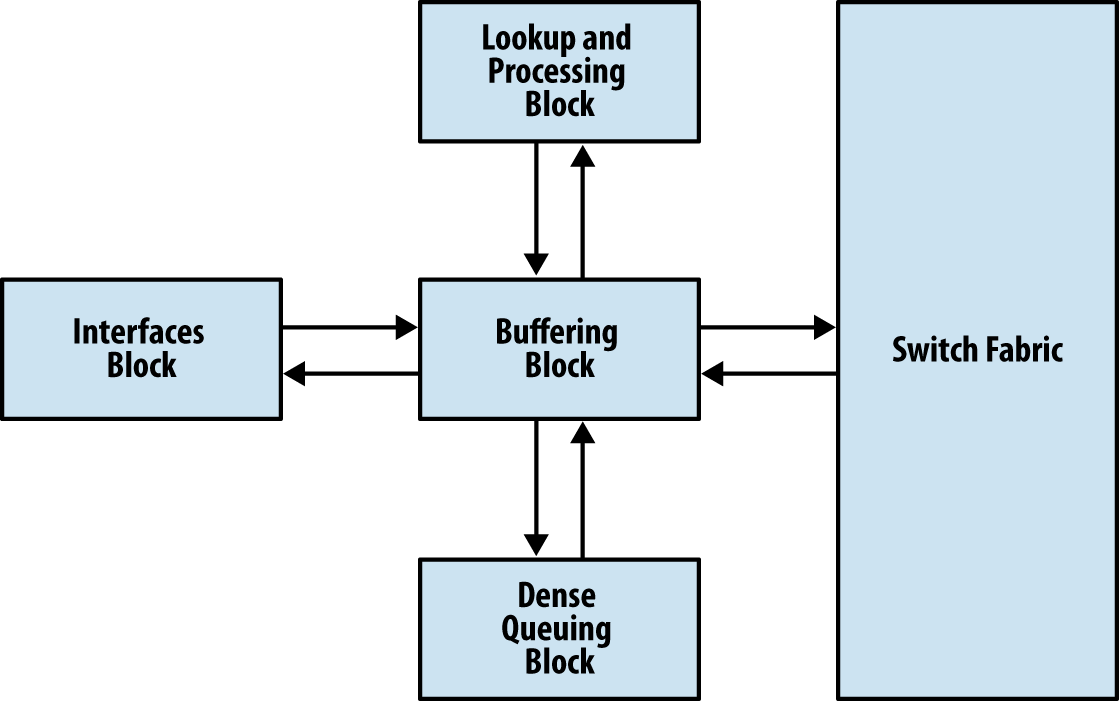

As shown in Figure 1-28, the Trio chipset is composed of four major building blocks: Buffering, Lookup, Interfaces, and Dense Queuing. Depending on the Trio generation, these blocks might be split into several hardware components or merged into the same chipset (which is the case for the latest generation of Trio chipsets as well as upcoming versions).

Figure 1-28. Trio functional blocks: Buffering, Lookup, Interfaces, and Dense Queuing

Each function is separated into its own block so that each function is highly optimized and cost efficient. Depending on the size and scale required, Trio is able to take these building blocks and create line cards that offer specialization such as hierarchical queuing or intelligent oversubscription.

Trio Generations

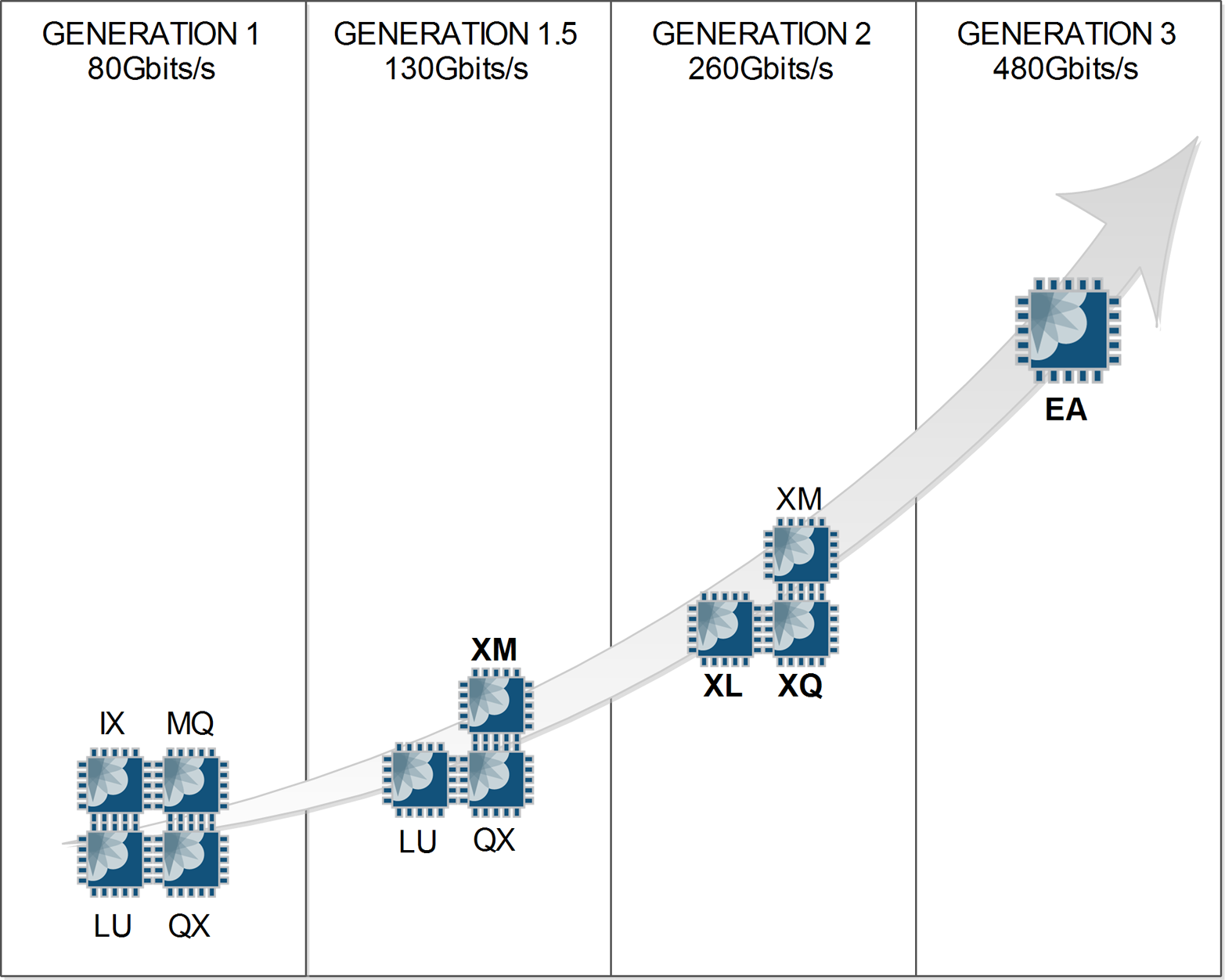

The Trio chipset has evolved in terms of scaling and features, but also in terms of the number of ASICs (see Figure 1-29):

The first generation of Trio was born with four specific ASICs: IX (interface management for oversubscribed MICs), MQ (Buffering/Queuing Block), LU (Lookup Block), and QX (Dense Queuing Block). This first generation of Trio is found on the MPC1, MPC2, and 16x10GE MPCs.

The intermediate generation, called the 1.5 Generation, updated and increased the capacity of the buffering ASIC with the new generation of XM chipsets. This also marked the appearance of multi-LU MPCs, such as MPC3e and MPC4e.

The actual second generation of Trio enhanced the Lookup and Dense Queuing Blocks: the LU chip became the XL chip and the QX chip became the XQ chip. This second generation of Trio equips the MPC5e, MPC6e, NG-MPC2e, and NG-MPC3e line cards.

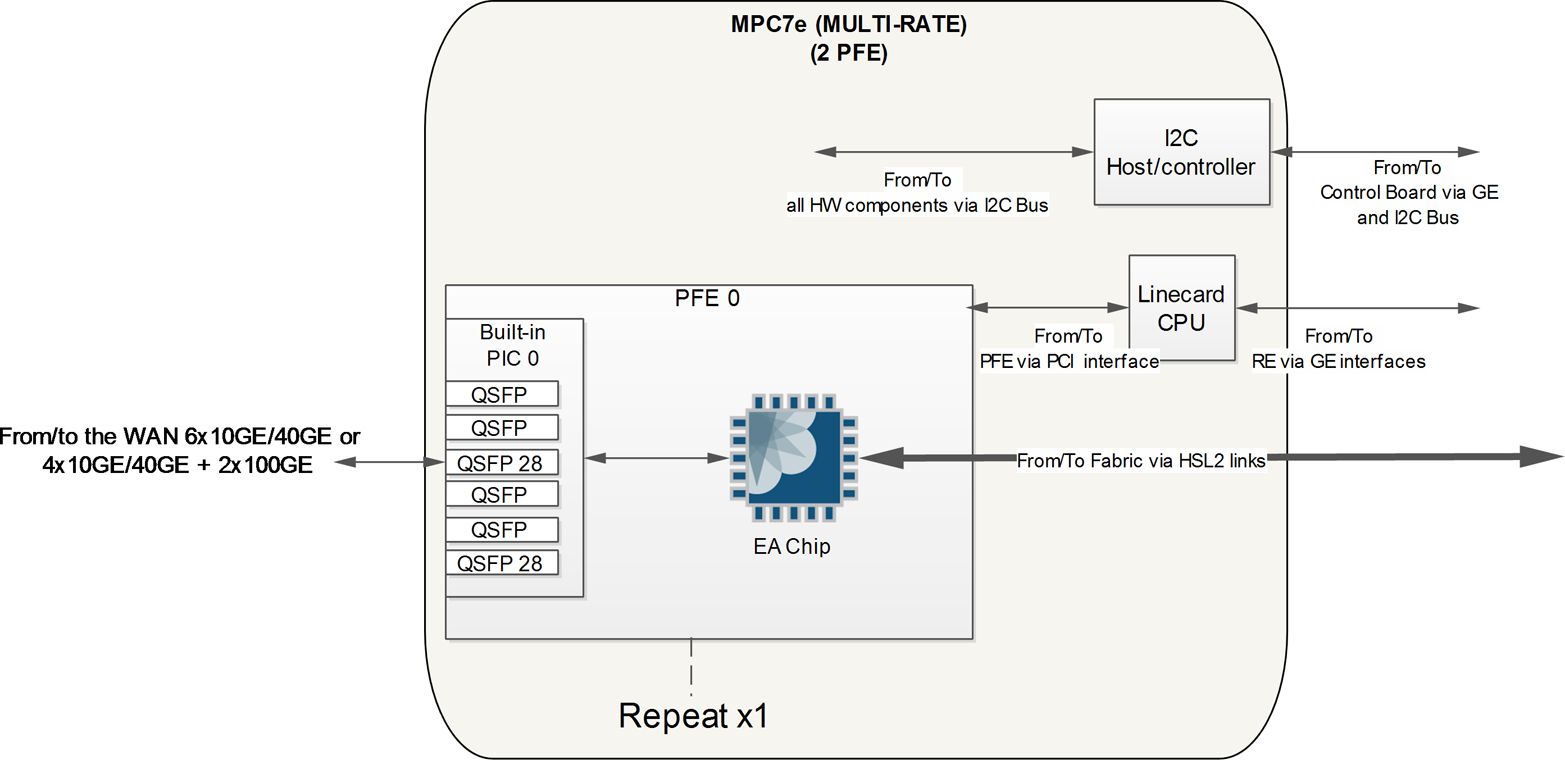

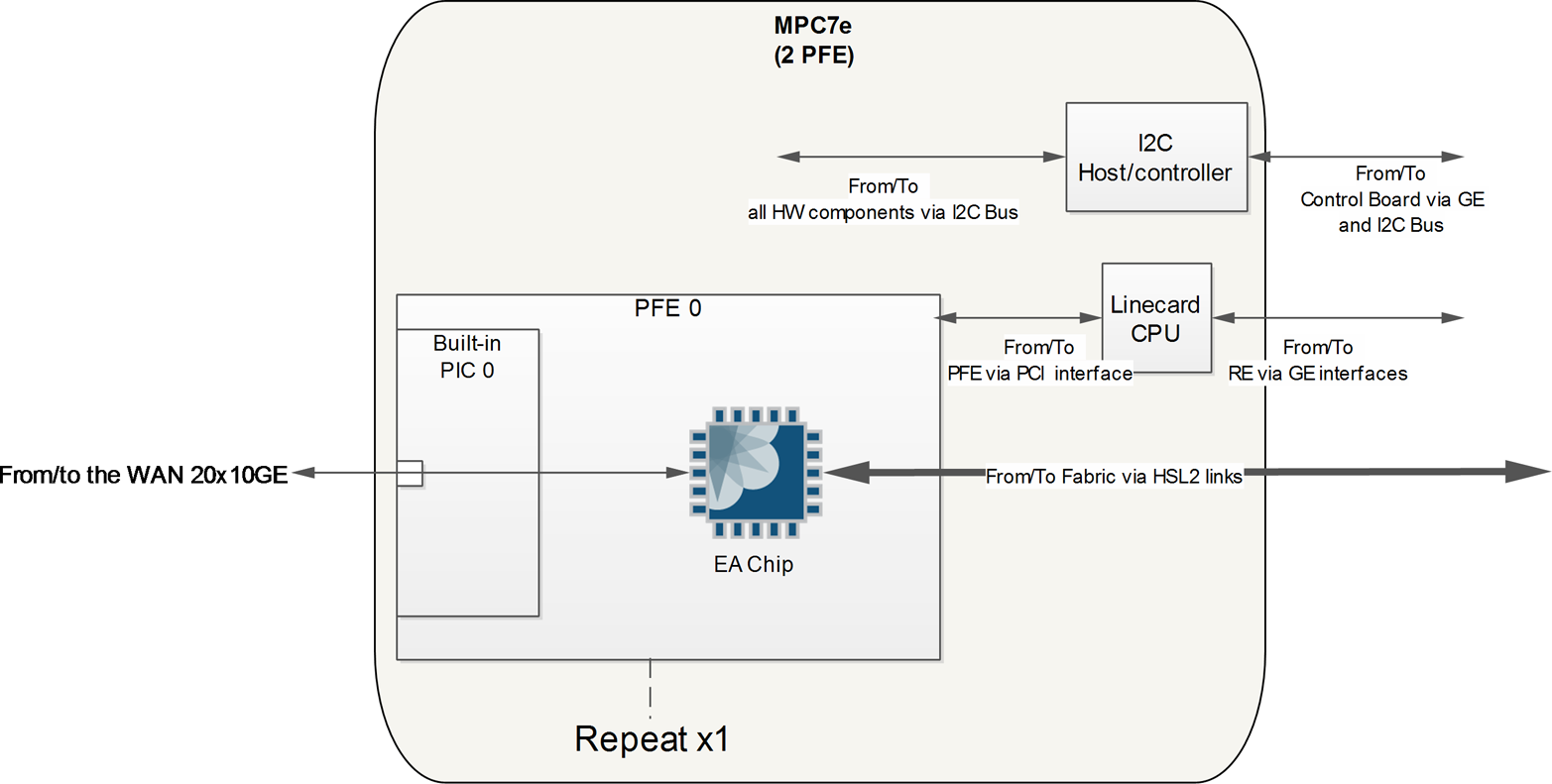

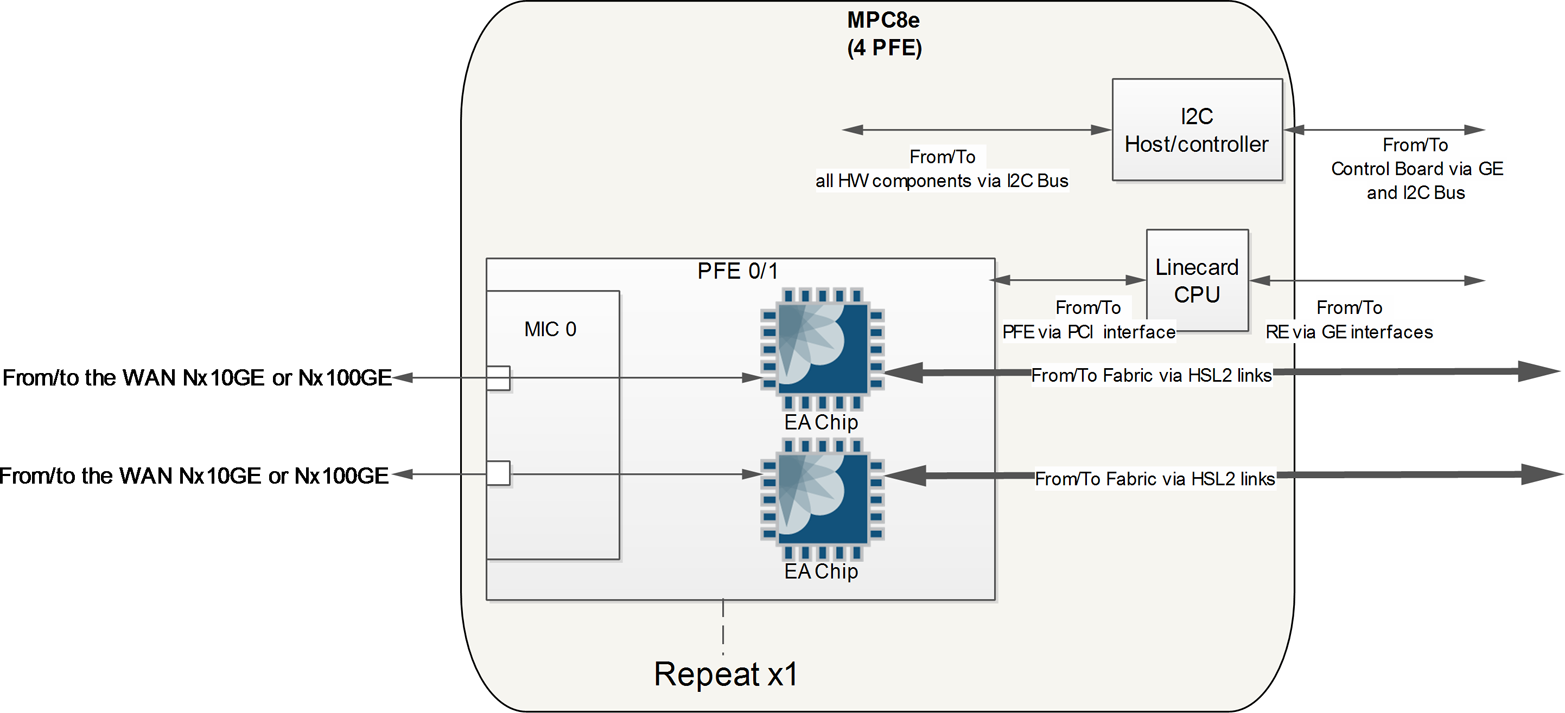

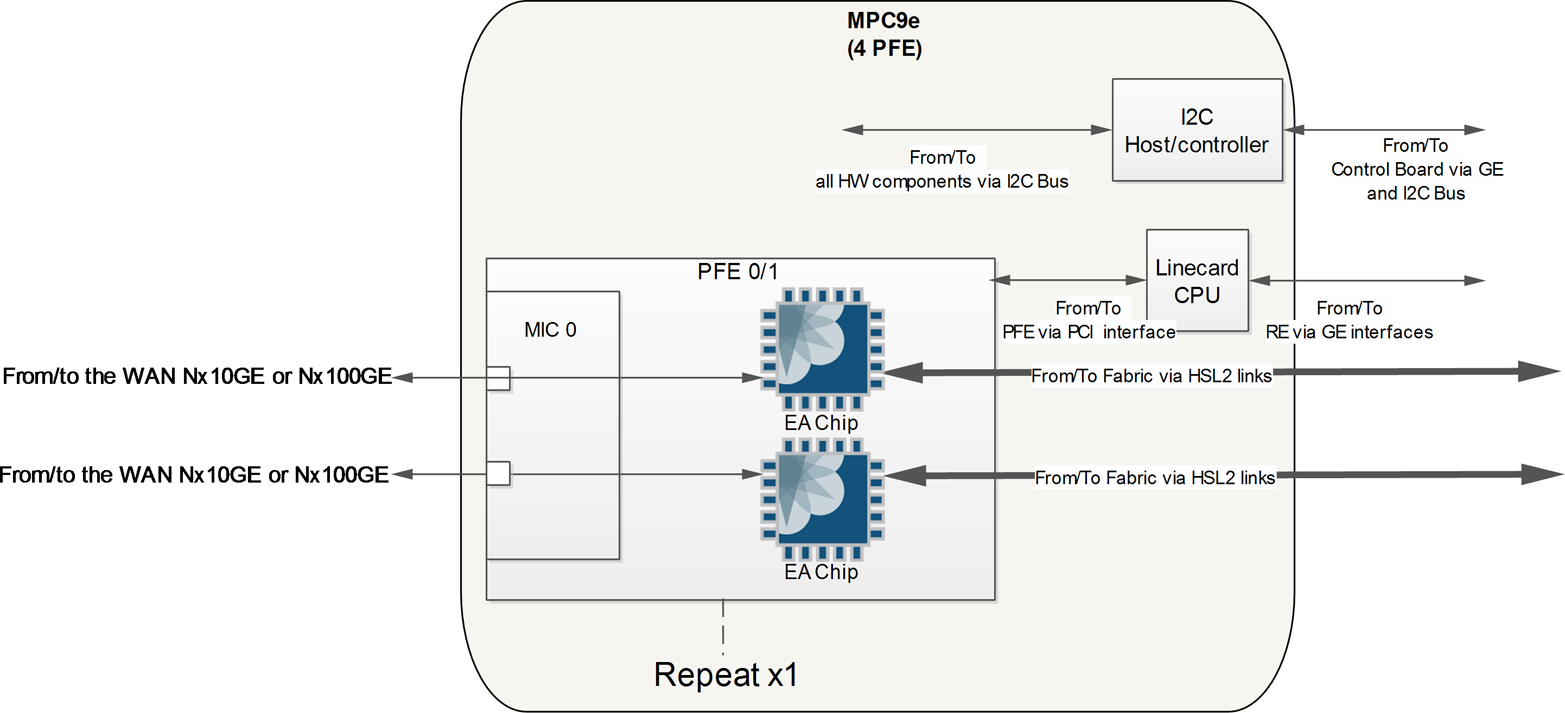

The third generation of Trio is a revolution. It embeds all the functional blocks in one ASIC. The Eagle ASIC, also known as the EA Chipset, is the first 480Gbits/s option in the marketplace and equips the new MPC7e, MPC8e, and MPC9e.

Figure 1-29. Trio chipset generations
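For quick reference, the generation list above can be captured as a small lookup table. This Python sketch records only the lookup ASIC for each generation and the line cards named in the text; the dictionary and function names are made up for the example.

```python
# Lookup ASIC used by each Trio generation, as described above.
LOOKUP_ASIC = {"1": "LU", "1.5": "LU", "2": "XL", "3": "EA"}

# Line cards named in the text, keyed to their Trio generation.
GENERATION = {
    "MPC1": "1", "MPC2": "1", "16x10GE MPC": "1",
    "MPC3e": "1.5", "MPC4e": "1.5",
    "MPC5e": "2", "MPC6e": "2", "NG-MPC2e": "2", "NG-MPC3e": "2",
    "MPC7e": "3", "MPC8e": "3", "MPC9e": "3",
}


def lookup_asic(card):
    """Return the lookup ASIC for a line card listed in GENERATION."""
    return LOOKUP_ASIC[GENERATION[card]]


for card in ("MPC2", "MPC4e", "MPC5e", "MPC7e"):
    print(card, "->", lookup_asic(card))
```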

Buffering Block

The Buffering Block is part of the MQ, XM, and EA ASICs, and ties together all of the other functional Trio blocks. It primarily manages packet data, fabric queuing, and revenue port queuing. The interesting thing to note about the Buffering Block is that it’s possible to delegate responsibilities to other functional Trio blocks. As of the writing of this book, there are two primary use cases for delegating responsibility: process oversubscription and revenue port queuing.

In the scenario where the number of revenue ports on a single MIC is less than 24x1GE or 2x10GE, it’s possible to move the handling of oversubscription to the Interfaces Block. This opens the doors to creating oversubscribed line cards at an attractive price point that are able to handle oversubscription intelligently by allowing control plane and voice data to be processed during congestion.

The Buffering Block is able to process basic per port queuing. Each port has eight hardware queues by default, large delay buffers, and low-latency queues (LLQs). If there’s a requirement to have hierarchical class of service (H-QoS) and additional scale, this functionality can be delegated to the Dense Queuing Block.

Lookup Block

The Lookup Block is part of the LU, XL, and EA ASICs. The Lookup Block has multi-core processors to support parallel tasks using multiple threads. This is the bread and butter of Trio. The Lookup Block also supports all of the packet header processing, including:

Route lookups

Load balancing

MAC lookups

Class of service (CoS) classification

Firewall filters

Policers

Accounting

Encapsulation

Statistics

Inline periodic packet management (such as inline BFD)

A key feature in the Lookup Block is that it supports Deep Packet Inspection (DPI) and is able to look over 256 bytes into the packet. This creates interesting features such as Distributed Denial-of-Service (DDoS) protection, which is covered in Chapter 4.

As packets are received by the Buffering Block, the packet headers are sent to the Lookup Block for additional processing. This chunk of packet is called the Parcel. All processing is completed in one pass through the Lookup Block regardless of the complexity of the workflow. Once the Lookup Block has finished processing, it sends the modified packet headers back to the Buffering Block to send the packet to its final destination.

In order to process data at line rate, the Lookup Block has a large bucket of reduced-latency dynamic random access memory (RLDRAM) that is essential for packet processing.

Let’s take a quick peek at the current memory utilization in the Lookup Block:

{master}

dhanks@R1-RE0> request pfe execute target fpc2 command "show jnh 0 pool usage"

SENT: Ukern command: show jnh 0 pool usage

GOT:

GOT: EDMEM overall usage:

GOT: [NH///|FW///|CNTR//////|HASH//////|ENCAPS////|--------------]

GOT: 0 2.0 4.0 9.0 16.8 20.9 32.0M

GOT:

GOT: Next Hop

GOT: [*************|-------] 2.0M (65% | 35%)

GOT:

GOT: Firewall

GOT: [|--------------------] 2.0M (1% | 99%)

GOT:

GOT: Counters

GOT: [|----------------------------------------] 5.0M (<1% | >99%)

GOT:

GOT: HASH

GOT: [*********************************************] 7.8M (100% | 0%)

GOT:

GOT: ENCAPS

GOT: [*****************************************] 4.1M (100% | 0%)

GOT:

LOCAL: End of file

The external data memory (EDMEM) is responsible for storing all of the firewall filters, counters, next-hops, encapsulations, and hash data. These values may look small, but don’t be fooled. In our lab, we have an MPLS topology with over 2,000 L3VPNs including BGP route reflection. Within each VRF, there is a firewall filter applied with two terms. As you can see, the firewall memory is barely being used. These memory allocations aren’t static and are allocated as needed. There is a large pool of memory and each EDMEM attribute can grow as needed.
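When tracking these pools over time, it can help to turn the text bars into numbers. The following Python sketch parses the per-pool lines of the output above; the sample is copied from that output, the unit is kept exactly as printed ("M"), and the function name `parse_pool_usage` is hypothetical.

```python
import re

SAMPLE = """\
GOT: Next Hop
GOT: [*************|-------] 2.0M (65% | 35%)
GOT:
GOT: Firewall
GOT: [|--------------------] 2.0M (1% | 99%)
GOT:
GOT: Counters
GOT: [|----------------------------------------] 5.0M (<1% | >99%)
GOT:
GOT: HASH
GOT: [*********************************************] 7.8M (100% | 0%)
GOT:
GOT: ENCAPS
GOT: [*****************************************] 4.1M (100% | 0%)
"""

# A usage bar, the pool size as printed, then "(used% | free%)".
BAR = re.compile(
    r"\[[*|-]*\]\s+([\d.]+)M\s+\(([<>]?\d+)%\s*\|\s*([<>]?\d+)%\)"
)


def parse_pool_usage(text):
    """Map each pool name to its size and used/free percentages."""
    pools = {}
    previous = None  # the last non-empty line that was not a usage bar
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("GOT:"):
            line = line[4:].strip()
        match = BAR.match(line)
        if match and previous:
            pools[previous] = {
                "size_m": float(match.group(1)),
                "used": match.group(2) + "%",
                "free": match.group(3) + "%",
            }
        elif line:
            previous = line
    return pools


for name, info in parse_pool_usage(SAMPLE).items():
    print(name, info)
```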

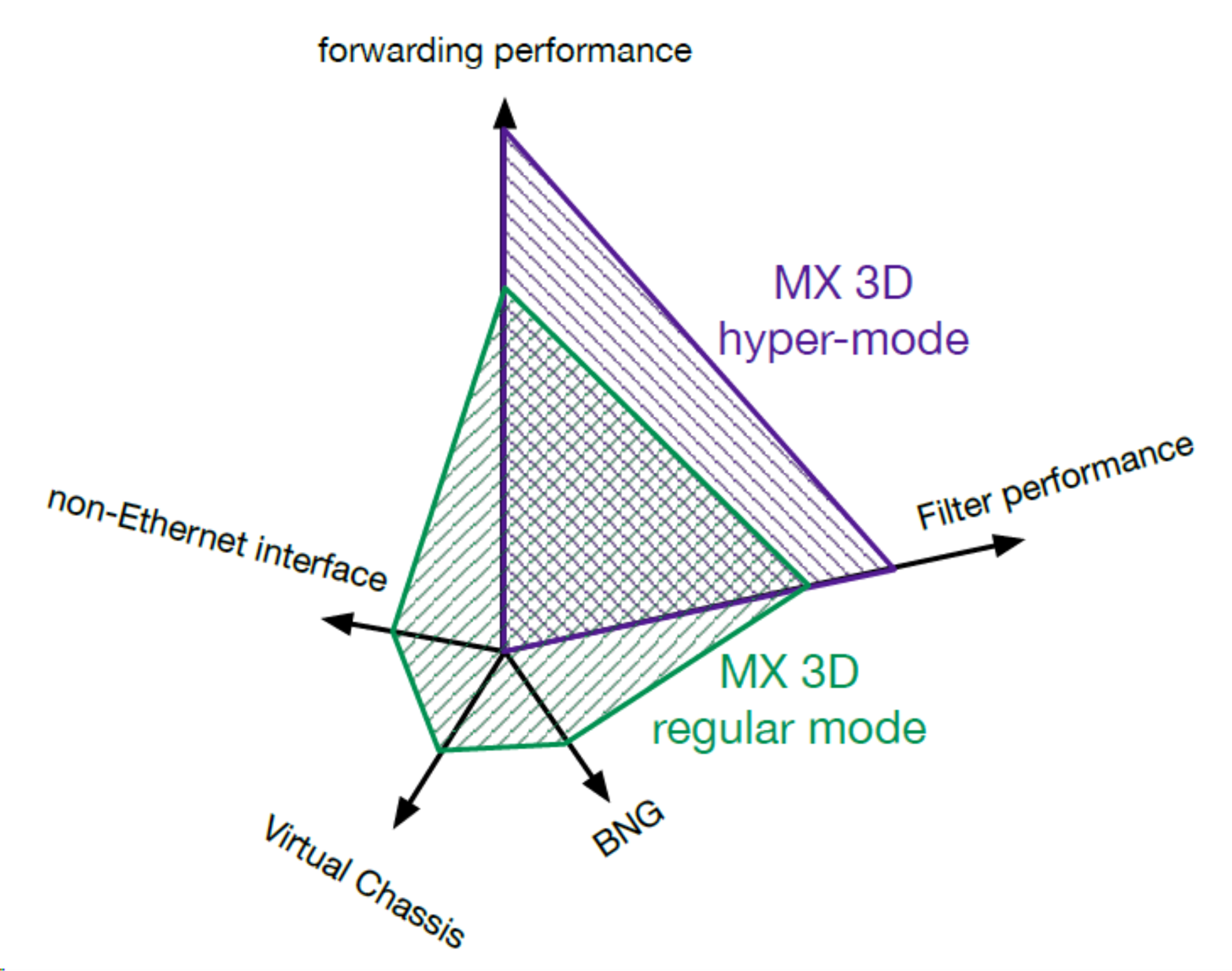

Hypermode feature

Starting with Junos 13.x, Juniper introduced a concept called hypermode. MX line cards embed a lot of rich features, from basic routing and queuing to advanced BNG inline features. The lookup chipset (LU/XL block) is loaded with the full micro-code, which supports all of these functions from the most basic to the most complex. Each function is viewed as a functional block and, depending on the configured features, some of these blocks are used during packet processing. Even if not all blocks are used at a given time, there are dependencies between them. These dependencies require more CPU instructions and thus more time, even if the packet simply needs to be forwarded.

Warning

In practice, the performance impact is visible only when the ASIC reaches its line-rate limit with very small packets (around 64 bytes).

To overcome this kind of bottleneck and improve line-rate performance for small packet sizes, the concept of hypermode was developed. It leaves some of the functional blocks out of the micro-code loaded into the lookup engine, reducing the number of micro-code instructions that need to be executed per packet. This mode is particularly interesting for core routers or a basic PE with classical features configured, such as routing, filtering, and simple queuing models. When hypermode is configured, the following features are no longer supported:

Creation of virtual chassis

Interoperability with legacy DPCs, including MS-DPCs (the MPC in hypermode accepts and transmits data packets only from other existing MPCs)

Interoperability with non-Ethernet MICs and non-Ethernet interfaces such as channelized interfaces, multilink interfaces, and SONET interfaces

Padding of Ethernet frames with VLAN

Sending Internet Control Message Protocol (ICMP) redirect messages

Termination or tunneling of all subscriber-based services

Hypermode dramatically increases the line-rate performance for forwarding and filtering purposes, as illustrated in Figure 1-30.

Figure 1-30. Comparison of regular mode and hypermode performance

To configure hypermode, the following statement must be added under the forwarding-options hierarchy:

{master}[edit]

jnpr@R1# set forwarding-options hyper-mode

Committing this new config requires a reboot of the node:

jnpr@R1# commit

re0:

warning: forwarding-options hyper-mode configuration changed. A system reboot is mandatory. Please reboot the system NOW. Continuing without a reboot might result in unexpected system behavior.

configuration check succeeds

Once the router has been rebooted, you can check if hypermode is enabled with this show command:

{master}

jnpr@R1> show forwarding-options hyper-mode

Current mode: hyper mode

Configured mode: hyper mode
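A script can compare the two lines of this output to confirm that the running mode matches the configuration. The Python sketch below assumes, without confirmation from the text, that a mismatch between "Current mode" and "Configured mode" means the reboot is still pending; the function names are hypothetical, and only the "hyper mode" value shown above is taken from real output.

```python
SAMPLE = """\
Current mode: hyper mode
Configured mode: hyper mode
"""


def parse_hyper_mode(text):
    """Return the 'key: value' lines of the output as a dictionary."""
    modes = {}
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            modes[key.strip().lower()] = value.strip()
    return modes


def reboot_pending(text):
    """Assumption: differing current and configured modes mean a reboot is due."""
    modes = parse_hyper_mode(text)
    return modes.get("current mode") != modes.get("configured mode")


print(parse_hyper_mode(SAMPLE))
print(reboot_pending(SAMPLE))
```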

Is hypermode supported on all line cards? The answer is “no,” but “not supported” doesn’t mean “not compatible.” Line cards prior to the MPC4e are said to be compatible, meaning that the MPC1, MPC2, MPC3e, and 16x10GE MPCs accept the presence of the knob in the configuration but do not take it into account. In other words, the lookup chipset of these cards is always fully loaded with all functions. Starting with the MPC4e, hypermode is supported and can be turned on to load a smaller micro-code onto the LU/XL/EA ASICs, with better performance for forwarding and filtering. Table 1-4 summarizes which MPCs are compatible and which ones support the hypermode feature.

| Line card model | Hypermode compatible? | Hypermode support? |

|---|---|---|

| DPC / MS-DPC | No | No |

| MPC1 / MPC1e | Yes | No |

| MPC2 / MPC2e | Yes | No |

| MPC3e | Yes | No |

| MPC4e | Yes | Yes |

| MPC5e | Yes | Yes |

| MPC6e | Yes | Yes |

| NG-MPC2e / NG-MPC3e | Yes | Yes |
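The distinction between “compatible” and “supported” can be captured in a small lookup. The sketch below is a Python illustration that transcribes the table above. The dictionary, the function names, and the three result labels ("active", "ignored", "incompatible") are assumptions made for this example only.

```python
# (compatible, supported) per line card, transcribed from the table above.
HYPERMODE_TABLE = {
    "DPC": (False, False),
    "MS-DPC": (False, False),
    "MPC1": (True, False),
    "MPC1e": (True, False),
    "MPC2": (True, False),
    "MPC2e": (True, False),
    "MPC3e": (True, False),
    "MPC4e": (True, True),
    "MPC5e": (True, True),
    "MPC6e": (True, True),
    "NG-MPC2e": (True, True),
    "NG-MPC3e": (True, True),
}


def hypermode_effect(card: str) -> str:
    """Describe what the hyper-mode knob does on a given line card."""
    compatible, supported = HYPERMODE_TABLE[card]
    if supported:
        return "active"        # smaller microcode is loaded
    if compatible:
        return "ignored"       # knob is accepted but has no effect
    return "incompatible"      # card cannot coexist with hypermode


def cards_blocking_hypermode(cards):
    """Return the cards in a chassis inventory that are not hypermode compatible."""
    return [c for c in cards if hypermode_effect(c) == "incompatible"]


print(cards_blocking_hypermode(["MPC2e", "MPC4e", "MS-DPC"]))
```

Running a check like this against a chassis inventory before enabling the knob would flag any legacy DPC that has to be removed first.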

Interfaces Block

One of the optional components is the Interfaces Block (the Interfaces Block is part of the IX-specific ASIC). Its primary responsibility is to intelligently handle oversubscription. When using a MIC that supports fewer than 24x1GE or 2x10GE MACs, the Interfaces Block is used to manage the oversubscription.

Note

As new MICs are released, they may or may not have an Interfaces Block depending on power requirements and other factors. Remember that the Trio function blocks are like building blocks and some blocks aren’t required to operate.

Each packet is inspected at line rate, and attributes such as Ethernet Type Codes, Protocol, and other Layer 4 information are used to decide which buffer the packet is enqueued into on its way to the Buffering Block. Preclassification makes it possible to drop excess packets as close to the source as possible, while allowing critical control plane packets through to the Buffering Block.

There are four queues between the Interfaces and Buffering Block: real-time, control traffic, best effort, and packet drop. Currently, these queues and preclassifications are not user configurable; however, it’s possible to take a peek at them.

Let’s take a look at a router with a 20x1GE MIC that has an Interfaces Block:

dhanks@MX960> show chassis hardware

Hardware inventory:

Item Version Part number Serial number Description

Chassis JN10852F2AFA MX960

Midplane REV 02 710-013698 TR0019 MX960 Backplane

FPM Board REV 02 710-014974 JY4626 Front Panel Display

Routing Engine 0 REV 05 740-031116 9009066101 RE-S-1800x4

Routing Engine 1 REV 05 740-031116 9009066210 RE-S-1800x4

CB 0 REV 10 750-031391 ZB9999 Enhanced MX SCB

CB 1 REV 10 750-031391 ZC0007 Enhanced MX SCB

CB 2 REV 10 750-031391 ZC0001 Enhanced MX SCB

FPC 1 REV 28 750-031090 YL1836 MPC Type 2 3D EQ

CPU REV 06 711-030884 YL1418 MPC PMB 2G

MIC 0 REV 05 750-028392 JG8529 3D 20x 1GE(LAN) SFP

MIC 1 REV 05 750-028392 JG8524 3D 20x 1GE(LAN) SFP

We can see that FPC1 supports two 20x1GE MICs. Let’s take a peek at the preclassification on FPC1:

dhanks@MX960> request pfe execute target fpc1 command "show precl-eng summary"

SENT: Ukern command: show precl-eng summary

GOT:

GOT: ID precl_eng name FPC PIC (ptr)

GOT: --- -------------------- ---- --- --------

GOT: 1 IX_engine.1.0.20 1 0 442484d8

GOT: 2 IX_engine.1.1.22 1 1 44248378

LOCAL: End of file

It’s interesting to note that there are two preclassification engines. This makes sense as there is an Interfaces Block per MIC. Now let’s take a closer look at the preclassification engine and statistics on the first MIC:

usr@MX960> request pfe execute target fpc1 command "show precl-eng 1 statistics"

SENT: Ukern command: show precl-eng 1 statistics

GOT:

GOT: stream Traffic

GOT: port ID Class TX pkts RX pkts Dropped pkts

GOT: ------ ------- ---------- --------- ------------ ------------

GOT: 00 1025 RT 000000000000 000000000000 000000000000

GOT: 00 1026 CTRL 000000000000 000000000000 000000000000

GOT: 00 1027 BE 000000000000 000000000000 000000000000

Each physical port is broken out and grouped by traffic class. The number of packets dropped is maintained in a counter in the last column. This is always a good place to look if the router is oversubscribed and dropping packets.
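Checking the drop column by eye gets tedious on a card with many ports. The following Python sketch shows one way to pull the per-class counters out of captured text. It assumes one `GOT:` record per line with six whitespace-separated fields (port, stream ID, class, TX, RX, dropped), as in the statistics output above. The function names are hypothetical, and because the command is hidden and unsupported, the format may differ between releases.

```python
def parse_precl_stats(output: str) -> list:
    """Turn 'show precl-eng <id> statistics' text into a list of row dicts."""
    rows = []
    for line in output.splitlines():
        if not line.startswith("GOT:"):
            continue
        fields = line[len("GOT:"):].split()
        # Data rows have exactly six fields and start with a numeric port.
        if len(fields) != 6 or not fields[0].isdigit():
            continue
        port, stream_id, tclass, tx, rx, dropped = fields
        rows.append({
            "port": port,
            "stream_id": int(stream_id),
            "class": tclass,
            "tx": int(tx),
            "rx": int(rx),
            "dropped": int(dropped),
        })
    return rows


def classes_with_drops(output: str) -> list:
    """Return (port, class, dropped) for every row with a nonzero drop counter."""
    return [(r["port"], r["class"], r["dropped"])
            for r in parse_precl_stats(output) if r["dropped"] > 0]


# Sample text copied from the statistics output above.
SAMPLE = """GOT: stream Traffic
GOT: port ID Class TX pkts RX pkts Dropped pkts
GOT: ------ ------- ---------- --------- ------------ ------------
GOT: 00 1025 RT 000000000000 000000000000 000000000000
GOT: 00 1026 CTRL 000000000000 000000000000 000000000000
GOT: 00 1027 BE 000000000000 000000000000 000000000000"""

print(classes_with_drops(SAMPLE))
```

The header and separator lines are skipped by the field-count and numeric-port checks rather than by position, so the parser does not depend on how many banner lines precede the data.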

Let’s take a peek at a router with a 4x10GE MIC that doesn’t have an Interfaces Block:

{master}

dhanks@R1-RE0> show chassis hardware

Hardware inventory:

Item Version Part number Serial number Description

Chassis JN111992BAFC MX240

Midplane REV 07 760-021404 TR5026 MX240 Backplane

FPM Board REV 03 760-021392 KE2411 Front Panel Display

Routing Engine 0 REV 07 740-013063 1000745244 RE-S-2000

Routing Engine 1 REV 06 740-013063 1000687971 RE-S-2000

CB 0 REV 03 710-021523 KH6172 MX SCB

CB 1 REV 10 710-021523 ABBM2781 MX SCB

FPC 2 REV 25 750-031090 YC5524 MPC Type 2 3D EQ

CPU REV 06 711-030884 YC5325 MPC PMB 2G

MIC 0 REV 24 750-028387 YH1230 3D 4x 10GE XFP

MIC 1 REV 24 750-028387 YG3527 3D 4x 10GE XFP

Here we can see that FPC2 has two 4x10GE MICs. Let’s take a closer look at the preclassification engines:

{master}

dhanks@R1-RE0> request pfe execute target fpc2 command "show precl-eng summary"

SENT: Ukern command: show precl-eng summary

GOT:

GOT: ID precl_eng name FPC PIC (ptr)

GOT: --- -------------------- ---- --- --------

GOT: 1 MQ_engine.2.0.16 2 0 435e2318

GOT: 2 MQ_engine.2.1.17 2 1 435e21b8

LOCAL: End of file

The big difference here is the preclassification engine name. Previously, it was listed as “IX_engine” with MICs that support an Interfaces Block. MICs such as the 4x10GE do not have an Interfaces Block, so the preclassification is performed on the Buffering Block, or, as listed here, the “MQ_engine.”
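The engine name is therefore enough to tell where preclassification runs. As a rough Python sketch, assuming the `<ASIC>_engine.<fpc>.<pic>.<n>` naming seen in the two summaries above holds in general (the function name and the "unknown" fallback are illustrative assumptions):

```python
def preclassification_location(engine_name: str) -> str:
    """Map a precl-eng name such as 'IX_engine.1.0.20' to the Trio block doing the work."""
    prefix = engine_name.split("_", 1)[0]
    locations = {
        "IX": "Interfaces Block",  # MIC carries its own Interfaces Block
        "MQ": "Buffering Block",   # no Interfaces Block on the MIC
    }
    return locations.get(prefix, "unknown")


print(preclassification_location("IX_engine.1.0.20"))
print(preclassification_location("MQ_engine.2.0.16"))
```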

Note

Hidden commands are used here to illustrate the roles and responsibilities of the Interfaces Block. Caution should be used when using these commands, as they aren’t supported by Juniper.

The Buffering Block’s WAN interface can operate either in MAC mode or in the Universal Packet over HSL2 (UPOH) mode. This creates a difference in operation between the MPC1 and MPC2 line cards. The MPC1 only has a single Trio chipset, and thus only MICs that can operate in MAC mode are compatible with this line card. On the other hand, the MPC2 has two Trio chipsets. Each MIC on the MPC2 is able to operate in either mode, so the MPC2 is compatible with more MICs. This will be explained in more detail later in the chapter.

Dense Queuing Block

The Dense Queuing Block is part of the QX, XQ, and EA ASICs. Depending on the line card, Trio offers an optional Dense Queuing Block that provides rich Hierarchical QoS and supports up to 512,000 queues with the current generation of hardware. This allows for the creation of schedulers that define drop characteristics, transmission rate, and buffering that can be controlled separately and applied at multiple levels of hierarchy.

The Dense Queuing Block is an optional functional Trio block. The Buffering Block already supports basic per port queuing. The Dense Queuing Block is only used in line cards that require H-QoS or additional scale beyond the Buffering Block.

Line Cards and Modules

To provide high-density and high-speed Ethernet services, a new type of Flexible Port Concentrator (FPC) had to be created called the Dense Port Concentrator (DPC). This first-generation line card allowed up to 80 Gbps of port capacity per slot.

The DPC line cards utilize a previous ASIC from the M series called the I-Chip. This allowed Juniper to rapidly build the first MX line cards and software.

The Modular Port Concentrator (MPC) is the second-generation line card created to further increase the density to 160 Gbps of port capacity per slot. This generation of hardware is created using the Trio chipset. The MPC supports MICs that allow you to mix and match different modules on the same MPC.

| FPC type/Module type | Description |

|---|---|

| Dense Port Concentrator (DPC) | First-generation high-density and high-speed Ethernet line cards |

| Modular Port Concentrator (MPC) | Second-generation high-density and high-speed Ethernet line cards supporting modules |

| Modular Interface Card (MIC) | Second-generation Ethernet and optical modules that are inserted into MPCs |

It’s a common misconception that the “modular” part of MPC derives its name only from its ability to accept different kinds of MICs. This is only half of the story. The MPC also derives its name from being able to be flexible when it comes to the Trio chipset. For example, the MPC-3D-16x10GE-SFPP line card is a fixed port configuration, but only uses the Buffering Block and Lookup Block in the PFE complex. As new line cards are introduced in the future, the number of fundamental Trio building blocks will vary per card as well, thus living up to the “modular” name.

Dense Port Concentrator

The DPC line cards come in six different models to support different port configurations. There’s a mixture of 1G, 10G, copper, and optical. There are three DPC types: routing and switching (DPCE-R), switching (DPCE-X), and enhanced queuing (DPCE-Q).

The DPCE-R can operate at either Layer 3 or as a pure Layer 2 switch. It’s generally the most cost-effective when using a sparing strategy for support. The DPCE-R is the most popular choice, as it supports very large route tables and can be used in a pure switching configuration as well.

The DPCE-X has the same features and services as the DPCE-R; the main differences are that the route table is limited to 32,000 prefixes and that L3VPNs cannot be used on this DPC. These line cards make sense when being used in a very small environment or in a pure Layer 2 switching scenario.

The DPCE-Q supports all of the same features and services as the DPCE-R and adds additional scaling around H-QoS and number of queues.

| Model | DPCE-R | DPCE-X | DPCE-Q |

|---|---|---|---|

| 40x1GE SFP | Yes | Yes | Yes |

| 40x1GE TX | Yes | Yes | No |

| 20x1GE SFP | No | No | Yes |

| 4x10GE XFP | Yes | Yes | Yes |

| 2x10GE XFP | Yes | No | No |

| 20x1GE and 2x10GE | Yes | Yes | Yes |

Note

The DPC line cards are still supported, but there is no active development of new features being brought to these line cards. For new deployments, it’s recommended to use the newer, second-generation MPC line cards. The MPC line cards use the Trio chipset and are where Juniper is focusing all new features and services.

Modular Port Concentrator

The MPC line cards are the second generation of line cards for the MX. There are two significant changes when moving from the DPC to MPC: chipset and modularity. All MPCs are now using the Trio chipset to support more scale, bandwidth, and services. The other big change is that now the line cards are modular using MICs.

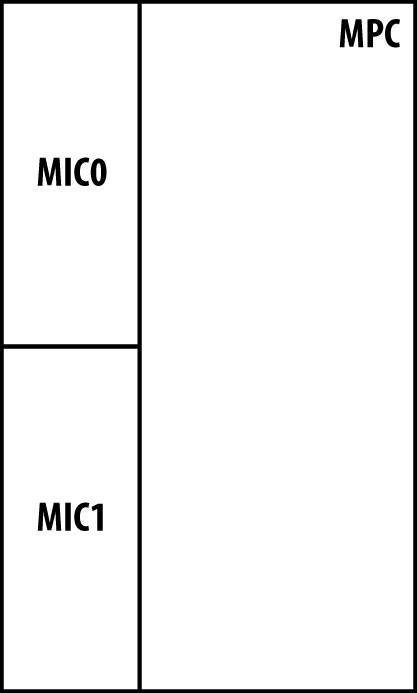

The MPC can be thought of as a type of intelligent shell or carrier for MICs. This change in architecture allows the separation of physical ports, oversubscription, features, and services, as shown in Figure 1-31. All of the oversubscription, features, and services are managed within the MPC. Physical port configurations are isolated to the MIC. This allows the same MIC to be used in many different types of MPCs depending on the number of features and scale required.

Figure 1-31. High-level architecture of MPCs and MICs

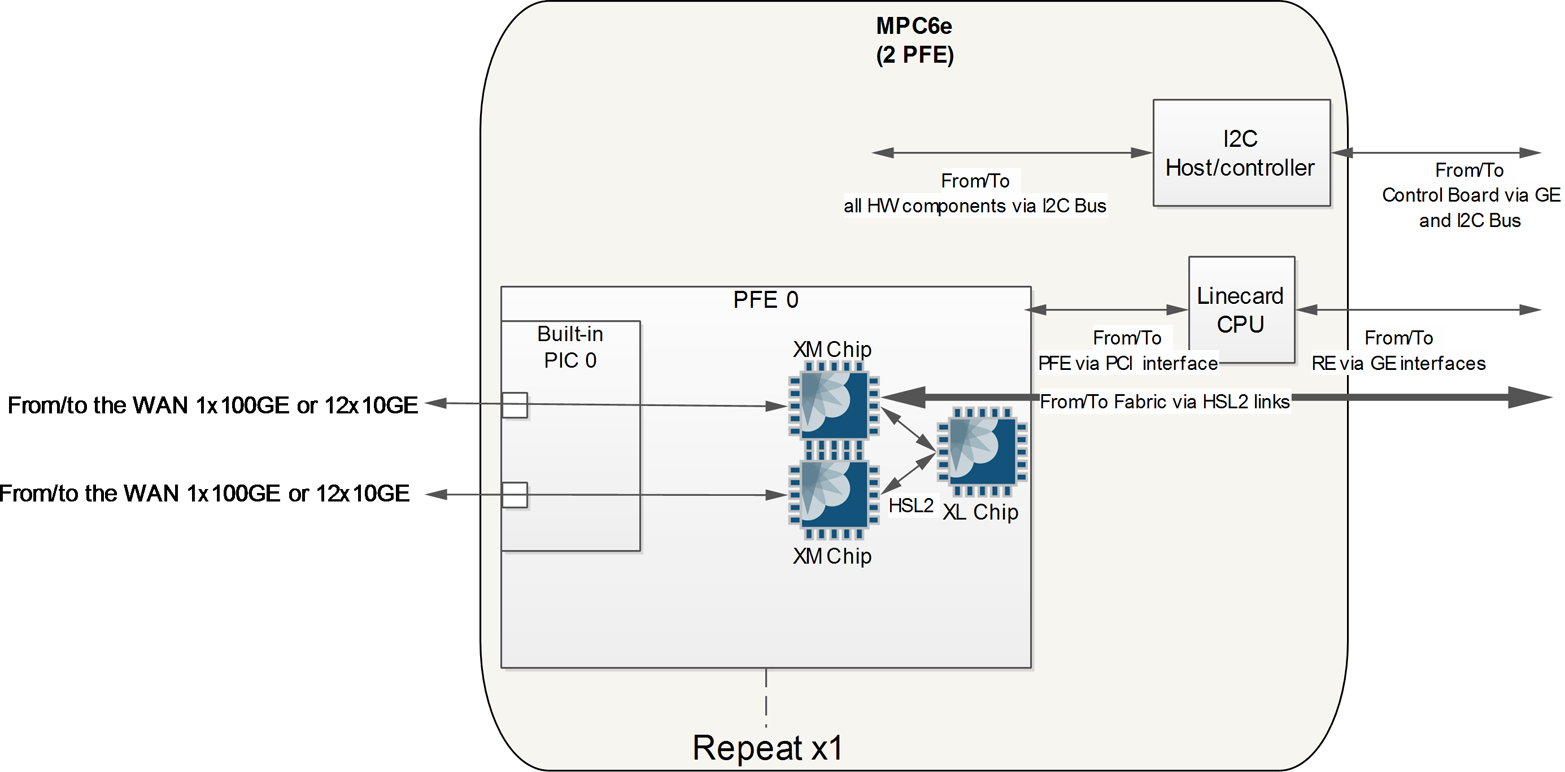

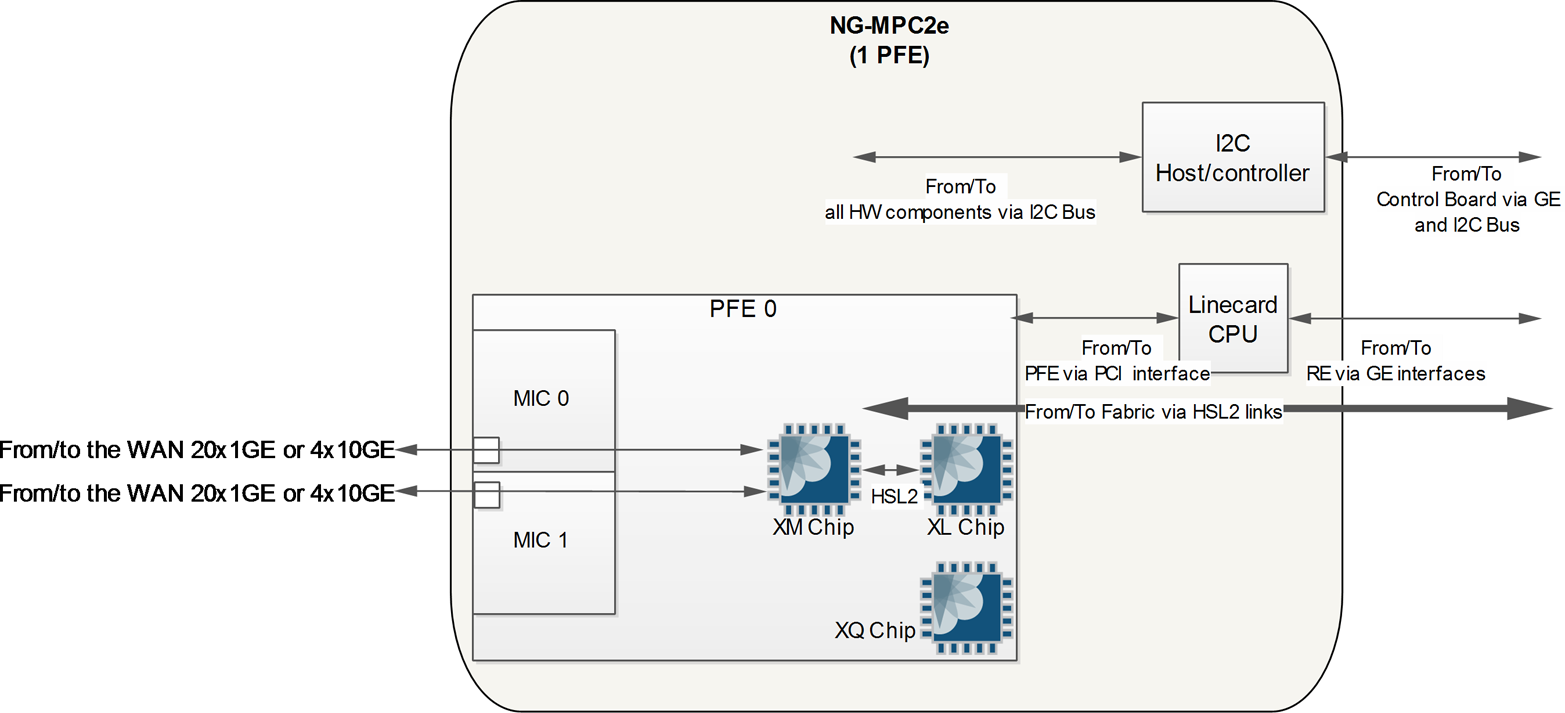

As of Junos 14.2, there are twelve different categories of MPCs. Each model has a different number of Trio chipsets providing different options of scaling and bandwidth, as listed in Table 1-7.

| Model | # of PFE complex | Per MPC bandwidth | Interface support |

|---|---|---|---|

| MPC1 | 1 | 40 Gbps | 1GE and 10GE |

| MPC2 | 2 | 80 Gbps | 1GE and 10GE |

| MPC 16x10GE | 4 | 140 Gbps | 10GE |

| MPC3e | 1 | 130 Gbps | 1GE, 10GE, 40GE, and 100GE |

| MPC4e | 2 | 260 Gbps | 10GE and 100GE |

| MPC5e | 1 | 240 Gbps | 10GE, 40GE, 100GE |

| MPC6e | 2 | 520 Gbps | 10GE and 100GE |

| NG-MPC2e | 1 | 80 Gbps | 1GE, 10GE |

| NG-MPC3e | 1 | 130 Gbps | 1GE, 10GE |

| MPC7e | 2 | 480 Gbps | 10GE, 40GE, and 100GE |

| MPC8e | 4 | 960 Gbps | 10GE, 40GE, and 100GE |

| MPC9e | 4 | 1600 Gbps | 10GE, 40GE, and 100GE |

Warning

Next-generation cards (MPC7e, MPC8e, and MPC9e) host the third generation of Trio ASIC (EA), which has a bandwidth capacity of 480 Gbps. The EA ASIC has been “rate-limited” for these card models at either 80 Gbps, 130 Gbps, or 240 Gbps.

Note

It’s important to note that the MPC bandwidth listed previously represents current-generation hardware that’s available as of the writing of this book and is subject to change with new software and hardware releases.
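One quick way to compare the models in Table 1-7 is to divide the per-MPC bandwidth by the number of PFE complexes. The Python sketch below does only that arithmetic on the figures transcribed from the table. The dictionary and function names are illustrative, and the result is a simple average, not a statement about how any ASIC is actually rate-limited.

```python
# (number of PFE complexes, per-MPC bandwidth in Gbps), transcribed from Table 1-7.
MPC_TABLE = {
    "MPC1": (1, 40),
    "MPC2": (2, 80),
    "MPC 16x10GE": (4, 140),
    "MPC3e": (1, 130),
    "MPC4e": (2, 260),
    "MPC5e": (1, 240),
    "MPC6e": (2, 520),
    "NG-MPC2e": (1, 80),
    "NG-MPC3e": (1, 130),
    "MPC7e": (2, 480),
    "MPC8e": (4, 960),
    "MPC9e": (4, 1600),
}


def gbps_per_pfe(model: str) -> float:
    """Average bandwidth per PFE complex for a given MPC model."""
    pfe_count, bandwidth = MPC_TABLE[model]
    return bandwidth / pfe_count


for model in MPC_TABLE:
    print(f"{model}: {gbps_per_pfe(model):g} Gbps per PFE complex")
```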

Similar to the first-generation DPC line cards, the MPC line cards also support the ability to operate in Layer 2, Layer 3, or Enhanced Queuing modes. This allows you to choose only the features and services required.

| Model | Full Layer 2 | Full Layer 3 | Enhanced queuing |

|---|---|---|---|

| MX-3D | Yes | No | No |

| MX-3D-Q | Yes | No | Yes |

| MX-3D-R-B | Yes | Yes | No |

| MX-3D-Q-R-B | Yes | Yes | Yes |

Most Enterprise customers tend to choose the MX-3D-R-B model as it supports both Layer 2 and Layer 3. Typically, there’s no need for Enhanced Queuing or scale when building a data center. Most Service Providers prefer to use the MX-3D-Q-R-B as it provides both Layer 2 and Layer 3 services in addition to Enhanced Queuing. A typical use case for a Service Provider is having to manage large routing tables and many customers, and provide H-QoS to enforce customer service-level agreements (SLAs).

The MX-3D-R-B is the most popular choice, as it offers full Layer 3 and Layer 2 switching support.

The MX-3D has all of the same features and services as the MX-3D-R-B but has limited Layer 3 scaling. When using BGP or an IGP, the routing table is limited to 32,000 routes. The other restriction is that MPLS L3VPNs cannot be used on these line cards.

The MX-3D-Q has all of the same features, services, and reduced Layer 3 capacity as the MX-3D, but offers Enhanced Queuing. This adds the ability to configure H-QoS and increase the scale of queues.

The MX-3D-Q-R-B combines all of these features together to offer full Layer 2, Layer 3, and Enhanced Queuing together in one line card.
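The choice between the four variants comes down to two yes/no questions: is full Layer 3 scale needed, and is Enhanced Queuing needed? A minimal Python sketch of that decision follows, using the feature matrix from the table above. The function and dictionary names are assumptions for illustration only.

```python
# (full Layer 3, Enhanced Queuing) per variant; every variant supports full Layer 2.
MPC_VARIANTS = {
    "MX-3D": (False, False),
    "MX-3D-Q": (False, True),
    "MX-3D-R-B": (True, False),
    "MX-3D-Q-R-B": (True, True),
}


def pick_variant(full_layer3: bool, enhanced_queuing: bool) -> str:
    """Return the variant whose feature set exactly matches the requirements."""
    for name, (l3, eq) in MPC_VARIANTS.items():
        if l3 == full_layer3 and eq == enhanced_queuing:
            return name
    raise ValueError("no matching variant")


# Enterprise data center: Layer 2 and Layer 3, no H-QoS.
print(pick_variant(full_layer3=True, enhanced_queuing=False))
# Service Provider edge: large routing tables plus H-QoS.
print(pick_variant(full_layer3=True, enhanced_queuing=True))
```

The sketch matches requirements exactly, so it always returns the variant with the fewest features that still meets them.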

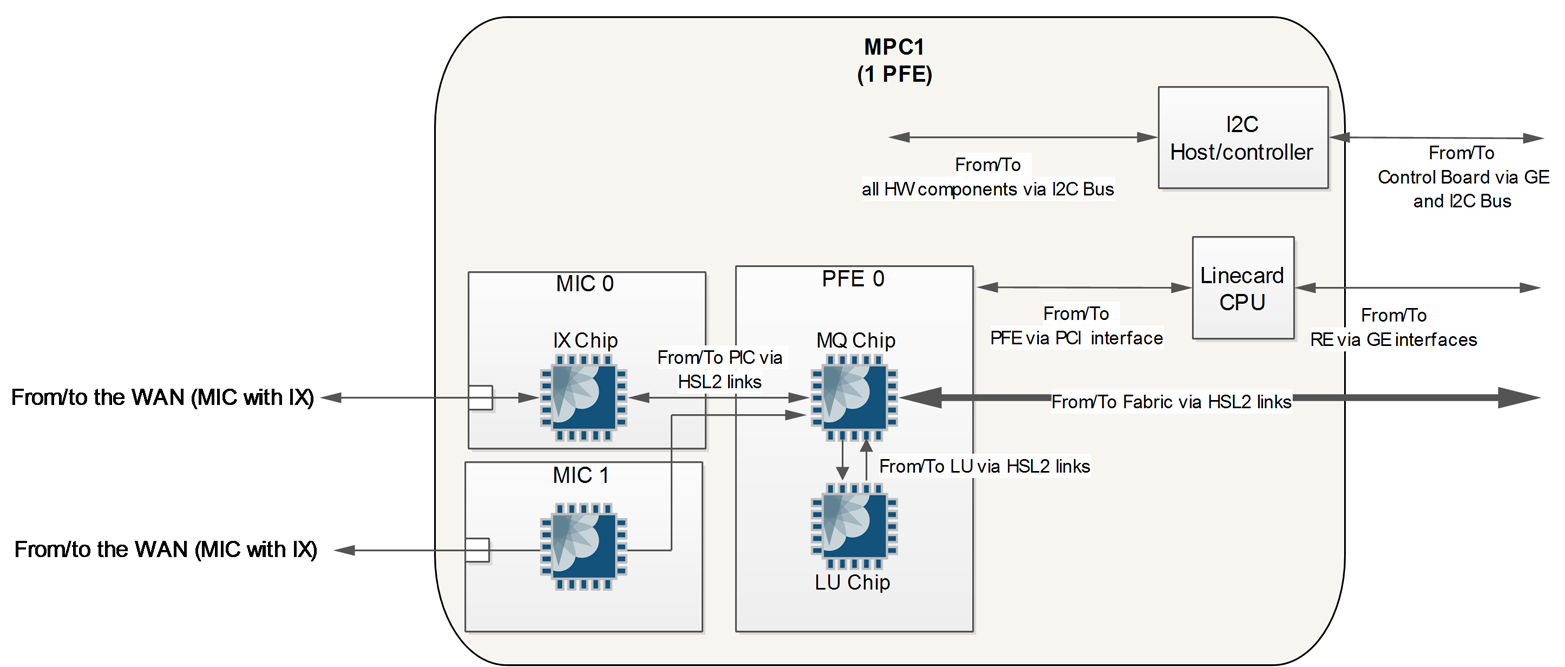

MPC1

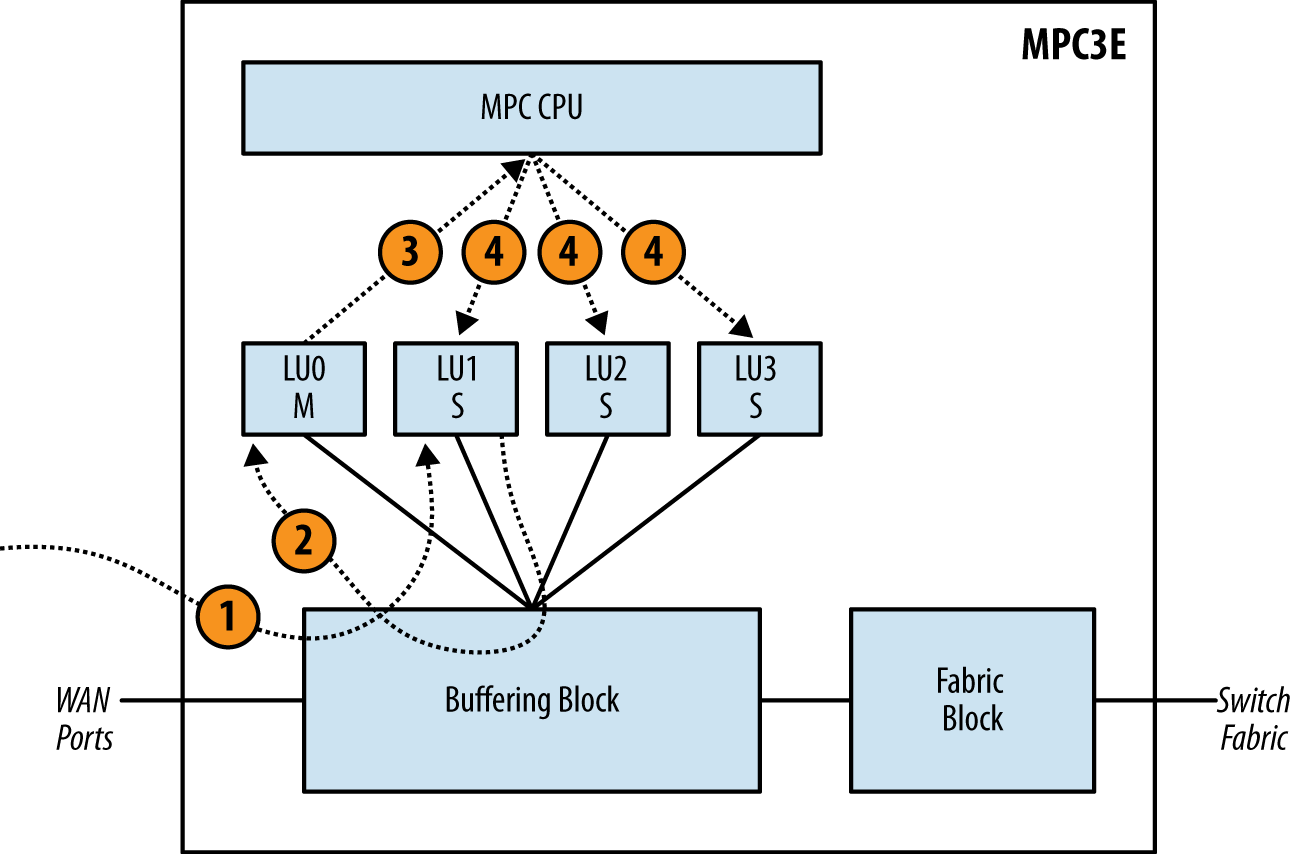

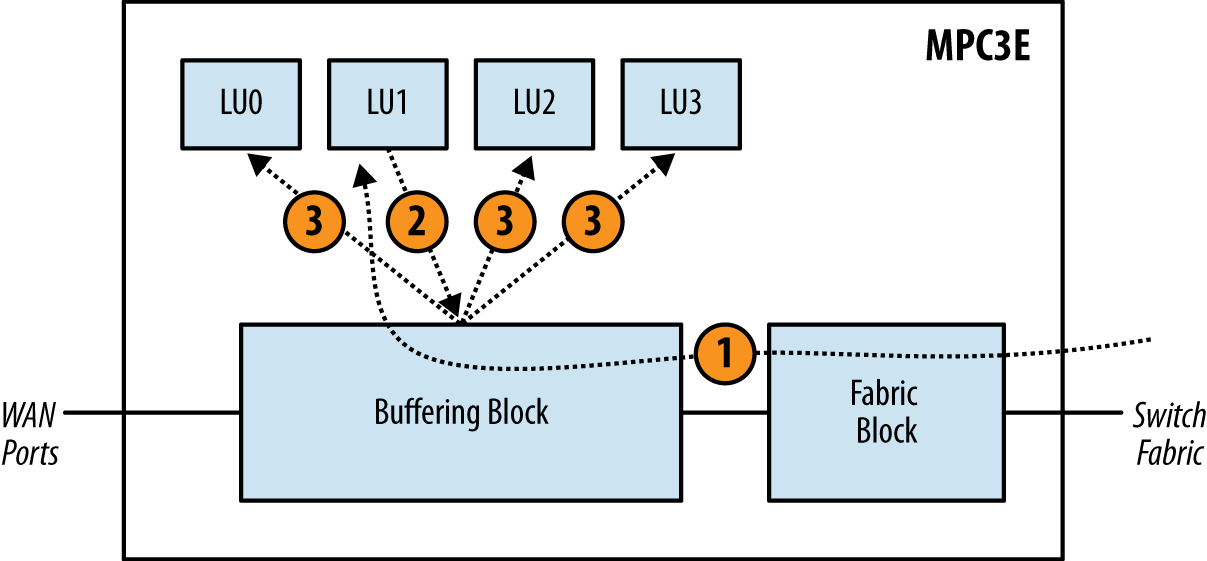

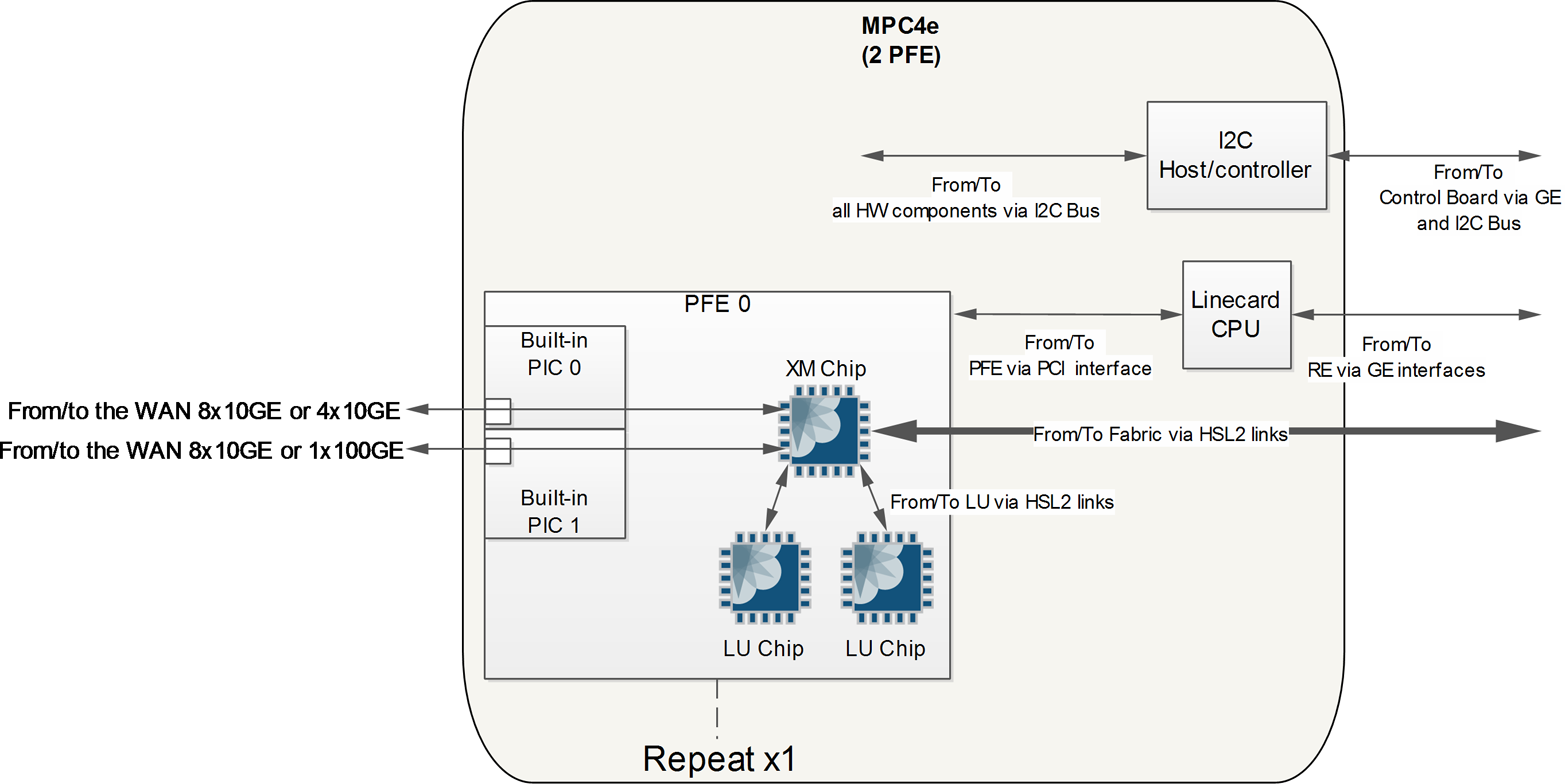

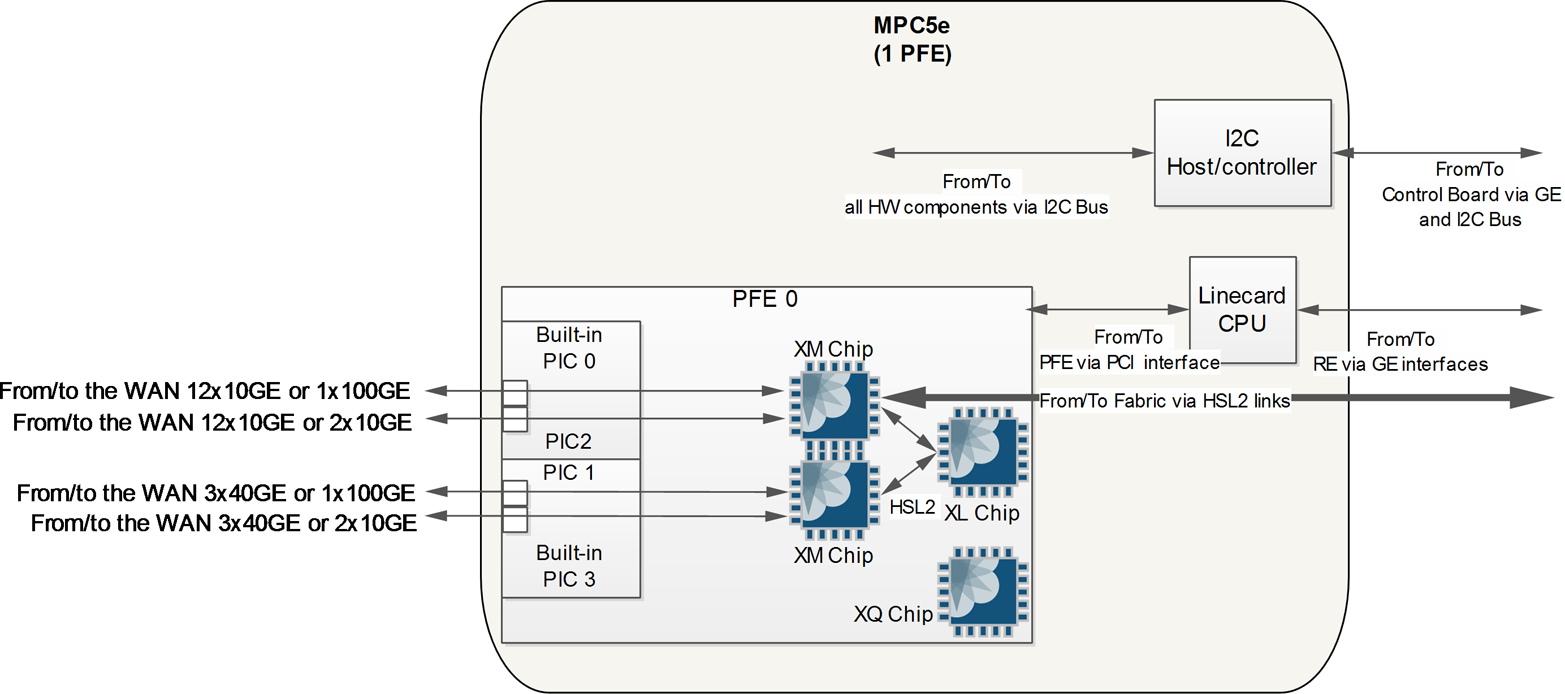

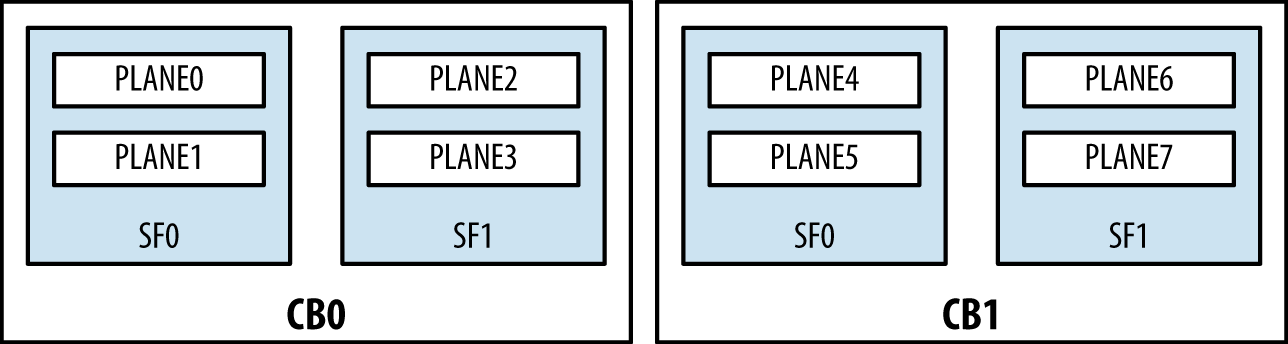

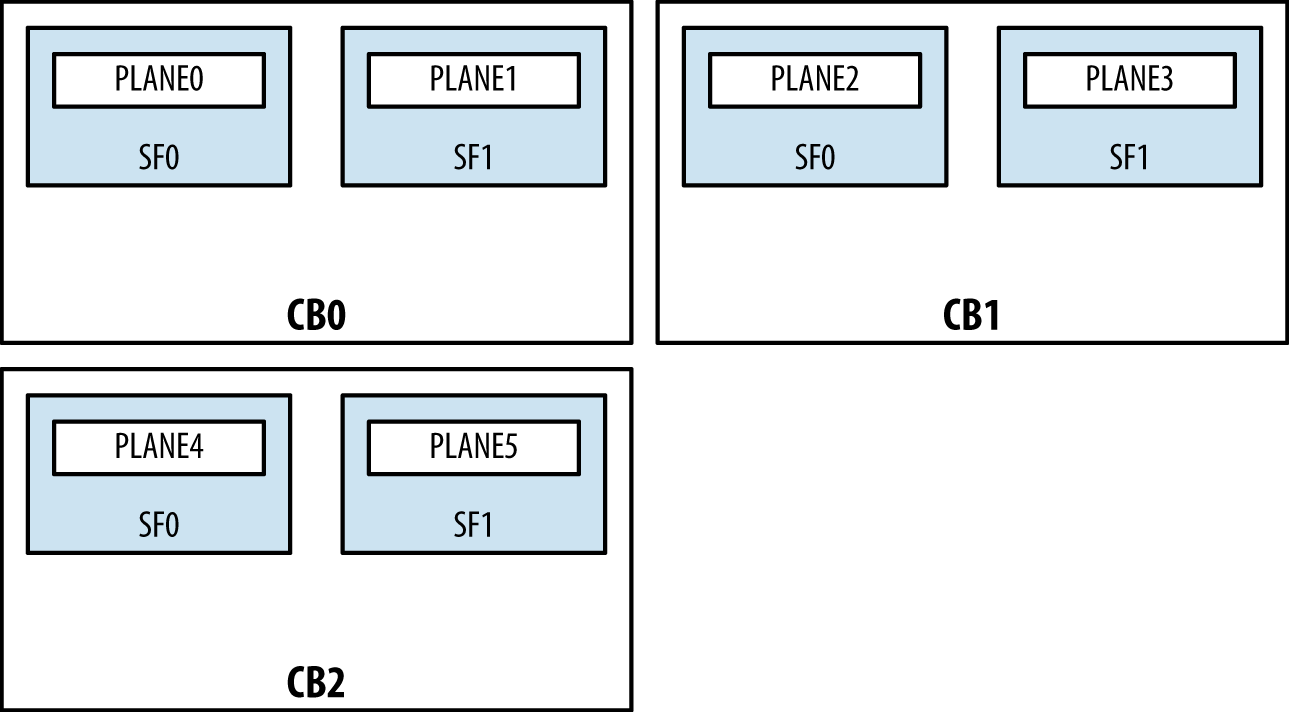

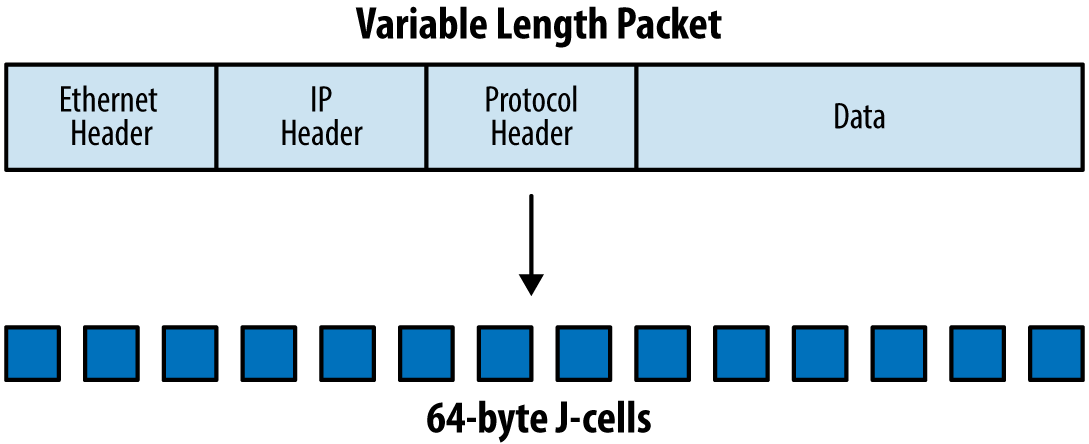

Let’s revisit the MPC models in more detail. The MPC starts off with the MPC1, which has a single Trio chipset (Single PFE). The use case for this MPC is to offer an intelligently oversubscribed line card for an attractive price. All of the MICs that are compatible with the MPC1 have the Interfaces Block (IX Chip) built into the MIC to handle oversubscription, as shown in Figure 1-32.