By taking away an agent's omniscient power of full observation, we force it to act on incomplete information, so we need to give it a way to remember what it has already seen. That memory helps the agent generalize better and learn over longer time spans. We provide it by adding recurrent layers, or blocks, composed of long short-term memory (LSTM) cells, also known as LSTM layers.
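To see why memory matters once observation is partial, consider a toy, hypothetical cue-recall task (not an ML-Agents environment): a cue is visible only on the first step, and the agent must report it on the last step. The sketch below hand-codes two policies. The memoryless one sees only the current observation. The other carries an internal state forward, standing in for what a trained recurrent layer would learn to do. The function names are illustrative assumptions.

```python
# Hypothetical cue-recall task: the cue (0 or 1) is visible only at step 0;
# every later observation is blank (None). The final action must equal the cue.
def run_episode(policy, cue, length=5):
    state = None
    action = None
    for t in range(length):
        obs = cue if t == 0 else None
        action, state = policy(obs, state)
    return action == cue


def memoryless_policy(obs, state):
    # Acts on the current observation only; on a blank observation it can
    # do no better than a fixed guess (0), and it keeps no state.
    return (obs if obs is not None else 0), None


def recurrent_policy(obs, state):
    # Stores the last cue it saw and keeps acting on it, like a hidden state.
    if obs is not None:
        state = obs
    return state, state


def success_rate(policy):
    results = [run_episode(policy, cue) for cue in (0, 1)]
    return sum(results) / len(results)


print(success_rate(memoryless_policy))  # → 0.5
print(success_rate(recurrent_policy))   # → 1.0
```

The memoryless policy is right only when the cue happens to match its fixed guess, while the policy with state succeeds every time.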

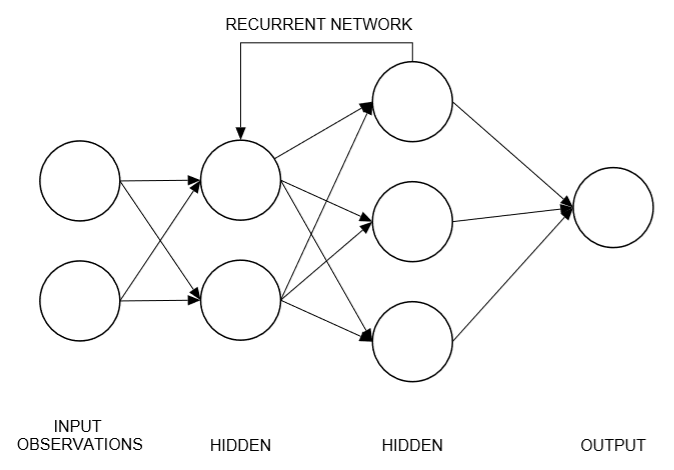

These layers, and the LSTM cells they are built from, provide our agent's temporal memory. The diagram above shows how they work.
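As a minimal sketch, one time step of a single-unit LSTM cell can be written with the standard gate equations: a forget gate decides how much of the old cell state to keep, an input gate decides how much new information to add, and an output gate decides how much of the memory to expose as the hidden state. The scalar weight names (`w_f`, `u_f`, `b_f`, and so on) are illustrative assumptions; real LSTM layers use weight matrices over vectors, and ML-Agents builds them for you.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One time step of a single-unit LSTM cell.

    x: current input (scalar); h_prev, c_prev: previous hidden and cell state.
    p: dict of scalar weights; the key names are hypothetical, for illustration.
    """
    f = sigmoid(p["w_f"] * x + p["u_f"] * h_prev + p["b_f"])     # forget gate
    i = sigmoid(p["w_i"] * x + p["u_i"] * h_prev + p["b_i"])     # input gate
    o = sigmoid(p["w_o"] * x + p["u_o"] * h_prev + p["b_o"])     # output gate
    g = math.tanh(p["w_g"] * x + p["u_g"] * h_prev + p["b_g"])   # candidate value
    c = f * c_prev + i * g       # keep part of the old memory, add new content
    h = o * math.tanh(c)         # expose a gated view of the memory
    return h, c


# Usage: feed a short sequence through the cell, carrying h and c forward.
params = {k: 0.5 for k in ("w_f", "u_f", "w_i", "u_i", "w_o", "u_o", "w_g", "u_g")}
params.update(b_f=0.0, b_i=0.0, b_o=0.0, b_g=0.0)

h, c = 0.0, 0.0
for x in [1.0, 0.0, 0.0, 0.0]:
    h, c = lstm_step(x, h, c, params)
    print(f"x={x:.1f}  h={h:.4f}  c={c:.4f}")
```

Because the cell state `c` is updated additively and scaled only by the forget gate, a cell with its forget gate near 1 can hold a value almost unchanged across many steps.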

Recurrent networks

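A recurrent network is essentially a loop: the output of a hidden layer at one time step is fed back into the network as an extra input at the next time step. This feedback connection carries information about earlier experiences, good or bad, forward in time, which is what gives the agent its memory. We can look at how this works in code by going through the following ...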
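As a minimal sketch of that feedback loop, separate from the code walkthrough the text refers to, the following implements a single-unit (Elman-style) recurrent step, h_t = tanh(w_x·x_t + w_h·h_{t-1} + b). The parameter names `w_x`, `w_h`, and `b` and their default values are illustrative assumptions, not trained weights.

```python
import math


def rnn_step(x, h_prev, w_x=1.0, w_h=0.9, b=0.0):
    # The previous hidden state h_prev is fed back in alongside the new input x.
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(inputs, h0=0.0):
    # Unroll the recurrence over a sequence, carrying h from step to step.
    h = h0
    history = []
    for x in inputs:
        h = rnn_step(x, h)
        history.append(h)
    return history


# A single input pulse at t=0, followed by zeros.
hs = run_rnn([1.0, 0.0, 0.0, 0.0, 0.0])
for t, h in enumerate(hs):
    print(f"t={t}  h={h:.4f}")
```

The pulse at t = 0 is still visible in the hidden state several steps later, gradually fading. With `w_h=0.0` there is no feedback, and the state forgets the pulse immediately. LSTM cells add gates precisely to control how quickly that memory fades.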