Stochastic gradient descent

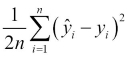

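The models we've seen so far, such as linear regression, are trained by minimizing a criterion or objective function, which is also sometimes known as the cost function. For example, writing $y_i$ for the observed output of the $i$-th of $n$ training observations and $\hat{y}_i$ for the model's prediction for that observation, the least squares cost function for a model can be expressed as:

$$J = \frac{1}{2} \sum_{i=1}^{n} \left( \hat{y}_i - y_i \right)^2$$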

We've added a constant factor of ½ in front of this for reasons that will become apparent shortly. We know from basic differentiation that when we are minimizing a function, multiplying it by a positive constant changes the minimum value itself but not the parameter values at which that minimum occurs. In linear regression, just as with our ...
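The claim about the constant factor can be checked numerically. The following is a minimal sketch in plain Python, not the book's own code: the one-parameter model `y_hat = w * x`, the toy data, the grid search, and all function names are assumptions made purely for illustration. It compares the minimizer of the least squares cost with and without the ½ factor, and also shows the usual reason for including the factor: it cancels the 2 produced by differentiating the square.

```python
def least_squares_cost(w, xs, ys, scale=0.5):
    """Scaled sum of squared errors for the hypothetical model y_hat = w * x."""
    return scale * sum((w * x - y) ** 2 for x, y in zip(xs, ys))


def half_cost_gradient(w, xs, ys):
    """Derivative with respect to w of the cost with scale=0.5.

    The 1/2 cancels the 2 from differentiating the square, leaving
    sum((w * x - y) * x) with no leading constant.
    """
    return sum((w * x - y) * x for x, y in zip(xs, ys))


def argmin_on_grid(cost, grid):
    """Return the grid point with the lowest cost (brute-force search)."""
    return min(grid, key=cost)


# Toy data, invented for this illustration only.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# Candidate values for w: 0.000, 0.001, ..., 4.000.
grid = [i / 1000 for i in range(4001)]

w_half = argmin_on_grid(lambda w: least_squares_cost(w, xs, ys, 0.5), grid)
w_full = argmin_on_grid(lambda w: least_squares_cost(w, xs, ys, 1.0), grid)

print("minimizer with the 1/2 factor:   ", w_half)
print("minimizer without the 1/2 factor:", w_full)
print("gradient at the minimizer:       ", half_cost_gradient(w_half, xs, ys))
```

Both searches should select the same value of `w`, even though the minimum cost differs by a factor of two, and the gradient at that point should be numerically close to zero.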
