Chapter 4. Setting the Foundation for Your Data Lake

In Chapter 3, we examined the maturation stages that you will go through as you begin to actualize the value of your data. Now, it’s time to look more closely at what it takes to build the data lake.

Setting Up the Storage for the Data Lake

One of your first considerations in building your data lake will be storage. There are three basic types of data storage: immutable raw storage, optimized storage, and scratch databases. The type of data and how you use it will determine which data goes where.

Immutable Raw Storage Bucket

Data kept in immutable storage cannot, and should not, be changed after it has been written. In an immutable raw storage area in your data lake, you store data that hasn’t been scrubbed. You might never have even looked at it. But it should have sufficient self-descriptive language, or metadata, around it—such as table names and column names—so that you can determine where the data came from. You might store it in a text format such as JavaScript Object Notation (JSON) or comma-separated values (CSV), or perhaps even Apache Avro. Most people choose to store it in either JSON or CSV files.

Immutable raw storage fills many data storage needs. Three of the most important are:

- Disaster recovery

-

If anything ever happens to the original data stores, you have an exact replica.

- Forensic analysis

-

Immutable raw storage records can be used to trace problems, such as when bugs were introduced into a program.

- Ability to re-create and update optimized formats

-

Immutable raw storage ensures that the data is always there in its original state, to be used again if needed.

For example, if your transactional tables are dumped every morning into the immutable raw storage area, you would have snapshots of data, which is very important for the three aforementioned reasons. Financial companies may need these snapshots to review data and see how it has changed over time. You might need these tables if you’re ever audited, or if transformed files become corrupted.

After you have an audit table, transactional systems become unwieldy and difficult to manage—that’s why you want a raw data bucket. You shouldn’t change the data in it; after all, it’s raw, static, slow, and pretty damn dirty. But you can query and optimize it.

Warning

When you create this raw storage area, remove all “destructive” privileges from the data to ensure that no one can alter or delete it. You also need to do careful access control if the data contains any personally identifiable information (PII).

Optimized Storage Bucket

As your raw data grows, your queries into it become slower. No one likes waiting hours to see whether their query succeeds, only to find that it failed. Data scientists and analysts need their questions answered to turn data into insights faster than that. To gain this speed, transforming your data by storing it using one of the many optimized formats available. Three open source choices are Parquet, optimized row column (ORC), and Avro.

Apache Parquet

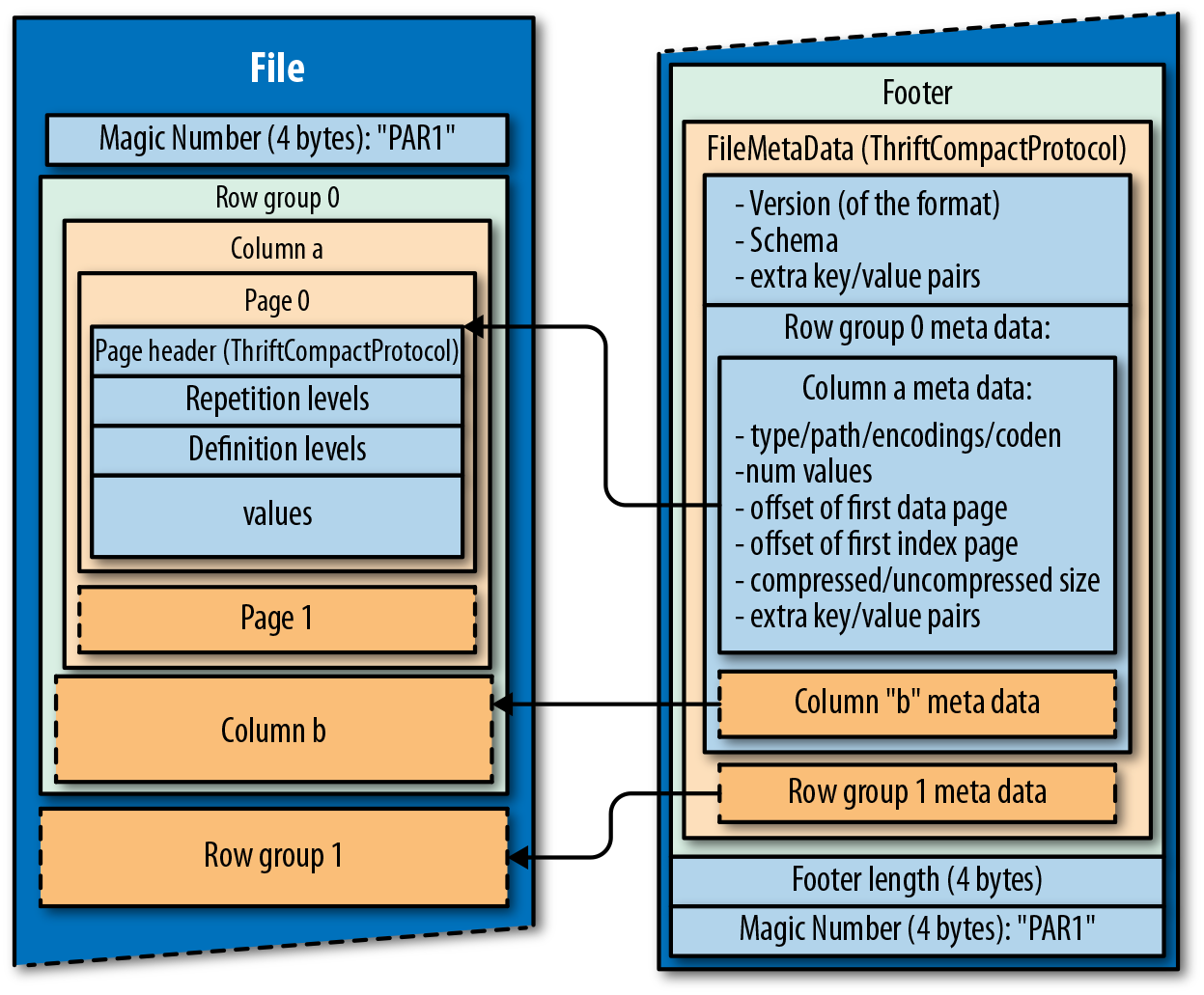

Apache Parquet (Figure 4-1) is an open source, column-oriented storage format for Hadoop. Parquet is optimized to work with complex data in bulk and includes methods for efficient data compression and encoding types.

Figure 4-1. The Parquet file structure

ORC

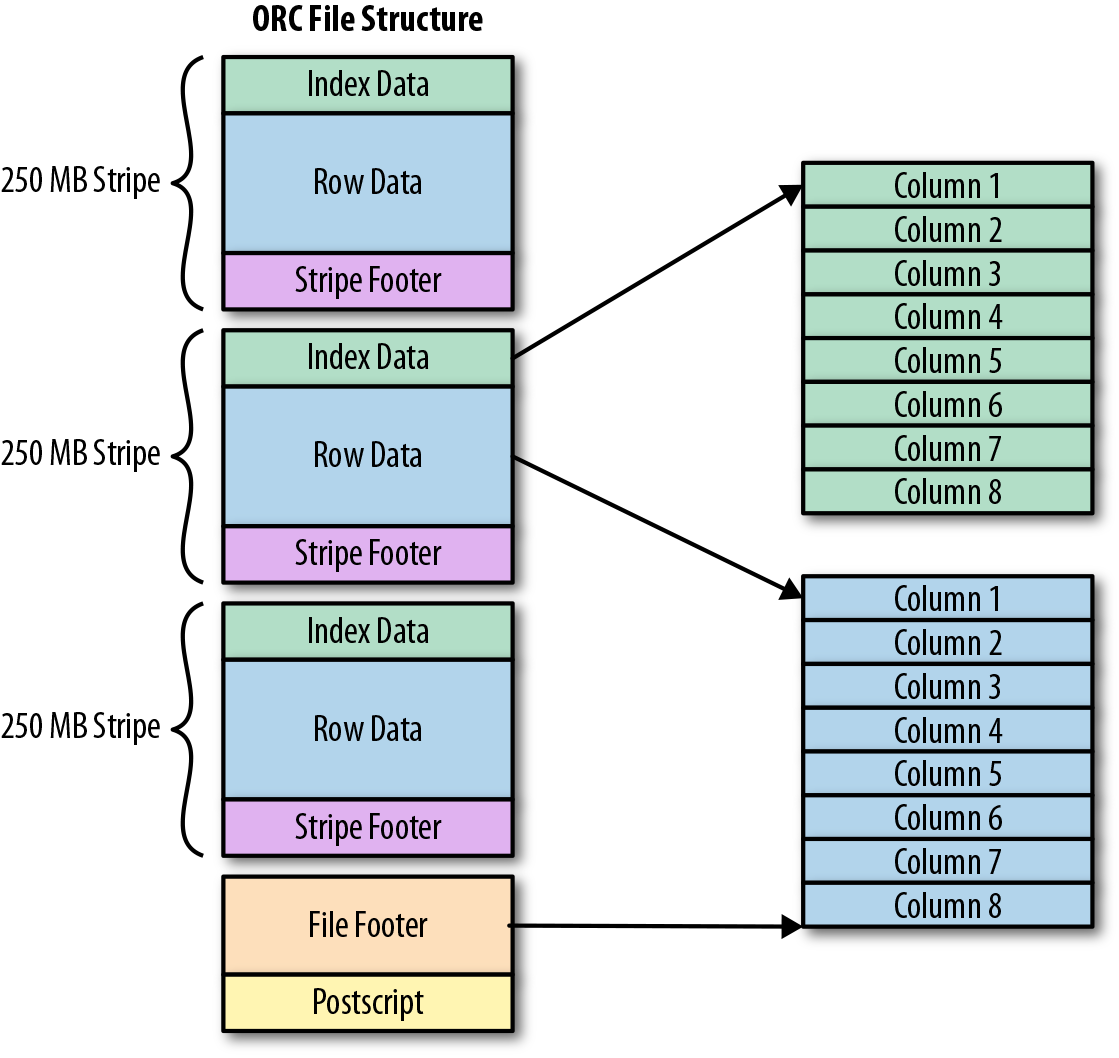

ORC (Figure 4-2) stores collections of rows in one file, with the row data stored in a columnar format. This allows parallel processing of row collections across a cluster. Each file with the columnar layout is optimized for compression. Skipping data and columns reduces both read and decompression loads.

Figure 4-2. The ORC file structure

Avro

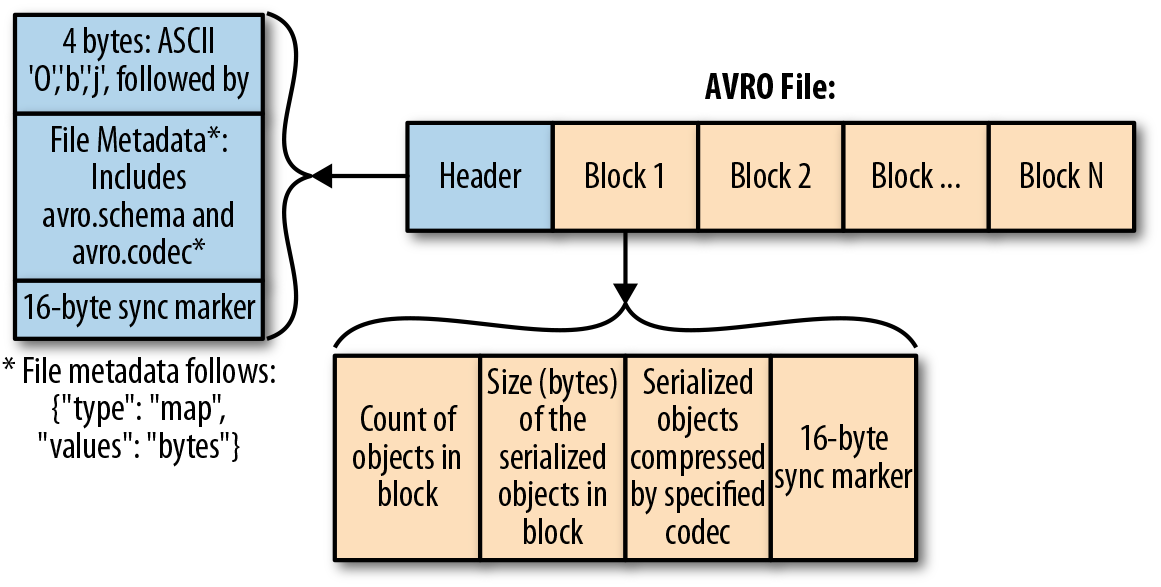

Avro (Figure 4-3) is a remote procedure call and data serialization framework. Developed within Apache’s Hadoop project, it uses JSON to define data types and protocols, and serializes data in a compact binary format.

Figure 4-3. The Avro file format

You can split files stored in Parquet, ORC, and Avro formats across multiple disks, which enables scalability and parallel processing. JSON and XML files cannot be split, which seriously limits their usefulness.

All three formats carry the data schema within the files themselves, which is to say they’re self-described. You can take an ORC, Parquet, or Avro file from one cluster and load it on a completely different machine, and the machine will know what the data is and be able to process it.

In addition to being file formats, Parquet, ORC, and Avro are also on-the-wire formats, which means you can use them to pass data between nodes in your Hadoop cluster. Table 4-1 compares the characteristics of the formats.

| ORC | Parquet | Avro | |

|---|---|---|---|

| Row or column | Column | Column | Row |

| Compression | Great | Great | Good |

| Speedup (compared to text file) | 10–100x | 10–100x | 10x |

| Schema evolution | Good | Better | Best |

| Platforms | Hive, Spark, Presto | Hive, Spark, Presto | Hive, Spark |

| Splittability | Best | Best | Better |

| File statistics | Yes | Yes | No |

| Indexes | Yes | Yes | No |

| Bloom filters | Yes | No | No |

Scratch Database

Finally, you will usually create what are called user scratch databases. These are necessary because data scientists and analysts will want to take data out of the optimized schema and build test tables for their own purposes. But because you don’t want anyone to inadvertently mess up your data lake—or turn it into a data swamp—you need a place where users can have their own little sandboxes to play in that won’t mess up the clean, well-defined, and well-structured data in the optimal data space.

For this, too, you need governance. How big can these databases be? How do you monitor them? Do you need an automated report fired off each week to remind people to clean up their data? There’s a lot of housekeeping to perform when you have scratch databases in your data lake.

Here are some of the benefits of a scratch database:

-

Users can do their work without fear of overwriting sources of truth.

-

DataOps can manage resources by user, team, or product.

-

The business has the ability to place governance controls at the user level.

The Sources of Data

For many businesses, the primary source of data in their data lake is transactional systems, MySQL, PostgreSQL, and Oracle, among others. These are the frontend databases that interact with your customers. We recommend pulling data out of these databases, converting it to JSON or CSV, and then storing it in your immutable raw storage area where it can be made easily available to your users.

You can also have data feeds that come from internal applications or third-party data services.

The other kind of data is the clickstream data coming in from applications, social media, the IoT, or sensors. Those can have any number of formats. Although most applications use JSON for this kind of data, remember that it’s coming from your edge, and that it’s probably had very little scrubbing. Again, we recommend putting that into the raw data bucket.

Getting Data into the Data Lake

Because data can be moved directly into the raw storage area of the data lake from a broad variety of sources in a wide range of formats, the data lake becomes a very compelling business tool. Data scientists and analysts now have the “one source of truth” we talked about in Chapter 2, and they can immediately begin querying the data for insights without having to worry about navigating silos or waiting for the data to be modeled and put through ETL processes.

Any refining, structuring, filtering, or preparation of data can happen whenever the data is needed by the business.

You can move data into this raw storage area using a broad range of tools, including ETL tools, bulk file-loading facilities, data integration tools, and even data movement tools that were specifically created for big data technologies. The result is that over time all the raw data—structured and unstructured—from all over the organization can be made available in one place.

Automating Metadata Capture

If you build it correctly, a data lake can add significant value to your data architecture. By providing you with large data storage, processing, and analytics capabilities,it enables you to be very agile when filling it or accessing it. When used together with a data warehouse, it can free up costly resources and more efficiently process large volumes of data.

To work most efficiently, a data lake needs to take advantage of automated metadata capturing and management tools. You need to capture and maintain attributes like data lineage, data quality, and usage history to make the data actually usable, yet doing this requires a highly automated metadata extraction, capture, and tracking facility. Manual metadata management processes cause the metadata to quickly fall out of synchronization with the data itself, turning the data lake, again, into a data swamp.

As discussed in Chapter 3, a well-designed data lake will integrate closely with the enterprise data warehouse and its ETL, data cleansing, and data profiling tools. Your users should be able to run reports and perform analyses using the tools they’ve always used.

Data Types

You’ll have many, many different types of data to work with as you fill up your data lake. These can be divided into three groups: structured (which includes the data you can put into ORC and Parquet); semi-structured (text, CSV, and JSON); and unstructured (images and binary files). The three data types exist on a continuum, with unstructured data being the least formatted and structured data being the most formatted.

The more structured the data, the easier it is to work with, whereas semi-structured and unstructured data create more challenges. Yet all types of data play roles in effective data analysis.

Let’s now take a closer look at each of them.

Structured Data

Structured data is data that has been organized into a formatted repository, typically a database, so that its elements can be made addressable for more effective processing and analysis. Some examples of structured data include HR data on employees—for example, names, Social Security numbers, addresses—that is captured and put in separate fields so that it can be easily searched and retrieved. The same is true for sales data; when a customer buys a product, you have a structured record of what that person bought, the date it was purchased, and the price.

Semi-Structured Data

Semi-structured data has some structure to it; for example, it maintains internal tags and markings that identify separate data elements, enabling you to create information groupings and hierarchies. Both documents and databases can be semi-structured. Although this type of data is at most 10% of the data pie, it is used in many business-critical applications. CSV, XML, and JSON are all examples of semi-structured data. Some less common examples include email and fixed-length electronic data interchange formats.

Unstructured Data

Unstructured data encompasses everything that isn’t structured or semi-structured data. It has an internal structure but is not structured via predefined data models or schema, and can be textual or nontextual, human- or machine-generated. Text documents and the different kinds of multimedia files (audio, video, photo) are all types of unstructured data file formats.

The reason all of this matters is because a cloud data lake allows you to quickly throw structured, semi-structured, and unstructured datasets into it and to analyze them using the specific technologies that make sense for each particular workload or use case. Table 4-2 compares the three data types.

| Structured data | Semi-structured data | Unstructured data | |

|---|---|---|---|

| Example | RDMS tables, columnar stores | XML, JSON, CSV | Images, audio, binary, text, PDF files |

| Uses | Transactional or analytical stores | Clickstream, logging | Photos, songs, PDF files, binary storage formats |

| Transaction management | Mature transactions and concurrency | Maturing transactions and concurrency | No transaction management or concurrency |

| Version management | Versioned over tuples, rows, tables | Not very common; possible over tuples and graphs | Versioned as a whole |

| Flexibility | Rigorous schema | Flexible, tolerant schema | Flexible due to no schema |

Storage Management in the Cloud

In Chapter 5, we look at how data life cycle management is a policy-based approach to managing the flow of a system’s data throughout its life cycle—from creation and initial storage to the time when it becomes obsolete and is deleted. You will need to make decisions when it comes to where different datasets are stored and when to move them to a different storage type or delete them.

A multitemperature data management solution refers to one system with different types of data storage and access. In such a system there might be data that is frequently accessed on fast storage (hot data), as well as less frequently accessed data stored on slightly slower storage (warm data), and rarely accessed data stored on the slowest storage an organization has (cold data).

AWS, for example, has a cold storage format called, appropriately enough, Glacier. Although it’s much cheaper than the typical, immediately retrievable storage in S3, you don’t have immediate access to data in Glacier. It could take up to 24 hours to get your data out of Glacier and back into your hot storage area.

In fact, Amazon gives you tiers in S3 so that you can have frequent storage access, infrequent access, and probably-never-accessed data (archival data in case you are ever audited).

Data Governance

As your data journey continues, you will need to think of data governance. For example, how do you handle National Provider Identifiers (NPI) such as customers’ financial information and PII?

Questions like the following need to be answered:

-

Where did this data come from?

-

What is the data lineage?

-

How has it been transformed?

-

What did it look like originally?

-

What is the shape of my data?

-

How will the schema evolve?

Though many startups don’t use credit cards at first for fear of fraud or because of cost, eventually they start storing credit card numbers and customers’ PII, and then they’re under a different set of guidelines: payment card industry (PCI) regulations.

At this point there is a shift in data governance needs. These startups need to begin adding more change controls with respect to who can access the data and how they access it to comply with regulations like the European Union’s General Data Protection Regulation (GDPR) or Health Insurance Portability and Accountability Act (HIPAA) for medical data. The next chapter discusses the topic of data governance in more detail.

Get Operationalizing the Data Lake now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.