Chapter 3. Image Vision

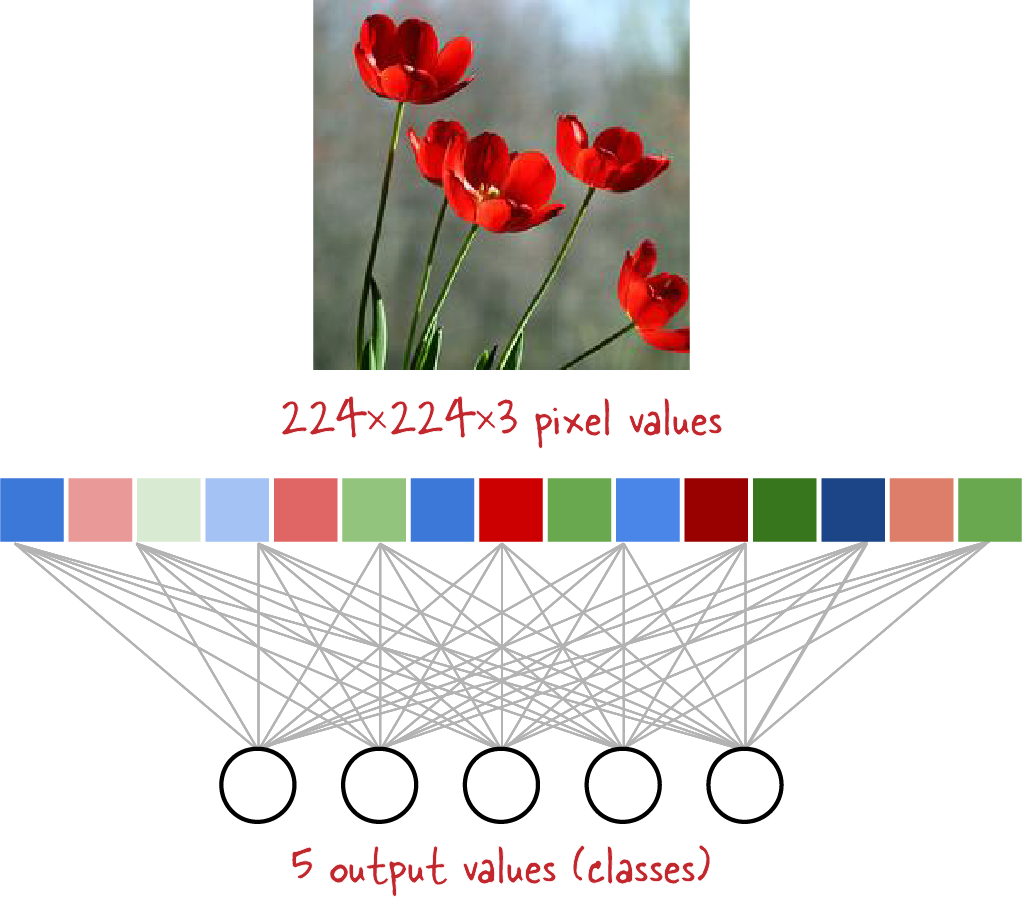

In Chapter 2, we looked at machine learning models that treat pixels as being independent inputs. Traditional fully connected neural network layers perform poorly on images because they do not take advantage of the fact that adjacent pixels are highly correlated (see Figure 3-1). Moreover, fully connecting multiple layers does not make any special provisions for the 2D hierarchical nature of images. Pixels close to each other work together to create shapes (such as lines and arcs), and these shapes themselves work together to create recognizable parts of an object (such as the stem and petals of a flower).

In this chapter, we will remedy this by looking at techniques and model architectures that take advantage of the special properties of images.

Tip

The code for this chapter is in the 03_image_models folder of the book’s GitHub repository. We will provide file names for code samples and notebooks where applicable.

Figure 3-1. Applying a fully connected layer to all the pixels of an image treats the pixels as independent inputs and ignores that images have adjacent pixels working together to create shapes.

Pretrained Embeddings

The deep neural network that we developed in Chapter 2 had two hidden layers, one with 64 nodes and the other with 16 nodes. One way to think about this network architecture is shown in Figure 3-2. In some sense, all the information ...

Get Practical Machine Learning for Computer Vision now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.