February 2019

Beginner to intermediate

382 pages

10h 1m

English

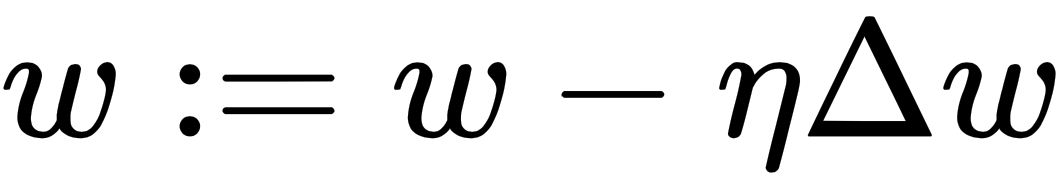

Gradient descent (also called steepest descent) is a first-order iterative optimization procedure for minimizing an objective function. In each iteration, it takes a step proportional to the negative gradient (the derivative, in the single-variable case) of the objective function at the current point. As a result, the candidate point moves downhill, iteration by iteration, toward a minimum of the objective function. The proportionality factor is called the learning rate, or step size. It can be summarized in a mathematical equation as follows:
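In standard notation, the update rule is commonly written as shown here. The symbols $\eta$ (learning rate) and $J$ (objective function) are assumed names and are not fixed by the text above:

$$
\mathbf{w} := \mathbf{w} - \eta \, \nabla J(\mathbf{w})
$$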

Here, the left w is the weight vector after a learning step, and the right ...
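The update rule can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the book's own code: the function name `gradient_descent`, the quadratic objective, and the chosen learning rate and iteration count are all hypothetical values picked for the example.

```python
import numpy as np


def gradient_descent(grad, w0, learning_rate=0.1, n_iter=100):
    """Repeatedly step against the gradient, starting from w0.

    grad: function returning the gradient of the objective at w (assumed name).
    """
    w = np.asarray(w0, dtype=float)
    for _ in range(n_iter):
        # Move a step proportional to the negative gradient.
        w = w - learning_rate * grad(w)
    return w


# Hypothetical objective: J(w) = ||w - target||^2, minimized at w = target.
target = np.array([3.0, -1.0])


def grad_J(w):
    # Gradient of the squared distance to target.
    return 2.0 * (w - target)


w_min = gradient_descent(grad_J, w0=[0.0, 0.0])
print(w_min)  # should be very close to target
```

With this objective, each step shrinks the distance to the minimum by a constant factor of `1 - 2 * learning_rate`, so a learning rate that is too large (here, above 1.0) would make the iterates diverge instead of converge.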