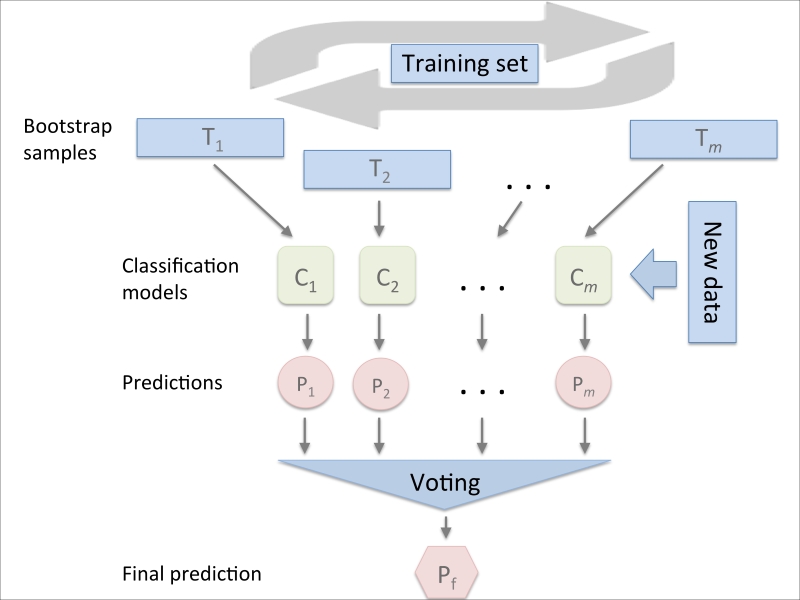

Bagging – building an ensemble of classifiers from bootstrap samples

Bagging is an ensemble learning technique that is closely related to the MajorityVoteClassifier that we implemented in the previous section, as illustrated in the following diagram:

However, instead of using the same training set to fit the individual classifiers in the ensemble, we draw bootstrap samples (random samples with replacement) from the initial training set, which is why bagging is also known as bootstrap aggregating. To provide a more concrete example of how bootstrapping works, let's consider the example shown in the following figure. Here, we have seven different ...

Get Python: Real-World Data Science now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.