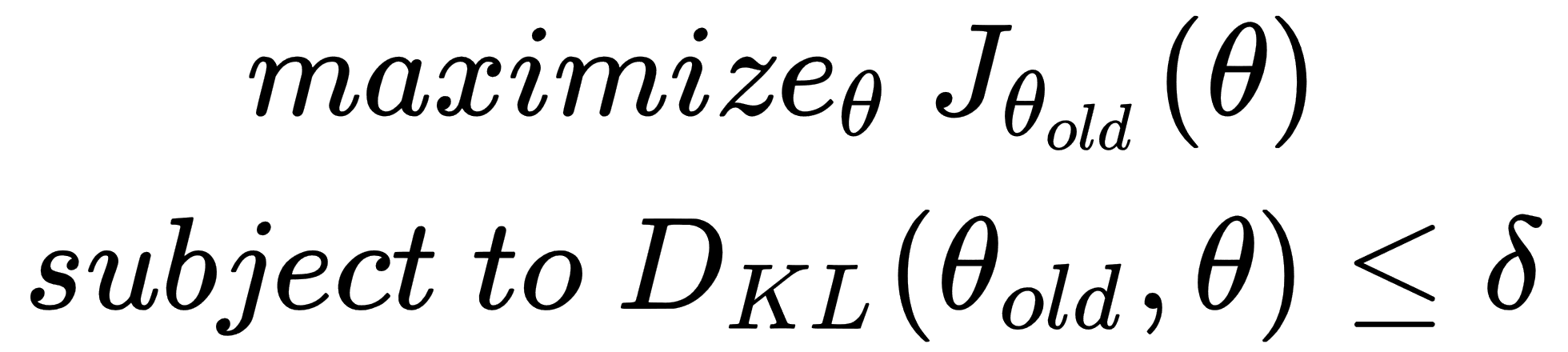

From a broad perspective, TRPO can be seen as a continuation of the NPG algorithm for nonlinear function approximation. Its main improvement is a constraint on the KL divergence between the new and the old policy, which defines a trust region. This lets the policy take larger update steps while always staying within the trust region. The resulting constrained optimization problem is formulated as follows:

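$$\underset{\theta}{\text{maximize}} \;\; L_{\theta_{old}}(\theta) \quad \text{subject to} \quad \bar{D}_{KL}(\theta_{old}, \theta) \le \delta \tag{7.2}$$

Here, $L_{\theta_{old}}(\theta)$ is the objective surrogate function that we'll see soon, $\bar{D}_{KL}(\theta_{old}, \theta)$ is the KL divergence between the old ...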
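To make the two ingredients of the constrained problem concrete, the following is a minimal NumPy sketch for a discrete-action policy. It is an illustration under stated assumptions, not the implementation developed in this chapter: the surrogate is assumed to take the usual importance-sampling form (probability ratio times advantage), the KL divergence is averaged over sampled states, and the function names, the toy probabilities, and the value of `delta` are all hypothetical.

```python
import numpy as np


def surrogate_objective(new_probs, old_probs, actions, advantages):
    """Assumed surrogate: mean of (pi_new(a|s) / pi_old(a|s)) * advantage."""
    idx = np.arange(len(actions))
    ratio = new_probs[idx, actions] / old_probs[idx, actions]
    return float(np.mean(ratio * advantages))


def mean_kl(old_probs, new_probs, eps=1e-8):
    """KL(old || new) of categorical policies, averaged over the sampled states."""
    kl_per_state = np.sum(
        old_probs * (np.log(old_probs + eps) - np.log(new_probs + eps)), axis=1
    )
    return float(np.mean(kl_per_state))


def within_trust_region(old_probs, new_probs, delta):
    """True if the candidate policy satisfies the KL constraint."""
    return mean_kl(old_probs, new_probs) <= delta


# Toy data (hypothetical): 2 sampled states, 2 actions per state.
old_probs = np.array([[0.5, 0.5], [0.8, 0.2]])   # pi_old(.|s)
new_probs = np.array([[0.6, 0.4], [0.7, 0.3]])   # candidate pi_new(.|s)
actions = np.array([0, 1])                       # actions taken under pi_old
advantages = np.array([1.0, -0.5])               # advantage estimates

print("surrogate:", surrogate_objective(new_probs, old_probs, actions, advantages))
print("mean KL:", mean_kl(old_probs, new_probs))
print("inside trust region (delta=0.05):", within_trust_region(old_probs, new_probs, 0.05))
```

A candidate update is acceptable only if it stays inside the trust region; among acceptable candidates, the one with the larger surrogate value is preferred. With these toy numbers, tightening `delta` to 0.01 would reject the same candidate policy.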