Chapter 1. Why Stream?

Life doesn’t happen in batches.

Many of the systems we want to monitor and to understand happen as a continuous stream of events—heartbeats, ocean currents, machine metrics, GPS signals. The list, like the events, is essentially endless. It’s natural, then, to want to collect and analyze information from these events as a stream of data. Even analysis of sporadic events such as website traffic can benefit from a streaming data approach.

There are many potential advantages of handling data as streams, but until recently this method was somewhat difficult to do well. Streaming data and real-time analytics formed a fairly specialized undertaking rather than a widespread approach. Why, then, is there now an explosion of interest in streaming?

The short answer to that question is that superb new technologies are now available to handle streaming data at high-performance levels and at large scale, and that is leading more organizations to handle data as a stream. The improvements in these technologies are not subtle. Previous rates of message throughput for persistent message queues were in the range of thousands of messages per second. The new technologies we discuss in this book can deliver rates of millions of messages per second, even while persisting the messages, and they can be scaled horizontally to achieve even higher rates. Extremely high performance at scale is one of the chief advances, but it isn’t the only benefit you can get from modern streaming systems.

The upshot of these changes is that getting real-time insights from streaming data has gone from promise to widespread practice. As it turns out, stream-based architectures additionally provide fundamental and powerful benefits.

Note

Streaming data is not just for highly specialized projects. Stream-based computing is becoming the norm for data-driven organizations.

New technologies and architectural designs let you build flexible systems that are not only more efficient and easier to build, but that also better model the way business processes take place. This is true in part because the new systems decouple dependencies between processes that deliver data and processes that make use of data. Data from many sources can be streamed into a modern data platform and used by a variety of consumers almost immediately or at a later time as needed. The possibilities are intriguing.

We will explain why this broader view of streaming architecture is valuable, but first we take a look at how people use streaming data, now or in the very near future. One of the foremost sources of continuous data is from sensors in the Internet of Things (IoT), and a rapidly evolving sector in IoT is the development of futuristic “connected vehicles.”

Planes, Trains, and Automobiles: Connected Vehicles and the IoT

The modern and near-future personal automobile will likely exchange information with several different audiences. These may include the driver, the manufacturer, the telematics provider, in some cases the insurance company, the car itself, and soon, other cars on the road.

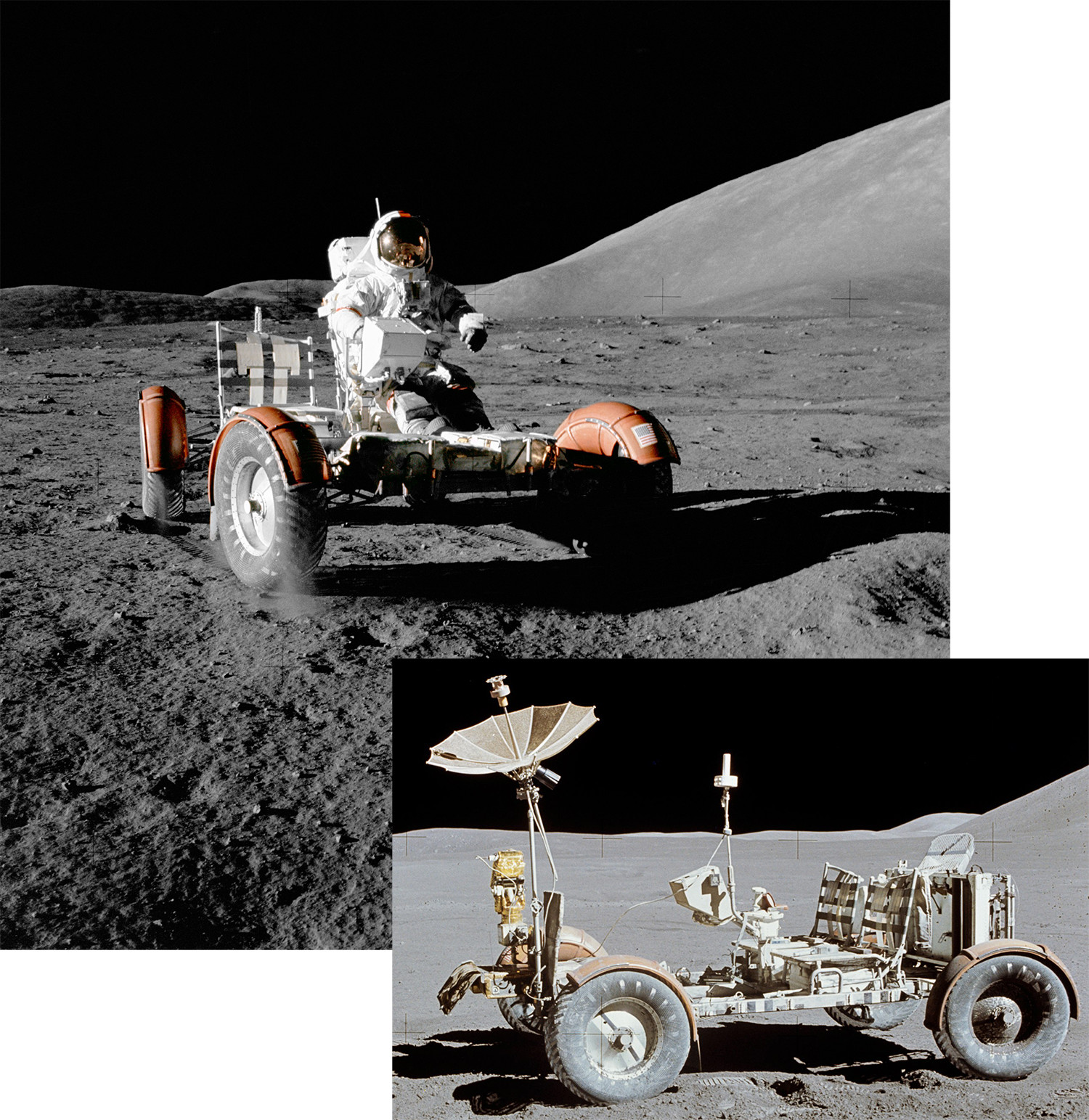

Connected cars are one of the fastest-changing specialties in the IoT connected vehicles arena, but the idea is not entirely new. One of the earliest connected vehicles—a distant harbinger of today’s designs—came to the public’s attention in the early 1970s. It was NASA’s Lunar Roving Vehicle (LRV), shown in action on the moon in the images of Figure 1-1.

At a time when drivers on Earth navigated using paper road maps (assuming they could successfully unfold and refold them) and checked their oil, coolant, and tire pressure levels manually, the astronaut drivers of the LRV navigated on the moon by continuously sending data on direction and distance to a computer that calculated all-important insights needed for the mission. These included overall direction and distance back to the Lunar Module that would carry them home. This “connected car” could talk to Earth via audio or video transmissions. Operators at Mission Control were able to activate and direct the video camera on the LRV from their position on Earth, about a quarter million miles away.

Figure 1-1. Top: US NASA astronaut and mission commander Eugene A. Cernan performs a check on the LRV while on the surface of the moon during the Apollo 17 mission in 1972. The vehicle is stripped down in this photo prior to being loaded up for its mission. (Image credit: NASA/astronaut Harrison H. Schmitt; in the public domain: http://bit.ly/lrv-apollo17.) Bottom: A fully equipped LRV on the moon during the Apollo 15 mission in 1971. This is a connected vehicle with a low-gain antenna for audio and a high-gain antenna to transmit video data back to Mission Control on Earth. (Image credit: NASA/Dave Scott; cropped by User:Bubba73; in the public domain: http://bit.ly/lvr-apollo15.)

Vehicle connectivity for Earth-bound cars has come a long way since the Apollo missions. Surprisingly, the services that automobile drivers most often request from their connectivity include listening to their own music playlists and using their cell phones more easily while driving; it’s almost as though they want a cell phone on wheels. Other desired services for connected cars include being able to get software updates from the car manufacturer, such as an update to make warning signals operate correctly. Newer car models make use of environmental data for real-time adjustments in traction or steering. Data about the car’s function can be used for predictive maintenance or to alert insurance companies about the driver and vehicle performance. (As of the date of writing this book, modern connected cars do not communicate with anyone on the moon, although they readily make use of 4G networks.)

Today’s cars are also equipped with an event data recorder (EDR), also called a “black box,” like the well-known device on airplanes. Huge volumes of sensor data for a wide variety of parameters are collected and stored, mainly for use in case of an accident or malfunction.

Connectivity is particularly important for high-performance automobiles. Formula 1 racecars are connected cars. Modern Formula 1 cars sample hundreds of sensors at up to 1 kHz (or even more with the latest technology) and transmit the data back to the pits via an RF link for analysis and forwarding back to headquarters.

Cars are not the only IoT-enabled vehicles. Trains, planes, and ships also make use of sensor data, GPS tracking, and more. For example, partnerships between British Railways, Cisco Systems, and telecommunication companies are building connected systems to reduce risk for British trains. Heavily equipped with sensors, the trains monitor the tracks, and the tracks monitor the trains while also communicating with operating centers. Data such as information about train speed, location, and function as well as track conditions are transmitted as continuous streams of data that make it possible for computer applications to provide low-latency insights as events happen. In this way, engineers are able to take action in a timely manner.

These examples underline one of the main benefits of real-time analysis of streaming data: the ability to respond quickly to events.

Streaming Data: Life As It Happens

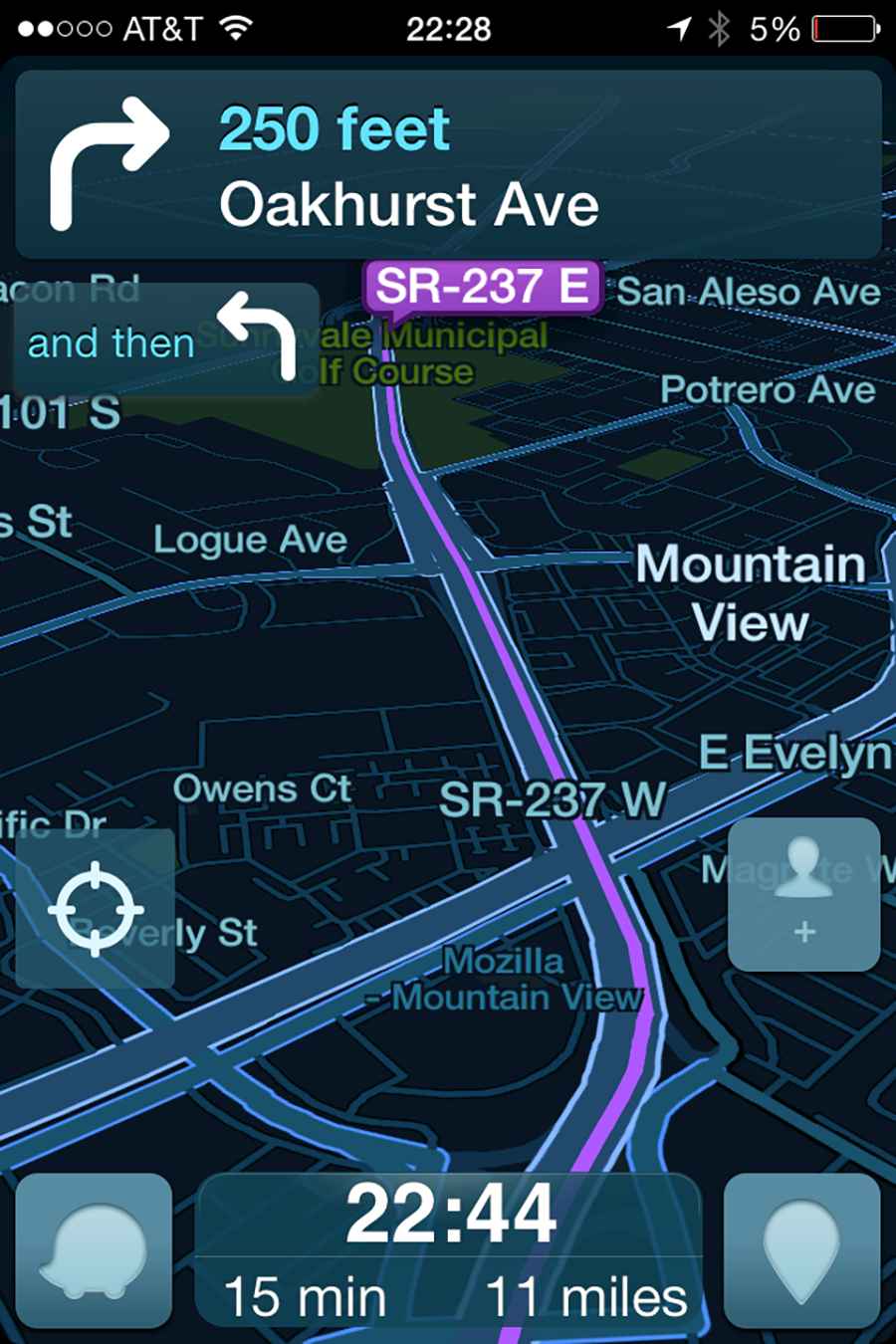

The benefits of handling streaming data well are not limited to getting in-the-moment actionable insights, but that is one of the most widely recognized goals. There are many situations where in order for a response to be of value, it needs to happen quickly. Take for instance the situation of crowd-sourced navigation and traffic updates provided by the mobile application known as Waze. A view of this application is shown in Figure 1-2. Using real-time streaming input from millions of drivers, Waze reports current traffic and road information. These moment-to-moment insights allow drivers to make informed decisions about their route that can reduce gasoline usage, travel time, and aggravation.

Figure 1-2. Display of a smartphone application known as Waze. In addition to providing point-to-point directions, it also adds value by supplying real-time traffic information shared by millions of drivers.

Knowing that there is a slow-down caused by an accident on a particular freeway during the morning commute is useful to a driver while the incident and its effect on traffic are happening. Knowing about this an hour after the event or at the end of the day, in contrast, has much less value, except perhaps as a way to review the history of traffic patterns. But these after-the-fact insights do little to help the morning commuter get to work faster. Waze is just one straightforward example of the time-value of information: the value of that particular knowledge decreases quickly with elapsed time. Being able to process streaming data via a 4G network and deliver reports to drivers in a timely manner is essential for this navigation tool to work as it is intended.

Note

Low-latency analysis of streaming data lets you respond to life as it happens.

Time-value of information is significant in many use cases where the value of particular insights diminishes very quickly after the event. The following section touches on a few more examples.

Where Streaming Matters

Let’s start with retail marketing. Consider the opportunities for improving customer experience and raising a customer’s tendency to buy something as they pass through a brick-and-mortar store. Perhaps the customer would be encouraged by a discount coupon, particularly if it were for an item or service that really appealed to them.

The idea of encouraging sales through coupons is certainly not new, but think of the evolution in style and effectiveness of how this marketing technique can be applied. In the somewhat distant past, discount coupons were mailed en masse to the public, with only very rough targeting in terms of large areas of population—very much a fire hose approach. Improvements were made when coupons were offered to a more selective mailing list based on other information about a customer’s interests or activities. But even if the coupon was well-matched to the customer’s interest, there was a large gap in time and focus between receiving it via mail or newspaper and being able to act on it by going to the store. That left plenty of time for the impact of the coupon to “wear off” as the customer became distracted by other issues, making even this targeted approach fairly hit-or-miss.

Now imagine instead that as a customer passes through a store, a display sign lights up as they pass to offer a nice selection of colors in a specific style of sweater or handbag that interests them. Perhaps a discount coupon code shows up on the customer’s phone as they reach the electronics department. Or suppose the store is an outdoor outfitter that can distinguish customers who are interested in camping plus canoeing from those who like camping plus mountain biking, based on their past purchases or web-viewing habits. Beacons might react to the smartphones of customers as they enter and provide offers via text messages to their phones that fit these different tastes. How much more effective could a discount coupon be if it’s offered not only to the right person but also at just the right moment?

These new approaches to customer-responsive, in-the-moment marketing are already being implemented by some large retail merchants, in some cases developed in-house and in others through vendors who provide innovative new services. Recognizing the presence of a particular customer may rely on a WiFi connection to a cell phone or on beacons placed strategically in a store. These techniques are not limited to retail stores. Hotels and other service organizations are also beginning to look at how these approaches can help them better recognize return customers or stay alert to the constantly changing service levels needed at check-in or in the hotel lounge.

Surprisingly, similar techniques can also be used well outside retail and hospitality, for example to track the position of garbage trucks and how they service “smart” dumpsters that announce their relative fill levels. Trucks can be deployed on customized schedules that better match actual needs, thus optimizing operations with regard to drivers’ time, gas consumption, and equipment usage.

The main goal in each of these sample situations is to gain actionable insights in a timely manner. The response to these insights may be made by humans or by automated processes. Either way, timing is the key. The aim is to exploit streaming data and new technologies to be able to respond to life in the moment. But that’s not the only advantage to be gained from using streaming data, as we discuss later in this chapter. As it turns out, a streaming architecture forms the core for a wide-ranging set of processes, some of which you may not previously have thought of in terms of streaming.

One of the most important and widespread uses of low-latency analytics on streaming data is defending data security. With a well-designed project, it is possible to monitor a large variety of things that take place in a system. These actions might include the transactions involving a credit card or the sequence of events related to logins for a banking website. With anomaly detection techniques and very low-latency technologies, cyber attacks by humans or bots may be discovered quickly so that action can be taken to thwart the intrusion or at least to mitigate loss.

As mentioned earlier, the benefits of adopting a streaming style of handling data go far beyond the opportunity to carry out real-time or near-real-time analytics, as powerful as those immediate insights may be. Some of the broader advantages require durability: you need a message-passing system that persists the event stream data in such a way that you can apply checkpoints to let you restart reading from a specific point in the flow.
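To make the idea of checkpoints concrete, here is a minimal sketch of a persisted stream that a consumer can re-read from a saved position. It is an illustration only: `DurableLog` and `consume` are hypothetical names, the log lives in memory rather than on disk, and the event strings are made up. It is not the API of any particular messaging product.

```python
from dataclasses import dataclass, field


@dataclass
class DurableLog:
    """Hypothetical in-memory stand-in for a persisted event stream."""
    _events: list = field(default_factory=list)

    def append(self, event):
        """Store an event and return its offset (its position in the log)."""
        self._events.append(event)
        return len(self._events) - 1

    def read_from(self, offset):
        """Yield (offset, event) pairs starting at the given offset."""
        for i in range(offset, len(self._events)):
            yield i, self._events[i]


def consume(log, checkpoint, limit=None):
    """Read events starting at `checkpoint`.

    Returns the events read and the new checkpoint, which is the offset
    of the next unread event.
    """
    processed = []
    for offset, event in log.read_from(checkpoint):
        if limit is not None and len(processed) >= limit:
            break
        processed.append(event)
        checkpoint = offset + 1
    return processed, checkpoint


log = DurableLog()
for event in ["login:alice", "login:bob", "card:1234", "login:carol"]:
    log.append(event)

# The consumer handles two events, saves its checkpoint, then stops.
first_batch, checkpoint = consume(log, checkpoint=0, limit=2)

# After a restart it resumes from the saved checkpoint, not from the start.
second_batch, checkpoint = consume(log, checkpoint)
```

Because the events stay in the log after they are read, the same consumer could also rewind to offset 0 and replay everything, which is what the “use it and lose it” style cannot do.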

Beyond Real Time: More Benefits of Streaming Architecture

Industrial settings provide examples from the IoT where streaming data is of value in a variety of ways. Equipment such as pumps or turbines is now loaded with sensors providing a continuous stream of event data and measurements of many parameters in real or near-real time, and many new technologies and services are being developed to collect, transport, analyze, and store this flood of IoT data.

Modern manufacturing is undergoing its own revolution, with an emphasis on greater flexibility and the ability to respond more quickly to data-driven decisions and reconfigure to make appropriate changes to products or processes. Design, engineering, and production teams will work much more closely together in the future. Some of these innovative approaches are evident in the world-leading work of the University of Sheffield Advanced Manufacturing Research Centre with Boeing (AMRC) in northern England. A fully reconfigurable futuristic Factory 2050 is scheduled to open there in 2016. It is designed to enable production pods from different companies to “dock” on the factory’s circular structure for additional customization. This facility is depicted in Figure 1-3.

Figure 1-3. Hub-and-spoke design of the fully reconfigurable Factory 2050, a revolutionary facility that is part of the AMRC. Its flexible interior layout will enable rapid changes in product design, a new style in how manufacturing is done. (Image credit Bond Bryan Architects, used with permission.)

This move toward flexibility in manufacturing as part of the IoT is also reflected in the now widespread production of so-called “smart parts.” The idea is that not only will sensor measurements on the factory floor during manufacture provide a fine-grained view of the manufacturing process, the parts being produced will also report back to the manufacturer after they are deployed to the field. This data informs the manufacturer of how well the part performs over its lifetime, which in turn can influence changes in design or manufacture. Additionally, these streams of smart-part reports are also a monetizable product themselves. Manufacturers may sell services that draw insights from this data or in some cases sell or license access to the data itself. What all this means is that streaming data is an essential part of the success of the IoT at many levels.

The value of streaming sensor data goes beyond real-time insights. Consider what happens when sensor data is examined along with long-term detailed maintenance histories for parts used in pumps or other industrial equipment. The event stream for the sensor data now acts as a “time machine” that lets you look back, with the help of machine learning models, to find anomalous patterns in measurement values prior to a failure. Combined with information from the parts’ maintenance histories, these patterns make it possible to flag potential failures long before the event, so that predictive maintenance alerts can go out before catastrophic failures occur. This approach not only saves money; in some cases, it may save lives.

Emerging Best Practices for Streaming Architectures

An old way of thinking about streaming data is “use it and lose it.” This approach assumed you would have an application for real-time analytics, such as a way to process information from the stream to create updates to a real-time dashboard, and then just discard the data. In cases where an upstream queuing system for messages was used, it was perhaps thought of only as a safety buffer to temporarily hold event data as it was ingested, serving as a short-term insurance against an interruption in the analytics application that was using the data stream. The idea was that the data in the event stream no longer had value beyond the real-time analytics or that there was no easy or affordable way to persist it, but that’s changing.

While queuing is useful as a safety buffer, with the right messaging technology it can serve as so much more. One thing that needs to change to gain the full benefit of streaming data is to discard the “use it and lose it” style of thinking.

Tip

When it comes to streaming data, don’t just use it and throw it away. Persistence of data streams has real benefits.

Being able to respond to life as it happens is a powerful advantage, and streaming systems can make that possible. For that to work efficiently, and in order to take advantage of the other benefits of a well-designed streaming system, it’s necessary to look at more than just the computational frameworks and algorithms developed for real-time analytics. There has been a lot of excitement in recent years about low-latency in-memory frameworks, and understandably so. These stream processing analytics technologies are extremely important, and there are some excellent new tools now available, as we discuss in Chapter 2. However, for these to be used effectively you also need to have access to the appropriate data—you need to collect and transport data as streams. In the past, that was not a widespread practice. Now, however, that situation is changing and changing fast.

One of the reasons modern systems can now more easily handle streaming data is the improvement in the way message-passing systems work. Highly effective messaging technologies collect streaming data from many sources (sometimes hundreds, thousands, or even millions) and deliver it to multiple consumers of the data, including but not limited to real-time applications. You need effective message-passing capabilities as a fundamental aspect of your streaming infrastructure.

Note

At the heart of the modern streaming architecture design style is a messaging capability that collects data from many streaming sources and makes it available on demand to multiple consumers. An effective message-passing technology decouples the sources and consumers, which is a key to agility.

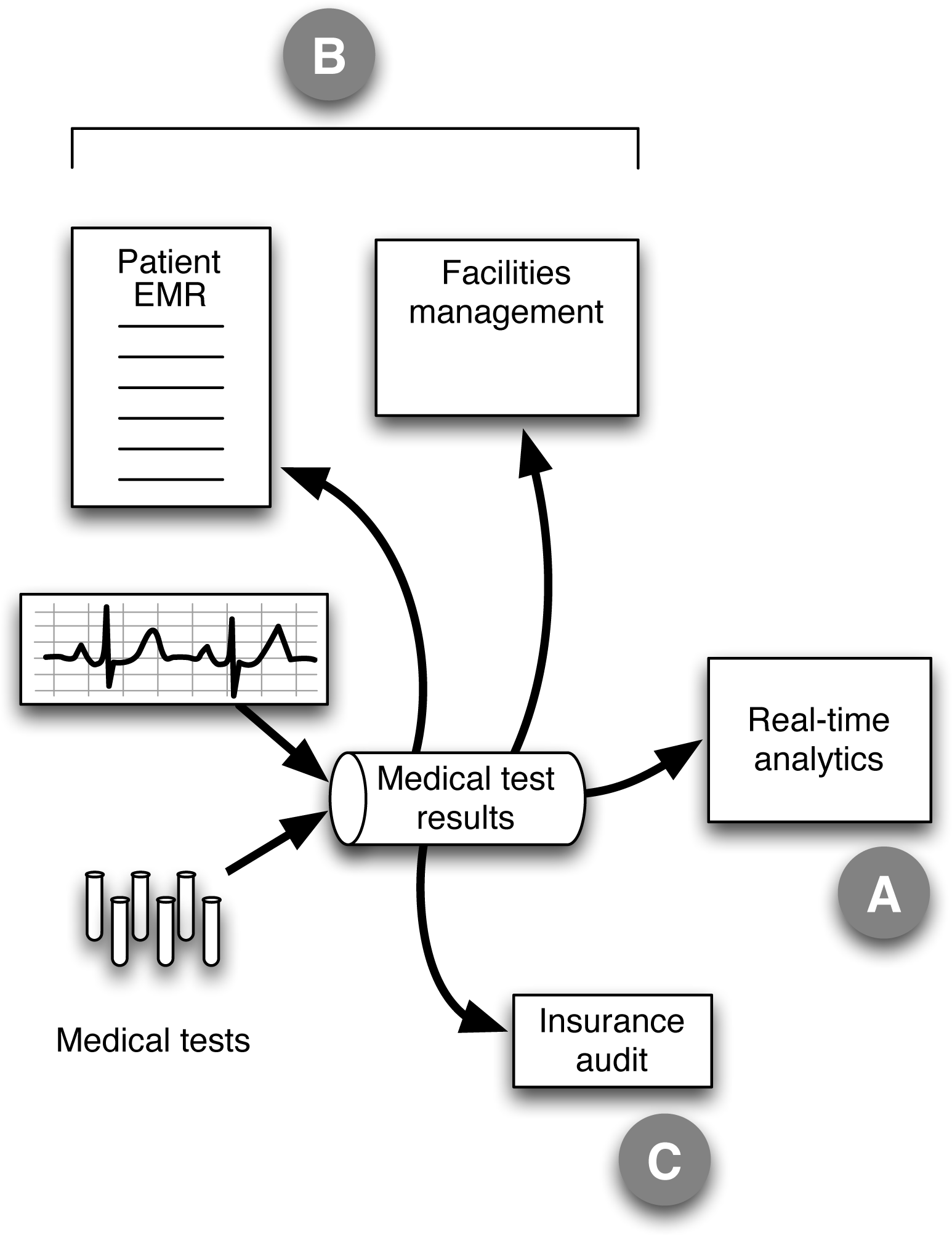

Healthcare Example with Data Streams

Healthcare provides a good example of the way multiple consumers might want to use the same data stream at different times. Figure 1-4 is a diagram showing several different ways that a stream of test results data might be used. In our healthcare example, there are multiple data sources coming from medical tests such as EKGs, blood panels, or MRI machines that feed in a stream of test results. Our stream of medical results is being handled by a modern-style messaging technology, depicted in the figure as a horizontal tube. The stream of medical test results data would not only include test outcomes, but also patient ID, test ID, and possibly equipment ID for the instrumentation used in the lab tests.

With streaming data, what may come to mind first is real-time analytics, so we have shown one consumer of the stream (labeled “A” in the figure) as a real-time application. In the older style of working with streaming data, the data might have been single-purpose: read by the real-time application and then discarded. But with the new design of streaming architecture, multiple consumers might make use of this data right away, in addition to the real-time analytics program. For example, group “B” consumers could include a database of patient electronic medical records and a database or search document that tracks the number of tests run with particular equipment (for facilities management).

Figure 1-4. Healthcare example with streaming data used for more than just real-time analytics. The diagram shows a schematic design for a system that handles data from several sources such that it can be used in different ways and at different times by multiple consumers. The message-passing technology is represented here by the tube labeled with the content of the data stream (medical test results). EMR stands for electronic medical records. Note that the consumer in group C, the insurance audit, might not have been planned for when the system was designed or deployed.

One of the interesting aspects of this example is that we may want the data stream to serve as a durable, auditable record of the test results for several purposes, such as an insurance audit (labeled as use type “C” in the figure). This audit could happen at a later time and might even be unplanned. This is not a problem if the messaging software has the needed capabilities to support a durable, replayable record.
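The healthcare example can be sketched in a few lines of code. This is a toy model under stated assumptions, not a real messaging system: `ResultStream`, its `publish` and `poll` methods, the consumer names, and the sample records are all hypothetical, and the stream is held in memory. What it shows is that each consumer keeps its own read position, so a consumer added later (the audit) still sees the full history.

```python
class ResultStream:
    """Hypothetical persisted stream of medical test results."""

    def __init__(self):
        self._records = []   # every published record is retained
        self._offsets = {}   # consumer name -> next offset to read

    def publish(self, record):
        self._records.append(record)

    def poll(self, consumer):
        """Return the records this consumer has not yet seen."""
        start = self._offsets.get(consumer, 0)
        batch = self._records[start:]
        self._offsets[consumer] = len(self._records)
        return batch


stream = ResultStream()
stream.publish({"patient_id": "p1", "test_id": "ekg-1",
                "equipment_id": "e7", "outcome": "normal"})
stream.publish({"patient_id": "p2", "test_id": "blood-1",
                "equipment_id": "e3", "outcome": "flagged"})

# Consumer A: a real-time application that reacts to flagged results.
alerts = [r["patient_id"] for r in stream.poll("realtime_alerts")
          if r["outcome"] == "flagged"]

# Consumer B: a database of electronic medical records.
emr = {}
for r in stream.poll("emr_db"):
    emr.setdefault(r["patient_id"], []).append(r["test_id"])

stream.publish({"patient_id": "p1", "test_id": "mri-1",
                "equipment_id": "e9", "outcome": "normal"})

# Consumer C: an audit that nobody planned for. It has no saved offset,
# so it reads the stream from the beginning.
audit_trail = [r["test_id"] for r in stream.poll("insurance_audit")]

# Consumer A only receives what arrived since its last poll.
new_for_realtime = stream.poll("realtime_alerts")
```

The sources never need to know which consumers exist, and adding the audit required no change to consumers A or B. That independence is the decoupling described above.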

Streaming Data as a Central Aspect of Architectural Design

In this book, we explore the value of streaming data, explain why and how you can put it to good use, and suggest emerging best practices in the design of streaming architectures. The key ideas to keep in mind about building an effective system that exploits streaming data are the following:

- Real-time analysis of streaming data can empower you to react to events and insights as they happen.
- Streaming data does not need to be discarded: data persistence pays off in a variety of ways.
- With the right technologies, it’s possible to replicate streaming data to geo-distributed data centers.
- An effective message-passing system is much more than a queue for a real-time application: it is the heart of an effective design for an overall big data architecture.

The last three points (persistence of streaming data, geo-distributed replication, and the central importance of the right messaging layer) are relatively new aspects of the preferred design for streaming architectures. Perhaps the most disruptive idea presented here is that streaming architecture should not be limited to specialized real-time applications. Instead, organizations benefit by adopting this streaming approach as an essential aspect of an efficient overall architecture.
