Chapter 3. How Change Data Capture Fits into Modern Architectures

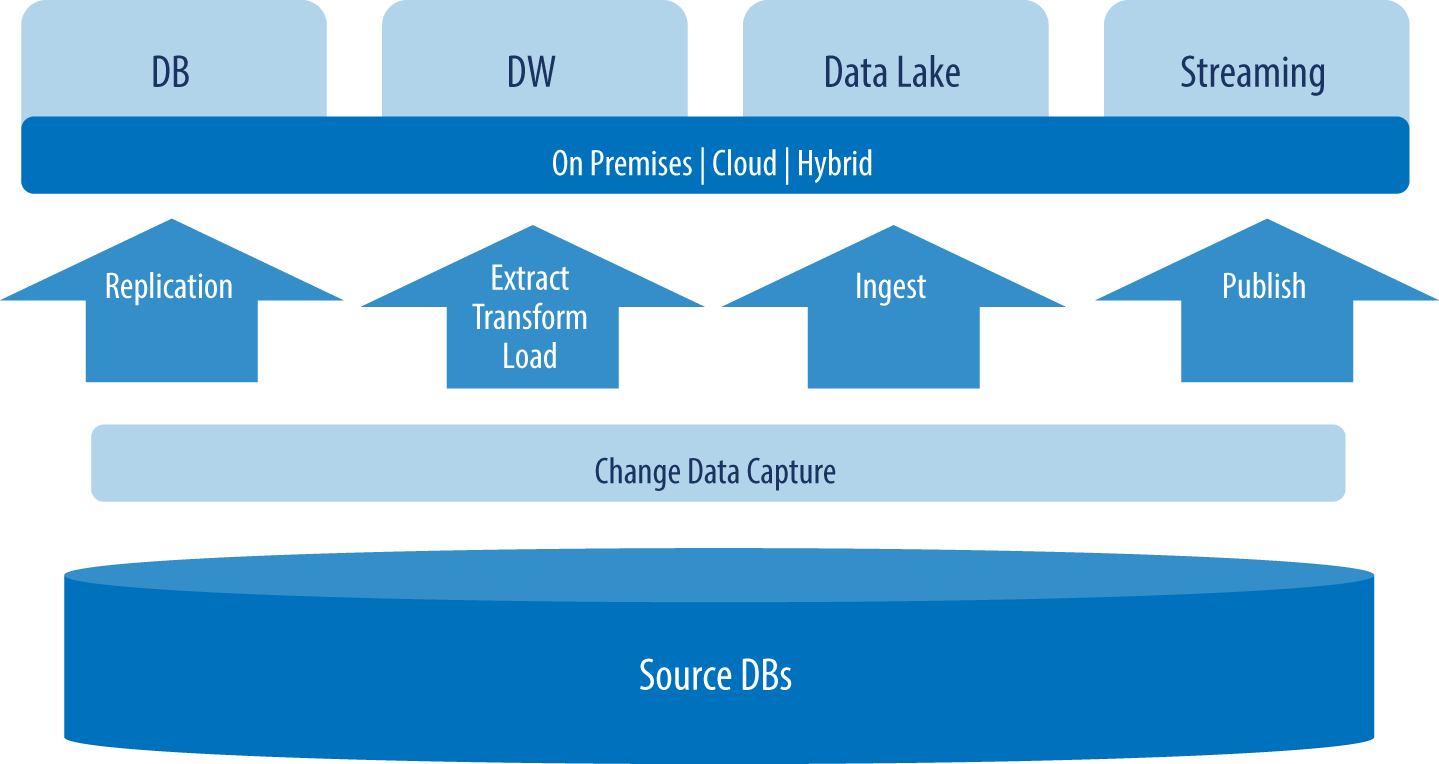

Now let’s examine the architectures in which data workflow and analysis take place, their role in the modern enterprise, and their integration points with change data capture (CDC). As shown in Figure 3-1, the methods and terminology for data transfer tend to vary by target. Transferring data and metadata into a database involves simple replication, whereas data warehouse targets require a more complicated extract, transform, and load (ETL) process. Data lakes, meanwhile, can typically ingest data in its native format. Finally, streaming targets require source data to be published and consumed as a series of messages. Any of these four target types can reside on-premises, in the cloud, or in a hybrid combination of the two.

Figure 3-1. CDC and modern data integration
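To make the differences among the four target types concrete, here is a minimal Python sketch of one captured change being delivered to each of them. Everything in it is an illustrative assumption rather than any product's API: the `ChangeEvent` shape, the function names, the `orders` table, and the in-memory dictionaries and lists that stand in for a real database, warehouse, data lake, and message broker.

```python
import json
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass
class ChangeEvent:
    """One captured source change (hypothetical shape for illustration)."""
    table: str
    op: str               # "insert", "update", or "delete"
    key: int              # primary key of the changed row
    row: Optional[dict]   # new row image; None for deletes


def replicate_to_database(replica: dict, ev: ChangeEvent) -> None:
    """Database target: apply the change as-is (simple replication)."""
    table = replica.setdefault(ev.table, {})
    if ev.op == "delete":
        table.pop(ev.key, None)
    else:
        table[ev.key] = dict(ev.row)


def etl_to_warehouse(fact_rows: list, ev: ChangeEvent) -> None:
    """Warehouse target: transform the row before loading it."""
    if ev.op == "delete":
        return  # this toy fact table only records inserts and updates
    fact_rows.append({
        "order_id": ev.key,
        "amount_cents": round(ev.row["amount"] * 100),  # toy transform step
    })


def ingest_to_lake(lake_files: dict, ev: ChangeEvent) -> None:
    """Data lake target: land the event untransformed, one JSON line each."""
    path = f"{ev.table}/changes.jsonl"
    lake_files.setdefault(path, []).append(json.dumps(asdict(ev)))


def publish_to_stream(topic: list, ev: ChangeEvent) -> None:
    """Streaming target: publish the change as a keyed message."""
    topic.append({"key": ev.key, "value": asdict(ev)})


# Stand-ins for the four targets.
replica, fact_rows, lake_files, topic = {}, [], {}, []

events = [
    ChangeEvent("orders", "insert", 1, {"amount": 19.99}),
    ChangeEvent("orders", "update", 1, {"amount": 25.00}),
    ChangeEvent("orders", "delete", 1, None),
]

for ev in events:
    replicate_to_database(replica, ev)
    etl_to_warehouse(fact_rows, ev)
    ingest_to_lake(lake_files, ev)
    publish_to_stream(topic, ev)
```

After the three events are processed, the replica mirrors the source's current state (the row is gone), while the lake and the stream each retain all three changes and the warehouse holds the two transformed rows. The same change stream therefore produces a different result in each target, which is why the transfer method varies by target.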

We will explore the role of CDC in five modern architectural scenarios: replication to databases, ETL to data warehouses, ingestion to data lakes, publication to streaming systems, and transfer across hybrid cloud infrastructures. In practice, most enterprises have a patchwork of the architectures described here, as they apply different engines to different workloads. A trial-and-error learning process, changing business requirements, and the rise of new platforms all mean that data managers will need to keep copying data from one place ...
