Chapter 4. Case Studies

Now let’s explore some case studies. Each of these illustrates the role of change data capture (CDC) in enabling scalable and efficient analytics architectures that do not affect production application performance. By moving and processing incremental data and metadata updates in real time, these organizations have reduced or eliminated the need for resource-draining and disruptive batch (aka full) loads. They are siphoning data to multiple platforms for specialized analysis on each, consuming CPU and other resources in a balanced and sustainable way.

Case Study 1: Streaming to a Cloud-Based Lambda Architecture

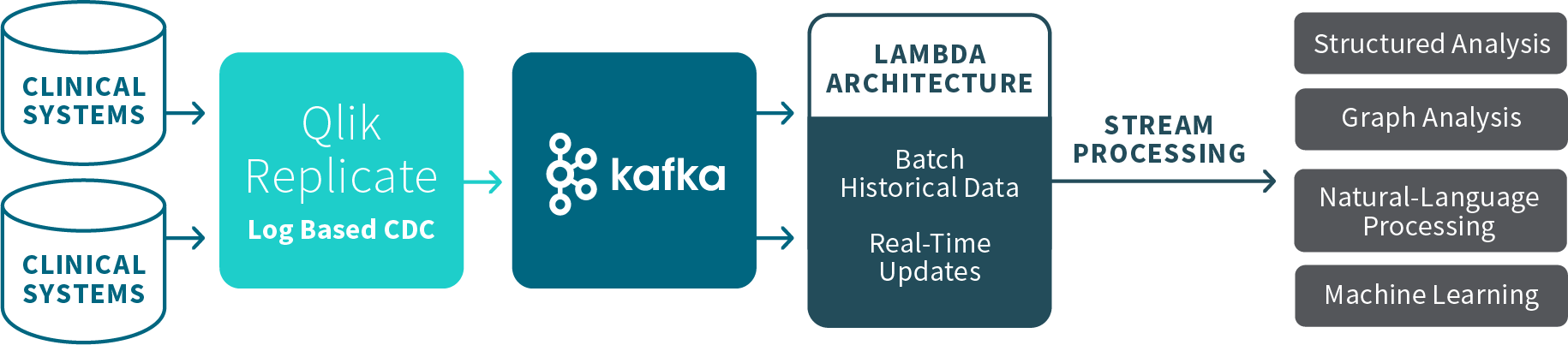

Qlik is working with a Fortune 500 healthcare solution provider to hospitals, pharmacies, clinical laboratories, and doctors that is investing in cloud analytics to identify opportunities for improving quality of care. The analytics team for this company, which we’ll call “GetWell,” is using CDC software to accelerate and streamline clinical data consolidation from on-premises sources such as SQL Server and Oracle to a Kafka message queue that in turn feeds a Lambda architecture on Amazon Web Services (AWS) Simple Storage Service (S3). This architecture is illustrated in Figure 4-1. Log-based CDC has enabled them to integrate this clinical data at scale from many sources with minimal administrative burden and no impact on production operations.

GetWell data scientists conduct therapy research on this Lambda architecture, using both historical batch processing and real-time analytics. In addition to traditional SQL-structured analysis, they run graph analysis to better assess the relationships between clinical drug treatments, drug usage, and outcomes. They also perform natural-language processing (NLP) to identify key observations within physician’s notes and are testing other new AI approaches such as machine learning to improve predictions of clinical treatment outcomes.

Figure 4-1. Data architecture for Kafka streaming to cloud-based Lambda architecture

Case Study 2: Streaming to the Data Lake

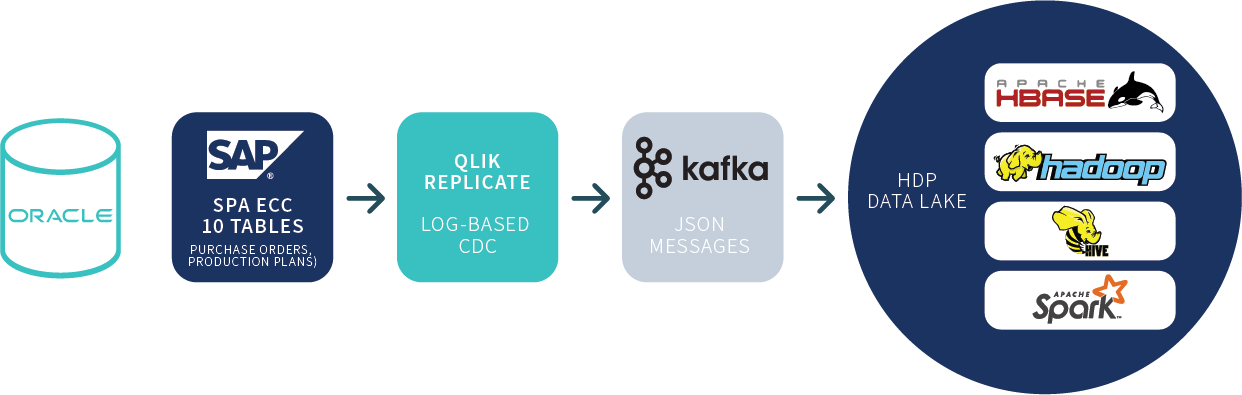

Decision makers at an international food industry leader, which we’ll call “Suppertime,” needed a current view and continuous integration of production capacity data, customer orders, and purchase orders to efficiently process, distribute, and sell tens of millions of chickens each week. But Suppertime struggled to bring together these large datasets, which were distributed across several acquisition-related silos within SAP enterprise resource planning (ERP) applications. Using nightly batch replication, they were unable to match orders and production line-item data fast enough. This slowed plant operational scheduling and prevented sales teams from filing accurate daily reports.

To streamline the process, Suppertime converted to a new Hadoop data lake based on the Hortonworks data platform and Qlik Replicate CDC. It now uses Qlik Replicate to efficiently copy all of the SAP record changes every five seconds, decoding that data from complex source SAP pool and cluster tables. Qlik Replicate injects this data stream, along with any changes to the source metadata and data definition language (DDL) changes, to a Kafka message queue that feeds HDFS and HBase consumers that subscribe to the relevant message topics (one topic per source table). Figure 4-2 illustrates this process.

Figure 4-2. Data architecture for streaming to data lake

After the data arrives in HDFS and HBase, Spark in-memory processing helps match orders to production on a real-time basis and maintain referential integrity for purchase order tables. As a result, Suppertime has accelerated sales and product delivery with accurate real-time operational reporting. It has replaced batch loads with CDC to operate more efficiently and more profitably.

Case Study 3: Streaming, Data Lake, and Cloud Architecture

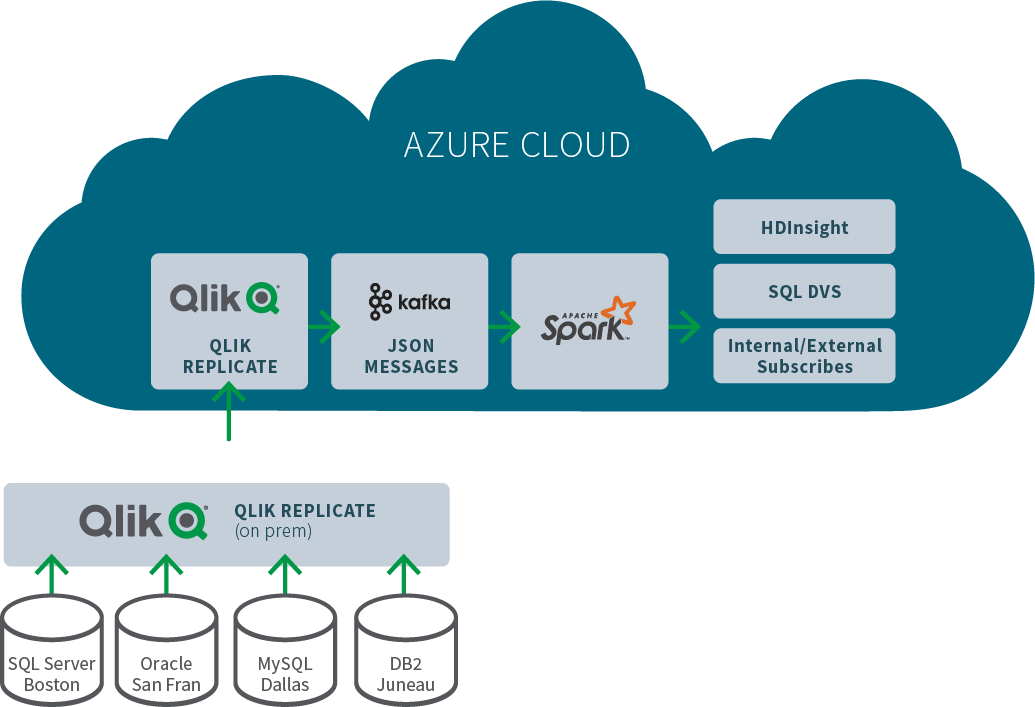

Another example is a US private equity and venture capital firm that built a data lake to consolidate and analyze operational metrics from its portfolio companies. This firm, which we’ll call “StartupBackers,” opted to host its data lake in the Microsoft Azure cloud rather than taking on the administrative burden of an on-premises infrastructure. Qlik Replicate CDC is remotely capturing updates and DDL changes from source databases (Oracle, SQL Server, MySQL, and DB2) at four locations in the United States. Qlik Replicate then sends that data through an encrypted File Channel connection over a wide area network (WAN) to a virtual machine–based instance of Qlik Replicate in the Azure cloud.

As shown in Figure 4-3, this Replicate instance publishes the data updates to a Kafka message broker that relays those messages in the JSON format to Spark. The Spark platform prepares the data in microbatches to be consumed by the HDInsight data lake, SQL data warehouse, and various other internal and external subscribers. These targets subscribe to topics that are categorized by source tables. With this CDC-based architecture, StartupBackers is now efficiently supporting real-time analysis without affecting production operations.

Figure 4-3. Data architecture for cloud-based streaming and data lake architecture

Case Study 4: Supporting Microservices on the AWS Cloud Architecture

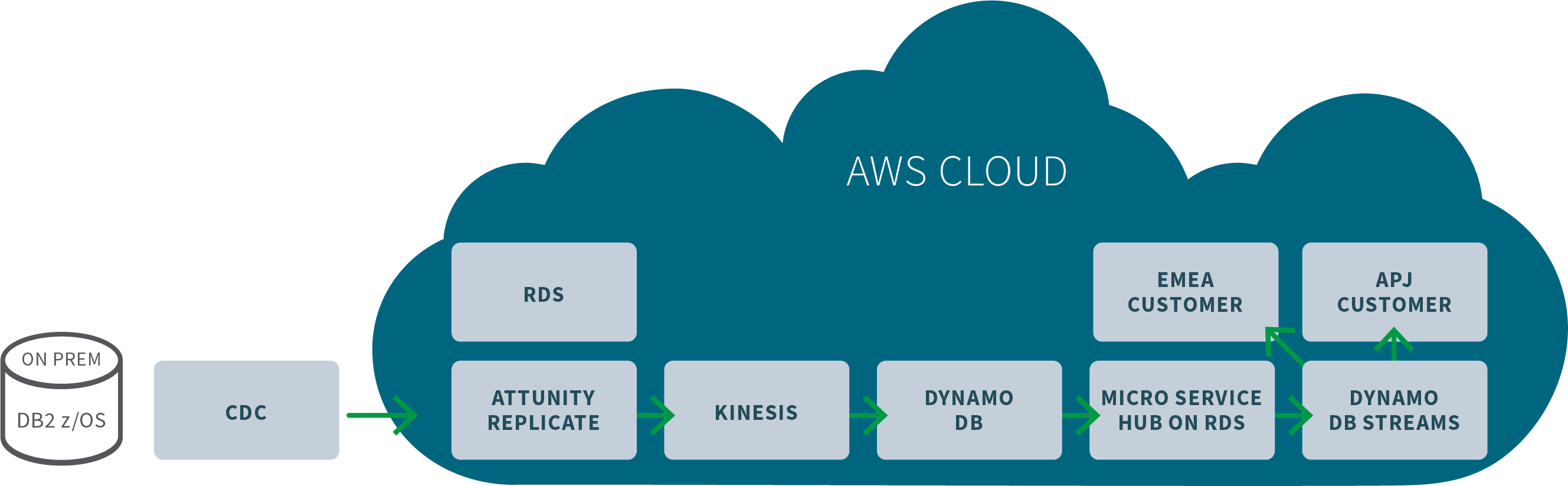

The CIO of a very large investment management firm, which we’ll call “Nest Egg,” has initiated an ambitious rollout of cloud-based microservices as a way to modernize applications that rely on data in core mainframe transactional systems. Its on-premises DB2 z/OS production system continuously processes and reconciles transactional updates for more than a trillion dollars of assets under management. Nest Egg has deployed Qlik Replicate to capture these updates from the DB2 transaction log and send them via encrypted multipathing to the AWS cloud. There, certain transaction records are copied straight to an RDS database, using the same schemas as the source, for analytics by a single line of business.

In addition, Qlik Replicate copies transaction updates to an Amazon Kinesis message stream to which Nest Egg applies custom transformation logic. As shown in Figure 4-4, the transformed data then arrives in the AWS NoSQL platform DynamoDB, which feeds a microservices hub on RDS. Multiple regional centers, including offices based in London and Sydney, receive continuous updates from this hub via DynamoDB Streams.

Figure 4-4. Data architecture for supporting cloud-based microservices

As a result, Nest Egg’s microservices architecture delivers a wide range of modular, independently provisioned services. Clients across the globe have real-time control of their accounts and trading positions. And it all starts with efficient, scalable, and real-time data synchronization via Qlik Replicate CDC.

Case Study 5: Real-Time Operational Data Store/Data Warehouse

A military federal credit union, which we’ll call “USave,” involves a relatively straightforward architecture. USave needed to monitor deposits, loans, and other transactions on a real-time basis to measure the state of the business and identify potentially fraudulent activity.

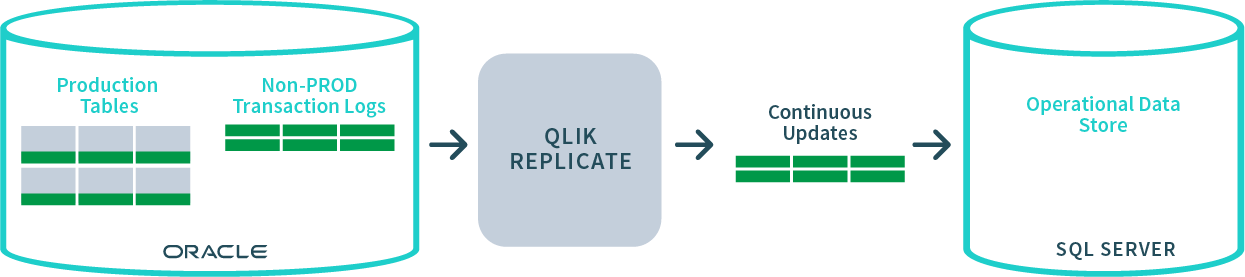

To do this, it had to improve the efficiency of its data replication process. This required continuous copies of transactional data from the company’s production Oracle database to an operational data store (ODS) based on SQL Server. Although the target is an ODS rather than a full-fledged data warehouse, this case study serves our purpose of illustrating the advantages of CDC for high-scale structured analysis and reporting.

As shown in Figure 4-5, USave deployed Qlik Replicate on an intermediate server between Oracle and SQL Server. The company automatically created tables on the SQL Server target, capturing the essential elements of the source schema while still using SQL-appropriate data types and table names. USave was able to rapidly execute an initial load of 30 tables while simultaneously applying incremental source changes. One table of 2.3 million rows took one minute. Updates are now copied continuously to the ODS.

Figure 4-5. Data architecture for real-time ODS

Note

An operational data store is a database that aggregates copies of production data, often on a short-term basis, to support operational reporting. The ODS often serves as an interim staging area for a long-term repository such as a data warehouse, which transforms data into consistent structures for more sophisticated querying and analytics.

Get Streaming Change Data Capture now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.