Chapter 16. Pipeline Orchestration Service

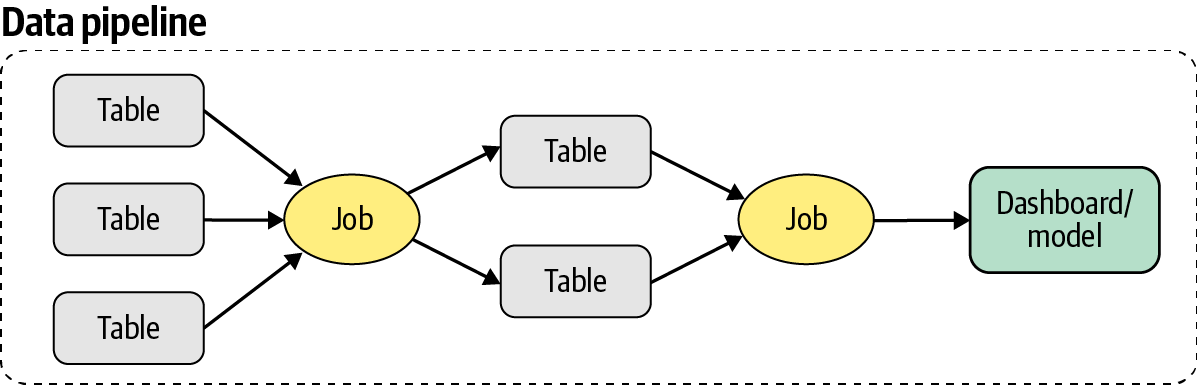

So far in the operationalize phase, we have optimized individual queries and programs; now it is time to schedule and run them in production. A runtime instance of a query or program is referred to as a job. Job scheduling must account for the dependencies between jobs. For instance, a job that reads data from a specific table cannot run until the upstream job that populates that table has completed. More generally, the pipeline of jobs needs to be orchestrated in a specific sequence, from ingestion to preparation to processing (as illustrated in Figure 16-1).

Figure 16-1. A logical representation of the pipeline as a sequence of dependent jobs executed to generate insights in the form of ML models or dashboards.
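The dependency rule described above (a job cannot run until the jobs it reads from have completed) can be sketched as a topological ordering over a dependency graph. The following is a minimal illustration, not how any particular orchestrator works: the job names and the `execution_order` helper are hypothetical, and it relies on `graphlib.TopologicalSorter` from the Python standard library (Python 3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical jobs for illustration: each key maps to the set of jobs
# that must complete before it can start.
dependencies = {
    "ingest_orders": set(),
    "prepare_orders": {"ingest_orders"},
    "train_model": {"prepare_orders"},
    "build_dashboard": {"prepare_orders"},
}


def execution_order(deps):
    """Return one valid run order in which every job follows its dependencies.

    Raises graphlib.CycleError if the dependencies contain a cycle.
    """
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

In this sketch, `train_model` and `build_dashboard` depend only on `prepare_orders`, so either may come first in the result. A real orchestration service could run such independent jobs in parallel, and would also handle retries, scheduling, and monitoring, which are not modeled here.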

Orchestrating job pipelines for data processing and ML has several pain points. First, defining and managing dependencies between jobs is ad hoc and error prone. Data users need to specify these dependencies and version-control them throughout the pipeline's life cycle. Second, pipelines invoke services across ingestion, preparation, transformation, training, and deployment. Monitoring and debugging pipelines for correctness, robustness, and timeliness across these services is complex. Third, orchestration of pipelines is multitenant, supporting multiple teams and business use cases. Orchestration ...
