What containers can do for you

Docker, Rocket, and big industry changes are making it a great time to seriously consider using containers.

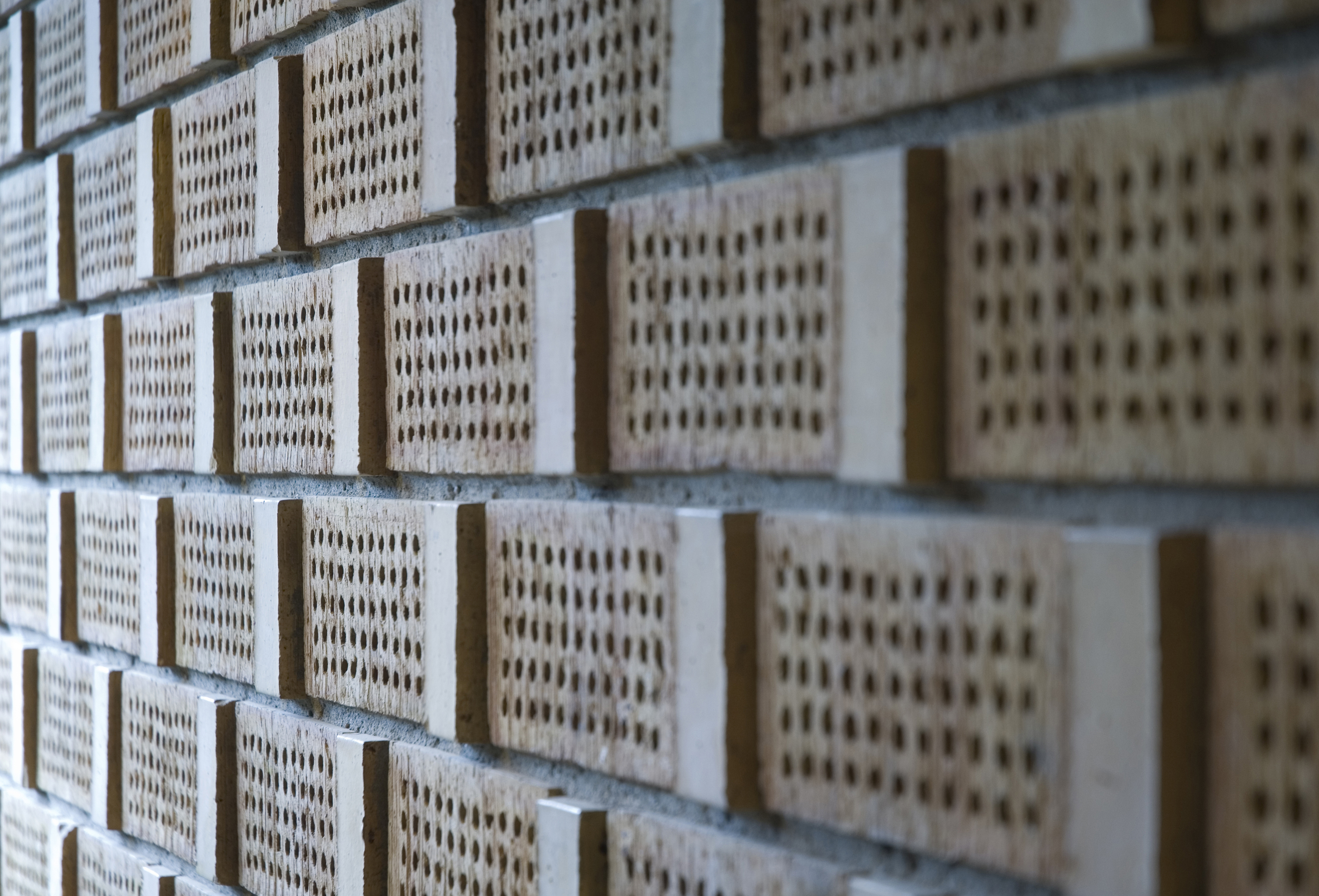

hans christian hansen, architect: tagensbo kirke / church, copenhagen 1966-1970. entrance. (source: SEIER+SEIER)

If you read any IT news these days, it’s hard to miss a headline about “the container revolution.” Docker’s year-and-a-half-old engine had a monopoly on the buzz until CoreOS launched its own project, Rocket, in December.

The technology behind containers can seem esoteric, but the advantages of bringing containers to your organization are more compelling than ever. And containers’ inherent portability opens up exciting new opportunities for how organizations host their applications.

Containerization is having its moment and there’s never been a better time to check it out for yourself.

What’s a container?

In case you missed it, containerization is all about how we run and deploy software applications.

The industry standard today is to use Virtual Machines (VMs) to run software applications. VMs run applications inside a guest Operating System, which runs on virtual hardware powered by the server’s host OS.

VMs are great at providing full process isolation for applications: there are very few ways a problem in the host operating system can affect the software running in the guest operating system, and vice-versa. But this isolation comes at great cost — the computational overhead spent virtualizing hardware for a guest OS to use is substantial.

Containers take a different approach: by leveraging the low-level mechanics of the host operating system, containers provide most of the isolation of virtual machines for a fraction of the computational overhead.

This key architectural difference gives containers a few other appealing features compared to traditional VMs:

- Portability — Software packaged in containers can be moved and deployed more easily than a full Virtual Machine image

- Startup speed — VMs need to boot the guest OS before they can do anything else. Containers start running your software almost instantly

- Flexible composition — Containers are best used when each process in your stack runs in its own container, making it easier to compose flexible and robust platforms instead of monolithic servers

Containers are still Linux-only for now, but Microsoft is hard at work on its own implementation, called Windows Server Containers.

Getting the most bang for your buck

The portability containers offer is especially appealing for today’s IT organizations as they navigate the cloud computing market.

When it comes to hosting software applications, organizations have traditionally had only two real choices: invest heavily in in-house data centers, or run their business on Amazon Web Services.

Yet in 2014, Google and Microsoft both made big bets in the cloud computing game. Previously unseen price drops across the industry in March were followed by a few smaller reductions later in the year.

A more competitive cloud computing industry is good for all businesses that outsource their IT infrastructure, but few today are flexible enough to react to these price drops. Configuration management tools can help abstract away some provider-specific details, but if you asked most sysadmins to move their stack from AWS to Google, their heart would probably skip a beat.

For containerized applications, however, this task becomes much simpler. Containers’ “build once, run anywhere” philosophy means they will run the same whether on a developer’s laptop, an in-house data center, or an Amazon/Google/Microsoft server. New container-specific hosting services, like Tutum, aim to make switching cloud providers trivial.

Organizations that adopt containers today will have the flexibility to navigate this increasingly competitive cloud services industry.

Finding the best cloud for you

Migrating to the cheapest cloud of the month has obvious appeal, but containers’ portability opens up other interesting options.

When evaluating cloud hosting providers, it’s easy to compare factors like price, RAM, or hard drive storage. But comparing factors like network latency, storage I/O speed, and actual computing power has always been more difficult. How does an AWS EC2 Compute Unit compare to a Microsoft Azure core or a Google Compute Engine core?

Even if you could establish a metric to compare all three, it probably wouldn’t be consistently accurate. Various other factors can affect your actual performance, such as busy “neighbor” customers sharing the same host as your application, or outdated underlying hardware.

Containers offer a simpler solution to this problem: run and profile your application on each and pick the best one. A fully containerized stack can be deployed to any provider with about the same level of effort. If your application is especially sensitive to one of these performance factors, containers make shopping around substantially more practical.
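As a rough illustration of the "profile on each and pick the best one" idea, here is a minimal Python sketch. It assumes you have already collected response-time samples for the same containerized application on each provider; the function name `pick_best_provider`, the provider labels, and the numbers are all hypothetical placeholders, not real measurements. It simply compares medians, which is only one of many reasonable ways to rank the results.

```python
from statistics import median


def pick_best_provider(samples_by_provider):
    """Return (best_name, medians) where best_name has the lowest median latency.

    samples_by_provider maps a provider label to a list of latency
    samples in milliseconds, gathered by whatever profiling you run.
    """
    if not samples_by_provider:
        raise ValueError("no providers to compare")
    # The median is less sensitive than the mean to a single slow request.
    medians = {
        name: median(samples)
        for name, samples in samples_by_provider.items()
    }
    best_name = min(medians, key=medians.get)
    return best_name, medians


# Placeholder values for illustration only, not benchmark results.
samples = {
    "provider_a": [120.0, 130.0, 125.0],
    "provider_b": [110.0, 300.0, 115.0],
    "provider_c": [140.0, 135.0, 138.0],
}
best, medians = pick_best_provider(samples)
print(best, medians[best])
```

If your application is sensitive to something other than latency, such as storage I/O, the same comparison works with whichever metric you sample.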

Looking into the future, our applications could become even more adaptive. What if your monitoring tools saw a performance dip in your AWS stack and responded by shutting it down and temporarily moving to Google or Microsoft’s cloud instead?
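To make that scenario a little more concrete, the decision a monitoring tool would have to make could look something like the sketch below. This is a hypothetical rule rather than how any existing tool works: the function `should_fail_over`, the threshold, and the sample readings are assumptions chosen for illustration. It flags a failover only when several consecutive readings are slow, so a single blip does not trigger a move.

```python
def should_fail_over(latencies_ms, threshold_ms, consecutive):
    """Return True if the last `consecutive` readings all exceed threshold_ms."""
    if consecutive <= 0:
        raise ValueError("consecutive must be a positive integer")
    recent = latencies_ms[-consecutive:]
    # Require a full window of readings before acting on them.
    if len(recent) < consecutive:
        return False
    return all(reading > threshold_ms for reading in recent)


# Placeholder readings for illustration only.
sustained_dip = should_fail_over([100, 250, 260, 270], threshold_ms=200, consecutive=3)
single_blip = should_fail_over([100, 250, 180, 270], threshold_ms=200, consecutive=3)
print(sustained_dip, single_blip)
```

Actually moving the stack is the hard part, and it is where a fully containerized deployment would do the heavy lifting.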

Of course, optimizing for performance is nice, but the most compelling reason to become cloud provider-agnostic is uptime.

No provider is immune to service disruptions, but when AWS goes down in a region today, most organizations have no choice but to bite their nails until it’s over. Can you imagine standing up a temporary mirror stack in Microsoft Azure in minutes instead?

It sounds like a DevOps dream.

Less provisioning, more orchestrating

Now is an especially good time to jump into containers because of new container-friendly integrations across the industry.

Popular configuration management tools, like Puppet, Chef, and Ansible, released Docker support this past year. Most CI services now have Docker integration, and some new container-specific services like Drone and Shippable are also noteworthy.

Of greatest interest to operations teams, however, are new container-specific hosting services from the big cloud computing players.

You can deploy containers straight onto Amazon’s infrastructure with the EC2 Container Service (preview) and on Google’s using Google Container Engine (alpha). Microsoft also added some Docker-specific features to Azure.

These services reduce the burden on sysadmins by deploying your containers directly, eliminating the need to manage a host instance which runs the container engine.

These services are still in development, but when they become widely available they will help operations teams focus on making the most of their containers instead of provisioning VMs to run them.

The wave is coming

Now is a great time to jump in and try containers yourself.

Docker hit 1.0 and became production-ready last June, and a series of minor releases last fall smoothed out some of the rough edges that had made Docker difficult for newcomers. According to some recent announcements, making Docker easier to use is a big priority for Docker, Inc. in 2015.

Rocket is still in its infancy, but its guiding philosophy and early popularity are encouraging. Its emphasis on security, an area consistently identified as a weakness for Docker, is especially promising. Security-conscious organizations will want to keep an eye on it this year.

One way or another, however, it’s clear the containerization wave is coming. And it’s going to be a wild ride.

Editor’s note: If you’re interested in learning how to deploy applications as portable, self-sufficient containers that can run on almost any server, check out Andrew’s video “Introduction to Docker.”