How do I package a Spark Scala script with SBT for use on an Amazon Elastic MapReduce (EMR) cluster?

Learn how to create, structure, and compile your Scala script into a JAR file with SBT so it can run on a distributed Spark cluster.

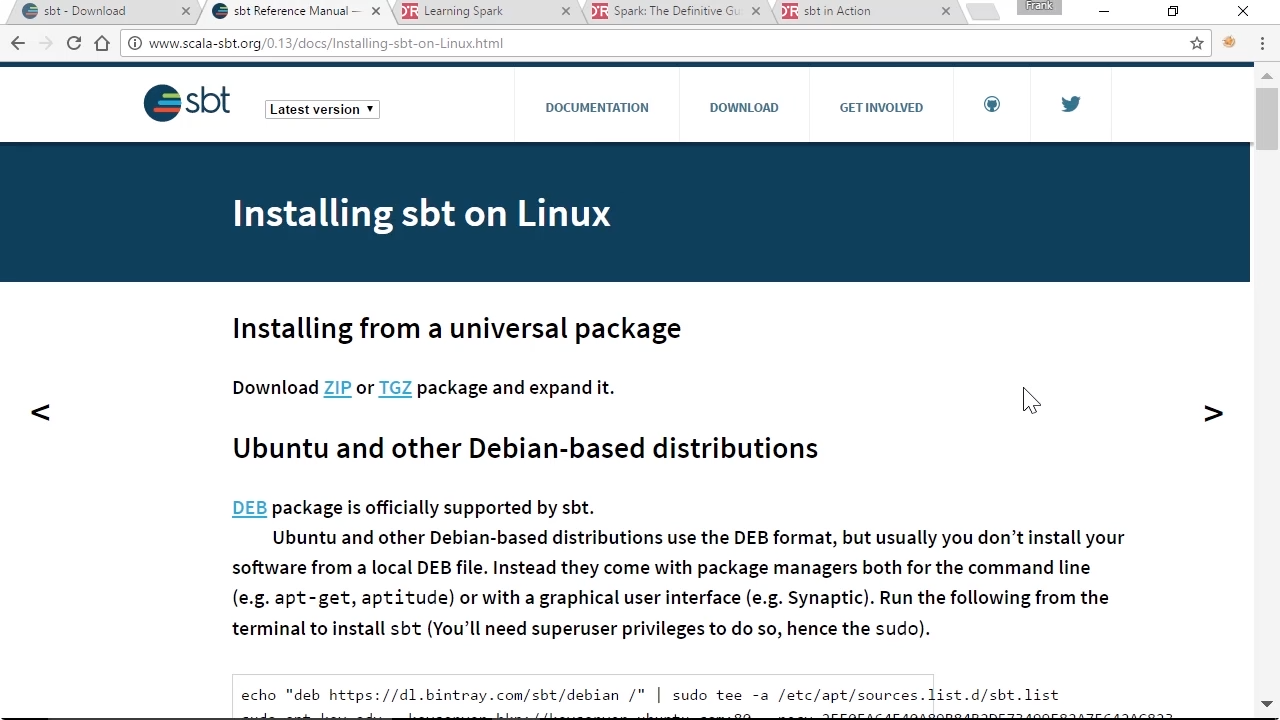

screenshot from "How do I package a Spark Scala script with SBT for use on an Amazon Elastic MapReduce (EMR) cluster?" (source: O'Reilly)


SBT is a build tool that compiles your Scala scripts into Java bytecode and packages them as JAR files that can be distributed and executed efficiently across your Spark cluster. Amazon Web Services pro Frank Kane shows you how to write SBT build files in the correct format so you can deftly compile and package your script, then upload it to Amazon S3 in preparation for running it on an Elastic MapReduce (EMR) cluster.
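As a rough illustration of what an SBT build file for a Spark job can look like, here is a minimal `build.sbt` sketch. It is not the file used in the video. The project name, the Scala version, and the Spark version are assumptions chosen for illustration, and you should match `scalaVersion` and `sparkVersion` to the Spark release installed on your EMR cluster.

```scala
// build.sbt: a minimal sketch, assuming Spark 3.x built for Scala 2.12.
// "spark-emr-example" and the version numbers are hypothetical placeholders;
// align them with the Spark/Scala versions of your EMR release.
name := "spark-emr-example"

version := "1.0"

scalaVersion := "2.12.18"

val sparkVersion = "3.3.0"

libraryDependencies ++= Seq(
  // "provided": the EMR cluster supplies Spark at runtime,
  // so these libraries are compiled against but not bundled into the JAR
  "org.apache.spark" %% "spark-core" % sparkVersion % "provided",
  "org.apache.spark" %% "spark-sql"  % sparkVersion % "provided"
)
```

With your script under `src/main/scala/`, running `sbt package` from the project root compiles it and writes a JAR such as `target/scala-2.12/spark-emr-example_2.12-1.0.jar`, which is the file you would then upload to S3. Marking the Spark dependencies as `provided` keeps the JAR small and avoids shipping a second copy of Spark that could conflict with the one already on the cluster.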