How Flash changes the design of database storage engines

High-performing memory throws many traditional decisions overboard

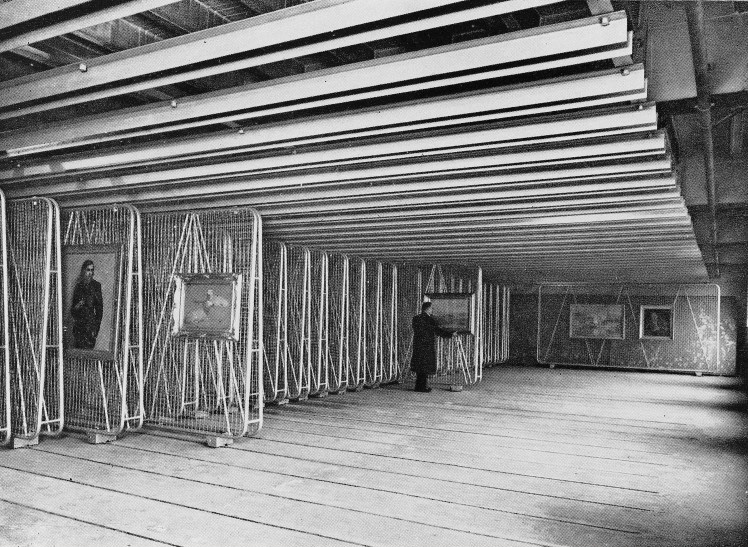

Storage racks at the Walker Art Gallery (source: Wellcome Library)


Over the past decade, Flash memory has radically changed computing at both the consumer level, where USB sticks have effectively replaced CDs for transporting files, and the server level, where Flash-based solid-state drives (SSDs) offer a price/performance ratio radically different from both RAM and disk drives. But databases have started to catch up only in the past few years. Most still depend on internal data structures and storage management fine-tuned for spinning disks.

Citing price and performance, one author advised a wide range of database vendors to move to Flash. Certainly, a database administrator can speed up old databases just by swapping out disk drives and inserting Flash, but doing so captures just a sliver of the potential performance improvement promised by Flash. For this article, I asked several database experts — including representatives of Aerospike, Cassandra, FoundationDB, RethinkDB, and Tokutek — how Flash changes the design of storage engines for databases. The various ways these companies have responded to its promise in their database designs are instructive to readers designing applications and looking for the best storage solutions.

It’s worth noting that most of the products discussed here would fit roughly into the category known as NoSQL, but that Tokutek’s storage engine runs on MySQL, MariaDB, and Percona Server, as well as MongoDB; the RethinkDB engine was originally developed for MySQL; and many of the tools I cover support features, such as transactions, that are commonly associated with relational databases. But this article is not a description of any database product, much less a comparison; it is an exploration of Flash and its impact on databases.

Key characteristics of Flash that influence databases

We all know a few special traits of Flash — that it is fast, that its blocks wear out after a certain number of writes — but its details can have a profound effect on the performance of databases and applications. Furthermore, discussions of speed should be divided into throughput and latency, as with networks. The characteristics I talked to the database experts about included:

- Random reads: Like main memory, but unlike traditional disks, Flash serves up data equally fast no matter how much physical distance lies between the reads. However, one has to read a whole block at a time, so applications may still benefit from locality of reference, because a read that is close to an earlier read may be satisfied by main memory or the cache.

- Throughput: Raw throughput of hundreds of thousands of reads or writes per second has been recorded, two orders of magnitude or more better than disks, and many tools take advantage of it. According to Aerospike CTO Brian Bulkowski, throughput continues to improve as density improves, because higher-density chips contain more blocks per chip.

- Latency: According to David Rosenthal, CEO of FoundationDB, read latency is usually around 50 to 100 microseconds. As Slava Akhmechet, CEO of RethinkDB, points out, Flash is at least a hundred times faster than disks, which tend more toward 5 to 50 milliseconds per read. Like CPU clock speeds, however, Flash latency seems to have reached its limit and is no longer improving.

- Parallelism: Flash drives offer multiple controllers (originally nine, and now usually more), or single higher-performance controllers. This rewards database designs that can use multiple threads and cores and split workloads into many independent reads or writes.
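To make the locality point under random reads concrete, here is a minimal Python sketch of an LRU page cache in front of a simulated device. The 4 KiB read unit, the `PageCache` class, and its methods are hypothetical illustrations, not any product's code; the point is only that many small reads packed into one block cost a single device read, while the same number of scattered reads each cost one.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed device read unit; real Flash page/block sizes vary


class PageCache:
    """Hypothetical LRU cache of whole pages in front of a simulated Flash device."""

    def __init__(self, capacity_pages):
        self.capacity = capacity_pages
        self.pages = OrderedDict()  # page number -> page bytes, least recently used first
        self.device_reads = 0

    def _read_page_from_device(self, page_no):
        # Stand-in for a real device read; every call counts as one I/O.
        self.device_reads += 1
        return bytes(PAGE_SIZE)

    def read(self, offset, length):
        first = offset // PAGE_SIZE
        last = (offset + length - 1) // PAGE_SIZE
        chunks = []
        for page_no in range(first, last + 1):
            if page_no in self.pages:
                self.pages.move_to_end(page_no)  # cache hit: mark as recently used
            else:
                self.pages[page_no] = self._read_page_from_device(page_no)
                if len(self.pages) > self.capacity:
                    self.pages.popitem(last=False)  # evict the least recently used page
            chunks.append(self.pages[page_no])
        data = b"".join(chunks)
        start = offset - first * PAGE_SIZE
        return data[start:start + length]


# 64 reads of 64 bytes that all fall inside page 0: one device read.
nearby = PageCache(capacity_pages=8)
for i in range(64):
    nearby.read(i * 64, 64)

# 64 reads that each land on a different page: 64 device reads.
scattered = PageCache(capacity_pages=8)
for i in range(64):
    scattered.read(i * PAGE_SIZE * 10, 64)

print(nearby.device_reads, scattered.device_reads)
```

Flash makes each of those 64 scattered reads cheap compared with a disk seek, but the cache still turns nearby reads into memory accesses, which is why locality keeps some value.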

At the risk of oversimplifying, you could think of Flash as combining the convenient random access of main memory with the size and durability of disk, with access speeds between the two. Ideally, for performance, an application would run entirely in memory, and several database solutions were created as in-memory solutions (VoltDB, Oracle TimesTen, and the original version of MySQL Cluster, known as NDB Cluster, come to mind). But as data sets grow, memory becomes too expensive, and Flash emerges as an attractive alternative.

The order of the day

Several of the products I researched replace the file system with their own storage algorithms, as many relational databases have also done. Locality, as we’ve seen, can affect the speed of reads, but striving for locality of data isn’t crucial. When Flash makes throughput so high, the overhead of writing data in the “right” spot may outweigh the trivial overhead of doing multiple reads.

Aerospike is the first database product designed from the beginning with Flash in mind. Bulkowski says this choice was made when the founders noted that data too large or too expensive to fit in RAM is well suited for Flash.

Although it can also run simply with RAM backed by rotational disks, Aerospike's Flash design stores indexes in RAM and the rest of the data in Flash. This way, it can quickly look up the index in RAM and then retrieve data from multiple Flash drives in parallel. Because the indexes are updated in RAM, writes to Flash are greatly reduced. Monica Pal, chief marketing officer for Aerospike, comments that the new category of real-time, big-data-driven applications that strive to personalize the user experience has a random read/write data access pattern. Therefore, many customers use Aerospike to replace the caching tier at a site.
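The following Python sketch shows the general shape of the design just described: an index held in RAM that maps each key to a location in one of several data files standing in for Flash drives. It is an assumption-laden illustration, not Aerospike's implementation; `RamIndexStore` and its methods are hypothetical, and the sketch issues reads one at a time rather than in parallel.

```python
import os
import tempfile


class RamIndexStore:
    """Hypothetical sketch: index in RAM, record bytes appended to files
    that stand in for separate Flash devices."""

    def __init__(self, directory, num_devices=2):
        self.files = [
            open(os.path.join(directory, f"device{i}.dat"), "a+b")
            for i in range(num_devices)
        ]
        self.index = {}  # key -> (device number, offset, length), held only in RAM

    def put(self, key, value: bytes):
        dev = hash(key) % len(self.files)  # spread keys across the simulated devices
        f = self.files[dev]
        f.seek(0, os.SEEK_END)
        offset = f.tell()
        f.write(value)  # append only; the stale copy is left for later cleanup
        f.flush()
        self.index[key] = (dev, offset, len(value))  # index update touches only RAM

    def get(self, key):
        dev, offset, length = self.index[key]  # RAM lookup, no device I/O
        f = self.files[dev]
        f.seek(offset)
        return f.read(length)  # exactly one read from the simulated device

    def close(self):
        for f in self.files:
            f.close()


with tempfile.TemporaryDirectory() as d:
    store = RamIndexStore(d)
    store.put("user:1", b"alice")
    store.put("user:2", b"bob")
    store.put("user:1", b"alice-v2")
    print(store.get("user:1"))
    store.close()
```

Because an update only appends bytes and changes an in-RAM dictionary entry, the only device write per update is the record itself; the old copy stays in the file until some cleanup process (not shown) reclaims the space.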

Of the databases covered in this article, Cassandra is perhaps the most explicit in ordering data to achieve locality of reference. Its fundamental data structure is a log-structured merge-tree (LSM-tree), very different from the B-tree family found in most relational and NoSQL databases. An LSM-tree tries to keep data sorted into ordered sets, and regularly combines sets to make them as long as possible. Developed originally to minimize extra seeks on disk, LSM-trees later proved useful with Flash to dramatically reduce writes (although random reads become less efficient).

According to Jonathan Ellis, project chair of Apache Cassandra, the database takes on a lot of the defragmentation operations that most applications leave up to the file system, in order to maintain its LSM-trees efficiently. It also takes advantage of its knowledge of the data (for instance, which blocks belong to a single BLOB) to minimize the amount of garbage collection necessary.
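A toy log-structured merge-tree in Python illustrates the trade-off described above: writes accumulate in an in-memory memtable and are flushed as sorted runs in one sequential write, while reads may have to probe several runs until compaction merges them. This is a simplified sketch, not Cassandra's implementation; real engines persist runs as files, handle deletes with tombstones, and stream a k-way merge rather than building a dictionary as `compact` does here.

```python
import bisect


class TinyLSM:
    """Toy LSM-tree: an in-memory memtable plus immutable sorted runs (newest last)."""

    def __init__(self, memtable_limit=4):
        self.memtable = {}
        self.memtable_limit = memtable_limit
        self.runs = []  # each run is (sorted keys, values in the same order)

    def put(self, key, value):
        self.memtable[key] = value
        if len(self.memtable) >= self.memtable_limit:
            self._flush()

    def _flush(self):
        # One sorted, sequential write instead of many small in-place updates.
        items = sorted(self.memtable.items())
        self.runs.append(([k for k, _ in items], [v for _, v in items]))
        self.memtable = {}

    def get(self, key):
        if key in self.memtable:
            return self.memtable[key]
        # Reads probe runs newest first: the read-side cost of an LSM-tree.
        for keys, values in reversed(self.runs):
            i = bisect.bisect_left(keys, key)
            if i < len(keys) and keys[i] == key:
                return values[i]
        return None

    def compact(self):
        # Merge all runs into one; newer values replace older ones for the same key.
        merged = {}
        for keys, values in self.runs:  # oldest to newest
            merged.update(zip(keys, values))
        items = sorted(merged.items())
        self.runs = [([k for k, _ in items], [v for _, v in items])] if items else []


db = TinyLSM(memtable_limit=2)
for i in range(6):
    db.put(f"k{i % 3}", i)
print(len(db.runs), db.get("k0"))  # three runs before compaction
db.compact()
print(len(db.runs), db.get("k0"))  # one run after compaction, same answer
```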

Rosenthal, in contrast, says that the FoundationDB team counted on Flash controllers to solve the problem of fragmented writes. Just as sophisticated disk drives buffer and reorder writes to minimize seeks (for instance, with elevator algorithms), Flash drives began differentiating themselves in the market six or seven years ago by offering controllers that could do what an LSM-tree does at the database engine level. Now, most Flash controllers offer these algorithms.

Tokutek offers a clustered database, keeping data with the index. They find clustering ideal for retrieving ranges of data, particularly when there are repeated values, as in denormalized NoSQL document stores like the MongoDB database that Tokutek supports. Compression also reduces read and write overhead, saving money on storage as well as time. Tokutek generally gets a compression ratio of 5:1 or 7:1 for MySQL and MariaDB. They do even better on MongoDB, perhaps 10:1, because its document stores are denormalized compared to a relational database.
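To see why denormalized documents compress well, the sketch below compresses a batch of hypothetical order documents that each repeat the same customer details, using Python's standard `zlib` module. The data is synthetic and `zlib` is not Tokutek's compressor, so the ratio it prints says nothing about Tokutek's figures; it only shows that repetition is what compression exploits.

```python
import json
import zlib

# Hypothetical denormalized documents: every order embeds the same customer record.
customer = {"name": "Example Customer", "city": "Springfield", "tier": "gold"}
orders = [
    {"order_id": i, "customer": customer, "status": "shipped"} for i in range(1000)
]

raw = json.dumps(orders).encode("utf-8")
compressed = zlib.compress(raw, 6)  # level 6 is zlib's usual default trade-off
ratio = len(raw) / len(compressed)
print(f"{len(raw)} bytes -> {len(compressed)} bytes, ratio {ratio:.1f}:1")
```

Fewer bytes on Flash means fewer bytes to read and write per logical operation, which is where the time savings mentioned above come from, at the cost of CPU spent compressing and decompressing.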

Write right

Aerospike, FoundationDB, RethinkDB, and Tokutek use MVCC (multiversion concurrency control) or a similar concept to write new versions of data continuously and clean up old versions later, instead of directly replacing stored data with new values.
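A minimal MVCC sketch in Python, assuming a single process and a simple integer clock: each write appends a timestamped version instead of overwriting, a reader sees the newest version no later than its snapshot, and a garbage-collection pass later drops versions no active reader can see. `MVCCStore` is a hypothetical illustration, not how any of the products named above implement the idea.

```python
class MVCCStore:
    """Toy multiversion store: writes append versions; readers use snapshots."""

    def __init__(self):
        self.last_ts = 0    # monotonically increasing commit timestamp
        self.versions = {}  # key -> list of (commit_ts, value), oldest first

    def write(self, key, value):
        self.last_ts += 1
        self.versions.setdefault(key, []).append((self.last_ts, value))  # never overwrite
        return self.last_ts

    def snapshot(self):
        # A reader's snapshot is simply the latest timestamp committed so far.
        return self.last_ts

    def read(self, key, snapshot_ts):
        # Newest version committed at or before the snapshot.
        for ts, value in reversed(self.versions.get(key, [])):
            if ts <= snapshot_ts:
                return value
        return None

    def gc(self, oldest_active_snapshot):
        # Keep the newest version visible to the oldest reader, plus everything newer.
        for key, vers in self.versions.items():
            keep_from = 0
            for i, (ts, _) in enumerate(vers):
                if ts <= oldest_active_snapshot:
                    keep_from = i
            self.versions[key] = vers[keep_from:]


db = MVCCStore()
db.write("x", "v1")
snap = db.snapshot()  # a long-running reader starts here
db.write("x", "v2")
print(db.read("x", snap), db.read("x", db.snapshot()))
db.gc(oldest_active_snapshot=db.snapshot())  # the old reader has finished
print(len(db.versions["x"]))
```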

In general, a single write request by a database can turn into multiple writes because of the need to update the data as well as multiple indexes. But Bulkowski says that, by storing indexes in memory, Aerospike achieves a predictable write amplification of 2, whereas other applications often suffer from a factor of 10. He points out that this design choice, like many made by Aerospike, is always being reconsidered as they research the best ways to speed up and scale applications.
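Write amplification is the ratio of bytes physically written to Flash to bytes the application asked to write. The toy model below, in Python, assumes (hypothetically) that each update rewrites the record plus one 4 KiB page for every index kept on Flash. It ignores garbage collection inside the drive and other overheads, so it will not reproduce the figures of 2 and 10 quoted above, but it shows why moving indexes into RAM shrinks the Flash-side cost.

```python
def write_amplification(flash_bytes_written, app_bytes_written):
    """Bytes physically written to Flash per byte the application asked to write."""
    return flash_bytes_written / app_bytes_written


def flash_bytes_per_update(record_bytes, on_flash_indexes, index_write_bytes):
    """Toy model (assumption): an update rewrites the record plus one
    index page for every index that lives on Flash."""
    return record_bytes + on_flash_indexes * index_write_bytes


RECORD = 1024       # hypothetical 1 KiB record
INDEX_WRITE = 4096  # hypothetical 4 KiB index page rewritten per on-Flash index

all_on_flash = flash_bytes_per_update(RECORD, on_flash_indexes=2, index_write_bytes=INDEX_WRITE)
indexes_in_ram = flash_bytes_per_update(RECORD, on_flash_indexes=0, index_write_bytes=INDEX_WRITE)
print(write_amplification(all_on_flash, RECORD))    # 9.0
print(write_amplification(indexes_in_ram, RECORD))  # 1.0
```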

He is also not worried about the fabled problem of wear in Flash. Flash drives can actually last as long as sites normally expect their systems to last; for instance, the blocks on an Intel S3700 SSD are rated to last five years with 10 full-drive writes per day.
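Endurance ratings like the S3700's are expressed as full-drive writes per day (DWPD) over a rated period, which converts into a total write budget with simple arithmetic. The 400 GB capacity below is an assumption chosen for illustration, and the helper functions are hypothetical; leap days are ignored.

```python
GB = 10**9


def lifetime_bytes_written(capacity_bytes, drive_writes_per_day, years):
    """Total bytes a drive is rated to absorb at a given DWPD over a number of years."""
    return capacity_bytes * drive_writes_per_day * 365 * years


def years_of_life(capacity_bytes, rated_dwpd, rated_years, actual_bytes_per_day):
    """How long the rated write budget lasts under a specific daily write volume."""
    budget = lifetime_bytes_written(capacity_bytes, rated_dwpd, rated_years)
    return budget / (actual_bytes_per_day * 365)


capacity = 400 * GB  # hypothetical drive size for illustration
print(lifetime_bytes_written(capacity, 10, 5) / 10**15)  # 7.3 (petabytes)
# Writing 1,000 GB per day is 2.5 full-drive writes per day on this drive.
print(years_of_life(capacity, 10, 5, actual_bytes_per_day=1000 * GB))  # 20.0
```

A workload that writes well under the rated volume stretches the drive's life proportionally, which is the arithmetic behind Bulkowski's confidence.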

Both Aerospike and FoundationDB offer strict durability guarantees. All writes go to Flash and are synched.
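Durability of this kind generally means a write is not acknowledged until the operating system confirms it has reached stable storage. The Python sketch below shows the basic pattern with `os.write` and `os.fsync`; `durable_append` is a hypothetical helper and a generic POSIX-style illustration, not Aerospike's or FoundationDB's code. On Linux, a newly created file also needs an fsync of its directory for the file's existence to be durable, which the sketch omits, and real engines usually batch many writes per sync (group commit) because each `fsync` is comparatively slow.

```python
import os
import tempfile


def durable_append(path, record: bytes):
    """Append a record and return only after the OS reports it is on stable storage."""
    fd = os.open(path, os.O_WRONLY | os.O_APPEND | os.O_CREAT, 0o644)
    try:
        written = 0
        while written < len(record):
            # os.write may write fewer bytes than requested, so loop until done.
            written += os.write(fd, record[written:])
        os.fsync(fd)  # block until the file's data has been flushed to the device
    finally:
        os.close(fd)


with tempfile.TemporaryDirectory() as d:
    log = os.path.join(d, "wal.log")
    durable_append(log, b"put k1 v1\n")
    durable_append(log, b"put k2 v2\n")
    with open(log, "rb") as f:
        print(f.read())
```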

Keep ‘em coming

Rosenthal says that the increased speed and parallelism of Flash (perhaps 100,000 operations per second, compared to 300 for typical disks) create the most change to database design. “The traditional design of relational databases, with one thread per connection, worked fine when disks were [the] bottleneck,” he says, “but now the threads become the bottleneck.” FoundationDB internally uses its own lightweight processes, as Erlang does. Rosenthal says that the stagnation in latency improvement makes parallelism even more important.
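The contrast between one thread per connection and many lightweight processes can be sketched with Python's `asyncio` coroutines: thousands of simulated reads wait concurrently on a single OS thread. The 1 ms latency and `fake_flash_read` are assumptions standing in for real asynchronous I/O, and this is not FoundationDB's mechanism, only an analogous one.

```python
import asyncio
import time

IO_LATENCY_S = 0.001  # assumed per-request device latency for the simulation


async def fake_flash_read(key):
    # Stand-in for a non-blocking device read; a real engine would issue async I/O here.
    await asyncio.sleep(IO_LATENCY_S)
    return f"value-for-{key}"


async def serve(requests):
    # One lightweight coroutine per request instead of one OS thread per connection.
    return await asyncio.gather(*(fake_flash_read(k) for k in requests))


start = time.perf_counter()
results = asyncio.run(serve(range(10_000)))
elapsed = time.perf_counter() - start
# Run one at a time, these reads would take about 10,000 * 1 ms = 10 seconds.
print(len(results), f"{elapsed:.2f}s")
```

Because the waits overlap, total time is dominated by scheduling overhead rather than by the sum of the latencies; that is the property that lets a database keep a parallel device busy without paying for thousands of OS threads.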

Bulkowski says that, because of Flash's extensive parallelism, deep queues work better on Flash than on rotational disks. Aerospike is particularly useful for personalization at real-time speeds, which is used by applications as diverse as ecommerce sites, web ad auctions (real-time bidding), the notoriously variable airline pricing, and even telecom routing. These applications combine a large number of small bits of information (from just gigabytes to more than 100TB) about pages, tickets, and products, as well as subscribers, customers, or users, requiring many small database transactions. The concurrency provided by Aerospike allows sites to complete these database operations consistently, 99% of the time, within a couple of milliseconds, and to scale cost-effectively on surprisingly small clusters.
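Little's law connects queue depth, latency, and throughput: throughput equals the number of requests in flight divided by the time each one takes. Plugging in figures from this article (about 100 microseconds per Flash read, 100,000 operations per second) shows why Flash rewards deep queues. This is a back-of-the-envelope Python sketch that assumes the device can actually serve that many requests concurrently; the helper names are hypothetical, and the 5 ms disk latency is the low end of the range quoted for disks.

```python
def iops_from_queue_depth(queue_depth, latency_us):
    """Little's law: throughput = requests in flight / time per request."""
    return queue_depth * 1_000_000 / latency_us


def queue_depth_needed(target_iops, latency_us):
    """Requests that must be in flight to sustain target_iops at the given latency."""
    return target_iops * latency_us / 1_000_000


print(iops_from_queue_depth(1, 100))     # 10000.0: one outstanding Flash read at 100 µs
print(queue_depth_needed(100_000, 100))  # 10.0: reads in flight to reach 100,000 ops/s
print(iops_from_queue_depth(1, 5_000))   # 200.0: a disk at 5 ms per read, one at a time
```

At queue depth 1, even a fast Flash drive delivers only about 10,000 reads per second; reaching 100,000 requires about ten requests in flight, which is what deep queues and highly concurrent database designs supply.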

These new database storage engine designs have clearly thrown many traditional decisions overboard. It is up to application developers now to review their database schemas and access pattern assumptions to take advantage of these developments.

This post is part of a collaboration between O’Reilly and Aerospike. See our statement of editorial independence.