From USENET to Facebook: The second time as farce

Demanding and building a social network that serves us and enables free speech, rather than serving a business metric that amplifies noise, is the way to end the farce.

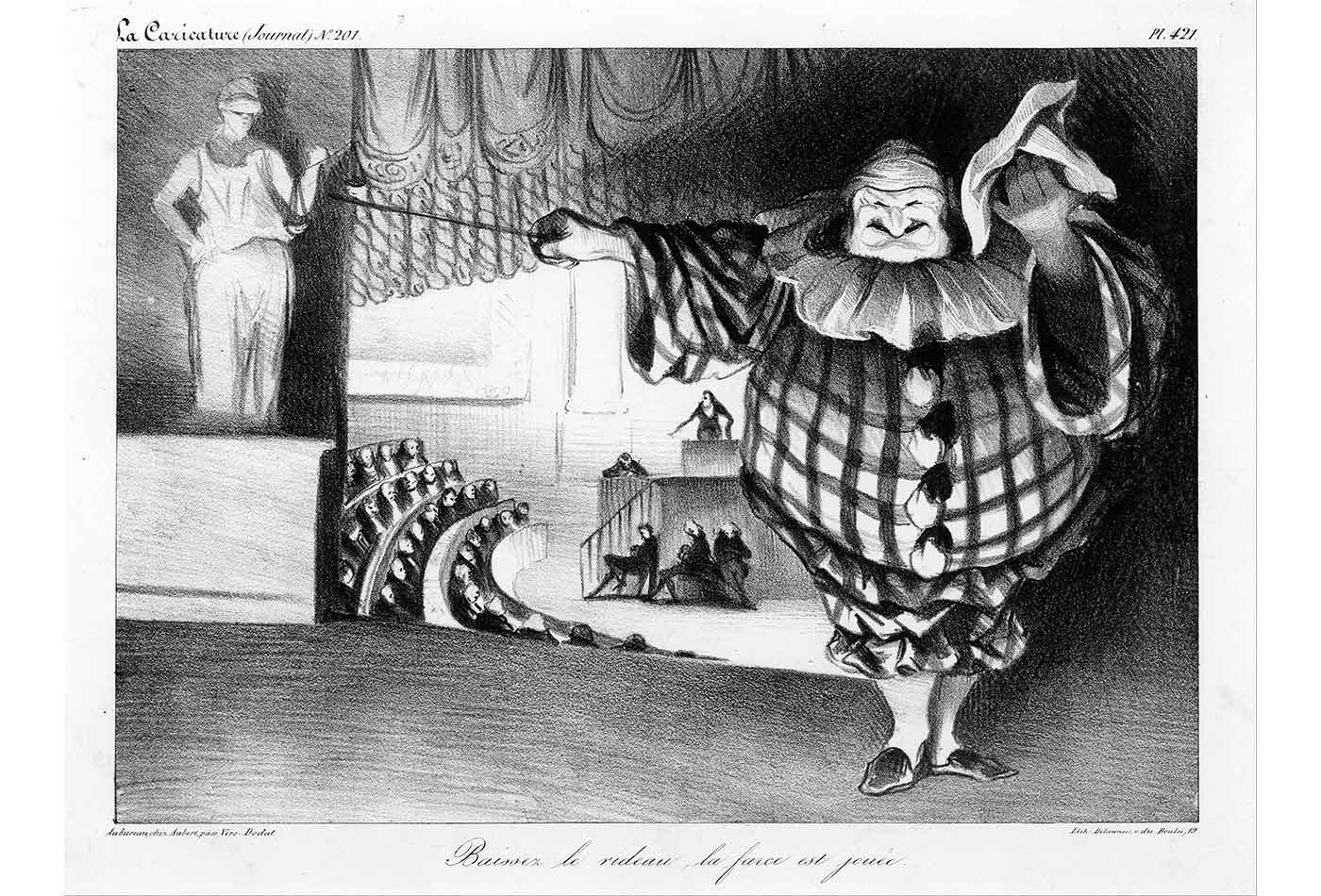

"Baissez le rideau, la farce est jouée," by Honoré Daumier, 1834 (source: The Google Art Project on Wikimedia Commons)

Re-interpreting Hegel, Marx said that everything in history happens twice, the first time as tragedy, the second as farce. That’s a fitting summary of Facebook’s Very Bad Month. There’s nothing here we haven’t seen before, nothing about abuse, trolling, racism, spam, porn, and even bots that hasn’t already happened. This time as farce? Certainly Zuckerberg’s 14-year Apology Tour, as Zeynep Tufekci calls it, has the look and feel of a farce. He just can’t stop apologizing for Facebook’s messes.

Except that the farce isn’t over yet. We’re in the middle of it. As Tufekci points out, 2018 isn’t the first time Zuckerberg has said “we blew it, we’ll do better.” Apology has been a roughly biennial occurrence since Facebook’s earliest days. So, the question we face is simple: how do we bring this sad history to an endpoint that isn’t farce? The third time around, should there be one, isn’t even farce; it’s just stupidity. We don’t have to accept future apologies, whether they come from Zuck or some other network magnate, as inevitable.

I want to think about what we can learn from the forerunners of modern social networks: specifically USENET, the proto-internet of the 1980s and 90s. (The same observations probably apply to BBSs, though I’m less familiar with them.) USENET was a decentralized and unmanaged system that allowed Unix users to exchange “posts” by sending them to hundreds of newsgroups. It started in the early 80s, peaked sometime around 1995, and arguably ended as tragedy (though it went out with a whimper, not a bang).

As a no-holds-barred Wild West sort of social network, USENET was filled with everything we rightly complain about today. It was easy to troll and be abusive; all too many participants did it for fun. Most groups were eventually flooded by spam, long before spam became a problem for email. Much of that spam distributed pornography or pirated software (“warez”). You could certainly find newsgroups in which to express your inner neo-Nazi or white supremacist self. Fake news? We had that; we had malicious answers to technical questions that would get new users to trash their systems. And yes, there were bots; that technology isn’t as new as we’d like to think.

But there was a big divide on USENET between moderated and unmoderated newsgroups. Posts to moderated newsgroups had to be approved by a human moderator before they were pushed to the rest of the network. Moderated groups were much less prone to abuse. They weren’t immune, certainly, but moderated groups remained virtual places where discussion was mostly civilized, and where you could get questions answered. Unmoderated newsgroups were always spam-filled and frequently abusive, and the alt.* newsgroups, which could be created by anyone, for any reason, matched anything we have now for bad behavior.

So, the first thing we should learn from USENET is the importance of moderation. Fully human moderation at Facebook scale is impossible. With seven billion pieces of content shared per day, even a million moderators would have to scan seven thousand posts each: roughly 4 seconds per post over an eight-hour shift. But we don’t need to rely on human moderation. After USENET’s decline, research showed that it was possible to classify users as newbies, helpers, leaders, trolls, or flamers, purely by their communication patterns, with only minimal help from the content. This could be the basis for automated moderation assistants that kick suspicious posts over to human moderators, who would then have the final word. Whether automated or human, moderators prevent many of the bad posts from being made in the first place. It’s no fun being a troll if you can’t get through to your victims.
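The per-post figure is easy to check. The sketch below is only a back-of-the-envelope calculation: the post and moderator counts come from the paragraph above, while the eight-hour shift is an assumption (it is the working day that makes the 4-second figure come out), and the variable names are purely illustrative.

```python
# Back-of-the-envelope check of the human-moderation workload.
POSTS_PER_DAY = 7_000_000_000   # figure quoted in the text
MODERATORS = 1_000_000          # figure quoted in the text
SHIFT_HOURS = 8                 # assumption: length of a moderator's working day

# Posts each moderator would have to review per day.
posts_per_moderator = POSTS_PER_DAY / MODERATORS

# Time available for each post if the whole shift is spent reviewing.
seconds_per_post = SHIFT_HOURS * 3600 / posts_per_moderator

print(f"{posts_per_moderator:,.0f} posts per moderator per day")
print(f"{seconds_per_post:.1f} seconds per post")
```

Doubling the workforce to two million moderators would still leave only about eight seconds per post, which is why the argument turns to automated assistance rather than more people.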

Automated moderation can also do fact checking. The technology that won Jeopardy a decade ago is more than capable of checking basic facts. It might not be capable of checking complex logic, but most “fake news” centers around facts that can easily be evaluated. And automated systems are very capable of detecting bots: Google’s Gmail has successfully throttled spam.

What else can we learn from USENET? Trolls were everywhere, but the really obnoxious stuff stayed where it was supposed to be. I’m not naive enough to think that neo-Nazis and white supremacists will dry up and go away, on Facebook or elsewhere. And I’m even content to allow them to have their own Facebook pages: Facebook can let these people talk to each other all they want, because they’re going to do that anyway, whatever tools you put in place. The problem we have now is that Facebook’s engagement metric paves the road to their door. Once you give someone a hit of something titillating, they’ll come back for more. And the next hit has to be stronger. That’s how you keep people engaged, and that’s (as Tufekci has argued about YouTube) how you radicalize them.

USENET had no engagement metrics, no means of linking users to stronger content. Islands of hatred certainly existed. But in a network that didn’t optimize for engagement, hate groups didn’t spread. Neo-Nazis and their like were certainly there, but you had to search them out; you weren’t pushed to them. The platform didn’t lead you there, trying to maximize your “engagement.” I can’t claim that was some sort of brilliant design on USENET’s part; it just wasn’t something anyone thought about at the time. And as a free service, there was no need to maximize profit. Facebook’s obsession with engagement is ultimately more dangerous than its sloppy handling of personal data. “Engagement” allows, and indeed encourages, hate groups to metastasize.

Engagement metrics harm free speech, another ideal carried to the modern internet from the USENET world. But in an “attention economy,” where the limiting factor is attention, not speech, we have to rethink what those values mean. I’ve said that USENET ended in a “whimper”—but what drained the energy away? The participants who contributed real value just got tired of wading through the spam and fighting off the trolls. They went elsewhere. USENET’s history gives us a warning: good speech was crowded off the stage by bad speech.

Speech that exists to crowd out other speech isn’t the unfettered interchange of ideas. Free speech doesn’t mean the right to a platform. Indeed, the U.S. Constitution already makes that distinction: “freedom of the press” is about platforms, and you don’t get freedom of the press unless you have a press. Again, Zeynep Tufekci has it: in “It’s the (Democracy-Poisoning) Golden Age of Free Speech,” she writes “The most effective forms of censorship today involve meddling with trust and attention, not muzzling speech itself.” Censorship isn’t about arresting dissidents; it’s about generating so much noise that voices you don’t like can’t be heard.

If we’re to put an end to the farce, we need to understand what it means to enable speech, rather than to drown it out. Abandoning “engagement” is part of the solution. We will be better served by a network that, like USENET, doesn’t care how people engage, and that allows them to make their own connections. Automated moderation can be a tool that makes room for speech, particularly if we can take advantage of communication patterns to moderate those whose primary goal is to be the loudest voice.

Marx certainly would have laid blame at the feet of Zuckerberg, for naively and profitably commoditizing the social identities of his users. But blame is not a solution. As convenient a punching bag as Zuckerberg is, we have to recognize that Facebook’s problems extend to the entire social world. That includes Twitter and YouTube, many other social networks past and present, and many networks that are neither online nor social. Expecting Zuck to “fix Facebook” may be the best way to guarantee that the farce plays on.

History is only deterministic in hindsight, and it doesn’t have to end in farce (or worse). We all build our social networks, and Mark Zuckerberg isn’t the only player on history’s stage. We need to revisit, reassess, and learn from all of our past social networks. Demanding and building a social network that serves us and enables free speech, rather than serving a business metric that amplifies noise, is the way to end the farce.

Is that a revolution? We have nothing to lose but our chains.