In an ideal world, all writes would be instantly and permanently saved to disk and be instantly retrievable from anywhere. Unfortunately, this is impossible in the real world: you can either take more time to make sure your data is safe or save it faster with less safety. MongoDB gives you more knobs to twiddle in this area than it has for everything else combined, and it’s important to understand your options.

Replication and journaling are the two approaches to data safety that you can take with MongoDB.

Generally, you should run with replication and have at least one of your servers journaled. The MongoDB blog has a good post on why you shouldn’t run MongoDB (or any database) on a single server.

MongoDB’s replication automatically copies all of your writes to other servers. If your current master goes down, you can bring up another server as the new master (this happens automatically with replica sets).

If a member of a replica set shuts down uncleanly and it was not running with --journal, MongoDB makes no guarantees about its data (it may be corrupt). You have to get a clean copy of the data: either wipe the data and resync it or load a backup and fastsync.

Journaling gives you data safety on a single server. All operations are written to a log (the journal) that is flushed to disk regularly. If your machine crashes but the hardware is OK, you can restart the server and the data will repair itself by reading the journal. Keep in mind that MongoDB cannot save you from hardware problems: if your disk gets corrupted or damaged, your database will probably not be recoverable.

Replication and journaling can be used at the same time, but you must do this strategically to minimize the performance penalty. Both methods basically make a copy of all writes, so you have:

- No safety: one server write per write request
- Replication: two server writes per write request
- Journaling: two server writes per write request
- Replication+journaling: three server writes per write request

Writing each piece of information three times is a lot, but if your application does not require high performance and data safety is very important, you could consider using both. Some very safe alternative deployments that perform better are covered below.
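The counts in the list above can be written down as a few lines of plain JavaScript. This is only a sketch of the simplified model used here (one extra copy for replication, one for the journal); the function name `serverWritesPerRequest` and the option names are made up for illustration.

```javascript
// Simplified model from the text: one write for the data itself,
// plus one extra copy for replication and one for the journal.
function serverWritesPerRequest(options) {
  let writes = 1;                        // the write to the data files
  if (options.replication) writes += 1;  // copy applied on another server
  if (options.journaling) writes += 1;   // copy appended to the journal
  return writes;
}

const setups = [
  { name: "No safety", replication: false, journaling: false },
  { name: "Replication", replication: true, journaling: false },
  { name: "Journaling", replication: false, journaling: true },
  { name: "Replication+journaling", replication: true, journaling: true }
];

for (const setup of setups) {
  console.log(setup.name + ": " + serverWritesPerRequest(setup));
}
```

A real deployment with several secondaries writes more copies than this; the sketch only mirrors the four cases listed.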

If you’re running a single server, use the --journal option.

Note

In development, there is no reason not to use --journal all of the time. Add journaling to your local MongoDB configuration to make sure that you don’t lose data while in development.

Given the performance penalties involved in using journaling, you might want to mix journaled and unjournaled servers if you have multiple machines. Backup slaves could be journaled, whereas primaries and secondaries (especially those balancing read load) could be unjournaled.

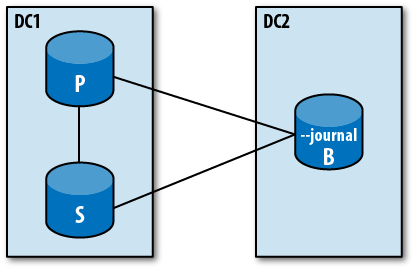

A sturdy small setup is shown in Figure 4-1. The primary and secondary are not run with journaling, keeping them fast for reads and writes. In a normal server crash, you can fail over to the secondary and restart the crashed machine at your leisure.

If either data center goes down entirely, you still have a safe copy of your data. If DC2 goes down, once it’s up again you can restart the backup server. If DC1 goes down, you can either make the backup machine master, or use its data to re-seed the machines in DC1. If both data centers go down, you at least have a backup in DC2 that you can bootstrap everything from.

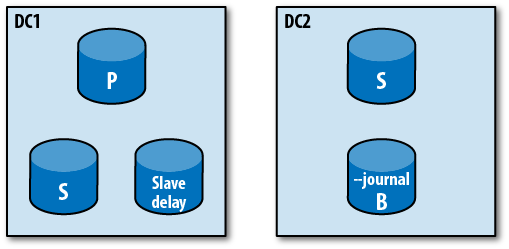

Another safe setup for five servers is shown in Figure 4-2. This is a slightly more robust setup than above: there are secondaries in both data centers, a delayed member to protect against user error, and a journaled member for backup.
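As a rough sketch, a five-member set along these lines might be described with a configuration object like the one below. The set name, host names, one-hour delay, and the placement of members across data centers are all hypothetical and are not taken from the figure. The `priority` and `slaveDelay` fields are the replica set member options of this era of MongoDB; check the names against your version. Journaling is not part of this object: the backup member would simply be started with --journal.

```javascript
// Hypothetical five-member layout; hosts and the delay are made up.
const config = {
  _id: "example",
  members: [
    { _id: 0, host: "dc1-a.example.net:27017" },  // DC1, eligible primary
    { _id: 1, host: "dc1-b.example.net:27017" },  // DC1 secondary
    { _id: 2, host: "dc2-a.example.net:27017" },  // DC2 secondary
    // Delayed member: stays one hour behind to protect against user error.
    { _id: 3, host: "dc2-delayed.example.net:27017", priority: 0, slaveDelay: 3600 },
    // Backup member, run with --journal. Priority 0 is an assumption that
    // keeps it from being elected primary.
    { _id: 4, host: "dc2-backup.example.net:27017", priority: 0 }
  ]
};

// A delayed member must never become primary, so flag any that could.
function delayedMembersThatCouldBePrimary(cfg) {
  return cfg.members.filter(function (member) {
    return member.slaveDelay > 0 && member.priority !== 0;
  });
}

console.log(delayedMembersThatCouldBePrimary(config).length);

// In the mongo shell, connected to one member: rs.initiate(config)
```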

If your database crashes and you were not running with --journal, do not use that server’s data as-is. It might seem fine for weeks until you suddenly access a corrupt document which causes your application to go screwy. Or your indexes might be messed up so you only get partial results back from the database. Or a hundred other things; corruption is bad, insidious, and often undetectable...for a while.

You have a couple of options. You can run repair. This is a tempting option, but it’s really a last resort. First, repair goes through every document it can find and makes a clean copy of it. This takes a long time, a lot of disk space (an equal amount to the space currently being used), and skips any corrupted records. This means that if it can’t find millions of documents because of corruption, it will not copy them and they will be lost. Your database may not be corrupted anymore, but it also may be much smaller. Also, repair doesn’t introspect the documents: there could be corruption that makes certain fields unparsable that repair will not find or fix.

The preferable option is fastsyncing from a backup or resyncing from scratch. Remember that you must wipe the possibly corrupt data before resyncing; MongoDB’s replication cannot “fix” corrupted data.

By default, writes do not return any response from the database. If you send the database an update, insert, or remove, it will process it and not return anything to the user. Thus, drivers do not expect any response on either success or failure.

However, obviously there are a lot of situations where you’d like to have a response from the database. To handle this, MongoDB has an “about that last operation...” command, called getlasterror. Originally, it just described any errors that occurred in the last operation, but it has branched out into giving all sorts of information about the write and providing a variety of safety-related options.

To avoid any inadvertent read-your-last-write mistakes (see Tip #50: Use a single connection to read your own writes), getlasterror is stuck to the butt of a write request, essentially forcing the database to treat the write and getlasterror as a single request. They are sent together and guaranteed to be processed one after the other, with no other operations in between. The drivers bundle this functionality in so you don’t have to take care of it yourself, generally calling it a “safe” write.

In development, you want to make sure that your application is behaving as you expect and safe writes can help you with that. What sort of things could go wrong with a write? A write could try to push something onto a non-array field, cause a duplicate key exception (trying to store two documents with the same value in a uniquely indexed field), remove an _id field, or a million other user errors. You’ll want to know that the write isn’t valid before you deploy.

One insidious error is running out of disk space: all of a sudden queries are mysteriously returning less data. This one is tricky if you are not using safe writes, as free disk space isn’t something that you usually check. I’ve often accidentally set --dbpath to the wrong partition, causing MongoDB to run out of space much sooner than planned.

During development, there are lots of reasons that a write might not go through due to developer error, and you’ll want to know about them.
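To make the idea concrete, here is a minimal sketch of a checked write for the fire-and-forget behavior described here: run the write, then ask getlasterror about it on the same connection and fail loudly if it reports an error. The helper name `checkedWrite` and the collection in the usage comment are hypothetical. The only reply field relied on is `err`, which is null when the last operation succeeded.

```javascript
// Run a write, then ask the server how it went on the same connection.
// `db` is a mongo shell database object (or anything with runCommand).
function checkedWrite(db, doWrite) {
  doWrite(db);                                     // fire-and-forget write
  var reply = db.runCommand({ getlasterror: 1 });  // "about that last operation..."
  if (reply.err) {
    throw new Error("write failed: " + reply.err);
  }
  return reply;
}

// Shell usage (collection name is hypothetical):
// checkedWrite(db, function (d) { d.users.insert({ _id: 1 }); });
```

Drivers that offer a “safe” write option do this bundling for you, so a helper like this is mainly useful in the shell or for understanding what the driver is doing.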

For important operations, you should make sure that the writes have been replicated to a majority of the set. A write is not “committed” until it is on a majority of the servers in a set. If a write has not been committed and network partitions or server crashes isolate it from the majority of the set, the write can end up getting rolled back. (It’s a bit outside the scope of this tip, but if you’re concerned about rollbacks, I wrote a post describing how to deal with it.)

w controls the number of servers that a write must reach before getlasterror returns success. The way this works is that you issue getlasterror to the server (usually just by setting w for a given write operation). The server notes where it is in its oplog (“I’m at operation 123”) and then waits for w-1 slaves to have applied operation 123 to their data set. As each slave writes the given operation, the master decrements the number of slaves it is still waiting for. Once that count reaches 0, getlasterror returns success.

Note that, because the replication always writes operations in order, various servers in your set might be at different “points in history,” but they will never have an inconsistent data set. They will be identical to the master a minute ago, a few seconds ago, a week ago, etc. They will not be missing random operations.
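The bookkeeping can be modeled in a few lines of plain JavaScript. This is a toy model, not how the server is implemented: each server is reduced to the index of the last oplog entry it has applied, and the function names are invented for illustration. Because operations are applied in order, a server at position p has applied everything up to and including p.

```javascript
// Count the servers (master included) that have applied operation opIndex.
function serversWithWrite(opIndex, masterPosition, slavePositions) {
  let count = masterPosition >= opIndex ? 1 : 0;  // the master counts toward w
  for (const position of slavePositions) {
    if (position >= opIndex) count += 1;          // in-order apply: position >= opIndex means it has the op
  }
  return count;
}

// True once the write at opIndex is on at least w servers.
function wSatisfied(w, opIndex, masterPosition, slavePositions) {
  return serversWithWrite(opIndex, masterPosition, slavePositions) >= w;
}

// Master is at operation 123; one slave has caught up, one is still at 97.
console.log(wSatisfied(2, 123, 123, [123, 97]));  // true
console.log(wSatisfied(3, 123, 123, [123, 97]));  // false
```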

This means that you can always make sure num-1 slaves are synced up to the master by running:

> db.runCommand({"getlasterror" : 1, "w" : num})

So, the question from an application developer’s point of view is: what do I set w to? As mentioned above, you need a majority of the set for a write to truly be “safe.” However, writing to a minority of the set can also have its uses.

If w is set to a minority of servers, it’s easier to accomplish and may be “good enough.” If this minority is segregated from the set through network partition or server failure, the majority of the set could elect a new primary and not see the operation that was faithfully replicated to w servers. However, if even one of the members that received the write was not segregated, the other members of the set would sync up to that write before electing a new master.

If w is set to a majority of servers and some network partition occurs or some servers go down, a new master cannot be elected without this write. This is a powerful guarantee, but it comes at the cost of w being less likely to succeed: the more servers needed for success, the less likely the success.
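For reference, the majority for a set of a given size is more than half of its members. The helper below is a small sketch in plain JavaScript; the function names are made up, and it assumes every member counts equally, ignoring details such as arbiters or non-voting members.

```javascript
// Smallest number of servers that is more than half of the set.
function majority(memberCount) {
  return Math.floor(memberCount / 2) + 1;
}

// A write is "committed" once it is on a majority of the set.
function isCommitted(serversWithWrite, memberCount) {
  return serversWithWrite >= majority(memberCount);
}

console.log(majority(3));        // 2
console.log(majority(4));        // 3
console.log(majority(5));        // 3
console.log(isCommitted(2, 5));  // false
```

Note that a four-member set needs three servers for a majority, the same as a five-member set, which is one reason odd-sized sets are usually preferred.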

Suppose you have a three-member replica set (one primary and two secondaries) and want to make sure that your two slaves are up-to-date with the master. Because w counts the master as well, you run:

> db.runCommand({"getlasterror" : 1, "w" : 3})

But what if one of your secondaries is down? MongoDB doesn’t sanity-check the number of servers you ask for: it’ll happily wait until it can replicate to 2, 20, or 200 slaves (if that’s what w was).

Thus, you should always run getlasterror with the

wtimeout option set to a sensible value for your

application. wtimeout gives the number of milliseconds

to wait for slaves to report back and then fails. This example would wait

100 milliseconds:

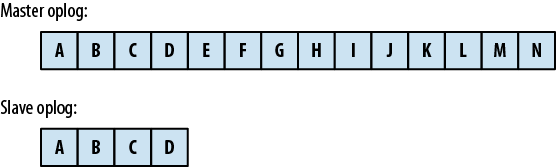

> db.runCommand({"getlasterror" : 1, "w" : 2, "wtimeout" : 100})Note that MongoDB applies replicated operations in order: if you do

writes A, B, and

C on the master, these will be replicated to

the slave as A, then

B, then C. Suppose

you have the situation pictured in Figure 4-3. If you do

write N on master and call getlasterror, the slave must replicate writes

E-N before getlasterror can report success. Thus,

getlasterror can significantly slow

your application if you have slaves that are behind.

Another issue is how to program your application to handle getlasterror timing out, which is a question that only you can answer. Obviously, if you are guaranteeing replication to another server, this write is pretty important: what do you do if the write succeeds locally, but fails to replicate to enough machines?
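Whatever you decide, the application first has to tell the cases apart. The sketch below sorts a getlasterror reply into three outcomes. The function name and outcome labels are hypothetical, and the reply fields it reads (`err`, plus `wtimeout` set to true when the wait timed out) are the ones I understand the legacy getlasterror reply to contain; verify them against the server version you run.

```javascript
// Sort a getlasterror reply into one of three outcomes.
function classifyGetLastError(reply) {
  if (reply.wtimeout) {
    // The write was applied locally but did not reach w servers in time.
    return "timed-out";
  }
  if (reply.err) {
    // The write itself failed (duplicate key, and so on).
    return "failed";
  }
  return "acknowledged";
}

// Example replies, written by hand for illustration.
const timedOut = { err: "timeout", wtimeout: true, waited: 100, ok: 1 };
const duplicate = { err: "E11000 duplicate key error", ok: 1 };
const fine = { err: null, ok: 1 };

console.log(classifyGetLastError(timedOut));   // timed-out
console.log(classifyGetLastError(duplicate));  // failed
console.log(classifyGetLastError(fine));       // acknowledged
```

The timeout check comes first because a timed-out reply also carries a non-null `err`, yet the write itself is not undone on the master. What to do next (retry, alert, or accept the risk) is the application decision described above.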

If you have important data that you want to ensure makes it to the journal, you must use the fsync option when you do a write. fsync waits for the next flush (that is, up to 100ms) for the data to be successfully written to the journal before returning success. It is important to note that fsync does not immediately flush data to disk; it just puts your program on hold until the data has been flushed to disk. Thus, if you run fsync on every insert, you will only be able to do one insert per 100ms. This is about a zillion times slower than MongoDB usually does inserts, so use fsync sparingly.
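The arithmetic behind that warning is simple enough to sketch. The functions below assume the worst case, in which a single connection does its inserts one after another and every insert waits for a full flush interval; the function names are made up for illustration.

```javascript
// Worst case: every insert waits one full flush interval.
function maxInsertsPerSecond(flushIntervalMs) {
  return 1000 / flushIntervalMs;
}

// Time needed to do `count` inserts one after another under that assumption.
function secondsForInserts(count, flushIntervalMs) {
  return (count * flushIntervalMs) / 1000;
}

console.log(maxInsertsPerSecond(100));       // 10
console.log(secondsForInserts(10000, 100));  // 1000
```

So ten thousand inserts, each waiting on fsync, could take on the order of a quarter of an hour.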

fsync generally should only be used with journaling. Do not use it when journaling is not enabled unless you’re sure you know what you’re doing. You can easily hose your performance for absolutely no benefit.

If you were running with journaling and your system crashes in a recoverable way (i.e., your disk isn’t destroyed, the machine isn’t underwater, etc.), you can restart the database normally. Make sure you’re using all of your normal options, especially --dbpath (so it can find the journal files) and --journal, of course. MongoDB will take care of fixing up your data automatically before it starts accepting connections. This can take a few minutes for large data sets (probably five minutes or so), but it shouldn’t be anywhere near the times that people who have run repair on large data sets are familiar with.

Journal files are stored in the journal directory. Do not delete these files.

To take a backup of a database with journaling enabled, you can either take a filesystem snapshot or do a normal fsync+lock and then dump. Note that you can’t just copy all of the files without fsync and locking, as copying is not an instantaneous operation. You might copy the journal at a different point in time than the databases, and then your backup would be worse than useless (your journal files might corrupt your data files when they are applied).
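The ordering that matters for the fsync+lock route is lock, copy, unlock, with the unlock happening even if the copy fails. Here is a minimal sketch of that ordering. The helper name `backupWhileLocked` and the `copyFiles` callback are hypothetical, and the sketch assumes a shell whose database object provides `fsyncUnlock()`; older shells use a different unlock call.

```javascript
// `adminDb` is the admin database object from the mongo shell;
// `copyFiles` is a hypothetical callback that copies the data files.
function backupWhileLocked(adminDb, copyFiles) {
  adminDb.runCommand({ fsync: 1, lock: 1 });  // flush to disk and block writes
  try {
    copyFiles();                              // data files and journal are now consistent
  } finally {
    adminDb.fsyncUnlock();                    // always release the lock
  }
}

// Shell usage (the copy step happens outside the shell in practice):
// backupWhileLocked(db.getSiblingDB("admin"), function () { /* copy files */ });
```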
