Chapter 4. Database

All non-trivial abstractions, to some degree, are leaky.

—Joel Spolsky

For many developers, Rails starts with the database. One of the most compelling features of Rails is ActiveRecord, the object-relational mapping(ORM) layer. ActiveRecord does such a good job of hiding the gory details of SQL from the programmer that it almost seems like magic.

However, as Joel Spolsky says, all abstractions are leaky. There is no perfectly transparent ORM system, and there never will be, due to the fundamentally different nature of the object-oriented and relational models. Ignore the underlying database at your own peril.

Database Management Systems

The Rails community has been built around the MySQL database management system (DBMS[30] ) for years. However, there are still a lot of misconceptions surrounding DBMSs, especially when used with Rails. While MySQL has its place, it is certainly not the only option. In the past few years, support for other databases has vastly grown. I encourage you to keep an open mind throughout this chapter, and weigh all criteria before making a decision on a DBMS.

Rails supports many DBMSs; at the time of this writing, DB2, Firebird, FrontBase, MySQL, OpenBase, Oracle, PostgreSQL, SQLite, Microsoft SQL Server, and Sybase are supported. You will probably know if you need to use a DBMS other than the ones mentioned here. Check the RDoc for the connection adapter for any caveats specific to your DBMS; some features such as migrations are only supported on a handful of connection adapters.

PostgreSQL

I list PostgreSQL[31] first because it is my platform of choice. It is one of the most advanced open source databases available today. It has a long history, dating back to the University of California at Berkeley’s Ingres project from the early 1980s. In contrast to MySQL, Postgres has supported advanced features such as triggers, stored procedures, custom data types, and transactions for much longer.

PostgreSQL’s support for concurrency is more mature than MySQL’s. Postgres supports multiversion concurrency control (MVCC), which is even more advanced than row-level locking. MVCC can isolate transactions, using timestamps to give each concurrent transaction its own snapshot of the data set. Under the Serializable isolation level, this prevents such problems as dirty reads, nonrepeatable reads, and phantom reads. [32]See the upcoming sidebar, “Multiversion Concurrency Control,” for more information about MVCC.

One advantage that PostgreSQL may have in the enterprise is its similarity to commercial enterprise databases such as Oracle, MS SQL Server, or DB2. Although Postgres is not by any means a clone or emulation of any commercial database, it will nevertheless be familiar to programmers and DBAs who have experience with one of the commercial databases. It will also likely be easier to migrate an application from Postgres to (say) Oracle than from MySQL to Oracle.

PostgreSQL has an unfortunate reputation for being slow. It got this reputation because the default configuration is optimized for performance on a tiny machine. Therefore, it will perform fairly consistently out of the box on a server with as little as 64 MB of RAM or as much as 64 GB. Like any database, Postgres must be tuned for any serious use. The official documentation at http://www.postgresql.org/docs/ has lots of great information on performance tuning.

One disadvantage of using PostgreSQL is that it has a smaller community around it. There are more developers, especially in the Rails world, workingwith MySQL. There are more tested solutions built around MySQL than PostgreSQL. The company behind MySQL, MySQL AB, provides commercial support for its product. There is no such centralized support structure for Postgres, as there is no single company behind PostgreSQL; however, there are several companies that specialize in Postgres consulting and provide support contracts.

MySQL

The MySQL DBMS is controversial. Some hold it to be a toy, while others consider it to be a good foundation for web applications. Nevertheless, MySQL is the dominant DBMS in use for Rails web applications today, and it has improved greatly between versions 3 and 5.

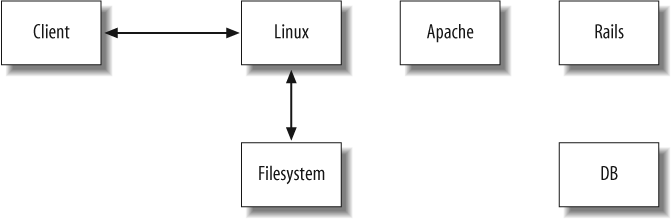

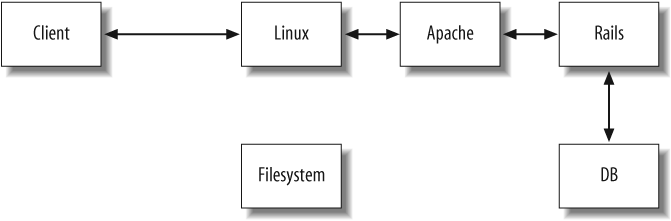

Part of the Rails scalability mantra is “shared nothing”: each application server should be able to stand on its own. Thus, you can throw five of them behind a load balancer and it doesn’t matter if a user is served by different servers throughout the course of a session. However, the bottleneck is the database. A big assumption of this shared-nothing architecture is that the application servers all share a database. If you use a database that doesn’t have great support for concurrency, you will have problems.

Old versions of MySQL had some fairly serious issues, many revolving around the issue of data integrity and constraints. The problem was not so much that the issues existed as that MySQL’s developers seemed to have an attitude of “you aren’t going to need it.” Even transactions are not supported with the default storage engine (MyISAM) to this day. In versions prior to 5.0, there were many bugs that would silently discard incorrect data rather than raising an error. To be fair, new versions of MySQL are addressing a lot of its issues. I would still recommend PostgreSQL as a general rule where speed is not the primary criterion, since it has had enterprise-level features for much longer. If you use MySQL, take these recommendations:

Use version 5.0 or later. Many of the issues that existed with previous versions have been fixed or improved in 5.0 and newer versions.

Use InnoDB for absolutely anything where data integrity or concurrency matter. MyISAM, the default engine on most MySQL installations, does not support features that most RDBMSs consider essential: foreign key constraints, row-level locking, and transactions. In most business environments, these features are non-negotiable. InnoDB is a journaled storage engine that is much more resilient to failures. Rails does the right thing here and defaults to the InnoDB storage engine when creating tables.

Unfortunately, InnoDB can be much slower than MyISAM, and the table sizes are usually several times larger. MyISAM is usually faster when reads vastly out-number writes or vice versa, while InnoDB is generally faster when reads and writes are balanced. It all comes down to the requirements of the specific application; these are general rules. You should always benchmark with your real data, and an accurate sample of queries and statements you will be issuing, in a realistic environment.

There are a few exceptions to this guideline: MyISAM may be a better choice if you need full-text indexing(which is only supported on MyISAM tables at this time). In addition, if raw speed of reads or writes is the primary concern, MyISAM can help. For example, a logging server for web analytics might use MyISAM tables: you want to be able to dump logs into it as fast as possible, and reads are performed far less often than writes.

Set the SQL mode to

TRADITIONAL. This can be accomplished with the following command:SET GLOBAL sql_mode='TRADITIONAL';

This will make MySQL a little bit more strict, raising errors on incorrect data rather than silently discarding it.

MySQL does have some clear advantages over PostgreSQL in some situations. On the whole, MySQL tends to be faster. For many web applications, query speed may be the most important factor. MySQL also has more stable, tested replication and clustering options available. MySQL is also somewhat better at handling binary data stored in the database (we discuss this at length later in the chapter). For many web applications, MySQL may be a clear win.

SQLite

SQLite is a minimalist database that is excellent for small projects. Although it does not support many fancy features, it is a great choice for projects that will not grow very large. It supports ACID transactions [33] out of the box. SQLite is a library that is linked into your program; there is no server process to speak of. The library code residing in your application’s process space accesses a database file.

SQLite provides no concurrency, as there is no server process to enforce the ACID properties. Therefore, it uses file-level locking: the entire database file is locked at the filesystem level during a transaction. Still, for many small applications, it fits the bill perfectly. It is a good replacement for data that may have been stored in flat files, as it supports most of the SQL-92 standard and would be easy to migrate to a more traditional DBMS as needs grow.

Microsoft SQL Server

Though Rails grew up in the Linux/Unix world, it has developed great community support for the Windows platform as well. Not only are Microsoft SQL Server database connections supported in Rails, there are also provisions for connecting to SQL Server from a Linux-based systems as well, using the FreeTDS library. Instructions are at http://wiki.rubyonrails.org/rails/pages/HowtoConnectToMicrosoftSQLServerFromRailsOnLinux; FreeTDS is available from http://www.freetds.org/.

From a Windows client, the standard approach is to use Ruby-DBI (a Ruby database-independent adapter) with ADO. The configuration looks like this:

development: adapter: sqlserver host: server_name database: my_db username: user password: pass

Your configuration may vary, depending on the version of SQL Server and the ADO libraries you have installed. Once the database configuration is in place, the standard ActiveRecord API methods can be used to manipulate data.

Oracle

Rails supports Oracle versions 8i, 9i, and 10g through the ruby-oci8 library, http://rubyforge.org/projects/ruby-oci8/ which supports the OCI8 API. Windows, Linux, and OS X are supported as clients. The connection configuration is fairly standard, using oci as the connection adapter name.

However, the Oracle client library still maps net service names

to connection specifications, so the host parameter provides a service name

rather than a physical hostname:

development: adapter: oci host: ORCL username: user password: pass

The ORCL in the preceding

configuration corresponds to an entry in the TNSNAMES.

ORA file, which will look something like this:

ORCL = (DESCRIPTION = (ADDRESS_LIST = (ADDRESS = (PROTOCOL = TCP)(HOST = srv)(PORT = 1521)) ) ... )

Alternatively, you can provide the connection specification on one line with the Rails database configuration:

development: dapter: oci host: (DESCRIPTION = (ADDRESS_LIST = (...))) username: user password: pass

The connection setup is the hardest part. Once the database is

connected, Rails supports Oracle connections just as it does

connections to any other DBMS. Stored procedures and other

Oracle-specific syntax are available through the standard methods that

expose an SQL interface, such as ActiveRecord::Base.find_by_sql.

Large/Binary Objects

Sooner or later, many web applications must deal with the issue of LOB (large object) data. LOB data may be small, but it is usually large compared to other attributes being stored (tens of kilobytes to hundreds of gigabytes or larger). The defining characteristic of LOB data, however, is that the application has no knowledge of the semantics of the internal structure of the data.

The canonical example is image data; a web application usually has no need to know the data in a JPEG file representing a user’s avatar as long as it can send it to the client, replace it, and delete it when needed.

LOB storage is usually divided into CLOB (character large object) for text data and BLOB (binary large object) for everything else. Some DBMSs separate the two as separate data types. CLOB types can often be indexed, collated, and searched; BLOBs cannot.

Database Storage

The DBA types among us might prefer database storage of large objects. From a theoretical standpoint, storing binary data in the database is the most clean and straight-forward solution. It offers some immediate advantages:

All of your application data is in the same place: the database. There is only one interface to the data, and one program is responsible for managing the data in all its forms.

You have greater flexibility with access control, which really helps when working with large-scale projects. DBMS permitting, different permissions may be assigned to different tables within the same database.

The binary data is not tied to a physical file path; when using filesystem storage, you must update the file paths in the referring database if you move the storage location.

There are many practical considerations, though, depending on your DBMS’s implementation of large objects.

PostgreSQL

PostgreSQL has some downright weird support for binary data. There are two ways to store binary data in a PostgreSQL database: the BYTEA data type and large objects.

The BYTEA [34] type is the closest thing PostgreSQL has to a BLOB

type—just a sequence of bytes—but it is really terrible for large

amounts of binary data. The protocol for shuttling BYTEA types back

and forth from the database requires escaping all non-printable

bytes, so a single null byte would be encoded as the ASCII string

\000 (4 bytes). Needless to say, this causes unnecessary expansion

of the data. In addition, it is impossible to stream data from the

database to the web browser without running it through an un escape

filter. Pulling a 2 MB binary file from the data-base usually means

streaming somewhere around 6 MB of data through the un escape

code.[35] The naïve method runs all of the data through Ruby

strings, where it balloons tremendously in memory. A better option

would be to have the postgres C

library handle quoting and unquoting, but this is a lot of work and

still suboptimal. Up to 1 GB of data can be stored in a BYTEA

column.

The other option is large objects. The large object features

in PostgreSQL work well enough, but they are also a little bit

clunky. Files are kept in the pg_largeobject system catalog in small

pages.[36] A pointer is kept in the referring table to the OID

(object ID) of the file. Up to 2 GB of data may be stored in a large

object. This method is fast, and has good APIs, but there are

drawbacks. There is no per-table or per-object access control; the

pg_largeobject catalog is global

to the database, and accessible by anyone with permission to connect

to the database. The large object mechanism is also slightly

deprecated in favor of in-table storage, as the TOAST storage

technique allows values of up to 1 GB in length to be stored

directly as attributes within the table.

My recommendation is to use filesystem storage for all binary objects if you use PostgreSQL. Although the database might be the more proper place for this type of data, it just does not work well enough yet. If you have to use the database, large objects actually perform pretty well. Avoid BYTEA at all costs.

MySQL

MySQL does a fairly good job with binary data. LOB-type columns (including the TEXT types) can store up to 4 GB of data, using the LONGBLOB type. Actual storage and performance depend on the wire protocol being used, buffer size, and available memory. Storage is efficient, using up to 4 bytes to store the data length, followed by the binary data itself. However, MySQL suffers from issues similar to PostgreSQL with streaming data, and it is always more awkward for a web application to stream data from the database than from the filesystem.

Oracle

Oracle supports the BLOB data type, for objects up to 4 GB. It is supported by a fairly mature API, and can be used directly from Rails.

Oracle also provides the BFILE type, which is a pointer to a binary file on disk. Consider it a formalization of the filesystem storage method discussed below. This may prove to be of value in some situations.

Filesystem Storage

The reality is that filesystem storage is the best option, as a

general rule. Filesystems are optimized to handle large amounts of

binary and/or character data, and they are fast at it. The Linux

kernel has syscalls such as sendfile() that work on physical files.

There are hundreds of third-party utilities that you can only leverage

when using physical files:

Image processing is arguably the most popular application for storing binary data. Programs like ImageMagick are much easier to use in their command-line form, operating on files, rather than getting often-problematic libraries like RMagick to work with Ruby.

Physical files can be shared with NFS or AFS, put on a MogileFS host, or otherwise clustered. Achieving high availability or load balancing with database large objects can be tricky.

Any other utility that works on files will have to be integrated or otherwise modified to work from a database.

Sending Data with X-Sendfile

Often you will need to send a file to the client for download

after doing some processing in Rails. The most common example is an

access-controlled file—you need to verify that the logged-in user has

the appropriate level of access before sending the file, for example.

The easy way to do this is with the send_file or send_data API calls, which stream data from

the server to the client:

class DataController < ApplicationController before_filter :check_authenticated def private_document file = File.find params[:id] send_file file.path if file end end

This method is easy, but it is slow if you are sending static files. Rails reads the file and streams it byte-by-byte to the client. The X-Sendfile protocol makes this easy and fast, by allowing Rails to do its processing but then offloading the “heavy lifting” to the web server (which may offload that processing to the operating system kernel, as described previously).

The X-Sendfile protocol is a very simple standard, first

introduced in the Lighttpd web server, which directs the web server to

send a file from the filesystem to the client rather than a response

generated by the application server. Because the web server is

optimized for throwing files at the client, this usually yields a

decent speed improvement over reading the file into memory and sending

it from Rails with the send_file or

send_data API calls.

Because the web server requires access to the file in order to send it to the client, you must use filesystem large object storage. In addition, the files to be sent must have permissions set so as to be accessible to the web server. However, the files should be outside of the web root, lest someone guess a filename and have free access to your private files.

X-Sendfile uses the X-Sendfile HTTP header pointing to the

server’s path to the file to send, in conjunction with the other

standard HTTP headers. A typical response using X-Sendfile would look

something like this:

X-Sendfile: /home/rails/sample_application/private/secret_codes_69843.zip Content-Type: application/octet-stream Content-Disposition: attachment; file="secret_codes.zip" Content-Length: 654685

Assuming the web server is properly configured, it will ignore any response body and stream the file from disk to the client.

From Rails, you can set the response.headers by modifying the response.headers hash:

response.headers['X-Sendfile'] = file_path response.headers['Content-Type'] = 'application/octet-stream' response.headers['Content-Disposition'] = "attachment; file=\"#{file_name}\"" response.headers['Content-Length'] = File.size(file_path)

Web server configuration

Of course, the front end web server must be properly

configured to recognize and process the X-Sendfile header. Mongrel does not

support X-Sendfile, as it assumes you will proxy to it from a server

more capable of serving static content.

If you are using Lighttpd, it has X-Sendfile support built in.

For Lighttpd/FastCGI, just enable the allow-x-send-file option in the server

configuration:

fastcgi.server = ( ".fcgi" => ( "localhost" => ( ... "allow-x-send-file" => "enable", ... ) ) )

If you are using Apache 2, things are a little more

complicated (although not by much). You have to install the mod_xsendfile module[37] into Apache. There are two configuration flags, both

accepting on/off values, which can then be used to control

X-Sendfile behavior:

XSendFileDetermines whether the

X-Sendfileheader is processed at all.XsendFileAllowAboveDetermines whether that header can send files above the path of the request. It defaults to

offfor security reasons.

Both of these configuration options can be used in any

configuration context, down to the

.htaccess file (per-directory). Best

practice dictates that you should only specify XSendFile on in the narrowest possible

context. HavingX-Sendfile unnecessarily enabled is a security risk,

as it allows a server application to send any file that the web

server can access to the client.

To my knowledge, there is no way to use X-Sendfile on Apache 1.3 at this time.

Serving Static Files

One advantage of filesystem storage is that as long as the file data doesn’t need to be protected with access control or otherwise acted upon dynamically, you can leverage your static web servers to serve that data. By exporting the storage path via NFS (or a caching filesystem such as AFS to conserve bandwidth), you can share the application’s files with the static servers on your content distribution network. This completely removes the load from the application servers and provides a more scalable solution.

Managing Uploads in Rails

Most applications that use large objects must deal with uploads. This can be tricky in any framework, but Rails handles most of the details and there are some best practices to guide you with the rest.

Attachment plugins

One of the easiest ways to handle Rails uploads is to use one of the popular plugins for upload processing. The standard plugin used to be Rick Olson’s acts_as_attachment (http://svn.techno-weenie.net/projects/plugins/acts_as_attachment/). Many Rails developers are familiar with its interface, and for quite a while it was the standard way to handle uploaded data. However, there were a few factors that made it unsuitable for many applications:

It is tied to RMagick (and therefore ImageMagick) for image processing. ImageMagick is notoriously difficult to install, primarily because it depends on many backend libraries for processing different image formats. At the time acts_as_attachment was written, ImageMagick was the best option. Now, however, there is a lighter alternative, ImageScience, based on the FreeImage library.

The entire attachment data must be read into memory and converted to a Ruby string. For large files, this is expensive—Rails passes the application a

TempFile, which is slurped into aString. If using filesystem storage, the stringis then written back out into a file!There is no support for alternative storage methods such as Amazon’s S3.

Luckily, there is an alternative. Rick has rewritten acts_as_attachment to resolve these issues. The rewrite is called attachment_fu, and it is publicly available at http:// svn.techno-weenie.net/projects/plugins/attachment_fu/.

The attachment_fu library supports all of acts_as_attachment’s options and more. It can use RMagick as a processor, but it also supports MiniMagick (a lightweight alternative to RMagick that still wraps ImageMagick) and ImageScience. It can store attachments in a database, the filesystem, or S3 out of the box. It also has great facilities for expansion; it is easy to write your own processor or storage backend. A typical use of attachment_fu looks like this:

class UserAvatar < ActiveRecord::Base belongs_to :user has_attachment :content_type => :image, :max_size => 100.kilobytes, :storage => :file_system, :resize_to => [100, 100] end

Attachment_fu is almost completely backward-compatible with

acts_as_attachment. Simply change the acts_as_attachment method call to has_attachment. Of course, complete API

documentation is provided with the plugin as RDoc.

Rolling your own

The attachment plugins are powerful, but they cannot do everything. If you do decide to do your own upload processing, here are some things to take into account:

You must validate the uploaded data. What constitutes a valid file upload? Are there restrictions on the size of the uploaded data (minimum or maximum size)? Must the uploaded file have a certain MIME type or extension?

Rails can hand you any of several different types of objects, depending on what was uploaded and its size. James Edward Gray II has an article* on how to correctly and efficiently handle all cases.

Ensure that files can be cloned properly when the associated record is cloned. (In the case of filesystem storage, this should just be a

FileUtils.cpcall.)Make sure that you delete the file from storage when the record is deleted. This can be done with an

after_destroycallback on the model. In the case of data-base storage, you may find it more efficient to use a trigger or rule.

Upload progress

One feature that many applications require is upload progress notification: showing the user a progress bar that indicates how much of the file has been uploaded. This is surprisingly hard and server-dependent, but there are tools to make it easier. For simplicity, we will restrict discussion in this section to the Mongrel application server.

Mongrel serializes Rails requests; at any given time, a single Mongrel process can only execute one Rails request. This is required because ActionController is not thread-safe. But upload progress requires two simultaneous requests: the upload itself as well as AJAX requests to check its progress. How do we reconcile this?

The answer is that Mongrel is very conservative about what it locks; it only serializes requests while they are actually executing controller code. While the file is being transferred, Mongrel buffers it into memory, but during that time it allows other requests to complete. When the file transfer completes, Mongrel processes that Rails request all at once, only locking during the time the Rails code executes.

The mongrel_upload_progress

gem hooks into Mongrel to provide a shared variable that the

multiple requests can use to communicate about the status of file

uploads. This variable is accessible to the Rails handler as

Mongrel::Uploads. A simple Rails

action (called via AJAX) calls Mongrel::Uploads.check(upload_id) to check

the status and update the client.

Though all of this complication makes it possible to use just one Mongrel process, most moderately trafficked applications will require multiple Mongrels. All actual Rails requests are still serialized, so the number of requests being processed in Rails concurrently is limited to the number of Mongrel processes. However, the shared-memory solution used previously does not work with more than one Mongrel—each Mongrel is a separate process and they have no shared memory.

The solution is to use DRb (Distributed Ruby). A background process is started as a shared repository for upload status. Each upload handler notifies the background process of its status via DRb as it receives blocks from the file. The Rails handlers can then query the common backend for the status of any file, regardless of which Mongrel handled the original upload or status request.

The upload progress gem can be installed with gemin stall mongrel_upload_progress.A

sample Rails application illustrating how to use the gem is located

at http://svn.techno-weenie.net/projects/mongrel_upload_progress/.

The official Mongrel upload progress documentation is available at

http://mongrel.rubyforge.org/docs/upload_progress.html.

Advanced Database Features

Among Rails programmers, advanced database features are often a point of contention. Some contend that constraints, triggers, and procedures are essential; some shun them completely, saying that intelligence belongs in the application only. I am sympathetic to the argument that all business logic belongs in the application; it is nearly impossible to make agile changes to changing requirements when logic is split between two locations. Still, I believe that constraints, triggers, and even stored procedures have their place in enterprise applications. In order to explain why, we’ll have to examine a distinction that comes up often in relation to this debate: the difference between application and integration databases.

Application Versus Integration Databases

Martin Fowler differentiates between application databases and integration data-bases.[38] The basic distinction is that an integration database is shared among many applications, while an application database “belongs” to the one application using it.

In this sense, “application” can mean one program or multiple programs within an application boundary (the same logical application). Usually this distinction refers to how the schema is organized; in Rails, integration databases are often referred to as databases with “legacy schemas.” In application databases, integration can still be performed through messaging at the application layer rather than the database layer.

Rails is opinionated about how your database schemas should be

structured: the primary key should be id, foreign keys should be

thing_id, and table names should be

plural. This is not database bigotry; Rails has to choose a sensible

default for the “convention over configuration” paradigm to be

effective. It is relatively painless to change almost any of these

defaults. Rails plays nice with integration databases.

Many Rails developers shun integration databases as unnecessary; they maintain that all integration should be done at the application layer. Some take that a step further and state that data integrity checking belongs in the application only, to keep all business logic in the same place. Although this might be ideal, the real world is not always that nice. Even if all integration can be done at the application level, there are still plenty of valid reasons to use database constraints.

In addition, most databases in the enterprise tend to become

integration databases over time. Databases that are useful for one

purpose are often appropriated for another use. Sometimes you have a

database that isn’t under your control, and you want to use the data

without performing a full ETL (extract, transform, load). Even running

a small script against your database without using the ActiveRecord

model, or maintaining the database manually through a console client

such as mysql or psql, means you have something accessing

your database outside of your domain model. If the validations in the

domain are the only way to ensure that the data is consistent, this

may lead to problems.

Constraints

Database-level constraints provide a way to explicitly specify an application’s implicit assumptions about its data. There are two types of constraints, which should not be confused:

Business logic“A manager may not manage more than five employees.” The key characteristic of business logic constraints is that they could conceivably change throughout the lifetime of the database. Business logic constraints should never be in the database, barring a very good reason to the contrary.

Integrity“U.S. Social Security numbers, when provided, must contain exactly nine digits.” Integrity constraints define the nature of the data being represented. Admittedly, “the nature of the data” is a somewhat nebulous concept; the meaning will differ between databases. Integrity constraints must reside in the database, if for no other reason than to provide a last-level sanity check on the data.

As with any other area of data modeling, there are gray areas. An example would be “an employee’s salary must be positive,” which could conceivably go either way.[39] The advantage of constraints is that they narrow the domain of possible results the database can generate. When you know the DBMS for an online store can never out-put a negative price for a product, you can sum the prices for the line items belonging to an order without worrying about invalid prices. Though the line is drawn in different places for different applications, the basic principle is this: the database should not enforce business logic, but it should enforce consistency and integrity.

Regardless of differences of opinion on check constraints, one type of constraint is non-negotiable: foreign-key constraints. If a foreign-key relationship is required, an unassociated record is semantically meaningless and must not be allowed to happen. It only makes practical sense to formalize that association.

The only truly robust way to ensure that a database maintains integrity over years as it accumulates data (as databases tend to do) is to declare appropriate constraints on the data. Unless you can say for certain that every application or person accessing the data-base will do so through the domain model (going through all associated validations) every time, the only sensible option is to treat the database as an integration database.

There is a bonus to providing constraints: typically, the more constraints provided on a database, the better job the query optimizer can do at creating a query plan.

A common complaint about database constraints is that they require you to specify semantic information in two places: your database and your application code (you usually want to trap invalid data in your application’s validations before attempting to insert it into your database, even if the database would catch the error anyway). The DrySQL library [40] goes a long way toward removing this duplication. It infers the schema relationships and validation rules from the database’s types and constraints, so they don’t have to be specified in the application. DrySQL works with all of the major DBMSs: PostgreSQL 8 and up, MySQL 5 and up, SQL Server, Oracle, and DB2.

With DrySQL installed, you can simply require the library in the environment configuration file:

require 'drysql'

Then, all that is needed is to inform ActiveRecord of the mapping between tables and model classes (even that is not necessary if the tables are named according to the defaults):

class Client set_table_name "customers" end

If the table had been named clients, you would not even need the

set_table_name call. The

relationships and constraints will be inferred from the customers table’s constraints.

Composite Keys

Composite keys, primary keys made up of two or more attributes, are best avoided. Not only are they harder to manage than simple primary keys, they are usually more fragile. The motivation for using composite keys is usually based in some inherently unique aspect of the data, which means the composite key will be meaningful (tied to the data) rather than meaningless (tied to the database only). It is usually much more resilient to assign a meaningless primary key used only within the database. That way, data integrity is internal to the database rather than being tied to an external system or process.

As an example, consider a database that tracks U.S. members by

their driver’s license numbers. A candidate key would be

{Issuing state, License number}. One

immediate advantage of a meaningless key is that integer values are

easier to represent than lists; it is easier to refer to a record as

12345 than as [IL,1234]. This makes foreign keys much

simpler, and it simplifies web services and other protocols used for

interoperability.

But the most basic problem is that a primary key is usually treated as a unique, stable identifier for a record. A composite key may not actually be unique in practice and may even change. If you were to use the preceding composite key, you should be prepared to answer questions like:

What happens when a member moves or has a new license number issued?

What happens if some inherent characteristic of the key changes? For example, how would you handle it if license numbers were always 9 digits and changed to 10? This is a problem in general with keying off of meaningful data.

Are you prepared to have every record with a duplicate or missing key rejected? Or might it be desirable to have the system hold invalid data for a time until it is corrected?

There are some valid situations for using composite keys, though. A good example is in multimaster replication. One big problem in asynchronous multimaster replication is synchronizing primary key sequences. If you insert two records at roughly the same time to two master servers, there must be some mechanism to ensure that the two servers issue different values for the primary keys on each record, lest problems ensue when the records are replicated.

The composite-key solution to the problem of multimaster

sequences is to issue each server an ID and use that as part of the

key; then each server can maintain its own sequence independently of

the others. The two records could have primary keys of

{ServerA, 5} and {ServerB,

5} and there would be no conflict. Note that this is a

legitimate use of composite keys, since the keys are meaningless

(relative to the data being stored in attributes).

For situations such as this, Dr Nic Williams has made composite keys work with ActiveRecord. The composite_primary_keys gem is available at

http://compositekeys. rubyforge.org/.

As an example, consider the multimaster sequence problem

discussed previously. We have an Order model that is replicated between two

servers using multimaster replication. We must use a composite key to

ensure unique primary keys regardless of which server an order is

created on. First, we install the gem:

gem install composite_primary_keys

Then, we have to require this library in our application. From

Rails, we can include this statement at the end of our environment.rb:

require 'composite_primary_keys'

The next step is to call the set_primary_keys(*keys) method to inform

ActiveRecord that we will be using composite keys:

class Order < ActiveRecord::Base set_primary_keys :node_id, :order_id end

After setting up the composite key, most ActiveRecord operations take place as usual, with the exception that primary keys are now represented by an array rather than an integer.

Order.primary_key # => [:node_id, :order_id]

Order.primary_key.to_s # => "node_id,order_id"

Order.find 1, 5 # => #<Order:0x1234567 @attributes={"node_id"=>"1",

"order_id"=>"5"}>Even associations work normally; you only have to specify the

foreign key explicitly on both sides of the association. To

demonstrate this, we can add a LineIte model that belongs to a

corresponding Order.

class Order < ActiveRecord::Base set_primary_keys :node_id, :order_id has_many :line_items, :foreign_key => [:order_node_id, :order_id] end class LineItem < ActiveRecord::Base set_primary_keys :node_id, :line_item_id belongs_to :order, :foreign_key => [:order_node_id, :order_id] end

Note that as in regular associations, the foreign keys are the

same on both sides of the association, as there is only one foreign

key that defines the relationship (even though, in this case, the

foreign key is composed of two attributes). This can be confusing if

you don’t consider the way the relationship is represented in the

schema, because the foreign_key

option defined in Order's has_many

:line_items statement actually refers to attributes of

LineItem.

As a final touch, we can set things up so that we don’t have to worry about the keys at all in code. Remember that the original reason for using composite keys was to allow us to use independent sequences on each database server. First, we create those sequences in SQL when creating the tables. The way we set this up is DBMS-specific; the PostgreSQL syntax would be:

CREATE SEQUENCE orders_order_id_seq;

CREATE TABLE orders(

node_id integer not null,

order_id integer not null default nextval('orders_order_id_seq'),

(other attributes)

PRIMARY KEY (node_id, order_id)

);

CREATE SEQUENCE line_items_line_item_id_seq;

CREATE TABLE line_items(

node_id integer not null,

line_item_id integer not null default nextval('line_items_line_item_id_seq'),

-- FK to orders

order_node_id integer not null,

order_id integer not null,

(other attributes)

PRIMARY KEY (node_id, line_item_id)

);When we execute this DDL on all database nodes and enable

replication between them, each node has its own sequence independent

of the others. Now we just have to make sure that each node uses its

own node ID. We could either do this in the database with column

defaults (if we can use different DDL for each node) or in the

application with a before_create

callback (if each application accesses only one node).

Triggers, Rules, and Stored Procedures

Now we’re in dangerous territory. Let it be known that you should probably have a good reason to use triggers, rules, or stored procedures for anything terribly complicated. That is not to say that they have no purpose; they can be lifesavers. But they should be used to address a specific problem or concern, such as the following:

A complicated process that involves searching through lots of data (such as OLAP or log analysis) can be much faster if offloaded to the database server. As always, profiling is key; premature optimization can cost you execution speed, not just developer time.

Concerns that have little to do with the application logic, such as audit logs, can usually be safely moved to the database as triggers.

PostgreSQL can use rules to create updateable views. Unfortunately, this is currently the only way to get updateable views.

When using Postgres large objects, you should use a trigger to delete the large object when the corresponding record (containing the LOB’s OID) is deleted. Consider this a form of referential integrity.

Extended or non-native types will use stored procedures for access. PostGIS, a geospatial database for Postgres, uses functions to manage spatial data and indexes.

The TSearch2 library, integrated into PostgreSQL 8.3 and later, uses functions to access full-text indexing functions.

Some applications use stored procedures for all data access, in order to enforce access control. This is definitely not the Rails way. Although it can be made to work, it will be more difficult than directly accessing tables and views. Views provide sufficient access control for most enterprise applications; only use stored procedures if you have to. ActiveRecord can transparently use updateable views as if they were concrete tables.

Examples

Large object deletion

Since PostgreSQL’s large objects are decoupled from their associated record, it is useful to set up a simple rule to delete them when the corresponding record is deleted. The rule can be implemented as follows:

-- (table name is 'attachments'; LOB OID is 'file_oid') CREATE RULE propagate_deletes_to_lob AS ON DELETE TO attachments DO ALSO SELECT lo_unlink(OLD.file_oid) AS lo_unlink

Data partitioning

PostgreSQL has a very powerful rule system that can rewrite incoming queries in many ways. One use for this rule system is to implement partitioning, where data from one table is federated into one of several tables depending on some condition. Consider a database of real estate listings. For historical purposes, we may want to keep listings that have expired, been sold, or been removed from the system. However, most of the data being used on a day-to-day basis is derived from listings that are current and for sale.

In addition, the datasets of “current listings” and “all listings” will have differing data needs; the former is likely to be used transactionally while the latter is probably used analytically. It makes sense to store these separately, as they may have different characteristics.

First, we assume that we already have listing data in a table

called listings, and it has a

status column representing the

status of the listing. We create the two tables, current_listings and non_current_listings,

which inherit from the main table. This way, we can say SELECT*FROM listings and Postgres will

include the data from the two inherited tables automatically.

CREATE TABLE current_listings (CHECK (status = 'C')) INHERITS (listings); CREATE TABLE non_current_listings (CHECK (status != 'C')) INHERITS (listings);

Next, we create rules that rewrite inserts on the parent table to inserts on the proper child:

CREATE RULE listings_insert_current AS ON INSERT TO listings WHERE (status = 'C') DO INSTEAD INSERT INTO current_listings VALUES(NEW.*); CREATE RULE listings_insert_non_current AS ON INSERT TO listings WHERE (status != 'C') DO INSTEAD INSERT INTO non_current_listings VALUES(NEW.*);

Now that the rules are set up, we move the existing data in listings to the proper subtable:

INSERT INTO current_listings SELECT * FROM listings WHERE STATUS = 'C'; INSERT INTO non_current_listings SELECT * FROM listings WHERE STATUS != 'C'; DELETE FROM listings;

We know that the DELETE

statement is safe because no new data has been inserted into the

listings table, thanks to the

rewrite rules. This is why it is important that the partition

conditions are a proper partitioning such as status = 'C' and status != 'C' (non-overlapping and

completely covering all possibilities). This ensures that every row

is inserted into one of the child tables, not the parent. Note that

this would not be a proper partitioning if the status column allowed NULL values, as both conditions would be

false.

Now we can insert and select data against listings as if it were one table, while

PostgreSQL transparently handles the partitioning and works with the

proper partition. This is a very simple example. In particular, we

need to implement rules for UPDATE and DELETE queries before usingthis scheme.

This method can easily be extended to many partitions, even on

complicated conditions.

Connecting to Multiple Databases

Occasionally, you will have the need to connect to several different databases from one application. This is useful for migrating from an old schema to a new one. It is also helpful if you have differing data requirements within one application; perhaps some data is more critical and is stored on a high-availability database cluster. In any case, it is easy in Rails. First, specify multiple database environments in the database.yml configuration file:

legacy: adapter: mysql database: my_db username: user password: pass host: legacy_host new: adapter: mysql database: my_db username: user password: pass host: new_host

Then, you can simply refer to these configuration blocks from the

ActiveRecord class definition using the ActiveRecord::Base.establish_connection

method:

class LegacyClient < ActiveRecord::Base

establish_connection "legacy"

end

class Client < ActiveRecord::Base

establish_connection "new"

endThis approach also works with multiple Rails environments. Just specify each environment in the database.yml file as usual:

legacy_development: # ... legacy_test: # ... legacy_production: # ... new_development: # ... new_test: # ... new_production: # ...

Then, use the RAILS_ENV

constant in the database configuration block name:

class LegacyClient < ActiveRecord::Base

establish_connection "legacy_#{RAILS_ENV}"

end

class Client < ActiveRecord::Base

establish_connection "new_#{RAILS_ENV}"

endYou can go one step further and DRY this code up by using class

inheritance to define which database an ActiveRecord class belongs to:

class LegacyDb < ActiveRecord::Base

self.abstract_class = true

establish_connection "legacy_#{RAILS_ENV}"

end

class NewDb < ActiveRecord::Base

self.abstract_class = true

establish_connection "new_#{RAILS_ENV}"

end

class LegacyClient < LegacyDb

end

class Client < NewDb

endThe self.abstract_class = true

statements tell ActiveRecord that the LegacyDb and NewDb classes cannot be instantiated

themselves; since they represent database connections, they are not

backed by concrete tables in the database.

Magic Multi-Connections

Dr Nic Williams’s Magic Multi-Connections gem (http://magicmodels.rubyforge.org/magic_multi_connections/)

allows you to connect to different databases concurrently from the same application. This

is very useful when using one master and several read-only slaves

serving the same models. The syntax is transparent; it uses module

namespaces and imports the models (ActiveRecord::Base subclasses) into the

namespaces.

For a single-master situation, you could define another database connection in database.yml for the read slave:

read_slave: adapter: postgresql database: read_only_production username: user password: pass host: read_slave_host

This database is backed by a module, which mirrors the ActiveRecord classes using this database connection:

require 'magic_multi_connections'

module ReadSlave

establish_connection :read_slave

endNow, all pre-existing models can be accessed through the

read_slave connection by prefixing

the model class with ReadSlave::.

# use the read-only connection @user = ReadSlave::User.find(params[:id]) # write to the master (can't use @user.update_attributes because it would# try to write to the read slave) User.update(@user.id, :login => "new_login")

Caching

If you have far more reads than writes, model caching may help lighten the load on the database server. The standard in-memory cache these days is memcached.[41] Developed for LiveJournal, memcached is a distributed cache that functions as a giant hashtable. Because of its simplicity, it is scalable and fast. It is designed never to block, so there is no risk of deadlock. There are four simple operations on the cache, each completing in constant time.

You can actually use memcached in several different places in Rails. It is available as a session store or a fragment cache store out of the box, assuming the ruby-memcache gem is installed. It can also be used to store complete models—but remember that this will only be effective for applications where reads vastly outnumber writes. There are two libraries that cover model caching: cached_model and acts_as_cached.

The cached_model library (http://dev.robotcoop.com/Libraries/cached_model/index. html)

provides an abstract subclass of ActiveRecord::Base, CachedModel. It attempts

to be as transparent as possible, just caching the simple queries

against single objects and not trying to do anything fancy. It does have

the disadvantage that all cached models must inherit from CachedModel. Use of cached_model is dead

simple:

class Client < CachedModel end

On the other hand, the acts_as_cached plugin (http://errtheblog.com/post/27) gives you more specificity over what is cached. It feels more like programming against memcached’s API, but there is more power and less verbosity. It has support for relationships between objects, and it can even version each key to invalidate old keys during a schema change. A sample instance of acts_as_cached might look like this:

class Client < ActiveRecord::Base acts_as_cached # We have to expire the cache ourselves upon significant changes after_save :expire_me after_destroy :expire_me protected def expire_me expire_cache(id) end end

Of course, the proper solution for you will depend on the specific needs of the application. Keep in mind that any caching is primarily about optimization, and the old warnings against premature optimization always apply. Optimization should always be targeted at a specific, measured performance problem. Without specificity, you don’t know what metric you are (or should be) measuring. Without measurement, you don’t know when or by how much you’ve improved it.

Load Balancing and High Availability

Many applications require some form of load balancing and/or high availability. Though these terms are often used together and they can often be obtained by the same methods, they are fundamentally two different requirements. We define them thus:

These are completely different things, but they are often required and/or provided together. It is important to understand the difference between them in order to properly analyze the requirements of an application. It is possible to provide load balancing without high availability—for example, consider a group of servers presented to the Internet via round-robin DNS. The load is distributed roughly equally over the group of servers, but the system is certainly not highly available! If one server goes down, DNS will still faithfully distribute requests to it, and every one in N requests will go unanswered.

Conversely, high availability can be provided without load balancing. High availability necessitates the use of redundant components, but nothing says that those components must be online and in use. A common configuration is the hot spare: a duplicate server that stays powered up but offline, continually monitoring its online twin, ready to take over if necessary. This can actually be more economical than trying to balance requests between the two servers and keep them in sync.

In this section, we review the primary load balancing and high availability solutions for common database management systems.

MySQL

Replication

MySQL has built-in support for master-slave replication. The master logs all transactions to a binlog (binary log). During replication, the binlog is replayed on the slaves, which apply the transactions to themselves. The slaves can use different storage engines, which makes this facility useful for ancillary purposes such as backup or full-text indexing. Master-slave replication works well for load balancing in applications where reads outnumber writes, since all writes must be applied to the master.

However, master-slave replication as described does not provide high availability; there is a single master that is a single point of failure. A slave can be promoted to be the master during failover, but the commands to do this must be executed manually by a custom monitoring script. There is currently no facility for automatically promoting a slave. Additionally, all clients must be able to determine which member is currently the master. The MySQL documentation suggests setting up a dynamic DNS entry pointing to the current master; however, this will introduce another potential failure point.

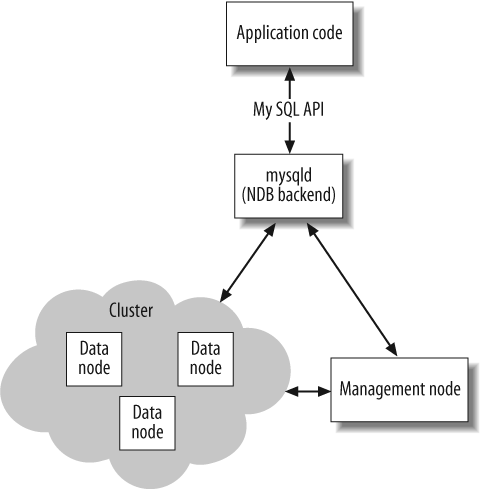

MySQL cluster

The primary high-availability solution for MySQL is the MySQL Cluster technology, available since version 4.1. Cluster is primarily an in-memory database, though as of version 5, disk storage is supported. The Cluster product is based on the NDB storage engine, backed by data nodes.

MySQL Cluster is designed for localized clusters; distributed clusters are not supported as the protocol used between nodes is not encrypted or optimized for band-width usage. The interconnect can use Ethernet (100 Mbps or greater) or SCI (Scalable Coherent Interconnect, a high-speed cluster interconnect protocol). It is most effective for clusters with medium to large datasets; the recommended configuration is 1–8 nodes with 16 GB of RAM each.

Because the majority of the data is stored in memory, the cluster must have enough memory to store as many redundant copies of the full working set as the application dictates. This number is called the replication factor. With a replication factor of 2, each piece of data is stored on two separate servers, and you can lose only one server out of the cluster without losing data.

For high availability, at least three physical servers must be used: two data nodes and a management node. The management node is needed to arbitrate between the two data nodes if they become disconnected and out of synchronization with each other. A replication factor of 2 is used, so the two data nodes must each have enough memory to hold the working set, unless disk storage is used.

Since the Cluster software is simply a storage engine, the cluster is accessed through a standard MySQL server with tables defined with the NDB backend. The server accesses the cluster to fulfill requests from the client. The overall architecture is shown in Figure 4-3.

Because the mysqld servers

only differ from nonclustered servers in their backend, they can be

replicated with binlogs just as nonclustered servers can. So, it is

possible to achieve long-distance master-slave replication among

multiple clusters.

It is also possible to have several mysqld servers accessing the same cluster

and serving the same clients for redundancy. In the preceding

diagram, the MySQL server is a single point of failure; if it goes

down, there is no way for the application to access the cluster.

There are three approaches to handling load balancing and failover when multiple MySQL

servers are involved:

Modify the application code to handle failed servers and retry queries to different servers. Each MySQL server will have its own IP address in this scenario.

Use a separate hardware or software load balancer between the application and the MySQL servers. This will create one Virtual IP address (VIP) that will be directed to one of the physical servers via DNAT. This method is expensive, as you need at least two load balancers for high availability.

Use a software high-availability solution such as Wackamole (http://www. backhand.org/wackamole/). This will expose a pool of virtual IP addresses and ensure that exactly one live server has each IP address at all times. If a server fails, its VIPs are redistributed among those remaining. The pool of VIPs is distributed via a DNS round-robin list, so the application will pick a VIP more or less at random.

PostgreSQL

There are several load-balancing and high-availability options for PostgreSQL. Because there is no single company behind PostgreSQL, the options are provided by different organizations and companies. Each product typically embodies a different replication or clustering paradigm. Some of the options are described in this section.

High availability: Warm standby

Warm standby is a simple way to achieve high availability under PostgreSQL. It takes some configuration, but the configuration is documented well. Warm standby uses the write-ahead log (WAL) that PostgreSQL logs activity to. Changes are written in the WAL prior to being committed, so the database state can be reconstructed even if a transaction is interrupted catastrophically. Log shipping is the process of sending the WAL as files from the master to a slave.

Under a warm standby setup, a server is on standby, in restore mode. It is continuously restoring from the primary server, using a restore command that waits for WALs to become available and applies them as soon as they do. If the primary server dies, a monitoring system (which must be provided by the user) designates the standby as the new primary server.

Master-slave replication: Slony-I

Slony-I is a master-slave replication system similar to the replication mechanisms included with MySQL. It supports promoting slaves to masters, but, like MySQL, does not provide any mechanism to detect that nodes have failed.

An upgrade to Slony, Slony-II, is in the very early stages of development now. It plans to provide multimaster synchronous replication for PostgreSQL based on the Spread group-communication framework.

Multimaster replication: PGCluster

PGCluster (http://pgcluster.projects.postgresql.org/) is a product that offers multimaster replication and clustering for PostgreSQL. It provides both load balancing and high availability for a database cluster. The software handles failover, and yields a readily available solution if three or more physical servers are used.

PGCluster’s replication style is synchronous; updates are propagated to all servers before the update transaction succeeds. Thus, it should only be used in environments where all master servers are at the same location and are always connected. Asynchronous replication, in which changes are propagated to other servers some time after the transaction commits, is generally considered a hard problem. Asynchronous replication is also application-specific, as the proper way to handle conflicts between two committed transactions depends on the application’s needs.

Oracle

Oracle’s clustering product is Oracle Real Application Clusters (RAC). In contrast to the shared-nothing clustering solutions available for other DBMSs, RAC is a shared-everything clustering product. In RAC, multiple Oracle instances access a shared database cluster. The shared-everything architecture depends on a common data store such as a storage area network (SAN).

Oracle supports many flexible replication options, from simple data-only one-way replication to distributed multimaster replication. These solutions are very powerful but also very complicated.

Microsoft SQL Server

Like Oracle, SQL Server has extensive features supporting both replication and clustering. SQL Server even supports “merge replication,” which is essentially asynchronous multimaster replication. Of course, both the clustering and replication options require large amounts of configuration.

There is no out-of-the-box load-balancing solution for SQL Server yet; once you have a replicated database, you still must write application code so as to direct requests to the appropriate server.

LDAP

LDAP, the Lightweight Directory Access Protocol, is a database system optimized for user directory information. It is most often used in large organizations, integrated with the enterprise authentication and email systems. However, it is a database in its own right. We do not have space to cover LDAP in detail, but there are many resources available for working with LDAP in Rails.

ActiveLDAP

The ActiveLDAP library (http://ruby-activeldap.rubyforge.org/) is an almost drop-in replacement for ActiveRecord that uses LDAP instead of an RDBMS as a backend. To use it from Rails, set up a configuration file, config/ldap.yml, as follows:

development: host: (ldap server name) port: 389 base:dc=mycompany,dc=com password: my_password production: ...

Then, at the bottom of config/environment.rb, set up the connection:

ldap_path = File.join(RAILS_ROOT,"config","ldap.yml") ldap_config = YAML.load(File.read(ldap_path))[RAILS_ENV] ActiveLDAP::Base.establish_connection(ldap_config)

To set up ActiveLDAP, just subclass ActiveLDAP::Base and set the LDAP mapping on

a class-by-class basis:

class Employee < ActiveLDAP::Base ldap_mapping :prefix => "ou=Employees" end

LDAP queries can then be executed using the class methods on

ActiveLDAP::Base:

@dan = Employee.find :attribute => "cn", :value => "Dan"

Authenticating with LDAP

One of the most common reasons for using LDAP is to integrate into an existing authentication structure. If an LDAP server is provided for a Windows domain, this will allow the web application to authenticate users against that domain rather than maintaining its own user models separately.

Set up the ldap.yml file as described previously (without specifying a password), but do not bind to the LDAP server from environment.rb. We will perform the bind as part of the authentication process. The following code is adapted from the Rails wiki:[42]

class LdapUser < ActiveLDAP::Base

ldap_mapping :prefix => (LDAP prefix for your users)

LDAP_PATH = File.join(RAILS_ROOT,"config","ldap.yml")

LDAP_CONFIG = YAML.load(File.read(ldap_path))[RAILS_ENV]

def self.authenticate username, password

begin

ActiveLDAP::Base.establish_connection(config.merge(

:bind_format => "uid=#{username},cn=users,dc=mycompany,dc=com",

:password => password,

:allow_anonymous => false

))

ActiveLDAP::Base.close

return true

rescue ActiveLDAP::AuthenticationError

return false

end

end

endAuthentication is then very simple:

LdapUser.authenticate "my_username", "password" # => true or false

Further Reading

Chris Date’s Database in Depth (O’Reilly) is a very accessible introduction to relational theory aimed at software developers who are experienced in the use of relational databases. It reintroduces readers into the technical foundations behind the relational model.

Theo Schlossnagle’s Scalable Internet Architectures (Sams) is a short but comprehensive treatment of ways to accomplish scalability (both high availability and load balancing are covered); it covers ground from the smallest two-server failover cluster up to global server load balancing.

Both the MySQL manual (http://dev.mysql.com/doc/) and the PostgreSQL manual (http://www.postgresql.org/docs/) have a wealth of information about general database topics, as well as specific information pertaining to the use of those DBMSs.

[30] Informally, DBMSs are often referred to as “databases.” Consistent with industry practices, in this book “database management system” refers to the software package or installation thereof, while “database” refers to the actual data set being managed.

[31] Technically pronounced “post-gres-Q-L,” and usually just called “Postgres.” This is a contender for the least intuitive name in computing today. It has its roots in PostgreSQL’s long-ago predecessor, Postgres, which did not support SQL.

[32] For a detailed look at how Postgres handles concurrency, including a summary of the potential problems and how Postgres handles them, see the documentation at http://www.postgresql.org/docs/8.2/interactive/transaction-iso.html.

[33] ACID stands for Atomic, Consistent, Isolated, and Durable, which are necessary properties for transactional integrity within a database. See http://en.wikipedia.org/wiki/ACID for a full definition and explanation.

[34] Short for “byte array”.

[35] Assuming fairly uniform binary data, the BYTEA quoting rules lead to an average expansion ratio of 1:2.9.

[36] The size is defined in LOBLKSIZE. It defaults to 2 KB.

[39] I would probably keep that one at the application level, because it contains the business rule that no employee’s salary is zero. However, “an employee’s salary must be non-negative” would most likely be an integrity constraint, as it is nearly inconceivable that you would “pay” an employee a negative salary.

[41] Pronounced “mem-cache-dee,” for “memory cache daemon.” Available from http://danga.com/memcached/.

Get Advanced Rails now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.