Chapter 1. Introduction: Agile AI Processes and Outcomes

With a stake in a potential $13 trillion market¹ on the line over the next decade, companies are working diligently to capture the high returns of embedding artificial intelligence (AI) in their business processes, but project costs and failure rates are on the rise. The problem is that there is no standard practice for implementing AI in a business, which makes it very difficult for business leaders to reduce their risk of project failure.

Although this is a book about potential project failures, we’d be remiss not to point out the potential damage of organizational failures. An organization fails when it lacks the corporate will to even consider AI projects, usually because its leadership doesn’t understand how far it has fallen behind industry leaders. To use a bike race as a simplified metaphor: in AI, industry leaders are the small number of riders in the breakaway, most businesses are in the peloton, and those without the will to adopt AI forgot they were in the race and are watching on TV. This book does not cover how to build the institutional beliefs that help you avoid organizational failure, but it does help AI projects address failure.

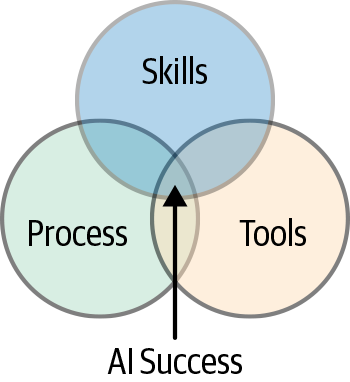

Why are project failure rates so high? There are three areas in which things can go wrong (see Figure 1-1):

- Skills: First, high skill levels are needed to harness AI.

- Process: Every use case can be developed in a different way. There is no blueprint for developing AI applications and integrating them into your current business workflow.

- Tools: There are hundreds of open source and proprietary AI tools. Each can have hidden limitations and costs. There is no guarantee of success.

Skills, processes, and tools are like a three-legged stool: if just one of the three legs fails, the stool falls over. With this in mind, this book offers an agile AI approach for business that will allow you to innovate quickly and reduce your risk of failure.

Figure 1-1. The three pillars of AI success

This book was written with technical leaders in mind, especially those who have an understanding of data warehousing, analytics, software engineering, and data science. But anyone interested in an Agile approach to AI for business will find it useful.

In 2017, IBM created the IBM Data Science Elite (DSE) team, a team of the world’s top data scientists, to help clients on their AI journey. We have used AI in hundreds of projects to bring real business value to our clients quickly. We’ve built a unique Agile methodology using AI and teamed up with leaders in the open source space. We’ve written this book as a guide for business leaders and all technical teams working on AI projects.

In hundreds of conversations about data science with practitioners, we have learned that there is no single way for organizations to pursue AI to achieve their analytics goals. There are only quicker ways or longer ways. Some businesses are just picking up data science, and some are deep into their analytics journeys, but their approaches all differ. In this book, we talk about those journeys and aim to impress upon you the value of an Agile AI practice.

The Agile Approach

Agile is a software development methodology designed to address the shortfalls of antiquated Waterfall development systems. In older software development practices, a team would scope a product some length of time into the future and build work-back plans. Each participating team would address its share of the capability requirements week by week and then hand off its work to the next group.

For example, to build my factory widget-counting software product, I know I need components A through C. The design team comes up with the design of A, hands that to the product team, and then the product team scopes my work for the engineering team. The design team then picks up component B, and so on.

In new software development practices (Agile), teams work cross-functionally to design minimum viable products (MVPs) and ship iterations of those products quickly. The handoff becomes less of a waterfall, in which one team’s work flows (cascades, if you will) downstream to the next group. With Agile, the handoff is much more collaborative.

Let’s now look at building my factory widget-counting software product from an Agile perspective. I know that I need components A through C. Design works with the product ownership team and engineering to build and certify the MVP of component A, while another cross-functional team can pick up component B.

Agile methodology tends to work so well because the process of building software inevitably runs into unexpected roadblocks (for example, one group doesn’t have sufficient permissions, infrastructure, or budget). Delays happen. Bugs pop up. By building MVPs in smaller chunks, you avoid pushing out the entire development timeline, as happens when one group misses its delivery date in handing off to a separate siloed group.

IBM has incorporated a unique Agile software development life cycle (SDLC) approach in our engagements with clients, but focused on building AI products. In it we use sprint development principles, work on cross-functional teams, and focus on readouts. Sprints are two-week-long time boxes, and readouts are times to “show & tell” your work.

Within each sprint, we prioritize understanding the business problem, engineer data features based on that in-depth understanding, and then quickly move to train and test our models. These iterative cycles give us space for creativity and establish a rhythm for fast-paced problem solving. If we’re able to explain as much of the variance in our data as we need, we’re done! Otherwise, when we struggle to find signal among the noise in our data, we can shift our focus, include new insights from the business, and begin feature engineering all over again.

Note

There are some basic statistical principles you need to account for as you go through these iterations so that you don’t invalidate your hypotheses.
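As a rough illustration of the sprint rhythm described above, the following Python sketch tries one engineered feature per iteration and stops once enough variance in the outcome is explained. Everything in it is an assumption made for illustration: the feature names, the toy numbers, the 0.8 target, and the use of a one-feature least-squares fit are ours, not a description of any client project.

```python
from statistics import mean


def r_squared(x, y):
    """Share of variance in y explained by a one-feature least-squares line."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        return 0.0  # a constant column cannot explain anything
    return (sxy ** 2) / (sxx * syy)


def run_sprints(candidate_features, outcome, target=0.8):
    """Score one candidate feature per sprint; stop when the target is met."""
    history = []
    for name, values in candidate_features.items():
        score = r_squared(values, outcome)
        history.append((name, score))
        if score >= target:
            break  # enough variance explained, so we are done
    return history


# Hypothetical toy data: the outcome and both features are invented.
outcome = [10, 20, 30, 40, 50]
candidate_features = {
    "store_id": [3, 1, 4, 1, 5],        # first idea: little signal
    "units_ordered": [1, 2, 3, 4, 5],   # second idea, after business input
}
history = run_sprints(candidate_features, outcome)
for name, score in history:
    print(f"{name}: R^2 = {score:.3f}")
```

In practice the score should come from held-out data rather than the data used for fitting; repeatedly scoring on the same sample is one of the ways to invalidate a hypothesis.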

This process—with its incorporated principles of design and solid fundamentals of software development—has worked time after time and is something we love to talk to leaders about.

AI Processes in Businesses Today

When we hear clients or coworkers discussing AI, our first thought is to ask them to define what AI means to them so that we can have a shared understanding. Lots of people think of AI as magic. Think of it instead as analytics that you and your enterprise depend upon to make decisions to drive your business. The term artificial intelligence reflects a broad practice of systems that mimic human intelligence to make informed business decisions based on your company data. At the heart of human intelligence is the ability to learn, which means that AI must involve mastering and democratizing machine learning. If you and your team have used predictive analytics to optimize your business workflows, you might be as close to AI as anyone else out there.

AI has not yet reached the capacity of generalized intelligence, that is, self-empowered, decision-making computers: AI systems able to reason and decide as well as an industry expert. Currently, AI produces decisions informed by the historical data your enterprise has stored over the years. Generalized AI will be achieved at some point as tools and business applications develop, but each industry might reach that milestone on a different time frame. For example, AI researchers hypothesize that AI will automate all retail jobs in 20 years, whereas automating human surgeries will take 40 years. But using AI for your business? That is something you can do today, and something you need to do today.

When we began working with the credit-reporting service Experian, we discussed some of its challenges with entity matching to build a corporate hierarchy for all companies. Experian’s existing process required a lot of human capital and time, and that limited its ability to match entities across its full population. Although the company understood AI, the best approach for this specific line of business was not clear, so we worked with developers on small steps and in quick iterations. The companies leading the way in adopting AI understand the value of incremental changes, and Experian became one of these successes.

Tip

Entity matching is the task of comparing records in a database and deciding which ones refer to the same real-world entity. In the corporate hierarchy application, the question is framed as “which two businesses (and, importantly, their subsidiaries) are the same?”
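To make the idea of entity matching concrete, here is a minimal Python sketch that normalizes company names and flags pairs whose names are nearly identical. It is not the approach used with Experian; the company names, the suffix list, and the 0.85 threshold are hypothetical, and string similarity from the standard library's `difflib` stands in for whatever model a real project would train.

```python
from difflib import SequenceMatcher

# Hypothetical list of legal suffixes to ignore when comparing names.
LEGAL_SUFFIXES = {"inc", "incorporated", "corp", "corporation",
                  "ltd", "limited", "llc", "co"}


def normalize(name):
    """Lowercase, replace punctuation with spaces, drop legal suffixes."""
    cleaned = "".join(
        ch if ch.isalnum() or ch.isspace() else " " for ch in name.lower()
    )
    tokens = [tok for tok in cleaned.split() if tok not in LEGAL_SUFFIXES]
    return " ".join(tokens)


def similarity(a, b):
    """Similarity in [0, 1] between two normalized names."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def match_entities(records, threshold=0.85):
    """Return index pairs of records that likely refer to the same entity."""
    pairs = []
    for i in range(len(records)):
        for j in range(i + 1, len(records)):
            if similarity(records[i], records[j]) >= threshold:
                pairs.append((i, j))
    return pairs


# Invented example records.
records = [
    "Acme Widgets, Inc.",
    "ACME Widgets Incorporated",
    "Globex Corporation",
]
matches = match_entities(records)
print(matches)
```

Comparing every pair grows quadratically with the number of records, which hints at why matching across a full population of companies is hard to do by hand or by brute force.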

When we share our experience with clients, we push them to move fast: if you’re going to fail, you should do it quickly. You don’t really know whether your approach will work until you model it with data, so you need to invest in trying. Moving fast makes client projects more successful because we test the theories we suppose to be true, and if those theories aren’t borne out in the data, we quickly discover that and adjust.

Contrast this development cycle with a traditional modeling life cycle. Think of the Waterfall design of software development in the past, with its antiquated premise that good software can go through a “design first, deliver final” process instead of iterating on work constantly.

Each of these approaches (Agile and traditional) was a decision our clients made intentionally, and each is valid. Ultimately, the difference distills down to the rate of adoption of each enterprise’s AI use case.

Beyond R&D

The most effective campaigns to achieve AI at enterprise scale tend to focus related projects on business objectives. Let’s compare the different ways companies approach AI to understand how applied data science differs from R&D.

If machine learning is the language behind AI, statistics are the grammar of that language. Enterprises have long had analysts reviewing historical data in search of trends using statistical principles that are useful for reports and experimentation. Those reports and experiments are one function of advising the business, and business analysts have achieved great success utilizing them, but often the report is the final—or only—outcome of that wider analysis. Predictive capabilities for AI, on the other hand, must be wrapped into broader application deployments and made available.

By comparison, when they participate in conversations about AI, many enterprises think only of their research and development function. For line-of-business leaders, AI can feel unachievable, so they might believe that whatever comes out of the research organization will be the closest thing to AI that their enterprise will achieve. Predictive algorithms are improving all the time, thanks to R&D, and many organizations, from IBM to McKinsey to Google, have excellent research functions to do just that. AI, however, is not the improvement of a function; rather, it’s the broad and strategic application of a set of predictive capabilities designed up front to solve difficult business problems.

As we look to drive business outcomes, IBM data science teams embed with client teams working to solve the business problems at hand, not their R&D functions. As we build out their AI capabilities, we design our applications to operationalize the models from the very beginning. It is common for enterprises, and our clients, to struggle to make substantive use of their predictive solutions because they don’t begin their data science exercise with the intention of consuming the models they’ve spent many hours training. If enterprises are to achieve AI or the ability to apply predictive features in every function where it is economically feasible, they need to begin each problem-solving exercise with the expectation that their modeling will be consumed by some other application elsewhere.

Leaders like Quicken Loans that adopt an innovation culture confirm our view that innovation occurs when it is focused on business problems and embedded in each line of business. We’ve seen many enterprises treat AI only as a science fair project or as a research and development project. Research is essential and can make our applications better, more accurate, or more efficient, but it is widespread, easy-to-use tooling for predictive capability that has enabled AI at the leading companies. R&D might have improved it, and analysis might have shown that it was effective, but designing predictive capabilities with business problems in mind is what makes those solutions feasible.

Organizing for AI

We frequently hear from technical leaders who struggle with how best to organize the talent within their teams so that AI development can thrive. This is always a revelatory conversation.

First of all, when the IBM DSE team begins any engagement, we mandate that each client bring data scientists from within their organization to the table. We strive to help grow skilled data science practitioners; if we didn’t, each engagement would fail as soon as IBM left. Those data scientists can then serve as Centers of Excellence for other groups or lines of business needing to build AI solutions. It was never intentionally decided that the DSE team would help lead enterprises in this direction, but ultimately, we help clients grow their data science practice. Clients ask all the time about how best to scale data science in their enterprise. Our answer is to build the appropriate organizational structure. Companies can innovate with separate pockets of data scientists serving different purposes in a decentralized model, but the best model is a hub-and-spoke model. The hub serves as a Center of Excellence focused on supporting all parts of your company, whereas the spokes are located in each line of business focused on business problems.

Data Scientists

For each use case you will implement, each project team needs a variety of skills. First, the data scientists tend to be generalists with a toolbox, which allows them to serve as service centers. The principles of their practice make them comfortable handling many different sets and types of data and applying best practices when it comes to cleaning, preparing, and understanding that data. They are expected to keep up with the state of the art, but as it applies to data science broadly rather than to any one business domain.

Data Engineers

Data engineers are masters of the data pipeline, and they’re essential for owning the data cleaning and architecture that data scientists depend upon. They also aren’t tied to any single type of data, so their skill sets generalize well across teams.

Business Analysts

By contrast, business analysts are often tied to business units and are experts in the intricacies and practices of that unit’s field. They know the data within their field well, so when a data scientist doesn’t know what a specific feature means, or doesn’t understand the specifics of a problem, the business analyst is the person to whom they turn.

There is a naturally cohesive partnership between the data engineers and data scientists, with transferable skills across many teams, whereas business analysts tend to remain embedded and act as a stationary Center of Excellence. Teams that are set up to build applications with full modeling and data science pipelines might think each business unit requires its own set of data engineers and scientists, but in the enterprise that tends to be overkill, especially when all enterprises are competing for relatively scarce data scientists and data engineers.

In summary, there are a few essential conditions for the hub-and-spoke model to work. The business analysts should know the business problem as well as the data, in detail. The data scientists should have the know-how to dissect that problem and data, and model an approach. The data engineers can then help with the data pipeline, create the architecture of a solution, and model operations. Together, these clusters of talent and institutional domain expertise can deliver AI solutions.

Why Agile for AI?

Failure is good. And the best way to fail is to do it quickly.

Leaders, developers, and data scientists alike often believe in the dogma that adding predictive capabilities to their businesses is worth the effort only if it is an overnight success. That belief is neither practical nor realistic. An iterative approach is rooted in the scientific method of forming hypotheses and validating your theories, and not all theories or predictions can be proven to be true.

First, make sure you’re working on the correct business problems. It is difficult to identify business cases for which AI solutions will drive impactful change to the business. Some business problems are not economical to solve with a model because gathering, cleaning, and storing the data are cost prohibitive. For example, we embarked on a project to use machine learning to reduce noise in file processing. That’s a very common use case, and machine learning is very effective on these kinds of projects. Although the client was interested in using machine learning, when we quantified the business impact, the solution would save two people 10 hours of manual work per week. The savings didn’t justify the cost of the project. We always tell clients that even though AI can transform your business, it’s best to first use it on big problems until you have proper teams in place and can democratize the technology across your company.
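The kind of back-of-the-envelope check behind that decision can be sketched in a few lines of Python. Only the "two people" and "10 hours per week" figures come from the example above, and we read them here as 10 hours per person, which is an assumption. The hourly cost, the working weeks per year, and the project cost are hypothetical placeholders, not figures from the engagement.

```python
def annual_savings(people, hours_saved_per_person_per_week,
                   hourly_cost, weeks_per_year=48):
    """Yearly value of the manual work a model would remove."""
    return (people * hours_saved_per_person_per_week
            * hourly_cost * weeks_per_year)


def payback_years(project_cost, savings_per_year):
    """Years of savings needed to recover the cost of the project."""
    return project_cost / savings_per_year


# Hypothetical inputs: hourly_cost and project_cost are invented.
savings = annual_savings(people=2,
                         hours_saved_per_person_per_week=10,
                         hourly_cost=50)
years = payback_years(project_cost=250_000, savings_per_year=savings)

print(f"Annual savings: ${savings:,}")
print(f"Payback period: {years:.2f} years")
```

With these placeholder numbers the project would take more than five years to pay for itself, which is the sort of result that argues for picking a bigger problem first.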

Second, for some use cases, the existing data doesn’t carry the essential signal for modeling efforts. Data scientists can save all the data they want, but if there is no discernible historic relationship between the data and the outcome, it will be impossible to predict the behavior of interest. This is not too common: typically, we can perform additional feature engineering and pull in more relevant data to get signal in our models. But it does happen, so you need to understand it as a risk and adjust your use case and approach when faced with it.

The salient point for all enterprises interested in modeling from their data is that it is important to try to model many behaviors, quickly. Leaders should establish a broad swath of hypotheses for their teams and enable them to attempt each modeling experiment. Many times, when the IBM DSE team is brought in to solve a modeling problem, we see enterprises despair at the slightest roadblock. We always tell them: the first model snafu is not the end of the exercise. As long as we’re responsible with how we treat the significance and power of our experiments, we can persevere.

As an example of perseverance, let’s talk about a project with one of the largest automotive parts manufacturers in Canada: Spectra Premium. We were asked to help the company build a better forecasting model than a third-party tool it had been using. Spectra Premium manufactures many parts, but runs are relatively low volume. Each part takes a long time to manufacture because it requires special machinery adaptations during production. Because of the lengthy production time, forecasting accurately was critically important. If Spectra doesn’t forecast the demand properly, it could potentially have items back-ordered and open the door for competitors. In our first few modeling attempts, we were only able to match the results that the company was already achieving, which was 80% accuracy. As a collective group, we were disappointed, but we dug deeper into the data and found that our accuracy for some manufactured parts was as low as 30%, whereas for other parts it was greater than 90%. We changed our approach and instead began to try different algorithms for each part instead of modeling on the entirety of the inventory. This approach pushed our overall accuracy to more than 90% and led to a successful example of the team adapting in the face of adversity.
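The shift from one model for the whole inventory to a separate algorithm per part can be illustrated with a small Python sketch. This is not the Spectra Premium solution: the part names, the demand numbers, and the two deliberately simple candidate forecasters are all invented for illustration. The idea it shows is backtesting each candidate on each part's own history and keeping the one with the lowest error for that part.

```python
from statistics import mean


def naive_forecast(history):
    """Predict that the next period repeats the last observed value."""
    return history[-1]


def moving_average_forecast(history, window=3):
    """Predict the mean of the most recent `window` observations."""
    return mean(history[-window:])


# Hypothetical candidate algorithms; a real project would try richer models.
CANDIDATES = {
    "naive": naive_forecast,
    "moving_average": moving_average_forecast,
}


def backtest_error(series, forecaster, holdout=3):
    """Mean absolute error of one-step-ahead forecasts on the last points."""
    errors = []
    for t in range(len(series) - holdout, len(series)):
        errors.append(abs(forecaster(series[:t]) - series[t]))
    return mean(errors)


def best_model_per_part(demand_by_part):
    """Pick, for each part, the candidate with the lowest backtest error."""
    choice = {}
    for part, series in demand_by_part.items():
        scores = {name: backtest_error(series, fn)
                  for name, fn in CANDIDATES.items()}
        choice[part] = min(scores, key=scores.get)
    return choice


# Invented demand histories with different shapes.
demand_by_part = {
    "part_a": [10, 12, 14, 16, 18, 20, 22, 24],   # steady upward trend
    "part_b": [10, 20, 10, 20, 10, 20, 10, 20],   # alternating demand
}
choice = best_model_per_part(demand_by_part)
print(choice)
```

On this toy data the trending part is served better by the naive forecaster and the alternating part by the moving average, so no single algorithm wins across the inventory.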

In contrast to software engineering teams, data science teams can offer no guarantees about modeling outcomes. When your enterprise adds a traditional software application, the development life cycle is well-known and well-documented. Even then, leadership needs patience as their teams iterate over the product features and behaviors, but the exercise is not predicated upon an unforeseen set of data: its success is largely driven by time and scope. Data science exercises, however, all begin with a hypothesis. As in any experiment, sometimes your teams cannot reject the null hypothesis.
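For readers less familiar with hypothesis testing, the Python sketch below shows one simple way to ask whether two groups of observations differ by more than chance would explain: an exact permutation test on the difference in means. The technique is our choice for illustration, the numbers are invented, and the conventional 0.05 cutoff is an assumption rather than a recommendation from the engagements described here.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_p_value(group_a, group_b):
    """Two-sided exact permutation test on the difference in group means.

    Enumerates every way to split the pooled data into groups of the
    original sizes, so it is only practical for small samples.
    """
    pooled = list(group_a) + list(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    extreme = 0
    total = 0
    for idx in combinations(range(len(pooled)), len(group_a)):
        chosen = set(idx)
        a = [pooled[i] for i in chosen]
        b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Small tolerance guards against floating-point noise.
        if abs(mean(a) - mean(b)) >= observed - 1e-12:
            extreme += 1
    return extreme / total


# Invented measurements for two conditions.
clearly_different = exact_permutation_p_value([1, 2, 3, 4], [10, 11, 12, 13])
no_difference = exact_permutation_p_value([1, 2, 3, 4], [1, 2, 3, 4])

ALPHA = 0.05  # conventional cutoff, assumed for illustration
for label, p in [("clearly different", clearly_different),
                 ("no difference", no_difference)]:
    verdict = "reject" if p < ALPHA else "cannot reject"
    print(f"{label}: p = {p:.4f}, {verdict} the null hypothesis")
```

A result of "cannot reject" is not a failed project; it is a signal to revisit the features, the data, or the framing of the use case.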

The faster your teams iterate through their experiments and test their hypotheses, the more likely you are to drive the amazing outcomes that AI can offer enterprises. Your willingness and flexibility to pivot is a key factor in the success of your AI projects.

Summary

The IBM DSE team built a unique Agile process for our clients’ projects, and in this book, we draw from those experiences to show you how your teams can succeed with an Agile approach to AI.

Legendary science-fiction author Arthur C. Clarke famously wrote that “any sufficiently advanced technology is indistinguishable from magic.” Despite what you might have read, AI is not magic. You really need to understand this technology in order for your teams to harness it. This book is a chance for us to share the stories and key practices of our successful client engagements to help you succeed in your Agile AI practices.

1 McKinsey & Company, “AI Adoption Advances, but Foundational Barriers Remain”, November 2018; T. Fountaine, B. McCarthy, T. Saleh, “Building the AI Powered Organization”, Harvard Business Review (2019); S. Ransbotham, D. Kiron, P. Gerbert, M. Reeves, “Reshaping Business with Artificial Intelligence”, MIT Sloan Management Review (2017).
