If we reflect on the performance of Median Sort, we see that a random choice of pivotIndex still allows selectKth to run in linear time in the average case. Is it possible to simplify the algorithm without incurring an extra performance penalty? Would the simpler implementation perhaps be faster in some situations? The Quicksort algorithm, first introduced by C.A.R. Hoare, is indeed simpler than Median Sort, although it uses many of the same concepts, which is why we introduced Median Sort first.

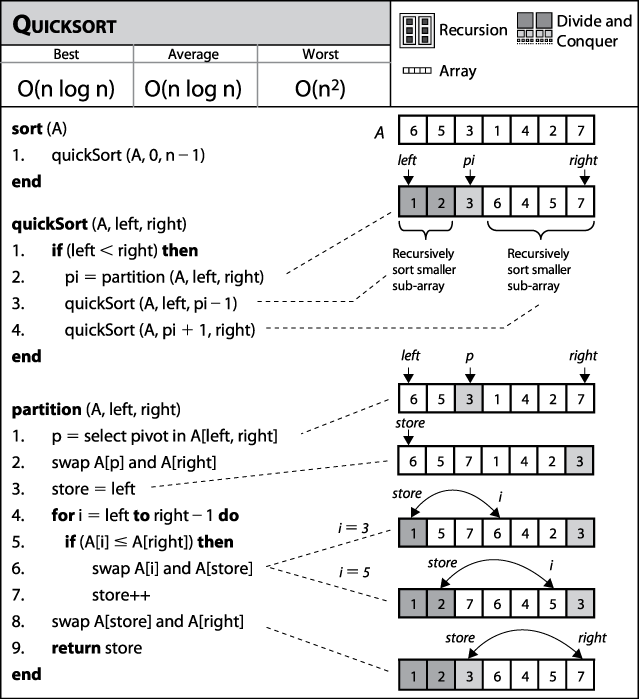

In Quicksort, we no longer seek the median value, and instead select an element according to some strategy (sometimes randomly, sometimes the leftmost, sometimes the middle one) to partition an array into subarrays. Thus Quicksort has two steps, as shown in Figure 4-11. The array is partitioned around a pivot value, creating a left subarray that contains elements less than or equal to the pivot, and a right subarray that contains elements greater than or equal to the pivot. Each of these subarrays is then recursively sorted.
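
The two steps can be sketched on a plain int array as follows. This is a minimal illustration rather than the book's implementation: the names swap_ints, partition_ints, and quicksort_ints are hypothetical, the pivot is simply the middle element, and the partition helper is one common scheme that we assume here for the sake of a self-contained example.

```c
#include <assert.h>
#include <stddef.h>

/* Swap two ints in place. */
static void swap_ints(int *a, int *b) {
    int t = *a; *a = *b; *b = t;
}

/* Partition a[left..right] around the value at pivotIndex and
 * return the pivot's final position (hypothetical helper). */
static int partition_ints(int *a, int left, int right, int pivotIndex) {
    int pivot = a[pivotIndex];
    int store = left;
    swap_ints(&a[pivotIndex], &a[right]);    /* park the pivot at the end */
    for (int i = left; i < right; i++) {
        if (a[i] <= pivot) {                 /* belongs in the left subarray */
            swap_ints(&a[i], &a[store]);
            store++;
        }
    }
    swap_ints(&a[store], &a[right]);         /* pivot into its final slot */
    return store;
}

/* Step 1: partition. Step 2: recursively sort each subarray. */
void quicksort_ints(int *a, int left, int right) {
    assert(a != NULL);
    if (right <= left) { return; }
    int p = partition_ints(a, left, right, left + (right - left) / 2);
    quicksort_ints(a, left, p - 1);
    quicksort_ints(a, p + 1, right);
}
```

Calling quicksort_ints(a, 0, n-1) sorts the n elements of a in place. Once partition returns, the pivot is already in its final position, so neither recursive call needs to include it.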

The random nature of the algorithm as described makes it a challenge to prove that the average-case performance is still O(n log n). We do not cover here the advanced mathematical analytic tools needed to prove this result, but further details on this topic are available in (Cormen et al., 2001).

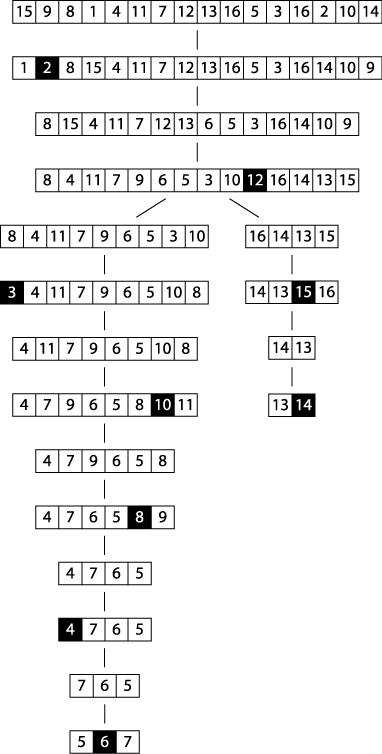

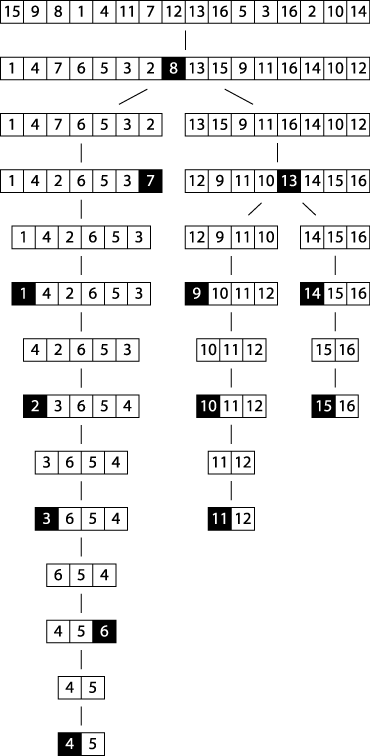

Figure 4-12 shows Quicksort in action. Each of the black squares in the figure represents a pivot selection in line 1 of partition. The first pivot selected is "2", which turns out to be a poor choice since it produces two subarrays of size 1 and size 14. During the next recursive invocation of Quicksort on the right subarray, "12" is selected to be the pivot, which produces two subarrays of size 9 and 4, respectively. Already you can see the benefit of using partition since the last four elements in the array are, in fact, the largest four elements. Because of the random nature of the pivot selection, different behaviors are possible. In a different execution, shown in Figure 4-13, the first selected pivot nicely subdivides the problem into two more-or-less comparable tasks.

Context

Quicksort exhibits worst-case quadratic behavior if the partitioning at each recursive step only divides a collection of n elements into an "empty" and "large" set, where one of these sets has no elements and the other has n−1 (note that the pivot element provides the last of the n elements, so no element is lost).

The choice of pivot strongly influences the relative sizes of the two subarrays after the execution of partition. Instead of just choosing pivot to be a random element, one can choose it to be the median (middle value) of k random elements for some odd k. Later, in the "Variations" section, we discuss different strategies for selecting the pivot.

Solution

The Quicksort implementation shown in Example 4-7 can be defined in terms of the functions already presented in "Median Sort." We use a standard optimization technique that uses Insertion Sort when the size of the subarray to be sorted falls below a predetermined minimum size.

Example 4-7. Quicksort implementation in C

    /**
     * Sort array ar[left,right] using Quicksort method.
     * The comparison function, cmp, is needed to properly compare elements.
     */
    void do_qsort (void **ar, int(*cmp)(const void *,const void *),
                   int left, int right) {
      int pivotIndex;
      if (right <= left) { return; }

      /* partition */
      pivotIndex = selectPivotIndex (ar, left, right);
      pivotIndex = partition (ar, cmp, left, right, pivotIndex);

      /* sort the left subarray; small ones go to Insertion Sort */
      if (pivotIndex-1-left <= minSize) {
        insertion (ar, cmp, left, pivotIndex-1);
      } else {
        do_qsort (ar, cmp, left, pivotIndex-1);
      }

      /* sort the right subarray in the same way */
      if (right-pivotIndex-1 <= minSize) {
        insertion (ar, cmp, pivotIndex+1, right);
      } else {
        do_qsort (ar, cmp, pivotIndex+1, right);
      }
    }

    /** Qsort straight */
    void sortPointers (void **vals, int total_elems,
                       int(*cmp)(const void *,const void *)) {
      do_qsort (vals, cmp, 0, total_elems-1);
    }

The choice of pivot is made by the external method selectPivotIndex(ar, left, right), which returns the index of the array element around which to partition.
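
The body of selectPivotIndex is not shown here. One possible version, assuming a random pivot is wanted, is sketched below; it keeps the three-argument signature used in the call above, but the body is our own assumption and not necessarily the one used for the book's measurements.

```c
#include <assert.h>
#include <stdlib.h>

/* One possible selectPivotIndex: a pseudo-random index in [left, right].
 * ar is unused but kept so the signature matches the call in Example 4-7.
 * rand() % n carries a slight modulo bias, acceptable for a sketch. */
int selectPivotIndex(void **ar, int left, int right) {
    (void) ar;
    assert(left <= right);
    return left + rand() % (right - left + 1);
}
```

Call srand once at program start if a different pivot sequence is wanted on each run; without it, rand produces the same sequence every time.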

Consequences

Surprisingly, the random algorithm for selecting a pivot enables Quicksort to provide an average-case performance that usually outperforms other sorting algorithms. In addition, numerous enhancements and optimizations have been researched for Quicksort, and together they have squeezed more efficiency out of it than out of any other sorting algorithm. The various options are discussed in detail later, in the upcoming "Variations" section.

Analysis

In the ideal case, partition divides the original array in half; if this behavior is consistent for each recursion, then the resulting behavior produces the same results as Median Sort without incurring an additional performance penalty. Let's define t(n) to be the time to perform Quicksort on an array of n elements. Because Quicksort is recursive, we can state that:

t(n) = 2*t(n/2) + O(n)

where O(n) captures the linear work needed to partition the subarrays. As shown in Chapter 2, algorithms that behave according to this t(n) definition have performance of O(n log n). Quicksort is empirically faster than Median Sort simply because the constants abstracted by O(n) are smaller. There is less overhead for Quicksort because it does not have to find the median element when constructing the subproblems; indeed, even a randomly selected pivot is shown, in practice, to suffice, and this requires only a single execution of partition for each recursive invocation of Quicksort (whereas Median Sort might need to recursively invoke partition multiple times when it seeks to compute the median element).
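
For readers who want to see where the O(n log n) bound comes from, the recurrence can be unrolled by hand. The derivation below is a sketch under two simplifying assumptions of ours: n is a power of 2, and the O(n) partitioning term is written as cn for some constant c.

```latex
\begin{aligned}
t(n) &= 2\,t(n/2) + cn \\
     &= 4\,t(n/4) + 2cn \\
     &= 2^{k}\,t(n/2^{k}) + k\,cn \\
     &= n\,t(1) + cn\log_2 n \qquad (k = \log_2 n) \\
     &= O(n \log n)
\end{aligned}
```

Each level of recursion contributes cn work in total, and there are log₂ n levels before the subarrays shrink to a single element.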

In the worst case, the largest or the smallest item is picked as the pivot. When this happens, Quicksort makes a pass over all elements in the array (in linear time) to sort just a single item in the array. In the worst case, this process is repeated n−1 times, resulting in O(n²) worst-case behavior.
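
The quadratic behavior is easy to observe by counting comparisons. The sketch below is an illustration of ours, not code from the book: it always picks the leftmost element as the pivot and sorts an already-sorted array, so every partition produces one empty subarray. The names quicksort_leftmost and worst_case_comparisons are hypothetical.

```c
#include <assert.h>

static long comparisons;   /* element comparisons made so far */

static void swap_ints(int *a, int *b) { int t = *a; *a = *b; *b = t; }

/* Quicksort that always uses the leftmost element as the pivot. */
void quicksort_leftmost(int *a, int left, int right) {
    if (right <= left) { return; }
    int pivot = a[left];
    int store = left + 1;               /* a[left+1..store-1] < pivot */
    for (int i = left + 1; i <= right; i++) {
        comparisons++;
        if (a[i] < pivot) { swap_ints(&a[i], &a[store]); store++; }
    }
    swap_ints(&a[left], &a[store - 1]); /* pivot into its final slot */
    quicksort_leftmost(a, left, store - 2);
    quicksort_leftmost(a, store, right);
}

/* Sort the already-sorted values 0..n-1 and report the comparisons. */
long worst_case_comparisons(int n) {
    int a[1024];
    assert(n >= 0 && n <= 1024);
    for (int i = 0; i < n; i++) { a[i] = i; }
    comparisons = 0;
    quicksort_leftmost(a, 0, n - 1);
    return comparisons;
}
```

On sorted input the passes examine n−1, n−2, ..., 1 elements, for a total of n(n−1)/2 comparisons: 4,950 for n=100 and 499,500 for n=1000.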

Variations

Quicksort is the sorting method of choice on most systems. On Unix- and Linux-based systems, there is a built-in library function called qsort.[10] Often, the operating system uses optimized versions of the default Quicksort algorithm. Two of the commonly cited sources for optimizations are by Sedgewick (1978) and Bentley and McIlroy (1993).
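
As a brief illustration, the standard C library function qsort can be called as shown below. The qsort signature is the one declared in stdlib.h; the wrapper sort_with_qsort and the comparison function cmp_int are hypothetical names introduced for this sketch.

```c
#include <assert.h>
#include <stdlib.h>

/* Comparison callback for qsort: returns a negative, zero, or
 * positive value when *pa is less than, equal to, or greater than *pb. */
static int cmp_int(const void *pa, const void *pb) {
    int a = *(const int *) pa;
    int b = *(const int *) pb;
    return (a > b) - (a < b);   /* avoids the overflow risk of a - b */
}

/* Sort n ints in ascending order using the library's qsort. */
void sort_with_qsort(int *vals, size_t n) {
    assert(vals != NULL);
    qsort(vals, n, sizeof vals[0], cmp_int);
}
```

Note that the C standard does not require qsort to be implemented with Quicksort, so its worst-case behavior depends on the library in use.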

Various optimizations include:

- Create a stack that stores the subtasks to be processed to eliminate recursion.
- Choose the pivot based upon median-of-three.
- Set minimum partition size to use Insertion Sort instead (which varies by implementation and machine architecture; for example, on the Sun4 architecture the minimum value is set to 4 based on empirical evaluation).
- When processing subarrays, push larger partition onto the stack first to minimize the total size of the stack by ensuring that the smaller problem is worked on first.

It is important to recognize that none of these optimizations will eliminate the O(n²) worst-case behavior of Quicksort. The only way to ensure an O(n log n) worst-case performance is to use a selection algorithm such as BFPRT, described in Example 4-6, although this will result in a degraded average-case performance. If a true O(n log n) worst-case performance is required, consider using Heap Sort, discussed in the next section.

Picking a pivot

Selecting the pivot element from a subarray A[left, left+n) must be an efficient operation; it shouldn't require checking all n elements of the subarray. Some alternatives are:

- Select first or last: A[left] or A[left+n−1]
- Select random element in A[left, left+n−1]
- Select median-of-k: the middle value of k random elements in A[left, left+n−1]

Often one chooses k=3, and, not surprisingly, this variation is known as median-of-three. Sedgewick reports that this approach returns an improvement of 5%, but note that some arrangements of data will force even this alternative into subpar performance (Musser, 1997). A median-of-five pivot selection has also been used. Performing further computation to identify the proper pivot is rarely able to provide beneficial results because of the incurred costs, which make the algorithm approach Median Sort in performance (indeed, Median Sort takes this variation to the extreme by selecting the median-of-n).
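
A median-of-three selection might look like the following sketch. It samples the first, middle, and last positions of the subarray instead of three random ones, which is a common deterministic variant that we assume here to keep the example reproducible. Both function names are hypothetical.

```c
#include <assert.h>

/* Return whichever of the indices i, j, k holds the median value. */
int median_index_of_three(const int *a, int i, int j, int k) {
    if (a[i] < a[j]) {
        if (a[j] < a[k]) { return j; }      /* a[i] < a[j] < a[k] */
        return (a[i] < a[k]) ? k : i;       /* a[j] is the largest */
    }
    if (a[i] < a[k]) { return i; }          /* a[j] <= a[i] < a[k] */
    return (a[j] < a[k]) ? k : j;           /* a[i] is the largest */
}

/* Pick the pivot index for a[left..right] from the first, middle,
 * and last positions. Costs at most three comparisons. */
int select_pivot_median3(const int *a, int left, int right) {
    assert(left <= right);
    int mid = left + (right - left) / 2;
    return median_index_of_three(a, left, mid, right);
}
```

For the array {9, 1, 5, 7, 3} the three sampled values are 9, 5, and 3, so the function returns index 2, the position of 5.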

Processing the partition

In the partition method shown in Example 4-3, elements less than or equal to the selected pivot are inserted toward the front of the subarray. This approach might skew the size of the subarrays for the recursive step if the array contains many elements equal to the selected pivot. One way to reduce the imbalance is to place elements equal to the pivot alternately in the first subarray and the second subarray.
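
One way to realize this alternating placement is sketched below for int arrays. It is our own illustration and not the partition function of Example 4-3; the name partition_alternating and the toLeft flag are hypothetical.

```c
#include <assert.h>

static void swap_ints(int *a, int *b) { int t = *a; *a = *b; *b = t; }

/* Partition a[left..right] around a[pivotIndex]. Elements equal to the
 * pivot are sent to the right and left subarrays in alternation, so a
 * run of duplicates is split roughly in half. Returns the pivot's
 * final index. */
int partition_alternating(int *a, int left, int right, int pivotIndex) {
    assert(left <= pivotIndex && pivotIndex <= right);
    int pivot = a[pivotIndex];
    int store = left;
    int toLeft = 0;               /* where the next duplicate goes */
    swap_ints(&a[pivotIndex], &a[right]);
    for (int i = left; i < right; i++) {
        int goesLeft;
        if (a[i] < pivot)      { goesLeft = 1; }
        else if (a[i] > pivot) { goesLeft = 0; }
        else                   { goesLeft = toLeft; toLeft = !toLeft; }
        if (goesLeft) {
            swap_ints(&a[i], &a[store]);
            store++;
        }
    }
    swap_ints(&a[store], &a[right]);
    return store;
}
```

For an array of nine identical values the pivot lands at index 4, the middle. A partition that sends every duplicate to the front would instead leave it at index 8 and produce the lopsided split described above.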

Processing subarrays

Quicksort usually yields two recursive invocations of Quicksort on smaller subarrays. While processing one, the activation record of the other is pushed onto the execution stack. If the larger subarray is processed first, it is possible to have a linear number of activation records on the stack at the same time (although modern compilers may eliminate this observed overhead). To minimize the possible depth of the stack, process the smaller subarray first.
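
The effect of processing the smaller subarray first can be sketched as follows. This is an assumed formulation of ours: the function recurses only on the smaller side and loops on the larger one, and the global deepest_call records the deepest recursion level reached. All names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

int deepest_call;   /* deepest recursion level seen so far */

static void swap_ints(int *a, int *b) { int t = *a; *a = *b; *b = t; }

/* Partition a[left..right] around its middle element. */
static int partition_mid(int *a, int left, int right) {
    int mid = left + (right - left) / 2;
    swap_ints(&a[mid], &a[right]);
    int pivot = a[right], store = left;
    for (int i = left; i < right; i++) {
        if (a[i] < pivot) { swap_ints(&a[i], &a[store]); store++; }
    }
    swap_ints(&a[store], &a[right]);
    return store;
}

/* Recurse on the smaller subarray, then continue the loop on the
 * larger one. Every recursive call handles at most half of the
 * current range, so the depth stays logarithmic in n. */
void quicksort_small_first(int *a, int left, int right, int depth) {
    assert(a != NULL);
    while (left < right) {
        if (depth > deepest_call) { deepest_call = depth; }
        int p = partition_mid(a, left, right);
        if (p - left < right - p) {
            quicksort_small_first(a, left, p - 1, depth + 1);
            left = p + 1;     /* larger side handled by the loop */
        } else {
            quicksort_small_first(a, p + 1, right, depth + 1);
            right = p - 1;
        }
    }
}
```

Started with depth 1 on 1,024 elements, the recorded depth never exceeds 10 regardless of how the pivots fall, because the recursive call always receives the smaller half.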

If the depth of the system stack is a foreseeable issue, then perhaps Quicksort is not appropriate for your application. One way to break the impasse is to use a stack data structure to store the set of subproblems to be solved, rather than relying on recursion.
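
A version that replaces recursion with an explicit stack might be sketched like this. The Range type, the STACK_CAP constant, and the function names are assumptions made for this illustration; the capacity of 64 entries relies on the larger range being pushed first, which keeps the number of pending ranges logarithmic in n.

```c
#include <assert.h>

#define STACK_CAP 64   /* ample when the larger range is pushed first */

typedef struct { int left, right; } Range;

static void swap_ints(int *a, int *b) { int t = *a; *a = *b; *b = t; }

/* Partition a[left..right] around its middle element. */
static int partition_mid(int *a, int left, int right) {
    int mid = left + (right - left) / 2;
    swap_ints(&a[mid], &a[right]);
    int pivot = a[right], store = left;
    for (int i = left; i < right; i++) {
        if (a[i] < pivot) { swap_ints(&a[i], &a[store]); store++; }
    }
    swap_ints(&a[store], &a[right]);
    return store;
}

/* Sort a[0..n-1] using a stack of pending subproblems. */
void quicksort_iterative(int *a, int n) {
    Range stack[STACK_CAP];
    int top = 0;
    if (n > 1) { stack[top++] = (Range){0, n - 1}; }
    while (top > 0) {
        Range r = stack[--top];
        int p = partition_mid(a, r.left, r.right);
        Range lo = {r.left, p - 1};
        Range hi = {p + 1, r.right};
        int leftIsLarger = (p - r.left > r.right - p);
        Range larger  = leftIsLarger ? lo : hi;
        Range smaller = leftIsLarger ? hi : lo;
        assert(top + 2 <= STACK_CAP);
        /* Push the larger range first so the smaller is popped next;
         * ranges with fewer than two elements need no work. */
        if (larger.left < larger.right)   { stack[top++] = larger; }
        if (smaller.left < smaller.right) { stack[top++] = smaller; }
    }
}
```

Because the stack lives in an ordinary array, its maximum size is fixed up front rather than depending on how much system stack happens to be available.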

Using simpler insertion technique for small subarrays

On small arrays, Insertion Sort is faster than Quicksort, but even when used on large arrays, Quicksort ultimately decomposes the problem to require numerous small subarrays to be sorted. One commonly used technique to improve the recursive performance of Quicksort is to invoke Quicksort for large subarrays only, and use Insertion Sort for small ones, as shown in Example 4-7.
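
The insertion helper that Example 4-7 calls is defined elsewhere. A stand-in with the same argument order could be sketched as follows; the body is our assumption, and cmp_str is a hypothetical comparison function included only so the usage is concrete.

```c
#include <assert.h>
#include <string.h>

/* Hypothetical stand-in for the insertion helper called in
 * Example 4-7: sort the pointers ar[left..right] (inclusive),
 * comparing the elements they point to with cmp. */
void insertion(void **ar, int (*cmp)(const void *, const void *),
               int left, int right) {
    assert(ar != NULL && cmp != NULL);
    for (int i = left + 1; i <= right; i++) {
        void *value = ar[i];
        int j = i - 1;
        /* shift larger elements one slot right to make room */
        while (j >= left && cmp(ar[j], value) > 0) {
            ar[j + 1] = ar[j];
            j--;
        }
        ar[j + 1] = value;
    }
}

/* Example comparison function: order C strings with strcmp. */
int cmp_str(const void *a, const void *b) {
    return strcmp((const char *) a, (const char *) b);
}
```

Given char *words[] = {"pear", "apple", "fig", "kiwi"}, the call insertion((void **) words, cmp_str, 0, 3) rearranges the pointers into alphabetical order.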

Sedgewick (1978) suggests that a combination of median-of-three and using Insertion Sort for small subarrays offers a speedup of 20–25% over pure Quicksort.

IntroSort

Switching to Insertion Sort for small subarrays is a local decision that is made based upon the size of the subarray. Musser (1997) introduced a Quicksort variation called IntroSort, which monitors the recursive depth of Quicksort to ensure efficient processing. If the depth of the Quicksort recursion exceeds a limit proportional to log (n) levels (Musser uses 2·⌊log₂ n⌋), then IntroSort switches to Heap Sort. The SGI implementation of the C++ Standard Template Library uses IntroSort as its default sorting mechanism (http://www.sgi.com/tech/stl/sort.html).
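
The depth-monitoring idea can be sketched as follows. This is a simplified illustration and not Musser's or SGI's code: it assumes a depth limit of 2·⌊log₂ n⌋, uses a deliberately naive leftmost pivot so that the fallback is easy to trigger, and counts fallbacks in the hypothetical global heap_fallbacks.

```c
#include <assert.h>
#include <stddef.h>

int heap_fallbacks;   /* number of times Heap Sort took over */

static void swap_ints(int *a, int *b) { int t = *a; *a = *b; *b = t; }

/* Restore the max-heap property below index i within a[0..n-1]. */
static void sift_down(int *a, int i, int n) {
    for (;;) {
        int largest = i, l = 2 * i + 1, r = l + 1;
        if (l < n && a[l] > a[largest]) { largest = l; }
        if (r < n && a[r] > a[largest]) { largest = r; }
        if (largest == i) { return; }
        swap_ints(&a[i], &a[largest]);
        i = largest;
    }
}

/* Heap Sort on a[0..n-1]: the O(n log n) worst-case fallback. */
static void heap_sort(int *a, int n) {
    for (int i = n / 2 - 1; i >= 0; i--) { sift_down(a, i, n); }
    for (int end = n - 1; end > 0; end--) {
        swap_ints(&a[0], &a[end]);
        sift_down(a, 0, end);
    }
}

/* Naive partition around the leftmost element. */
static int partition_leftmost(int *a, int left, int right) {
    int pivot = a[left], store = left;
    for (int i = left + 1; i <= right; i++) {
        if (a[i] < pivot) { store++; swap_ints(&a[i], &a[store]); }
    }
    swap_ints(&a[left], &a[store]);
    return store;
}

/* Quicksort until the depth budget runs out, then use Heap Sort. */
static void intro_loop(int *a, int left, int right, int depthLeft) {
    if (right <= left) { return; }
    if (depthLeft == 0) {
        heap_fallbacks++;
        heap_sort(a + left, right - left + 1);
        return;
    }
    int p = partition_leftmost(a, left, right);
    intro_loop(a, left, p - 1, depthLeft - 1);
    intro_loop(a, p + 1, right, depthLeft - 1);
}

void intro_sort(int *a, int n) {
    assert(a != NULL && n >= 0);
    int limit = 0;
    for (int m = n; m > 1; m /= 2) { limit += 2; }  /* 2*floor(log2 n) */
    intro_loop(a, 0, n - 1, limit);
}
```

On already-sorted input the leftmost pivot removes only one element per level, so the depth budget is soon exhausted and the remaining subarray is handed to Heap Sort. This keeps the total work at O(n log n) even on inputs that defeat the pivot choice.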

[10] It is instructive that some versions of the Linux operating system implement qsort using Heap Sort, discussed in the upcoming section "Heap Sort."
