Chapter 4. Intertwingled

Intertwingularity is not generally acknowledged—people keep pretending they can make things deeply hierarchical, categorizable and sequential when they can’t. Everything is deeply intertwingled. —Theodor Holm Nelson

As a sociology student at Harvard in the early 1960s, Ted Nelson enrolled in a computer course for the humanities that changed his life. For his term project, he tried to develop a text-handling system that would enable writers to edit and compare their work easily. Considering he was coding on a mainframe in Assembler language before word processing had been invented, it’s no surprise his attempt fell short. Despite this early setback, Ted was captivated by the potential of nonsequential text to transform how we organize and share ideas. His pioneering work on “hypertext” and “hypermedia” laid an intellectual foundation for the World Wide Web, and his views on “intertwingularity” will haunt the house of ubicomp for many years to come.

We experience Nelson’s intertwingularity every time we click a hypertext link. We move fluidly between different pages, documents, sites, authors, formats, and topics. In this nonlinear world, the contrasts can be dramatic. A single blog post may link to an article about dinosaurs, a pornographic video, a presidential speech, and a funny song about cabbage. We routinely travel vast semantic distances in the space of a second, and these dramatic transitions aren’t limited to the Web. Our remote controls put hundreds of television channels at our fingertips. Terrorism on CNN. Click. Sumo wrestling on ESPN. Click. Sesame Street on PBS. Click. And our cell phones relentlessly punctuate the flow of daily life. One minute we’re playing soccer with our kids at the neighborhood park. Seconds later we’re in the midst of a business crisis halfway around the world. The juxtapositions are worthy of shock and awe. Business and pleasure. Reality and fiction. Humor and horror. And yet, we’re not shocked. We’ve become accustomed to dramatic transition. We expect it. We enjoy it. We’re addicted.

Hypermedia technologies permeate our environment, shaping a bizarre hyper-reality that delivers information and commands attention. And even as we complain of information anxiety, we’re about to elevate intertwingularity to a whole new level with the advent of “ubiquitous computing.” The late Mark Weiser, formerly chief technology officer at Xerox PARC, coined the term in 1988 to define a future in which PCs are replaced with tiny, invisible computers embedded in everyday objects. So, whether we call it ubiquitous, pervasive, mobile, embedded, invisible, ambient, or calm computing, the vision is nothing new. What’s new is the rapid transformation of this vision into reality. It’s really happening, right now. Where Moore’s Law meets Metcalfe’s Law, we’ve reached a tipping point, and there’s no going back. Faster, smaller, cheaper processors and devices. A rich tapestry of communication networks with ever-increasing bandwidth. A constant stream of acronyms tumbling into our vernacular: GPS, RFID, MEMS, IPv6, UWB. We don’t need a crystal ball to see the road ahead. As William Gibson warned us, “The future exists today. It’s just unevenly distributed.”

My fascination with this future present dwells at the crossroads of ubiquitous computing and the Internet. We’re creating new interfaces to export networked information while simultaneously importing vast amounts of data about the real world into our networks. Familiar boundaries blur in this great intertwingling. Toilets sprout sensors. Objects consume their own metadata. Ambient devices, findable objects, tangible bits, wearables, implants, and ingestibles are just some of the strange mutations residing in these borderlands of atoms and bits. They are signposts on the road to ambient findability, a realm in which we can find anyone or anything from anywhere at anytime. Of course, ambient findability is not necessarily a goal. We may have serious reservations about life in the global Panopticon.[*] And from a practical perspective, it’s an unreachable destination. Perfect findability is unattainable. And yet, we’re surely headed in the general direction of the unexplored territory of ambient findability. So strap on your seatbelts, power up your smartphones, and prepare for turbulence. Beyond this place, there be dragons. Or is it streets paved with silicon? Either way, we’ll soon find out.

Everyware

In April 2001, after the agonizing process of closing my former company, I managed to escape into the sanctuary of Yosemite National Park. I enjoyed the romantic notion of figuring out what to do with the rest of my life while hiking in the wilderness. So, armed with a bottle of water and some beef jerky, I headed for the snowy peaks in search of transcendental moments and healing visions. Upon reaching the summit, I found myself alone, amidst the most breathtaking panorama I have ever seen. I sat for a while, enjoying the beauty and tranquility of the Sierra Nevada mountains. Then, I reached into my pocket, pulled out my cell phone, and called my mom. Can you hear me now?

These days, people use cell phones everywhere: in planes, trains, automobiles, grocery stores, golf courses, and bathtubs. During a half-marathon last summer, I saw a fellow runner with a cell phone held to his sweaty ear. In today’s society, such behavior barely raises eyebrows. Conspicuous consumption is hip. Leather holsters, swivel belt clips, colored faceplates, and personalized ringtones transform consumer appliance into hi-tech fashion statement: everyware for everybody who’s anybody. Until yesterday. Haven’t you heard? Cell phones are passé. GSM smart phones are where it’s at. Web, email, calendar, contacts, stereo, camera, television, and global positioning system in a single device. Moblogging from a ski lift in the Swiss Alps? Now that’s cool. Checking email while driving? Not so cool, though I’m guilty as charged. As William Gibson says, “the street finds its own use for things.” And that’s part of the fun. The search space for novel uses of mobile devices is immense and stretches well beyond findability into art, business, education, entertainment, healthcare, politics, and warfare. We can read, write, buy, sell, talk, listen, work, play, attack, and defend.

In Smart Mobs, Howard Rheingold emphasizes the potential of mobile communications to create a social revolution by enabling new forms of cooperation. He describes the emergent behavior exhibited by thumb tribes of connected teenagers: “the term ’swarming’ was frequently used by the people I met in Helsinki to describe the cybernegotiated public flocking behavior of texting adolescents.”[*] Rheingold notes that mobile devices enable groups of people to act in concert even if they don’t know each other, and cites numerous examples of peaceful (and not so peaceful) public demonstrations from Manila to Seattle in which tens of thousands of protestors were mobilized and coordinated by cell phones and waves of text messages. In his book, Rheingold tends toward the sunny side of this future by asserting the wisdom of crowds:

The right kinds of online social networks know more than the sum of their parts: connected and communicating in the right ways, populations of humans can exhibit a kind of collective intelligence.[†]

Of course, there’s also a dark side to these technologies of cooperation. Smart phones don’t always make for smart mobs. Groups of uninformed, agitated individuals can be dangerous and dumb, whether wielding pitchforks, flaming torches, or Nokia 7710s.

Fortunately, our mobile devices also enable us to become smarter (or at least more informed) individuals. We have instant access to an astonishing array of news sources, from CNN, Aljazeera, and the Hindustan Times to http://slashdot.org and http://rageboy.com. We can look up almost any fact from anywhere at anytime. Second and third and fourth opinions sprout like mushrooms after a rainfall. We have an unprecedented ability to choose our news and to see all sides of a story before making an informed decision. We can learn, and even better, we can remember, for our mobile devices also serve as outboard memory. They memorize schedules, names, addresses, phone numbers, passwords, birthdays, and grocery lists, so we don’t have to. And increasingly, we rely on them. Our gadgets become part of our lives. The transition from nice to necessary can happen surprisingly fast, as novel use becomes expected facility. Consider the following:

Student use of wireless laptops during classroom lectures for real-time reference (e.g., to fact check the professor’s claims) and backchannel communications with fellow students (i.e., the digital equivalent of passing notes).

Calling your spouse from the video store to gauge interest in a specific movie or from the grocery store to ask where to find the hot chocolate.

Googling a new acquaintance while waiting for him to arrive at a restaurant (he just called from the road to let you know he’d be five minutes late).

Using a smartphone to check Amazon customer reviews (and prices) of books found while browsing inside a Barnes & Noble bookstore.

Distributed, collaborative shopping by teenage girls using picture phones. How do you like this dress? Does this color look good on me? Should I buy one for you?

All of these uses and many more are becoming commonplace. Sometimes our mobile devices simply make us more efficient. Sometimes they cause fundamental and surprising changes in behavior. At the ragged edges of meatspace and cyberspace, the intertwingling has just begun. The users are inexperienced, the applications are immature, and the interfaces are exacting and temperamental. Tiny screens and keyboards don’t make for optimal usability under the best of conditions. Even a teenager with nimble fingers and good eyes will interact better with a desktop computer than a smartphone. But mobile computing involves imperfect conditions: poor lighting, limited power, erratic motion, divided attention, and fractional connectivity. Try reading a white paper or typing an email message on a Treo while walking on the beach on a sunny day with your three-year-old daughter. Watch out for seagulls and hold on tight. Treos aren’t waterproof, yet.

We will overcome some of these limitations. Batteries, which today contribute roughly 35% of a laptop computer’s weight, will grow smaller, last longer, and recharge faster. Evolutionary progress in traditional lithium batteries continues while micro fuel cells and 3D architectures built with nanotech promise revolution. And in connectivity, the patchy mosaic of Bluetooth, Wi-Fi, and cellular data wireless (GSM/GPRS, CDMA) will inevitably be transformed into what we experience as a seamless utility. Already, Wi-Fi hot spots in offices and cafés are morphing into hot zones covering urban cores and in some cases entire metropolitan areas. And ultra-wideband (UWB) technologies promise effective wireless data rates of well over one gigabit per second, easily enough for feature-length films and high-definition videoconferencing.

Interface advances are a bit trickier. Screen resolution, brightness, and contrast will improve, but size will remain an inherent problem of mobile computing. Our pockets aren’t getting any bigger. Here we must look to more exotic solutions like digital paper, head-mounted displays, and web on the wall. It’s tough to predict when and whether these technologies will shift from prototype to product. For now, visibility is limited. Similar challenges exist with input. Fat fingers on tiny keyboards are a major obstacle to mobile productivity. Chorded keyboards like the Twiddler, shown in Figure 4-1, are unlikely to enjoy widespread adoption despite the efforts of wearable computing advocates.[*] And voice recognition has gone nearly nowhere in over a decade, thanks to inter- and intrapersonal variation (we don’t even speak consistently ourselves) and disruptive background noise.

Even major breakthroughs in speech-to-text software won’t remove all the problems. Do you really want strangers listening to you write email? Speaking of which, we must also acknowledge the limits of attention. Can we truly focus on reading and writing while walking and talking? Can we be entirely productive in taxi cabs and noisy cafés? Inveterate multitaskers will answer yes. Others will argue they’ve no choice, and for many globe trotters and road warriors, this is true. But many of us will find that much of our work is still best performed in a safe, familiar office environment with an ergonomic keyboard, a mouse, and a big flat panel monitor. In other words, smartphones will not replace desktops and laptops, but their use will expand into a growing number of growing niches. We may use them everywhere, but not for everything. We may rely on them for ready reference, but not so much for research. Just because we can doesn’t mean we will.

What’s most exciting is the anticipation of unforeseen applications. In the Cluetrain Manifesto, David Weinberger notes, “We don’t know what the Web is for but we’ve adopted it faster than any technology since fire.”[*] At the crossroads of pervasive computing and the Internet, this sentiment only rings louder. Adam Greenfield, a pioneer of everyware and a passionate advocate for ethical ubicomp, notes:

Ubicomp is here right now. It lives on your cellphone, or in a chip on your dashboard, and comes out to play anytime you turn down iTunes’ volume with your phone or cruise through an EZPass lane. It is also, and simultaneously, what Gene Becker calls ‘a hundred-year problem’: a technical, social, ethical and political challenge of extraordinary subtlety and difficulty, resistant to comprehensive solution in anything like the near term.[†]

Visions of pervasive computing and ambient findability ignite our imaginations, but we’re a far cry from best practices for everyware, and the road ahead is neither straight nor narrow. But we should not fear this journey for we will not walk alone. As we wander the wilderness of ubicomp, our mobile devices will be our lifeline, connecting us as never before: indivisible and intertwingled. Can you hear me now?

Wayfinding 2.0

Speaking of journeys, it should come as no surprise that wayfinding is among the most fertile soils for technologies of intertwinglement. For even as we advance into a connected century, we continue to spend great scads of time moving our physical bodies through space, and despite the ready availability of maps and street signs, we still manage to get ourselves lost. Lost in cities or inside buildings or on the way.

One of my more memorable experiences happened a couple of years ago on the way to the doctor’s office. You see, our youngest daughter was born with an underdeveloped tear duct system that failed to properly drain the lubricants of her eyes. She would often wake in the morning with one or both eyes sealed shut with guck (that’s the technical term). Blocked tear ducts are a common problem in infants, but fortunately 90% of cases resolve themselves. Unfortunately, Claudia was in the 10% that require surgical probing, an outpatient procedure in which a blunt metal wire is inserted through the tear duct while the child lies wrapped in a blanket screaming bloody murder.

Suffice it to say, my wife and I were not very happy as we piled into the minivan and headed for our visit with the pediatric ophthalmologist. And after 20 minutes of trying to reconcile our map, shown in Figure 4-2, with the territory, we were considerably less happy. So, we’re late. We’re lost. Claudia is presciently crying in the back seat. My wife is trying to decode the worse than useless map. And I’m calling the doctor’s office on my cell phone to ask for directions while our minivan swerves violently through city streets.

This is the gritty reality of transmedia wayfinding at the dawn of the 21st century. Concrete mazes rendered barely navigable by the combination of lousy maps, illegible signs, missing landmarks, and desperate phone calls. Perhaps I exaggerate, but only to make an important point. Wayfinding remains an inefficient and even dangerous activity. At best, we waste time and endure needless stress. At worst, lives are lost when distracted drivers intertwingle their cars with immovable objects. There must be a better way, and fortunately we appear poised on the brink of breakthrough. After eons of bumbling around the planet, we’re about to take navigation to a whole new level. Wayfinding 2.0. And it begins with location awareness.

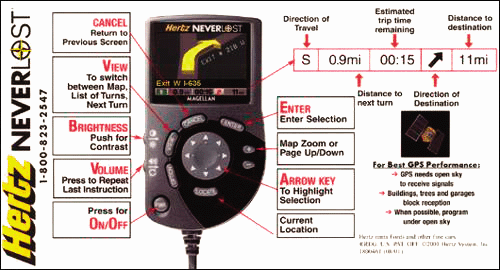

The crown jewel of next-generation wayfinding is the Global Positioning System (GPS), a satellite-based radionavigation system that enables land, sea, and airborne users to determine their three-dimensional position (latitude, longitude, altitude) and velocity from anywhere at any time. Compliments of the U.S. Department of Defense, 24 satellites orbiting 20,200 kilometers above the earth provide us with location awareness, accurate to within three meters. Equipped with a GPS receiver and map database, we can find our way like never before. In-car navigation systems like Hertz NeverLost, shown in Figure 4-3, are among the earliest mainstream applications. Select your destination from pre-programmed points of interest or enter an address or intersection, and they provide voice and visual turn-by-turn directions. These navigation systems are becoming increasingly accurate and affordable, and will eventually become user-friendly.

Our kids will wonder how we ever survived without them, and not just in the car. GPS receivers grow smaller and more ubiquitous every year. Handheld units are increasingly common for hiking in natural and urban environments. Many runners now chart their course and track mileage using a GPS watch, like that in Figure 4-4. And a variety of GPS attachments are available for smartphones. I’m expecting my next Treo will come with embedded GPS. No more printed maps. No more getting lost on the way to the doctor.

Of course, GPS isn’t perfect. It doesn’t actually work everywhere. Buildings, terrain, electronic interference, and sometimes even dense foliage can block signal reception. And for certain applications, such as wayfinding inside buildings, three-meter accuracy isn’t sufficient. For extreme ubiquity and precision, we will rely (like sea turtles and honeybees) on a composite of positioning approaches. Outdoors, receivers that combine GPS with complementary dead-reckoning technologies to estimate position by continuously tracking course, direction, and speed can handle the big picture.
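Dead reckoning is easy to sketch: start from the last trusted fix and advance it along the current heading at the current speed. The Python below is a minimal illustration under stated assumptions, not how any particular receiver works. The function name `dead_reckon`, the flat-earth approximation, and the sample coordinates are all hypothetical choices made for the example.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius; treats the planet as a sphere


def dead_reckon(lat_deg, lon_deg, heading_deg, speed_mps, elapsed_s):
    """Advance a known position along a heading for a given time.

    Uses a flat-earth approximation, which is only reasonable over
    short distances and away from the poles.
    """
    distance_m = speed_mps * elapsed_s
    heading = math.radians(heading_deg)  # 0 = north, 90 = east
    north_m = distance_m * math.cos(heading)
    east_m = distance_m * math.sin(heading)
    new_lat = lat_deg + math.degrees(north_m / EARTH_RADIUS_M)
    new_lon = lon_deg + math.degrees(
        east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg)))
    )
    return new_lat, new_lon


# Illustrative values: the last GPS fix before a tunnel, followed by
# 30 seconds of travel due east at 20 meters per second.
last_fix = (42.2808, -83.7430)
estimate = dead_reckon(*last_fix, heading_deg=90, speed_mps=20, elapsed_s=30)
```

Errors in heading and speed accumulate with every step, which is why such estimates are normally corrected as soon as a fresh satellite fix is available.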

Indoors, and for any application in which extreme precision is required, we will depend on the location-sensing capabilities of other radiofrequency (RF) technologies such as Wi-Fi, Bluetooth, Ultra-wideband, and RFID. UWB, for example, is not just a standard for fast wireless communication. It just so happens that by calculating relative distances between nodes, UWB can achieve fine spatial resolution, identifying location to within one inch.
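To see how distances between nodes can become a position, consider trilateration: given three fixed nodes at known points and a measured range to each, the unknown position falls out of a little algebra. This is a generic textbook technique offered as a sketch, not a description of how UWB products actually compute location. The function name `trilaterate`, the two-dimensional simplification, and the room layout are assumptions for illustration.

```python
import math


def trilaterate(anchors, distances):
    """Estimate a 2D position from three anchor points and measured ranges.

    Subtracting the first circle equation from the other two leaves a
    pair of linear equations in x and y, solved here with Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = distances
    a1, b1 = 2 * (x2 - x1), 2 * (y2 - y1)
    c1 = r1**2 - r2**2 + x2**2 - x1**2 + y2**2 - y1**2
    a2, b2 = 2 * (x3 - x1), 2 * (y3 - y1)
    c2 = r1**2 - r3**2 + x3**2 - x1**2 + y3**2 - y1**2
    det = a1 * b2 - a2 * b1
    if det == 0:
        raise ValueError("anchors are collinear; position is ambiguous")
    return (c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det


# Hypothetical room: three fixed nodes (coordinates in meters) and the
# ranges each one measures to a tagged object.
anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
ranges = [5.0, math.sqrt(65), math.sqrt(45)]
x, y = trilaterate(anchors, ranges)
```

Real range measurements are noisy, so a practical system would likely use more than three nodes and a least-squares fit rather than an exact solution.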

In considering potential applications of location-sensing technologies, it’s important to recognize a few important distinctions beyond range and accuracy. First, there’s the difference between physical position and symbolic location. GPS provides physical position such as 47°39’17” N by 122°18’23” W at a 20.5 meter elevation. A separate database or geographic information system is required to convert physical positions into symbolic locations such as in the kitchen, on the ninth floor, in Ann Arbor, next to a mailbox, or on an airplane approaching Amsterdam. Today’s crude databases and flat map interfaces are insufficient for modeling the complexity of 3D urban environments.
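The gap between position and location can be made concrete with a toy lookup. The sketch below converts the degrees, minutes, and seconds quoted above into decimal degrees, then checks them against a tiny, hypothetical gazetteer of bounding boxes. The names `dms_to_decimal`, `symbolic_location`, and `GAZETTEER`, and the rough box values, are assumptions for illustration rather than any real geographic database.

```python
def dms_to_decimal(degrees, minutes, seconds, hemisphere):
    """Convert degrees/minutes/seconds to signed decimal degrees."""
    value = degrees + minutes / 60 + seconds / 3600
    return -value if hemisphere in ("S", "W") else value


# Hypothetical gazetteer: name -> (south, west, north, east).
# The boxes are rough illustrative values, not surveyed boundaries.
GAZETTEER = {
    "Seattle": (47.49, -122.44, 47.74, -122.23),
    "Ann Arbor": (42.22, -83.80, 42.33, -83.67),
}


def symbolic_location(lat, lon, gazetteer=GAZETTEER):
    """Return the first named region containing the point, or None."""
    for name, (south, west, north, east) in gazetteer.items():
        if south <= lat <= north and west <= lon <= east:
            return name
    return None


lat = dms_to_decimal(47, 39, 17, "N")
lon = dms_to_decimal(122, 18, 23, "W")
place = symbolic_location(lat, lon)
```

Flat boxes like these say nothing about floors, rooms, or moving vehicles, which is exactly the shortcoming of crude databases and flat maps.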

Similarly, there’s the distinction between absolute and relative location. GPS receivers use latitude, longitude, and altitude to define a shared reference grid (absolute position) for all located objects. In relative systems, on the other hand, each object has its own frame of reference. For example, a receiver used in a mountain rescue effort indicates the relative location (direction and proximity) of an avalanche victim’s transceiver.
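When two objects share the absolute grid, a relative view (how far, in which direction) can be derived from their coordinates. The sketch below does this with the standard haversine and initial-bearing formulas on a spherical Earth. Note the assumption: an avalanche transceiver senses direction and proximity from the signal itself, not from coordinates, so this illustrates the distinction rather than that device. The function name `relative_location` is hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius; spherical approximation


def relative_location(observer, target):
    """Return (distance in meters, initial bearing in degrees).

    Both arguments are (latitude, longitude) pairs in decimal degrees.
    Bearing is measured clockwise from north.
    """
    lat1, lon1 = map(math.radians, observer)
    lat2, lon2 = map(math.radians, target)
    dlat, dlon = lat2 - lat1, lon2 - lon1

    # Haversine formula for great-circle distance.
    a = (math.sin(dlat / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2)
    distance = 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

    # Initial bearing from observer toward target.
    y = math.sin(dlon) * math.cos(lat2)
    x = (math.cos(lat1) * math.sin(lat2)
         - math.sin(lat1) * math.cos(lat2) * math.cos(dlon))
    bearing = (math.degrees(math.atan2(y, x)) + 360) % 360
    return distance, bearing


# One degree of longitude along the equator, heading due east.
distance_m, bearing_deg = relative_location((0.0, 0.0), (0.0, 1.0))
```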

Finally, there are issues of recognition and privacy. Systems that perform localized location computation ensure privacy. In GPS, the receiving device computes its own position, and the satellites have no knowledge about who uses their signals. In contrast, active badge and RFID systems require the located object to self-identify so that position may be computed by the external infrastructure. In other words, navigation and surveillance can intertwingle. Technology architecture has social impact.

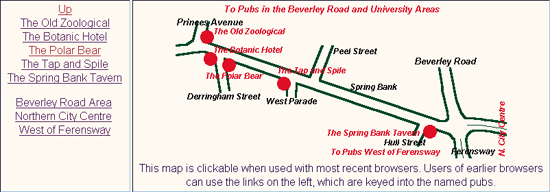

But let’s not wallow in privacy paranoia just yet. Why not dwell for a moment on the bright side of the big picture? The experience of becoming lost involuntarily is headed towards extinction. And our newfangled networked appliances promise all sorts of fascinating applications. Even before the widespread use of location-sensing technologies, wayfinding is being transformed by the Web. MapQuest and Google Maps provide coast-to-coast coverage, delivering maps and step-by-step driving directions to our computers and mobile phones. Google Local and Yahoo! Local enable the quick lookup of nearby businesses and services. Simply enter an address, city, or Zip Code to find a coffee shop, restaurant, movie theater, gas station, museum, or dentist near you. In merry old England, even the ancient tradition of wayfinding while intoxicated has been revolutionized by the Web, where countless web sites provide detailed maps for pub crawls of varying levels of difficulty. Pub crawl generators, like the one shown in Figure 4-5, make recommendations based on postal code, desired number of pubs, and the maximum distance between each pub.

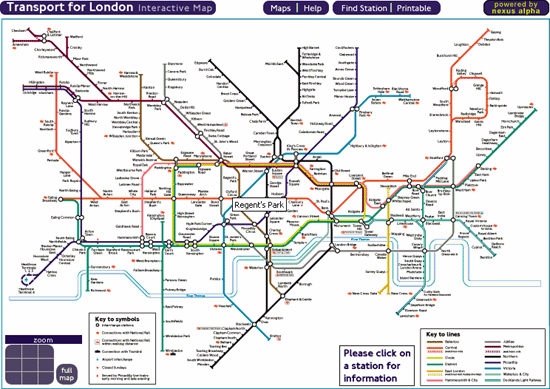

And on a more sober note, before visiting a hospital, shopping mall, or subway, we can grab a map from the web site, like that in Figure 4-6, and plan our trip from start to finish. Some bed and breakfasts even provide photos of each room, so we can see (and select) destinations from our point of origin. This brings new meaning to the experience of déjà vu.

Researchers have begun to intertwingle these applications with location-aware devices. At IBM’s Almaden Research Laboratory, for instance, J.C. Spohrer has advanced the notion of geocoding through the WorldBoard infrastructure:

What if we could put information in places? More precisely, what if we could associate relevant information with a place and perceive the information as if it were really there? WorldBoard is a vision of doing just that on a planetary scale and as a natural part of everyday life. For example, imagine being able to enter an airport and see a virtual red carpet leading you right to your gate, look at the ground and see property lines or underground buried cables, walk along a nature trail and see virtual signs near plants and rocks, or simply look at the night sky and see the outlines of the constellations.[*]

We’re still a head-mounted display away from fulfilling this vision of augmented reality in a mainstream sense. The technologies exist, but the costs remain prohibitive, for now. But that’s not stopping us from transforming our world into a vast planetary chalkboard by annotating physical locations with virtual notes and images. These memes have long since escaped the laboratory and can be seen playing in the wild and on the Web.

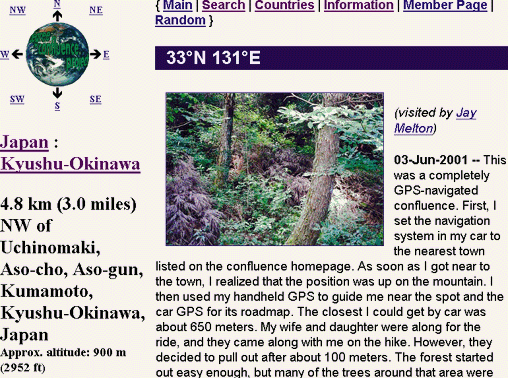

For instance, the Degree Confluence Project is collecting photographs and stories from all of the latitude and longitude integer degree intersections in the world: precise places in space, tagged with images and text, as shown in Figure 4-7. Anybody with a GPS receiver and a digital camera can contribute. Thus far, the collection boasts 40,000 photographs from 159 countries. So what is the purpose of this global database of images? Well, the stated goals include creating an organized sampling of the world and documenting the changes to these locations over time. But deep down, it’s about experimentation and fun. It’s about using technology to rediscover our natural world. In the words of one seeker:

One thing that I like a lot about confluence hunting is that this is a place that you have never seen (and many times will never see again), that you know exactly where it is, you do not how to get there but will find a way and, no matter how it turns out to be you will be so glad and satisfied to have been there![*]

A similar adventurous spirit infuses the sport of geocaching, a high-tech treasure hunting game for GPS users. In this game, people set up caches of maps, books, CDs, videos, pictures, money, jewelry, tickets, antiques, and other treasures. They then post the location coordinates on the Internet, and participants try to find the caches. The challenge derives from the distinction between knowing the location and reaching the location. Caches have been hidden on mountains, in trees, and even underwater. On any given day, http://geocaching.com lists over 100,000 caches in hundreds of countries.

And, in anticipation of GPS-enhanced photography, Mappr, shown in Figure 4-8, is already tapping into the wealth of descriptive metadata supplied by Flickr users to match pictures to places. For now, Mappr’s reliance on the uncontrolled, imprecise vocabulary of free tagging results in unreliable geographic data, but the maps sure are cool.

These games spread like wildfire because they capture the imagination. They are harbingers of a new relationship with physical space. It’s still early for mainstream applications, so we play our way into the future. That said, serious uses are beginning to take shape. The BrailleNote GPS, pitched as the “Cadillac of Wayfinding Systems,” enables people who are blind or visually impaired to more easily navigate unfamiliar areas. Drawing upon a massive points-of-interest database, blind pedestrians can locate a train station or bus stop, meet a friend for lunch at a new restaurant, or find their way back to a hotel. Independence through ubicomp. And in Europe, a smartphone software provider named Psiloc sells an application for defining location-based actions and events. Sleep-deprived commuters can set an alarm to wake them when their train approaches the station, even if it’s ahead of schedule. If desired, an SMS message will automatically alert colleagues of your impending arrival. You can even use the Periodic SMS feature to provide family members with regular updates on your location. And all of a sudden, for better or for worse, we’ve once again dropped through the looking glass, for the same technology that helps us avoid getting lost can also help us be found.

Findable Objects

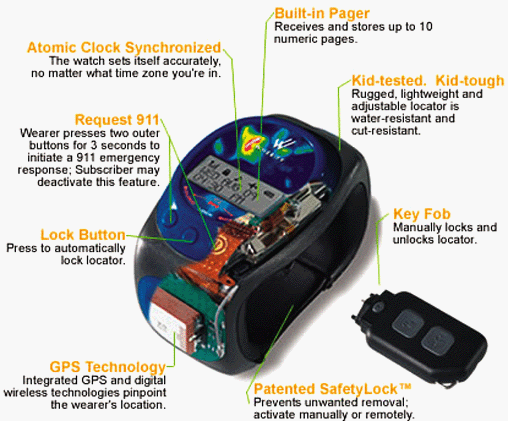

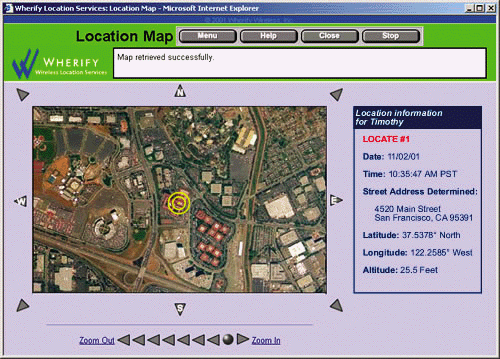

My favorite artifact from the future is the Wherify Wireless GPS Personal Locator for Kids, shown in Figure 4-9. It’s a watch, clock, pager, and tracking device all in one. You can buy it on Amazon. It’s available in Galactic Blue and Cosmic Purple. With a special key fob, you lock it on your kid’s wrist. And then, from the comfort of your home or office, you track your child’s location via the Internet, as shown in Figure 4-10. Features include:

Choose from a standard street map or custom aerial photo.

Define preset times for automatic “locates.”

Use “breadcrumbs” to see travel routes and location history.

Unlock the locator remotely once your child arrives safely at soccer practice.

Is this the greatest product ever or what? As Wherify explains, “Now you can have peace of mind 24 hours a day while your child is the high tech envy of the neighborhood!”

Of course, knowing where they are and knowing what they’re doing are very different things. The latter will require video and audio surveillance. Don’t worry. That’s coming.

Are you freaking out yet? Do you find this product disturbing in a profound Orwellian sense? Or, are you on the other side of the fence? Do you see it as yet another miracle of modern convenience? Perhaps you’re already on Amazon, placing your order.

That’s what I love about this product. It forces us to think about how we want to use technology. As parents, we go to great lengths to protect our kids. In an imperfect world, we use the available raw materials to craft solutions that work for our families. One size does not fit all, as illustrated by this amusing confession of George Brett:

My folks used a chicken wire pen for me. Sounds bad, but then again we lived near a large lake and my older cousins wanted me to join them swimming—so they were locked out and I was locked in. Other side benefit is that the alligators couldn’t get me either.[*]

We’ve been improvising in this fashion for millennia, making tough decisions that balance freedom and privacy with safety. But never before have we had so much choice. For when it comes to toddler tracking, the diversity of technologies and applications is amazing. Invisible perimeters or “geofences” alert you if your child leaves the house or yard or campsite. Radiofrequency leashes sound the alarm if they wander too far in a shopping mall or at the beach: you set the “safe distance” from 15 to 75 feet. And if visiting an amusement park, why buy when you can rent? At Legoland in Denmark, parents can pay three euros to have their child tagged for the day. The locator, attached by disposable wristband, lets the park’s 2.5 million square foot Wi-Fi network track the child anywhere in Legoland. Since approximately 1,600 children are separated from their parents in Legoland each year, this promises to be a seriously useful service.
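At its core, a geofence is a point-in-polygon test run every time a new position arrives. The sketch below uses the classic ray-casting method on a hypothetical rectangular yard measured in meters. The names `inside_geofence`, `YARD`, and `check` are illustrative assumptions, and a real product would also have to cope with noisy positions near the boundary.

```python
def inside_geofence(point, fence):
    """Ray-casting test: is the (x, y) point inside the polygon `fence`?

    `fence` is a list of (x, y) corners in order around the perimeter.
    """
    x, y = point
    inside = False
    for i in range(len(fence)):
        x1, y1 = fence[i]
        x2, y2 = fence[(i + 1) % len(fence)]
        # Only edges that straddle the point's height can be crossed by
        # a horizontal ray cast to the right from the point.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside  # each crossing flips inside/outside
    return inside


# Hypothetical yard corners, in meters from one corner of the lot.
YARD = [(0, 0), (30, 0), (30, 20), (0, 20)]


def check(position):
    """Return a status string for the latest reported position."""
    return "ok" if inside_geofence(position, YARD) else "ALERT: left the yard"
```

Calling `check((15, 10))` reports that all is well, while `check((35, 10))` raises the alert.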

Personal locator devices are also used to help care for people with Alzheimer’s disease, a progressive, irreversible condition that robs victims of their memory, cognitive abilities, and social skills. Alzheimer’s patients tend to lose track of time and become disoriented, so wandering can be a huge problem:

Between 60 to 70 percent of all patients with Alzheimer’s will wander, and possibly get lost, at some point during the course of their disease. Of these, a staggering 50 percent will die if they are not found within 24 hours.[†]

Applied Digital Solutions sells a device called the Digital Angel, which is worn as a watch and comes with a clip-on pager. Using GPS mapping software and cell phone networks, the Digital Angel alerts caretakers by email (sent to a cell phone, computer, PDA, or text pager) when a patient has wandered out of a designated area.

This intertwingling of GPS with cellular communication is an increasingly popular tracking solution. It is the foundation of vehicle location and management systems such as OnStar and Networkcar.[‡] GPS-enabled cell phones are used by law enforcement agencies to keep track of officers, and by parents to monitor the location and velocity of their teenage children:

As her daughter enjoyed a weekend road trip, Donna Butler sat back home 120 miles away at her personal computer and watched a blue dot tick slowly across the screen. But not slowly enough. ‘They were going 85 on the interstate where the speed limit is 70,’ said Butler, who interrupted Danielle’s getaway to let her know, ‘I will personally come up there and drive you home.’[*]

And if you don’t want someone to know they’re being watched, a wide variety of covert tracking devices are sold at web sites like http://spyville.com. One “satisfied” customer explained, “My husband was saying he was working late and it turned out he was going to the Holiday Inn. Now he’s living at the Holiday Inn.” These devices are also used for high-tech stalking. In a recent case, a man who attached one under his estranged wife’s car was ordered by a judge to wear a GPS device himself as part of his sentence for felony menacing by stalking: a punishment to fit the crime.

So, which of these location-sensing applications are acceptable? Some can be lifesavers while others are just plain spooky. Stalking clearly crosses the line. But what about tracking your teenager? Is that okay? Should you inform them of their status as findable object? Legally, you can track without telling, but you’ll have to work out the ethics yourself.[†] These are decisions we’ll have to make as individuals, corporations, and societies. And before we have time to decide, our relationships to findable objects are going to get a whole lot weirder thanks to the wonders of radiofrequency identification.

RFID is a disruptive technology poised to shift paradigms by transforming our ability to identify and locate physical objects. Initially, RFID is being sold as a next-generation barcode system on steroids that enables real-time supply chain visibility. Major retailers such as Wal-Mart and Tesco are in the midst of high-profile RFID rollouts designed to streamline logistics, reduce costs, stop theft, and improve demand forecasting accuracy. Key advantages of RFID over traditional barcode systems include:

RFID tags can be read from a distance through walls, packaging, clothes, and wallets. There is no requirement for line of sight between label and reader.

With barcodes, every can of Coke has the same universal product code (UPC). With RFID, each can has its own unique ID number. It’s classified as a can of Coke but also identified as a unique individual object.

RFID spills beyond identification into positioning. The same radiofrequency technologies that support communication (e.g., Wi-Fi, UWB) also enable the precise location and tracking of tagged objects.
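The second advantage, the leap from product class to individual object, can be sketched in a few lines. This is an illustration only: the `Barcode` and `RfidTag` types, the serial counter, and the placeholder product code are all assumptions made for the example, not a real tag data format.

```python
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Barcode:
    upc: str  # identifies the class of product only


@dataclass(frozen=True)
class RfidTag:
    upc: str     # the class of product, as on a barcode
    serial: int  # hypothetical per-item serial number


_serials = count(1)


def tag_item(upc):
    """Issue a tag that pairs the product class with a fresh serial number."""
    return RfidTag(upc=upc, serial=next(_serials))


COLA_UPC = "012345678905"  # placeholder value, not a real product's code

barcoded = [Barcode(COLA_UPC), Barcode(COLA_UPC)]
tagged = [tag_item(COLA_UPC), tag_item(COLA_UPC)]

print(barcoded[0] == barcoded[1])      # two barcoded cans are indistinguishable
print(tagged[0] == tagged[1])          # two tagged cans are distinct objects
print(tagged[0].upc == tagged[1].upc)  # yet both belong to the same class
```

Once every item carries its own identity, each one can accumulate its own history, which is what makes the applications that follow possible.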

It is this combination of advantages that compels RFID to migrate far beyond the supply chain. Consider this astonishing hodgepodge of applications:

Pharmaceutical companies are using RFID to provide track-and-trace protection for drugs and reduce drug counterfeiting and thefts. Each 100-tablet bottle of OxyContin, a widely abused pain killer, is now tagged by the manufacturer.

Hotels have deployed wireless RFID-enabled mini-bar systems to track their Toblerones, Pringles, and $5 Cokes. Remove any item for more than 30 seconds and the e-fridge chalks up a sale and notifies the hotel’s central database.

Electronic toll collection systems such as E-ZPass rely on RFID to identify moving vehicles and charge the associated accounts.

The European Central Bank is reportedly embedding RFID tags in euro notes to cut down on counterfeiting and money laundering.

Delta and United Airlines are actively exploring RFID baggage tracking programs to eliminate the errors and delays that plague the current system.

For more than a decade, pets have been injected with RFID tags. Estimates put the number of RFID-enabled recoveries in the U.S. and Canada at 5,000 per month. In Portugal, under a government initiative to control rabies, all two million dogs must be implanted with radio tags and registered in a national database by 2007.

Hospitals are using RFID bracelets to keep track of doctors, nurses, and patients. The same technology is used in prisons to track prisoners and in schools to track students.

At the Baja Beach Club in Barcelona, patrons with subdermal RFID implants can access the VIP lounge and pay for drinks without needing to carry a wallet or cash.

In a bid to fight government corruption, Mexico’s attorney general and several key staff members had RFID chips implanted to support tracking and authentication.

Clearly, radiofrequency identification is not your grandfather’s barcode. RFID represents a big step towards ambient findability. We’re talking about an Internet of Things without precedent in human history. Products, possessions, pets, and people all rendered into findable objects: cataloged, searchable, and locatable in space and time. The future exists today, and we’re just waiting for the world to catch up. As Adam Greenfield notes:

It is a future structurally latent in the new schema for Internet Protocol addressing, IPv6, which, with its 128-bit address space, provides some 6.5 × 10²³ addresses for every square meter on the surface of our planet, and therefore quite abundantly enough for every pen and stamp and book and door in the world to talk to each other.[*]
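Greenfield’s per-square-meter figure is easy to sanity-check. The sketch below divides the size of a 128-bit address space by the surface area of a sphere; the mean Earth radius is my own assumption rather than something taken from the quote, so the result should only be expected to land in the same range as his number.

```python
import math

ADDRESS_BITS = 128          # IPv6 address length, from the quote
EARTH_RADIUS_M = 6_371_000  # mean Earth radius in meters (an assumption)

total_addresses = 2 ** ADDRESS_BITS
surface_area_m2 = 4 * math.pi * EARTH_RADIUS_M ** 2
per_square_meter = total_addresses / surface_area_m2

print(f"{total_addresses:.2e} addresses in total")
print(f"{surface_area_m2:.2e} square meters of surface")
print(f"{per_square_meter:.1e} addresses per square meter")
```

The exact value shifts a little with the surface area you plug in, but the order of magnitude does not.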

But before we presume the ability to find anyone or anything from anywhere at any time, it’s worth evaluating the limits of today’s technology. After all, RFID is subject to the familiar tradeoffs of size, range, power, and cost. Weakness in a single area can rule out a whole suite of potential applications. To understand these tradeoffs, it’s important to distinguish between active and passive RFID. While both technologies use radiofrequency energy to communicate between the tag and reader, the method of powering the tags is different.

Passive tags have no internal power source. They depend on signals from the reader for activation. In passive systems, the tags are small and cheap, but the readers are expensive, their range is constrained to roughly three meters, and they’re unable to read multiple tags at once. This limits passive tag systems to scenarios in which tagged items move past readers (through a doorway or along a conveyor belt) in single file: great for supermarket checkout but mostly useless for nonlinear applications beyond the supply chain. In other words, you shouldn’t worry about Victoria’s Secret tracking your underwear, unless you’re being tailed by a suspicious operative wielding a bulky RFID reader.

Active tags, on the other hand, rely on internal batteries to continuously power their communication circuitry. With active RFID, the tags aren’t as small or cheap, but the systems can track thousands of items moving at more than 100 mph with operating ranges of 100 meters or more.[†] Additionally, active tags can support read/write data storage, thereby enabling a hospital wristband or badge to store a patient’s complete, editable medical record. So, active tags have some great advantages, but they’re too costly for most retail applications, and too big for many covert operations. For now, you wouldn’t want a subdermal active tag implant. It would be quite lumpy and changing the battery could be a real pain in the neck or arm or wherever. In any case, you get the picture. When it comes to ambient findability, RFID is as much promise as product.
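The tradeoff between the two kinds of tags can be written down as a small lookup. This is a rough sketch that uses only the figures mentioned above (about three meters and one tag at a time for passive systems, 100 meters and many tags for active ones); the `TagProfile` type and the `suitable_tags` function are hypothetical, and real products vary widely.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TagProfile:
    name: str
    max_range_m: float
    reads_many_at_once: bool
    needs_battery: bool


# Figures taken from the surrounding text; 100 m is used as a conservative
# stand-in for "100 meters or more".
PASSIVE = TagProfile("passive", max_range_m=3, reads_many_at_once=False, needs_battery=False)
ACTIVE = TagProfile("active", max_range_m=100, reads_many_at_once=True, needs_battery=True)


def suitable_tags(required_range_m, simultaneous_reads, battery_acceptable):
    """Return the names of the tag types that satisfy an application's needs."""
    matches = []
    for tag in (PASSIVE, ACTIVE):
        if tag.max_range_m < required_range_m:
            continue  # the reader could not reach the tag
        if simultaneous_reads and not tag.reads_many_at_once:
            continue  # items would have to pass in single file
        if tag.needs_battery and not battery_acceptable:
            continue  # too big or too costly for this use
        matches.append(tag.name)
    return matches


print(suitable_tags(1, False, False))  # items passing a reader in single file
print(suitable_tags(50, True, True))   # many vehicles moving around a yard
print(suitable_tags(50, True, False))  # long range with no battery allowed
```

The third case returns an empty list, which is the point: weakness in a single area rules out the application entirely.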

But, it would be a shame to allow these due diligence findings to obscure our foresight and dim our curiosity. These barriers will not stand. The future that exists today will spread and mutate like a virus into tomorrow. Location-aware mobile computing devices. Ubiquitous high-speed radiofrequency networks. Active tags that are smaller, cheaper, and more abundant than postage stamps. We will have the technology. But how will we use it? To find our missing keys, socks, and remote controls? To locate our pets, kids, and spouses? To track our own movements through space and time? What small apparent oddities of today are destined to become commonplace? This question is asked and answered by science-fiction author and futurist Bruce Sterling, who describes a new class of self-revealing, user-configurable objects called spimes:

The most important thing to know about spimes is that they are precisely located in space and time. They have histories. They are recorded, tracked, inventoried, and always associated with a story. Spimes have identities, they are protagonists of a documented process. They are searchable, like Google.[*]
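To make Sterling’s description a little more concrete, here is one way a spime might be modeled as a data structure: an identity, a history of sightings in space and time, and a simple search. Every name in this sketch (`Spime`, `Sighting`, `search`) and every sample value is my own invention for illustration; Sterling does not specify an implementation.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Sighting:
    when: datetime
    lat: float
    lon: float
    note: str = ""


@dataclass
class Spime:
    identity: str  # unique to this individual object
    kind: str      # the class of object it belongs to
    history: list = field(default_factory=list)

    def record(self, when, lat, lon, note=""):
        """Append one located, timestamped event to the object's story."""
        self.history.append(Sighting(when, lat, lon, note))

    def last_seen(self):
        """Return the most recent sighting, or None if there is no history."""
        return max(self.history, key=lambda s: s.when) if self.history else None


def search(spimes, term):
    """Find spimes whose kind or history notes mention the search term."""
    term = term.lower()
    return [s for s in spimes
            if term in s.kind.lower()
            or any(term in h.note.lower() for h in s.history)]


book = Spime("item-0001", "book")
book.record(datetime(2005, 3, 1, 9, 0), 42.28, -83.74, "shelved in the study")
book.record(datetime(2005, 3, 8, 18, 30), 42.30, -83.71, "lent to a friend")

print(book.last_seen().note)
print([s.identity for s in search([book], "friend")])
```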

Sterling notes that books are well on their way to becoming spimes, for a book on Amazon is far more than the words between its covers. We can learn its cost and publisher; what other books the author has written; what readers think about the book; what other books those readers have bought; and we can keyword search the full text. Data and metadata intertwingle with patterns of purchase and use:

When you shop for Amazon, you’re already adding value to everything you look at on an Amazon screen. You don’t get paid for it, but your shopping is unpaid work for them. Imagine this blown to huge proportions and attached to all your physical possessions. Whenever you use a spime, you’re rubbing up against everybody else who has that same kind of spime. A spime is a users group first, and a physical object second.[†]

How will we handle that leap from class of product to individual object? The possibilities are intriguing. Let me Google my own bookcase. Show me all the books my friends own and where they’re located. Figure 4-11 shows one implementation. Does anybody in my neighborhood have this book? Where are they right now? But these imaginings also invoke questions about metadata and trust. What (and who) will we tag, and with whom will we share that information?

Already mobile social software services such as AT&T’s Find People Nearby are moving beyond the binary presence management of instant messaging by

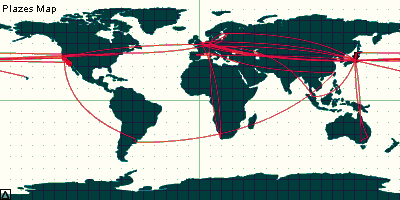

enabling us to share our location within trusted networks of friends, family members, and colleagues. Participants are learning to manage the intricacies of their own privacy. How much detail should we divulge? Will we store and share our location history, as Joi Ito has done in Figure 4-12? And when do we choose to be totally unfindable?

Conversely, we must define acceptable levels of metadata awareness. How much do we really want to know about the locations of our acquaintances? How wide are our circles, socially and spatially? Open the gates too wide and we’ll drown in sociospatial metadata, victims of virtual claustrophobia. And as we turn our products, possessions, pets, physical objects, and places into spimes, we risk information overload and metadata madness. How will we choose the right tags? How will we find what we need? Location is easy, but what about aboutness? Can the folksonomies of Flickr and del.icio.us survive in the wild? Will free tagging deliver a physical world of findable objects, or will we find ourselves lost in the chaos of spime synonymy? This is the paradox of ambient findability. As information volume increases, our ability to find any particular item decreases. How will we Google our way through a trillion objects in motion? We’re staring down the barrel of the biggest vocabulary control challenge imaginable, and we can’t stop adding powder.
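A tiny example shows why synonymy matters and what vocabulary control buys us. In this sketch, free-form tags are mapped to preferred terms before indexing, so that variants retrieve the same objects. The synonym table and the tagged photos are made up for illustration, and a hand-built table like this one is precisely what will not scale to a trillion objects.

```python
from collections import defaultdict

# Hypothetical controlled vocabulary: variant tag -> preferred term.
PREFERRED = {
    "nyc": "new york city",
    "new york": "new york city",
    "newyork": "new york city",
    "cab": "taxi",
    "taxicab": "taxi",
}


def normalize(tag):
    """Lowercase, collapse whitespace, then map variants to a preferred term."""
    cleaned = " ".join(tag.lower().split())
    return PREFERRED.get(cleaned, cleaned)


def build_index(tagged_objects):
    """Build an inverted index from preferred term to the set of object IDs."""
    index = defaultdict(set)
    for object_id, tags in tagged_objects.items():
        for tag in tags:
            index[normalize(tag)].add(object_id)
    return index


objects = {
    "photo-1": ["NYC", "cab"],
    "photo-2": ["New  York", "taxi"],
    "photo-3": ["newyork", "Taxicab"],
}
index = build_index(objects)

print(sorted(index["taxi"]))
print(sorted(index["new york city"]))
```

Without the normalization step, a search for “taxi” would find only one of the three photos.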

Imports

At the soft edges of cyberspace, we’re importing vast amounts of information about the real world while simultaneously designing new interfaces for export. It is this great intertwingling of physical and digital that promises a radical departure from the present, for we’re talking about nothing less than adding eyes and ears to our digital nervous system. The amount of information on today’s Web is insignificant in relation to the oceans of data that will pour into cyberspace through a global network of sensory devices. Change won’t come overnight, but our children will inherit a different world.

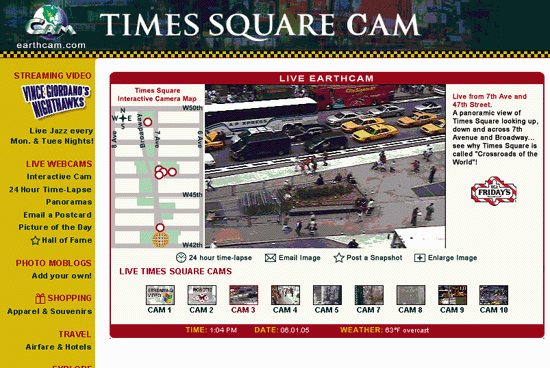

I stole a glimpse at this future a few years ago through the eyes of our eldest daughter, Claire. It was Christmas break, and I finally had a chance to play with my new laptop and wireless network while concurrently entertaining Claire. In the spirit of “embracing the genius of the AND,” I decided to try out some webcams, eventually settling on the Live Earthcam at Times Square, shown in Figure 4-13. So, I’m sitting on the couch in Ann Arbor with our two-year-old, and we’re streaming a real-time video feed from New York City, and she loves it! Traffic lights orchestrate an ebb and flow of people and cars, while honking horns punctuate the constant buzz of the big city. For some time, Claire and I are simultaneously in Ann Arbor and New York. This is not the willing suspension of disbelief. It’s not like television or the movies. We are experiencing real places in real time. The people are not actors. There is no script. Claire is fascinated by the bright yellow cars, and I explain they are “taxi cabs” and they help people get from place to place. When one appears on screen, she yells “taxi cab, taxi cab.” And this experience is seamlessly transferred into the real world where cries of “taxi cab, taxi cab” ring out on trips to the grocery store for the next several years. And each time this occurs, I’m struck by the oddity of a lesson learned at home through a virtual window onto Times Square.

A different yet related event occurred in Ann Arbor the following year. A new Blockbuster Video opened less than a mile from our home, and within months of the grand opening, the manager was found brutally murdered inside her own store. We were horrified and worried, particularly since the police had no immediate suspects. Fortunately, the crime was solved within days, thanks to a surveillance video camera located across the street which had captured on tape a disgruntled employee entering Blockbuster at the time of the crime. It was the “across the street” part that caught my attention. I hadn’t realized the ubiquity and power of surveillance technology until then.

The strange connection between these two stories is, of course, the video camera, which every year grows smaller, cheaper, more powerful, and better networked. From the fun of webcams to the gravity of telemedicine and remote surgery, the camera connects us to distant places and people. Yet it also raises serious concerns about the fate of privacy in a world of nanny cams and ceiling bubbles.

In 1998, the New York Civil Liberties Union issued a report detailing the prevalence of surveillance cameras in New York City and found 2,397 government and private cameras on the streets of Manhattan. In recent years, however, particularly after 9/11, we have seen these numbers grow exponentially. For example, in one of the more alarming cases, NYCLU volunteers found 13 video surveillance cameras in Chinatown in 1998; a similar search in 2004 found more than 600 such cameras.[*]

And these are only the visible, outdoor cameras that volunteers were able to identify by wandering the streets. Who knows how many hidden cameras populate the homes, businesses, parking lots, and roads we pass through each day? Not that nighttime is any real obstacle, for infrared cameras provide the ability to see clearly for thousands of feet in total darkness. And then there are those eyes in the sky we call satellites. Constellations of spacecraft hurtling through space, hundreds or thousands of miles above our planet, taking snapshots at sub-meter resolution. We have one of these snapshots hanging on the wall in our living room. It’s a picture of our neighborhood taken from space. Our house is clearly visible, and upon close examination, you can pick out the Japanese Zelkova tree we planted in our front yard a few years back. These images can be personal and powerful. They provide a new way to see our world at its best and worst, as Figure 4-14 illustrates.

Of course, eyes work even better when aided by ears, and this potent combination is now being used to stem gun violence on the streets of Chicago and Los Angeles. SENTRI employs microphone surveillance to recognize the sound of a gunshot. The system can precisely locate the point of origin, turn a camera to center the shooter in the viewfinder, and make a 911 call to summon the police.[*] The key innovation of SENTRI is its ability to distinguish a gunshot from other loud noises typical in an urban environment. In fact, this type of automatic pattern recognition is critical in a world where the flow of data far exceeds the limits of human attention. We can’t possibly watch all the video or view all the satellite imagery, so we must rely on computers to identify the important events and patterns, converting physical data into symbolic information. This is an area of active research and development, as the following examples illustrate:

In Microsoft’s Easy Living project, real-time 3D cameras provide stereo-vision positioning capabilities. These vision location systems can identify individuals by analyzing combinations of silhouette, skin color, and face pattern.

In Georgia Tech’s Smart Floor, embedded sensors capture footfalls. By utilizing biometric features such as weight, gait distance, and gait period, these smart floors are able to identify and track individuals by their unique footfall signatures.

Sensors are increasingly used in homes and businesses and by law enforcement agencies to detect chemical and biological agents in our air and water.

Sensors that track velocity, force, and location are finding their way into baseball gloves, football helmets, and soccer balls. Hockey pucks are loaded with infrared sensors that enable television viewers to see 95 mph slap shots as streaking comets.

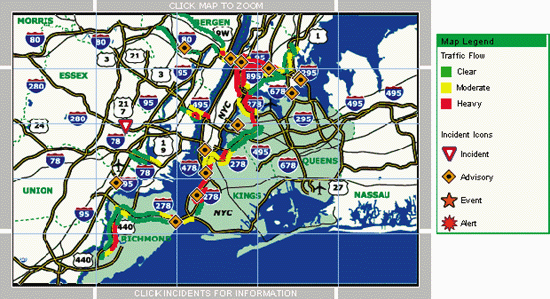

In many cities, networks of video cameras, radar devices, and road-embedded sensors provide commuters with real-time, online information about accidents, construction, road conditions, and traffic speeds, like the map in Figure 4-15.

And here’s one of my favorites. An English company is developing a healthcare toilet with embedded sensors that can monitor your diet and detect health problems:

The sensors can analyse for diabetes, for instance. Blood sugar can be monitored and the results sent via the Internet to the user’s doctor or pharmacist.[*]

Physical output becomes digital input in this transformation of waste into metadata. Sensors are coming to a loo near you. And this strange business of sensory cyberspace imports has just begun. We can hardly imagine all the weird and wonderful possibilities.
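The conversion of physical data into symbolic information is worth a quick illustration. Taking the Smart Floor example, one plausible approach is nearest-neighbor matching: compare a measured footfall against enrolled profiles and pick the closest. This is a sketch under that assumption, not a description of Georgia Tech’s actual method, and the profiles, scales, and threshold are all hypothetical values.

```python
import math

# Hypothetical enrolled profiles:
# (weight in kg, gait distance in m, gait period in s).
PROFILES = {
    "resident_a": (58.0, 0.66, 0.52),
    "resident_b": (84.0, 0.78, 0.58),
    "resident_c": (13.0, 0.30, 0.40),
}

# Rough per-feature scales so that no single feature dominates the distance.
SCALES = (10.0, 0.1, 0.05)


def distance(a, b):
    """Scaled Euclidean distance between two footfall feature tuples."""
    return math.sqrt(sum(((x - y) / s) ** 2 for x, y, s in zip(a, b, SCALES)))


def identify(footfall, max_distance=1.5):
    """Return the closest enrolled name, or None if nothing is close enough."""
    name, best = min(
        ((n, distance(footfall, p)) for n, p in PROFILES.items()),
        key=lambda item: item[1],
    )
    return name if best <= max_distance else None


print(identify((83.0, 0.80, 0.57)))  # close to one enrolled profile
print(identify((70.0, 0.70, 0.70)))  # unlike anyone enrolled
```

The threshold matters as much as the match: a system that always names its nearest neighbor will confidently misidentify every stranger who walks through the door.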

Exports

We will experience a growing trade deficit with cyberspace as we deposit far more data than we can ever withdraw, but that’s not to say that exports won’t be equally fascinating as we design new interfaces to networked information. After all, the future of interface is not just about huge flat panel monitors and tiny PDA screens. It’s about listening to your car navigation system. It’s about reading the New York Times on e-paper. And if David Rose has his way, it’s about feeling your email. Let me explain.

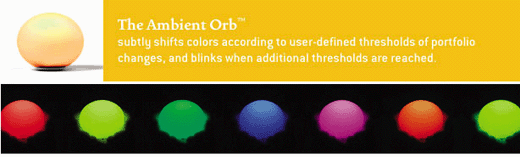

I first met David Rose in 2002 at the AIGA Experience Design Summit held at the Bellagio Hotel in Las Vegas. David is founder and chief creative officer of an MIT startup called Ambient Devices. At the conference, he captured our attention with a brilliant show-and-tell featuring a colorful array of products and prototypes. First up was a beautiful frosted glass orb that slowly transitions between thousands of colors to show changes in the weather, traffic, or the health of your stock portfolio, shown in Figure 4-16. Simply plug the orb into a power outlet, and it’s instantly up and running on a nationwide wireless network. Then, visit Ambient’s web portal to customize your orb. You can even track news, pollen forecasts, and the presence of colleagues on Instant Messenger. Designed to leverage the cognitive psychology phenomenon of pre-attentive processing, this crystal ball delivers glanceable, back-channel information. This is calm computing at its best.
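The idea of turning a number into a glanceable color is simple enough to sketch. The following assumes a linear blend from red to green driven by a portfolio’s daily percentage change, clamped to a fixed range; the color scale, the two percent limit, and the function names are my own assumptions, not Ambient’s actual mapping.

```python
RED = (255, 0, 0)
GREEN = (0, 255, 0)


def lerp_color(start, end, t):
    """Linearly interpolate between two RGB colors for t in [0, 1]."""
    return tuple(round(s + (e - s) * t) for s, e in zip(start, end))


def orb_color(change_percent, limit=2.0):
    """Map a daily change, clamped to [-limit, +limit], onto a red-to-green scale."""
    clamped = max(-limit, min(limit, change_percent))
    t = (clamped + limit) / (2 * limit)  # 0.0 at -limit, 1.0 at +limit
    return lerp_color(RED, GREEN, t)


print(orb_color(-5.0))  # a bad day: fully red
print(orb_color(0.0))   # a flat day: somewhere in between
print(orb_color(2.0))   # a good day: fully green
```

Clamping is the design choice that keeps the display calm: a market crash and a merely bad day look the same from across the room, and the details wait until you choose to look them up.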

But David didn’t stop with the orb. He had a whole table full of groovy gadgets, including an inbox-connectable pinwheel that spins faster and faster as your messages pile up (until the hurricane force compels you to check email) and a web-configurable health watch to remind people when to take their prescription medicines. It didn’t take long for us to appreciate the full potential:

Ambient’s vision is to embed information representation in everyday objects: lights, pens, watches, walls, and wearables. With Ambient, the physical environment becomes an interface to digital information rendered as subtle changes in form, movement, sound, color or light.[*]

And if these ideas intrigue you, it’s definitely worth taking a short trip upstream to the MIT Media Laboratory and the Tangible Media Group of Hiroshi Ishii.

Tangible Bits, our vision of Human Computer Interaction (HCI), seeks to realize seamless interfaces between humans, digital information, and the physical environment by giving physical form to digital information and computation, making bits directly manipulable and perceptible. The goal is to blur the boundary between our bodies and cyberspace and to turn the architectural space into an interface.[*]

Hiroshi’s group has created a whole slew of exhibits and prototypes to illustrate the possibilities of tangible user interfaces. They include:

- Bricks

Graspable user interfaces that allow direct control of virtual objects through physical handles called “bricks.”

- Lumitouch

A pair of interactive, Internet-enabled picture frames for emotional communication. When a user touches their frame, the other’s frame lights up.

- MusicBottles

Three corked bottles that serve as containers and controls for the sounds of the violin, the cello, and the piano, shown in Figure 4-17.

Unfortunately, it’s hard to convey the rich, dynamic, interactive nature of tangible bits through print media. Direct experience is ideal, but the project videos available at http://tangible.media.mit.edu/ are the next best substitute.

Meanwhile, not so far away physically or philosophically, Jeffrey Huang at Harvard’s Graduate School of Design has been exploring the intersection of the Internet and architecture. As a proof of concept in “convergent architecture,” Huang worked with the architect Muriel Waldvogel to build the Swisshouse, a new type of consulate that connects a geographically dispersed scientific community. Persistent audio-video linkages and “web on the wall” are among the innovations used to build a bridge between academic institutions in the greater Boston area and a network of universities in Switzerland. The physical building serves as a large interface for knowledge exchange and as a testbed for studying telepresence, remote brainstorming, and distance learning.

In Digital Ground, University of Michigan professor Malcolm McCullough explores the emerging relationships between physical and digital architectures:

The built environment organizes flows of people, resources, and ideas. Social infrastructure has long involved architecture, but has also more recently included network computing. The latter tends to augment rather than replace the former; architecture has acquired a digital layer.[*]

At this point of intersection, McCullough believes the study of how people deal with technology and how people deal with each other through technology will be central to success, noting “as a consequence of pervasive computing, interaction design is poised to become one of the main liberal arts of the twenty-first century.”

Convergence

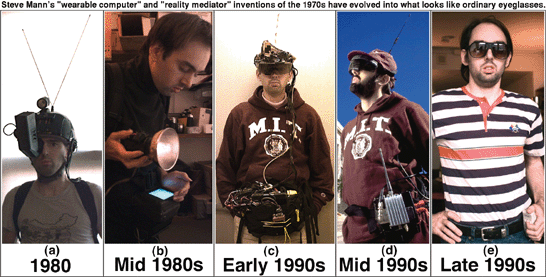

Of course, the ultimate convergence will happen even closer to home as the human body becomes an environment for computers. The first big step in this corporal merger is wearable computing, an area of active research at many of the world’s leading universities. Steve Mann, a pioneer in the field, has been working on WearComp for more than 20 years, with results shown in Figure 4-18. It now looks like his dedication is about to pay off. Wearable computing is disappearing into the fabric of life, becoming ubiquitous and invisible.

For instance, Xybernaut’s wearable computing platform is already being used by aircraft mechanics and engineers at Federal Express, Boeing, and the U.S. Department of Defense to dramatically improve mobile worker productivity. And in the consumer realm, Motorola recently announced a deal with Oakley to create wearable wireless glasses (to be used in combination with cell phones and MP3 players) and a separate venture with Burton to build Bluetooth-enabled jackets and headgear for skiers and snowboarders. Motorola’s vision is to enable “seamlessly mobile wireless communications anywhere and everywhere consumers want to be.”[*] One example from Philips is shown in Figure 4-19.

Beyond the ability to check email while skiing down a mountain, one of the more interesting applications of WearComp is the capture of life experiences. In the MyLifeBits project, a team at Microsoft is exploring the mix of hardware, software, and metadata tags necessary to store and retrieve everything we see, hear, and read. Based on success with wearable prototypes that integrate cameras, sensors, and terabyte hard drives, the researchers predict that “users will eventually be able to keep every document they read, every picture they view, all the audio they hear, and a good portion of what they see.” They expect terabyte drives to be common and inexpensive (<$300) by 2007. In addition to serving as digital scrapbooks and photo albums, these wearables will provide memory augmentation, helping us recall the name of the person we just met. This idea of personal video capture is nothing new to Steve Mann who “built the world’s first covert fully functional WearComp with display and camera concealed in ordinary eyeglasses in 1995.” His vision of inverse surveillance has recently taken on new life under the label of sousveillance, which means in French “to watch from below.” In a backlash against government and corporate surveillance, growing numbers of citizens are donning wearables to watch the watchers, a trend toward the “reciprocal transparency” that David Brin explores in The Transparent Society.[*]

But we shouldn’t get too hung up on wearable computing, which is really only a stepping stone on the path to cybernetic transformation. Despite our uneasy relationship with such terms as “cyborg” and “transhuman,” the convergence of mechanical, electronic, and biological systems is well underway. For instance, Stephen Hawking has a progressive neurodegenerative disease called ALS that has rendered him physically unable to walk or talk. And yet, he is able to live a productive and rewarding life as a husband, father, and preeminent physicist. He does so with the help of a customized wheelchair with an onboard laptop with cellular and wireless devices, a universally programmable infrared remote control for opening doors and operating consumer electronics, a speech synthesizer, and a handheld input device with one button. It is this single button and the equipment behind it that connects Stephen Hawking to his family, his colleagues, and the global Internet. With respect to his personal experience and his contributions to society, man and machine have been intertwingled. And he is not alone. Consider the following:

About 78% of Americans have had an amalgam of copper, silver, and mercury permanently implanted in their bodies. We call them fillings.

More than 60,000 people worldwide have had cochlear implants surgically embedded to compensate for damaged or non-working parts of the inner ear.

In 2004, the U.S. Food and Drug Administration approved the country’s first radiofrequency identification chip for implantation into patients in hospitals. The intent is to provide immediate positive identification. The tags are injected into the fatty tissue of the upper arm. Their estimated life is 20 years.

A company in Israel has developed an ingestible “camera-in-a-pill” which obtains color video of the gastrointestinal tract as it passes through the body. And Mini Mitter in the U.S. sells a core body temperature monitor that features ingestible capsules that communicate wirelessly with the outside world.

New video games with wireless headsets allow players to control the action with their brain waves. And scientists at Duke University have built a brain implant that lets monkeys control a robotic arm via the Internet with their thoughts.

In the realm of implants and ingestibles, fact is stranger than fiction. We’ve already stepped onto the slippery slope of corporal convergence. As technology relentlessly increases the angle of inclination, direction can be assumed, while distance and velocity remain in question. Healthcare supplies the wedge for early adoption. Who wants to fight against lifesaving technologies? Fun and fashion will carry them from hospital to high school, as ringtones and belly rings intertwingle themselves into the bodies of teenagers. The conspicuous consumption of cybernetics will drive parents crazy, though it will be what’s hidden that keeps us up at night. But eventually, we’ll reach a techno-cultural tipping point, and convergence will go mainstream. Will we be chipped at birth? Will it become illegal to live implant-free? How far will we go? Only time will tell. Our destination lies shrouded in fog, but our direction is clear. We’re on the yellow brick road to ambient findability, and we’ve got magic slippers to help us find our way.

Asylum

Do we really want to go there? This is a question we must continue to ask as we intertwingle ourselves into a future with exciting benefits but cloudy costs. Though subdermal RFID chips were approved on the basis of their lifesaving potential, the FDA raised serious questions about their safety including electrical hazards, MRI incompatibility, adverse tissue reaction, and migration of the implanted transponder. In their guidance document, the FDA also detailed the risks of compromised information security, noting that transmissions of medical and financial data could be intercepted, and that the devices could be used to track an individual’s movements and location. I don’t know about you, but I plan to wait out the beta test.

But even as we spurn the bleeding edge, these technologies seep into every nook and cranny of our lives. I recently stumbled across a telling story about ubiquitous computing at a leading psychiatric hospital in Manhattan.[*] Based on the belief that allowing patients to talk on the phone may speed their recovery from depression or hasten their emergence from psychosis, doctors had approved the use of cell phones and other wireless devices. The patients loved this new freedom, and the ward was soon bustling with cell phones, laptops, Palm Pilots, and BlackBerries. As you might suspect, the results were mixed. The enhanced personal communication appeared to have real clinical benefit for many patients, but on the other hand, nurses found themselves constantly recharging batteries. In a place where people may use power cords to hang themselves, wireless has special meaning. However, the most serious problem was disruption. As one doctor noted:

There was a constant ringing on the unit. All these different ring tones. Some people would put them on vibrate mode and sneak them into group and then want to walk out to answer their calls. Or they would be talking to their friends and would ignore the nurses.

The final straw was the new camera phones, which threatened to eliminate any semblance of privacy. The intertwingling of inside and outside had spiraled out of control. The very essence of the asylum as a safe haven and protective shelter was under attack. The decision was made. Laptops and Palm Pilots were permitted, but no more cell phones. At first, there were protests as patients argued their right to communicate, but eventually the right to privacy and the virtues of peaceful sanctuary prevailed. Of course, as the lines between cell phones and Palm Pilots blur, the debate may be revisited. Said one doctor:

Is it over? I don’t know. Here at the institute, our enthusiasm for wireless connectivity has been tempered by reality. We have reclaimed a fragment of asylum. But my guess is that we will face a next challenge by the wireless world, and that we will continually have to work to define our relation to it. My guess is that the battle has just begun.

This story resonates in the outside world. We love our cell phones but not the disruption. We love our email but not the spam. Our enthusiasm for ubiquitous computing will undoubtedly be tempered by reality. Our future will be at least as messy as our present. But we will muddle through as usual, satisficing under conditions of bounded rationality. And if we are lucky, and if we make good decisions about how to intertwingle our lives with technology, perhaps we too can reclaim a fragment of asylum.

[*] The Panopticon is a type of prison designed by the philosopher Jeremy Bentham. The concept of the design is to allow an observer to observe all prisoners without the prisoners being able to tell if they are being observed or not, thus conveying a “sentiment of an invisible omniscience.” From http://en.wikipedia.org/wiki/Panopticon.

[*] Smart Mobs by Howard Rheingold. Perseus (2002), p. 13.

[†] Rheingold, p. 179.

[*] Georgia Tech professor Thad Starner invented a four-inch strip of Velcro that sticks a Twiddler to a shoulder bag, enabling conversion from storage to use in two seconds, the optimal speed of access based upon his usability research. Are you ready for geek chic?

[*] The Cluetrain Manifesto by Rick Levine, Christopher Locke, Doc Searls, and David Weinberger. Perseus (2000), p. 43.

[†] “Design Engaged: The Final Programme” by Adam Greenfield. From http://v-2.org/displayArticle.php?article_num=908.

[*] “Location Systems for Ubiquitous Computing” by Jeffrey Hightower and Gaetano Borriello. Available at http://www.intel-research.net/Publications/Seattle/062120021154_45.pdf.

[*] “Information in Places” by J.C. Spohrer. Available at http://www.research.ibm.com/journal/sj/384/spohrer.pdf.

[*] The Degree Confluence Project, http://www.confluence.org/.

[*] Comment by George Brett during a discussion on Ed Vielmetti’s Vacuum mailing list.

[†] “GPS Technology and Alzheimer’s Disease,” http://alzheimers.upmc.com/GPS.htm.

[‡] GM’s OnStar pioneered the concept of smart cars with GPS and cellular communications to support wayfinding, remote diagnostics, emergency services, and stolen vehicle recovery. Networkcar’s innovation has been to eliminate the need for professional dispatchers by placing the information directly into the consumers’ hands via the Web. Companies (such as trucking firms and car rental agencies) can monitor their vehicle fleets. Individuals can monitor their own cars.

[*] “Cell Phones Ring Knell on Privacy.” Chicago Tribune, January 1, 2005.

[†] “Tracking Your Children With GPS: Do You Have the Right?” by Stephen N. Roberts. Available at http://wireless.sys-con.com/read/41433.htm.

[*] “All Watched Over by Machines of Loving Grace” by Adam Greenfield. Available at http://www.boxesandarrows.com/archives/all_watched_over_by_machines_of_loving_grace.php.

[†] “Active and Passive RFID: Two Distinct, But Complementary Technologies for Real-Time Supply Chain Visibility,” http://www.autoid.org/2002_Documents/sc31_wg4/docs_501-520/520_18000-7_WhitePaper.pdf.

[*] “When Blobjects Rule the Earth” by Bruce Sterling. SIGGRAPH 2004 Keynote. Available from http://www.boingboing.net/images/blobjects.htm.

[†] Sterling, http://www.boingboing.net/images/blobjects.htm.

[*] Surveillance Camera Project, http://www.nyclu.org/surveillance_camera_main.html.

[*] “Waiting for the Gun” by Eric Mankin. From http://www.usc.edu/uscnews/stories/10810.html.

[*] Twyford Bathrooms, http://www.twyfordbathrooms.com/.

[*] Ambient Devices, http://www.ambientdevices.com/.

[*] Tangible Media Group at MIT, http://tangible.media.mit.edu/.

[*] Digital Ground by Malcolm McCullough. MIT Press (2004), p. 47.

[*] “Motorola, Oakley Team to Make Wearable Wireless” by Keith Regan. E-Commerce Times, January 21, 2005.

[*] The Transparent Society by David Brin. Perseus (1998).

[*] “In a Mental Institute, the Call of the Outside” by David Hellerstein, M.D. New York Times, January 27, 2004.
