Chapter 4. Mitigation and Recovery

We’ve talked about scaling incident management, and using component responders and SoS responders to help manage incidents as your company scales. We’ve also covered the characteristics of a successful incident response organization, and discussed managing risk and preventing on-call burnout. Here, we talk about recovery after an incident has occurred. We’ll start by focusing on urgent mitigations.

Urgent Mitigations

Previously, we encouraged you to “stop the bleeding” during a service incident. We also established that recovery includes the urgent mitigations1 needed in order to avoid impact or prevent growth in impact severity. Let’s touch on what that means and some ways to make mitigation easier during urgent circumstances.

Imagine that your service is having a bad time. The outage has begun, it’s been detected, it’s causing user impact, and you’re at the helm. Your first priority should always be to stop or lessen the user impact, not to figure out what’s causing the issue. Imagine you’re in a house and the roof begins to leak. The first thing you’re likely to do is place a bucket under the dripping water to prevent further water damage, before you grab your roofing supplies and head upstairs to figure out what’s causing the leak. (As we’ll find out later, if the roofing failures are the root cause, the rain is the trigger.) The bucket reduces the impact until the roof is fixed and the sky clears. To stop or lessen user impact during a service breakage, you’ll want to have some buckets ready to go. We refer to these metaphorical buckets as generic mitigations.

A generic mitigation is an action that you can take to reduce the impact of a wide variety of outages while you’re figuring out what needs to be fixed.

The mitigations that are most applicable to your service vary, depending on the pathways by which your users can be impacted. Some of the basic building blocks are the ability to roll back a binary, drain or relocate traffic, and add capacity. These Band-Aids are intended to buy you and your service time so that you can figure out a meaningful fix which can fully resolve the underlying issues. In other words, they fix the symptoms of the outage rather than the causes. You shouldn’t have to fully understand your outage to use a generic mitigation.
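To show how little understanding a generic mitigation needs, here is a minimal sketch of one: choosing a target for a binary rollback. The `Release` record and the `rollback_target` helper are hypothetical illustrations, not a real deployment API. The helper assumes the release history is ordered oldest to newest and that each release carries a "known good" flag.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Release:
    version: str
    known_good: bool  # True once the release has served production traffic without issues


def rollback_target(history: List[Release], current: str) -> Optional[str]:
    """Return the newest known-good version deployed before `current`.

    `history` is ordered oldest to newest. The helper needs no understanding of
    *why* `current` is misbehaving; it only identifies the change to undo.
    """
    versions = [release.version for release in history]
    position = versions.index(current)  # raises ValueError if `current` is unknown
    for release in reversed(history[:position]):
        if release.known_good:
            return release.version
    return None  # nothing safe to roll back to; reach for another mitigation
```

Like a bucket, this only treats the symptom: it buys time, and the actual fix still has to follow.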

Consider doing the research and investing in the development of some quick-fix buttons (the metaphorical buckets). Remember that a bucket might be a simple tool, but it can still be used improperly. Therefore, to use generic mitigations correctly, it’s important to practice them during regular resilience testing.

Reducing the Impact of Incidents

Besides generic mitigations, which you use first to mitigate an urgent situation or an incident, you’ll need to think about how to reduce the impact of incidents in the long run. “Incident” is an internal term. In reality, your customers don’t care about incidents or how many of them you have; what they do care about is reliability. To meet your users’ expectations and achieve the desired level of reliability, you need to design and run reliable systems. To do that, align your actions with each stage of the incident management lifecycle mentioned previously: preparedness, response, and recovery. Think about what you can do before, during, and after an incident to improve your systems.

While it’s very difficult to measure customer trust, there are some proxies you can use to measure how well you’re providing a reliable customer experience. We call the measurement of customer experience a service-level indicator (SLI). An SLI tells you how well your service is doing at any moment in time. Is it performing acceptably, or not?
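A common way to express an SLI is as a ratio: good events divided by valid events. The sketch below assumes a request-based SLI where a request is "good" if it did not fail with a 5xx status and finished within a latency threshold. The `Request` record, the decision to exclude 4xx responses as user errors, and the 300 ms default are all illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    status: int        # HTTP status code returned to the caller
    latency_ms: float  # server-side latency in milliseconds


def request_sli(requests: List[Request], latency_threshold_ms: float = 300.0) -> float:
    """Fraction of valid requests that were good (succeeded and were fast enough)."""
    # Assumption: 4xx responses are caller errors, so they are excluded from "valid".
    valid = [r for r in requests if not 400 <= r.status < 500]
    if not valid:
        return 1.0  # no valid traffic in this interval: treat as meeting expectations
    good = [r for r in valid if r.status < 500 and r.latency_ms <= latency_threshold_ms]
    return len(good) / len(valid)
```

Whether an interval with no traffic counts as good (as here) or is skipped entirely is a design choice worth making explicitly.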

In this context, the customer can be an end user (a human or a system, such as an API client) or another internal service. For example, a core service might serve another internal service, which in turn serves the end user. This means you can be only as reliable as your critical dependencies (i.e., hard dependencies, or dependencies whose failure cannot be mitigated: if they fail, you fail). So if your customer-facing services depend on internal services, those internal services need to provide a higher level of reliability than the level you promise to your customers.
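The arithmetic behind "only as reliable as your critical dependencies" can be sketched as a product of availabilities. This assumes failures are independent and that every listed dependency is a hard dependency; correlated failures would make the real number worse. The function name is hypothetical.

```python
import math
from typing import Iterable


def max_availability(own: float, hard_dependencies: Iterable[float]) -> float:
    """Upper bound on availability when the service needs every hard dependency to be up.

    Assumes independent failures. Soft dependencies (ones you can mitigate,
    for example with a cache or a fallback) should not be included.
    """
    return own * math.prod(hard_dependencies)
```

For example, `max_availability(0.9999, [0.999, 0.999])` is about 0.9979: two 99.9% hard dependencies cap the service near 99.8% even though its own code is far more reliable.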

The reliability target for an SLI is called a service-level objective (SLO). An SLO aggregates the target over time: it says that during a certain period, this is your target and this is how well you are performing against it (often measured as a percentage).2
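As a minimal sketch of how an SLO turns into an error budget, the hypothetical `slo_report` below compares good and valid event counts aggregated over the SLO window (for example, 30 days) against a target. It assumes a request-based SLO; time-based SLOs would count good minutes instead.

```python
def slo_report(good: int, valid: int, slo_target: float) -> dict:
    """Compare an SLI aggregated over the SLO window with its target."""
    if valid <= 0 or not 0 < slo_target < 1:
        raise ValueError("need valid > 0 and 0 < slo_target < 1")
    sli = good / valid
    allowed_bad = (1 - slo_target) * valid  # the error budget, measured in events
    actual_bad = valid - good
    return {
        "sli": sli,
        "meets_slo": sli >= slo_target,
        # 1.0 = budget untouched, 0.0 = exhausted, negative = SLO missed
        "error_budget_remaining": 1 - actual_bad / allowed_bad,
    }
```

With 1,000,000 valid requests and a 99.9% target, the budget is 1,000 bad requests; 600 failures leave roughly 40% of it.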

Most of you are probably familiar with service-level agreements (SLAs). An SLA defines what you’ve promised to provide your customers; that is, what you’re willing to do (e.g., refund money) if you fail to meet your objectives. To achieve this, you need your SLOs—your targets—to be more restrictive than your SLAs.3

The tool we use to examine and measure our users’ happiness is called the user journey. User journeys are textual statements written to represent the user perspective. User journeys explore how your users are interacting with your service to achieve a set of goals. The most important user journey is the critical user journey (CUJ).4

Once you’ve defined the targets that are important to you and your users or customers, you can start to think about what happens when you fail to meet those targets.

Calculating the Impact of Incidents

Incidents consume your reliability target. How much they consume depends on how many failures you have, how long each one lasts, and its blast radius, or “size.” So, to reduce the impact of incidents, you first need to be able to measure it. Let’s look at how to quantify the impact of an incident.

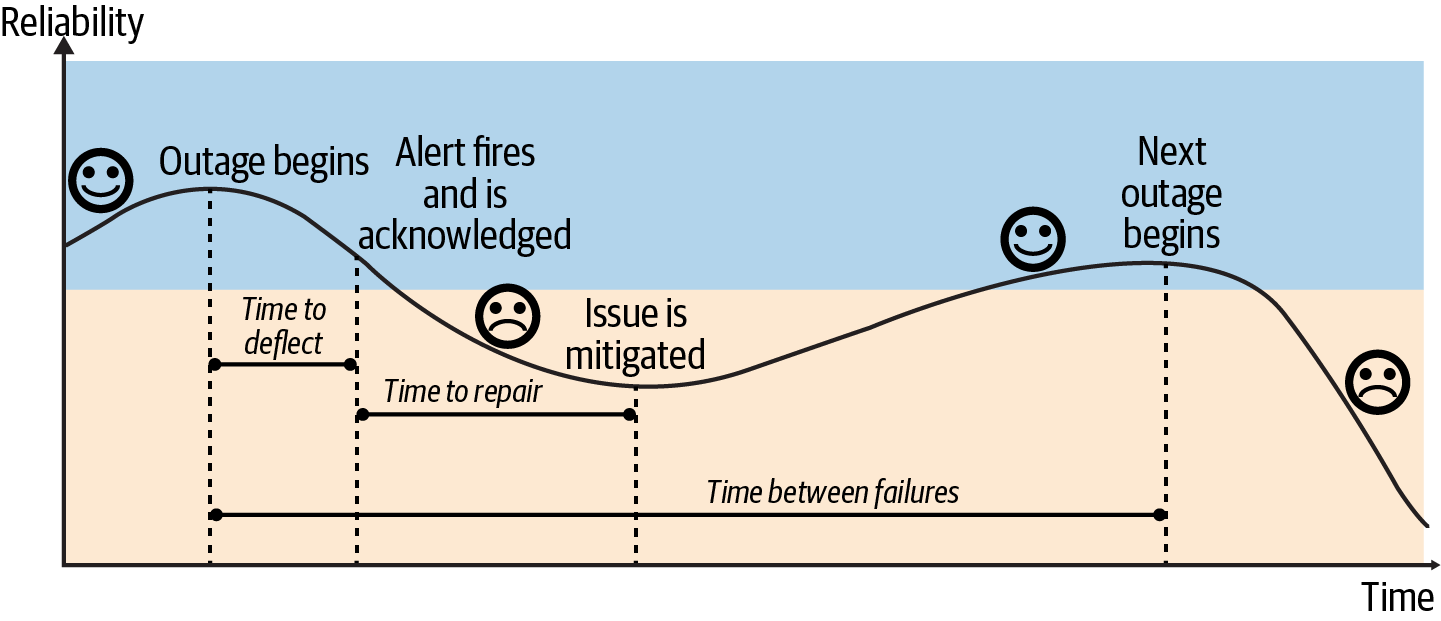

Figure 4-1 demonstrates that to measure the impact, you calculate the time that you are not reliable. This is the time it takes for you to detect that there is an impact, plus the time it takes to repair (mitigate) it. You then multiply this by the number of incidents, which is determined by the frequency of incidents.

Figure 4-1. Outage lifecycle

The key metrics are time to detect, time to repair, and time between failures:

- Time to detect (TTD) is the amount of time from when an outage begins to when a human is notified or alerted that an issue is occurring.

- Time to repair (TTR) begins when someone is alerted to the problem and ends when the problem has been mitigated. The key word here is mitigated! This doesn’t mean the time it took you to submit code to fix the problem. It’s the time it took the responder to mitigate the customer impact; for example, by shifting traffic to another region.

- Time between failures (TBF) is the time from the beginning of one incident to the beginning of the next incident of the same type.
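To make these definitions concrete, the sketch below derives TTD and TTR from three timestamps per incident, and TBF from the start times of consecutive incidents. The `Incident` class and its field names are assumptions for illustration, and `time_between_failures` assumes every incident passed in is of the same type.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List


@dataclass
class Incident:
    started: datetime    # when the outage actually began (often reconstructed afterward)
    detected: datetime   # when a human was notified or alerted
    mitigated: datetime  # when customer impact stopped, not when the code fix landed

    @property
    def ttd(self) -> timedelta:
        return self.detected - self.started

    @property
    def ttr(self) -> timedelta:
        return self.mitigated - self.detected


def time_between_failures(incidents: List[Incident]) -> List[timedelta]:
    """Gaps between the starts of consecutive incidents of the same type."""
    starts = sorted(incident.started for incident in incidents)
    return [later - earlier for earlier, later in zip(starts, starts[1:])]
```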

Reducing customer impact means working on all four axes in the following equation (Figure 4-2): reducing the time to detect, the time to repair, and the impact of each incident, and increasing the time between failures.

To reduce the impact of incidents and enable systems to recover to a known state, you need a combination of technology and “human” aspects, such as processes and enablement. At Google, we found that once a human is involved, the outage will last at least 20 to 30 minutes. In general, automation and self-healing systems are a great strategy, since both help reduce the time to detect and time to repair.

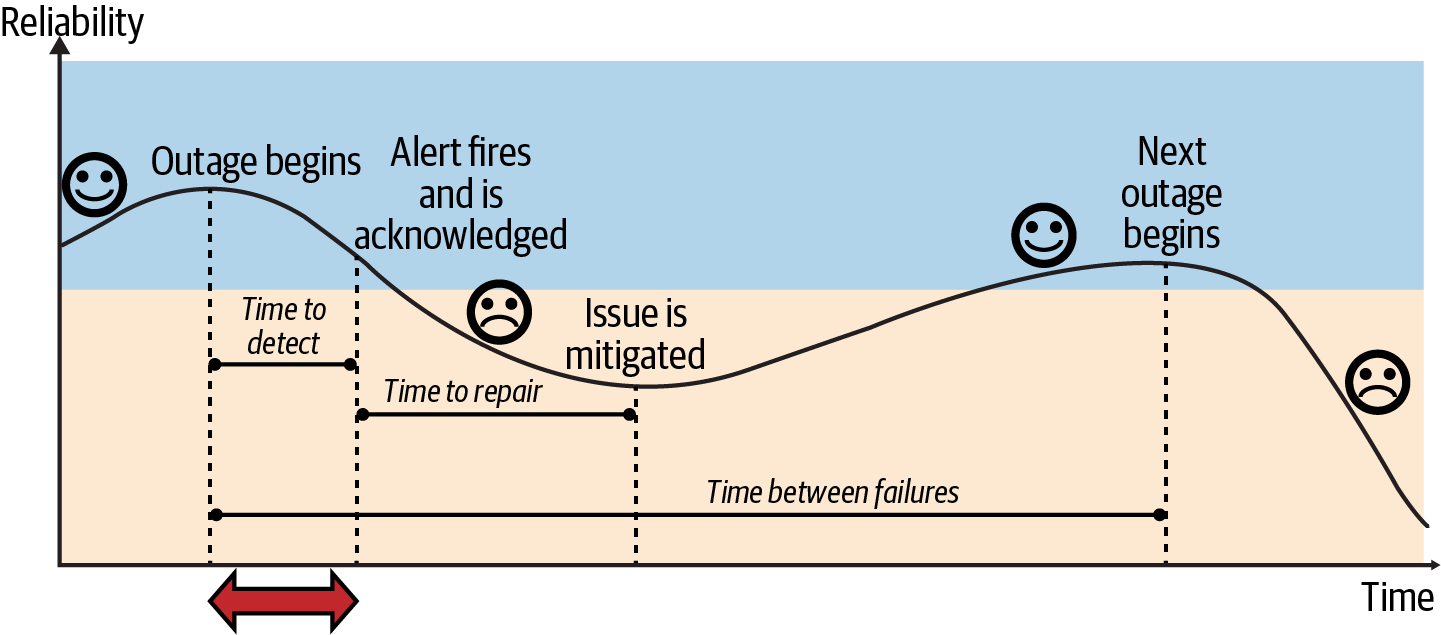

Figure 4-2.
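As a back-of-the-envelope version of the relationship described above, the hypothetical function below multiplies how long each incident hurts users (TTD + TTR) by the share of users affected and by the number of incidents expected in a period (period / TBF). It is a sketch under simplifying assumptions (every incident has the same TTD, TTR, and blast radius), and the notation in Figure 4-2 may differ.

```python
def expected_bad_minutes(
    ttd_min: float,
    ttr_min: float,
    tbf_days: float,
    impact_fraction: float,
    period_days: float = 30.0,
) -> float:
    """Approximate user-weighted minutes of unreliability per period.

    (ttd_min + ttr_min): how long each incident affects users
    impact_fraction:     share of users or requests affected (0.0 to 1.0)
    period_days / tbf_days: how many incidents to expect in the period
    """
    incidents = period_days / tbf_days
    return (ttd_min + ttr_min) * impact_fraction * incidents
```

With a 10-minute TTD, a 50-minute TTR, one incident every 15 days, and 25% of users affected, `expected_bad_minutes(10, 50, 15, 0.25)` gives 30.0 bad minutes per 30 days, which is roughly comparable to the 43.2 minutes a 99.9% time-based target allows over 30 days. Halving TTR to 25 minutes drops the result to 17.5, which shows why every axis of the equation matters.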

Be mindful of how you improve these metrics, though. Simply lowering your alerting threshold can lead to false positives and noise, and relying too heavily on quick fixes implemented through automation might reduce the time to repair while leaving the underlying issue unaddressed. In the next section, we share several strategies that can help reduce the time to detect, time to repair, and frequency of your incidents in a more strategic way.

Reducing the Time to Detect

One way to reduce the impact of incidents is to reduce the time to detect the incident (Figure 4-3). As part of drafting your SLO (your reliability target), you perform a risk analysis and figure out what you need to prioritize, and then you identify what can prevent you from achieving your SLO; this can also help you reduce the time to detect an incident. In addition, you can do the following to minimize the time to detect:

- Align your SLIs, your indicators for customer happiness, as closely as you can with the expectations of your users, who can be real people or other services. Furthermore, align your alerts with your SLOs (i.e., your targets), and review them periodically to make sure they still represent your users’ happiness.

- Use fresh signal data. By this, we mean that you should measure your quality alerts using different measurement strategies, as we discussed earlier. It’s important to choose what works best to get the data: streams, logs, or batch processing. In that regard, it’s also important to find the right balance between alerting too quickly, which can cause noise, and alerting too slowly, which may impact your users. (Note that noisy alerts are one of the most common complaints you hear from Ops teams, be they traditional DevOps teams or SREs.)

- Use effective alerts to avoid alert fatigue. Use pages when you need immediate action. Only the right responders (the specific team and owners) should get the alerts. (Note that another common complaint is having alerts that are not actionable.)

However, a follow-up question to this is: “If you page only on things that require immediate action, what do you do with the rest of the issues?” One solution is to use different tools and platforms for different purposes. Maybe the “right platform” is a ticketing system or a dashboard, or maybe you only need the metric for troubleshooting and debugging in a “pull” mode.
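One way to balance alerting speed against noise, and to decide between a page, a ticket, or a dashboard, is to alert on how fast the error budget is burning. The sketch below uses multiwindow burn-rate rules: each rule fires only if both a long window (which filters out short blips) and a short window (which lets the alert clear soon after mitigation) exceed the threshold. The window names and thresholds are assumed example values for a 30-day SLO window, not prescriptions.

```python
from enum import Enum


class Route(Enum):
    PAGE = "page"      # a human must act now
    TICKET = "ticket"  # needs attention within days
    NONE = "none"      # visible on dashboards only ("pull" mode)


# Assumed example thresholds for a 30-day SLO window.
PAGE_FAST_RATE = 14.4  # about 2% of the budget spent in 1 hour
PAGE_SLOW_RATE = 6.0   # about 5% of the budget spent in 6 hours
TICKET_RATE = 1.0      # about 10% of the budget spent in 3 days


def burn_rate(error_ratio: float, slo_target: float) -> float:
    """Budget consumption speed; 1.0 spends the budget exactly over the SLO window."""
    return error_ratio / (1 - slo_target)


def route_alert(rates: dict) -> Route:
    """`rates` maps window names ('5m', '30m', '1h', '6h', '3d') to burn rates."""
    if rates["1h"] > PAGE_FAST_RATE and rates["5m"] > PAGE_FAST_RATE:
        return Route.PAGE
    if rates["6h"] > PAGE_SLOW_RATE and rates["30m"] > PAGE_SLOW_RATE:
        return Route.PAGE
    if rates["3d"] > TICKET_RATE and rates["6h"] > TICKET_RATE:
        return Route.TICKET
    return Route.NONE
```

A five-minute spike alone does not page because the one-hour window has to agree, which is one concrete way to trade a little detection speed for much less noise.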

Figure 4-3. Outage lifecycle: time to detect

Reducing the Time to Repair

We discussed reducing the time to detect as one way to reduce the impact of incidents. Another way to reduce the impact is by reducing the time to repair (Figure 4-4). Reducing the time to repair is mostly about the “human side.” Using incident management protocols and organizing an incident management response reduces the ambiguity of incident management and the time to mitigate the impact on your users. Beyond that, you want to train the responders, have clear procedures and playbooks, and reduce the stress around on-call. Let’s look at these strategies in detail.

Figure 4-4. Outage lifecycle: time to repair

Train the responders

Unprepared on-callers lead to longer repair times. Consider conducting on-call training sessions on topics such as disaster recovery testing, or running the Wheel of Misfortune exercise we mentioned earlier. Another technique is a mentored ramp-up to on-call. Having on-callers work in pairs (“pair on call”), or having an apprenticeship where the mentee joins an experienced on-caller during their shifts (“shadowing”), can be helpful in growing confidence in new teammates. Remember that on-call can be stressful. Having clear incident management processes can reduce that stress as it eliminates any ambiguity and clarifies the actions that are needed.5

Establish an organized incident response procedure

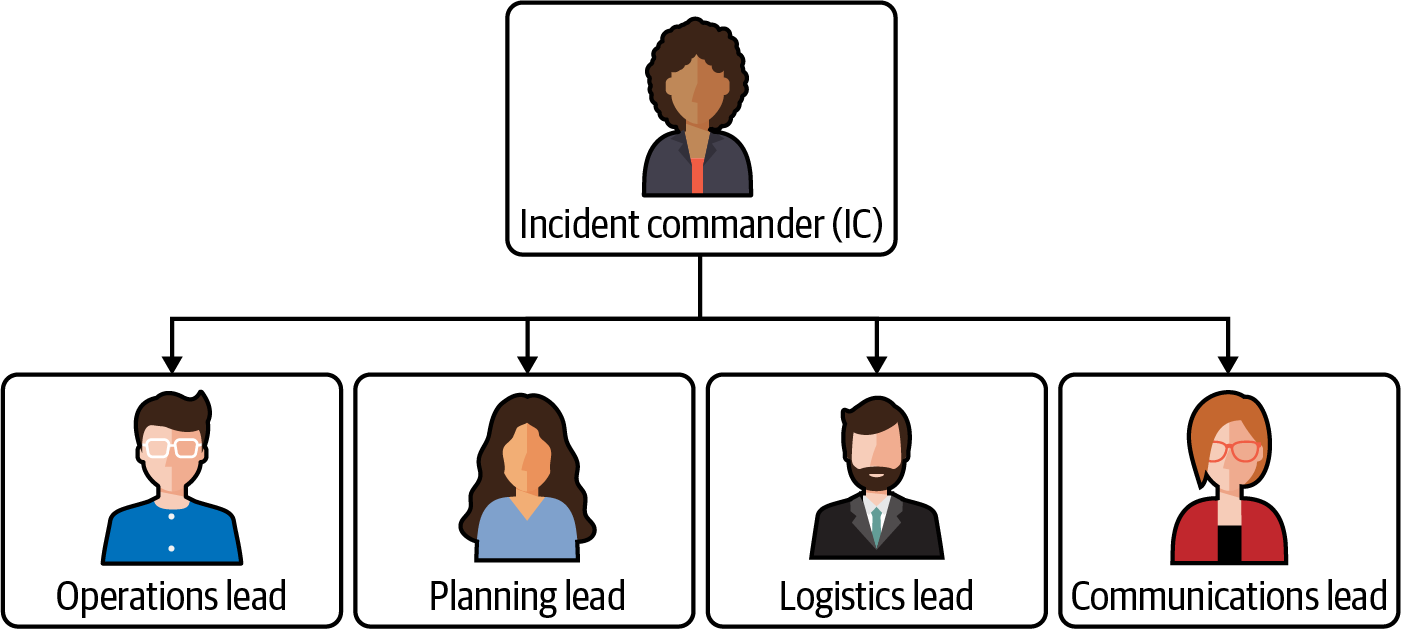

There are some common problems in incident management. For example, lack of accountability, poor communication, missing hierarchy, and freelancing or “heroes” can result in longer resolution times, add stress for on-callers and responders, and impact your customers. To address this, we recommend organizing a response by establishing a hierarchical structure with clear roles, tasks, and communication channels. This maintains a clear line of command and ensures that everyone knows their role.

At Google, we use IMAG (Incident Management at Google), a flexible framework based on the Incident Command System (ICS) used by firefighters and medics. IMAG teaches you how to organize an emergency response by establishing a hierarchical structure with clear roles, tasks, and communication channels (Figure 4-5). It establishes a standard, consistent way to handle emergencies and organize an effective response.6

Figure 4-5. An example ICS hierarchy

The IMAG protocol provides a framework for those working to resolve an incident, enabling the emergency response team to be self-organized and efficient by ensuring communication between responders and relevant stakeholders, keeping control over the incident response, and helping coordinate the response effort. It asserts that the incident commander (IC) is responsible for coordinating the response and delegating responsibilities, while everyone else reports to the IC. Each person has a specific, defined role—for example, the operations lead is responsible for fixing the issue, and the communications lead is responsible for handling communication.

By using such a protocol, you can reduce ambiguity, make it clear that it’s a team effort, and reduce the time to repair.7

Establish clear on-call policies and processes

We recommend documenting your incident response and on-call policies, as well as your emergency response processes, both during and after an outage. This includes a clear escalation path and the assignment of responsibilities during an outage. This reduces the ambiguity and stress associated with handling outages.
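A clear escalation path is easier to review and agree on when it is written down as data. The sketch below uses a hypothetical policy (the roles and the 15-minute steps are assumptions) and a helper, `who_to_page`, that returns everyone who should have been notified after a page has gone unacknowledged for a given number of minutes.

```python
from typing import List, Tuple

# Hypothetical escalation policy: (minutes without acknowledgement, who to notify).
ESCALATION_POLICY: List[Tuple[int, str]] = [
    (0, "primary on-call"),
    (15, "secondary on-call"),
    (30, "team lead"),
]


def who_to_page(minutes_unacknowledged: int,
                policy: List[Tuple[int, str]] = ESCALATION_POLICY) -> List[str]:
    """Everyone who should have been notified by now, in escalation order."""
    return [who for after, who in policy if minutes_unacknowledged >= after]
```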

Write useful runbooks/playbooks

Documentation is important, since it helps turn on-the-job experience into knowledge available to all teammates regardless of tenure. By prioritizing and setting time aside for documentation, and by creating playbooks and policies that capture procedures, you can have teammates who readily recognize how an incident might present itself, which is a valuable advantage. Playbooks don’t have to be robust at first; start simple to provide a clear starting point, and then iterate. A good rule of thumb is to follow Google’s see it, fix it approach (i.e., solve problems as you uncover them) and to let new team members update those playbooks as part of their onboarding.

Make playbook development a key postmortem action item, and recognize it as a positive team contribution from a performance management perspective. This usually requires leadership prioritization and allocating the necessary resources as part of the development sprint.

Reduce responder fatigue

As mentioned in Chapter 2, the mental cost of responder fatigue is well documented. Furthermore, if your responders are exhausted, this will affect their ability to resolve issues. You need to make sure shifts are balanced, and if they aren’t, to use data to help you understand why and reduce the toil.8
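One simple way to use data here is to count incidents per shift and flag the shifts that exceed an agreed budget. The shift IDs and the default budget of two incidents per shift in the sketch below are illustrative assumptions; the flagged shifts are the place to start looking for noisy alerts and toil.

```python
from collections import Counter
from typing import Dict, Iterable


def overloaded_shifts(incident_shift_ids: Iterable[str], max_per_shift: int = 2) -> Dict[str, int]:
    """Return {shift_id: incident_count} for shifts above the assumed incident budget.

    `incident_shift_ids` holds one entry per incident, naming the shift that handled it.
    """
    counts = Counter(incident_shift_ids)
    return {shift: n for shift, n in counts.items() if n > max_per_shift}
```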

Invest in data collection and observability

You want to be able to make decisions based on data, so a lack of monitoring or observability is an antipattern. If you cannot see, you won’t know where you are going. Therefore, encourage a culture of measurement in the organization, collecting metrics that are close to the customer experience, and measure how well you are doing against your targets and your error budget burn rate so that you can react and adjust priorities. Also, measure the team’s toil and review your SLIs and SLOs periodically.

You want to have as much quality data as you can. It’s especially important to measure things as close to the customer experience as possible; this helps you troubleshoot and debug the problem. Collect application and business metrics, in order to have dashboards and visualization focused on the customer experience and critical user journeys. This means having dashboards that aim for a specific audience with specific goals in mind. A manager’s view of SLOs is very different from a dashboard that needs to be used for troubleshooting an incident.

As you can see, there are several things you can do to reduce the time to repair and minimize the impact of incidents. Now let’s look at increasing the time between failures as another way to reduce the impact of incidents.

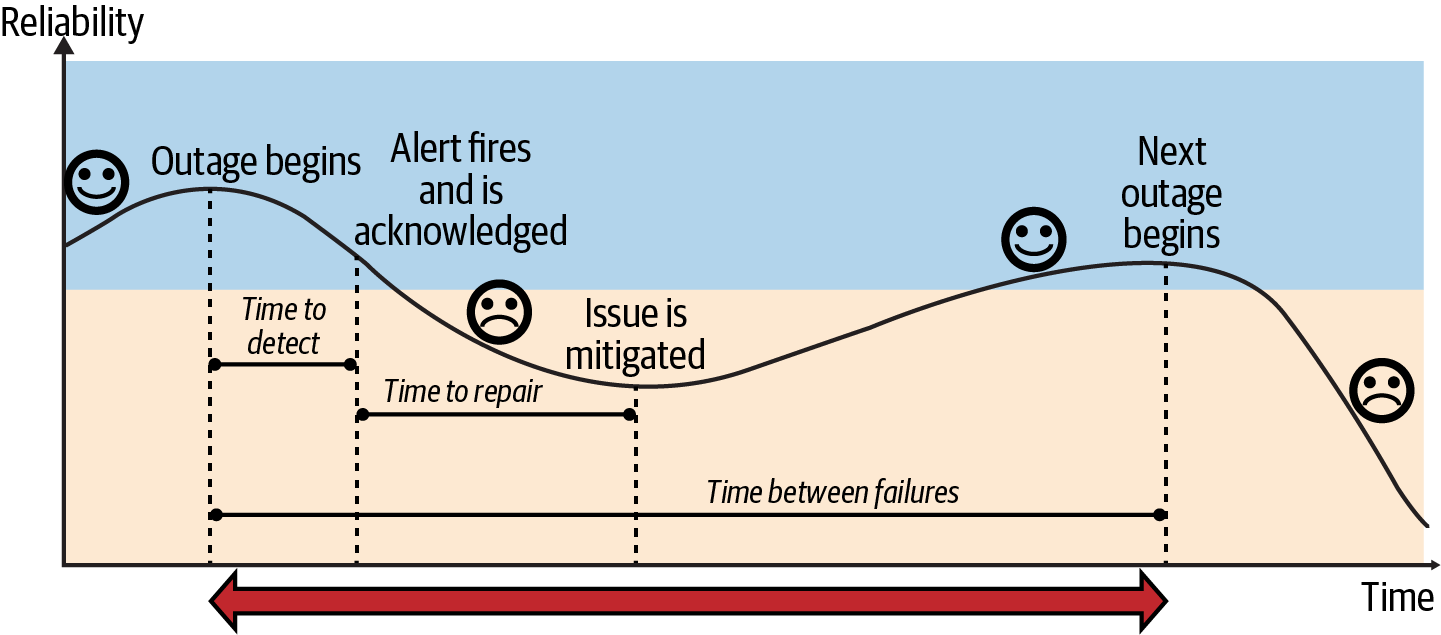

Increasing the Time Between Failures

To increase the time between failures and reduce the number of failures, you can refactor the architecture and address the points of failure that were identified during risk analysis and process improvement (Figure 4-6). You can also do several additional things to increase the TBF.

Figure 4-6. Outage lifecycle: time between failures

Avoid antipatterns

We mentioned several antipatterns throughout this report, including a lack of observability and positive feedback loops, which can overload the system and cause cascading failures such as crashes. You want to avoid these antipatterns.
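Aggressive client retries are a common source of such feedback loops: a backend slows down, clients retry immediately, and the extra load slows it down further. A widely used countermeasure, swapped in here as an illustration rather than taken from the text, is capped exponential backoff with full jitter. In the sketch, which errors count as retryable and the delay parameters are assumptions, and `sleep` is injectable so the behavior can be tested.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_backoff(
    fn: Callable[[], T],
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    max_attempts: int = 5,
    base_delay_s: float = 0.1,
    max_delay_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying transient errors with capped exponential backoff and full jitter.

    The attempt cap bounds the extra load one caller can add, and the random
    delay keeps many clients from retrying in lockstep against a struggling backend.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error instead of retrying forever
            ceiling = min(max_delay_s, base_delay_s * 2 ** attempt)
            sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")
```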

Spread risks

You should spread the risks by having redundancies, decoupling responsibilities, avoiding single points of failure and global changes, and adopting advanced deployment strategies. Consider progressive and canary rollouts over the course of hours, days, or weeks, which allow you to reduce risk and identify an issue before all your users are affected. Similarly, it’s good to have automated testing, gradual rollouts, and automatic rollbacks to catch any issues early on. Find the issues before they find you; achieve this by practicing chaos engineering and introducing fault injection and automated disaster recovery testing such as DiRT (see Chapter 2).
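Progressive rollouts with automatic rollback rely on a decision rule at each stage. The sketch below compares the canary’s error rate with a baseline still running the previous version and returns "wait", "rollback", or "advance". The function name, the 1.5× ratio, the absolute tolerance, and the minimum traffic are all illustrative assumptions; real canary analysis usually looks at several metrics, not only errors.

```python
def canary_decision(
    canary_errors: int,
    canary_requests: int,
    baseline_errors: int,
    baseline_requests: int,
    max_ratio: float = 1.5,
    tolerance: float = 0.001,
    min_requests: int = 1000,
) -> str:
    """Return "wait", "rollback", or "advance" for one stage of a progressive rollout."""
    if canary_requests < min_requests or baseline_requests < min_requests:
        return "wait"  # too little traffic for a meaningful comparison
    canary_rate = canary_errors / canary_requests
    baseline_rate = baseline_errors / baseline_requests
    # The absolute tolerance keeps a near-zero baseline from triggering rollbacks on noise.
    if canary_rate > max_ratio * baseline_rate + tolerance:
        return "rollback"
    return "advance"
```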

Adopt dev practices

You can also adopt dev practices that foster a culture of quality, such as code review and robust testing built into your Continuous Integration/Continuous Delivery (CI/CD) pipelines. CI/CD saves engineering time and reduces customer impact, allowing you to deploy with confidence.

Design with reliability in mind

In SRE, we have a saying: “Hope is not a strategy.” When it comes to failures, it’s not a question of if, but when. Therefore, design with reliability in mind from the very beginning, and build robust architectures that can accommodate failures. It’s important to understand how your system deals with failure by asking yourself the following questions:

- What type of failure is my system resilient to?

- Can it tolerate unexpected, single-instance failure or a reboot?

- How will it deal with zonal or regional failures?

After you are aware of the risks and their potential blast radius, you can move to risk mitigation (as you do during risk analysis). For example, to mitigate single-instance issues, you should use persistent disks and automate provisioning, and, of course, you should back up your data. To mitigate zonal and regional failures, you can distribute resources across regions and zones and implement load balancing. Another thing you can do is scale horizontally. For example, if you decouple your monolith into microservices, it’s easier to scale them independently (“Do one thing and do it well”). Scaling horizontally can also mean scaling geographically, such as having multiple data centers to take advantage of elasticity. We recommend avoiding manual configuration and special hardware whenever possible.

Graceful degradation

It’s important to build graceful degradation into your architecture. Consider degradation strategies such as throttling and load shedding. Ask yourself: If I can’t serve all users with all features, can I serve all users with minimal functionality? Can I throttle user traffic and drop expensive requests? Of course, what counts as an acceptable degradation is highly dependent on the service and user journey. There is a big difference between returning only x products and returning a checking account balance that is out of date. As a rule of thumb, however, degraded service is better than no service.9
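Load shedding can be sketched as an admission decision that gets stricter as utilization rises. In the hypothetical `admit` function below, everything is served under normal load, expensive non-essential requests are dropped first, and only essential requests are served near saturation. The cost estimate, the "essential" flag, and all thresholds are assumptions; what counts as essential depends on your critical user journeys.

```python
from dataclasses import dataclass


@dataclass
class IncomingRequest:
    cost: float      # estimated cost, e.g. CPU-milliseconds (assumed known before serving)
    essential: bool  # part of the minimal functionality you always want to serve


def admit(
    request: IncomingRequest,
    utilization: float,
    shed_start: float = 0.8,
    hard_limit: float = 0.95,
    expensive_cost: float = 50.0,
) -> bool:
    """Decide whether to serve a request given current utilization (1.0 = fully loaded).

    - below shed_start: serve everything
    - between shed_start and hard_limit: drop expensive, non-essential requests
    - at or above hard_limit: serve only essential requests
    """
    if utilization < shed_start:
        return True
    if utilization < hard_limit:
        return request.essential or request.cost <= expensive_cost
    return request.essential
```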

Defense-in-depth

Defense-in-depth is another variation of how you build your system to deal with failures, or more correctly, to tolerate failures. If you rely on a system for configuration or other runtime information, ensure that you have a fallback, or a cached version that will continue to work when the dependency becomes unavailable.10
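A minimal sketch of such a fallback wraps the call to a configuration dependency and serves the last known-good value when the dependency is unavailable. The `CachedConfig` class and the `fetch` callable are hypothetical; treating `OSError` (the parent of `ConnectionError` and `TimeoutError`) as "dependency unavailable" is an assumption.

```python
from typing import Callable, Dict, Optional


class CachedConfig:
    """Wrap a config fetcher so a dependency outage falls back to the last good value."""

    def __init__(self, fetch: Callable[[], Dict[str, str]]):
        self._fetch = fetch
        self._last_good: Optional[Dict[str, str]] = None

    def get(self) -> Dict[str, str]:
        try:
            config = self._fetch()
        except OSError:  # ConnectionError and TimeoutError are both OSError subclasses
            if self._last_good is None:
                raise  # nothing cached yet: fail loudly rather than invent a config
            return self._last_good  # possibly stale, but the service keeps running
        self._last_good = config
        return config
```

This cache lives in memory only, so it does not help a process that restarts while the dependency is down; persisting the last good copy locally would cover that case.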

N+2 resources

Having N+2 resources is a minimum principle for achieving reliability in a distributed system. N+2 means you have enough capacity (N) to serve requests at peak, plus two extra instances: one so that an instance (of the complete system) can be unavailable due to an unexpected failure, and another so that an instance can be unavailable due to a planned upgrade. As we mentioned, you can only be as reliable as your critical dependencies, so choose the right building blocks in your architecture. When building in the cloud, verify the reliability levels of the services that you use and compare them with your application’s targets. Be mindful of their scope (e.g., Google Cloud Platform’s building blocks can be zonal, regional, or global). Remember, when it comes to design and architecture, addressing reliability issues during design reduces the cost later.11 There is no one-size-fits-all solution; you should let your requirements guide you.
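The N+2 arithmetic is simple but easy to get wrong by forgetting to round up. The hypothetical helper below assumes you know the peak load and the load one instance can safely serve; the same calculation applies whether an "instance" is a single server or a whole cluster or region.

```python
import math


def instances_needed(peak_qps: float, qps_per_instance: float) -> int:
    """N + 2: N instances to serve peak load, plus one for an unexpected failure
    and one for a planned upgrade."""
    if qps_per_instance <= 0:
        raise ValueError("qps_per_instance must be positive")
    n = math.ceil(peak_qps / qps_per_instance)
    return n + 2
```

For a 4,500 QPS peak with instances that each handle 1,000 QPS, N is 5, so you would provision 7.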

Learn from failures

Finally, you can learn from failures in order to make tomorrow better (more on that in “Psychological Safety”). As we mentioned before, the tool for this is postmortems. Ensure that you have a consistent postmortem process that produces action items for bug fixes, mitigations, and documentation updates. Track postmortem action items the same as you would any other bug (if you are not doing so already), and prioritize postmortem work over “regular” work.12 We discuss postmortems in more detail in the next section.

1 Recommended reading: “Generic mitigations” by Jennifer Mace.

2 See Chapter 4, “Service Level Objectives”, in Site Reliability Engineering (O’Reilly).

3 See Adrian Hilton’s post “SRE Fundamentals 2021: SLIs vs SLAs vs SLOs”, May 7, 2021.

4 See Chapter 2, “Implementing SLOs”, in The Site Reliability Workbook (O’Reilly).

5 See Jesus Climent’s post “Shrinking the Time to Mitigate Production Incidents—CRE Life Lessons”, December 5, 2019.

6 See Chapter 9, “Incident Response”, in The Site Reliability Workbook.

7 See Chapter 14, “Managing Incidents”, in Site Reliability Engineering.

8 See Eric Harvieux’s post “Identifying and Tracking Toil Using SRE Principles”, January 31, 2020.

9 For more on load shedding and graceful degradation, see Chapter 22, “Addressing Cascading Failures”, in Site Reliability Engineering.

10 See the Google Blog post by Ines Envid and Emil Kiner: “Google Cloud Networking in Depth: Three Defense-in-Depth Principles for Securing Your Environment”, June 20, 2019.

11 See Chapter 12, “Introducing Non-Abstract Large System Design”, in The Site Reliability Workbook.

12 See the article for Google Research by Betsy Beyer, John Lunney, and Sue Lueder: “Postmortem Action Items: Plan the Work and Work the Plan”.
