Chapter 4. HBase Sizing and Tuning Overview

The two most important aspects of building an HBase application are sizing and schema design. This chapter will focus on the sizing considerations to take into account when building an application. We will discuss schema design in Part II.

In addition to negatively impacting performance, sizing an HBase cluster incorrectly will reduce stability. Many clusters that are undersized tend to suffer from client timeouts, RegionServer failures, and longer recovery times. Meanwhile, a properly sized and tuned HBase cluster will perform better and meet SLAs on a consistent level because the internals will have less fluctuation, which in turn means fewer compactions (major and minor), fewer region splits, and less block cache churn.

Sizing an HBase cluster is a fine art that requires an understanding of the application needs prior to deploying. You will want to make sure to understand both the read and the write access patterns before attempting to size the cluster. Because it involves taking numerous aspects into consideration, proper HBase sizing can be challenging. Before beginning cluster sizing, it's important to analyze the requirements for the project. These requirements should be broken down into three categories:

- Workload: This requires understanding general concurrency, usage patterns, and ingress/egress workloads.

- Service-level agreements (SLAs): You should have an SLA that guarantees fully quantified read and write latencies, and you should understand the tolerance for variance.

- Capacity: You need to consider how much data is ingested daily, the total data retention time, and total data size over the lifetime of the project.

After fully understanding these project requirements, we can move on to cluster sizing. Because HBase relies on HDFS, it is important to take a bottom-up approach when designing an HBase cluster. This means starting with the hardware and then the network before moving on to operating system, HDFS, and finally HBase.

Hardware

The hardware requirements for an HBase-only deployment are cost friendly compared to a large, multitenant deployment. HBase currently can use about 16–24 GB of memory for the heap, though that will change with Java 7 and the G1GC algorithm. Initial testing with the G1GC collector has shown very promising results with heaps over 100 GB in size. This becomes especially important when attempting to vertically scale HBase, as we will see later in our discussion of the sizing formulas.

With heaps larger than 24 GB, garbage collection pauses become so long (30 seconds or more) that RegionServers start to time out with ZooKeeper, causing failures. Currently, stocking the DataNodes with 64–128 GB of memory will be sufficient to cover the RegionServers, DataNodes, and operating system, while leaving enough space to allow the block cache to assist with reads. In a read-heavy environment looking to leverage the bucket cache, 256 GB or more of RAM could add significant value.

From a CPU standpoint, an HBase cluster does not need a high core count, so mid-range speed and a lower-end core count are quite sufficient. As of early 2016, standard commodity machines are shipping with dual octacore processors on the low end, and dual dodecacore processors with a clock speed of about 2.5 GHz. If you are creating a multitenant cluster (particularly if you plan to utilize MapReduce), it is beneficial to use a higher core count (the exact number will be dictated by your use case and the components used). Multitenant use cases can be very difficult, especially when HBase is bound by tight SLAs, so it is important to test with both MapReduce jobs and an HBase workload. In order to maintain SLAs, it is sometimes necessary to have a batch cluster and a real-time cluster. As the technology matures, this will become less and less necessary.

One of the biggest benefits of HBase/Hadoop is that a homogeneous environment is not required (though it is recommended for your sanity). This allows for flexibility when purchasing hardware, and allows for different hardware specifications to be added to the cluster on an ongoing basis whenever they are required.

Storage

As with Hadoop, HBase takes advantage of a JBOD disk configuration. The benefits offered by JBOD are twofold: first, it allows for better performance by leveraging short-circuit reads (SCR) and block locality; and second, it helps to control hardware costs by eliminating expensive RAID controllers. Disk count is not currently a major factor for an HBase-only cluster (where no MapReduce, Impala, Solr, or other applications are running). HBase functions quite well with 8–12 disks per node.

The HBase write path is limited due to HBase's choice to favor consistency over availability. HBase has implemented a write-ahead log (WAL) that must acknowledge each write as it comes in. The WAL records every write to disk as it comes in, which creates slower ingest through the API, REST, and Thrift interfaces, and will cause a write bottleneck on a single drive. Planning for the future drive count will become more important with the work being done in HBASE-10278, which is an upstream JIRA to add multi-WAL support; it will remove the WAL write bottleneck and share the incoming write workload over all of the disks per node.

HBase shares a similar mentality with Hadoop where SSDs are currently overkill and not necessary from a deployment standpoint. When looking to future-proof a long-term HBase cluster, a purchase of 25%–50% of the storage could be beneficial. This is accounting for multi-WAL and archival storage.

Archival Storage

For more information about archival storage, check out The Apache Software Foundation's guide to archival storage, SSD, and memory.

We would only recommend adding the SSDs when dealing with tight SLAs, as they are still quite expensive per GB.

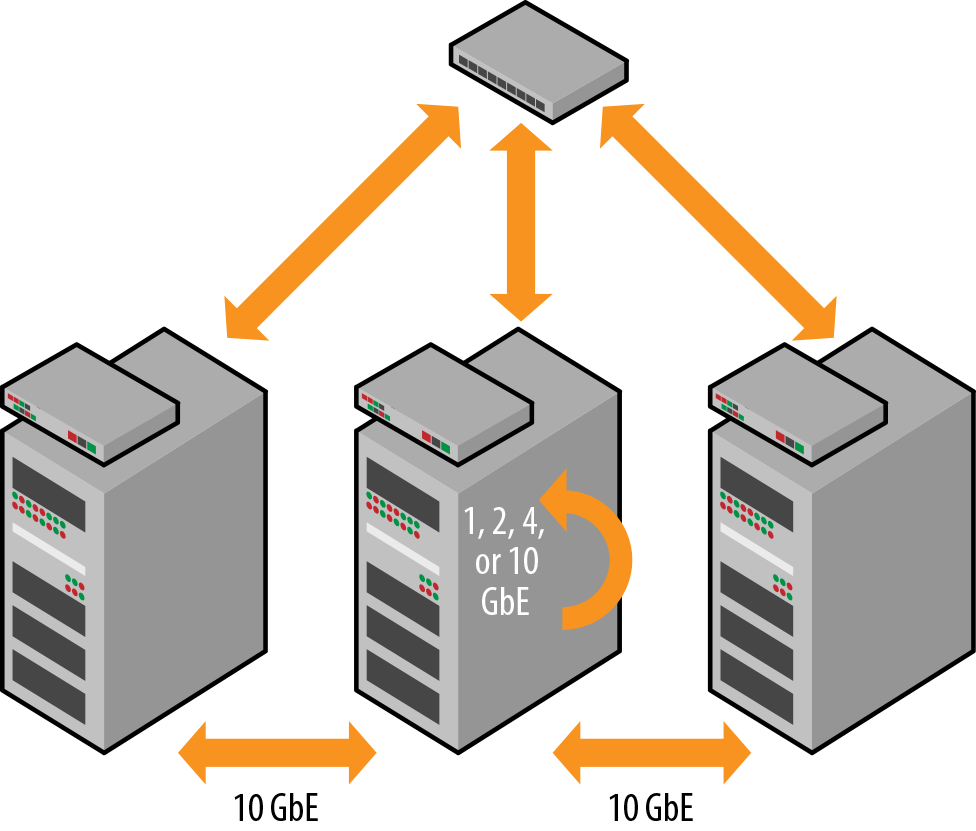

Networking

Networking is an important consideration when designing an HBase cluster. HBase clusters typically employ standard Gigabit Ethernet (1 GbE) or 10 Gigabit Ethernet (10 GbE) rather than more expensive alternatives such as fiber or InfiniBand. The minimum recommendation is bonded 1 GbE with either two or four ports. Both hardware and software continue to scale up, so 10 GbE is ideal for future scaling (it's always easier to plan for the future now).

10 GbE really shines during hardware failures and major compactions. During hardware failures, the underlying HDFS has to re-replicate all of the data stored on that node. Meanwhile, during major compactions, HFiles are rewritten to be local to the RegionServer that is hosting the data. This can lead to remote reads on clusters that have experienced node failures or many regions being rebalanced. Those two scenarios will oversaturate the network, which can cause performance impacts to tight SLAs.

Once the cluster moves to multiple racks, top-of-rack (TOR) switches will need to be selected. TOR switches connect the nodes and bridge multiple racks. For a successful deployment, the TOR switches should be no slower than 10 GbE for inter-rack connections (Figure 4-1). Because it is important to eliminate single points of failure, redundancy between racks is highly recommended. Although HBase and Hadoop can survive a lost rack, it doesn't make for a fun experience. If the cluster gets so large that HBase begins to cross multiple aisles in the datacenter, core/aggregation switches may need to be introduced. These switches should not run any slower than 40 GbE, and again, redundancy is recommended.

The cluster should be isolated on its own network and network equipment. Hadoop and HBase can quickly saturate a network, so separating the cluster on its own network can help ensure HBase does not impact any other systems in the datacenter. For ease of administration and security, VLANs may also be implemented on the network for the cluster.

Figure 4-1. Networking example

OS Tuning

There is not a lot of special consideration for the operating system with Hadoop/HBase. The standard operating system for a Hadoop/HBase cluster is Linux based. For any real deployment, an enterprise-grade distribution should be used (e.g., RHEL, CentOS, Debian, Ubuntu, or SUSE). Hadoop writes its blocks directly to the operating system's filesystem. With any of the newer operating system versions, it is recommended to use EXT4 for the local filesystem. XFS is an acceptable filesystem, but it has not been as widely deployed for production environments.

The next set of considerations for the operating system is swapping and swap space. It is important for HBase that swapping is not used by the kernel or the processes. Kernel and process swapping on an HBase node can cause serious performance issues and lead to failures. It is recommended to disable swap space by setting the partition size to 0; process swapping should be disabled by setting vm.swappiness to 0 or 1.
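One way to confirm these swap recommendations on a running node is a small check like the sketch below. It assumes a Linux host that exposes /proc/sys/vm/swappiness and /proc/meminfo; the function names are ours and purely illustrative.

```python
from pathlib import Path


def swap_settings_ok(swappiness, swap_total_kb):
    """Return True when the node matches the recommendation above:
    vm.swappiness of 0 or 1 and no swap space configured."""
    return swappiness in (0, 1) and swap_total_kb == 0


def read_swap_settings(proc_root="/proc"):
    """Read vm.swappiness and SwapTotal (in kB) from a Linux /proc tree."""
    swappiness = int(Path(proc_root, "sys/vm/swappiness").read_text().strip())
    swap_total_kb = 0
    for line in Path(proc_root, "meminfo").read_text().splitlines():
        # The line looks like "SwapTotal:       2097148 kB".
        if line.startswith("SwapTotal:"):
            swap_total_kb = int(line.split()[1])
            break
    return swappiness, swap_total_kb
```

On a cluster node, `swap_settings_ok(*read_swap_settings())` would report whether both settings follow the guidance; the `proc_root` parameter exists only so the reader can point the check at a copied /proc tree when testing.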

Hadoop Tuning

Apache HBase 0.98+ only supports Hadoop2 with YARN. It is important to understand the impact that YARN (MR2) can have on an HBase cluster. Numerous cases have been handled by Cloudera support where MapReduce workloads were causing HBase to be starved of resources. This leads to long pauses while waiting for resources to free up.

Hadoop2 with YARN adds an extra layer of complexity, as resource management has been moved into the YARN framework. YARN also allows for more granular tuning of resources between managed services. There is some work upstream to integrate HBase within the YARN framework (HBase on YARN, or HOYA), but it is currently incomplete. This means YARN has to be tuned to allow for HBase to run smoothly without being resource starved. YARN allows for specific tuning around the number of CPUs utilized and memory consumption. The three main properties to take into consideration are:

yarn.nodemanager.resource.cpu-vcores

Number of CPU cores that can be allocated for containers.

yarn.nodemanager.resource.memory-mb

Amount of physical memory, in megabytes, that can be allocated for containers. When using HBase, it is important not to overallocate memory for the node. It is recommended to allocate 8–16 GB for the operating system, 2–4 GB for the DataNode, 12–24 GB for HBase, and the rest for the YARN framework (assuming there are no other workloads such as Impala or Solr).

yarn.scheduler.minimum-allocation-mb

The minimum allocation for every container request at the RM, in megabytes. Memory requests lower than this won't take effect, and the specified value will get allocated at minimum. The recommended value is between 1 and 2 GB.
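The memory split described for yarn.nodemanager.resource.memory-mb can be sketched as a simple subtraction. The 128 GB node and the function name below are hypothetical; the reservations use the upper end of each recommended range.

```python
def yarn_container_memory_mb(total_gb, os_gb, datanode_gb, hbase_gb, other_gb=0):
    """Memory left for YARN containers, in MB, after reserving room for the
    operating system, the DataNode, HBase, and any other services."""
    remaining_gb = total_gb - os_gb - datanode_gb - hbase_gb - other_gb
    if remaining_gb <= 0:
        raise ValueError("reservations exceed the node's physical memory")
    return remaining_gb * 1024


# Hypothetical 128 GB node: 16 GB for the OS, 4 GB DataNode, 24 GB HBase.
print(yarn_container_memory_mb(128, os_gb=16, datanode_gb=4, hbase_gb=24))  # 86016
```

The result is a candidate value for yarn.nodemanager.resource.memory-mb; it is a starting point to validate under load, not a definitive setting.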

When performing the calculations, it is important to remember that the vast majority of servers use an Intel chipset with hyper-threading enabled. Hyper-threading allows the operating system to present two virtual or logical cores for every physical core present. Here is some back-of-the-napkin tuning for using HBase with YARN (your mileage will vary depending on workloads):

- physicalCores * 1.5 = total v-cores

We also must remember to leave room for HBase:

- Total v-cores − 1 for HBase − 1 for DataNode − 1 for NodeManager − 1 for OS ... − 1 for any other services such as Impala or Solr

For an HBase and YARN cluster with a dual 10-core processor, the tuning would look as follows:

- 20 * 1.5 = 30 virtual CPUs

- 30 − 1 − 1 − 1 − 1 = 26 total v-cores

Some sources recommend going one step further and dividing the 26 v-cores in half when using HBase with YARN. You should test your application at production levels to determine the best tuning.
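The v-core arithmetic above can be captured in a few lines. This is a sketch of the back-of-the-napkin rule only; the function name and the `halve` flag (for the more conservative variant some sources suggest) are our own.

```python
def yarn_vcores(physical_cores, reserved_services=4, multiplier=1.5, halve=False):
    """Estimate v-cores to hand to YARN containers.

    reserved_services counts one core each for HBase, the DataNode,
    the NodeManager, and the OS; raise it for Impala, Solr, and so on.
    """
    total_vcores = int(physical_cores * multiplier)
    available = total_vcores - reserved_services
    if halve:
        available //= 2
    return available


print(yarn_vcores(20))              # dual 10-core node -> 26
print(yarn_vcores(20, halve=True))  # conservative variant -> 13
```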

HBase Tuning

As stated at the beginning of the chapter, HBase sizing requires a deep understanding of three project requirements: workload, SLAs, and capacity. Workload and SLAs can be organized into three main categories: read, write, or mixed. Each of these categories has its own set of tunable parameters, SLA requirements, and sizing concerns from a hardware-purchase standpoint.

The first one to examine is the write-heavy workload. There are two main ways to get data into HBase: either through an API (Java, Thrift, or REST) or by using bulk load. This is an important distinction to make, as the API will use the WAL and memstore, while bulk load is a short-circuit write and bypasses both. As mentioned in "Hardware", the primary bottleneck for HBase is the WAL followed by the memstore. There are a few quick formulas for determining optimal write performance in an API-driven write model.

To determine region count per node:

- HBaseHeap * memstoreUpperLimit = availableMemstoreHeap

- availableMemstoreHeap / memstoreSize = recommendedActiveRegionCount

(This is actually column family based. The formula is based on one CF.)

To determine raw space per node:

- recommendedRegionCount * maxfileSize * replicationFactor = rawSpaceUsed

To determine the number of WALs to keep:

- availableMemstoreHeap / (WALSize * WALMultiplier) = numberOfWALs

Here is an example of this sizing using real-world numbers:

- HBase heap = 16 GB

- Memstore upper limit = 0.5

- Memstore size = 128 MB

- Maximum file size = 20 GB

- WAL size = 128 MB

- WAL rolling multiplier = 0.95

First, we'll determine region count per node:

- 16,384 MB * 0.5 = 8,192 MB

- 8,192 MB / 128 MB = 64 activeRegions

Next, we'll determine raw space per node:

- 64 activeRegions * 20 GB * 3 = 3.75 TB used

Finally, we need to determine the number of WALs to keep:

- 8,192 MB / (128 MB * 0.95) = 67 WALs

When using these formulas, it is recommended to have no more than 64 active regions per RegionServer while keeping a total of 67 WALs. This will use 3.75 TB (with replication) of storage per node for the HFiles, which does not include space used for snapshots or compactions.
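The three write-path formulas can be combined into one small calculator. The sketch below reproduces the worked example (64 active regions, 3.75 TB of raw space, 67 WALs); the function name is ours, and rounding down to whole regions and WALs is an assumption on our part.

```python
import math


def write_sizing(heap_mb, memstore_upper_limit, memstore_size_mb,
                 max_file_size_gb, replication_factor,
                 wal_size_mb, wal_multiplier):
    """Apply the sizing formulas for an API-driven write workload.

    Returns (active regions, raw space in GB, number of WALs),
    assuming a single column family per region.
    """
    available_memstore_mb = heap_mb * memstore_upper_limit
    active_regions = math.floor(available_memstore_mb / memstore_size_mb)
    raw_space_gb = active_regions * max_file_size_gb * replication_factor
    wal_count = math.floor(available_memstore_mb / (wal_size_mb * wal_multiplier))
    return active_regions, raw_space_gb, wal_count


regions, raw_gb, wals = write_sizing(
    heap_mb=16 * 1024, memstore_upper_limit=0.5, memstore_size_mb=128,
    max_file_size_gb=20, replication_factor=3,
    wal_size_mb=128, wal_multiplier=0.95)
print(regions, raw_gb / 1024, wals)  # 64 3.75 67
```

Because the region formula is per column family, a table with several actively written families would need the memstore size multiplied accordingly before reusing this sketch.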

Bulk loads are a short-circuit write and do not run through the WAL, nor do they use the memstore. During the reduce phase of the MapReduce jobs, the HFiles are created and then loaded using the completebulkload tool. Most of the region count limitations come from the WAL and memstore. When using bulk load, the region count is instead limited by index size and response time. It is recommended to test read response time scaling up from 100 regions; it is not unheard of for successful deployments to have 150–200 regions per RegionServer.

When using bulk load, it is important to reduce the upper limit (hbase.regionserver.global.memstore.upperLimit) and lower limit (hbase.regionserver.global.memstore.lowerLimit) to 0.11 and 0.10, respectively, and then raise the block cache to 0.6–0.7 depending on available heap. Memstore and block cache tuning will allow HBase to keep more data in memory for reads.

Tip

It is important to note these values are percentages of the heap devoted to the memstore and the block cache.
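To make the heap fractions concrete, the sketch below converts them into megabytes for a given heap. The 16 GB heap is carried over from the earlier example and the block cache value of 0.6 is the low end of the suggested range; the function name is hypothetical.

```python
def heap_split_mb(heap_mb, memstore_fraction, block_cache_fraction):
    """Translate heap fractions into whole megabytes.

    Returns (memstore MB, block cache MB, MB left for everything else).
    """
    memstore_mb = int(heap_mb * memstore_fraction)
    block_cache_mb = int(heap_mb * block_cache_fraction)
    other_mb = heap_mb - memstore_mb - block_cache_mb
    return memstore_mb, block_cache_mb, other_mb


# Bulk-load style tuning: memstore upper limit 0.11, block cache 0.6.
print(heap_split_mb(16 * 1024, 0.11, 0.6))  # (1802, 9830, 4752)
```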

The same raw-space-per-node calculation from before can be used once the desired region count has been determined through proper testing.

Again, the formula to determine raw space per node is as follows:

- recommendedRegionCount * maxfileSize * replicationFactor = rawSpaceUsed

Using this formula, we'd calculate the raw space per node as follows in this instance:

- 150 activeRegions * 20 GB * 3 = 9,000 GB (about 8.8 TB) used

Capacity is the easiest portion to calculate. Once the workload has been identified and properly tested (noticing a theme with testing yet?), it is a simple matter of dividing the total amount of data by capacity per node. It is important to leave scratch space available (the typical recommendation is 20%–30% overhead). This space will be used for MapReduce scratch space, extra space for snapshots, and major compactions. Also, if using snapshots with high region counts, testing should be done to ensure there will be enough space for the desired backup pattern, as snapshots can quickly take up space.
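A rough node-count estimate along these lines might look like the following sketch. The ingest rate, retention period, and disk size are made-up inputs, and treating the scratch overhead as a fraction of each node's disk is our assumption; compression and region-count limits are ignored.

```python
import math


def nodes_needed(daily_ingest_gb, retention_days, replication_factor,
                 disk_per_node_gb, scratch_fraction=0.25):
    """Divide the total replicated data by the usable capacity per node.

    scratch_fraction is the share of each node's disk held back for
    MapReduce scratch space, snapshots, and major compactions.
    """
    total_raw_gb = daily_ingest_gb * retention_days * replication_factor
    usable_per_node_gb = disk_per_node_gb * (1 - scratch_fraction)
    return math.ceil(total_raw_gb / usable_per_node_gb)


# Hypothetical project: 100 GB/day kept for one year, 12 TB of disk per node.
print(nodes_needed(100, 365, 3, 12 * 1024))  # 12
```

The answer should still be checked against the region count per node found during testing, since the workload rather than raw disk may turn out to be the limiting factor.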

Different Workload Tuning

For primarily read workloads, it is important to understand and test the SLA requirements. Rigorous testing is required to determine the best settings. The primary settings that need to be tweaked are the same ones discussed for write workloads (i.e., lowering memstore settings and raising the block cache to allow for more data to be stored in memory). In HBase 0.96, bucket cache was introduced. This allows for data to be stored either in memory or on low-latency disk (SSD/flash cards). You should use 0.98.6 or higher for this feature due to HBASE-11678.

In a mixed workload environment, HBase bucket cache becomes much more interesting, as it allows HBase to be tuned to allow more writes while maintaining fast read response times. When running in a mixed environment, it is important to overprovision your cluster, as doing so will allow for the loss of a RegionServer or two. It is recommended to allow one extra RegionServer per rack when sizing the cluster. This will also give the cluster better response times with more memory for reads and extra WALs for writes. Again, this is about finding the perfect balance to fit the workload, which means tuning the number of WALs, memstore limits, and total allocated block cache.

HBase can run into multiple issues when leveraging other Hadoop components. We will cover deploying HBase with Impala, Solr, and Spark throughout Part II. Unfortunately, there really isn't a great answer for running HBase with numerous components. The general concerns that arise include the following:

- CPU contention

- Memory contention

- I/O contention

The first two are reasonably easy to address with proper YARN tuning (this means running Spark in YARN mode) and overprovisioning of resources. What we mean here is that if you know your application needs W GB for YARN containers, Impala will use X GB of memory, the HBase heap needs Y GB to meet SLAs, and you want to save Z GB of RAM for the OS, then your formula should be:

- W + X + Y + Z = totalMemoryNeeded

This formula makes sense at first glance, but unfortunately it rarely works out to be that straightforward. We would recommend upgrading to have at least 25%–30% free memory. The benefits of this approach are twofold: first, when it's time to purchase additional nodes, it will give you room to vertically grow until those new nodes are added to the cluster; and second, the OS will take advantage of the additional memory with OS buffers helping general performance across the board.
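The memory formula and the free-memory recommendation can be combined as in the sketch below. The per-service figures are placeholders, and interpreting "25% free" as a fraction of the total installed RAM is our reading rather than a rule stated here.

```python
def provisioned_memory_gb(yarn_gb, impala_gb, hbase_heap_gb, os_gb,
                          free_fraction=0.25):
    """W + X + Y + Z, scaled up so free_fraction of total RAM stays unused."""
    total_needed_gb = yarn_gb + impala_gb + hbase_heap_gb + os_gb
    return total_needed_gb / (1 - free_fraction)


# Placeholder values: W=32, X=16, Y=16, Z=8 gives 72 GB of planned usage.
print(provisioned_memory_gb(32, 16, 16, 8))  # 96.0
```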

The I/O contention is where the story really starts to fall apart. The only viable answer is to leverage control groups (cgroups) in the Linux kernel. Setting up cgroups can be quite complex, but they provide the ability to assign specific processes such as Impala, or most likely YARN/MapReduce, into specific I/O-throttled groups.

Most people do not bother with configuring cgroups or other throttle technologies. We tend to recommend putting memory limits on Impala, container limits on YARN, and memory and heap limits on Solr. Then properly testing your application at scale will allow for fine-tuning of the components working together.

Our colleague Eric Erickson, who specializes in all things Solr, has trademarked the phrase "it depends" when asked about sizing. He believes that back-of-the-napkin math is a great starting point, but only proper testing can verify the sizing estimates. The good news with HBase and Hadoop is that they provide linear scalability, which allows for testing on a smaller level and then scaling out from there. The key to a successful HBase deployment is no secret: you need to utilize commodity hardware, keep the networking simple using Ethernet versus more expensive technologies such as fiber or InfiniBand, and maintain a detailed understanding of all aspects of the workload.
