Chapter 1. The Internet, Routing, and BGP

One of the many remarkable qualities of the Internet is that it has scaled so well to its current size. This doesn’t mean that nothing has changed since the early days of the ARPANET in 1969. The opposite is true: our current TCP and IP protocols weren’t constructed until the late 1970s. Since that time, TCP/IP has become the predominant networking protocol for just about every kind of digital communication.

The story goes that the Internet—or rather the ARPANET, which is regarded as the origin of today’s Internet—was invented by the military as a network that could withstand a nuclear attack. That isn’t how it actually happened. In the early 1960s, Paul Baran, a researcher for the RAND Corporation, wrote a number of memoranda proposing a digital communications network for military use that could still function after sustaining heavy damage from an enemy attack.[2] Using simulations, Baran proved that a network with only three or four times as many connections as the minimum required to operate comes close to the theoretical maximum possible robustness. This of course implies that the network adapts when connections fail, something the telephone network and the simple digital connections of that time couldn’t do, because every connection was manually configured. Baran incorporated numerous revolutionary concepts into his proposed network: packet switching, adaptive routing, the use of digital circuits to carry voice communication, and encryption inside the network. Many people believed such a network couldn’t work, and it was never built.

Several years later, the Department of Defense’s Advanced Research Projects Agency (ARPA) grew dissatisfied with the fact that many universities and other research institutions that worked on ARPA projects were unable to easily exchange results on computer-related work. Because computers from the many different vendors used different operating systems and languages, and because they were usually customized to some extent by their users, it was extremely hard to make a program developed on one computer run on another machine. ARPA wanted a network that would enable researchers to access computers located at different research institutions throughout the United States.

Access to a remote computer wasn’t a novelty in the late 1960s: connecting remote terminals over a phone line or dedicated circuit was complex but nonetheless a matter of routine. In these situations, however, the mainframe or minicomputer always controlled the communication: a user typed a command, the characters were sent to the central computer, the computer sent back the results after some time, and the terminal displayed them on the screen or on paper. Connecting two computers together was still a rather revolutionary concept, and the research institutions didn’t like the idea of connecting their computers to a network one bit. Only after it was decided that dedicated minicomputers would be used to perform all network-related tasks were people persuaded to connect their systems to the network. The use of minicomputers as Interface Message Processors (IMPs) made building the network a lot easier: rather than having to deal with a large number of very different systems on the network, each computer had to talk only to the local IMP, and the IMPs only to a single local computer and, over the network, to other IMPs. Today’s routers function in a similar way to the ARPANET IMPs.

During the 1970s, the ARPANET continued to evolve. The original Network Control Protocol (NCP) was replaced by two different protocols: the Internet Protocol (IP), which connects (internetworks) different networks, and the Transmission Control Protocol (TCP), which applications use to communicate without having to deal with the intricacies of IP. IP and TCP are often mentioned together as TCP/IP to encompass the entire family of related protocols used on the Internet.

Topology of the Internet

Because it’s a “network of networks,” there was always a need to interconnect the different networks that together form the global Internet. In the beginning, everyone simply connected to the ARPANET, but over the years, the topology of the Internet has changed radically.

The NSFNET Backbone

During the late 1980s, the ARPANET was replaced as the major “backbone” of the Internet by a new National Science Foundation-sponsored network between five supercomputer locations: the NSFNET Backbone. Federal Internet Exchanges on the East and West Coasts (FIX East and FIX West) were built in 1989 to aid in the transition from the ARPANET to the NSFNET Backbone. Originally, the FIXes were 10-Mbps Ethernets, but 100-Mbps FDDI was added later to increase bandwidth. The Commercial Internet Exchange (CIX, “kicks”) on the West Coast came into existence because the people in charge of the FIXes were hesitant to connect commercial networks. CIX operated a CIX router and several FDDI rings for some time, but it abandoned those activities and turned into a trade association in the late 1990s. In 1992, Metropolitan Fiber Systems (MFS, now Worldcom) built a Metropolitan Area Ethernet (MAE) in the Washington, DC, area, which quickly became a place where many different (commercial) networks interconnected. Interconnecting at an Internet Exchange (IX) or MAE is attractive, because many networks connect to the IX or MAE infrastructure, so all that’s needed is a single physical connection to interconnect with many other networks.

Commercial Backbones and NAPs

Before the early 1990s, the Internet was almost exclusively used as a research network. Some businesses were connected, but this was limited to their research divisions. All this changed when email became more pervasive outside the research community, and the World Wide Web made the network much more visible. More and more business and nonresearch organizations connected to the network, and the additional traffic became a burden for the NSFNET Backbone. Also, the NSFNET Backbone Acceptable Use Policy didn’t allow “for-profit activities.” In 1995, the NSFNET Backbone was decommissioned, giving room to large ISPs to compete with each other by operating their own backbone networks. To ensure connectivity between the different networks, four contracts for Network Access Points (NAPs) were awarded by the NSF, each run by a different telecommunication company:

The Pacific Bell NAP in San Francisco, California

The Ameritech NAP in Chicago, Illinois

The Sprint NAP in Pennsauken, New Jersey (in the Philadelphia metropolitan area, but often referred to as “the New York NAP”)

The already existing MAE East,[3] run by MCI Worldcom, in Vienna, Virginia

The NAPs were created as large-scale exchange points where commercial networks could interconnect without being limited by the NSFNET Acceptable Use Policy. The NAPs were also used to interconnect with a new national research network for high-bandwidth applications, the “very high performance Backbone Network Service” (vBNS).

The Ameritech (Chicago) NAP was built on ATM technology from the start; the Sprint (New Jersey) and PacBell (San Francisco) NAPs used FDDI at first and migrated to ATM later. MAE East also adopted FDDI in addition to Ethernet at this point, and the (Worldcom-trademarked) acronym was quickly changed to mean “Metropolitan Area Exchange.” After decommissioning the last FDDI location in 2001, MAE East is now ATM-only as well. Note that it’s possible to interconnect Ethernet and FDDI at the datalink level (bridge), so if an IX uses both, a connection to either suffices. However, it isn’t possible to bridge easily from Ethernet or FDDI to ATM and vice versa. Over the past several years, the importance of the NAPs has diminished as the main interconnect locations for Internet traffic. Large networks are showing a tendency to interconnect privately, and smaller networks are looking more and more at regional public interconnect locations. There are now numerous small Internet Exchanges in the United States, and in addition to Worldcom, two other companies now operate Internet Exchanges as a commercial service: Equinix and PAIX. Figure 1-1 shows the distribution of NAPs, MAEs, Equinix Internet Business Exchanges, and PAIX exchanges.

The Rest of the World

The traffic volumes for the Internet Exchanges in Europe and the Asia/Pacific region were much lower at the time the NAPs were being created, so these exchanges were not forced to adopt expensive (FDDI) or then still immature (ATM) technologies as the American NAPs were. Because Ethernet is cheap, easier to configure than ATM, and conveniently available in several speeds, most of the non-NAP and non-MAE Internet Exchanges use Ethernet. There are also a few that use frame relay, SMDS, or SRP, usually when the Internet Exchange isn’t limited to a single location or a small number of locations but allows connections to any ISP office or point of presence (POP) within a metropolitan area.

In Europe, most countries have an Internet Exchange. From an international perspective, the main ones are the London Internet Exchange (LINX), the Amsterdam Internet Exchange (AMS-IX), and the Deutsche Commercial Internet Exchange (DE-CIX) in Frankfurt. Internet Exchanges in the rest of the world haven’t yet reached the scale of those in the United States and Europe and are used mainly to exchange national traffic.

Transit and Peering

When a customer connects to an Internet service provider (ISP), the customer pays. This seems natural. Because the customer pays, the ISP has to carry packets to and from all possible destinations worldwide for this customer. This is called transit service. Smaller ISPs buy transit from larger ISPs, just as end-user organizations do. But ISPs of roughly similar size also interconnect in a different way: they exchange traffic as equals. This is called peering, and typically, there is no money exchanged. Unlike transit, peering traffic always has one network (or one of its customers) as the source and the other network (or one of its customers) as its destination. Chapter 12 offers more details on interconnecting with other networks and peering.

Classification of ISPs

Not all ISPs are created equal: they range from huge, with worldwide networks, to tiny, with only a single Ethernet as their “backbone.” Generally, ISPs are categorized in three groups:

- Tier-1

Tier-1 ISPs are so large they don’t pay anyone else for transit. They don’t have to, because they peer with all other tier-1 networks. All other networks pay at least one tier-1 ISP for transit, so peering with all tier-1 ISPs ensures connectivity to the entire Internet.

- Tier-2

Tier-2 ISPs have a sizable network of their own, but they aren’t large enough to convince all tier-1 networks to peer with them, so they get transit service from at least one tier-1 ISP.

- Tier-3

Tier-3 ISPs don’t have a network to speak of, so they purchase transit service from one or more tier-1 or tier-2 ISPs that operate in the area. If they peer with other networks, it’s usually at just a single exchange point. Many don’t even multihome.

The line between tier-1 networks and the largest tier-2 is somewhat blurred, with some tier-2 networks doing “paid peering” with tier-1 networks and calling themselves tier-1. The real difference is that tier-2 networks generally have a geographically limited presence. For instance, even some very large European networks with trans-Atlantic connections of their own pay a U.S. network for transit, rather than interconnecting with a large number of other networks at NAPs throughout the United States. Because tier-1 networks see these regional ISPs as potential customers, they are less likely to peer with them. This goes double for tier-3 networks. Tier-2 networks, on the other hand, may not peer with many tier-1 networks, but they often peer with all other tier-2 networks operating in the same region and with many tier-3 networks.

TCP/IP Design Philosophy

The fact that TCP/IP runs well over all kinds of underlying networks is no coincidence. Today, every imaginable kind of computer is connected to the Net, even though those connected over the fastest links, such as Gigabit Ethernet, can transfer more data in a second than the slowest, connected through wireless modems, can transfer in a day. This flexibility is the result of the philosophy that network failures shouldn’t impede communication between two hosts and that no assumptions should be made about the underlying communications channels. Any kind of circuit that can carry packets from one place to another with some reasonable degree of reliability may be used.[4]

This philosophy makes it necessary to move all the decision-making to the source and destination hosts: it would be very hard to survive the loss of a router somewhere along the way if this router holds important, necessary information about the connection. This way of doing things is very different from the way telephony and virtual circuit-oriented networks such as X.25 work: they go through a setup phase, in which a path is configured at central offices or telephone switches along the way before any communication takes place. The problem with this approach is that when a switch fails, all paths that use this switch fail, disrupting ongoing communication. In a network built on an unreliable datagram service, such as the Internet, packets can simply be diverted around the failure and still be delivered. The price to be paid for this flexibility is that end hosts have to do more work. Packets that were on their way over the broken circuit may be lost; some packets may be diverted in the wrong direction at first, so that they arrive after subsequent packets have already been received; or the new route may be of a different speed or capacity. The networking software in the end hosts must be able to handle any and all of these eventualities.

The IP Protocol

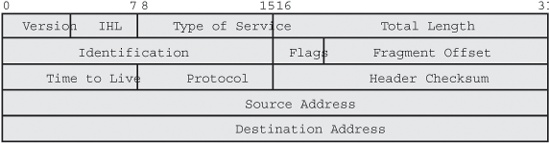

Because the TCP protocol takes care of the most complex tasks, IP processing along the way becomes extremely simple: basically, just take the destination address, look it up in the routing table to find the next-hop address and/or interface, and send the packet on its way to this next hop over the appropriate interface. This isn’t immediately obvious by looking at the IP header (Figure 1-2), because there are 12 fields in it, which seems like a lot at first glance. The function of each field, except perhaps the Type of Service and fragmentation-related fields, is simple enough, however.

The first 32 bits of the header are mainly for housekeeping: the Version field indicates the IP version (4); the Internet Header Length (IHL) field indicates the length of the header (usually five 32-bit words); and the Total Length field is the length of the entire IP packet, including the header, in bytes. The Type of Service field can be used by applications to indicate that they desire a nonstandard service level or quality of service (QoS). In most networks, the contents of this field are ignored.

The next 32 bits are used when the IP packet needs to be fragmented. This happens when the maximum packet size on a network link is too small to transmit the packet whole. The router breaks up the packet into smaller packets, and the receiving host can later reassemble the original packet using the information in the Identifier, Flags, and Fragment Offset fields.
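To make the role of the Fragment Offset field and the “more fragments” flag concrete, here is a minimal Python sketch that splits a payload the way a router might and reassembles it at the receiving host. The function names, the tuple layout, and the fixed 20-byte header are assumptions made for illustration; this is not code from a real IP stack.

```python
HEADER_LEN = 20  # bytes; assumes an IP header without options


def fragment(payload: bytes, mtu: int):
    """Split payload into (offset_units, more_fragments, data) tuples.

    Offsets are counted in 8-byte units, as in the Fragment Offset field,
    so every fragment except the last carries a multiple of 8 data bytes.
    """
    max_data = (mtu - HEADER_LEN) // 8 * 8
    if max_data <= 0:
        raise ValueError("MTU too small to carry any data")
    fragments = []
    for start in range(0, len(payload), max_data):
        chunk = payload[start:start + max_data]
        more = start + max_data < len(payload)   # the "more fragments" flag
        fragments.append((start // 8, more, chunk))
    return fragments


def reassemble(fragments):
    """Rebuild the payload from fragments that may arrive in any order."""
    ordered = sorted(fragments, key=lambda frag: frag[0])
    return b"".join(data for _, _, data in ordered)


payload = bytes(range(256)) * 10           # 2560 bytes of sample data
frags = fragment(payload, mtu=1500)        # 1500 is a common Ethernet MTU
print([(off, more, len(data)) for off, more, data in frags])
```

The sketch ignores the Identifier field, which a real receiver needs to tell the fragments of different packets apart.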

The middle 32 bits contain the Time to Live (TTL), Protocol, and Header Checksum fields. The TTL is initialized at a sufficiently high value (usually 60) by the source host and then decremented by each router. When the TTL reaches zero, the router throws away the packet. This is done to prevent packets from circling the Net indefinitely when there are routing loops.[5] The Protocol field indicates what’s inside the IP packet: usually TCP or UDP data, or an ICMP control message. The Header Checksum is just that, and it’s used to protect the header from inadvertent changes en route. As with all checksums, the receiver performs the checksum calculation over the received information, and if the computed checksum is different from the received checksum, the packet contains invalid information and is discarded. The final two 32-bit words contain the address of the source system that generated the packet and the destination system to which the packet is addressed.
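The header layout described so far can be exercised with Python’s standard `struct` module. The sketch below builds a 20-byte header, verifies its checksum, and mimics what a router does to the TTL. The helper names (`build_header`, `parse_header`, `forward`) are hypothetical, and the code assumes a header without options (IHL of 5); the default TTL of 60 simply follows the text above.

```python
import socket
import struct

# Version+IHL, ToS, Total Length, Identifier, Flags+Fragment Offset,
# TTL, Protocol, Header Checksum, Source, Destination (20 bytes, no options)
IPV4_FORMAT = "!BBHHHBBH4s4s"


def checksum(header: bytes) -> int:
    """Return the 16-bit one's-complement checksum over the header bytes."""
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:                       # fold carries back into 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def build_header(src, dst, ttl=60, protocol=6, payload_len=0):
    """Build an illustrative header; protocol 6 is TCP."""
    def pack(csum):
        return struct.pack(IPV4_FORMAT, (4 << 4) | 5, 0, 20 + payload_len,
                           0, 0, ttl, protocol, csum,
                           socket.inet_aton(src), socket.inet_aton(dst))
    return pack(checksum(pack(0)))           # checksum is computed with the field zeroed


def parse_header(raw: bytes) -> dict:
    (ver_ihl, _tos, total_len, _ident, _flags_frag,
     ttl, protocol, _csum, src, dst) = struct.unpack(IPV4_FORMAT, raw[:20])
    return {
        "version": ver_ihl >> 4,
        "ihl_words": ver_ihl & 0x0F,
        "total_length": total_len,
        "ttl": ttl,
        "protocol": protocol,
        "source": socket.inet_ntoa(src),
        "destination": socket.inet_ntoa(dst),
        # Summing a valid header, checksum field included, yields zero.
        "checksum_ok": checksum(raw[:20]) == 0,
    }


def forward(raw: bytes):
    """Decrement the TTL as a router would; return None if the packet expires."""
    fields = list(struct.unpack(IPV4_FORMAT, raw[:20]))
    fields[5] -= 1
    if fields[5] <= 0:
        return None
    fields[7] = 0
    fields[7] = checksum(struct.pack(IPV4_FORMAT, *fields))
    return struct.pack(IPV4_FORMAT, *fields)


header = build_header("192.0.2.1", "192.0.5.6")
print(parse_header(header))
print(parse_header(forward(header))["ttl"])
```

Because the TTL changes at every hop, the checksum has to be refreshed at every hop as well, which is why `forward` recomputes it.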

When there are errors during IP processing, the system experiencing the error (this can be a router along the way or the destination host) sends back an Internet Control Message Protocol (ICMP) message to inform the source host of the problem.

The Routing Table

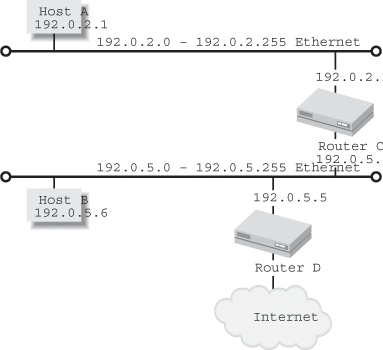

The routing table is just a big list of destination networks, along with information on how to reach those networks. Figure 1-3 shows an example network consisting of two hosts connected to different Ethernets and a router connecting the two Ethernets, with a second router connecting the network to the Internet.[6]

Each router and host has a different routing table, telling it how to reach all possible destinations. The contents of these routing tables are shown in Table 1-1.

| Destination | Host A | Host B | Router C | Router D |
|---|---|---|---|---|
| 192.0.2.0 net | Directly connected | 192.0.5.4 (Router C) | Directly connected | 192.0.5.4 (Router C) |
| 192.0.5.0 net | 192.0.2.3 (Router C) | Directly connected | Directly connected | Directly connected |
| Default route | 192.0.2.3 (Router C) | 192.0.5.5 (Router D) | 192.0.5.5 (Router D) | Over ISP connection |

The actual routing table looks different inside a host or router, of course. Most hosts have a route command, which can be used to list and manipulate entries (routes) in the routing table. This is how the route to host B (192.0.5.6) looks in host A’s routing table, if host A is a FreeBSD system:

    # route get 192.0.5.6
    route to: 192.0.5.6
    destination: 192.0.5.0
    mask: 255.255.255.0
    gateway: 192.0.2.3
    interface: xl0

Because there is no specific route to the IP address 192.0.5.6, the routing table returns a route for a range of addresses starting at 192.0.5.0. The mask indicates how big the range is, and the gateway is the router that is used to reach this destination. The xl0 Ethernet interface is used to transmit the packets. Hosts usually have a limited number of routes in their routing table, so for most (nonlocal) destinations, there is no specific route to an address range that includes the destination IP address. In this case, the routing table returns the default route:

    # route get 207.25.71.5
    route to: 207.25.71.5
    destination: default
    mask: default
    gateway: 192.0.2.3
    interface: xl0

Packets match the default route and are sent to the default gateway (the router the default route points to, in this case 192.0.2.3) when there is no better, specific route available. The default gateway may have a route for this destination, or it may send the packet “upstream” (in the direction of the elusive core of the Internet) to its own default gateway, until the packet arrives at a router that has the desired route in its routing table. From there, the packet is forwarded hop by hop until it reaches its destination.
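The lookup that `route get` performs can be approximated in a few lines of Python with the standard `ipaddress` module. This is only a sketch of the idea (most specific matching route wins, default route as the fallback), not how any particular operating system implements it. The table mirrors host A’s column in Table 1-1; the /24 length for the 192.0.2.0 network is an assumption, chosen to match the 255.255.255.0 mask shown for 192.0.5.0.

```python
import ipaddress

# Host A's routing table: (destination range, gateway).
# A gateway of None stands for "directly connected".
HOST_A_ROUTES = [
    (ipaddress.ip_network("192.0.2.0/24"), None),
    (ipaddress.ip_network("192.0.5.0/24"), "192.0.2.3"),
    (ipaddress.ip_network("0.0.0.0/0"), "192.0.2.3"),   # default route
]


def lookup(routes, destination):
    """Return the (network, gateway) entry with the longest matching prefix."""
    address = ipaddress.ip_address(destination)
    matches = [(net, gw) for net, gw in routes if address in net]
    if not matches:
        return None                      # no route at all, not even a default
    return max(matches, key=lambda entry: entry[0].prefixlen)


for target in ("192.0.5.6", "207.25.71.5", "192.0.2.9"):
    network, gateway = lookup(HOST_A_ROUTES, target)
    print(target, "->", network, "via", gateway or "directly connected")
```

Every address matches `0.0.0.0/0`, so the default route is always a candidate; it loses to any more specific entry because its prefix length is zero.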

Routing Protocols

This leaves just one problem unsolved: how do we maintain an up-to-date routing table? Simply entering the necessary information manually isn’t good enough: the routing table has to reflect the actual way in which everything is connected at any given time, the network topology. This means using dynamic routing protocols so that topology changes, such as cable cuts and failed routers, are communicated promptly throughout the network.

A simple routing protocol is the Routing Information Protocol (RIP). RIP basically broadcasts the contents of the routing table periodically over every connection and listens for other routers to do the same. Routes received through RIP are added to the routing table and, from then on, are broadcast along with the rest of the routing table. Every route contains a “hop count” that indicates the distance to the destination network, so routers have a way to select the best path when they receive multiple routes to the same destination. RIP is considered a distance-vector routing protocol, because it only stores information about where to send packets for a certain destination and how many hops are necessary to get there.

Open Shortest Path First (OSPF)[7] is a much more advanced routing protocol, so much so that it was even questioned whether Dijkstra’s Shortest Path First algorithm, on which the protocol is based, wouldn’t be too complex for routers to run. This turned out not to be a problem as long as some restrictions are taken into account when designing OSPF networks. Instead of broadcasting all routes periodically, OSPF keeps a topology map of the network and sends updates to the other routers throughout the network only when something changes. All routers then recompute their best paths over the updated map using the SPF algorithm. This makes OSPF a link-state protocol. Rather than just counting hops, OSPF takes into account the cost of every link, which usually translates to the link bandwidth, when computing the best path to a destination.
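The difference between counting hops and adding up link costs is easiest to see on a small example. The sketch below runs a RIP-style exchange of hop counts and a Dijkstra shortest-path computation over the same topology. The routers A, B, D, and E, the link costs, and the function names are all invented for illustration; real RIP and OSPF implementations involve timers, packet formats, and failure handling that are left out here.

```python
import heapq

# Hypothetical topology: A has a slow direct link to D and a fast path via B.
LINKS = {("A", "B"): 10, ("B", "D"): 10, ("A", "D"): 100, ("D", "E"): 10}


def adjacency(links):
    adj = {}
    for (a, b), cost in links.items():
        adj.setdefault(a, {})[b] = cost
        adj.setdefault(b, {})[a] = cost
    return adj


def distance_vector(adj):
    """RIP-style: routers keep re-advertising (hops, next hop) tables to neighbors."""
    tables = {router: {router: (0, None)} for router in adj}
    changed = True
    while changed:
        changed = False
        for router in adj:
            for neighbor in adj[router]:
                for dest, (hops, _) in list(tables[neighbor].items()):
                    known = tables[router].get(dest)
                    if known is None or hops + 1 < known[0]:
                        tables[router][dest] = (hops + 1, neighbor)
                        changed = True
    return tables


def shortest_paths(adj, source):
    """Link-state style: Dijkstra over the full map, giving (cost, first hop)."""
    best = {source: (0, None)}
    queue = [(0, source, None)]
    while queue:
        cost, node, first_hop = heapq.heappop(queue)
        if cost > best[node][0]:
            continue                      # stale queue entry
        for neighbor, link_cost in adj[node].items():
            new_cost = cost + link_cost
            if neighbor not in best or new_cost < best[neighbor][0]:
                hop = neighbor if node == source else first_hop
                best[neighbor] = (new_cost, hop)
                heapq.heappush(queue, (new_cost, neighbor, hop))
    return best


adj = adjacency(LINKS)
print("hop count from A:", distance_vector(adj)["A"])
print("link cost from A:", shortest_paths(adj, "A"))
```

With these made-up costs, the hop-count view sends traffic from A to D over the slow direct link, while the cost-based view prefers the two-hop path through B.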

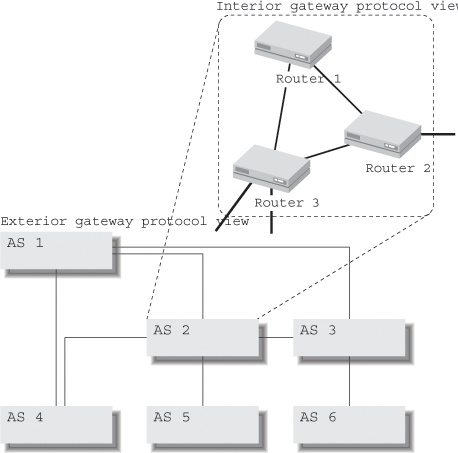

Obviously, periodically broadcasting all the routes or keeping topology information about every single connection isn’t possible for the entire Internet. Thus, in addition to interior routing protocols such as RIP and OSPF for use within a single organization’s network, exterior protocols are needed to relay routing information between organizations. Routers, especially routers connecting one type of network to another, were called “gateways” in the early days of the TCP/IP protocol family, so we usually talk about interior gateway protocols (IGPs) and exterior gateway protocols (EGPs). To confuse the uninitiated even further, one of the older EGPs is named EGP. There may be some time-forgotten Internet sites where EGP is still used, but the present protocol of choice for interdomain routing in the Internet is the Border Gateway Protocol Version 4 (BGP-4), a more advanced exterior gateway protocol.

BGP is sometimes called a path-vector protocol. It isn’t satisfied with a simple hop count, but it doesn’t keep track of the full topology of the entire network either. Every router receives reachability information from its neighbors; it then chooses the route with the shortest path for inclusion in the routing table and announces this path to other neighbors, if the routing policy permits it. The path is a list of every Autonomous System (AS) between the router and the destination. The idea behind Autonomous Systems is that networks don’t care about the inner details of other networks. Thus, instead of listing every router along the way, BGP groups networks together within ASes so they may be viewed as a single entity, whether an AS contains only a single BGP-speaking router or hundreds of BGP- and non-BGP-speaking routers. Figure 1-4 shows the differences between the two views: the EGP sees ASes as a whole; the IGP sees individual routers within an AS but is limited to a view of a single AS.
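The way an AS path grows as a route is passed along, and how it doubles as a loop detector, can be sketched in a few lines of Python. The function names are hypothetical, and picking the shortest path is only one step of the real BGP decision process; the AS numbers are borrowed from the example later in this chapter.

```python
def accept(local_as, as_path):
    """Reject a route whose AS path already contains our own AS (a loop)."""
    return local_as not in as_path


def prepend(local_as, as_path):
    """Add our own AS to the front of the path before announcing the route."""
    return (local_as,) + tuple(as_path)


def shortest(paths):
    """Choose the route with the fewest ASes in its path."""
    return min(paths, key=len)


# AS 1 hears the same destination directly from AS 4 and also through AS 2.
received_by_as1 = [(2, 4), (4,)]
best = shortest(received_by_as1)
announced = prepend(1, best)

print("AS 1 prefers path", best, "and announces", announced)
print("AS 4 accepts it back?", accept(4, announced))
print("AS 3 accepts it?", accept(3, announced))
```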

An AS is sometimes described as “a single administrative domain,” but this isn’t completely accurate. An AS can span more than one organization, for instance, an ISP and its non-BGP speaking customers. The ISP doesn’t necessarily have any control over its customers’ routers, but the customers do fall within the ISP’s AS and are subject to the same routing policy, because without BGP, they have no way to express a routing policy of their own.

It may seem strange that in EGPs, the policies take precedence over the reachability information, but there is a good reason for this. ISPs will, of course, receive all routes from their upstream ISPs and announce all routes to their customers, thereby providing transit services to remote destinations. Someone who is a customer of two ISPs wouldn’t want to announce ISP 1’s routes to ISP 2, however. And using a customer’s infrastructure for your own purposes is usually not considered good business practice. Thus, the most basic routing policy is “send routes only to paying customers.” Policies become more complex when two networks peer. When networks are similar in size, it makes sense to exchange traffic at exchange points rather than to pay a larger network for handling it. In this case, the routing policy is to send just your own routes and your customers’ routes to the peer and keep the expensive routes from upstream ISPs to yourself. Announcing a route means inviting the other side to send traffic, so this policy is the BGP way of inviting your peering partner to send you traffic with you or one of your customers as its destination.
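The policies described in this paragraph boil down to a small decision rule, sketched here in Python. The relationship labels and the `should_export` function are made up for this illustration; on a real router, the same effect is achieved with route filters rather than a single function.

```python
CUSTOMER, PEER, UPSTREAM, LOCAL = "customer", "peer", "upstream", "local"


def should_export(learned_from, send_to):
    """Decide whether to announce a route to a neighbor.

    learned_from: our relationship to the neighbor the route came from
                  (LOCAL for routes we originate ourselves).
    send_to:      our relationship to the neighbor we might announce it to.
    """
    if learned_from in (LOCAL, CUSTOMER):
        return True                  # own and customer routes go to everyone
    return send_to == CUSTOMER       # peer and upstream routes only to paying customers


for learned_from in (LOCAL, CUSTOMER, PEER, UPSTREAM):
    for send_to in (CUSTOMER, PEER, UPSTREAM):
        verdict = "announce" if should_export(learned_from, send_to) else "withhold"
        print(f"route from {learned_from:8} to {send_to:8}: {verdict}")
```

The rule captures both cases from the text: a customer of two ISPs never passes one ISP’s routes to the other, and a peer only ever hears your own routes and those of your customers.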

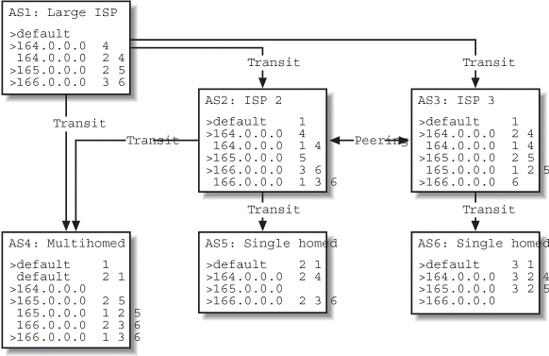

Figure 1-5 shows part of the Internet with one large ISP (AS 1), two medium-sized ISPs (AS 2 and AS 3) that resell the AS 1 transit service, and three customers (ASes 4, 5, and 6). Customer 4 is connected to two ISPs, ASes 1 and 2, and is therefore said to be “multihomed.” Transit routes are distributed from the top down (from 1 to 2 and 4, from 2 to 4 and 5, and from 3 to 6), and there is a peering connection between ISPs 2 and 3.

For the purposes of this example, there are only four routes: AS 1 announces a default route, indicating that it can handle traffic to every destination connected to the Net; ASes 4, 5, and 6 each announce a single route: 164.0.0.0, 165.0.0.0, and 166.0.0.0, respectively. After all routes have propagated throughout the network, the routing tables[8] will be populated as illustrated in Figure 1-5. The > character indicates the preferred route when there are several routes to the same destination. The numbers after the destination IP network form the AS path, which is used to make policy decisions and to make sure there are no routing loops.

- AS 1, the large ISP

The route from AS 4 (164.0.0.0) shows up twice in the AS 1 routing table, because AS 1 receives the announcement from both AS 4 itself and through AS 2. BGP sends only the route with the best path to its neighbors, but it doesn’t remove the less preferred routes from memory. In this case, the best path is the one directly to AS 4, because it’s obviously shorter. The other route to 164.0.0.0 is used only when the one with the shorter path becomes unavailable.

- AS 2, a smaller ISP

The BGP table for AS 2 is a bit more complex than the one for AS 1. AS 2 relays the customer routes 164.0.0.0 and 165.0.0.0 that it receives from ASes 4 and 5 to AS 1, so the rest of the world knows how to reach them. The peering link between AS 2 and AS 3 is used to exchange traffic to (and thus routes from) each other’s customers. So AS 2 sends the routes it received from ASes 4 and 5 to AS 3, but not the routes received from AS 1.

- AS 3, another small ISP

The situation for AS 3 is similar to that of AS 2, but AS 3 has only one customer route (from AS 6) to announce to AS 1. The paths for both 164.0.0.0 routes are the same length, but AS 3 will prefer the path over AS 2 (by means that are discussed later in the book) because it’s cheaper to send traffic to a peer rather than to a transit network.[9]

- AS 4, a multihomed customer of both AS 1 and AS 2

AS 4 gets two copies of every route: one from AS 1 and one from AS 2. The default route has a shorter path over AS 1, and the 165.0.0.0 route has a shorter path over AS 2. For 166.0.0.0, the path is the same length, so in the absence of any policies that instruct it to act differently, the BGP routing process will use several tie-breaking rules to make a choice. The 164.0.0.0 route has an empty path, because it’s a locally sourced route, generated by AS 4 itself.

- ASes 5 and 6, single-homed customers of ASes 2 and 3, respectively

The routing tables for ASes 5 and 6 are simple: transit routes and a single local route that is announced to their respective upstream ISPs. For networks with only one connection to the outside world, there is rarely any need to run BGP: setting a static default route has the same effect.
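The walkthrough above can be reproduced with a toy simulation. The Python sketch below encodes the relationships between the six ASes as described in the text, lets every AS announce its best route to the neighbors its policy allows, and repeats until nothing changes. Several parts are assumptions made for this sketch rather than BGP behavior: ties between equally long paths are broken by preferring customers over peers over upstreams and then by the lowest neighbor AS number, route withdrawals are not modeled, and all names are invented.

```python
# REL[a][b] describes what neighbor b is to AS a.
REL = {
    1: {2: "customer", 3: "customer", 4: "customer"},
    2: {1: "upstream", 3: "peer", 4: "customer", 5: "customer"},
    3: {1: "upstream", 2: "peer", 6: "customer"},
    4: {1: "upstream", 2: "upstream"},
    5: {2: "upstream"},
    6: {3: "upstream"},
}
ORIGINS = {1: "default", 4: "164.0.0.0", 5: "165.0.0.0", 6: "166.0.0.0"}
PREFERENCE = {"local": 0, "customer": 1, "peer": 2, "upstream": 3}


def relation(asn, neighbor):
    return "local" if neighbor is None else REL[asn][neighbor]


def best(asn, routes):
    """Pick (neighbor, path): shortest path first, then the assumed tie-breakers."""
    def rank(item):
        neighbor, path = item
        return (len(path), PREFERENCE[relation(asn, neighbor)], neighbor or 0)
    return min(routes.items(), key=rank)


def simulate():
    # tables[asn][prefix][neighbor] = AS path as received from that neighbor
    tables = {asn: {} for asn in REL}
    for asn, prefix in ORIGINS.items():
        tables[asn][prefix] = {None: ()}          # locally sourced, empty path
    changed = True
    while changed:
        changed = False
        for asn in REL:
            for prefix, routes in list(tables[asn].items()):
                source, path = best(asn, routes)
                learned = relation(asn, source)
                new_path = (asn,) + path
                for neighbor, rel in REL[asn].items():
                    allowed = learned in ("local", "customer") or rel == "customer"
                    if not allowed or neighbor in new_path:   # policy or loop check
                        continue
                    slot = tables[neighbor].setdefault(prefix, {})
                    if slot.get(asn) != new_path:
                        slot[asn] = new_path
                        changed = True
    return tables


tables = simulate()
for asn in sorted(tables):
    print(f"AS {asn}")
    for prefix in sorted(tables[asn]):
        chosen, _ = best(asn, tables[asn][prefix])
        for neighbor, path in tables[asn][prefix].items():
            marker = ">" if neighbor == chosen else " "
            print(f"  {marker} {prefix:10} {' '.join(map(str, path))}")
```

Under these assumptions, the output agrees with the description above: AS 1 holds two routes to 164.0.0.0 and prefers the direct one, AS 3 prefers the path over its peer AS 2, and AS 4 sees two equally long paths to 166.0.0.0.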

Multihoming

Having connections to two or more ISPs and running BGP means cooperating in worldwide interdomain routing. This is the only way to make sure your IP address range is still reachable when your connection to an ISP fails or when the ISP itself fails. Compared to just connecting to a single ISP, multihoming is like driving your own car rather than taking the bus. In the bus, someone else does the driving, and you’re just along for the ride. Under most circumstances, driving your own car isn’t very difficult, and the extra speed and flexibility are well worth it. However, you need to stay informed about issues such as traffic congestion, and you need to maintain the car yourself.

There are some important disadvantages to using BGP. A pessimist might say that you gain a lot of complexity to lose a lot of stability. Implementing BGP shouldn’t be taken lightly. Even if you do everything right, there will be times when you are unreachable because of BGP problems, when your network would have been reachable if you hadn’t used BGP. There is a lot you can do to keep the number of these incidents and the time to repair to a minimum, however. On the other hand, if you don’t run BGP, and your ISP has a problem in their network or the connection to them fails, there is usually very little you can do, and the downtime can be considerable. So in most cases, BGP will increase your uptime, but only if you carefully correct potential problems before they interfere with proper operation of the network.

[2] The “On Distributed Communications” series is available online at http://www.rand.org/publications/RM/baran.list.html.

[3] There was now also a MAE West, interconnected with FIX West.

[4] “The Design Philosophy of the DARPA Internet Protocols” contains a good overview; it can be found at http://www.cs.umd.edu/class/fall1999/cmsc711/papers/design-philosophy.pdf.

[5] This happens when router A thinks a certain destination is reachable over router B, but router B thinks this destination is reachable over router A. The packet is then forwarded back and forth between the two routers. A routing loop is usually caused by incorrect configuration or by temporary inconsistencies when there is a change in the network.

[6] To avoid confusion between routers and switches or hubs, Ethernets are drawn in this and other examples to resemble a strand of coaxial wiring with terminators at the ends and with hosts and routers connecting to the coax wire in different places.

[7] “Open” refers to OSPF being an open standard, not to the openness of the shortest path.

[8] The existence of separate routing tables for BGP processing (BGP table) and forwarding packets (“the routing table” or Forwarding Information Base) is ignored here.

[9] The relationship between traffic and cost is usually indirect, but in the long run, it’s cheaper to upgrade a peering connection for more traffic rather than a transit connection. The business case for peering with other networks is discussed later in the book.
