The future belongs to the companies and people that turn data into products.

We’ve all heard it: according to Hal Varian, statistics is the next sexy job. Five years ago, in What is Web 2.0, Tim O’Reilly said that “data is the next Intel Inside.” But what does that statement mean? Why do we suddenly care about statistics and about data?

In this post, I examine the many sides of data science—the technologies, the companies and the unique skill sets.

The web is full of “data-driven apps.” Almost any e-commerce application is a data-driven application. There’s a database behind a web front end, and middleware that talks to a number of other databases and data services (credit card processing companies, banks, and so on). But merely using data isn’t really what we mean by “data science.” A data application acquires its value from the data itself, and creates more data as a result. It’s not just an application with data; it’s a data product. Data science enables the creation of data products.

One of the earlier data products on the Web was the CDDB database. The developers of CDDB realized that any CD had a unique signature, based on the exact length (in samples) of each track on the CD. Gracenote built a database of track lengths, and coupled it to a database of album metadata (track titles, artists, album titles). If you’ve ever used iTunes to rip a CD, you’ve taken advantage of this database. Before it does anything else, iTunes reads the length of every track, sends it to CDDB, and gets back the track titles. If you have a CD that’s not in the database (including a CD you’ve made yourself), you can create an entry for an unknown album. While this sounds simple enough, it’s revolutionary: CDDB views music as data, not as audio, and creates new value in doing so. Their business is fundamentally different from selling music, sharing music, or analyzing musical tastes (though these can also be “data products”). CDDB arises entirely from viewing a musical problem as a data problem.

Google is a master at creating data products. Here’s a few examples:

Google’s breakthrough was realizing that a search engine could use input other than the text on the page. Google’s PageRank algorithm was among the first to use data outside of the page itself, in particular, the number of links pointing to a page. Tracking links made Google searches much more useful, and PageRank has been a key ingredient to the company’s success.

Spell checking isn’t a terribly difficult problem, but by suggesting corrections to misspelled searches, and observing what the user clicks in response, Google made it much more accurate. They’ve built a dictionary of common misspellings, their corrections, and the contexts in which they occur.

Speech recognition has always been a hard problem, and it remains difficult. But Google has made huge strides by using the voice data they’ve collected, and has been able to integrate voice search into their core search engine.

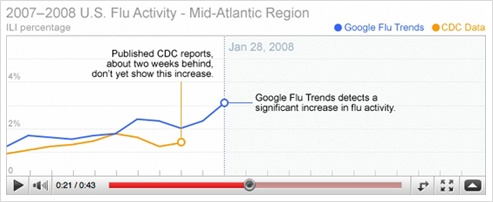

During the Swine Flu epidemic of 2009, Google was able to track the progress of the epidemic by following searches for flu-related topics.

Google isn’t the only company that knows how to use data. Facebook and LinkedIn use patterns of friendship relationships to suggest other people you may know, or should know, with sometimes frightening accuracy. Amazon saves your searches, correlates what you search for with what other users search for, and uses it to create surprisingly appropriate recommendations. These recommendations are “data products” that help to drive Amazon’s more traditional retail business. They come about because Amazon understands that a book isn’t just a book, a camera isn’t just a camera, and a customer isn’t just a customer; customers generate a trail of “data exhaust” that can be mined and put to use, and a camera is a cloud of data that can be correlated with the customers’ behavior, the data they leave every time they visit the site.

The thread that ties most of these applications together is that data collected from users provides added value. Whether that data is search terms, voice samples, or product reviews, the users are in a feedback loop in which they contribute to the products they use. That’s the beginning of data science.

In the last few years, there has been an explosion in the amount of data that’s available. Whether we’re talking about web server logs, tweet streams, online transaction records, “citizen science,” data from sensors, government data, or some other source, the problem isn’t finding data, it’s figuring out what to do with it. And it’s not just companies using their own data, or the data contributed by their users. It’s increasingly common to mashup data from a number of sources. “Data Mashups in R” analyzes mortgage foreclosures in Philadelphia County by taking a public report from the county sheriff’s office, extracting addresses and using Yahoo to convert the addresses to latitude and longitude, then using the geographical data to place the foreclosures on a map (another data source), and group them by neighborhood, valuation, neighborhood per-capita income, and other socio-economic factors.

The question facing every company today, every startup, every non-profit, every project site that wants to attract a community, is how to use data effectively—not just their own data, but all the data that’s available and relevant. Using data effectively requires something different from traditional statistics, where actuaries in business suits perform arcane but fairly well-defined kinds of analysis. What differentiates data science from statistics is that data science is a holistic approach. We’re increasingly finding data in the wild, and data scientists are involved with gathering data, massaging it into a tractable form, making it tell its story, and presenting that story to others.

To get a sense for what skills are required, let’s look at the data lifecycle: where it comes from, how you use it, and where it goes.

Data is everywhere: your government, your web server, your business partners, even your body. While we aren’t drowning in a sea of data, we’re finding that almost everything can (or has) been instrumented. At O’Reilly, we frequently combine publishing industry data from Nielsen BookScan with our own sales data, publicly available Amazon data, and even job data to see what’s happening in the publishing industry. Sites like Infochimps and Factual provide access to many large datasets, including climate data, MySpace activity streams, and game logs from sporting events. Factual enlists users to update and improve its datasets, which cover topics as diverse as endocrinologists to hiking trails.

Much of the data we currently work with is the direct consequence of Web 2.0, and of Moore’s Law applied to data. The web has people spending more time online, and leaving a trail of data wherever they go. Mobile applications leave an even richer data trail, since many of them are annotated with geolocation, or involve video or audio, all of which can be mined. Point-of-sale devices and frequent-shopper’s cards make it possible to capture all of your retail transactions, not just the ones you make online. All of this data would be useless if we couldn’t store it, and that’s where Moore’s Law comes in. Since the early ‘80s, processor speed has increased from 10 MHz to 3.6 GHz—an increase of 360 (not counting increases in word length and number of cores). But we’ve seen much bigger increases in storage capacity, on every level. RAM has moved from $1,000/MB to roughly $25/GB—a price reduction of about 40000, to say nothing of the reduction in size and increase in speed. Hitachi made the first gigabyte disk drives in 1982, weighing in at roughly 250 pounds; now terabyte drives are consumer equipment, and a 32 GB microSD card weighs about half a gram. Whether you look at bits per gram, bits per dollar, or raw capacity, storage has more than kept pace with the increase of CPU speed.

The importance of Moore’s law as applied to data isn’t just geek pyrotechnics. Data expands to fill the space you have to store it. The more storage is available, the more data you will find to put into it. The data exhaust you leave behind whenever you surf the web, friend someone on Facebook, or make a purchase in your local supermarket, is all carefully collected and analyzed. Increased storage capacity demands increased sophistication in the analysis and use of that data. That’s the foundation of data science.

So, how do we make that data useful? The first step of any data analysis project is “data conditioning,” or getting data into a state where it’s usable. We are seeing more data in formats that are easier to consume: Atom data feeds, web services, microformats, and other newer technologies provide data in formats that’s directly machine-consumable. But old-style screen scraping hasn’t died, and isn’t going to die. Many sources of “wild data” are extremely messy. They aren’t well-behaved XML files with all the metadata nicely in place. The foreclosure data used in “Data Mashups in R” was posted on a public website by the Philadelphia county sheriff’s office. This data was presented as an HTML file that was probably generated automatically from a spreadsheet. If you’ve ever seen the HTML that’s generated by Excel, you know that’s going to be fun to process.

Data conditioning can involve cleaning up messy HTML with tools like Beautiful Soup, natural language processing to parse plain text in English and other languages, or even getting humans to do the dirty work. You’re likely to be dealing with an array of data sources, all in different forms. It would be nice if there was a standard set of tools to do the job, but there isn’t. To do data conditioning, you have to be ready for whatever comes, and be willing to use anything from ancient Unix utilities such as awk to XML parsers and machine learning libraries. Scripting languages, such as Perl and Python, are essential.

Once you’ve parsed the data, you can start thinking about the quality of your data. Data is frequently missing or incongruous. If data is missing, do you simply ignore the missing points? That isn’t always possible. If data is incongruous, do you decide that something is wrong with badly behaved data (after all, equipment fails), or that the incongruous data is telling its own story, which may be more interesting? It’s reported that the discovery of ozone layer depletion was delayed because automated data collection tools discarded readings that were too low[1]. In data science, what you have is frequently all you’re going to get. It’s usually impossible to get “better” data, and you have no alternative but to work with the data at hand.

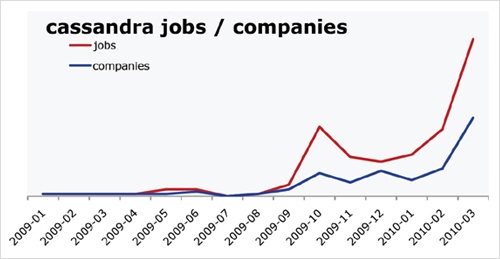

If the problem involves human language, understanding the data adds another dimension to the problem. Roger Magoulas, who runs the data analysis group at O’Reilly, was recently searching a database for Apple job listings requiring geolocation skills. While that sounds like a simple task, the trick was disambiguating “Apple” from many job postings in the growing Apple industry. To do it well you need to understand the grammatical structure of a job posting; you need to be able to parse the English. And that problem is showing up more and more frequently. Try using Google Trends to figure out what’s happening with the Cassandra database or the Python language, and you’ll get a sense of the problem. Google has indexed many, many websites about large snakes. Disambiguation is never an easy task, but tools like the Natural Language Toolkit library can make it simpler.

When natural language processing fails, you can replace artificial intelligence with human intelligence. That’s where services like Amazon’s Mechanical Turk come in. If you can split your task up into a large number of subtasks that are easily described, you can use Mechanical Turk’s marketplace for cheap labor. For example, if you’re looking at job listings, and want to know which originated with Apple, you can have real people do the classification for roughly $0.01 each. If you have already reduced the set to 10,000 postings with the word “Apple,” paying humans $0.01 to classify them only costs $100.

We’ve all heard a lot about “big data,” but “big” is really a red herring. Oil companies, telecommunications companies, and other data-centric industries have had huge datasets for a long time. And as storage capacity continues to expand, today’s “big” is certainly tomorrow’s “medium” and next week’s “small.” The most meaningful definition I’ve heard: “big data” is when the size of the data itself becomes part of the problem. We’re discussing data problems ranging from gigabytes to petabytes of data. At some point, traditional techniques for working with data run out of steam.

What are we trying to do with data that’s different? According to Jeff Hammerbacher[2] (@hackingdata), we’re trying to build information platforms or dataspaces. Information platforms are similar to traditional data warehouses, but different. They expose rich APIs, and are designed for exploring and understanding the data rather than for traditional analysis and reporting. They accept all data formats, including the most messy, and their schemas evolve as the understanding of the data changes.

Most of the organizations that have built data platforms have found it necessary to go beyond the relational database model. Traditional relational database systems stop being effective at this scale. Managing sharding and replication across a horde of database servers is difficult and slow. The need to define a schema in advance conflicts with reality of multiple, unstructured data sources, in which you may not know what’s important until after you’ve analyzed the data. Relational databases are designed for consistency, to support complex transactions that can easily be rolled back if any one of a complex set of operations fails. While rock-solid consistency is crucial to many applications, it’s not really necessary for the kind of analysis we’re discussing here. Do you really care if you have 1,010 or 1,012 Twitter followers? Precision has an allure, but in most data-driven applications outside of finance, that allure is deceptive. Most data analysis is comparative: if you’re asking whether sales to Northern Europe are increasing faster than sales to Southern Europe, you aren’t concerned about the difference between 5.92 percent annual growth and 5.93 percent.

To store huge datasets effectively, we’ve seen a new breed of databases appear. These are frequently called NoSQL databases, or Non-Relational databases, though neither term is very useful. They group together fundamentally dissimilar products by telling you what they aren’t. Many of these databases are the logical descendants of Google’s BigTable and Amazon’s Dynamo, and are designed to be distributed across many nodes, to provide “eventual consistency” but not absolute consistency, and to have very flexible schema. While there are two dozen or so products available (almost all of them open source), a few leaders have established themselves:

Cassandra: Developed at Facebook, in production use at Twitter, Rackspace, Reddit, and other large sites. Cassandra is designed for high performance, reliability, and automatic replication. It has a very flexible data model. A new startup, Riptano, provides commercial support.

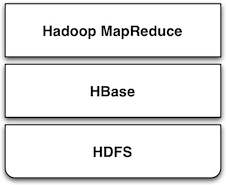

HBase: Part of the Apache Hadoop project, and modelled on Google’s BigTable. Suitable for extremely large databases (billions of rows, millions of columns), distributed across thousands of nodes. Along with Hadoop, commercial support is provided by Cloudera.

Storing data is only part of building a data platform, though. Data is only useful if you can do something with it, and enormous datasets present computational problems. Google popularized the MapReduce approach, which is basically a divide-and-conquer strategy for distributing an extremely large problem across an extremely large computing cluster. In the “map” stage, a programming task is divided into a number of identical subtasks, which are then distributed across many processors; the intermediate results are then combined by a single reduce task. In hindsight, MapReduce seems like an obvious solution to Google’s biggest problem, creating large searches. It’s easy to distribute a search across thousands of processors, and then combine the results into a single set of answers. What’s less obvious is that MapReduce has proven to be widely applicable to many large data problems, ranging from search to machine learning.

The most popular open source implementation of MapReduce is the Hadoop project. Yahoo’s claim that they had built the world’s largest production Hadoop application, with 10,000 cores running Linux, brought it onto center stage. Many of the key Hadoop developers have found a home at Cloudera, which provides commercial support. Amazon’s Elastic MapReduce makes it much easier to put Hadoop to work without investing in racks of Linux machines, by providing preconfigured Hadoop images for its EC2 clusters. You can allocate and de-allocate processors as needed, paying only for the time you use them.

Hadoop goes far beyond a simple MapReduce implementation (of which there are several); it’s the key component of a data platform. It incorporates HDFS, a distributed filesystem designed for the performance and reliability requirements of huge datasets; the HBase database; Hive, which lets developers explore Hadoop datasets using SQL-like queries; a high-level dataflow language called Pig; and other components. If anything can be called a one-stop information platform, Hadoop is it.

Hadoop has been instrumental in enabling “agile” data analysis. In software development, “agile practices” are associated with faster product cycles, closer interaction between developers and consumers, and testing. Traditional data analysis has been hampered by extremely long turn-around times. If you start a calculation, it might not finish for hours, or even days. But Hadoop (and particularly Elastic MapReduce) make it easy to build clusters that can perform computations on long datasets quickly. Faster computations make it easier to test different assumptions, different datasets, and different algorithms. It’s easer to consult with clients to figure out whether you’re asking the right questions, and it’s possible to pursue intriguing possibilities that you’d otherwise have to drop for lack of time.

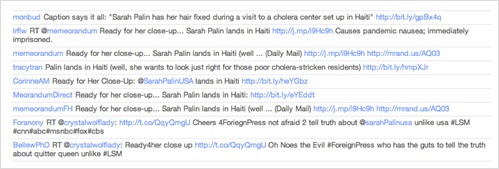

Hadoop is essentially a batch system, but Hadoop Online Prototype (HOP) is an experimental project that enables stream processing. Hadoop processes data as it arrives, and delivers intermediate results in (near) real-time. Near real-time data analysis enables features like trending topics on sites like Twitter. These features only require soft real-time; reports on trending topics don’t require millisecond accuracy. As with the number of followers on Twitter, a “trending topics” report only needs to be current to within five minutes—or even an hour. According to Hilary Mason (@hmason), data scientist at bit.ly, it’s possible to precompute much of the calculation, then use one of the experiments in real-time MapReduce to get presentable results.

Machine learning is another essential tool for the data scientist. We now expect web and mobile applications to incorporate recommendation engines, and building a recommendation engine is a quintessential artificial intelligence problem. You don’t have to look at many modern web applications to see classification, error detection, image matching (behind Google Goggles and SnapTell) and even face detection—an ill-advised mobile application lets you take someone’s picture with a cell phone, and look up that person’s identity using photos available online. Andrew Ng’s Machine Learning course is one of the most popular courses in computer science at Stanford, with hundreds of students (this video is highly recommended).

There are many libraries available for machine learning: PyBrain in Python, Elefant, Weka in Java, and Mahout (coupled to Hadoop). Google has just announced their Prediction API, which exposes their machine learning algorithms for public use via a RESTful interface. For computer vision, the OpenCV library is a de-facto standard.

Mechanical Turk is also an important part of the toolbox. Machine learning almost always requires a “training set,” or a significant body of known data with which to develop and tune the application. The Turk is an excellent way to develop training sets. Once you’ve collected your training data (perhaps a large collection of public photos from Twitter), you can have humans classify them inexpensively—possibly sorting them into categories, possibly drawing circles around faces, cars, or whatever interests you. It’s an excellent way to classify a few thousand data points at a cost of a few cents each. Even a relatively large job only costs a few hundred dollars.

While I haven’t stressed traditional statistics, building statistical models plays an important role in any data analysis. According to Mike Driscoll (@dataspora), statistics is the “grammar of data science.” It is crucial to “making data speak coherently.” We’ve all heard the joke that eating pickles causes death, because everyone who dies has eaten pickles. That joke doesn’t work if you understand what correlation means. More to the point, it’s easy to notice that one advertisement for R in a Nutshell generated 2 percent more conversions than another. But it takes statistics to know whether this difference is significant, or just a random fluctuation. Data science isn’t just about the existence of data, or making guesses about what that data might mean; it’s about testing hypotheses and making sure that the conclusions you’re drawing from the data are valid. Statistics plays a role in everything from traditional business intelligence (BI) to understanding how Google’s ad auctions work. Statistics has become a basic skill. It isn’t superseded by newer techniques from machine learning and other disciplines; it complements them.

While there are many commercial statistical packages, the open source R language—and its comprehensive package library, CRAN—is an essential tool. Although R is an odd and quirky language, particularly to someone with a background in computer science, it comes close to providing “one stop shopping” for most statistical work. It has excellent graphics facilities; CRAN includes parsers for many kinds of data; and newer extensions extend R into distributed computing. If there’s a single tool that provides an end-to-end solution for statistics work, R is it.

A picture may or may not be worth a thousand words, but a picture is certainly worth a thousand numbers. The problem with most data analysis algorithms is that they generate a set of numbers. To understand what the numbers mean, the stories they are really telling, you need to generate a graph. Edward Tufte’s Visual Display of Quantitative Information is the classic for data visualization, and a foundational text for anyone practicing data science. But that’s not really what concerns us here. Visualization is crucial to each stage of the data scientist. According to Martin Wattenberg (@wattenberg, founder of Flowing Media), visualization is key to data conditioning: if you want to find out just how bad your data is, try plotting it. Visualization is also frequently the first step in analysis. Hilary Mason says that when she gets a new data set, she starts by making a dozen or more scatter plots, trying to get a sense of what might be interesting. Once you’ve gotten some hints at what the data might be saying, you can follow it up with more detailed analysis.

There are many packages for plotting and presenting data. GnuPlot is very effective; R incorporates a fairly comprehensive graphics package; Casey Reas’ and Ben Fry’s Processing is the state of the art, particularly if you need to create animations that show how things change over time. At IBM’s Many Eyes, many of the visualizations are full-fledged interactive applications.

Nathan Yau’s FlowingData blog is a great place to look for creative visualizations. One of my favorites is this animation of the growth of Walmart over time. And this is one place where “art” comes in: not just the aesthetics of the visualization itself, but how you understand it. Does it look like the spread of cancer throughout a body? Or the spread of a flu virus through a population? Making data tell its story isn’t just a matter of presenting results; it involves making connections, then going back to other data sources to verify them. Does a successful retail chain spread like an epidemic, and if so, does that give us new insights into how economies work? That’s not a question we could even have asked a few years ago. There was insufficient computing power, the data was all locked up in proprietary sources, and the tools for working with the data were insufficient. It’s the kind of question we now ask routinely.

Data science requires skills ranging from traditional computer science to mathematics to art. Describing the data science group he put together at Facebook (possibly the first data science group at a consumer-oriented web property), Jeff Hammerbacher said:

... on any given day, a team member could author a multistage processing pipeline in Python, design a hypothesis test, perform a regression analysis over data samples with R, design and implement an algorithm for some data-intensive product or service in Hadoop, or communicate the results of our analyses to other members of the organization[3]

Where do you find the people this versatile? According to DJ Patil, chief scientist at LinkedIn (@dpatil), the best data scientists tend to be “hard scientists,” particularly physicists, rather than computer science majors. Physicists have a strong mathematical background, computing skills, and come from a discipline in which survival depends on getting the most from the data. They have to think about the big picture, the big problem. When you’ve just spent a lot of grant money generating data, you can’t just throw the data out if it isn’t as clean as you’d like. You have to make it tell its story. You need some creativity for when the story the data is telling isn’t what you think it’s telling.

Scientists also know how to break large problems up into smaller problems. Patil described the process of creating the group recommendation feature at LinkedIn. It would have been easy to turn this into a high-ceremony development project that would take thousands of hours of developer time, plus thousands of hours of computing time to do massive correlations across LinkedIn’s membership. But the process worked quite differently: it started out with a relatively small, simple program that looked at members’ profiles and made recommendations accordingly. Asking things like, did you go to Cornell? Then you might like to join the Cornell Alumni group. It then branched out incrementally. In addition to looking at profiles, LinkedIn’s data scientists started looking at events that members attended. Then at books members had in their libraries. The result was a valuable data product that analyzed a huge database—but it was never conceived as such. It started small, and added value iteratively. It was an agile, flexible process that built toward its goal incrementally, rather than tackling a huge mountain of data all at once.

This is the heart of what Patil calls “data jiujitsu”—using smaller auxiliary problems to solve a large, difficult problem that appears intractable. CDDB is a great example of data jiujitsu: identifying music by analyzing an audio stream directly is a very difficult problem (though not unsolvable—see midomi, for example). But the CDDB staff used data creatively to solve a much more tractable problem that gave them the same result. Computing a signature based on track lengths, and then looking up that signature in a database, is trivially simple.

Entrepreneurship is another piece of the puzzle. Patil’s first flippant answer to “what kind of person are you looking for when you hire a data scientist?” was “someone you would start a company with.” That’s an important insight: we’re entering the era of products that are built on data. We don’t yet know what those products are, but we do know that the winners will be the people, and the companies, that find those products. Hilary Mason came to the same conclusion. Her job as scientist at bit.ly is really to investigate the data that bit.ly is generating, and find out how to build interesting products from it. No one in the nascent data industry is trying to build the 2012 Nissan Stanza or Office 2015; they’re all trying to find new products. In addition to being physicists, mathematicians, programmers, and artists, they’re entrepreneurs.

Data scientists combine entrepreneurship with patience, the willingness to build data products incrementally, the ability to explore, and the ability to iterate over a solution. They are inherently interdiscplinary. They can tackle all aspects of a problem, from initial data collection and data conditioning to drawing conclusions. They can think outside the box to come up with new ways to view the problem, or to work with very broadly defined problems: “here’s a lot of data, what can you make from it?”

The future belongs to the companies who figure out how to collect and use data successfully. Google, Amazon, Facebook, and LinkedIn have all tapped into their datastreams and made that the core of their success. They were the vanguard, but newer companies like bit.ly are following their path. Whether it’s mining your personal biology, building maps from the shared experience of millions of travellers, or studying the URLs that people pass to others, the next generation of successful businesses will be built around data. The part of Hal Varian’s quote that nobody remembers says it all:

The ability to take data—to be able to understand it, to process it, to extract value from it, to visualize it, to communicate it—that’s going to be a hugely important skill in the next decades.

Data is indeed the new Intel Inside.

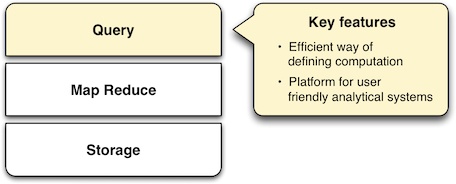

Storage, MapReduce and Query are ushering in data-driven products and services.

by Edd Dumbill

“Big data” is data that becomes large enough that it cannot be processed using conventional methods. Creators of web search engines were among the first to confront this problem. Today, social networks, mobile phones, sensors and science contribute to petabytes of data created daily.

To meet the challenge of processing such large data sets, Google created MapReduce. Google’s work and Yahoo’s creation of the Hadoop MapReduce implementation has spawned an ecosystem of big data processing tools.

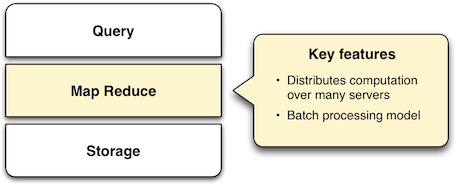

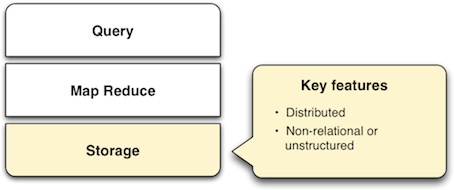

As MapReduce has grown in popularity, a stack for big data systems has emerged, comprising layers of Storage, MapReduce and Query (SMAQ). SMAQ systems are typically open source, distributed, and run on commodity hardware.

In the same way the commodity LAMP stack of Linux, Apache, MySQL and PHP changed the landscape of web applications, SMAQ systems are bringing commodity big data processing to a broad audience. SMAQ systems underpin a new era of innovative data-driven products and services, in the same way that LAMP was a critical enabler for Web 2.0.

Though dominated by Hadoop-based architectures, SMAQ encompasses a variety of systems, including leading NoSQL databases. This paper describes the SMAQ stack and where today’s big data tools fit into the picture.

Created at Google in response to the problem of creating web search indexes, the MapReduce framework is the powerhouse behind most of today’s big data processing. The key innovation of MapReduce is the ability to take a query over a data set, divide it, and run it in parallel over many nodes. This distribution solves the issue of data too large to fit onto a single machine.

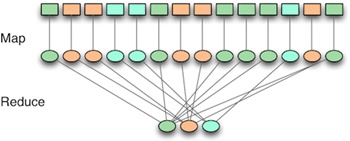

To understand how MapReduce works, look at the two phases suggested by its name. In the map phase, input data is processed, item by item, and transformed into an intermediate data set. In the reduce phase, these intermediate results are reduced to a summarized data set, which is the desired end result.

A simple example of MapReduce is the task of counting the number of unique words in a document. In the map phase, each word is identified and given the count of 1. In the reduce phase, the counts are added together for each word.

If that seems like an obscure way of doing a simple task, that’s because it is. In order for MapReduce to do its job, the map and reduce phases must obey certain constraints that allow the work to be parallelized. Translating queries into one or more MapReduce steps is not an intuitive process. Higher-level abstractions have been developed to ease this, discussed under Query below.

An important way in which MapReduce-based systems differ from conventional databases is that they process data in a batch-oriented fashion. Work must be queued for execution, and may take minutes or hours to process.

Using MapReduce to solve problems entails three distinct operations:

Loading the data—This operation is more properly called Extract, Transform, Load (ETL) in data warehousing terminology. Data must be extracted from its source, structured to make it ready for processing, and loaded into the storage layer for MapReduce to operate on it.

MapReduce—This phase will retrieve data from storage, process it, and return the results to the storage.

Extracting the result—Once processing is complete, for the result to be useful to humans, it must be retrieved from the storage and presented.

Many SMAQ systems have features designed to simplify the operation of each of these stages.

Hadoop is the dominant open source MapReduce implementation. Funded by Yahoo, it emerged in 2006 and, according to its creator Doug Cutting, reached “web scale” capability in early 2008.

The Hadoop project is now hosted by Apache. It has grown into a large endeavor, with multiple subprojects that together comprise a full SMAQ stack.

Since it is implemented in Java, Hadoop’s MapReduce implementation is accessible from the Java programming language. Creating MapReduce jobs involves writing functions to encapsulate the map and reduce stages of the computation. The data to be processed must be loaded into the Hadoop Distributed Filesystem.

Taking the word-count example from above, a suitable map function might look like the following (taken from the Hadoop MapReduce documentation, the key operations shown in bold).

public static class Map

extends Mapper<LongWritable, Text, Text, IntWritable> {

private final static IntWritable one = new IntWritable(1);

private Text word = new Text();

public void map(LongWritable key, Text value, Context context)

throws IOException, InterruptedException {

String line = value.toString();

StringTokenizer tokenizer = new StringTokenizer(line);

while (tokenizer.hasMoreTokens()) {

word.set(tokenizer.nextToken());

context.write(word, one);

}

}

}The corresponding reduce function sums the counts for each word.

public static class Reduce

extends Reducer<Text, IntWritable, Text, IntWritable> {

public void reduce(Text key, Iterable<IntWritable> values,

Context context) throws IOException, InterruptedException {

int sum = 0;

for (IntWritable val : values) {

sum += val.get();

}

context.write(key, new IntWritable(sum));

}

}The process of running a MapReduce job with Hadoop involves the following steps:

Defining the MapReduce stages in a Java program

Loading the data into the filesystem

Submitting the job for execution

Retrieving the results from the filesystem

Run via the standalone Java API, Hadoop MapReduce jobs can be complex to create, and necessitate programmer involvement. A broad ecosystem has grown up around Hadoop to make the task of loading and processing data more straightforward.

MapReduce has been implemented in a variety of other programming languages and systems, a list of which may be found in Wikipedia’s entry for MapReduce. Notably, several NoSQL database systems have integrated MapReduce, and are described later in this paper.

MapReduce requires storage from which to fetch data and in which to store the results of the computation. The data expected by MapReduce is not relational data, as used by conventional databases. Instead, data is consumed in chunks, which are then divided among nodes and fed to the map phase as key-value pairs. This data does not require a schema, and may be unstructured. However, the data must be available in a distributed fashion, to serve each processing node.

The design and features of the storage layer are important not just because of the interface with MapReduce, but also because they affect the ease with which data can be loaded and the results of computation extracted and searched.

The standard storage mechanism used by Hadoop is the Hadoop Distributed File System, HDFS. A core part of Hadoop, HDFS has the following features, as detailed in the HDFS design document.

Fault tolerance—Assuming that failure will happen allows HDFS to run on commodity hardware.

Streaming data access—HDFS is written with batch processing in mind, and emphasizes high throughput rather than random access to data.

Extreme scalability—HDFS will scale to petabytes; such an installation is in production use at Facebook.

Portability—HDFS is portable across operating systems.

Write once—By assuming a file will remain unchanged after it is written, HDFS simplifies replication and speeds up data throughput.

Locality of computation—Due to data volume, it is often much faster to move the program near to the data, and HDFS has features to facilitate this.

HDFS provides an interface similar to that of regular filesystems. Unlike a database, HDFS can only store and retrieve data, not index it. Simple random access to data is not possible. However, higher-level layers have been created to provide finer-grained functionality to Hadoop deployments, such as HBase.

One approach to making HDFS more usable is HBase. Modeled after Google’s BigTable database, HBase is a column-oriented database designed to store massive amounts of data. It belongs to the NoSQL universe of databases, and is similar to Cassandra and Hypertable.

HBase uses HDFS as a storage system, and thus is capable of storing a large volume of data through fault-tolerant, distributed nodes. Like similar column-store databases, HBase provides REST and Thrift based API access.

Because it creates indexes, HBase offers fast, random access to its contents, though with simple queries. For complex operations, HBase acts as both a source and a sink (destination for computed data) for Hadoop MapReduce. HBase thus allows systems to interface with Hadoop as a database, rather than the lower level of HDFS.

Data warehousing, or storing data in such a way as to make reporting and analysis easier, is an important application area for SMAQ systems. Developed originally at Facebook, Hive is a data warehouse framework built on top of Hadoop. Similar to HBase, Hive provides a table-based abstraction over HDFS and makes it easy to load structured data. In contrast to HBase, Hive can only run MapReduce jobs and is suited for batch data analysis. Hive provides a SQL-like query language to execute MapReduce jobs, described in the Query section below.

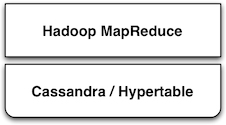

Cassandra and Hypertable are both scalable column-store databases that follow the pattern of BigTable, similar to HBase.

An Apache project, Cassandra originated at Facebook and is now in production in many large-scale websites, including Twitter, Facebook, Reddit and Digg. Hypertable was created at Zvents and spun out as an open source project.

Both databases offer interfaces to the Hadoop API that allow them to act as a source and a sink for MapReduce. At a higher level, Cassandra offers integration with the Pig query language (see the Query section below), and Hypertable has been integrated with Hive.

The storage solutions examined so far have all depended on Hadoop for MapReduce. Other NoSQL databases have built-in MapReduce features that allow computation to be parallelized over their data stores. In contrast with the multi-component SMAQ architectures of Hadoop-based systems, they offer a self-contained system comprising storage, MapReduce and query all in one.

Whereas Hadoop-based systems are most often used for batch-oriented analytical purposes, the usual function of NoSQL stores is to back live applications. The MapReduce functionality in these databases tends to be a secondary feature, augmenting other primary query mechanisms. Riak, for example, has a default timeout of 60 seconds on a MapReduce job, in contrast to the expectation of Hadoop that such a process may run for minutes or hours.

These prominent NoSQL databases contain MapReduce functionality:

CouchDB is a distributed database, offering semi-structured document-based storage. Its key features include strong replication support and the ability to make distributed updates. Queries in CouchDB are implemented using JavaScript to define the map and reduce phases of a MapReduce process.

MongoDB is very similar to CouchDB in nature, but with a stronger emphasis on performance, and less suitability for distributed updates, replication, and versioning. MongoDB MapReduce operations are specified using JavaScript.

Riak is another database similar to CouchDB and MongoDB, but places its emphasis on high availability. MapReduce operations in Riak may be specified with JavaScript or Erlang.

In many applications, the primary source of data is in a relational database using platforms such as MySQL or Oracle. MapReduce is typically used with this data in two ways:

Using relational data as a source (for example, a list of your friends in a social network).

Re-injecting the results of a MapReduce operation into the database (for example, a list of product recommendations based on friends’ interests).

It is therefore important to understand how MapReduce can interface with relational database systems. At the most basic level, delimited text files serve as an import and export format between relational databases and Hadoop systems, using a combination of SQL export commands and HDFS operations. More sophisticated tools do, however, exist.

The Sqoop tool is designed to import data from relational databases into Hadoop. It was developed by Cloudera, an enterprise-focused distributor of Hadoop platforms. Sqoop is database-agnostic, as it uses the Java JDBC database API. Tables can be imported either wholesale, or using queries to restrict the data import.

Sqoop also offers the ability to re-inject the results of MapReduce from HDFS back into a relational database. As HDFS is a filesystem, Sqoop expects delimited text files and transforms them into the SQL commands required to insert data into the database.

For Hadoop systems that utilize the Cascading API (see the Query section below) the cascading.jdbc and cascading-dbmigrate tools offer similar source and sink functionality.

In addition to relational data sources, streaming data sources, such as web server log files or sensor output, constitute the most common source of input to big data systems. The Cloudera Flume project aims at providing convenient integration between Hadoop and streaming data sources. Flume aggregates data from both network and file sources, spread over a cluster of machines, and continuously pipes these into HDFS. The Scribe server, developed at Facebook, also offers similar functionality.

Several massively parallel processing (MPP) database products have MapReduce functionality built in. MPP databases have a distributed architecture with independent nodes that run in parallel. Their primary application is in data warehousing and analytics, and they are commonly accessed using SQL.

The Greenplum database is based on the open source PostreSQL DBMS, and runs on clusters of distributed hardware. The addition of MapReduce to the regular SQL interface enables fast, large-scale analytics over Greenplum databases, reducing query times by several orders of magnitude. Greenplum MapReduce permits the mixing of external data sources with the database storage. MapReduce operations can be expressed as functions in Perl or Python.

Aster Data’s nCluster data warehouse system also offers MapReduce functionality. MapReduce operations are invoked using Aster Data’s SQL-MapReduce technology. SQL-MapReduce enables the intermingling of SQL queries with MapReduce jobs defined using code, which may be written in languages including C#, C++, Java, R or Python.

Other data warehousing solutions have opted to provide connectors with Hadoop, rather than integrating their own MapReduce functionality.

Vertica, famously used by Farmville creator Zynga, is an MPP column-oriented database that offers a connector for Hadoop.

Netezza is an established manufacturer of hardware data warehousing and analytical appliances. Recently acquired by IBM, Netezza is working with Hadoop distributor Cloudera to enhance the interoperation between their appliances and Hadoop. While it solves similar problems, Netezza falls outside of our SMAQ definition, lacking both the open source and commodity hardware aspects.

Although creating a Hadoop-based system can be done entirely with open source, it requires some effort to integrate such a system. Cloudera aims to make Hadoop enterprise-ready, and has created a unified Hadoop distribution in its Cloudera Distribution for Hadoop (CDH). CDH for Hadoop parallels the work of Red Hat or Ubuntu in creating Linux distributions. CDH comes in both a free edition and an Enterprise edition with additional proprietary components and support. CDH is an integrated and polished SMAQ environment, complete with user interfaces for operation and query. Cloudera’s work has resulted in some significant contributions to the Hadoop open source ecosystem.

Specifying MapReduce jobs in terms of defining distinct map and reduce functions in a programming language is unintuitive and inconvenient, as is evident from the Java code listings shown above. To mitigate this, SMAQ systems incorporate a higher-level query layer to simplify both the specification of the MapReduce operations and the retrieval of the result.

Many organizations using Hadoop will have already written in-house layers on top of the MapReduce API to make its operation more convenient. Several of these have emerged either as open source projects or commercial products.

Query layers typically offer features that handle not only the specification of the computation, but the loading and saving of data and the orchestration of the processing on the MapReduce cluster. Search technology is often used to implement the final step in presenting the computed result back to the user.

Developed by Yahoo and now part of the Hadoop project, Pig provides a new high-level language, Pig Latin, for describing and running Hadoop MapReduce jobs. It is intended to make Hadoop accessible for developers familiar with data manipulation using SQL, and provides an interactive interface as well as a Java API. Pig integration is available for the Cassandra and HBase databases.

Below is shown the word-count example in Pig, including both the data loading and storing phases (the notation $0 refers to the first field in a record).

input = LOAD 'input/sentences.txt' USING TextLoader(); words = FOREACH input GENERATE FLATTEN(TOKENIZE($0)); grouped = GROUP words BY $0; counts = FOREACH grouped GENERATE group, COUNT(words); ordered = ORDER counts BY $0; STORE ordered INTO 'output/wordCount' USING PigStorage();

While Pig is very expressive, it is possible for developers to write custom steps in User Defined Functions (UDFs), in the same way that many SQL databases support the addition of custom functions. These UDFs are written in Java against the Pig API.

Though much simpler to understand and use than the MapReduce API, Pig suffers from the drawback of being yet another language to learn. It is SQL-like in some ways, but it is sufficiently different from SQL that it is difficult for users familiar with SQL to reuse their knowledge.

As introduced above, Hive is an open source data warehousing solution built on top of Hadoop. Created by Facebook, it offers a query language very similar to SQL, as well as a web interface that offers simple query-building functionality. As such, it is suited for non-developer users, who may have some familiarity with SQL.

Hive’s particular strength is in offering ad-hoc querying of data, in contrast to the compilation requirement of Pig and Cascading. Hive is a natural starting point for more full-featured business intelligence systems, which offer a user-friendly interface for non-technical users.

The Cloudera Distribution for Hadoop integrates Hive, and provides a higher-level user interface through the HUE project, enabling users to submit queries and monitor the execution of Hadoop jobs.

The Cascading project provides a wrapper around Hadoop’s MapReduce API to make it more convenient to use from Java applications. It is an intentionally thin layer that makes the integration of MapReduce into a larger system more convenient. Cascading’s features include:

A data processing API that aids the simple definition of MapReduce jobs.

An API that controls the execution of MapReduce jobs on a Hadoop cluster.

Access via JVM-based scripting languages such as Jython, Groovy, or JRuby.

Integration with data sources other than HDFS, including Amazon S3 and web servers.

Validation mechanisms to enable the testing of MapReduce processes.

Cascading’s key feature is that it lets developers assemble MapReduce operations as a flow, joining together a selection of “pipes”. It is well suited for integrating Hadoop into a larger system within an organization.

While Cascading itself doesn’t provide a higher-level query language, a derivative open source project called Cascalog does just that. Using the Clojure JVM language, Cascalog implements a query language similar to that of Datalog. Though powerful and expressive, Cascalog is likely to remain a niche query language, as it offers neither the ready familiarity of Hive’s SQL-like approach nor Pig’s procedural expression. The listing below shows the word-count example in Cascalog: it is significantly terser, if less transparent.

(defmapcatop split [sentence]

(seq (.split sentence "\\s+")))

(?<- (stdout) [?word ?count]

(sentence ?s) (split ?s :> ?word)

(c/count ?count))An important component of large-scale data deployments is retrieving and summarizing data. The addition of database layers such as HBase provides easier access to data, but does not provide sophisticated search capabilities.

To solve the search problem, the open source search and indexing platform Solr is often used alongside NoSQL database systems. Solr uses Lucene search technology to provide a self-contained search server product.

For example, consider a social network database where MapReduce is used to compute the influencing power of each person, according to some suitable metric. This ranking would then be reinjected to the database. Using Solr indexing allows operations on the social network, such as finding the most influential people whose interest profiles mention mobile phones, for instance.

Originally developed at CNET and now an Apache project, Solr has evolved from being just a text search engine to supporting faceted navigation and results clustering. Additionally, Solr can manage large data volumes over distributed servers. This makes it an ideal solution for result retrieval over big data sets, and a useful component for constructing business intelligence dashboards.

MapReduce, and Hadoop in particular, offers a powerful means of distributing computation among commodity servers. Combined with distributed storage and increasingly user-friendly query mechanisms, the resulting SMAQ architecture brings big data processing within reach for even small- and solo-development teams.

It is now economic to conduct extensive investigation into data, or create data products that rely on complex computations. The resulting explosion in capability has forever altered the landscape of analytics and data warehousing systems, lowering the bar to entry and fostering a new generation of products, services and organizational attitudes—a trend explored more broadly in Mike Loukides’ “What is Data Science?” report.

The emergence of Linux gave power to the innovative developer with merely a small Linux server at their desk: SMAQ has the same potential to streamline data centers, foster innovation at the edges of an organization, and enable new startups to cheaply create data-driven businesses.

Infochimps execs discuss the challenges of data scraping.

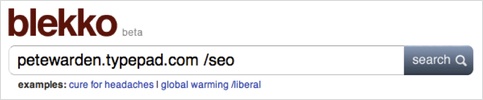

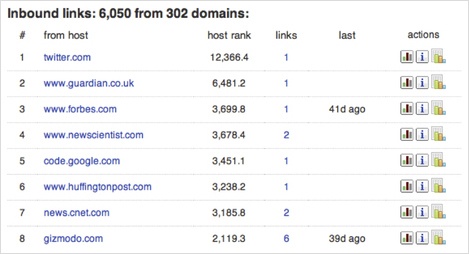

In 2008, the Austin-based data startup Infochimps released a scrape of Twitter data that was later taken down at the request of the microblogging site because of user privacy concerns. Infochimps has since struck a deal with Twitter to make some datasets available on the site, and the Infochimps marketplace now contains more than 10,000 datasets from a variety of sources. Not all these datasets have been obtained via scraping, but nevertheless, the company’s process of scraping, cleaning, and selling big data is an interesting topic to explore, both technically and legally.

With that in mind, Infochimps CEO Nick Ducoff, CTO Flip Kromer, and business development manager Dick Hall explain the business of data scraping in the following interview.

What are the legal implications of data scraping?

Dick Hall: There are three main areas you need to consider: copyright, terms of service, and “trespass to chattels.”

United States copyright law protects against unauthorized copying of “original works of authorship.” Facts and ideas are not copyrightable. However, expressions or arrangements of facts may be copyrightable. For example, a recipe for dinner is not copyrightable, but a recipe book with a series of recipes selected based on a unifying theme would be copyrightable. This example illustrates the “originality” requirement for copyright.

Let’s apply this to a concrete web-scraping example. The New York Times publishes a blog post that includes the results of an election poll arranged in descending order by percentage. The New York Times can claim a copyright on the blog post, but not the table of poll results. A web scraper is free to copy the data contained in the table without fear of copyright infringement. However, in order to make a copy of the blog post wholesale, the web scraper would have to rely on a defense to infringement, such as fair use. The result is that it is difficult to maintain a copyright over data, because only a specific arrangement or selection of the data will be protected.

Most websites include a page outlining their terms of service (ToS), which defines the acceptable use of the website. For example, YouTube forbids a user from posting copyrighted materials if the user does not own the copyright. Terms of service are based in contract law, but their enforceability is a gray area in US law. A web scraper violating the letter of a site’s ToS may argue that they never explicitly saw or agreed to the terms of service.

Assuming ToS are enforceable, they are a risky issue for web scrapers. First, every site on the Internet will have a different ToS — Twitter, Facebook, and The New York Times may all have drastically different ideas of what is acceptable use. Second, a site may unilaterally change the ToS without notice and maintain that continued use represents acceptance of the new ToS by a web scraper or user. For example, Twitter recently changed its ToS to make it significantly more difficult for outside organizations to store or export tweets for any reason.

There’s also the issue of volume. High-volume web scraping could cause significant monetary damages to the sites being scraped. For example, if a web scraper checks a site for changes several thousand times per second, it is functionally equivalent to a denial of service attack. In this case, the web scraper may be liable for damages under a theory of “trespass to chattels,” because the site owner has a property interest in his or her web servers. A good-natured web scraper should be able to avoid this issue by picking a reasonable frequency for scraping.

What are some of the challenges of acquiring data through scraping?

Flip Kromer: There are several problems with the scale and the metadata, as well as historical complications.

Scale — It’s obvious that terabytes of data will cause problems, but so (on most filesystems) will having tens of millions of files in the same directory tree.

Metadata — It’s a chicken-and-egg problem. Since few programs can draw on rich metadata, it’s not much use annotating it. But since so few datasets are annotated, it’s not worth writing support into your applications. We have an internal data-description language that we plan to open source as it matures.

Historical complications — Statisticians like SPSS files. Semantic web advocates like RDF/XML. Wall Street quants like Mathematica exports. There is no One True Format. Lifting each out of its source domain is time consuming.

But the biggest non-obvious problem we see is source domain complexity. This is what we call the “uber” problem. A developer wants the answer to a reasonable question, such as “What was the air temperature in Austin at noon on August 6, 1998?” The obvious answer — “damn hot” — isn’t acceptable. Neither is:

Well, it’s complicated. See, there are multiple weather stations, all reporting temperatures — each with its own error estimate — at different times. So you simply have to take the spatial- and time-average of their reported values across the region. And by the way, did you mean Austin’s city boundary, or its metropolitan area, or its downtown region?

There are more than a dozen incompatible yet fundamentally correct ways to measure time: Earth-centered? Leap seconds? Calendrical? Does the length of a day change as the earth’s rotational speed does?

Data at “everything” scale is sourced by domain experts, who necessarily live at the “it’s complicated” level. To make it useful to the rest of the world requires domain knowledge, and often a transformation that is simply nonsensical within the source domain.

How will data marketplaces change the work and direction of data startups?

Nick Ducoff: I vividly remember being taught about comparative advantage. This might age me a bit, but the lesson was: Michael Jordan doesn’t mow his own lawn. Why? Because he should spend his time practicing basketball since that’s what he’s best at and makes a lot of money doing. The same analogy applies to software developers. If you are best at the presentation layer, you don’t want to spend your time futzing around with databases

Infochimps allows these developers to spend their time doing what they do best — building apps — while we spend ours doing what we do best — making data easy to find and use. What we’re seeing is startups focusing on pieces of the stack. Over time the big cloud providers will buy these companies to integrate into their stacks.

Companies like Heroku (acquired by Salesforce) and CloudKick (acquired by Rackspace) have paved the way for this. Tools like ScraperWiki and Junar will allow anybody to pull down tables off the web, and companies like Mashery, Apigee and 3scale will continue to make APIs more prevalent. We’ll help make these tables and APIs findable and usable. Developers will be able to go from idea to app in hours, not days or weeks.

This interview was edited and condensed.

A data task illustrates the importance of simple and flexible tools.

The flowering of data science has both driven, and been driven by, an explosion of powerful tools. R provides a great platform for doing statistical analysis, Hadoop provides a framework for orchestrating large clusters to solve problems in parallel, and many NoSQL databases exist for storing huge amounts of unstructured data. The heavy machinery for serious number crunching includes perennials such as Mathematica, Matlab, and Octave, most of which have been extended for use with large clusters and other big iron.

But these tools haven’t negated the value of much simpler tools; in fact, they’re an essential part of a data scientist’s toolkit. Hilary Mason and Chris Wiggins wrote that “Sed, awk, grep are enough for most small tasks,” and there’s a layer of tools below sed, awk, and grep that are equally useful. Hilary has pointed out the value of exploring data sets with simple tools before proceeding to a more in-depth analysis. The advent of cloud computing, Amazon’s EC2 in particular, also places a premium on fluency with simple command-line tools. In conversation, Mike Driscoll of Metamarkets pointed out the value of basic tools like grep to filter your data before processing it or moving it somewhere else. Tools like grep were designed to do one thing and do it well. Because they’re so simple, they’re also extremely flexible, and can easily be used to build up powerful processing pipelines using nothing but the command line. So while we have an extraordinary wealth of power tools at our disposal, we’ll be the poorer if we forget the basics.

With that in mind, here’s a very simple, and not contrived, task that I needed to accomplish. I’m a ham radio operator. I spent time recently in a contest that involved making contacts with lots of stations all over the world, but particularly in Russia. Russian stations all sent their two-letter oblast abbreviation (equivalent to a US state). I needed to figure out how many oblasts I contacted, along with counting oblasts on particular ham bands. Yes, I have software to do that; and no, it wasn’t working (bad data file, since fixed). So let’s look at how to do this with the simplest of tools.

(Note: Some of the spacing in the associated data was edited to fit on the page. If you copy and paste the data, a few commands that rely on counting spaces won’t work.)

Log entries look like this:

QSO: 14000 CW 2011-03-19 1229 W1JQ 599 0001 UV5U 599 0041 QSO: 14000 CW 2011-03-19 1232 W1JQ 599 0002 SO2O 599 0043 QSO: 21000 CW 2011-03-19 1235 W1JQ 599 0003 RG3K 599 VR QSO: 21000 CW 2011-03-19 1235 W1JQ 599 0004 UD3D 599 MO ...

Most of the fields are arcane stuff that we won’t need for these exercises. The Russian entries have a two-letter oblast abbreviation at the end; rows that end with a number are contacts with stations outside of Russia. We’ll also use the second field, which identifies a ham radio band (21000 KHz, 14000 KHz, 7000 KHz, 3500 KHz, etc.) So first, let’s strip everything but the Russians with grep and a regular expression:

$ grep '599 [A-Z][A-Z]' rudx-log.txt | head -2 QSO: 21000 CW 2011-03-19 1235 W1JQ 599 0003 RG3K 599 VR QSO: 21000 CW 2011-03-19 1235 W1JQ 599 0004 UD3D 599 MO

grep may be the most useful tool in the Unix toolchest. Here, I’m just searching for lines that have 599 (which occurs everywhere) followed by a space, followed by two uppercase letters. To deal with mixed case (not necessary here), use grep -i. You can use character classes like :upper: rather than specifying the range A-Z, but why bother? Regular expressions can become very complex, but simple will often do the job, and be less error-prone.

If you’re familiar with grep, you may be asking why I didn’t use $ to match the end of line, and forget about the 599 noise. Good question. There is some whitespace at the end of the line; we’d have to match that, too. Because this file was created on a Windows machine, instead of just a newline at the end of each line, it has a return and a newline. The $ that grep uses to match the end-of-line only matches a Unix newline. So I did the easiest thing that would work reliably.

The simple head utility is a jewel. If you leave head off of the previous command, you’ll get a long listing scrolling down your screen. That’s rarely useful, especially when you’re building a chain of commands. head gives you the first few lines of output: 10 lines by default, but you can specify the number of lines you want. -2 says “just two lines,” which is enough for us to see that this script is doing what we want.

Next, we need to cut out the junk we don’t want. The easy way to do this is to use colrm (remove columns). That takes two arguments: the first and last column to remove. Column numbering starts with one, so in this case we can use colrm 1 72.

$ grep '599 [A-Z][A-Z]' rudx-log.txt | colrm 1 72 | head -2 VR MO ...

How did I know we wanted column 72? Just a little experimentation; command lines are cheap, especially with command history editing. I should actually use 73, but that additional space won’t hurt, nor will the additional whitespace at the end of each line. Yes, there are better ways to select columns; we’ll see them shortly. Next, we need to sort and find the unique abbreviations. I’m going to use two commands here: sort (which does what you’d expect), and uniq (to remove duplicates).

$ grep '599 [A-Z][A-Z]' rudx-log.txt | colrm 1 72 | sort |\ uniq | head -2 AD AL

Sort has a -u option that suppresses duplicates, but for some reason I prefer to keep sort and uniq separate. sort can also be made case-insensitive (-f), can select particular fields (meaning we could eliminate the colrm command, too), can do numeric sorts in addition to lexical sorts, and lots of other things. Personally, I prefer building up long Unix pipes one command at a time to hunting for the right options.

Finally, I said I wanted to count the number of oblasts. One of the most useful Unix utilities is a little program called wc: “word count.” That’s what it does. Its output is three numbers: the number of lines, the number of words, and the number of characters it has seen. For many small data projects, that’s really all you need.

$ grep '599 [A-Z][A-Z]' rudx-log.txt | colrm 1 72 | sort | uniq | wc

38 38 342So, 38 unique oblasts. You can say wc -l if you only want to count the lines; sometimes that’s useful. Notice that we no longer need to end the pipeline with head; we want wc to see all the data.

But I said I also wanted to know the number of oblasts on each ham band. That’s the first number (like 21000) in each log entry. So we’re throwing out too much data. We could fix that by adjusting colrm, but I promised a better way to pull out individual columns of data. We’ll use awk in a very simple way:

$ grep '599 [A-Z][A-Z]' rudx-log.txt | awk '{print $2 " " $11}' |\

sort | uniq

14000 AD

14000 AL

14000 AN

...awk is a very powerful tool; it’s a complete programming language that can do almost any kind of text manipulation. We could do everything we’ve seen so far as an awk program. But rather than use it as a power tool, I’m just using it to pull out the second and eleventh fields from my input. The single quotes are needed around the awk program, to prevent the Unix shell from getting confused. Within awk’s print command, we need to explicitly include the space, otherwise it will run the fields together.

The cut utility is another alternative to colrm and awk. It’s designed for removing portions of a file. cut isn’t a full programming language, but it can make more complex transformations than simply deleting a range of columns. However, although it’s a simple tool at heart, it can get tricky; I usually find that, when colrm runs out of steam, it’s best jumping all the way to awk.

We’re still a little short of our goal: how do we count the number of oblasts on each band? At this point, I use a really cheesy solution: another grep, followed by wc:

$ grep '599 [A-Z][A-Z]' rudx-log.txt | awk '{print $2 " " $11}' |\

sort | uniq | grep 21000 | wc

20 40 180

$ grep '599 [A-Z][A-Z]' rudx-log.txt | awk '{print $2 " " $11}' |\

sort | uniq | grep 14000 | wc

26 52 234

...OK, 20 oblasts on the 21 MHz band, 26 on the 14 MHz band. And at this point, there are two questions you really should be asking. First, why not put grep 21000 first, and save the awk invocation? That’s just how the script developed. You could put the grep first, though you’d still need to strip extra gunk from the file. Second: What if there are gigabytes of data? You have to run this command for each band, and for some other project, you might need to run it dozens or hundreds of times. That’s a valid objection. To solve this problem, you need a more complex awk script (which has associative arrays in which you can save data), or you need a programming language such as perl, python, or ruby. At the same time, we’ve gotten fairly far with our data exploration, using only the simplest of tools.

Now let’s up the ante. Let’s say that there are a number of directories with lots of files in them, including these rudx-log.txt files. Let’s say that these directories are organized by year (2001, 2002, etc.). And let’s say we want to count oblasts across all the years for which we have records. How do we do that?

Here’s where we need find. My first approach is to take the filename (rudx-log.txt) out of the grep command, and replace it with a find command that looks for every file named rudx-log.txt in subdirectories of the current directory:

$ grep '599 [A-Z][A-Z]' `find . -name rudx-log.txt -print` |\

awk '{print $2 " " $11}' | sort | uniq | grep 14000 | wc

48 96 432OK, so 48 directories on the 14 MHz band, lifetime. I thought I had done better than that. What’s happening, though? That find command is simply saying “look at the current directory and its subdirectories, find files with the given name, and print the output.” The backquotes tell the Unix shell to use the output of find as arguments to grep. So we’re just giving grep a long list of files, instead of just one. Note the -print option: if it’s not there, find happily does nothing.

We’re almost done, but there are a couple of bits of hair you should worry about. First, if you invoke grep with more than one file on the command line, each line of output begins with the name of the file in which it found a match:

... ./2008/rudx-log.txt:QSO: 14000 CW 2008-03-15 1526 W1JQ 599 0054 \\ UA6YW 599 AD ./2009/rudx-log.txt:QSO: 14000 CW 2009-03-21 1225 W1JQ 599 0015 \\ RG3K 599 VR ...

We’re lucky. grep just sticks the filename at the beginning of the line without adding spaces, and we’re using awk to print selected whitespace-separated fields. So the number of any field didn’t change. If we were using colrm, we’d have to fiddle with things to find the right columns. If the filenames had different lengths (reasonably likely, though not possible here), we couldn’t use colrm at all. Fortunately, you can suppress the filename by using grep -h.

The second piece of hair is less common, but potentially more troublesome. If you look at the last command, what we’re doing is giving the find command a really long list of filenames. How long is long? Can that list get too long? The answers are “we don’t know,” and “maybe.” In the nasty old days, things broke when the command line got longer than a few thousand characters. These days, who knows what’s too long ... But we’re doing “big data,” so it’s easy to imagine the find command expanding to hundreds of thousands, even millions of characters. More than that, our single Unix pipeline doesn’t parallelize very well; and if we really have big data, we want to parallelize it.

The answer to this problem is another old Unix utility, xargs. Xargs dates back to the time when it was fairly easy to come up with file lists that were too long. Its job is to break up command line arguments into groups and spawn as many separate commands as needed, running in parallel if possible (-P). We’d use it like this:

$ find . -name rudx-log.txt -print | xargs grep '599 [A-Z][A-Z]' |\

awk '{print $2 " " $11}' | grep 14000 | sort | uniq | wc

48 96 432This command is actually a nice little map-reduce implementation: the xargs command maps grep all the cores on your machine, and the output is reduced (combined) by the awk/sort/uniq chain. xargs has lots of command line options, so if you want to be confused, read the man page.

Another approach is to use find’s -exec option to invoke arbitrary commands. It’s somewhat more flexible than xargs, though in my opinion, find -exec has the sort of overly flexible but confusing syntax that’s surprisingly likely to lead to disaster. (It’s worth noting that the examples for -exec almost always involve automating bulk file deletion. Excuse me, but that’s a recipe for heartache. Take this from the guy who once deleted the business plan, then found that the backups hadn’t been done for about 6 months.) There’s an excellent tutorial for both xargs and find -exec at Softpanorama. I particularly like this tutorial because it emphasizes testing to make sure that your command won’t run amok and do bad things (like deleting the business plan).

That’s not all. Back in the dark ages, I wrote a shell script that did a recursive grep through all the subdirectories of the current directory. That’s a good shell programming exercise which I’ll leave to the reader. More to the point, I’ve noticed that there’s now a -R option to grep that makes it recursive. Clever little buggers ...

Before closing, I’d like to touch on a couple of tools that are a bit more exotic, but which should be in your arsenal in case things go wrong. od -c gives a raw dump of every character in your file. (-c says to dump characters, rather than octal or hexadecimal). It’s useful if you think your data is corrupted (it happens), or if it has something in it that you didn’t expect (it happens a LOT). od will show you what’s happening; once you know what the problem is, you can fix it. To fix it, you may want to use sed. sed is a cranky old thing: more than a hand tool, but not quite a power tool; sort of an antique treadle-operated drill press. It’s great for editing files on the fly, and doing batch edits. For example, you might use it if NUL characters were scattered through the data.

Finally, a tool I just learned about (thanks, @dataspora): the pipe viewer, pv. It isn’t a standard Unix utility. It comes with some versions of Linux, but the chances are that you’ll have to install it yourself. If you’re a Mac user, it’s in macports. pv tells you what’s happening inside the pipes as the command progresses. Just insert it into a pipe like this:

$ find . -name rudx-log.txt -print | xargs grep '599 [A-Z][A-Z]' |\

awk '{print $2 " " $11}' | pv | grep 14000 | sort | uniq | wc

3.41kB 0:00:00 [ 20kB/s] [<=>

48 96 432The pipeline runs normally, but you’ll get some additional output that shows the command’s progress. If something’s getting malfunctioning or performing too slowly, you’ll find out. pv is particularly good when you have huge amounts of data, and you can’t tell whether something has ground to a halt, or you just need to go out for coffee while the command runs to completion.

Whenever you need to work with data, don’t overlook the Unix “hand tools.” Sure, everything I’ve done here could be done with Excel or some other fancy tool like R or Mathematica. Those tools are all great, but if your data is living in the cloud, using these tools is possible, but painful. Yes, we have remote desktops, but remote desktops across the Internet, even with modern high-speed networking, are far from comfortable. Your problem may be too large to use the hand tools for final analysis, but they’re great for initial explorations. Once you get used to working on the Unix command line, you’ll find that it’s often faster than the alternatives. And the more you use these tools, the more fluent you’ll become.

Oh yeah, that broken data file that would have made this exercise superfluous? Someone emailed it to me after I wrote these scripts. The scripting took less than 10 minutes, start to finish. And, frankly, it was more fun.

Cloudera CEO Mike Olson on Hadoop’s architecture and its data applications.

by James Turner

Hadoop gets a lot of buzz these days in database and content management circles, but many people in the industry still don’t really know what it is and or how it can be best applied.

Cloudera CEO and Strata speaker Mike Olson, whose company offers an enterprise distribution of Hadoop and contributes to the project, discusses Hadoop’s background and its applications in the following interview.

Where did Hadoop come from?

Mike Olson: The underlying technology was invented by Google back in their earlier days so they could usefully index all the rich textural and structural information they were collecting, and then present meaningful and actionable results to users. There was nothing on the market that would let them do that, so they built their own platform. Google’s innovations were incorporated into Nutch, an open source project, and Hadoop was later spun-off from that. Yahoo has played a key role developing Hadoop for enterprise applications.

What problems can Hadoop solve?

Mike Olson: The Hadoop platform was designed to solve problems where you have a lot of data — perhaps a mixture of complex and structured data — and it doesn’t fit nicely into tables. It’s for situations where you want to run analytics that are deep and computationally extensive, like clustering and targeting. That’s exactly what Google was doing when it was indexing the web and examining user behavior to improve performance algorithms.

Hadoop applies to a bunch of markets. In finance, if you want to do accurate portfolio evaluation and risk analysis, you can build sophisticated models that are hard to jam into a database engine. But Hadoop can handle it. In online retail, if you want to deliver better search answers to your customers so they’re more likely to buy the thing you show them, that sort of problem is well addressed by the platform Google built. Those are just a few examples.

How is Hadoop architected?