Chapter 1. Careers in Data

In this chapter, Michael Li offers five tips for data scientists looking to strengthen their resumes. Jerry Overton seeks to quash the term “unicorn” by discussing five key habits to adopt that develop that magical combination of technical, analytical, and communication skills. Finally, Daniel Tunkelang explores why some employers prefer generalists over specialists when hiring data scientists.

Five Secrets for Writing the Perfect Data Science Resume

This post is also available on oreilly.com.

Data scientists are in demand like never before, but nonetheless, getting a job as a data scientist requires a resume that shows off your skills. At The Data Incubator, we’ve received tens of thousands of resumes from applicants for our free Data Science Fellowship. We work hard to read between the lines to find great candidates who happen to have lackluster CVs, but many recruiters aren’t as diligent. Based on our experience, here’s the advice we give to our Fellows about how to craft the perfect resume to get hired as a data scientist.

Be brief: A resume is a summary of your accomplishments. It is not the right place to put your Little League participation award. Remember, you are being judged on something a lot closer to the average of your listed accomplishments than their sum. Giving unnecessary information will only dilute your average. Keep your resume to no more than one page. Remember that a busy HR person will scan your resume for about 10 seconds. Adding more content will only distract them from finding key information (as will that second page). That said, don’t play font games; keep text at 11-point font or above.

Avoid weasel words: “Weasel words” are subjective words that create an impression but can allow their author to “weasel” out of any specific meaning if challenged. For example, “talented coder” contains a weasel word. “Contributed 2,000 lines to Apache Spark” can be verified on GitHub. “Strong statistical background” is a string of weasel words. “Statistics PhD from Princeton and top thesis prize from the American Statistical Association” can be verified. Self-assessments of skills are inherently unreliable and untrustworthy; finding others who can corroborate them (like universities or professional associations) makes your claims a lot more believable.

Use metrics: Mike Bloomberg is famous for saying “If you can’t measure it, you can’t manage it and you can’t fix it.” He’s not the only manager to have adopted this management philosophy, and those who have are all keen to see potential data scientists be able to quantify their accomplishments. “Achieved superior model performance” is weak (and weasel-word-laden). Giving some specific metrics will really help combat that. Consider “Reduced model error by 20% and reduced training time by 50%.” Metrics are a powerful way of avoiding weasel words.

Cite specific technologies in context: Getting hired for a technical job requires demonstrating technical skills. Having a list of technologies or programming languages at the top of your resume is a start, but that doesn’t give context. Instead, consider weaving those technologies into the narratives about your accomplishments. Continuing with our previous example, consider saying something like this: “Reduced model error by 20% and reduced training time by 50% by using a warm-start regularized regression in scikit-learn.” Not only are you specific about your claims but they are also now much more believable because of the specific techniques you’re citing. Even better, an employer is much more likely to believe you understand in-demand scikit-learn, because instead of just appearing on a list of technologies, you’ve spoken about how you used it.

Talk about the data size: For better or worse, big data has become a “mine is bigger than yours” contest. Employers are anxious to see candidates with experience in large data sets—this is not entirely unwarranted, as handling truly “big data” presents unique new challenges that are not present when handling smaller data. Continuing with the previous example, a hiring manager may not have a good understanding of the technical challenges you’re facing when doing the analysis. Consider saying something like this: “Reduced model error by 20% and reduced training time by 50% by using a warm-start regularized regression in scikit-learn streaming over 2 TB of data.”

While data science is a hot field, it has attracted a lot of newly rebranded data scientists. If you have real experience, set yourself apart from the crowd by writing a concise resume that quantifies your accomplishments with metrics and demonstrates that you can use in-demand tools and apply them to large data sets.

There’s Nothing Magical About Learning Data Science

This post is also available on oreilly.com.

There are people who can imagine ways of using data to improve an enterprise. These people can explain the vision, make it real, and effect change in their organizations. They are, or at least strive to be, as comfortable talking to an executive as they are typing and tinkering with code. We sometimes call them “unicorns” because the combination of skills they have is supposedly mystical, magical…and imaginary.

But I don’t think it’s unusual to meet someone who wants their work to have a real impact on real people. Nor do I think there is anything magical about learning data science skills. You can pick up the basics of machine learning in about 15 hours of lectures and videos. You can become reasonably good at most things with about 20 hours (45 minutes a day for a month) of focused, deliberate practice.

So basically, being a unicorn, or rather a professional data scientist, is something that can be taught. Learning all of the related skills is difficult but straightforward. With help from the folks at O’Reilly, we designed a tutorial for Strata + Hadoop World New York, 2016, “Data science that works: best practices for designing data-driven improvements, making them real, and driving change in your enterprise,” for those who aspire to the skills of a unicorn. The premise of the tutorial is that you can follow a direct path toward professional data science by taking on the following five distinguishing habits:

Put Aside the Technology Stack

The tools and technologies used in data science are often presented as a technology stack. The stack is a problem because it encourages you to be motivated by technology, rather than business problems. When you focus on a technology stack, you ask questions like, “Can this tool connect with that tool?” or, “What hardware do I need to install this product?” These are important concerns, but they aren’t the kinds of things that motivate a professional data scientist.

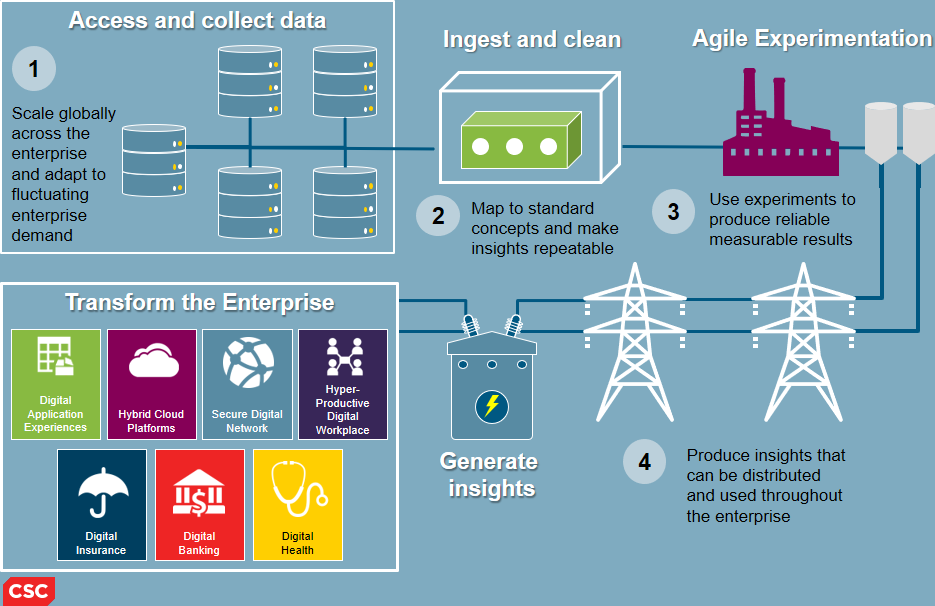

Professionals in data science tend to think of tools and technologies as part of an insight utility, rather than a technology stack (Figure 1-1). Focusing on building a utility forces you to select components based on the insights that the utility is meant to generate. With utility thinking, you ask questions like, “What do I need to discover an insight?” and, “Will this technology get me closer to my business goals?”

Figure 1-1. Data science tools and technologies as components of an insight utility, rather than a technology stack. Credit: Jerry Overton.

In the Strata + Hadoop World tutorial in New York, I taught simple strategies for shifting from technology-stack thinking to insight-utility thinking.

Keep Data Lying Around

Data science stories are often told in the reverse order from which they happen. In a well-written story, the author starts with an important question, walks you through the data gathered to answer the question, describes the experiments run, and presents resulting conclusions. In real data science, the process usually starts when someone looks at data they already have and asks, “Hey, I wonder if we could be doing something cool with this?” That question leads to tinkering, which leads to building something useful, which leads to the search for someone who might benefit. Most of the work is devoted to bridging the gap between the insight discovered and the stakeholder’s needs. But when the story is told, the reader is taken on a smooth progression from stakeholder to insight.

The questions you ask are usually the ones for which you have access to enough data to answer. Real data science usually requires a healthy stockpile of discretionary data. In the tutorial, I taught techniques for building and using data pipelines to make sure you always have enough data to do something useful.

Have a Strategy

Data strategy gets confused with data governance. When I think of strategy, I think of chess. To play a game of chess, you have to know the rules. To win a game of chess, you have to have a strategy. Knowing that “the D2 pawn can move to D3 unless there is an obstruction at D3 or the move exposes the king to direct attack” is necessary to play the game, but it doesn’t help me pick a winning move. What I really need are patterns that put me in a better position to win—“If I can get my knight and queen connected in the center of the board, I can force my opponent’s king into a trap in the corner.”

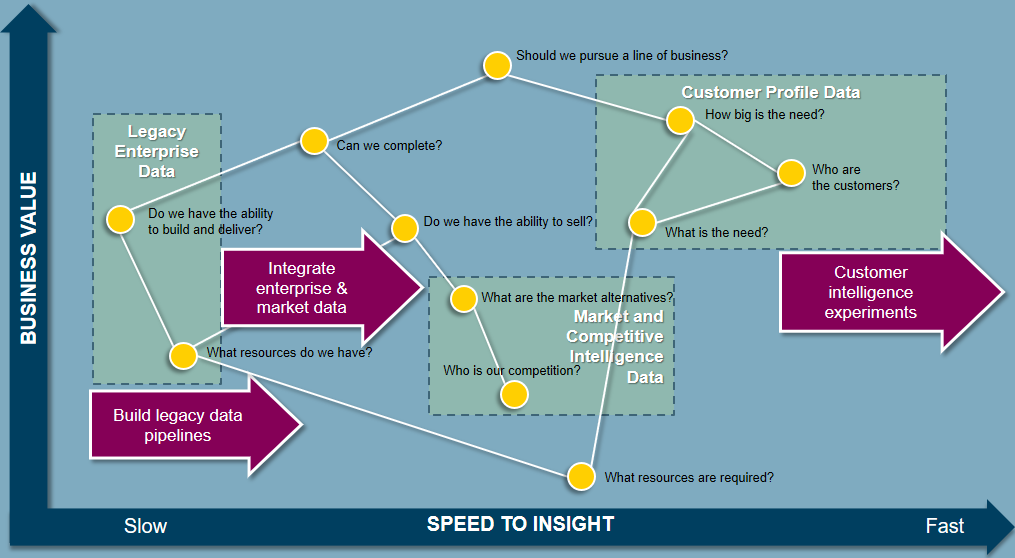

This lesson from chess applies to winning with data. Professional data scientists understand that to win with data, you need a strategy, and to build a strategy, you need a map. In the tutorial, we reviewed ways to build maps from the most important business questions, build data strategies, and execute the strategy using utility thinking (Figure 1-2).

Figure 1-2. A data strategy map. Data strategy is not the same as data governance. To execute a data strategy, you need a map. Credit: Jerry Overton.

Hack

By hacking, of course, I don’t mean subversive or illicit activities. I mean cobbling together useful solutions. Professional data scientists constantly need to build things quickly. Tools can make you more productive, but tools alone won’t bring your productivity to anywhere near what you’ll need.

To operate on the level of a professional data scientist, you have to master the art of the hack. You need to get good at producing new, minimum-viable data products based on adaptations of assets you already have. In New York, we walked through techniques for hacking together data products and building solutions that you understand and that are fit for purpose.

Experiment

I don’t mean experimenting as simply trying out different things and seeing what happens. I mean the more formal experimentation as prescribed by the scientific method. Remember those experiments you performed, wrote reports about, and presented in grammar-school science class? It’s like that.

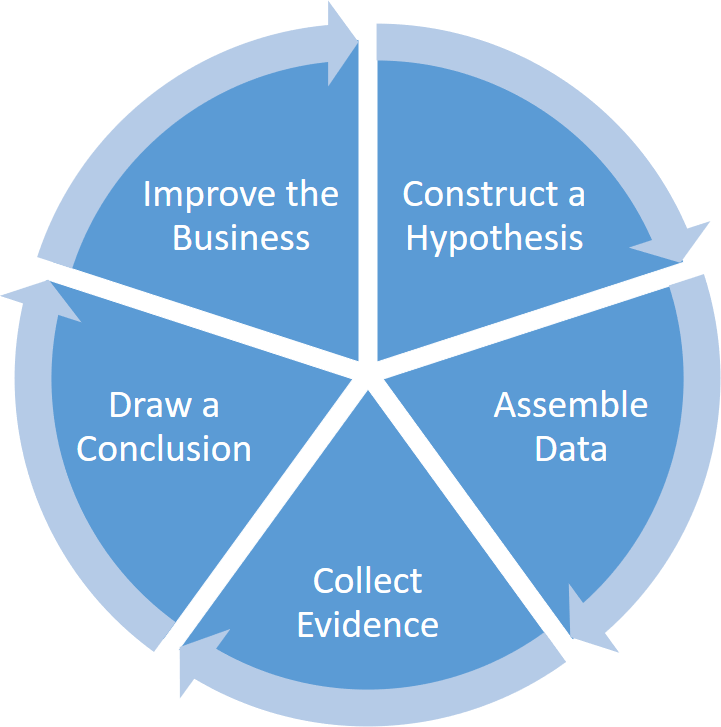

Running experiments and evaluating the results is one of the most effective ways of making an impact as a data scientist. I’ve found that great stories and great graphics are not enough to convince others to adopt new approaches in the enterprise. The only thing I’ve found to be consistently powerful enough to effect change is a successful example. Few are willing to try new approaches until they have been proven successful. You can’t prove an approach successful unless you get people to try it. The way out of this vicious cycle is to run a series of small experiments (Figure 1-3).

Figure 1-3. Small continuous experimentation is one of the most powerful ways for a data scientist to effect change. Credit: Jerry Overton.

In the tutorial at Strata + Hadoop World New York, we also studied techniques for running experiments in very short sprints, which forces us to focus on discovering insights and making improvements to the enterprise in small, meaningful chunks.

We’re at the beginning of a new phase of big data, one that has less to do with the technical details of massive data capture and storage and much more to do with producing impactful, scalable insights. Organizations that adapt and learn to put data to good use will consistently outperform their peers. There is a great need for people who can imagine data-driven improvements, make them real, and drive change. I have no idea how many people are actually interested in taking on the challenge, but I’m really looking forward to finding out.

Data Scientists: Generalists or Specialists?

This post is also available on oreilly.com.

Editor’s note: This is the second in a three-part series of posts by Daniel Tunkelang dedicated to data science as a profession. In this series, Tunkelang will cover the recruiting, organization, and essential functions of data science teams.

When LinkedIn posted its first job opening for a “data scientist” in 2008, the company was clearly looking for generalists:

Be challenged at LinkedIn. We’re looking for superb analytical minds of all levels to expand our small team that will build some of the most innovative products at LinkedIn.

No specific technical skills are required (we’ll help you learn SQL, Python, and R). You should be extremely intelligent, have quantitative background, and be able to learn quickly and work independently. This is the perfect job for someone who’s really smart, driven, and extremely skilled at creatively solving problems. You’ll learn statistics, data mining, programming, and product design, but you’ve gotta start with what we can’t teach—intellectual sharpness and creativity.

In contrast, most of today’s data scientist jobs require highly specific skills. Some employers require knowledge of a particular programming language or tool set. Others expect a PhD and significant academic background in machine learning and statistics. And many employers prefer candidates with relevant domain experience.

If you are building a team of data scientists, should you hire generalists or specialists? As with most things, it depends. Consider the kinds of problems your company needs to solve, the size of your team, and your access to talent. But, most importantly, consider your company’s stage of maturity.

Early Days

Generalists add more value than specialists during a company’s early days, since you’re building most of your product from scratch, and something is better than nothing. Your first classifier doesn’t have to use deep learning to achieve game-changing results. Nor does your first recommender system need to use gradient-boosted decision trees. And a simple t-test will probably serve your A/B testing needs.

Hence, the person building the product doesn’t need to have a PhD in statistics or 10 years of experience working with machine-learning algorithms. What’s more useful in the early days is someone who can climb around the stack like a monkey and do whatever needs doing, whether it’s cleaning data or native mobile-app development.
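As a rough illustration of how little machinery a first A/B test needs, the sketch below runs a two-sample t-test using only the Python standard library. It is a minimal sketch under stated assumptions, not a production method: the Welch (unequal-variance) form is our choice rather than anything the text prescribes, the p-value uses a normal approximation that is only reasonable for large samples, and the function name `welch_t_test` and the synthetic `control` and `variant` data are hypothetical.

```python
import math
from statistics import NormalDist, mean, variance


def welch_t_test(a, b):
    """Two-sided Welch's t-test; returns (t, df, approx_p).

    The p-value is a normal approximation (an assumption of this sketch),
    which is close to the exact t-distribution only for large samples.
    """
    na, nb = len(a), len(b)
    # Squared standard error contributed by each sample's mean.
    va = variance(a) / na
    vb = variance(b) / nb
    t = (mean(b) - mean(a)) / math.sqrt(va + vb)
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (va + vb) ** 2 / (va ** 2 / (na - 1) + vb ** 2 / (nb - 1))
    approx_p = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, df, approx_p


# Synthetic, deterministic data (hypothetical): a metric per user in each arm.
control = [10.0 + (i % 5 - 2) for i in range(1000)]
variant = [x + 0.5 for x in control]  # variant arm shifted up by 0.5

t, df, approx_p = welch_t_test(control, variant)
print(f"t = {t:.2f}, df = {df:.0f}, approx p = {approx_p:.3g}")
```

With thousands of observations per arm, the normal approximation is typically adequate; for small samples, a statistics package's exact t-test would be the safer choice.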

How do you identify a good generalist? Ideally this is someone who has already worked with data sets that are large enough to have tested his or her skills regarding computation, quality, and heterogeneity. Surely someone with a STEM background, whether through academic or on-the-job training, would be a good candidate. And someone who has demonstrated the ability and willingness to learn how to use tools and apply them appropriately would definitely get my attention. When I evaluate generalists, I ask them to walk me through projects that showcase their breadth.

Later Stage

Generalists hit a wall as your products mature: they’re great at developing the first version of a data product, but they don’t necessarily know how to improve it. In contrast, machine-learning specialists can replace naive algorithms with better ones and continuously tune their systems. At this stage in a company’s growth, specialists help you squeeze additional opportunity from existing systems. If you’re a Google or Amazon, those incremental improvements represent phenomenal value.

Similarly, having statistical expertise on staff becomes critical when you are running thousands of simultaneous experiments and worrying about interactions, novelty effects, and attribution. These are first-world problems, but they are precisely the kinds of problems that call for senior statisticians.

How do you identify a good specialist? Look for someone with deep experience in a particular area, like machine learning or experimentation. Not all specialists have advanced degrees, but a relevant academic background is a positive signal of the specialist’s depth and commitment to his or her area of expertise. Publications and presentations are also helpful indicators of this. When I evaluate specialists in an area where I have generalist knowledge, I expect them to humble me and teach me something new.

Conclusion

Of course, the ideal data scientist is a strong generalist who also brings unique specialties that complement the rest of the team. But that ideal is a unicorn—or maybe even an alicorn. Even if you are lucky enough to find these rare animals, you’ll struggle to keep them engaged in work that is unlikely to exercise their full range of capabilities.

So, should you hire generalists or specialists? It really does depend—and the largest factor in your decision should be your company’s stage of maturity. But if you’re still unsure, then I suggest you favor generalists, especially if your company is still in a stage of rapid growth. Your problems are probably not as specialized as you think, and hiring generalists reduces your risk. Plus, hiring generalists allows you to give them the opportunity to learn specialized skills on the job. Everybody wins.
