Chapter 1. Designing for the Next 50 Billion Devices

Four Waves of Computing

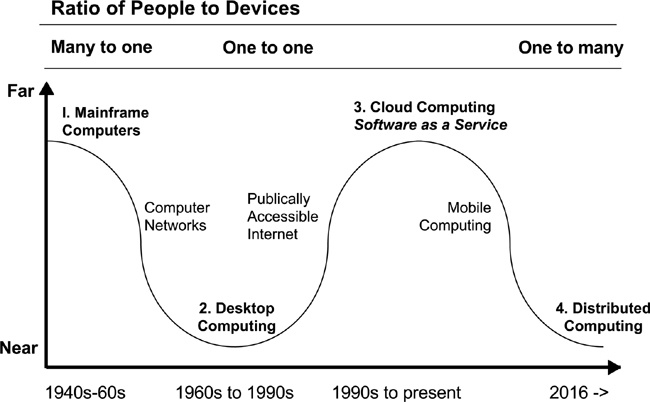

The first wave of computing, from 1940 to about 1980, was dominated by many people serving one computer. This was the era of the large and limited mainframe computer. Mainframe use was largely reserved for technically proficient experts who took on the task of learning difficult, poorly designed interfaces as a source of professional pride.

The second wave, or desktop era, had one person to one computer. The computer increased in power, but it was still tethered into place. This was the era of desktop publishing, in which the graphical user interface replaced the difficult-to-use text inputs of the generation before.

The third wave, Mark Weiser posited, would be ushered in by the Internet, with many desktops connected through widespread distributed computing. This would be the transition between the desktop era and ubiquitous computing. It would enable many smaller objects to be connected to a larger network.

This final wave, just beginning (and unevenly distributed), has many computers serving each person, everywhere in the world. Weiser called this wave the era of “Ubiquitous Computing,” or “Ubicomp.”

Weiser’s idea of Ubiquitous Computing was that devices would outnumber individuals globally by a factor of five or more. In other words, if there’s a world population of 10 billion (which Weiser considered not so far-fetched in the 21st century), then 50 billion devices globally is a conservative estimate. Obviously, the ratio will be much higher in some parts of the world than others, but even this is beginning to level off.

Some of us are still interacting with one desktop, but most of us have multiple devices in our lives, from smartphones and laptops to small tablets and Internet-connected thermostats in our homes.

What happens when we have many devices serving one person? We run up against limits in data access and bandwidth that may lead us, through necessity, into the fourth wave, an era of Distributed Computing. Figure 1-1 illustrates these four waves of computing.

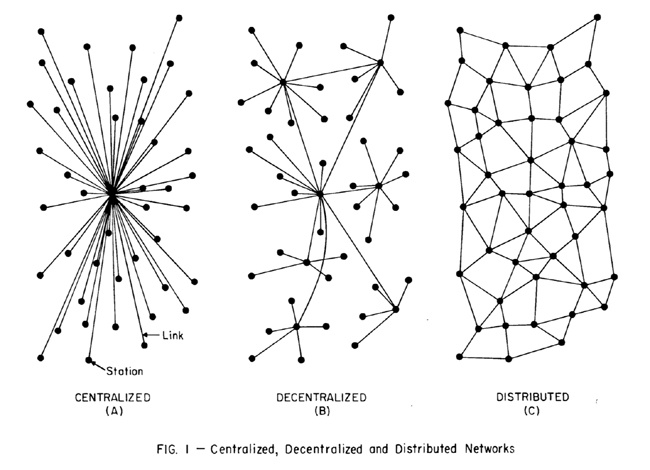

Ubiquitous Computing describes the state of affairs in which many devices in our personal landscape possess some kind of processing power but are not all necessarily connected to one another. What we know today as the “Internet of Things” describes a network among many devices, and so represents a networked stage of Ubiquitous Computing. It also implies that many everyday objects, like your tennis shoes, may become wirelessly connected to the network, opening up a whole range of new functionality, data collection possibilities, and security risks. Although it might be great to be able to track your daily steps, it might not be as nice if that data falls into the wrong hands.

In Distributed Computing, every device on the network is used as a potential node for storing information. This means that even if a central server is taken out, it is still possible to access a file or piece of information normally hosted by the central server, because these bytes of information are “distributed” throughout the network.
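The idea that a file survives the loss of a central server can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Node` class, the `place`, `put`, and `get` helpers, the replica count of three, and the `steps.csv` sample data are all hypothetical, and the hash-based placement is one simple choice among many, not a description of any particular system.

```python
import hashlib


class Node:
    """One device on the network that can hold copies of data (hypothetical)."""

    def __init__(self, name):
        self.name = name
        self.online = True
        self.store = {}  # key -> bytes


def place(key, nodes, replicas):
    """Pick `replicas` nodes for a key, ranked by a hash of (key, node name)."""
    ranked = sorted(
        nodes,
        key=lambda n: hashlib.sha256(f"{key}:{n.name}".encode()).hexdigest(),
    )
    return ranked[:replicas]


def put(key, data, nodes, replicas=3):
    """Store a copy of `data` on several nodes instead of on one server."""
    for node in place(key, nodes, replicas):
        node.store[key] = data


def get(key, nodes):
    """Return the data from any online node that still holds a copy."""
    for node in nodes:
        if node.online and key in node.store:
            return node.store[key]
    raise KeyError(f"no online node holds {key!r}")


nodes = [Node(f"device-{i}") for i in range(5)]
put("steps.csv", b"2015-06-01,8042\n", nodes)  # made-up sample data

# Take out the first-choice node, the one closest to a "central server".
primary = place("steps.csv", nodes, 1)[0]
primary.online = False

recovered = get("steps.csv", nodes)  # still served by another replica
```

The trade-off is storage: every extra replica costs space on some device, which matters when the devices are small.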

Weiser’s original vision for Ubicomp also included a philosophy about how to handle the increase in devices per person. What happens when 50 billion devices are out there? In a world like this, the way devices communicate with us is crucial. If we were to expand their number but maintain our current standards of communication, we’d soon find ourselves—our entire world—buried under an indistinguishable pile of dialog boxes, pop-up boxes, push notifications, and alarms.

The World Is Not a Desktop

Gone are the luxurious dinosaurs of the desktop era, where code could be the size of a CD-ROM and be updated every two years. The act of using a desktop computer is an all-consuming process—a luxury that not all current devices share.

Desktop applications were able to take advantage of many resources in terms of processing power, bandwidth, and attention. A desktop computer assumes that you will sit in front of it in a chair, rooted to place, with all of your attention on a single screen. A mobile device, on the other hand, may be competing with your environment for attention. Rarely will you be sitting down in front of it. Instead, you might be outside or in a restaurant, in a state of focus that technologist Linda Stone calls “continuous partial attention.” A smaller, connected device today may work on tiny processors and need to make use of what it has.

Small devices must be cheap in order to be ubiquitous. They must be fast in order to be used. They must be capable of easily connecting if they are to survive. We’re looking at something more like a whole new species than a mechanical set of items. That means nature’s laws apply. Fast, small, and quickly reproducing devices will end up being part of the next generation, but good design can make products that span multiple generations, reducing complexity and the need for support.

We’re moving toward an ecosystem that is more organic than it is mechanical. We have computer viruses that operate similarly to their counterparts in nature. In this new era, code bloat is not only unnecessary—it is dangerous. To follow this analogy, a poorly written system invites illness and decay.

The Growing Ephemerality of Hardware

In the desktop era, hardware was stable. You bought a computer and kept it for several years—the hardware itself was an investment. You’d change or update the software very infrequently. It would come on a CD-ROM or packaged with the computer. Now, people hold on to the data streams and the software longer than the devices themselves. How many different phones has the average person used to connect to their profile on Facebook? Facebook, despite comprising dozens of rapidly shifting apps and programs, is more stable as a whole than the technology on which it is used.

No longer do people buy devices to use for a decade; now, technology may be upgraded within a single year. Companies and carriers are now offering monthly payment plans that allow you to be automatically “subscribed” to the latest device, eliminating the process of buying each device as it comes out. It’s an investment in the functionality and the data, not the device itself. It’s more about the data than the technology that serves it; the technology is just there to serve the data to the user.

In the past, technology’s primary value lay in hardware. Now a greater value lies in user-generated content. This means the simplest technology to get to that data wins; it’s easier to use, develop, support, and maintain.

The Social Network of 50 Billion Things

In the future, connected things will far outnumber connected people.

Consider a social network of 50 billion devices versus a social network of 10 billion humans. The social network of objects won’t just be about alerts for humans via machines, but alerts from one machine to another.

With so many objects and systems, one of the most important issues will be how those separate networks communicate with one another. This can lead to some real problems. Have you ever been stuck in a parking garage because the ticket machine won’t accept your money? When an entire system is automated with no human oversight, it can get stuck in a loop. What if a notification gets stuck in one system and can’t be read by another? What if a transaction drops entirely? Will there be notifications that the system failed, or will humans be put on pause while a human operator intervenes?
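One way to keep an automated step from looping forever is to cap the number of attempts and then tell a person what happened. The sketch below assumes a hypothetical `run_with_escalation` helper and a stand-in `jammed_ticket_machine` function; real systems would need proper logging, backoff, and a real operator channel.

```python
def run_with_escalation(step, attempts, notify_human):
    """Try an automated step a few times, then hand off to a person.

    `step` is a callable that returns a result or raises; `notify_human`
    receives a plain-language description of what went wrong.
    """
    errors = []
    for attempt in range(1, attempts + 1):
        try:
            return {"status": "ok", "result": step(), "attempts": attempt}
        except Exception as exc:  # broad on purpose: any failure counts here
            errors.append(f"attempt {attempt}: {exc}")
    notify_human("Automated step failed and needs a person: " + "; ".join(errors))
    return {"status": "escalated", "result": None, "attempts": attempts}


def jammed_ticket_machine():
    """Stand-in for a machine that never accepts the payment."""
    raise RuntimeError("bill acceptor jammed")


operator_inbox = []  # stand-in for whatever reaches a human operator
outcome = run_with_escalation(jammed_ticket_machine, attempts=3,
                              notify_human=operator_inbox.append)
```

The important design choice is that failure produces a message a person can read, rather than silence.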

Technology in the real world can’t work well all of the time. In reality, things mess up when you need them most—like when you can’t get to the AAA app when your car is stuck on the side of the road, or when you can’t access your insurance card when you get to the emergency room because your phone is dead or your card is at home, and your life is at risk.

The Next 50 Billion Devices

Tech can’t take up too many resources in the future. The most efficient tech will eventually begin to win out, as resources, time, attention, and support become scarce commodities. People will have to make less complex systems or suffer the consequences.

Though technology might not have a limit, we do. Our environment also has limited connectivity and power. Things are going to become much more expensive over time.

The IDC FutureScape: Worldwide Internet of Things (IoT) 2015 Predictions report[3] suggests that “IT networks are expected to go from having excess capacity to being overloaded by the stress of Internet of Things (IoT) connected devices” in just three years.

This means that devices using too much bandwidth will experience connectivity and performance issues, and generate unnecessary costs. Overcoming this bandwidth limitation will take a combination of solutions, all of which need to happen at some point. One solution is Distributed Computing, which might be a natural outcome of bandwidth and content constraints. Another solution is to limit bandwidth usage by placing limits on how large websites and content can be. We’ll examine some of these solutions later in this chapter.

Where did telephone lines, the electrical grid, or modern roads come from? All of these required an invested government and business-based effort. Without them, we wouldn’t have the access and connectivity we have today.

Today’s telecom carriers and Internet providers build competing, redundant networks and don’t share network capacity, which makes it much more difficult for devices to communicate with one another.

It is ultimately in companies’ best interests to build more bandwidth as they grow, but the costs associated with this development could harm initial earnings and allow competitors a leg up while they build. Telecom and Internet providers might be forced to work differently in the future. If they worked together, they might be able to devise a way to share the costs and rewards of building infrastructure. If not, the government might need to take over this role, much like President Eisenhower’s creation of the Interstate Highway System to eliminate unsafe roads and inefficient routes.

Limit Bandwidth Usage

The websites we visit are designed to be appealing, and resource usage is considered primarily where it impacts usability. Websites that require significant bandwidth can slow down entire networks. The average smartphone user today is able to stream a variety of online media at will, over cell networks and WiFi. All of this volume and inefficiency is eating up the bandwidth that will soon be needed to connect the Internet of Things. This is already causing corporate conflict, with users caught up in the middle. In 2014, the Internet service providers Comcast and Verizon were caught throttling the traffic from video-streaming service provider Netflix. Netflix countered this limitation by paying the providers for more bandwidth, but even then, Verizon was still found to be throttling Netflix traffic.

Over time, bandwidth constraints might naturally push people to write software that uses less bandwidth. Companies that do well in the future might use technologies and protocols that rewrite the fabric of the Web, instituting protocols that eliminate redundancy in streaming data to many devices.
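A size limit on content can be made concrete as a byte budget that a page or payload is checked against before it ships. The sketch below is illustrative only: `check_budget`, the asset names, their sizes, and the 500,000-byte budget are all assumptions chosen for the example.

```python
def check_budget(assets, budget_bytes):
    """Compare total asset size against a budget and rank the heaviest items."""
    total = sum(assets.values())
    heaviest = sorted(assets, key=assets.get, reverse=True)
    return {
        "total_bytes": total,
        "within_budget": total <= budget_bytes,
        "over_by": max(0, total - budget_bytes),
        "heaviest_first": heaviest,
    }


# Hypothetical page: file name -> size in bytes.
page = {"index.html": 18_000, "app.js": 410_000,
        "hero.jpg": 950_000, "style.css": 42_000}

report = check_budget(page, budget_bytes=500_000)
```

Here the page totals 1,420,000 bytes, so it is 920,000 bytes over budget, and the ranking points at the image as the first thing to shrink.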

A distributed Web in which many devices also act as servers is one way the Web can evolve and scale. Instead of many devices requesting data from a single server, devices could increasingly request chunks of data from one another. We’re seeing slow developments in this area today, and hopefully there will be many more in the future.
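To make "requesting chunks of data from one another" more tangible, here is a minimal sketch in which a file is described by a list of chunk hashes and rebuilt from whichever peers hold each chunk. Everything in it is an assumption for illustration: the tiny chunk size, the `split`, `digest`, and `fetch` helpers, and the peers modeled as plain dictionaries. It is not the protocol of any specific peer-to-peer system.

```python
import hashlib

CHUNK_SIZE = 4  # bytes; unrealistically small so the example is easy to follow


def split(data, size=CHUNK_SIZE):
    """Cut data into fixed-size chunks."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def digest(chunk):
    """Name a chunk by the SHA-256 hash of its contents."""
    return hashlib.sha256(chunk).hexdigest()


def fetch(manifest, peers):
    """Rebuild a file by asking peers for each chunk named in the manifest.

    `manifest` is the ordered list of chunk hashes; `peers` is a list of
    dicts mapping hash -> chunk bytes. Every chunk is verified on arrival.
    """
    parts = []
    for wanted in manifest:
        for peer in peers:
            chunk = peer.get(wanted)
            if chunk is not None and digest(chunk) == wanted:
                parts.append(chunk)
                break
        else:
            raise LookupError(f"no peer could supply chunk {wanted[:8]}")
    return b"".join(parts)


original = b"calm technology"
chunks = split(original)
manifest = [digest(c) for c in chunks]

# Each peer holds only part of the file; no single peer has all of it.
peer_a = {digest(c): c for c in chunks[:2]}
peer_b = {digest(c): c for c in chunks[2:]}
# A faulty peer advertises the first chunk but returns corrupted bytes.
peer_bad = {manifest[0]: b"XXXX"}

rebuilt = fetch(manifest, [peer_bad, peer_a, peer_b])
```

Because chunks are named by their hash, a device can accept data from an untrusted neighbor and still detect tampering, which is part of what makes this arrangement workable.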

Dedicate Separate Channels for Different Kinds of Technology

Connected devices could have their own connected channels. Dedicated channels could also serve as a backbone for devices to communicate in case of emergencies. That way, one network can still stay up if the other one becomes overloaded, so that millions of people streaming a popular video won’t get in the way of a tsunami alert or a 911 call.
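The effect of a dedicated channel can be shown with a toy model in which each kind of traffic has its own queue and its own capacity. The `Channel` class, the `route` function, the message shapes, and the capacities are hypothetical and chosen only to show that a flood on one channel does not delay the other.

```python
from collections import deque


class Channel:
    """A link with its own fixed capacity, measured in messages per tick."""

    def __init__(self, capacity_per_tick):
        self.capacity = capacity_per_tick
        self.queue = deque()

    def send(self, message):
        self.queue.append(message)

    def tick(self):
        """Deliver up to `capacity` queued messages and return them."""
        count = min(self.capacity, len(self.queue))
        return [self.queue.popleft() for _ in range(count)]


def route(message, channels):
    """Place a message on the channel reserved for its kind of traffic."""
    kind = "emergency" if message["kind"] == "emergency" else "general"
    channels[kind].send(message)


channels = {"general": Channel(capacity_per_tick=2),
            "emergency": Channel(capacity_per_tick=2)}

# A flood of video traffic arrives before the alert does.
for i in range(1000):
    route({"kind": "video", "id": i}, channels)
route({"kind": "emergency", "text": "tsunami alert"}, channels)

delivered_general = channels["general"].tick()
delivered_emergency = channels["emergency"].tick()
```

After one tick, 998 video messages are still waiting, yet the alert has already been delivered because it never shared a queue with them.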

Use Lower-Level Languages for Mission-Critical Systems

If we’re going to be building truly resilient technology, we need to borrow a page from the past, when technologies were made with very low failure rates, or had enough edge cases accounted for in the design. Edge cases are unpredictable problems that arise at extremes. For instance, a running shoe might work well on typical pavement, but melt on track material on very hot summer days.

Oftentimes edge cases are discovered after products are launched. In the worst circumstances, they may cause recalls. In June 2006, a Dell laptop burst into flames[5] during a technical conference in Japan. The issue was a defective battery prone to overheating. This prompted a worldwide recall of laptops that contained the battery, but not before six other people reported flaming machines.

Edge cases are a fact of life for all products. They might be difficult to predict, but there are some ways to lessen the blow. If possible, involve industry veterans in your project and have them help think through various edge cases and ways that the software or hardware could go wrong. Chances are, they’ve seen it all before. With their help, a crisis or uncomfortable situation could be prevented.

If we’re making devices that absolutely need to work, then we can’t use the same development methods we’ve become accustomed to today. We need to go back to older, more reliable methods of building systems that do not fail.

COBOL was the first widely used high-level programming language for business applications. Although many people consider the language to be old news today, it is worth noting that:

70–75% of the business and transaction systems around the world run on COBOL. This includes credit card systems, ATMs, ticket purchasing, retail/POS systems, banking, payroll systems, telephone/cell calls, grocery stores, hospital systems, government systems, airline systems, insurance systems, automotive systems, and traffic signal systems. 90% of global financial transactions are processed in COBOL.[7]

COBOL may be complex to write, but the systems that use it run most of the time.

Create More Local Networks

Today, it is only slightly inconvenient if websites like Twitter go down or become overloaded. What’s less cute is when the lock on your door stops working because your phone’s battery is flat, or your electric car only works some of the time because it is connected to a remote power grid. The preceding advice is especially crucial for people and agencies building websites—the real world is not a website.

Do we really want the lights in our homes to connect to the cloud before they can turn on or off? In the case of a server failure, do we want to be stuck without light? No, we want the light switch to be immediate. A light switch is best connected to a local network, or an analog network. It’s OK for a website to go down, but not the lights in your house.

We need to prioritize the creation of a class of devices that do things locally, then go to the network to upload statistics or other information. Not all technology needs to operate in this fashion, but the physical technology that we live with and rely on daily must be resilient enough that it can work regardless of whether or not it is connected to a network.
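A device that acts locally first and reports to the network later might be structured like the following sketch. The `LightSwitch` and `FlakyCloud` classes and the event format are hypothetical, and the "cloud" is a stand-in object rather than a real service.

```python
class LightSwitch:
    """A switch that acts locally and treats the network as optional."""

    def __init__(self, uplink):
        self.is_on = False
        self.uplink = uplink  # callable that sends one event; may raise
        self.pending = []     # events not yet uploaded

    def toggle(self):
        # The light changes state immediately; nothing here waits on a server.
        self.is_on = not self.is_on
        self.pending.append({"event": "toggle", "on": self.is_on})
        self.sync()
        return self.is_on

    def sync(self):
        """Try to upload queued events; keep them if the network is down."""
        while self.pending:
            try:
                self.uplink(self.pending[0])
            except ConnectionError:
                return  # try again on a later sync
            self.pending.pop(0)


class FlakyCloud:
    """Stand-in for a remote service that can be unreachable."""

    def __init__(self):
        self.up = False
        self.received = []

    def __call__(self, event):
        if not self.up:
            raise ConnectionError("cloud unreachable")
        self.received.append(event)


cloud = FlakyCloud()
switch = LightSwitch(uplink=cloud)

switch.toggle()  # works even though the cloud is down
switch.toggle()
queued_while_offline = len(switch.pending)

cloud.up = True
switch.sync()    # statistics are uploaded once the network returns
```

The order of operations is the point: the state change happens before any network call, so a server failure can delay the statistics but never the light.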

Distributed and Individual Computing

Increasingly, our computing happens elsewhere. We make use of data on the cloud that’s far away from us, all the while having perfectly advanced computers in our pockets. There are loads of privacy and security issues with so much data going up and down to the cloud. A December 2014 report on the future of the Internet of Things[8] made a prediction that within five years, over 90% of IoT-generated data would be hosted in the cloud. Although this might make the access to data and the interaction among various connected devices easier, “cloud-based data storage will also increase the chance of cyber attacks with 90 percent of IT networks experiencing breaches related to IoT.” The amount of data generated by the devices will make it a tempting target.

As an illustration of this point, in 2014, the iCloud photo storage accounts of 100 celebrities were hacked and nude photos of several A-list celebrities were leaked onto the Web. One way to reduce the insecurity of cloud-based data storage is to have devices run on private local networks. Doing so will prevent attackers from taking all of the data from the cloud at once, even if some opportunity is lost in connecting to larger networks in real time.

The best products and services in the future will make use of local networks and personal resources. For instance, if sensitive data is stored on shared servers in the cloud, there are privacy and security issues. Sensitive data is better stored close to the person, on a personal device with boundaries for sharing (and protections preventing that private data from being searched without a warrant), and backed up on another local device such as a desktop computer or hard drive. Individual computing will help keep personal data where it is safe instead of remotely stored in a place where it can be compromised. Storing data on your personal devices will also speed up interaction time. Your applications will only go to the network if they absolutely need to.

In the era of Distributed Computing, there will also be more options for where data is stored. Table 1-1 lists several data types and provides suggestions for the best places for each type to be stored. It also shows the potential consequences in case the security of this data is compromised.

| Type of data | Best location for data | Consequences if data is lost, or the network is compromised or disrupted |
| --- | --- | --- |
| Sensitive/personal data | On a personal device such as a phone, laptop, backup hard drive, or home computer | Loss of employment; public humiliation; bullying or social isolation, which could potentially lead to suicide |
| Medical data | On a local device that can be shared with medical professionals on a timed clock (“Share your data with this system for this purpose for a specific period of time”; afterward, the data is deleted and the system sanitized) | Blackmailing; loss of employment |
| Business data (e.g., LinkedIn profile) | On publicly accessible servers (shared) | N/A (this data was created with the intention of sharing it) |
| Home automation system | On a local network within the home without access to a larger network | Loss of access to or control of lights, thermostats, or other home systems |
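The "timed clock" idea for medical data in the table above can be sketched as a share that stops being readable after a fixed period. The `TimedShare` class, the one-hour lifetime, and the sample record are assumptions for illustration. The sketch models only expiry on the sharing side; it cannot guarantee that a receiving system actually deletes what it has already seen.

```python
import time


class TimedShare:
    """A copy of a record that a recipient may read only until it expires."""

    def __init__(self, record, purpose, ttl_seconds, clock=time.monotonic):
        self._record = dict(record)
        self.purpose = purpose
        self._clock = clock
        self._expires_at = clock() + ttl_seconds

    def read(self):
        if self._record is None or self._clock() >= self._expires_at:
            self._record = None  # drop the shared copy once time is up
            raise PermissionError("share has expired")
        return dict(self._record)


# A fake clock keeps the example deterministic; real code would use the default.
now = [0.0]
share = TimedShare({"blood_type": "O+"}, purpose="ER intake",
                   ttl_seconds=3600, clock=lambda: now[0])

during_visit = share.read()

now[0] = 3601.0  # just over an hour later
try:
    share.read()
    expired = False
except PermissionError:
    expired = True
```

Attaching a purpose and a lifetime to every share gives the person a boundary they can reason about, which is the intent behind the suggestion in the table.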

Interoperability

One of the biggest technology issues in the future is going to come from systems that don’t talk to one another. Without connectivity throughout different networks, people can get caught in very difficult situations.

I rented a car once for a conference in Denver, Colorado. Initially it seemed fine, but once I got it onto the highway, the car wouldn’t go above 30 miles per hour.

I pulled into a parking lot and called emergency roadside assistance. Instead of being instantly connected to an emergency line, I was put on hold for 22 minutes. I was told to leave the car in the lot and that a tow truck would come pick it up. I was going to be late for my meeting, so I called a cab and headed into town. I figured I’d cancel my entire rental car reservation for the trip.

On the 50-minute cab ride to the hotel, I called the rental company to cancel the car reservation. I was put through to four different people, connected by a support person to two discontinued support numbers, and had to identify myself and explain my situation every time. They wondered where the car was. I told them emergency roadside assistance had picked it up. They didn’t have confirmation of the pickup or who I was.

I finally got them to cancel the rental, and I asked for a confirmation code. Three days later, I got the charge for the full rental. I had to get my employers to call a special number to reverse the charge and explain the situation. I was stuck in an automation trap. The systems didn’t talk to each other.

How can one product inform another? What can be done to keep different systems, or at least the people who are manning them, informed the entire way through a process? The real world runs on interconnected systems, not separate ones. Without ways for systems to communicate, you can get completely stuck.

Human Backup

Without feedback, people won’t be able to tell what’s going on with a system. They might assume something is happening when it isn’t, or get frustrated or stuck as automation increases. For critical systems, always ensure people are around in case something breaks, and make sure there are systems that pass information from one system to another in human-readable fashion!
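Passing information between systems in a human-readable way could look something like the sketch below, in which one case record travels from system to system as JSON and can be printed as plain sentences for a support person. The `Handoff` class, its fields, the case ID, and the notes are hypothetical, loosely modeled on the rental car story above.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class Handoff:
    """A case record that travels with the customer between systems."""
    case_id: str
    customer: str
    summary: str
    history: list = field(default_factory=list)

    def add(self, system, note):
        self.history.append({"system": system, "note": note})

    def to_json(self):
        return json.dumps(asdict(self), indent=2)

    @classmethod
    def from_json(cls, text):
        return cls(**json.loads(text))

    def describe(self):
        """Render the case as plain text a support person can read aloud."""
        lines = [f"Case {self.case_id} for {self.customer}: {self.summary}"]
        lines += [f"  - {h['system']}: {h['note']}" for h in self.history]
        return "\n".join(lines)


case = Handoff("C-1001", "renter", "Rental car will not exceed 30 mph")
case.add("roadside assistance", "Tow truck dispatched to parking lot")

wire = case.to_json()               # what one system sends to the next
received = Handoff.from_json(wire)  # what the next system reconstructs
received.add("reservations", "Rental cancelled")
```

With a record like this, the customer would not need to re-identify themselves and retell the story at every transfer, because each system appends to the same history instead of starting from nothing.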

The Future of Technology

Poorly made products are everywhere, waiting for innovation.

We are accustomed to buying products as they come out, in seasons. People are advised not to buy an Apple product halfway through a lifecycle, but to wait for the next one. We discard the old for the new. And it makes sense: devices quickly become incompatible with current hardware and software. The last few generations of devices are unusable, toxic garbage that gets shipped off to the landfill.

The past was about having very few high-quality products in the home. Already we’re finding people moving from the suburbs to walkable communities in the city. The question is: can we improve the future in time to prevent the worst outcomes in terms of pollution, a growing population, and a warming climate, or are we going to be too late?

Want to make great products? Improve the mundane! A high-quality product can keep you employed for the rest of your life, and your community, too. So many of us are caught up in designing something “new” that we forget that we can simply improve what’s already around us. All of those things you don’t like in your everyday life, but put up with? Ripe for innovation! Design them in a way that lasts for more than a couple of years and you will be on your way to a successful and beloved product with passionate users.

Conclusions

In this chapter, we covered the four waves of computing and what they mean for the future of connected devices. We also covered how in the near future technology will run into issues such as bandwidth and design limitations, and some possible outcomes for technology and humanity.

Weiser and Brown hinted at a number of guidelines in their published work. In the following chapter, we’ll take these guidelines and put them into an organized philosophy of designing Calm Technology.

These are the key takeaways from this chapter:

- We’ve gone from many people to one computer, to many computers per person. The next wave of computing will make demands on us in terms of privacy, security, bandwidth, and attention.
- We can no longer design technology in the way we designed for desktops. We need to think about how we’ll design for the next 50 billion devices. We can help make the future more reasonable by writing efficient code, using lower-level languages for mission-critical systems, and creating more local networks. Consider distributed and individual computing, and design with interoperability in mind.

[2] Source: Paul Baran, “On Distributed Communications,” Rand Corporation, 1964. http://www.rand.org/content/dam/rand/pubs/research_memoranda/2006/RM3420.pdf.

[3] IDC FutureScape: Worldwide Internet of Things (IoT) 2015 Predictions. (http://www.thewhir.com/web-hosting-news/half-networks-will-feel-stranglehold-iot-devices-idc-report)

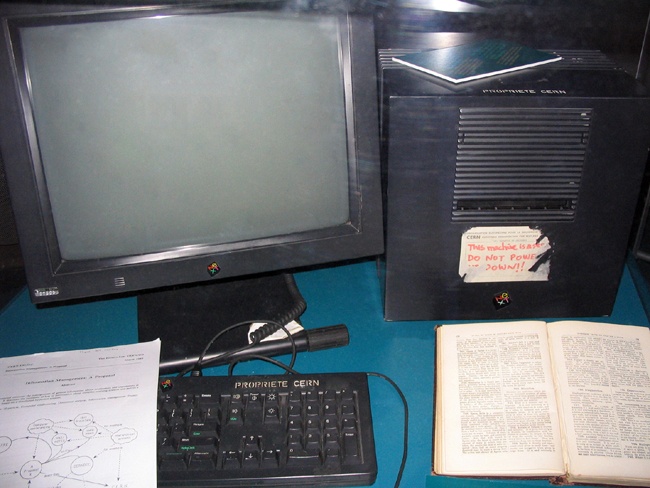

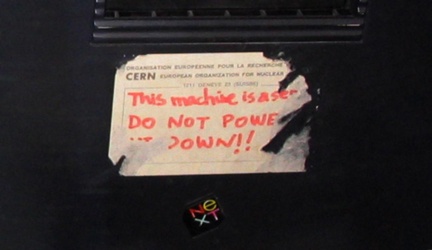

[4] Photo by Coolcaesar (https://commons.wikimedia.org/wiki/File:First_Web_Server.jpg) (GFDL (http://www.gnu.org/copyleft/fdl.html) or CC-BY-SA-3.0 (http://creativecommons.org/licenses/by-sa/3.0)), via Wikimedia Commons.

[5] “Dell Laptop Explodes in Flames,” 2006. (http://gizmodo.com/182257/dell-laptop-explodes-in-flames)

[6] Credit: Ibid.

[7] “Is there still a market for Cobol skills/developers?” report by Henry Ford College Computer Information Systems, 2009. https://cis.hfcc.edu/faq/cobol.

[8] “Half of IT Networks Will Feel the Stranglehold of IoT Devices: IDC Report,” 2014. (http://www.thewhir.com/web-hosting-news/half-networks-will-feel-stranglehold-iot-devices-idc-report)
