Chapter 4. Scheduling and Tooling

The scheduling portion of the CKA focuses on the effects of defining resource boundaries when evaluated by the Kubernetes scheduler. The default runtime behavior of the scheduler can also be modified by defining node affinity rules, as well as taints and tolerations. Of those concepts, you are expected only to understand the nuances of resource boundaries and their effect on the scheduler in different scenarios. Finally, this domain of the curriculum calls for high-level knowledge of manifest management and templating tools.

At a high level, this chapter covers the following concepts:

- Resource boundaries for Pods
- Imperative and declarative manifest management
- Common templating tools like Kustomize, yq, and Helm

Understanding How Resource Limits Affect Pod Scheduling

A Kubernetes cluster can consist of multiple nodes. Depending on a variety of rules (e.g., node selectors, node affinity, taints and tolerations), the Kubernetes scheduler decides which node to pick for running the workload. The CKA exam doesn’t ask you to understand the scheduling concepts mentioned previously, but it helps to have a rough idea of how they work at a high level.

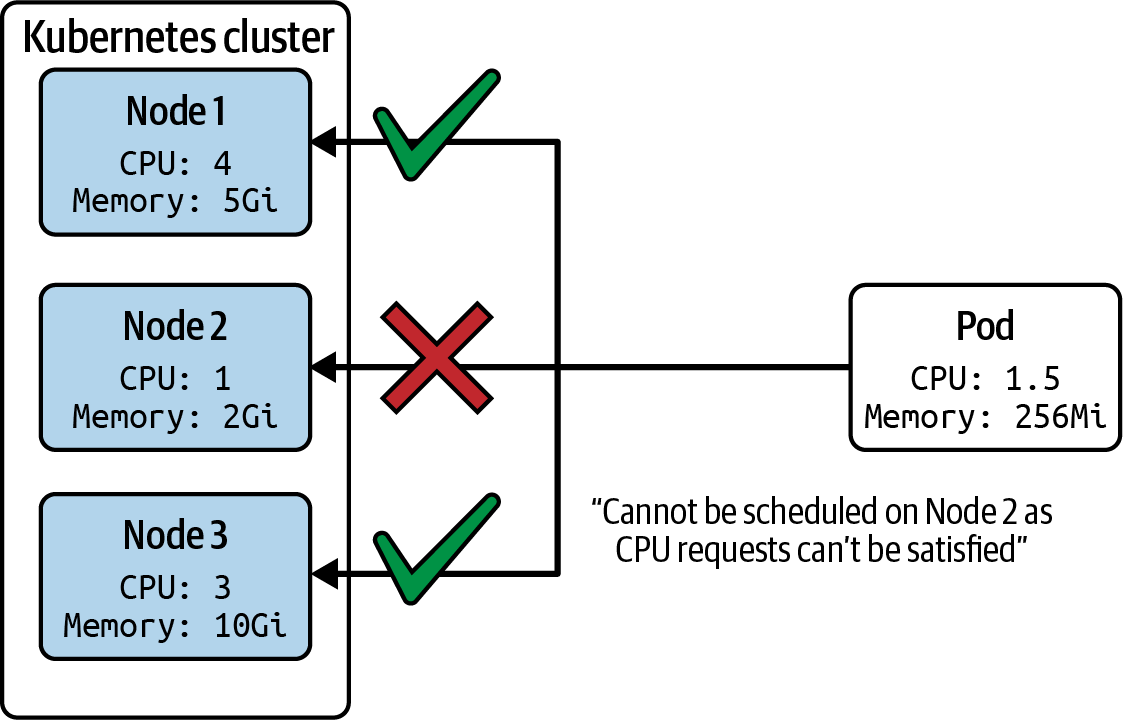

One metric that comes into play for workload scheduling is the resource request defined by the containers in a Pod. Commonly used resources that can be specified are CPU and memory. The scheduler ensures that the node’s resource capacity can fulfill the resource requirements of the Pod. More specifically, the scheduler determines the sum of resource requests per type across all containers defined in the Pod and compares them with the node’s available resources. Figure 4-1 illustrates the scheduling process based on the resource requests.

Figure 4-1. Pod scheduling based on resource requests
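The comparison described above can be sketched in a few lines of Python. This is a simplified model, not the kube-scheduler's actual code. It assumes quantities have already been converted to integers (CPU in millicores, memory in bytes) and reuses the request values from Example 4-1. The function names and the node's capacity are hypothetical.

```python
# Simplified model of the resource-fit check: sum the requests of all
# containers per resource type and compare them with the node's free capacity.
# Quantities are plain integers (CPU in millicores, memory in bytes).

def sum_requests(containers):
    """Add up the requests per resource type across all containers."""
    totals = {}
    for container in containers:
        for resource, amount in container.get("requests", {}).items():
            totals[resource] = totals.get(resource, 0) + amount
    return totals


def pod_fits(containers, allocatable, already_requested):
    """Return True if every summed request fits into what is still free on the node."""
    for resource, amount in sum_requests(containers).items():
        free = allocatable.get(resource, 0) - already_requested.get(resource, 0)
        if amount > free:
            return False
    return True


MI = 1024 * 1024
containers = [
    {"name": "business-app", "requests": {"cpu": 1000, "memory": 256 * MI}},
    {"name": "ambassador", "requests": {"cpu": 250, "memory": 64 * MI}},
]
node_allocatable = {"cpu": 2000, "memory": 2048 * MI}  # hypothetical node

print(sum_requests(containers))                               # {'cpu': 1250, 'memory': 335544320}
print(pod_fits(containers, node_allocatable, {"cpu": 500}))   # True
print(pod_fits(containers, node_allocatable, {"cpu": 1000}))  # False
```

The last call returns False because only 1000m of CPU remains free on the hypothetical node, which is less than the 1250m the Pod requests in total.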

Defining Container Resource Requests

Each container in a Pod can define its own resource requests. Table 4-1 describes the available options including an example value.

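| YAML Attribute | Description | Example Value |
|---|---|---|
| `spec.containers[].resources.requests.cpu` | CPU resource type | `250m` |
| `spec.containers[].resources.requests.memory` | Memory resource type | `64Mi` |
| `spec.containers[].resources.requests.hugepages-<size>` | Huge page resource type | |
| `spec.containers[].resources.requests.ephemeral-storage` | Ephemeral storage resource type | |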

Kubernetes expresses some resource types in its own units rather than in standard units like megabytes and gigabytes. For example, CPU is measured in millicores (m), and memory is commonly given with binary suffixes such as Mi (mebibytes). Explaining all intricacies of those units goes beyond the scope of this book, but you can read up on the details in the documentation.
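The following hypothetical helper makes the unit notation concrete by converting the common quantity suffixes to base units. The m suffix means thousandths, so 250m of CPU is a quarter of a core. Binary suffixes such as Mi are powers of 1024, and decimal suffixes such as M are powers of 1000. The sketch covers only these suffixes, not the full quantity grammar (for example, it ignores exponent notation).

```python
from fractions import Fraction

# Binary (power-of-1024) and decimal (power-of-1000) suffixes.
BINARY = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40, "Pi": 2**50, "Ei": 2**60}
DECIMAL = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12, "P": 10**15, "E": 10**18}


def parse_quantity(text: str) -> Fraction:
    """Convert a quantity string such as '250m', '64Mi', or '1' to base units."""
    if text[-2:] in BINARY:
        return Fraction(text[:-2]) * BINARY[text[-2:]]
    if text[-1:] in DECIMAL:
        return Fraction(text[:-1]) * DECIMAL[text[-1:]]
    if text.endswith("m"):  # lowercase m = milli, one thousandth of the unit
        return Fraction(text[:-1]) / 1000
    return Fraction(text)


print(parse_quantity("250m"))                         # 1/4 (a quarter of a CPU core)
print(parse_quantity("64Mi"))                         # 67108864 (bytes)
print(parse_quantity("1") + parse_quantity("250m"))   # 5/4
```

Fraction is used instead of float so that values like 250m stay exact. Note the case sensitivity: M (mega) and m (milli) differ by a factor of a billion.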

To make the use of those resource requests transparent, we’ll take a look at an example definition. The Pod YAML manifest shown in Example 4-1 defines two containers, each with their own resource requests. Any node that is allowed to run the Pod needs to be able to support a minimum memory capacity of 320Mi and 1250m CPU, the sum of resources across both containers.

Example 4-1. Setting container resource requests

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: rate-limiter
spec:
  containers:
  - name: business-app
    image: bmuschko/nodejs-business-app:1.0.0
    ports:
    - containerPort: 8080
    resources:
      requests:
        memory: "256Mi"
        cpu: "1"
  - name: ambassador
    image: bmuschko/nodejs-ambassador:1.0.0
    ports:
    - containerPort: 8081
    resources:
      requests:
        memory: "64Mi"
        cpu: "250m"
```

In this scenario, we are dealing with a Minikube Kubernetes cluster consisting of three nodes: one control plane node and two worker nodes. The following command lists all nodes:

```shell
$ kubectl get nodes
NAME           STATUS   ROLES                  AGE   VERSION
minikube       Ready    control-plane,master   12d   v1.21.2
minikube-m02   Ready    <none>                 42m   v1.21.2
minikube-m03   Ready    <none>                 41m   v1.21.2
```

In the next step, we’ll create the Pod from the YAML manifest. The scheduler places the Pod on the node named minikube-m03:

```shell
$ kubectl create -f rate-limiter-pod.yaml
pod/rate-limiter created
$ kubectl get pod rate-limiter -o yaml | grep nodeName:
  nodeName: minikube-m03
```

Inspecting the node reveals its maximum capacity, how much of this capacity is allocatable, and the resource requests of the Pods scheduled on the node. The following command lists the information and condenses the output to the relevant bits and pieces:

```shell
$ kubectl describe node minikube-m03
...
Capacity:
  cpu:                2
  ephemeral-storage:  17784752Ki
  hugepages-2Mi:      0
  memory:             2186612Ki
  pods:               110
Allocatable:
  cpu:                2
  ephemeral-storage:  17784752Ki
  hugepages-2Mi:      0
  memory:             2186612Ki
  pods:               110
...
Non-terminated Pods:          (3 in total)
  Namespace  Name          CPU Requests  CPU Limits  \
  Memory Requests  Memory Limits  AGE
  ---------  ----          ------------  ----------  \
  ---------------  -------------  ---
  default    rate-limiter  1250m (62%)   0 (0%)      \
  320Mi (14%)      0 (0%)         9m
...
```
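The percentages in parentheses relate the Pod's totals to the node's allocatable capacity. The following sketch reproduces those figures from the allocatable values shown above (2 CPUs and 2186612Ki of memory). It assumes that kubectl truncates the fraction to a whole percent, which matches the output. The function name is hypothetical.

```python
KI = 1024
MI = 1024 * KI


def percent_of(requested: int, allocatable: int) -> int:
    """Share of allocatable capacity, truncated to a whole percent."""
    return int(requested / allocatable * 100)


allocatable_cpu_millis = 2 * 1000          # "cpu: 2" under Allocatable
allocatable_memory_bytes = 2186612 * KI    # "memory: 2186612Ki" under Allocatable

cpu_requests = 1000 + 250                  # 1 + 250m from Example 4-1
memory_requests = 256 * MI + 64 * MI       # 256Mi + 64Mi from Example 4-1

print(percent_of(cpu_requests, allocatable_cpu_millis))       # 62
print(percent_of(memory_requests, allocatable_memory_bytes))  # 14
```

Plugging in the limits from Example 4-3 (2500m and 640Mi) yields 125 and 29, which matches the percentages in that example's output. Limits, unlike requests, may add up to more than the node's capacity.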

It’s certainly possible that a Pod cannot be scheduled due to insufficient resources available on the nodes. In those cases, the event log of the Pod will indicate this situation with the reasons PodExceedsFreeCPU or PodExceedsFreeMemory. For more information on how to troubleshoot and resolve this situation, see the relevant section in the documentation.

Defining Container Resource Limits

Another setting you can define for a container is its resource limits. Resource limits ensure that the container cannot consume more than the allotted resource amounts. For example, you could express that the application running in the container should be constrained to 1000m of CPU and 512Mi of memory.

What happens when a container exceeds its limits depends on the resource type. If a container tries to use more memory than its limit allows, its application process is typically terminated. CPU usage beyond the limit is throttled instead, so the process is never allocated more CPU time than the limit allows. For an in-depth discussion on how resource limits are treated by the container runtime Docker, see the documentation.

Table 4-2 describes the available options including an example value.

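| YAML Attribute | Description | Example Value |
|---|---|---|
| `spec.containers[].resources.limits.cpu` | CPU resource type | `500m` |
| `spec.containers[].resources.limits.memory` | Memory resource type | `128Mi` |
| `spec.containers[].resources.limits.hugepages-<size>` | Huge page resource type | |
| `spec.containers[].resources.limits.ephemeral-storage` | Ephemeral storage resource type | |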

Example 4-2 shows the definition of limits in action. Here, the container named business-app cannot use more than 512Mi of memory and 2000m of CPU. The container named ambassador defines a limit of 128Mi of memory and 500m of CPU.

Example 4-2. Setting container resource limits

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: rate-limiter
spec:
  containers:
  - name: business-app
    image: bmuschko/nodejs-business-app:1.0.0
    ports:
    - containerPort: 8080
    resources:
      limits:
        memory: "512Mi"
        cpu: "2"
  - name: ambassador
    image: bmuschko/nodejs-ambassador:1.0.0
    ports:
    - containerPort: 8081
    resources:
      limits:
        memory: "128Mi"
        cpu: "500m"
```

Assume that the Pod was scheduled on the node minikube-m03. Describing the node’s details reveals that the CPU and memory limits took effect. But there’s more: if you define only the limits, Kubernetes automatically sets the requests to the same values:

```shell
$ kubectl describe node minikube-m03
...
Non-terminated Pods:          (3 in total)
  Namespace  Name          CPU Requests  CPU Limits   \
  Memory Requests  Memory Limits  AGE
  ---------  ----          ------------  ----------   \
  ---------------  -------------  ---
  default    rate-limiter  1250m (62%)   1250m (62%)  \
  320Mi (14%)      320Mi (14%)    11s
...
```
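The defaulting rule can be expressed as a small function. This is a sketch of the behavior described above, not Kubernetes' own defaulting code. The dictionaries mirror a container's resources section, and the function name is hypothetical. Admission-time defaults, such as those from a LimitRange, are not modeled.

```python
import copy


def default_requests(resources: dict) -> dict:
    """Copy each limit into requests when no request is set for that resource."""
    result = copy.deepcopy(resources)
    requests = result.setdefault("requests", {})
    for resource, limit in result.get("limits", {}).items():
        requests.setdefault(resource, limit)
    return result


business_app = {"limits": {"memory": "512Mi", "cpu": "2"}}
print(default_requests(business_app))
# {'limits': {'memory': '512Mi', 'cpu': '2'}, 'requests': {'memory': '512Mi', 'cpu': '2'}}

# An explicitly set request is kept; only the missing one is filled in.
partial = {"limits": {"memory": "128Mi", "cpu": "500m"}, "requests": {"cpu": "250m"}}
print(default_requests(partial)["requests"])
# {'cpu': '250m', 'memory': '128Mi'}
```

The rule applies per resource type, so a container can set a CPU request explicitly and still inherit its memory request from the memory limit.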

Defining Container Resource Requests and Limits

It’s recommended practice to specify resource requests and limits for every container. Determining those resource expectations is not always easy, especially for applications that haven’t been exercised in a production environment yet. Load testing the application early in the development cycle can help with analyzing the resource needs. Further adjustments can be made by monitoring the application’s resource consumption after deploying it to the cluster. Example 4-3 combines resource requests and limits in a single YAML manifest.

Example 4-3. Setting container resource requests and limits

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: rate-limiter
spec:
  containers:
  - name: business-app
    image: bmuschko/nodejs-business-app:1.0.0
    ports:
    - containerPort: 8080
    resources:
      requests:
        memory: "256Mi"
        cpu: "1"
      limits:
        memory: "512Mi"
        cpu: "2"
  - name: ambassador
    image: bmuschko/nodejs-ambassador:1.0.0
    ports:
    - containerPort: 8081
    resources:
      requests:
        memory: "64Mi"
        cpu: "250m"
      limits:
        memory: "128Mi"
        cpu: "500m"
```

As a result, you can see the different settings for resource requests and limits:

```shell
$ kubectl describe node minikube-m03
...
Non-terminated Pods:          (3 in total)
  Namespace  Name          CPU Requests  CPU Limits    \
  Memory Requests  Memory Limits  AGE
  ---------  ----          ------------  ----------    \
  ---------------  -------------  ---
  default    rate-limiter  1250m (62%)   2500m (125%)  \
  320Mi (14%)      640Mi (29%)    3s
...
```

Managing Objects

Kubernetes objects can be created, modified, and deleted by using imperative kubectl commands or by running a kubectl command against a configuration file declaring the desired state of an object, a so-called manifest. The primary definition language of a manifest is YAML, though you can opt for JSON, which is less widely adopted in the Kubernetes community. It’s recommended that development teams commit and push those configuration files to version control repositories, as doing so helps with tracking and auditing changes over time.

Modeling an application in Kubernetes often requires a set of supporting objects, each of which can have its own manifest. For example, you may want to create a Deployment that runs the application on five Pods, a ConfigMap to inject configuration data as environment variables, and a Service for exposing network access.

This section primarily focuses on the declarative object management support with the help of manifests. For a deeper discussion on the imperative support, see the relevant portions in the documentation. Furthermore, we’ll touch on tools like Kustomize and Helm to give you an impression of their benefits, capabilities, and workflows.

Declarative Object Management Using Configuration Files

Declarative object management requires one or several configuration files in the format of YAML or JSON describing the desired state of an object. You create, update, and delete objects with this approach.

Creating objects

To create new objects, run the apply command by pointing to a file, a directory of files, or a file referenced by an HTTP(S) URL using the -f option. If one or many of the objects already exist, the command will synchronize the changes made to the configuration with the live object.

To demonstrate the functionality, we’ll assume the following directories and configuration files. The following commands create objects from a single file, from all files within a directory, and from all files in a directory recursively:

```
.
├── app-stack
│   ├── mysql-pod.yaml
│   ├── mysql-service.yaml
│   ├── web-app-pod.yaml
│   └── web-app-service.yaml
├── nginx-deployment.yaml
└── web-app
    ├── config
    │   ├── db-configmap.yaml
    │   └── db-secret.yaml
    └── web-app-pod.yaml
```

Creating an object from a single file:

```shell
$ kubectl apply -f nginx-deployment.yaml
deployment.apps/nginx-deployment created
```

Creating objects from multiple files within a directory:

```shell
$ kubectl apply -f app-stack/
pod/mysql-db created
service/mysql-service created
pod/web-app created
service/web-app-service created
```

Creating objects from a recursive directory tree containing files:

```shell
$ kubectl apply -f web-app/ -R
configmap/db-config configured
secret/db-creds created
pod/web-app created
```

Creating objects from a file referenced by an HTTP(S) URL:

```shell
$ kubectl apply -f https://raw.githubusercontent.com/bmuschko/cka-study-guide/\
master/ch04/object-management/nginx-deployment.yaml
deployment.apps/nginx-deployment created
```

The apply command keeps track of the changes by adding or modifying the annotation with the key kubectl.kubernetes.io/last-applied-configuration. You can find an example of the annotation in the output of the get pod command here:

```shell
$ kubectl get pod web-app -o yaml
apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Pod","metadata":{"annotations":{}, \
      "labels":{"app":"web-app"},"name":"web-app","namespace":"default"}, \
      "spec":{"containers":[{"envFrom":[{"configMapRef":{"name":"db-config"}}, \
      {"secretRef":{"name":"db-creds"}}],"image":"bmuschko/web-app:1.0.1", \
      "name":"web-app","ports":[{"containerPort":3000,"protocol":"TCP"}]}], \
      "restartPolicy":"Always"}}
...
```
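The annotation exists so that a later apply can compare three versions of the object: the new configuration file, the last applied configuration, and the live object. The sketch below models that three-way comparison for flat key-value pairs only. Real objects are nested and kubectl computes proper merge patches, so treat this as an illustration of the idea. The field names and values are hypothetical. Fields removed from the file since the last apply are deleted, fields present in the file are set, and fields that exist only on the live object (for example, set by another client) are left alone.

```python
def three_way_apply(new_config: dict, last_applied: dict, live: dict) -> dict:
    """Simplified model of kubectl apply's three-way merge on a flat dictionary."""
    result = dict(live)
    # Delete fields that were applied before but are no longer in the file.
    for key in last_applied:
        if key not in new_config:
            result.pop(key, None)
    # Set every field declared in the new configuration file.
    result.update(new_config)
    return result


last_applied = {"replicas": 3, "image": "nginx:1.14.2", "team": "blue"}
live = {"replicas": 3, "image": "nginx:1.14.2", "team": "blue", "owner": "ops"}
new_config = {"replicas": 5, "image": "nginx:1.14.2"}

print(three_way_apply(new_config, last_applied, live))
# {'replicas': 5, 'image': 'nginx:1.14.2', 'owner': 'ops'}
```

Without the stored last applied configuration, kubectl could not tell whether a field missing from the file was deliberately removed (team) or was never managed by the file at all (owner).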

Updating objects

Updating an existing object is done with the same apply command. All you need to do is to change the configuration file and then run the command against it. Example 4-4 modifies the existing configuration of a Deployment in the file nginx-deployment.yaml. We added a new label with the key team and changed the number of replicas from 3 to 5.

Example 4-4. Modified configuration file for a Deployment

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
    team: red
spec:
  replicas: 5
...
```

The following command applies the changed configuration file. As a result, the number of Pods controlled by the underlying ReplicaSet is 5. The Deployment’s annotation kubectl.kubernetes.io/last-applied-configuration reflects the latest change to the configuration:

```shell
$ kubectl apply -f nginx-deployment.yaml
deployment.apps/nginx-deployment configured
$ kubectl get deployments,pods
NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx-deployment   5/5     5            5           10m

NAME                                    READY   STATUS    RESTARTS   AGE
pod/nginx-deployment-66b6c48dd5-79j6t   1/1     Running   0          35s
pod/nginx-deployment-66b6c48dd5-bkkgb   1/1     Running   0          10m
pod/nginx-deployment-66b6c48dd5-d26c8   1/1     Running   0          10m
pod/nginx-deployment-66b6c48dd5-fcqrs   1/1     Running   0          10m
pod/nginx-deployment-66b6c48dd5-whfnn   1/1     Running   0          35s
$ kubectl get deployment nginx-deployment -o yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"apps/v1","kind":"Deployment","metadata":{"annotations":{}, \
      "labels":{"app":"nginx","team":"red"},"name":"nginx-deployment", \
      "namespace":"default"},"spec":{"replicas":5,"selector":{"matchLabels": \
      {"app":"nginx"}},"template":{"metadata":{"labels":{"app":"nginx"}}, \
      "spec":{"containers":[{"image":"nginx:1.14.2","name":"nginx", \
      "ports":[{"containerPort":80}]}]}}}}
...
```

Deleting objects

While there is a way to delete objects using the apply command by providing the options --prune -l <labels>, it is recommended to delete an object using the delete command and point it to the configuration file. The following command deletes a Deployment and the objects it controls (ReplicaSet and Pods):

```shell
$ kubectl delete -f nginx-deployment.yaml
deployment.apps "nginx-deployment" deleted
$ kubectl get deployments,replicasets,pods
No resources found in default namespace.
```

Declarative Object Management Using Kustomize

Kustomize is a tool, integrated into kubectl as of Kubernetes 1.14, that aims to make manifest management more convenient. It supports three different use cases:

- Generating manifests from other sources. For example, creating a ConfigMap and populating its key-value pairs from a properties file.
- Adding common configuration across multiple manifests. For example, adding a namespace and a set of labels for a Deployment and a Service.
- Composing and customizing a collection of manifests. For example, setting resource boundaries for multiple Deployments.

The central file needed for Kustomize to work is the kustomization file. Its standard name is kustomization.yaml; Kustomize does not pick up a file with an arbitrary name. A kustomization file defines the processing rules Kustomize works upon.

Kustomize is fully integrated with kubectl and can be executed in two modes: rendering the processing output on the console or creating the objects. Both modes can operate on a directory, tarball, Git archive, or URL as long as they contain the kustomization file and referenced resource files:

- Rendering the produced output

  The first mode uses the kustomize subcommand to render the produced result on the console but does not create the objects. This command works similarly to the dry-run option you might know from the run command:

  ```shell
  $ kubectl kustomize <target>
  ```

- Creating the objects

  The second mode uses the apply command in conjunction with the -k command-line option to apply the resources processed by Kustomize, as explained in the previous section:

  ```shell
  $ kubectl apply -k <target>
  ```

The following sections demonstrate each of the use cases with a single example. For full coverage of all possible scenarios, refer to the documentation or the Kustomize GitHub repository.

Composing Manifests

One of the core functionalities of Kustomize is to create a composed manifest from other manifests. Combining multiple manifests into a single one may not seem that useful by itself, but many of the other features described later will build upon this capability. Say you wanted to compose a manifest from a Deployment and a Service resource file. All you need to do is to place the resource files into the same folder as the kustomization file:

```
.
├── kustomization.yaml
├── web-app-deployment.yaml
└── web-app-service.yaml
```

The kustomization file lists the resources in the resources section, as shown in Example 4-5.

Example 4-5. A kustomization file combining two manifests

```yaml
resources:
- web-app-deployment.yaml
- web-app-service.yaml
```

As a result, the kustomize subcommand renders the combined manifest containing all of the resources separated by three hyphens (---) to denote the different object definitions:

```shell
$ kubectl kustomize ./
apiVersion: v1
kind: Service
metadata:
  labels:
    app: web-app-service
  name: web-app-service
spec:
  ports:
  - name: web-app-port
    port: 3000
    protocol: TCP
    targetPort: 3000
  selector:
    app: web-app
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web-app-deployment
  name: web-app-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
      - env:
        - name: DB_HOST
          value: mysql-service
        - name: DB_USER
          value: root
        - name: DB_PASSWORD
          value: password
        image: bmuschko/web-app:1.0.1
        name: web-app
        ports:
        - containerPort: 3000
```

Generating manifests from other sources

Earlier in this book, we learned that ConfigMaps and Secrets can be created by pointing them to a file containing the actual configuration data. Kustomize can help with the process by mapping the relationship between the YAML manifest of those configuration objects and their data. Furthermore, we’ll want to inject the created ConfigMap and Secret into a Pod as environment variables. In this section, you will learn how to achieve this with the help of Kustomize.

The following file and directory structure contains the manifest file for the Pod and the configuration data files we need for the ConfigMap and Secret. The mandatory kustomization file lives on the root level of the directory tree:

```
.
├── config
│   ├── db-config.properties
│   └── db-secret.properties
├── kustomization.yaml
└── web-app-pod.yaml
```

In kustomization.yaml, you can define that the ConfigMap and Secret objects should be generated with the given names. The name of the ConfigMap is supposed to be db-config, and the name of the Secret is going to be db-creds. Both of the generator attributes, configMapGenerator and secretGenerator, reference an input file used to feed in the configuration data. Any additional resources can be spelled out with the resources attribute. Example 4-6 shows the contents of the kustomization file.

Example 4-6. A kustomization file using a ConfigMap and Secret generator

```yaml
configMapGenerator:
- name: db-config
  files:
  - config/db-config.properties
secretGenerator:
- name: db-creds
  files:
  - config/db-secret.properties
resources:
- web-app-pod.yaml
```

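Kustomize generates ConfigMaps and Secrets by appending a suffix, derived from a hash of their content, to the name. You can see this behavior when creating the objects using the apply command. The Pod manifest can still reference the ConfigMap and Secret by their original names; Kustomize rewrites those references to the suffixed names: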

```shell
$ kubectl apply -k ./
configmap/db-config-t4c79h4mtt unchanged
secret/db-creds-4t9dmgtf9h unchanged
pod/web-app created
```

This naming strategy can be configured with the attribute generatorOptions in the kustomization file. See the documentation for more information.
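The suffix is derived from the generated content. When the data changes, the name changes too. Kustomize then rewrites the references in workloads to point at the new object, which, for example, causes a Deployment to roll out new Pods. The following sketch illustrates that idea only. It uses a truncated SHA-256 hex digest over the data, which is an assumption made for illustration. Kustomize's actual hashing and encoding differ, so the sketch won't reproduce suffixes such as t4c79h4mtt. The function name is hypothetical.

```python
import hashlib
import json


def suffixed_name(name: str, data: dict) -> str:
    """Append a short content hash to a generated object's name (illustrative only)."""
    canonical = json.dumps(data, sort_keys=True).encode("utf-8")
    digest = hashlib.sha256(canonical).hexdigest()
    return f"{name}-{digest[:10]}"


v1 = suffixed_name("db-config", {"DB_HOST": "mysql-service", "DB_USER": "root"})
v2 = suffixed_name("db-config", {"DB_USER": "root", "DB_HOST": "mysql-service"})
v3 = suffixed_name("db-config", {"DB_HOST": "mysql-service", "DB_USER": "admin"})

print(v1 == v2)  # True: same content, same name
print(v1 == v3)  # False: changed content, new name
```

Serializing with sorted keys makes the hash independent of key order, so only a real change in the data produces a new name.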

Let’s also try the kustomize subcommand. Instead of creating the objects, the command renders the processed output on the console:

```shell
$ kubectl kustomize ./
apiVersion: v1
data:
  db-config.properties: |-
    DB_HOST: mysql-service
    DB_USER: root
kind: ConfigMap
metadata:
  name: db-config-t4c79h4mtt
---
apiVersion: v1
data:
  db-secret.properties: REJfUEFTU1dPUkQ6IGNHRnpjM2R2Y21RPQ==
kind: Secret
metadata:
  name: db-creds-4t9dmgtf9h
type: Opaque
---
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: web-app
  name: web-app
spec:
  containers:
  - envFrom:
    - configMapRef:
        name: db-config-t4c79h4mtt
    - secretRef:
        name: db-creds-4t9dmgtf9h
    image: bmuschko/web-app:1.0.1
    name: web-app
    ports:
    - containerPort: 3000
      protocol: TCP
  restartPolicy: Always
```
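Secret data is only base64-encoded, not encrypted. The following sketch decodes the value from the rendered Secret above with Python's standard library. The key holds the complete content of db-secret.properties, and the password inside that file turns out to be base64-encoded as well.

```python
import base64

# Value of the db-secret.properties key in the rendered Secret above.
encoded = "REJfUEFTU1dPUkQ6IGNHRnpjM2R2Y21RPQ=="

file_content = base64.b64decode(encoded).decode("utf-8")
print(file_content)  # DB_PASSWORD: cGFzc3dvcmQ=

# The value stored inside the properties file is itself base64-encoded.
key, value = file_content.split(": ", 1)
print(key, base64.b64decode(value).decode("utf-8"))  # DB_PASSWORD password
```

Anyone who can read the Secret object can recover its contents this way, which is why access to Secrets is usually restricted.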

Adding common configuration across multiple manifests

Application developers usually work on an application stack made up of multiple manifests. For example, an application stack could consist of a frontend microservice, a backend microservice, and a database. It’s common practice to use the same cross-cutting configuration for each of the manifests. Kustomize supports a range of fields for setting such configuration (e.g., namespace, labels, or annotations). Refer to the documentation to learn about all supported fields.

For the next example, we’ll assume that a Deployment and a Service live in the same namespace and use a common set of labels. The namespace is called persistence and the label is the key-value pair team: helix. Example 4-7 illustrates how to set those common fields in the kustomization file.

Example 4-7. A kustomization file using a common field

```yaml
namespace: persistence
commonLabels:
  team: helix
resources:
- web-app-deployment.yaml
- web-app-service.yaml
```

To create the referenced objects in the kustomization file, run the apply command. Make sure to create the persistence namespace beforehand:

```shell
$ kubectl create namespace persistence
namespace/persistence created
$ kubectl apply -k ./
service/web-app-service created
deployment.apps/web-app-deployment created
```

The YAML representation of the processed files looks as follows:

```shell
$ kubectl kustomize ./
apiVersion: v1
kind: Service
metadata:
  labels:
    app: web-app-service
    team: helix
  name: web-app-service
  namespace: persistence
spec:
  ports:
  - name: web-app-port
    port: 3000
    protocol: TCP
    targetPort: 3000
  selector:
    app: web-app
    team: helix
  type: NodePort
---
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: web-app-deployment
    team: helix
  name: web-app-deployment
  namespace: persistence
spec:
  replicas: 3
  selector:
    matchLabels:
      app: web-app
      team: helix
  template:
    metadata:
      labels:
        app: web-app
        team: helix
    spec:
      containers:
      - env:
        - name: DB_HOST
          value: mysql-service
        - name: DB_USER
          value: root
        - name: DB_PASSWORD
          value: password
        image: bmuschko/web-app:1.0.1
        name: web-app
        ports:
        - containerPort: 3000
```
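The output shows that commonLabels does more than extend metadata.labels. The label also ends up in the Service selector, the Deployment selector, and the Pod template, so the selectors keep matching the labeled Pods. The following minimal sketch models that propagation on plain dictionaries. It covers only the Service and Deployment fields visible above; Kustomize's own list of label fields is longer. The function name is hypothetical.

```python
import copy


def apply_common(manifest: dict, namespace: str, labels: dict) -> dict:
    """Set the namespace and merge common labels into metadata and selector fields."""
    result = copy.deepcopy(manifest)
    result["metadata"]["namespace"] = namespace
    result["metadata"].setdefault("labels", {}).update(labels)
    spec = result.get("spec", {})
    if result["kind"] == "Service":
        spec.setdefault("selector", {}).update(labels)
    elif result["kind"] == "Deployment":
        spec["selector"].setdefault("matchLabels", {}).update(labels)
        spec["template"]["metadata"].setdefault("labels", {}).update(labels)
    return result


service = {
    "kind": "Service",
    "metadata": {"name": "web-app-service", "labels": {"app": "web-app-service"}},
    "spec": {"selector": {"app": "web-app"}},
}
deployment = {
    "kind": "Deployment",
    "metadata": {"name": "web-app-deployment", "labels": {"app": "web-app-deployment"}},
    "spec": {
        "selector": {"matchLabels": {"app": "web-app"}},
        "template": {"metadata": {"labels": {"app": "web-app"}}},
    },
}

svc = apply_common(service, "persistence", {"team": "helix"})
dep = apply_common(deployment, "persistence", {"team": "helix"})
print(svc["spec"]["selector"])                        # {'app': 'web-app', 'team': 'helix'}
print(dep["spec"]["template"]["metadata"]["labels"])  # {'app': 'web-app', 'team': 'helix'}
```

Updating the selectors together with the labels is what keeps the Service routing traffic to the Deployment's Pods after the transformation.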

Customizing a collection of manifests

Kustomize can merge the contents of a YAML manifest with a code snippet from another YAML manifest. Typical use cases include adding security context configuration to a Pod definition or setting resource boundaries for a Deployment. The kustomization file allows for specifying different patch strategies like patchesStrategicMerge and patchesJson6902. For a deeper discussion on the differences between patch strategies, refer to the documentation.

Example 4-8 shows the contents of a kustomization file that patches a Deployment definition in the file nginx-deployment.yaml with the contents of the file security-context.yaml.

Example 4-8. A kustomization file defining a patch

```yaml
resources:
- nginx-deployment.yaml
patchesStrategicMerge:
- security-context.yaml
```

The patch file shown in Example 4-9 defines security settings for the Pod template of the Deployment. At runtime, the patch strategy finds the container named nginx and merges the container-level security context (runAsUser and runAsGroup) into it. The fsGroup setting is supported only by the Pod-level security context, so the patch places it under the Pod template’s spec.

Example 4-9. The patch YAML manifest

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  template:
    spec:
      securityContext:
        fsGroup: 2000
      containers:
      - name: nginx
        securityContext:
          runAsUser: 1000
          runAsGroup: 3000
```

The result is a patched Deployment definition, as shown in the output of the kustomize subcommand next. The same patch mechanism can be applied to other files that require a uniform security context definition:

```shell
$ kubectl kustomize ./
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    app: nginx
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - image: nginx:1.14.2
        name: nginx
        ports:
        - containerPort: 80
        securityContext:
          runAsGroup: 3000
          runAsUser: 1000
      securityContext:
        fsGroup: 2000
```
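A strategic merge patch does not replace the list of containers. It matches list entries by their name field and merges the matching entry. The sketch below models just that behavior: maps merge recursively, lists whose items all carry a name merge by name, and everything else is overwritten by the patch. It is a simplification under those assumptions, not Kustomize's implementation, which looks up per-field merge keys from the Kubernetes API schema. The function name is hypothetical.

```python
import copy


def merge(base, patch):
    """Recursively merge patch into base; lists of named items merge by 'name'."""
    if isinstance(base, dict) and isinstance(patch, dict):
        result = copy.deepcopy(base)
        for key, value in patch.items():
            result[key] = merge(base[key], value) if key in base else copy.deepcopy(value)
        return result
    if isinstance(base, list) and isinstance(patch, list) and all(
        isinstance(item, dict) and "name" in item for item in base + patch
    ):
        result = copy.deepcopy(base)
        for item in patch:
            match = next((i for i, b in enumerate(result) if b["name"] == item["name"]), None)
            if match is None:
                result.append(copy.deepcopy(item))       # new name: append the entry
            else:
                result[match] = merge(result[match], item)  # same name: merge fields
        return result
    return copy.deepcopy(patch)  # scalars and other lists: the patch wins


deployment_spec = {"containers": [{"name": "nginx", "image": "nginx:1.14.2",
                                   "ports": [{"containerPort": 80}]}]}
patch = {"containers": [{"name": "nginx",
                         "securityContext": {"runAsUser": 1000, "runAsGroup": 3000}}]}

result = merge(deployment_spec, patch)
print(len(result["containers"]))                   # 1
print(result["containers"][0]["securityContext"])  # {'runAsUser': 1000, 'runAsGroup': 3000}
```

Because the container is matched by name, the patch only needs to repeat the name, not the image or ports, to attach the security context to the existing container.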

Common Templating Tools

As demonstrated in the previous section, Kustomize offers templating functionality. The Kubernetes ecosystem offers other solutions to the problem that we will discuss here. We will touch on the YAML processor yq and the templating engine Helm.

Using the YAML Processor yq

The tool yq is used to read, modify, and enhance the contents of a YAML file. This section will demonstrate all three use cases. For a detailed list of usage examples, see the GitHub repository. During the CKA exam, you may be asked to apply those techniques, though you are not expected to understand all intricacies of the tools at hand. The version of yq used to describe the functionality below is 4.2.1.

Reading values

Reading values from an existing YAML file requires the use of a YAML path expression. A path expression allows you to deeply navigate the YAML structure and extract the value of an attribute you are searching for. Example 4-10 shows the YAML manifest of a Pod that defines two environment variables.

Example 4-10. The YAML manifest of a Pod

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: spring-boot-app
spec:
  containers:
  - image: bmuschko/spring-boot-app:1.5.3
    name: spring-boot-app
    env:
    - name: SPRING_PROFILES_ACTIVE
      value: prod
    - name: VERSION
      value: '1.5.3'
```

To read a value, use the command eval or the short form e, provide the YAML path expression, and point it to the source file. The following two commands read the Pod’s name and the value of the second environment variable defined by a single container. Notice that the path expression needs to start with a mandatory dot character (.) to denote the root node of the YAML structure:

```shell
$ yq e .metadata.name pod.yaml
spring-boot-app
$ yq e '.spec.containers[0].env[1].value' pod.yaml
1.5.3
```
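The following hypothetical evaluator illustrates what such a path expression does, supporting only the simple subset used above: dot-separated keys and [n] array indexes. It walks a Python dictionary that stands in for the parsed Example 4-10 manifest. Parsing YAML would need a third-party library such as PyYAML, so that step is skipped. yq's expression language is far richer than this sketch.

```python
import re

# One path segment: a key name, or an [index] part.
SEGMENT = re.compile(r"([^.\[\]]+)|\[(\d+)\]")


def read_path(document, expression: str):
    """Follow a path like .spec.containers[0].env[1].value through nested dicts and lists."""
    if not expression.startswith("."):
        raise ValueError("path expression must start with '.' (the root node)")
    node = document
    for key, index in SEGMENT.findall(expression):
        node = node[int(index)] if index else node[key]
    return node


pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "spring-boot-app"},
    "spec": {"containers": [{
        "image": "bmuschko/spring-boot-app:1.5.3",
        "name": "spring-boot-app",
        "env": [
            {"name": "SPRING_PROFILES_ACTIVE", "value": "prod"},
            {"name": "VERSION", "value": "1.5.3"},
        ],
    }]},
}

print(read_path(pod, ".metadata.name"))                    # spring-boot-app
print(read_path(pod, ".spec.containers[0].env[1].value"))  # 1.5.3
```

Array indexes are zero-based, which is why env[1] selects the second environment variable, VERSION.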

Modifying values

Modifying an existing value is as easy as using the same command and adding the -i flag, which edits the file in place. You set the new value by assigning it to the path expression with the = operator. The following command changes the value of the second environment variable in the Pod YAML file to 1.6.0:

```shell
$ yq e -i '.spec.containers[0].env[1].value = "1.6.0"' pod.yaml
$ cat pod.yaml
...
    env:
    - name: SPRING_PROFILES_ACTIVE
      value: prod
    - name: VERSION
      value: 1.6.0
```

Merging YAML files

Similar to Kustomize, yq can merge multiple YAML files. Kustomize is definitely more powerful and convenient to use; however, yq can come in handy for smaller projects. Say you wanted to merge the sidecar container definition shown in Example 4-11 into the Pod YAML file.

Example 4-11. The YAML manifest of a container definition

```yaml
spec:
  containers:
  - image: envoyproxy/envoy:v1.19.1
    name: proxy-container
    ports:
    - containerPort: 80
```

The command to achieve this is eval-all. We won’t go into details given the multitude of configuration options for this command. For a deep dive, check the yq user manual on the “Multiply (Merge)” operation. The following command appends the sidecar container to the existing container array of the Pod manifest:

```shell
$ yq eval-all 'select(fileIndex == 0) *+ select(fileIndex == 1)' pod.yaml \
  sidecar.yaml
apiVersion: v1
kind: Pod
metadata:
  name: spring-boot-app
spec:
  containers:
  - image: bmuschko/spring-boot-app:1.5.3
    name: spring-boot-app
    env:
    - name: SPRING_PROFILES_ACTIVE
      value: prod
    - name: VERSION
      value: '1.5.3'
  - image: envoyproxy/envoy:v1.19.1
    name: proxy-container
    ports:
    - containerPort: 80
```
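The *+ operator performs a deep merge in which arrays are appended rather than replaced. The following sketch models that behavior on Python dictionaries that stand in for the two parsed files. It is a simplification, since yq's merge supports further flags and rules, and the function name is hypothetical.

```python
import copy


def merge_append(left, right):
    """Deep-merge right into left: maps merge key by key, lists are concatenated."""
    if isinstance(left, dict) and isinstance(right, dict):
        result = copy.deepcopy(left)
        for key, value in right.items():
            result[key] = merge_append(left[key], value) if key in left else copy.deepcopy(value)
        return result
    if isinstance(left, list) and isinstance(right, list):
        return copy.deepcopy(left) + copy.deepcopy(right)
    return copy.deepcopy(right)  # scalars: the right-hand value wins


pod = {"kind": "Pod", "spec": {"containers": [{"name": "spring-boot-app"}]}}
sidecar = {"spec": {"containers": [{"name": "proxy-container",
                                    "image": "envoyproxy/envoy:v1.19.1"}]}}

merged = merge_append(pod, sidecar)
print([c["name"] for c in merged["spec"]["containers"]])
# ['spring-boot-app', 'proxy-container']
```

Appending is what makes the sidecar show up as a second container. A plain deep merge that replaces arrays would have dropped the original container instead.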

Using Helm

Helm is a templating engine and package manager for a set of Kubernetes manifests. At runtime, it replaces placeholders in YAML template files with actual, end-user defined values. The artifact produced by the Helm executable is a so-called chart file bundling the manifests that comprise the API resources of an application. This chart file can be uploaded to a chart repository to be used during the deployment process. The Helm ecosystem offers a wide range of reusable charts for common use cases on a central chart repository (e.g., for running Grafana or PostgreSQL).

Due to the wealth of functionality available to Helm, we’ll discuss only the very basics. The CKA exam does not expect you to be a Helm expert; rather, it expects you to be familiar with the benefits and concepts. For more detailed information on Helm, see the user documentation. The version of Helm used to describe the functionality here is 3.7.0.

Standard Chart Structure

A chart needs to follow a predefined directory structure. You can choose any name for the root directory. Within the directory, two files need to exist: Chart.yaml and values.yaml. The file Chart.yaml describes the meta information of the chart (e.g., name and version). The file values.yaml contains the key-value pairs used at runtime to replace the placeholders in the YAML manifests. Any template file meant to be packaged into the chart archive file needs to be put in the templates directory. Files located in the templates directory do not have to follow any naming conventions.

The following directory structure shows an example chart. The templates directory contains a file for a Pod and a Service:

```shell
$ tree
.
├── Chart.yaml
├── templates
│   ├── web-app-pod-template.yaml
│   └── web-app-service-template.yaml
└── values.yaml
```

The chart file

The file Chart.yaml describes the chart on a high level. Mandatory attributes include the chart’s API version, the name, and the version. Additionally, optional attributes can be provided. For a full list of attributes, see the relevant documentation. Example 4-12 shows the bare minimum of a chart file.

Example 4-12. A basic Helm chart file

```yaml
apiVersion: v2
name: web-app
version: 2.5.4
```

The values file

The file values.yaml defines key-value pairs to be used to replace placeholders in the YAML template files. Example 4-13 specifies four key-value pairs. Be aware that the file can be empty if you don’t want to replace values at runtime.

Example 4-13. A Helm values file

```yaml
db_host: mysql-service
db_user: root
db_password: password
service_port: 3000
```

The template files

Template files need to live in the templates directory. A template file is a regular YAML manifest that can (optionally) define placeholders with the help of double curly braces ({{ }}). To reference a value from the values.yaml file, use the expression {{ .Values.<key> }}. For example, to replace the value of the key db_host at runtime, use the expression {{ .Values.db_host }}. Example 4-14 defines a Pod as a template while defining three placeholders that reference values from values.yaml.

Example 4-14. The YAML template manifest of a Pod

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: web-app
  name: web-app
spec:
  containers:
  - image: bmuschko/web-app:1.0.1
    name: web-app
    env:
    - name: DB_HOST
      value: {{ .Values.db_host }}
    - name: DB_USER
      value: {{ .Values.db_user }}
    - name: DB_PASSWORD
      value: {{ .Values.db_password }}
    ports:
    - containerPort: 3000
      protocol: TCP
  restartPolicy: Always
```
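Conceptually, rendering replaces each {{ .Values.<key> }} expression with the matching value from values.yaml. Helm itself uses Go's text/template engine, which also supports functions, conditionals, and loops. The toy sketch below handles only the plain .Values.<key> lookups used in Example 4-14, and its function name is hypothetical.

```python
import re

# Matches {{ .Values.<key> }} with optional whitespace inside the braces.
PLACEHOLDER = re.compile(r"\{\{\s*\.Values\.(\w+)\s*\}\}")


def render(template: str, values: dict) -> str:
    """Replace every {{ .Values.key }} placeholder with the matching value."""
    def substitute(match):
        key = match.group(1)
        if key not in values:
            raise KeyError(f"no value provided for {key!r}")
        return str(values[key])
    return PLACEHOLDER.sub(substitute, template)


values = {"db_host": "mysql-service", "db_user": "root",
          "db_password": "password", "service_port": 3000}
template = """\
    - name: DB_HOST
      value: {{ .Values.db_host }}
    - name: DB_USER
      value: {{ .Values.db_user }}"""

print(render(template, values))
```

Raising an error for a missing key is a deliberate choice in this sketch that surfaces typos in placeholder names early. It doesn't describe how Helm treats missing values.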

Executing Helm commands

The Helm executable comes with a wide range of commands. Let’s demonstrate some of them. The template command renders the chart templates locally and displays results on the console. You can see the operation in action in the following output. All placeholders have been replaced by their actual values sourced from the values.yaml file:

$ helm template .
---
# Source: Web Application/templates/web-app-service-template.yaml
...
---
# Source: Web Application/templates/web-app-pod-template.yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: web-app
  name: web-app
spec:
  containers:
  - image: bmuschko/web-app:1.0.1
    name: web-app
    env:
    - name: DB_HOST
      value: mysql-service
    - name: DB_USER
      value: root
    - name: DB_PASSWORD
      value: password
    ports:
    - containerPort: 3000
      protocol: TCP
  restartPolicy: Always

Once you are happy with the result, you’ll want to bundle the template files into a chart archive file. The chart archive file is a compressed TAR file with the file extension .tgz. The package command evaluates the metadata information from Chart.yaml to derive the chart archive filename:

$ helm package .
Successfully packaged chart and saved it to: /Users/bmuschko/dev/projects/cka-study-guide/ch04/templating-tools/helm/web-app-2.5.4.tgz
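The naming rule visible in the package output, <name>-<version>.tgz, can be sketched with Python's standard tarfile module. This is an illustrative approximation, not Helm's packaging code. It assumes Chart.yaml contains only flat key: value lines (no YAML library is used) and that the archive stores the files under a top-level directory named after the chart. The helpers read_flat_chart and package_chart are hypothetical.

```python
import os
import tarfile
import tempfile


def read_flat_chart(path: str) -> dict:
    """Read top-level 'key: value' lines from Chart.yaml (hypothetical helper).

    Not a YAML parser: blank lines, indented lines, list items, and comments
    are skipped, so only flat attributes such as name and version are read.
    """
    chart = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            if not line.strip() or line[0] in " \t#-":
                continue
            key, _, value = line.partition(":")
            chart[key.strip()] = value.strip().strip("\"'")
    return chart


def package_chart(chart_dir: str, dest_dir: str) -> str:
    """Write <name>-<version>.tgz to dest_dir and return the archive path."""
    chart = read_flat_chart(os.path.join(chart_dir, "Chart.yaml"))
    archive = os.path.join(dest_dir, f"{chart['name']}-{chart['version']}.tgz")
    with tarfile.open(archive, "w:gz") as tar:
        # Assumed layout: all chart files under a directory named after the chart.
        tar.add(chart_dir, arcname=chart["name"])
    return archive


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        chart_dir = os.path.join(tmp, "web-app")
        os.makedirs(os.path.join(chart_dir, "templates"))
        with open(os.path.join(chart_dir, "Chart.yaml"), "w", encoding="utf-8") as f:
            f.write("apiVersion: v2\nname: web-app\nversion: 2.5.4\n")
        dist = os.path.join(tmp, "dist")
        os.makedirs(dist)
        print(os.path.basename(package_chart(chart_dir, dist)))  # web-app-2.5.4.tgz
```

The archive is written to a separate directory so that it never ends up inside the chart directory it is packaging.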

For a full list of commands and typical workflows, refer to the Helm documentation page.

Summary

Resource boundaries are one of the many factors that the kube-scheduler algorithm considers when deciding on which node a Pod can be scheduled. A container can specify resource requests and limits. The scheduler only selects a node whose available capacity can accommodate the sum of the resource requests defined by all containers in the Pod.
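The fit check described above can be approximated in a few lines of Python. This is a simplified sketch, not kube-scheduler's code. It considers only CPU and memory requests, parses a small subset of Kubernetes quantity notation (plain numbers and the m suffix for CPU, the Ki/Mi/Gi suffixes for memory), and treats a node's allocatable capacity minus the requests of Pods already placed there as available. The function names and node figures are hypothetical. Limits only matter here because Kubernetes uses a container's limit as its request when no request is given.

```python
from __future__ import annotations

from decimal import Decimal

# Binary suffixes for memory quantities (a subset of Kubernetes notation).
BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30}


def cpu_millicores(quantity: str) -> int:
    """'1' -> 1000, '2.5' -> 2500, '250m' -> 250."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(Decimal(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """'256Mi' -> 268435456; plain numbers are taken as bytes."""
    for suffix, factor in BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(quantity)


def pod_requests(containers: list[dict]) -> dict:
    """Sum CPU (millicores) and memory (bytes) requests across all containers."""
    total = {"cpu": 0, "memory": 0}
    for container in containers:
        resources = container.get("resources", {})
        requests = resources.get("requests", {})
        limits = resources.get("limits", {})
        for name, parse in (("cpu", cpu_millicores), ("memory", memory_bytes)):
            # Without an explicit request, the limit is used as the request.
            value = requests.get(name, limits.get(name))
            if value is not None:
                total[name] += parse(value)
    return total


def fits(containers: list[dict], allocatable: dict, already_requested: dict) -> bool:
    """True if the node's unrequested capacity covers the Pod's total requests."""
    needed = pod_requests(containers)
    return all(
        needed[r] <= allocatable[r] - already_requested[r] for r in ("cpu", "memory")
    )


containers = [{
    "name": "ingress-controller",
    "resources": {
        "requests": {"memory": "256Mi", "cpu": "1"},
        "limits": {"memory": "1024Mi", "cpu": "2.5"},
    },
}]

# Hypothetical node: 2 CPUs and 4Gi of allocatable memory.
allocatable = {"cpu": cpu_millicores("2"), "memory": memory_bytes("4Gi")}
print(fits(containers, allocatable, {"cpu": 0, "memory": 0}))     # True
print(fits(containers, allocatable, {"cpu": 1500, "memory": 0}))  # False
```

In the second call, only 500 millicores remain unrequested on the node, which is less than the 1 CPU the container requests, so the Pod would not be placed there even though its memory request fits.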

Declarative manifest management is the preferred way of creating, modifying, and deleting objects in real-world, cloud-native projects. The underlying YAML manifests are meant to be checked into version control, which automatically tracks every change made to an object, including its timestamp and the corresponding commit hash. The kubectl apply and kubectl delete commands can perform those operations for one or many YAML manifests.
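When kubectl apply or kubectl delete points to a directory, kubectl picks up the manifest files inside it. The Python sketch below approximates that selection under stated assumptions: only files ending in .yaml, .yml, or .json are considered, they are visited in lexical order, and subdirectories are only entered when the -R (--recursive) flag is set. The helper manifest_files is hypothetical and does not parse the files.

```python
from __future__ import annotations

import os
import tempfile

# Assumed set of extensions kubectl treats as manifests in a directory.
MANIFEST_EXTENSIONS = (".yaml", ".yml", ".json")


def manifest_files(directory: str, recursive: bool = False) -> list[str]:
    """Return manifest paths in lexical order (hypothetical helper)."""
    found = []
    for entry in sorted(os.listdir(directory)):
        path = os.path.join(directory, entry)
        if os.path.isdir(path):
            if recursive:  # mirrors the assumed effect of kubectl's -R flag
                found.extend(manifest_files(path, recursive=True))
        elif entry.endswith(MANIFEST_EXTENSIONS):
            found.append(path)
    return found


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as manifests:
        for name in ("pod.yaml", "configmap.yaml", "README.md"):
            open(os.path.join(manifests, name), "w").close()
        # README.md is skipped because it has no manifest extension.
        print([os.path.basename(p) for p in manifest_files(manifests)])
```

The README.md file is ignored, while configmap.yaml and pod.yaml would both be processed by a single command such as kubectl apply -f pointed at the directory.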

Additional tools emerged for more convenient manifest management. Kustomize is fully integrated with the kubectl tool chain. It helps with the generation, composition, and customization of manifests. Tools with templating capabilities like yq and Helm can further ease various workflows for managing application stacks represented by a set of manifests.
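As an example of customization, the namespace field of a kustomization.yaml file sets a common namespace on the resources Kustomize renders. The following Python sketch models that transformation on manifests already parsed into dictionaries. It is not Kustomize's implementation: the real transformer also updates references such as RoleBinding subjects and knows the full set of cluster-scoped kinds, whereas this code assumes a small hard-coded subset. The helper set_namespace and the namespace dev are hypothetical.

```python
from __future__ import annotations

import copy

# Assumed subset of cluster-scoped kinds that never receive a namespace.
CLUSTER_SCOPED = {
    "Namespace", "Node", "PersistentVolume", "ClusterRole",
    "ClusterRoleBinding", "StorageClass", "CustomResourceDefinition",
}


def set_namespace(resources: list[dict], namespace: str) -> list[dict]:
    """Return copies of the resources with metadata.namespace set where applicable."""
    result = []
    for resource in resources:
        updated = copy.deepcopy(resource)  # leave the input manifests untouched
        if updated.get("kind") not in CLUSTER_SCOPED:
            updated.setdefault("metadata", {})["namespace"] = namespace
        result.append(updated)
    return result


pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "nginx"},
    "spec": {"containers": [{"name": "nginx", "image": "nginx:1.21.1"}]},
}
print(set_namespace([pod], "dev")[0]["metadata"])
# {'name': 'nginx', 'namespace': 'dev'}
```

Copying each manifest before changing it keeps the source files unmodified, which matches how Kustomize renders a transformed result without editing the original resource files.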

Exam Essentials

- Understand the effects of resource boundaries on scheduling

A container defined by a Pod can specify resource requests and limits. Work through scenarios where you define those boundaries individually and together for single- and multi-container Pods. Upon creation of the Pod, you should be able to see the effects on scheduling the object on a node. Furthermore, practice how to identify the available resource capacity of a node.

- Manage objects using the imperative and declarative approach

YAML manifests are essential for expressing the desired state of an object. You will need to understand how to create, update, and delete objects using the kubectl apply and kubectl delete commands. Both commands can point to a single manifest file or a directory containing multiple manifest files.

- Have a high-level understanding of common templating tools

Kustomize, yq, and Helm are established tools for managing YAML manifests. Their templating functionality supports complex scenarios like composing and merging multiple manifests. For the exam, take a practical look at the tools, their functionality, and the problems they solve.

Sample Exercises

Solutions to these exercises are available in the Appendix.

- Write a manifest for a new Pod named ingress-controller with a single container that uses the image bitnami/nginx-ingress-controller:1.0.0. For the container, set the resource request to 256Mi for memory and 1 CPU. Set the resource limits to 1024Mi for memory and 2.5 CPU.

- Using the manifest, schedule the Pod on a cluster with three nodes. Once created, identify the node that runs the Pod. Write the node name to the file node.txt.

- Create the directory named manifests. Within the directory, create two files: pod.yaml and configmap.yaml. The pod.yaml file should define a Pod named nginx with the image nginx:1.21.1. The configmap.yaml file defines a ConfigMap named logs-config with the key-value pair dir=/etc/logs/traffic.log. Create both objects with a single, declarative command.

- Modify the ConfigMap manifest by changing the value of the key dir to /etc/logs/traffic-log.txt. Apply the changes. Delete both objects with a single declarative command.

- Use Kustomize to set a common namespace t012 for the resource file pod.yaml. The file pod.yaml defines the Pod named nginx with the image nginx:1.21.1 without a namespace. Run the Kustomize command that renders the transformed manifest on the console.
