Chapter 4. Dynamic Microservices

After basic microservices are in place, businesses can begin to take advantage of cloud features. A dynamic microservices architecture allows rapid scaling-up or scaling-down, as well as deployment of services across datacenters or across cloud platforms. Resilience mechanisms provided by the cloud platform or built into the microservices themselves allow for self-healing systems. Finally, networks and datacenters become software defined, providing businesses with even more flexibility and agility for rapidly deploying applications and infrastructure.

Scale with Dynamic Microservices

In the earlier stages of cloud-native evolution, capacity management was about ensuring that each virtual server had enough memory, CPU, storage, and so on. Autoscaling allows a business to scale the storage, network, and compute resources used (e.g., by launching or shutting down instances) based on customizable conditions.

However, autoscaling at the cloud-instance level is slow, too slow for a microservices architecture in which dynamic scaling needs to occur within minutes or seconds. In a dynamic microservices environment, rather than scaling cloud instances, autoscaling occurs at the microservice level. For example, a low-traffic service might run only two instances and be scaled up to seven instances at peak load. After the peak, the challenge is to scale the service down again; for example, back down to two running instances.
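As a rough illustration of scaling at the microservice level, the following sketch maps an observed request rate to an instance count and clamps it between a floor and a ceiling. The function name, the per-instance capacity of 100 requests per second, and the bounds of two and seven instances (taken from the example above) are assumptions for illustration, not values from any particular platform.

```python
import math


def desired_instances(requests_per_sec: float,
                      capacity_per_instance: float = 100.0,
                      min_instances: int = 2,
                      max_instances: int = 7) -> int:
    """Return how many instances of a service should run for the given load.

    All parameters are hypothetical; a real platform would derive them
    from configuration and measured capacity.
    """
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    # Instances needed to serve the load, rounded up.
    needed = math.ceil(requests_per_sec / capacity_per_instance)
    # Never drop below the floor or exceed the ceiling.
    return max(min_instances, min(max_instances, needed))
```

Because the same function is used for scaling up and scaling down, the service returns to the floor of two instances as soon as the load drops; a production scaler would usually add a cooldown period to avoid flapping.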

In a monolithic application, there is little need for orchestration and scheduling. However, as the application is split into separate services, and those services are deployed dynamically and at scale, potentially across multiple datacenters and cloud platforms, it is no longer possible to hardwire connections between the various services that make up the application. Microservices allow businesses to scale their applications rapidly, but as the architecture becomes more complex, scheduling and orchestration increase in importance.

Service Discovery and Orchestration

Service discovery is a prerequisite of a scalable microservices environment because it lets microservices avoid hardwired connections; instead, instances of services are discovered at runtime. A service registry keeps track of active instances of particular services; distributed key-value stores such as etcd and Consul are frequently used for this purpose.
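To make the idea of a service registry concrete, here is a minimal in-memory sketch. It is not the etcd or Consul API; the class name, method names, and the heartbeat-with-expiry scheme are assumptions chosen for illustration. Instances register themselves, refresh their entry with heartbeats, and drop out of lookups once their entry is older than a time-to-live.

```python
import time
from typing import Callable, Dict, List, Tuple


class ServiceRegistry:
    """Hypothetical in-memory registry; real systems use a distributed store."""

    def __init__(self, ttl_seconds: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        # (service name, address) -> time of the last heartbeat
        self._last_seen: Dict[Tuple[str, str], float] = {}

    def register(self, service: str, address: str) -> None:
        """Add an instance, or refresh it if it is already known."""
        self._last_seen[(service, address)] = self._clock()

    # A heartbeat simply refreshes the registration timestamp.
    heartbeat = register

    def deregister(self, service: str, address: str) -> None:
        """Remove an instance explicitly, for example on clean shutdown."""
        self._last_seen.pop((service, address), None)

    def lookup(self, service: str) -> List[str]:
        """Return addresses of instances whose heartbeat has not expired."""
        now = self._clock()
        return sorted(
            address
            for (name, address), seen in self._last_seen.items()
            if name == service and now - seen <= self._ttl
        )
```

A caller resolves `registry.lookup("cart")` at request time instead of reading a fixed address from configuration, which is what removes the hardwired connection.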

As services move into containers, container orchestration tools such as Kubernetes coordinate how services are arranged and managed at the container level. Orchestration tools often provide built-in service automation and registration services. An API Gateway can be deployed to unify individual microservices into customized APIs for each client. The API Gateway discovers the available services via service discovery and is also responsible for security features; for example, HTTP throttling, caching, filtering wrong methods, authentication, and so on.
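One of the gateway responsibilities mentioned above, HTTP throttling, is often implemented with a token bucket. The sketch below is a generic version of that technique, not the API of any specific gateway product; the class name and parameters are hypothetical.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `burst` requests, refilled at `rate_per_sec`."""

    def __init__(self, rate_per_sec: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._rate = rate_per_sec
        self._capacity = float(burst)
        self._tokens = float(burst)  # start with a full bucket
        self._clock = clock
        self._last = clock()

    def allow(self) -> bool:
        """Return True if the request may pass, False if it is throttled."""
        now = self._clock()
        # Refill in proportion to elapsed time, capped at the bucket size.
        self._tokens = min(self._capacity,
                           self._tokens + (now - self._last) * self._rate)
        self._last = now
        if self._tokens >= 1.0:
            self._tokens -= 1.0
            return True
        return False
```

A gateway would typically keep one bucket per client or API key and answer with HTTP status 429 when `allow()` returns False.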

Just as Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Containers as a Service (CaaS) provide increasing layers of abstraction over physical servers, the idea of liquid infrastructure applies virtualization to the infrastructure. Physical infrastructure like datacenters and networks is abstracted by means of Software-Defined Datacenters (SDDCs) and Software-Defined Networks (SDNs), to enable truly scalable environments.

Health Management

The increased complexity of the infrastructure means that microservice platforms need to be smarter about dealing with (and preferably, avoiding) failures, and ensuring that there is enough redundancy and that they remain resilient.

Fault tolerance is the ability of the application to continue operating after a failure of one or more components. However, it is better to avoid failures by detecting unhealthy instances and shutting them down before they fail. Thus, the importance of monitoring and health management rises in a dynamic microservices environment.
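A simple way to detect unhealthy instances is to count consecutive failed health checks and flag an instance once it crosses a threshold. The sketch below assumes some external prober reports each check result; the class name, method names, and the threshold of three are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List


class HealthTracker:
    """Flag instances after a run of consecutive failed health checks."""

    def __init__(self, max_consecutive_failures: int = 3) -> None:
        self._limit = max_consecutive_failures
        self._failures: Dict[str, int] = defaultdict(int)

    def record(self, instance: str, healthy: bool) -> None:
        """Store the outcome of one health check for an instance."""
        if healthy:
            # A single successful check resets the failure streak.
            self._failures[instance] = 0
        else:
            self._failures[instance] += 1

    def unhealthy(self) -> List[str]:
        """Return instances that should be replaced or shut down."""
        return sorted(name for name, count in self._failures.items()
                      if count >= self._limit)
```

Requiring several consecutive failures keeps a single slow response from triggering a restart, at the cost of a slightly longer detection time.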

Monitoring

In highly dynamic cloud environments, nothing is static anymore. Everything moves, scales up or down depending on the load at any given moment, and eventually dies, all at the same time. In addition to the platform orchestration and scheduling layer, services often come with their own built-in resiliency (e.g., Netflix OSS circuit breaker). Every service might have different versions running because they are released independently. And every version usually runs in a distributed environment. Hence, monitoring solutions for cloud environments must be dynamic and intelligent, and include the following characteristics:

- Autodiscovery and instrumentation

In these advanced scenarios, static monitoring is futile—you will never be able to keep up! Rather, monitoring systems need to discover and identify new services automatically as well as inject their monitoring agents on the fly.

- System health management

Advanced monitoring solutions become system health management tools, which go far beyond the detection of problems within an individual service or container. They are capable of identifying dependencies and incompatibilities between services. This requires transaction-aware data collection for which metrics from multiple ephemeral and moving services can be mapped to a particular transaction or user action.

- Artificial intelligence

Machine learning approaches are required to distinguish, for example, a killed container as a routine load balancing measure from a state change that actually affects a real user. All this requires a tight integration with individual cloud technologies and all application components.

- Predictive monitoring

The future will bring monitoring solutions that will be able to predict upcoming resource bottlenecks based on empirical evidence and make suggestions on how to improve applications and architectures. Moving toward a closed-loop feedback system, monitoring data will be used as input to the cloud orchestration and scheduling mechanisms and allow a new level of dynamic control based on the health and constraints of the entire system.
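The transaction-aware data collection described under system health management can be sketched as grouping measurements by a shared transaction ID. This assumes every service attaches such an ID to what it reports; the record layout and function names are hypothetical and do not reflect any specific monitoring product.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, NamedTuple


class Measurement(NamedTuple):
    transaction_id: str   # hypothetical ID propagated with each request
    service: str
    instance: str         # ephemeral container or instance name
    duration_ms: float


def group_by_transaction(
        measurements: Iterable[Measurement]) -> Dict[str, List[Measurement]]:
    """Map each transaction ID to the measurements reported for it."""
    grouped: Dict[str, List[Measurement]] = defaultdict(list)
    for m in measurements:
        grouped[m.transaction_id].append(m)
    return dict(grouped)


def services_involved(measurements: Iterable[Measurement]) -> List[str]:
    """List the distinct services that took part in a transaction."""
    return sorted({m.service for m in measurements})


def total_duration_ms(measurements: Iterable[Measurement]) -> float:
    """Sum the durations; this assumes the calls ran one after another."""
    return sum(m.duration_ms for m in measurements)
```

Because the grouping key is the transaction rather than the instance, the result stays meaningful even when the containers that served the request no longer exist.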

Enabling Technologies

As the environment becomes more dynamic, the way that services are scaled also needs to become more dynamic to match.

Load Balancing, Autoscaling, and Health Management

Dynamic load balancing involves monitoring the system in real time and distributing work to nodes in response. With traditional static load balancing, after work has been assigned to a node, it can’t be redistributed, regardless of whether the performance of that node or availability of other nodes changes over time. Dynamic load balancing helps to address this limitation, leading to better performance; however, the downside is that it is more complex.
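One common dynamic strategy is to send each new request to the node with the fewest requests currently in flight. The following sketch shows that idea under simplifying assumptions: a single balancer process, node names as plain strings, and hypothetical class and method names.

```python
from typing import Dict, Iterable


class LeastLoadedBalancer:
    """Pick the node with the fewest in-flight requests."""

    def __init__(self, nodes: Iterable[str]) -> None:
        self._in_flight: Dict[str, int] = {node: 0 for node in nodes}
        if not self._in_flight:
            raise ValueError("at least one node is required")

    def add_node(self, node: str) -> None:
        """Make a newly started node available for work."""
        self._in_flight.setdefault(node, 0)

    def remove_node(self, node: str) -> None:
        """Stop sending work to a node that has gone away."""
        self._in_flight.pop(node, None)

    def acquire(self) -> str:
        """Choose a node for a new request and count it as in flight."""
        if not self._in_flight:
            raise RuntimeError("no nodes available")
        # Ties are broken by node name so the choice is deterministic.
        node = min(self._in_flight, key=lambda n: (self._in_flight[n], n))
        self._in_flight[node] += 1
        return node

    def release(self, node: str) -> None:
        """Mark one request on the node as finished."""
        if self._in_flight.get(node, 0) > 0:
            self._in_flight[node] -= 1
```

Unlike a static assignment, the decision is made per request from current state, so a node added at peak load starts receiving work immediately.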

Autoscaling based on metrics such as CPU, memory, and network doesn’t work for transactional apps because these often depend on third-party services, service calls, or databases, with transactions that belong to a session and usually have state in shared storage. Instead, scaling based on the current and predicted load within a given timeframe is required.
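Scaling on current and predicted load can be approximated with a very simple forecast. The sketch below extrapolates the recent trend linearly and sizes the service for whichever is higher, the current or the predicted load. The linear model, the function names, and the parameters are assumptions for illustration; real systems typically use richer forecasting.

```python
import math
from typing import Sequence


def predict_load(samples: Sequence[float], steps_ahead: int = 1) -> float:
    """Extrapolate the average slope of recent load samples."""
    if not samples:
        raise ValueError("at least one sample is required")
    if len(samples) == 1:
        return float(samples[0])
    # Average change per sampling interval across the window.
    slope = (samples[-1] - samples[0]) / (len(samples) - 1)
    # Load cannot be negative.
    return max(0.0, samples[-1] + slope * steps_ahead)


def instances_for(samples: Sequence[float],
                  capacity_per_instance: float,
                  steps_ahead: int = 1) -> int:
    """Size for the larger of the current and the predicted load."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    load = max(samples[-1], predict_load(samples, steps_ahead))
    return max(1, math.ceil(load / capacity_per_instance))
```

Sizing for the maximum of the two values means a falling trend never causes the service to scale below what the current load needs.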

The underlying platform typically enables health management for deployed microservices. Based on these health checks, you can apply failover mechanisms for failing service instances (i.e., containers), and so the platform allows for running “self-healing systems.” Beyond platform health management capabilities, the microservices might also come with built-in resilience. For instance, a microservice might implement the Netflix OSS components (open source libraries and frameworks for building microservices at scale) to automate scaling cloud instances and react to potential service outages. The Hystrix fault-tolerance library enables built-in “circuit breakers” that trip when failures reach a threshold. The Hystrix circuit breaker makes service calls more resilient by keeping track of each endpoint’s status. If Hystrix detects timeouts, it reports that the service is unavailable, so that subsequent requests don’t run into the same timeouts, thus preventing cascading failures across the complete microservice environment.
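The circuit breaker pattern can be sketched in a few lines. The code below is a generic illustration of the pattern, not Hystrix itself (Hystrix is a Java library with its own API); the class name, threshold, and reset timeout are hypothetical. After a number of consecutive failures the breaker opens and rejects calls immediately, then lets a trial call through once a timeout has passed.

```python
import time
from typing import Any, Callable, Optional


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    def __init__(self, failure_threshold: int = 3,
                 reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._threshold = failure_threshold
        self._reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def is_open(self) -> bool:
        return self._opened_at is not None

    def call(self, func: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_timeout:
                # Fail fast instead of waiting for another timeout.
                raise CircuitOpenError("circuit open; call rejected")
            # Timeout elapsed: fall through and allow one trial call.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._failures += 1
            # Open on reaching the threshold, or reopen after a failed trial.
            if self._opened_at is not None or self._failures >= self._threshold:
                self._opened_at = self._clock()
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Rejecting calls while the circuit is open is what prevents callers from piling up behind a slow dependency, which is how the pattern limits cascading failures.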

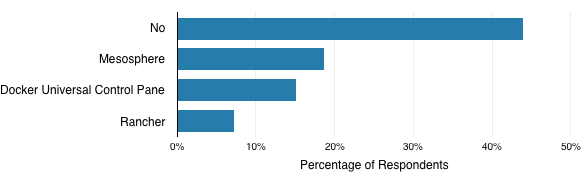

Container Management

Container management tools assist with managing containerized apps deployed across environments (Figure 4-1). Of the 139 respondents to this question in the Cloud Platform Survey, 44 percent don’t use a container management layer. The most widely adopted management layer technologies were Mesosphere (19 percent of respondents to this question) and Docker Universal Control Plane (15 percent). Rancher was also used. Let’s take a look at these tools:

- Mesosphere

Mesosphere is a datacenter-scale operating system that uses the Marathon orchestrator. It also supports Kubernetes or Docker Swarm.

- Docker Universal Control Plane (UCP)

This is Docker’s commercial cluster management solution built on top of Docker Swarm.

- Rancher

Rancher is an open source platform for managing containers, supporting Kubernetes, Mesos, or Docker Swarm.

Figure 4-1. Top container management layer technologies

SDN

Traditional physical networks are not agile. Scalable cloud applications need to be able to provision and orchestrate networks on demand, just like they can provision compute resources like servers and storage. Dynamically created instances, services, and physical nodes need to be able to communicate with one another, applying security restrictions and network isolation dynamically on a workload level. This is the premise of SDN: with SDN, the network is abstracted and programmable, so it can be dynamically adjusted in real time.

Hybrid SDN allows traditional networks and SDN technologies to operate within the same environment. For example, the OpenFlow standard allows hybrid switches: an SDN controller makes forwarding decisions for some traffic (e.g., matching a filter for certain types of packets only) and the rest is handled via traditional switching.

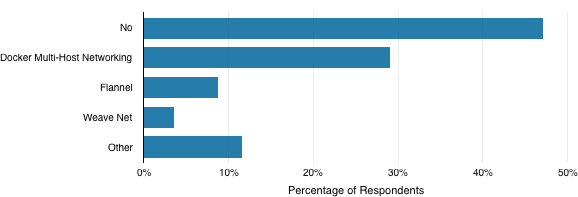

Forty-seven percent of 138 survey respondents to this question are not using SDNs (Figure 4-2). Most of the SDN technologies used by survey respondents support connecting containers across multiple hosts.

Figure 4-2. SDN Adoption

Docker’s Multi-Host Networking was officially released with Docker 1.9 in November 2015. It is based on SocketPlane’s SDN technology. Docker’s original address mapping functionality was very rudimentary and did not support connecting containers across multiple hosts, so other solutions including WeaveNet, Flannel, and Project Calico were developed in the interim to address its limitations. Despite its relative newness compared to the other options, Docker Multi-Host Networking was the most popular SDN technology in use by respondents to the Cloud Platform Survey (Figure 4-2)—29 percent of the respondents to this question are using it. Docker Multi-Host Networking creates an overlay network to connect containers running on multiple hosts. The overlay network is created by using the Virtual Extensible LAN (VXLAN) encapsulation protocol.

A distributed key-value store (i.e., a store that allows data to be shared across a cluster of machines) is typically used to keep track of the network state, including endpoints and IP addresses, for multihost networks. For example, Docker’s Multi-Host Networking supports using Consul, etcd, or ZooKeeper for this purpose.

Flannel (previously known as Rudder) is also designed for connecting Linux-based containers. It is compatible with CoreOS (for SDN between VMs) as well as Docker containers. Similar to Docker Multi-Host Networking, Flannel uses a distributed key-value store (etcd) to record the mappings between addresses assigned to containers by their hosts, and addresses on the overlay network. Flannel supports VXLAN overlay networks, but also provides the option to use a UDP backend to encapsulate the packets as well as host-gw, and drivers for AWS and GCE. The VXLAN mode of operation is the fastest option because of the Linux kernel’s built-in support for VXLAN and support of NIC drivers for segmentation offload.

Weave Net works with Docker, Kubernetes, Amazon ECS, Mesos and Marathon. Orchestration solutions like Kubernetes rely on each container in a cluster having a unique IP address. So, with Weave, like Flannel, each container has an IP address, and isolation is supported through subnets. Unlike Docker Networking, Flannel, and Calico, Weave Net does not require a cluster store like etcd when using the weavemesh driver. Weave runs a micro-DNS server at each node to allow service discovery.

Another SDN technology that some survey participants use is Project Calico. It differs from the other solutions in that it is a pure Layer 3 (i.e., Network layer) approach. It can be used with any kind of workload: containers, VMs, or bare metal. It aims to be simpler and to have better performance than SDN approaches that rely on overlay networks. Overlay networks use encapsulation protocols, and in complex environments there might be multiple levels of packet encapsulation and network address translation. This introduces computing overhead for de-encapsulation and leaves less room for data per network packet because the encapsulation headers take up several bytes per packet. For example, encapsulating a Layer 2 (Data Link Layer) frame in UDP uses an additional 50 bytes. To avoid this overhead, Calico uses flat IP networking with virtual routers in each node, and uses the Border Gateway Protocol (BGP) to advertise the routes to the containers or VMs on each host. Calico allows for policy-based networking, so that you can group containers into schemas for isolation purposes, providing a more flexible approach than the CIDR isolation supported by Weave, Flannel, and Docker Networking, with which containers can be isolated only based on their IP address subnets.
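The 50-byte figure can be reproduced with simple arithmetic. The breakdown below assumes VXLAN encapsulation with an untagged outer Ethernet header and an IPv4 outer header; other encapsulations, VLAN tags, or IPv6 would give different totals. The function names are hypothetical.

```python
# Header sizes in bytes for one level of VXLAN encapsulation
# (assumption: untagged outer Ethernet, IPv4 outer header).
HEADERS = {
    "outer Ethernet": 14,
    "outer IPv4": 20,
    "outer UDP": 8,
    "VXLAN": 8,
}

VXLAN_OVERHEAD = sum(HEADERS.values())


def wire_bytes(inner_frame_bytes: int) -> int:
    """Bytes sent on the physical network for one encapsulated frame."""
    return inner_frame_bytes + VXLAN_OVERHEAD


def overhead_fraction(inner_frame_bytes: int) -> float:
    """Share of the transmitted bytes that is encapsulation overhead."""
    return VXLAN_OVERHEAD / wire_bytes(inner_frame_bytes)
```

The overhead is fixed per packet, so its relative cost is highest for small frames: `overhead_fraction(100)` is one third, whereas for a 1,400-byte frame it is under four percent.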

SDDC

An SDDC is a dynamic and elastic datacenter, for which all of the infrastructure is virtualized and available as a service. The key concepts of the SDDC are server virtualization (i.e., compute), storage virtualization, and network virtualization (through SDNs). The end result is a truly “liquid infrastructure” in which all aspects of the SDDC can be automated; for example, for load-based datacenter scaling.

Conclusion

Service discovery, orchestration, and a liquid infrastructure are the backbone of a scalable, dynamic microservices architecture.

- For cloud-native applications, everything is virtualized, including the compute, storage, and network infrastructure.

- As the environment becomes too complex to manage manually, it becomes increasingly important to take advantage of automated tools and management layers to perform health management and monitoring to maintain a resilient, dynamic system.

Case Study: YaaS—Hybris as a Service

Hybris, a subsidiary of SAP, offers one of the industry’s leading ecommerce, customer engagement, and product content management systems. The existing Hybris Commerce Suite is the workhorse of the company. However, management realized that future ecommerce solutions needed to be more scalable, faster in implementing innovations, and more customer centered.

In early 2015, Brian Walker, Hybris and SAP chief strategy officer, introduced YaaS, or Hybris-as-a-Service.1 In a nutshell, YaaS is a microservices marketplace in the public cloud where a consumer (typically a retailer) can subscribe to individual capabilities like the product catalog or the checkout process, with billing based on actual usage. For SAP developers, on the other hand, it is a platform for publishing their own microservices.

The development of YaaS was driven by a vision with four core elements:

- Cloud first

Scaling is a priority.

- Retain development speed

Adding new features should not become increasingly difficult; the same should hold true with testing and maintenance.

- Autonomy

Reduce dependencies in the code and dependencies between teams.

- Community

Share extensions within our development community.

A core team of about 50 engineers is in charge of developing YaaS. In addition, a number of globally distributed teams are responsible for developing and operating individual microservices. Key approaches and challenges during the development and operation of the YaaS microservices include the following:

- Technology stack

YaaS uses a standard IaaS and Cloud Foundry as PaaS. The microservices on top can include any technologies the individual development teams choose, as long as the services run on the given platform and expose a RESTful API. This is the perfect architecture for process improvements and for scaling services individually. It enables high-speed feature delivery and independence of development teams.

- Autonomous teams and ownership

The teams are radically independent from one another and choose their own technologies, including programming languages. They are responsible for their own code, deployment, and operations. A microservice team picks its own CI/CD pipeline, whether it is Jenkins, Bamboo, or TeamCity. Configuring dynamic scaling as well as built-in resilience measures (like Netflix OSS technologies) also falls into the purview of the development teams. They are fully responsible for balancing performance versus cost.

- Time-to-market

This radical decoupling of both the microservices themselves and the teams creating them dramatically increased the speed of innovation and shortened time-to-market. It takes only a couple of days to go from feature idea, to code, to deployment. Only the nonfunctional aspects like documentation and security controls take a bit longer.

- Managing independent teams

Rather than long and frequent meetings (like scrum of scrums) the teams are managed by objectives and key results (OKR). The core team organized a kick-off meeting and presented five top-level development goals. Then, the microservice teams took two weeks to define the scope of their services and created a roadmap. When that was accepted, the teams worked on their own until the next follow-up meeting half a year later. Every six weeks all stakeholders and interested parties are invited to a combined demo, to see the overall progress.

- Challenges

The main challenge in the beginning was to slim down the scope of each microservice. Because most engineers came from the world of big monoliths, the first microservices were hopelessly over-engineered and too broad in scope. Such services would not scale, and it took a while to establish a new mindset. Another challenge was to define a common API standard. It took more than 100 hours of tedious discussions to gain consensus on response codes and best practices, but it was time well spent.

Key Takeaways

The digital economy is about efficiency in software architecture.2 A dynamically scalable microservice architecture goes hand in hand with radical organizational changes toward autonomous teams who own a service end to end.
