Chapter 1. Introduction

The Journey Begins

My journey with big data began at Oracle, led me to Facebook, and, finally, to founding Qubole. It’s been an exciting and informative ride, full of learnings and epiphanies. But two early “ah-ha” moments in particular stand out. Both occurred at Facebook. One was that users were eager to get their hands on data directly, without going through the data engineers on the data team. The second was how powerful data could be in the hands of the people.

I joined Facebook in August 2007 as part of the data team. It was a new group, set up in the traditional way for that time. The data infrastructure team supported a small group of data professionals who were called upon whenever anyone needed to access or analyze data located in a traditional data warehouse. As was typical in those days, anyone in the company who wanted to get data beyond some small and curated summaries stored in the data warehouse had to come to the data team and make a request. Our data team was excellent, but it could only work so fast: it was a clear bottleneck.

I was delighted to find a former classmate from my undergraduate days at the Indian Institute of Technology already at Facebook. Joydeep Sen Sarma had been hired just a month previously. Our team’s charter was simple: to make Facebook’s rich trove of data more available.

Our initial challenge was that we had a nonscalable infrastructure that had hit its limits. So, our first step was to experiment with Hadoop. Joydeep created the first Hadoop cluster at Facebook and the first set of jobs, populating the first datasets to be consumed by other engineers—application logs collected using Scribe and application data stored in MySQL.

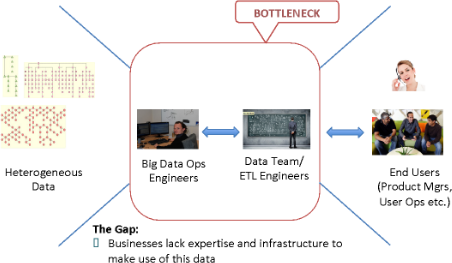

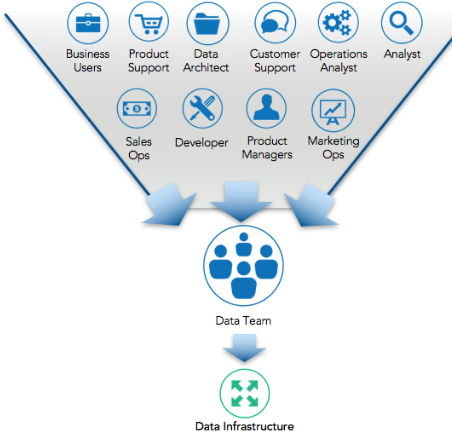

But Hadoop wasn’t (and still isn’t) particularly user friendly, even for engineers. Gartner found that even today, largely because people with adequate Hadoop skills are so hard to find, more than half of businesses (54 percent) have no plans to invest in it.[1] It was, and is, a challenging environment. We found that the productivity of our engineers suffered. The bottleneck of data requests persisted (see Figure 1-1).

Figure 1-1. Human bottlenecks (source: Qubole)

SQL, on the other hand, was widely used by both engineers and analysts, and was powerful enough for most analytics requirements. So Joydeep and I decided to make the programmability of Hadoop available to everyone. Our idea: to create a SQL-based declarative language that would allow engineers to plug in their own scripts and programs when SQL wasn’t adequate. In addition, it was built to store all of the metadata about Hadoop-based datasets in one place. This latter feature was important because it turned out to be indispensable for creating the data-driven company that Facebook subsequently became.

That language, of course, was Hive, and the rest is history. Still, the idea was very new to us. We had no idea whether it would succeed. But it did. The data team immediately became more productive. The bottleneck eased. But then something happened that surprised us.

In January of 2008, when we released the first version of Hive internally at Facebook, a rush of employees—data scientists and engineers—grabbed the interfaces for themselves. They began to access the data they needed directly. They didn’t bother to request help from the data team. With Hive, we had inadvertently brought the power of big data to the people. We immediately saw tremendous opportunities in completely democratizing data. That was our first “ah-ha!”

One of the things driving employees to Hive was that at that same time (January 2008) Facebook released its Ad product.

Over the course of the next six months, a number of employees began to use the system heavily. Although the initial use case for Hive and Hadoop centered around summarizing and analyzing clickstream data for the launch of the Facebook Ad program, Hive quickly began to be used by product teams and data scientists for a number of other projects. In addition, when we first talked about Hive, at the first Hadoop Summit, we immediately realized its tremendous potential beyond what Facebook was doing with it.

With this, we had our second “ah-ha”—that by making data more universally accessible within the company, we could actually disrupt our entire industry. Data in the hands of the people was that powerful. As an aside, some time later we saw another example of what happens when you make data universally available.

Facebook used to have “hackathons,” where everyone in the company stayed up all night, ordered pizza and beer, and coded into the wee hours with the goal of coming up with something interesting. One intern—Paul Butler—came up with a spectacular idea. He performed analyses using Hadoop and Hive and mapped out how Facebook users were interacting with each other all over the world. By drawing the interactions between people and their locations, he developed a global map of Facebook’s reach. Astonishingly, it mapped out all continents and even some individual countries.

In Paul’s own words:

When I shared the image with others within Facebook, it resonated with many people. It’s not just a pretty picture, it’s a reaffirmation of the impact we have in connecting people, even across oceans and borders.

To me, this was nothing short of amazing. By using data, this intern came up with an incredibly creative idea, incredibly quickly. It could never have happened in the old world when a data team was needed to fulfill all requests for data.

Data was clearly too important to be kept under lock and key, accessible only to data engineers. We were on our way to turning Facebook into a data-driven company.

The Emergence of the Data-Driven Organization


Let’s discuss why data is important, and what a data-driven organization is. First and foremost, a data-driven organization is one that understands the importance of data. It possesses a culture of using data to make all business decisions. Note the word all. In a data-driven organization, no one comes to a meeting armed only with hunches or intuition. The person with the superior title or largest salary doesn’t win the discussion. Facts do. Numbers. Quantitative analyses. Stuff backed up by data.

Why become a data-driven company? Because it pays off. The MIT Center for Digital Business asked 330 companies about their data analytics and business decision-making processes. It found that the more companies characterized themselves as data-driven, the better they performed on objective measures of financial and operational success.[2]

Specifically, companies in the top third of their industries when it came to making data-driven decisions were, on average, five percent more productive and six percent more profitable than their competitors. This performance difference remained even after accounting for labor, capital, purchased services, and traditional IT investments. It was also statistically significant and reflected in increased stock market prices that could be objectively measured.

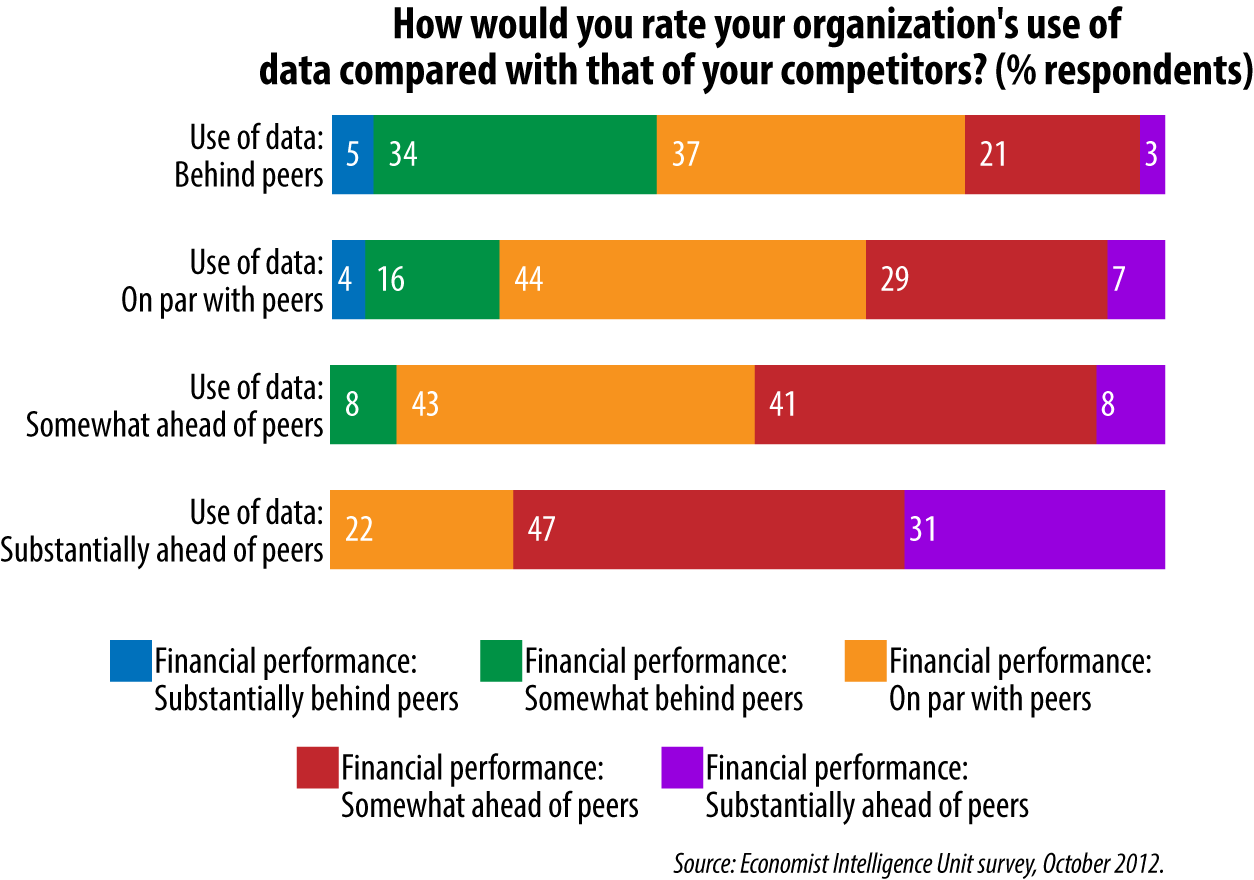

Another survey, by The Economist Intelligence Unit, showed a clear connection between how a company uses data and its financial success. Only 11 percent of companies said that their organization makes “substantially” better use of data than their peers. Yet more than a third of this group fell into the category of “top performing companies.”[3] The reverse also points to the relationship between data and financial success: of the 17 percent of companies that said they “lagged” their peers in taking advantage of data, not one was a top-performing business.

Figure 1-2. Rating an organization’s use of data (data from Economist Intelligence Unit survey, October 2012)

Another Economist Intelligence Unit survey found that 70 percent of senior business executives said analyzing data for sales and marketing decisions is already “very” or “extremely important” to their company’s competitive advantage. A full 89 percent of respondents expect this to be the case within two years.[4]

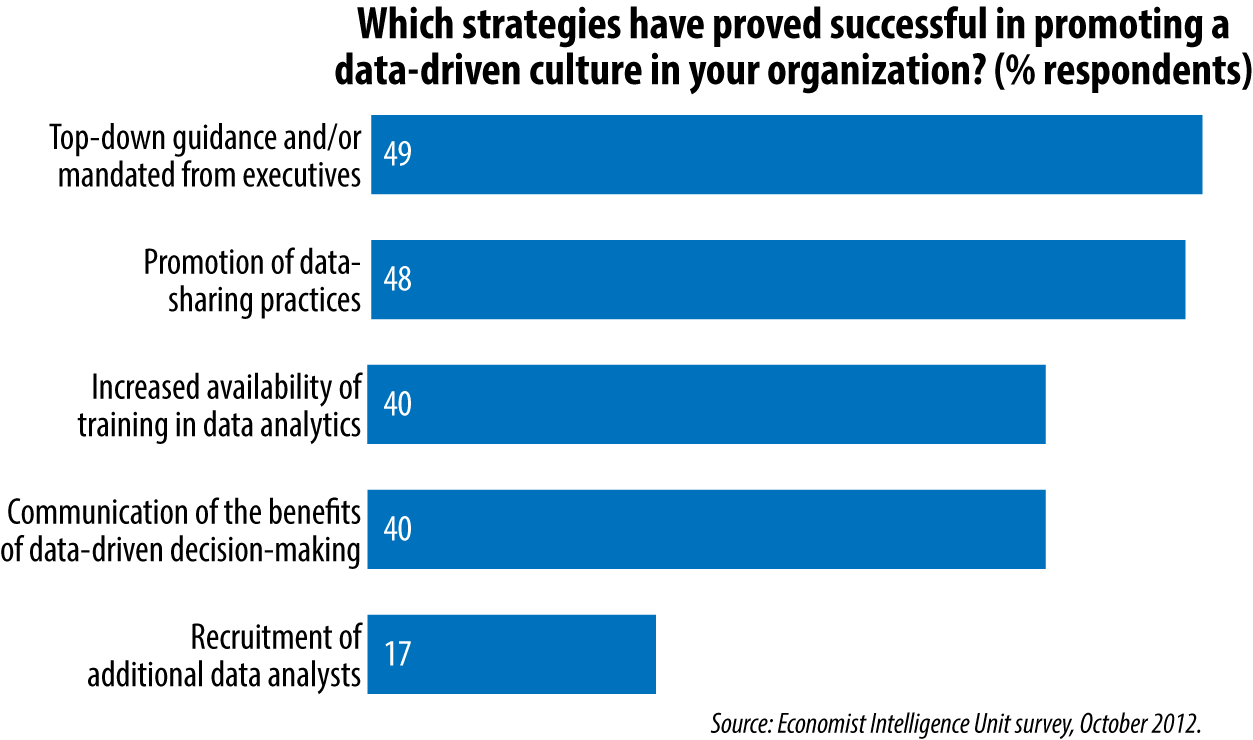

According to the aforementioned MIT report, 50 percent of “above-average” performing businesses said they had become data-driven companies by promoting data sharing. More than half (57 percent) said that becoming a data-driven company was driven by top-down mandates from the highest level. And an eye-opening 84 percent of executives surveyed said they believe that “most to all” of their employees, not just IT workers or data scientists and analysts, should use data analysis to help them perform their job duties.[5]

Figure 1-3. Successful strategies for promoting a data-driven culture (data from Economist Intelligence Unit survey, October 2012)

But how do you become a data-driven company? That is something this book will address in later chapters. For now, according to a Harvard Business Review article written by McKinsey executives, being a data-driven company requires simultaneously undertaking three interdependent initiatives[6]:

- Identify, combine, and manage multiple sources of data

You might already have all the data you need. Or you might need to be creative to find other sources for it. Either way, you need to eliminate silos of data while constantly seeking out new sources to inform your decision-making. And it’s critical to remember that when mining data for insights, demanding data from different and independent sources leads to much better decisions. Today, both the sources and the amount of data you can collect have increased by orders of magnitude. It’s a connected world, given all the transactions, interactions, and, increasingly, sensors that are generating data. The companies that combine multiple independent sources get better insight and are in much better shape, financially and operationally.

- Build advanced analytics models for predicting and optimizing outcomes

The most effective approach is to identify a business opportunity and determine how the model can achieve it. In other words, you don’t start with the data—at least at first—but with a problem.

- Transform the organization and culture of the company so that data actually produces better business decisions

Many big data initiatives fail because they aren’t in sync with a company’s day-to-day processes and decision-making habits. Data professionals must understand what decisions their business users make, and give users the tools they need to make those decisions. (More on this in Chapter 5.)

So, why are we hearing about the failure of so many big data initiatives? One PricewaterhouseCoopers study found that only four percent of companies with big data initiatives consider them successful. Almost half (43 percent) of companies “obtain little tangible benefit from their information,” and 23 percent “derive no benefit whatsoever.”[7] Sobering statistics.

It turns out that despite the benefits of a data-driven culture, creating one can be difficult. It requires a major shift in the thinking and business practices of all employees at an organization. Any bottlenecks between the employees who need data and the keepers of data must be completely eliminated. This is probably why only two percent of companies in the MIT report believe that attempts to transform their companies using data have had a “broad, positive impact.”[8]

Indeed, one of the reasons that we were so quickly able to move to a data-driven environment at Facebook was the company culture. It is very empowering, and everyone is encouraged to innovate when seeking ways to do their jobs better. As Joydeep and I began building Hive, and as it became popular, we transitioned to being a new kind of company. It was actually easy for us, because of the culture. We talk more about that in Chapter 3.

Moving to Self-Service Data Access

After we released Hive, the genie was out of the bottle. The company was on fire. Everyone wanted to run their own queries and analyses on Facebook data.

In just six months, we had fulfilled our initial charter, to make data more easily available to the data team. By March 2008, we were given the official mandate to make data accessible to everyone in the company. Suddenly, we had a new problem: keeping the infrastructure up and available, and scaling it to meet the demands of hundreds of employees (which would over the next few years become thousands). So, making sure everyone had their fair share of the company’s data infrastructure quickly became our number-one challenge.

That’s when we realized that data delayed is data denied. Opportunities slip by quickly. Not being able to leap immediately onto a trend and ride it to business success could hurt the company directly.

We had taken the first steps toward self-service data access. Now we needed an infrastructure that could support self-service access at scale: a self-service data infrastructure. Instead of simply building infrastructure for the data team, we had to think about how to build infrastructure that could fairly share the resources across different teams, and could do so in a way that was controlled and easily auditable. We also had to make sure that this infrastructure could be built incrementally so that we could add capacity as dictated by the demands of the users.

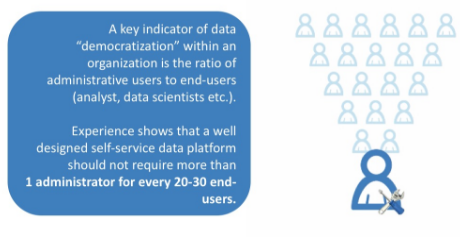

As Figure 1-4 illustrates, moving from manual infrastructure-provisioning processes (which create the same bottlenecks that occurred with the old model of data access) to a self-service model gives employees a much faster response to their data-access needs at a much lower operating cost. Think about it: just as you had the data team positioned between the employees and the data, now you had the same wall between employees and infrastructure. Having theoretical access to data did employees no good when they had to go to the data team to request infrastructure resources every time they wanted to query the data.

Figure 1-4. User-to-admin ratio

The absence of such capabilities in the data infrastructure caused delays. And it hurt the business. Employees often needed fast iterations on queries to make their creative ideas come to fruition. All too often, a great idea is a fast idea: it must be seized in a moment.

An infrastructure that does not support fair sharing also creates friction between prototype projects and production projects. Prototype-stage projects need agility and flexibility. Production projects, on the other hand, need stability and predictability. A common infrastructure must support both of these diametrically opposite requirements. Reconciling them was one of the biggest challenges in coming up with mechanisms to promote a shared infrastructure that could support both ad hoc (prototyping or data exploration) self-service data access and production self-service data access.

Giving data access to everyone, even those who had no data training, was our goal. An additional aspect of an infrastructure that supports self-service data access is how well the tools that employees already know integrate with it. An employee’s tools need to talk directly to the compute grid. If access to infrastructure is controlled by a specialized central team, you’re effectively going back to your old model (Figure 1-5).

Figure 1-5. Reality of data access for a typical enterprise (source: Qubole)

The lesson learned: to truly democratize data, you need to transform both data access tools and infrastructure provisioning to a self-service model.

But this isn’t just a matter of putting the right technology in place. Your company also needs to make a massive cultural shift. Collaboration must exist between data engineers, scientists, and analysts. You need to adopt the kind of culture that allows your employees to iterate rapidly when refining their data-driven ideas.

You need to create a DataOps culture.

The Emergence of DataOps

Once upon a time, corporate developers and IT operations professionals worked separately, in heavily armored silos. Developers wrote application code and “threw it over the wall” to the operations team, who then were responsible for making sure the applications worked when users actually had them in their hands. This was never an optimal way to work. But it soon became impossible as businesses began developing web apps. In the fast-paced digital world, they needed to roll out fresh code and updates to production rapidly. And it had to work. Unfortunately, it often didn’t. So, organizations are now embracing a set of best practices known as DevOps that improve coordination between developers and the operations team.

DevOps is the practice of combining software engineering, quality assurance (QA), and operations into a single, agile organization. The practice is changing the way applications—particularly web apps—are developed and deployed within businesses.

Now a similar model, called DataOps, is changing the way data is consumed.

Here’s Gartner’s definition of DataOps:

[A] hub for collecting and distributing data, with a mandate to provide controlled access to systems of record for customer and marketing performance data, while protecting privacy, usage restrictions, and data integrity.[9]

That mostly covers it. However, I prefer a slightly different, perhaps more pragmatic, hands-on definition:

DataOps is a new way of managing data that promotes communication between, and integration of, formerly siloed data, teams, and systems. It takes advantage of process change, organizational realignment, and technology to facilitate relationships between everyone who handles data: developers, data engineers, data scientists, analysts, and business users. DataOps closely connects the people who collect and prepare the data, those who analyze the data, and those who put the findings from those analyses to good business use.

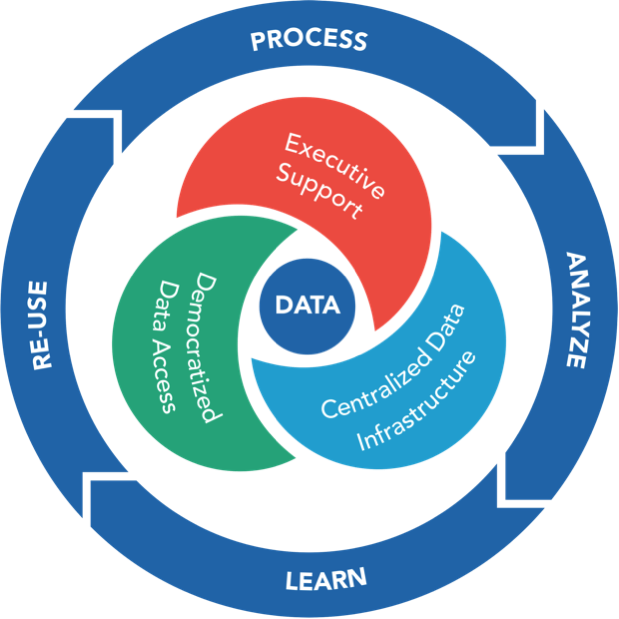

Figure 1-6 summarizes the aspirations for a data-driven enterprise—one that follows the DataOps model. At the core of the data-driven enterprise are executive support, a centralized data infrastructure, and democratized data access. In this model, data is processed, analyzed for insights, and reused.

Figure 1-6. The aspirations for a data-driven enterprise (source: Qubole)

Two trends are creating the need for DataOps:

- The need for more agility with data

Businesses today run at a very fast pace, so if data is not moving at the same pace, it is dropped from the decision-making process. This is similar to how the agility in creating web apps led to the creation of the DevOps culture. The same agility is now also needed on the data side.

- Data becoming more mainstream

This ties back to the fact that in today’s world there is a proliferation of data sources because of all the advancements in collection: new apps, sensors on the Internet of Things (IoT), and social media. There’s also the increasing realization that data can be a competitive advantage. As data has become mainstream, the need to democratize it and make it accessible is felt very strongly within businesses today. In light of these trends, data teams are getting pressure from all sides.

In effect, data teams are having the same problem that application developers once had. Instead of developers writing code, we now have data scientists designing analytic models for extracting actionable insights from large volumes of data. But here’s the problem: no matter how clever and innovative those data scientists are, they don’t help the business if they can’t get hold of the data or can’t put the results of their models into the hands of decision-makers.

DataOps has therefore become a critical discipline for any IT organization that wants to survive and thrive in a world in which real-time business intelligence is a competitive necessity. Three reasons are driving this:

- Data isn’t a static thing

According to Gartner, big data can be described by the “Three Vs”[10]: volume, velocity, and variety. It’s also changing constantly. On Monday, machine learning might be a priority; on Tuesday, you need to focus on predictive analytics. And on Friday, you’re processing transactions. Your infrastructure needs to be able to support all these different workloads equally well. With DataOps, you can quickly create new models, reprioritize workloads, and extract value from your data by promoting communication and collaboration.

- Technology is not enough

Data science and the technologies that support it are getting stronger every day. But these tools are only good if they are applied in a consistent and reliable way.

- Greater agility is needed

The agility needed today is much greater than what was needed in the 1990s, which is when the data-warehousing architecture and best practices emerged. Organizations must move around data much, much faster today; so many times faster, in fact, that we need to change the very cadence of the data organization itself.

DataOps is actually a very natural way to approach data access and infrastructure when building a data environment or data lake from scratch. Because of that, newer companies embrace DataOps much more quickly and easily than established companies, which need to dramatically shift their existing practices and way of thinking about data. Many of these newer companies were born when DevOps became the norm, so they are intrinsically averse to a siloed culture. As a result, adopting DataOps for their data needs has been a natural course of evolution; their DNA demands it.

Facebook was again a great example of this. In 2007, product releases at Facebook happened every week. As a result, there was an expectation that the data from these launches would be immediately available. Taking weeks or months to get access to this data was not acceptable. In such an environment, and with such demand for agility, a DataOps culture became an absolute necessity, not just a nice-to-have feature.

In more traditional companies, corporate policies around security and control, in particular, must change. Established companies worry: how do I ensure that sensitive data remains safe and private if it’s available to everyone? Many businesses must also comply with strict data governance regulations. These are all legitimate concerns.

However, these concerns can be addressed with software and technology, which is what we’ve tried to do at Qubole. We discuss this more in Chapter 5.

In This Book

In this book, we explain what is required to become a truly data-driven organization that adopts a self-service data culture. You’ll read about the organizational, cultural, and, of course, technical transformations needed to get there, along with actionable advice. Finally, we’ve profiled five leading companies on their data-driven journeys: Facebook, Twitter, Uber, eBay, and LinkedIn.

[1] http://www.gartner.com/newsroom/id/3051717

[2] https://hbr.org/2012/10/big-data-the-management-revolution

[3] https://www.tableau.com/sites/default/files/whitepapers/tableau_dataculture_130219.pdf

[4] http://www.zsassociates.com/publications/articles/Broken-links-Why-analytics-investments-have-yet-to-pay-off.aspx

[5] http://www.contegix.com/the-importance-of-a-data-driven-company-culture/

[6] https://hbr.org/2012/10/making-advanced-analytics-work-for-you

[7] http://www.cio.com/article/3003538/big-data/study-reveals-that-most-companies-are-failing-at-big-data.html

[8] http://www.zsassociates.com/publications/articles/Broken-links-Why-analytics-investments-have-yet-to-pay-off.aspx
