Chapter 1. What Is Data Governance?

Data governance is, first and foremost, a data management function to ensure the quality, integrity, security, and usability of the data collected by an organization. Data governance needs to be in place from the time a piece of data is collected or generated until the point in time at which that data is destroyed or archived. Along the way in this full life cycle of the data, data governance focuses on making the data available to all stakeholders in a form that they can readily access. In addition, it must be one they can use in a manner that generates the desired business outcomes (insights, analysis) and conforms to regulatory standards, if relevant. These regulatory standards are often an intersection of industry (e.g., healthcare), government (e.g., privacy), and company (e.g., nonpartisan) rules and codes of behavior. Moreover, data governance needs to ensure that the stakeholders get a high-quality integrated view of all the data within the enterprise. There are many facets to high-quality data: the data needs to be correct, up to date, and consistent. Finally, data governance needs to be in place to ensure that the data is secure, by which we mean that:

It is accessed only by permitted users in permitted ways

It is auditable, meaning all accesses, including changes, are logged

It is compliant with regulations

The purpose of data governance is to enhance trust in the data. Trustworthy data is necessary to enable users to employ enterprise data to support decision making, risk assessment, and management using key performance indicators (KPIs). Using data, you can increase confidence in the decision-making process by showing supporting evidence. The principles of data governance are the same regardless of the size of the enterprise or the quantity of data. However, data governance practitioners will make choices with regard to tools and implementation based on practical considerations driven by the environment within which they operate.

What Data Governance Involves

The advent of big data analytics, powered by the ease of moving to the cloud and the ever-increasing capability and capacity of compute power, has motivated and energized a fast-growing community of data consumers to collect, store, and analyze data for insights and decision making. Nearly every computer application these days is informed by business data. It is not surprising, therefore, that new ideas inevitably involve the analysis of existing data in new ways, as well as the collection of new datasets, whether through new systems or by purchase from external vendors. Does your organization have a mechanism to vet new data analysis techniques and ensure that any data collected is stored securely, that the data collected is of high quality, and that the resulting capabilities accrue to your brand value? While it’s tempting to look only toward the future power and possibilities of data collection and big data analytics, data governance is a very real and very important consideration that cannot be ignored. In 2017, Harvard Business Review reported that more than 70% of employees have access to data they should not.1 This is not to say that companies should adopt a defensive posture; it’s only to illustrate the importance of governance to prevent data breaches and improper use of the data. Well-governed data can lead to measurable benefits for an organization.

Holistic Approach to Data Governance

Several years ago, when smartphones with GPS sensors were becoming ubiquitous, one of the authors of this book was working on machine learning algorithms to predict the occurrence of hail. Machine learning requires labeled data, something that was in short supply at the temporal and spatial resolution the research team needed. Our team hit on the idea of creating a mobile application that would allow citizen scientists to report hail at their location.2 This was our first encounter with making choices about what data to collect; until then, we had mostly been at the receiving end of whatever data the National Weather Service was collecting. Considering the rudimentary state of information security tools in an academic setting, we decided to forgo all personally identifying information and make the reporting totally anonymous, even though this meant that certain types of reported information became somewhat unreliable. Even this anonymous data brought tremendous benefits: we started to evaluate hail algorithms at greater resolutions, and this improved the quality of our forecasts. This new dataset allowed us to calibrate existing datasets, thus enhancing the data quality of other datasets as well. The benefits went beyond data quality and started to accrue toward trustworthiness. The involvement of citizen scientists was novel enough that National Public Radio carried a story about the project, emphasizing the anonymous nature of the data collection.3 The data governance lens had allowed us to carefully think about which report data to collect, improve the quality of enterprise data, enhance the quality of forecasts produced by the National Weather Service, and even contribute to the overall brand of our weather enterprise. This combination of effects (regulatory compliance, better data quality, new business opportunities, and enhanced trustworthiness) was the result of a holistic approach to data governance.

Fast-forward a few years, and now, at Google Cloud, we are all part of a team that builds technology for scalable cloud data warehouses and data lakes. One of the recurring concerns that our enterprise customers have is around what best practices and policies they should put in place to manage the classification, discovery, availability, accessibility, integrity, and security of their data—data governance—and customers approach it with the same sort of apprehension that our small team in academia did.

Yet the tools and capabilities that an enterprise has at its disposal to carry out data governance are quite powerful and diverse. We hope to convince you that you should not be afraid of data governance, and that properly applying data governance can open up new worlds of possibility. While you might initially approach data governance purely from a legal or regulatory compliance standpoint, applying governance policies can drive growth and lower costs.

Enhancing Trust in Data

Ultimately, the purpose of data governance is to build trust in data. Data governance is valuable to the extent that it adds to stakeholders’ trust in the data—specifically, in how that data is collected, analyzed, published, or used.

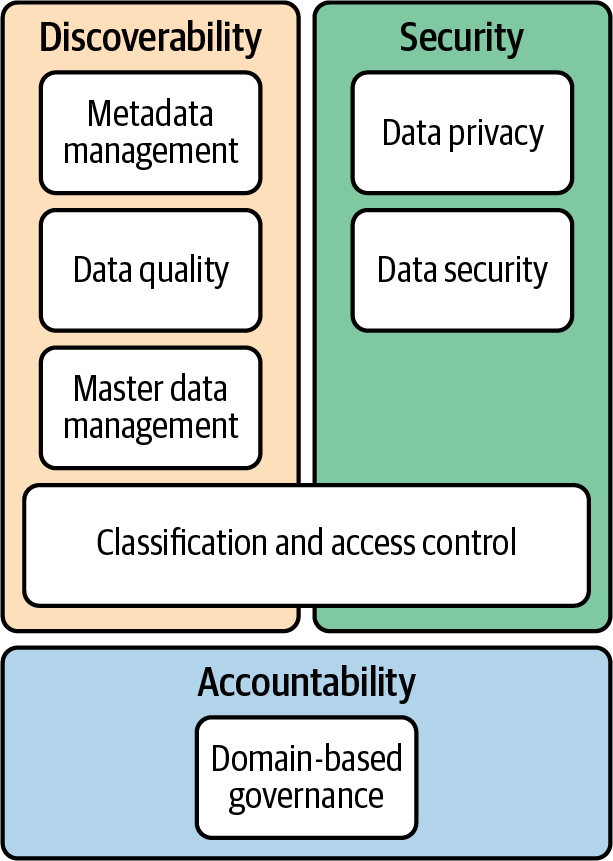

Ensuring trust in data requires that a data governance strategy address three key aspects: discoverability, security, and accountability (see Figure 1-2). Discoverability itself requires data governance to make technical metadata, lineage information, and a business glossary readily available. In addition, business-critical data needs to be correct and complete. Finally, master data management is necessary to guarantee that data is finely classified and thus ensure appropriate protection against inadvertent or malicious changes or leakage. In terms of security, regulatory compliance, management of sensitive data (personally identifiable information, for example), and data security and exfiltration prevention may all be important depending on the business domain and the dataset in question. If discoverability and security are in place, then you can start treating the data itself as a product. At that point, accountability becomes important, and it is necessary to provide an operating model for ownership and accountability around boundaries of data domains.

Figure 1-2. The three key aspects of data governance that must be addressed to enhance trust in data

Classification and Access Control

While the purpose of data governance is to increase the trustworthiness of enterprise data so as to derive business benefits, it remains the case that the primary activity associated with data governance involves classification and access control. Therefore, to understand the roles involved in data governance, it is helpful to consider a typical classification and access control setup.

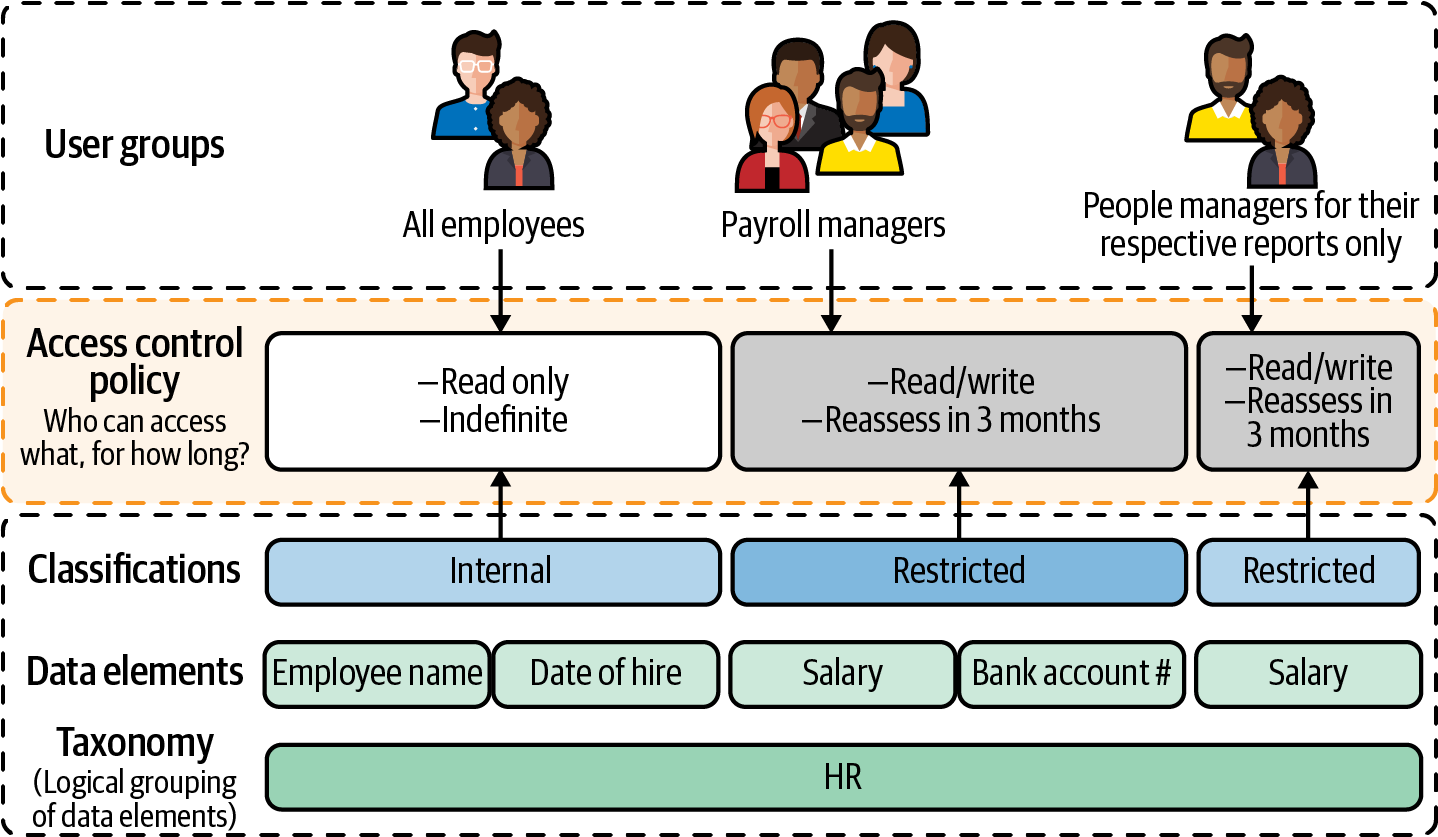

Let’s take the case of protecting the human resources information of employees, as shown in Figure 1-3.

Figure 1-3. Protecting the human resources information of employees

The human resources information includes several data elements: each employee’s name, their date of hire, past salary payments, the bank account into which those salary payments were deposited, current salary, etc. Each of these data elements is protected in different ways, depending on the classification level. Potential classification levels might be public (things accessible by people not associated with the enterprise), external (things accessible by partners and vendors with authorized access to the enterprise internal systems), internal (things accessible by any employee of the organization), and restricted. For example, information about each employee’s salary payments and which bank account they were deposited into would be restricted to managers in the payroll processing group only. On the other hand, the restrictions could be more dynamic. An employee’s current salary might be visible only to their manager, and each manager might be able to see salary information only for their respective reports. The access control policy would specify what users can do when they access the data—whether they can create a new record, or read, update, or delete existing records.
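To make the example concrete, the following is a minimal sketch of how the classification levels and create/read/update/delete rules described above might be encoded. The element names, the group names (`employees`, `partners`, `hr`, `payroll-managers`), the permitted operations, and the rule that only HR may modify non-restricted records are hypothetical assumptions for illustration, not a prescribed schema.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Level(Enum):
    """Classification levels named in the text."""
    PUBLIC = "public"
    EXTERNAL = "external"
    INTERNAL = "internal"
    RESTRICTED = "restricted"


class Op(Enum):
    CREATE = "create"
    READ = "read"
    UPDATE = "update"
    DELETE = "delete"


@dataclass(frozen=True)
class Person:
    name: str
    groups: frozenset = frozenset()
    manager: Optional[str] = None  # name of this person's manager, if any


# Hypothetical labels for the HR data elements in Figure 1-3.
CLASSIFICATION = {
    "name": Level.INTERNAL,
    "date_of_hire": Level.INTERNAL,
    "salary_payments": Level.RESTRICTED,
    "bank_account": Level.RESTRICTED,
    "current_salary": Level.RESTRICTED,
}

# Which groups may read each non-restricted level (None means anyone).
READERS = {
    Level.PUBLIC: None,
    Level.EXTERNAL: {"employees", "partners"},
    Level.INTERNAL: {"employees"},
}

# Static rules for restricted elements: (group, permitted operations).
RESTRICTED_RULES = {
    "salary_payments": ("payroll-managers", {Op.READ}),
    "bank_account": ("payroll-managers", {Op.READ}),
}


def is_allowed(user: Person, element: str, op: Op, employee: Person) -> bool:
    """Decide whether `user` may perform `op` on one element of `employee`'s record."""
    level = CLASSIFICATION[element]
    if level is not Level.RESTRICTED:
        if op is not Op.READ:
            return "hr" in user.groups  # only the accountable group changes records
        readers = READERS[level]
        return readers is None or bool(readers & user.groups)
    if element == "current_salary":
        # Dynamic rule: only the employee's own manager may read it.
        return op is Op.READ and employee.manager == user.name
    group, ops = RESTRICTED_RULES[element]
    return group in user.groups and op in ops


alice = Person("alice", frozenset({"employees"}), manager="bob")
bob = Person("bob", frozenset({"employees"}))
carol = Person("carol", frozenset({"employees", "payroll-managers"}))
```

Note that `current_salary` cannot be decided from the user's groups alone: the rule also needs the record's subject (its `manager` field), which is what makes it a dynamic restriction rather than a static group check.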

The governance policy is typically specified by the group that is accountable for the data (here, the human resources department); this group is often referred to as the governors. The policy itself might be implemented by the team that operates the database system or application (here, the information technology department), and so changes such as adding users to permitted groups are often carried out by the IT team. Hence, members of that team are often referred to as approvers or data stewards. The people whose actions are being circumscribed or enabled by data governance are often referred to as users. In businesses where not all employees have access to enterprise data, the set of employees with access might be called knowledge workers to differentiate them from those without access.

Some enterprises default to open—for example, when it comes to business data, the domain of authorized users may involve all knowledge workers in the enterprise. Other enterprises default to closed—business data may be available only to those with a need to know. Policies such as these are within the purview of the data governance board in the organization—there is no uniquely correct answer on which approach is best.

Data Governance Versus Data Enablement and Data Security

Data governance is often conflated with data enablement and with data security. Those topics intersect but have different emphases:

-

Data governance is mostly focused on making data accessible, reachable, and indexed for searching across the relevant constituents, usually the entire organization’s knowledge-worker population. This is a crucial part of data governance and requires tools such as a metadata index and a data catalog in which to “shop for” data. Data governance extends data enablement to include a workflow through which data acquisition can take place. Users can search for data by context and description, find the relevant data stores, and ask for access, stating the desired use case as justification. An approver (data steward) then needs to review the request, determine whether it is justified and whether the requested data can actually serve the use case, and kick off a process through which the data can be made accessible.

-

Data enablement goes further than making data accessible and discoverable; it extends into tooling that allows rapid analysis and processing of the data to answer business-related questions: “how much is the business spending on this topic,” “can we optimize this supply chain,” and so on. The topic is crucial and requires knowledge of how to work with data, as well as what the data actually means—best addressed by including, from the get-go, metadata that describes the data and includes value proposition, origin, lineage, and a contact person who curates and owns the data in question, to allow for further inquiry.

-

Data security, which intersects with both data enablement and data governance, is normally thought about as a set of mechanics put in place to prevent and block unauthorized access. Data governance relies on data security mechanics to be in place but goes beyond just prevention of unauthorized access and into policies about the data itself, its transformation according to data class (see Chapter 7), and the ability to prove that the policies set to access and transform the data over time are being complied with. The correct implementation of security mechanics promotes the trust required to share data broadly or “democratize access” to the data.
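The search, request, and approval workflow described in the first item above can be sketched as a small in-memory model. Everything here (the `Catalog`, `Dataset`, and `AccessRequest` classes, and the keyword search over descriptions) is a hypothetical illustration of the flow, not the API of any particular catalog product.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Dataset:
    name: str
    description: str
    steward: str  # approver (data steward) accountable for this dataset


@dataclass
class AccessRequest:
    requester: str
    dataset: Dataset
    use_case: str  # justification supplied by the requester
    status: Status = Status.PENDING


@dataclass
class Catalog:
    datasets: list = field(default_factory=list)
    grants: set = field(default_factory=set)  # (user, dataset name) pairs

    def search(self, keyword: str) -> list:
        """Find datasets whose name or description mentions the keyword."""
        kw = keyword.lower()
        return [d for d in self.datasets
                if kw in d.name.lower() or kw in d.description.lower()]

    def request_access(self, requester: str, dataset: Dataset, use_case: str) -> AccessRequest:
        if not use_case.strip():
            raise ValueError("a use case is required as justification")
        return AccessRequest(requester, dataset, use_case)

    def review(self, request: AccessRequest, approver: str, approve: bool) -> None:
        """Only the dataset's steward may decide; approval creates a grant."""
        if approver != request.dataset.steward:
            raise PermissionError(f"{approver} is not the steward of {request.dataset.name}")
        if request.status is not Status.PENDING:
            raise ValueError("request has already been reviewed")
        request.status = Status.APPROVED if approve else Status.REJECTED
        if approve:
            self.grants.add((request.requester, request.dataset.name))

    def has_access(self, user: str, dataset: Dataset) -> bool:
        return (user, dataset.name) in self.grants


# Example flow: search, request with justification, steward approval.
catalog = Catalog([Dataset("q3_shipments", "Quarterly shipment volumes by region", steward="dana")])
hits = catalog.search("shipment")
req = catalog.request_access("eve", hits[0], "Optimize supply-chain routing")
catalog.review(req, approver="dana", approve=True)
```

Requiring a non-empty use case and routing review only to the dataset's own steward mirror the two checks the text calls for: that the request is justified, and that someone accountable for the data decides whether it can serve that use case.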

Why Data Governance Is Becoming More Important

Data governance has been around since there was data to govern, although it was often restricted to IT departments in regulated industries, and to security concerns around specific datasets such as authentication credentials. Even legacy data processing systems needed a way to not only ensure data quality but also control access to data.

Traditionally, data governance was viewed as an IT function that was performed in silos related to data source type. For example, a company’s HR data and financial data, typically highly controlled data sources with strictly controlled access and specific usage guidelines, would be controlled by one IT silo, whereas sales data would be in a different, less restrictive silo. Holistic or “centralized” data governance may have existed within some organizations, but the majority of companies viewed data governance as a departmental concern.

Data governance has come into prominence because of the recent introductions of GDPR- and CCPA-type regulations that affect every industry, beyond just healthcare, finance, and a few other regulated industries. There has also been a growing realization about the business value of data. Because of this, the data landscape is vastly different today.

The following are just a few ways in which the topography has changed over time, warranting very different approaches to and methods for data governance.

The Size of Data Is Growing

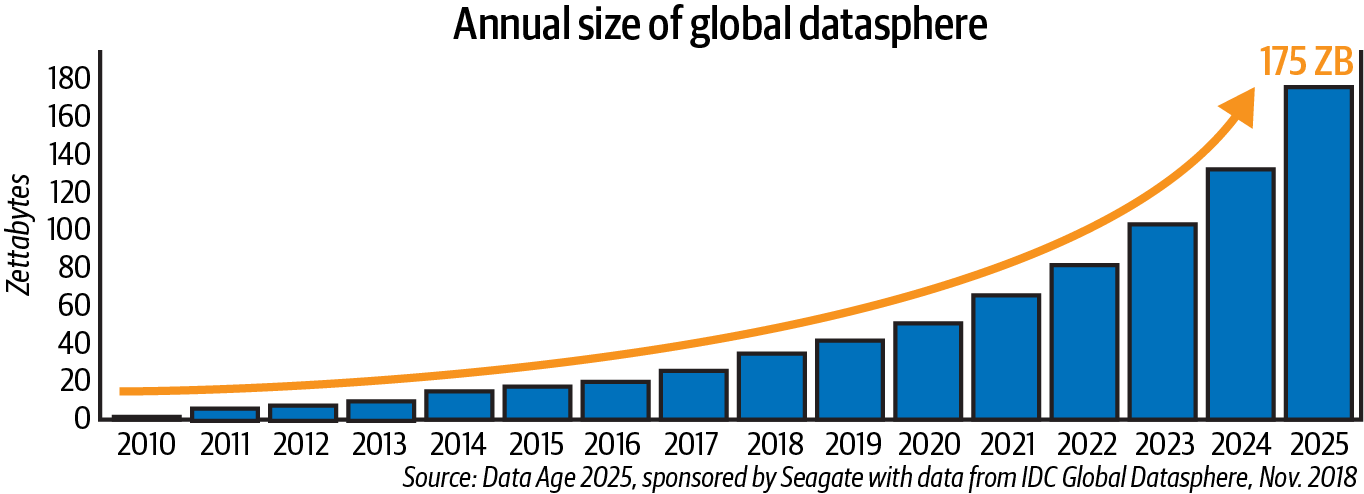

There is almost no limit to the kinds and amount of data that can now be collected. In a whitepaper published in November 2018, International Data Corporation predicts that the global datasphere will balloon to 175 ZB by 2025 (see Figure 1-4).4

This rise in data captured via technology, coupled with predictive analyses, results in systems nearly knowing more about today’s users than the users themselves.

Figure 1-4. The size of the global datasphere is expected to exhibit dramatic growth

The Number of People Working and/or Viewing the Data Has Grown Exponentially

A report by Indeed shows that the demand for data science jobs jumped 78% between 2015 and 2018.5 IDC also reports that there are now over five billion people in the world interacting with data, and it projects this number to increase to six billion (nearly 75% of the world’s population) in 2025. Companies are obsessed with being able to make “data-driven decisions,” requiring an inordinate amount of headcount: from the engineers setting up data pipelines to analysts doing data curation and analyses, and business stakeholders viewing dashboards and reports. The more people working with and viewing data, the greater the chance of misuse, and therefore the greater the need for complex systems to manage access, treatment, and usage of data.

Methods of Data Collection Have Advanced

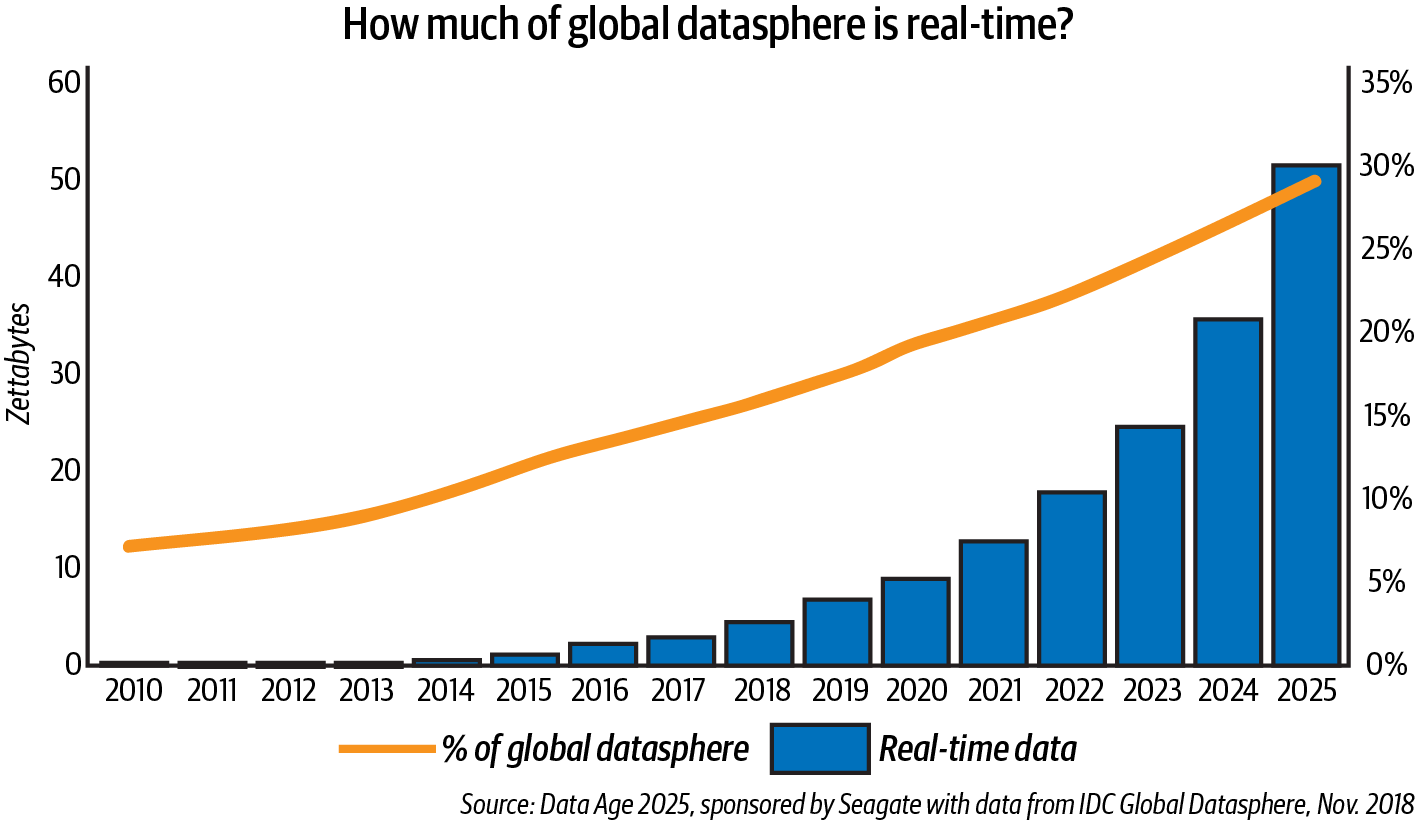

No longer must data only be batch processed and loaded for analysis. Companies are leveraging real-time or near real-time streaming data and analytics to provide their customers with better, more personalized engagements. Customers now expect to access products and services wherever they are, over whatever connection they have, and on any device. IDC predicts that this infusion of data into business workflows and personal streams of life will result in nearly 30% of the global datasphere being real-time by 2025, as shown in Figure 1-5.6

Figure 1-5. More than 25% of the global datasphere will be real-time data by 2025

The advent of streaming, however, while greatly increasing the speed to analytics, also carries with it the potential risk of infiltration, bringing about the need for complex setup and monitoring for protection.

More Kinds of Data (Including More Sensitive Data) Are Now Being Collected

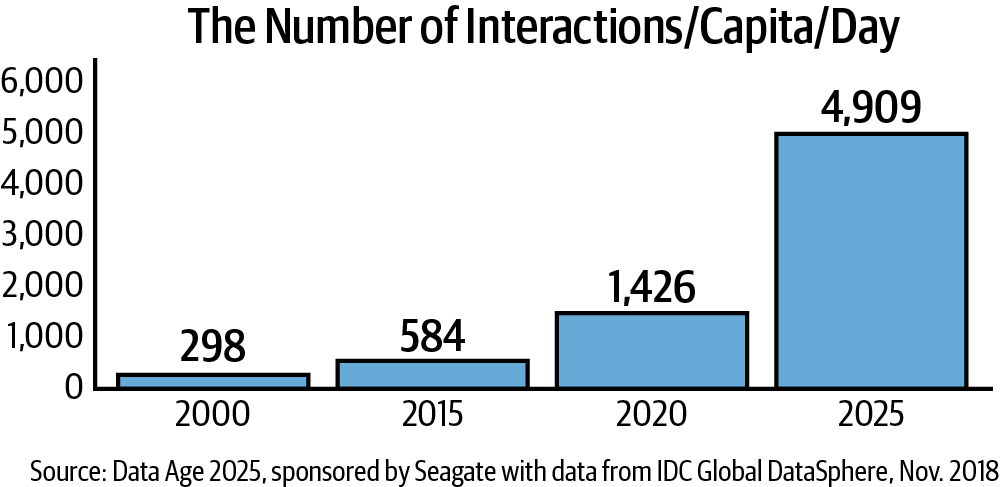

It’s projected that by 2025 every person using technology and generating data will have more than 4,900 digital data engagements per day; that’s about one digital interaction every eighteen seconds (see Figure 1-7).8

Figure 1-7. By 2025, a person will interact with data-creating technology more than 4,900 times a day
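The “every eighteen seconds” figure follows from the projection itself: dividing the number of seconds in a day by 4,900 daily interactions gives

```latex
\frac{24 \times 60 \times 60\ \text{s/day}}{4{,}900\ \text{interactions/day}}
  = \frac{86{,}400}{4{,}900}\ \text{s/interaction}
  \approx 17.6\ \text{s/interaction}
```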

Many of those interactions will include the generation and resulting collection of a myriad of sensitive data such as social security numbers, credit card numbers, names, addresses, and health conditions, to name a few categories. The proliferation of the collection of these extremely sensitive types of data carries with it great customer (and regulator) concern about how that data is used and treated, and who gets to view it.

The Use Cases for Data Have Expanded

Companies are striving to use data to make better business decisions, a practice known as data-driven decision making. They are not only using data internally to drive day-to-day business execution, but are also using data to help their customers make better decisions. Amazon is an example of a company doing this by collecting and analyzing items in customers’ past purchases, items the customers have viewed, and items in their virtual shopping carts, as well as the items they’ve rated or reviewed after purchase, to drive targeted messaging and recommendations for future purchases.

While this Amazon use case makes perfect business sense, some types of data (sensitive data in particular), when coupled with specific use cases, are not appropriate (or even legal) to use. For sensitive types of data, it matters not only how that data is treated but also how it’s used. For example, employee data may be used or viewed internally by a company’s HR department, but it would not be appropriate for that data to be used or viewed by the marketing department.
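One way to capture the idea that how data is used matters as much as how it is treated is a purpose-based check. The sketch below assumes that each data category has a set of approved purposes and each department a set of purposes it works under; the category, department, and purpose names are hypothetical.

```python
# Hypothetical: the purposes each category of data may be used for.
ALLOWED_PURPOSES = {
    "employee_data": {"hr_administration", "payroll"},
    "purchase_history": {"recommendations", "marketing"},
}

# Hypothetical: the purposes each department is chartered to pursue.
DEPARTMENT_PURPOSES = {
    "hr": {"hr_administration"},
    "payroll": {"payroll"},
    "marketing": {"marketing", "recommendations"},
}


def use_permitted(department: str, category: str, purpose: str) -> bool:
    """Allow a use only if the department works under the declared purpose
    and that purpose is approved for the data category."""
    return (purpose in DEPARTMENT_PURPOSES.get(department, set())
            and purpose in ALLOWED_PURPOSES.get(category, set()))
```

With these mappings, HR can use employee data for HR administration, while marketing is refused employee data for any purpose even though it may use purchase history for recommendations.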

New Regulations and Laws Around the Treatment of Data

The increase in data and data availability has resulted in the desire and need for regulations on data, data collection, data access, and data use. Some regulations that have been around for quite some time—for example, the Health Insurance Portability and Accountability Act of 1996 (HIPAA), the law protecting the collection and use of personal health data—not only are well known, but companies that have had to comply with them have been doing so for decades—meaning their processes and methodology for treatment of this sensitive data are fairly sophisticated. New regulations, such as the EU’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) in the US, are just two examples of usage and collection controls that apply to myriad companies, for many of whom such governance of data was not baked into their original data architecture strategy. Because of this, companies that have not had to worry about regulatory compliance before have a more difficult time modifying their technology and business processes to maintain compliance with these new regulations.

Ethical Concerns Around the Use of Data

Use cases themselves can raise questions about the ethical use of data, but new technology around machine learning and artificial intelligence has spawned new concerns of its own.

One recent example from 2018 is that of Elaine Herzberg, who, while wheeling her bike across a street in Tempe, Arizona, was struck and killed by a self-driving car.9 This incident raised questions about responsibility. Who was responsible for Elaine’s death? The person in the driver’s seat? The company testing the car’s capabilities? The designers of the AI system?

While not deadly, consider the following additional examples:

In 2014, Amazon developed a recruiting tool for identifying software engineers it might want to hire; however, it was found that the tool discriminated against women. Amazon eventually had to abandon the tool in 2017.

In 2016, ProPublica analyzed a commercially developed system that was created to help judges make better sentencing decisions by predicting the likelihood that criminals would reoffend, and it found that it was biased against Black people.10

Incidents such as these are enormous PR nightmares for companies.

Consequently, regulators have published guidelines on the ethical use of data. For example, EU regulators published a set of seven requirements that must be met for AI systems to be considered trustworthy:

-

AI systems should be under human oversight.

-

They need to have a fall-back plan in case something goes wrong. They also need to be accurate, reliable, and reproducible.

-

They must ensure full respect for privacy and data protection.

-

Data, system, and AI business models should be transparent and offer traceability.

-

AI systems must avoid unfair bias.

-

They must benefit all human beings.

-

They must ensure responsibility and accountability.

However, the drive for data-driven decisions, fueled by more data and robust analytics, calls for a necessary consideration of and focus on the ethics of data and data use that goes beyond these regulatory requirements.

Examples of Data Governance in Action

This section takes a closer look at several enterprises and how they were able to derive benefits from their governance efforts. These examples demonstrate that data governance is being used to manage accessibility and security, that it addresses the issue of trust by tackling data quality head-on, and that the governance structure makes these endeavors successful.

Managing Discoverability, Security, and Accountability

In July 2019, Capital One, one of the largest issuers of consumer and small business credit cards, discovered that an outsider had been able to take advantage of a misconfigured web application firewall in its Apache web server. The attacker was able to obtain temporary credentials and access files containing personal information for Capital One customers.11 The resulting leak of information affected more than 100 million individuals who had applied for Capital One credit cards.

Two aspects of this leak limited the blast radius. First, the leak was of application data sent to Capital One, and so, while the information included names, social security numbers, bank account numbers, and addresses, it did not include log-in credentials that would have allowed the attacker to steal money. Second, the attacker was swiftly caught by the FBI, and how the attacker was caught is the reason we include this anecdote in this book.

Because the files in question were stored in a public cloud storage bucket where every access to the files was logged, access logs were available to investigators after the fact. They were able to figure out the IP routes and narrow down the source of the attack to a few houses. While misconfigured IT systems that create security vulnerabilities can happen anywhere, attackers who steal admin credentials from on-premises systems will usually cover their tracks by modifying the system access logs. On the public cloud, though, these access logs are not modifiable because the attacker doesn’t have access to them.
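Access logs are only useful if they can be queried after the fact. As a hypothetical sketch of the kind of analysis described above, the function below groups accesses to a set of objects by source IP address and keeps only addresses outside the networks the application normally uses. The `AccessLogEntry` schema is an assumption and does not match any specific cloud provider's audit-log format; the external sample addresses come from reserved documentation ranges.

```python
from collections import Counter
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class AccessLogEntry:
    timestamp: str    # ISO 8601
    principal: str    # identity (e.g., a role) that made the call
    source_ip: str
    object_name: str
    operation: str


def unexpected_sources(entries, object_prefix, expected_networks):
    """Count accesses to objects under `object_prefix` that came from IPs
    outside the expected networks, most frequent source first."""
    nets = [ip_network(n) for n in expected_networks]
    counts = Counter()
    for e in entries:
        if not e.object_name.startswith(object_prefix):
            continue
        ip = ip_address(e.source_ip)
        if not any(ip in net for net in nets):
            counts[e.source_ip] += 1
    return counts.most_common()


# Hypothetical sample log.
logs = [
    AccessLogEntry("2024-01-01T00:00:00Z", "app-role", "10.1.2.3", "applications/a.csv", "GET"),
    AccessLogEntry("2024-01-01T00:05:00Z", "waf-role", "203.0.113.7", "applications/a.csv", "GET"),
    AccessLogEntry("2024-01-01T00:06:00Z", "waf-role", "203.0.113.7", "applications/b.csv", "GET"),
    AccessLogEntry("2024-01-01T00:07:00Z", "waf-role", "198.51.100.4", "applications/c.csv", "GET"),
]
suspicious = unexpected_sources(logs, "applications/", ["10.0.0.0/8"])
```

The analysis itself is trivial; what makes it possible is that the logs exist and could not be altered by whoever generated the suspicious entries.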

This incident highlights a handful of lessons:

-

Make sure that your data collection is purposeful. In addition, store as narrow a slice of the data as possible. It was fortunate that the data store of credit card applications did not also include the details of the resulting credit card accounts.

-

Turn on organizational-level audit logs in your data warehouse. Had this not been done, it would not have been possible to catch the culprit.

-

Conduct periodic security audits of all open ports. If this is not done, no alerts will be raised about attempts to get past security safeguards.

-

Apply an additional layer of security to sensitive data within documents. Social security numbers, for example, should have been masked or tokenized using an artificial intelligence service capable of identifying PII data and redacting it.

The fourth best practice is an additional safeguard: arguably, if only absolutely necessary data is collected and stored, there would be no need for masking. However, most organizations have multiple uses for the data, and in some use cases, the decrypted social security number might be needed. To support such multiple uses effectively, it is necessary to tag or label each attribute based on multiple categories to ensure that the appropriate controls and security are placed on it. This tends to be a collaborative effort among many groups within the company. It is worth noting that systems like these that remove data from consideration come with their own challenges and risks.12
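The following is a minimal sketch of attribute-level tags driving protection, assuming a record whose attributes each carry one or more category tags. In place of the AI-based PII detection service mentioned in the fourth practice, it uses a simple regular expression for SSN-shaped strings, and it tokenizes with HMAC-SHA256 from the Python standard library. The tag names, use-case names, and 16-character token length are hypothetical choices; only the `government_id` tag triggers a control here, though other tags could drive other controls.

```python
import hashlib
import hmac
import re

# Hypothetical per-attribute tags; one attribute can carry several categories.
TAGS = {
    "name": {"pii"},
    "address": {"pii"},
    "ssn": {"pii", "government_id"},
    "requested_limit": {"financial"},
}

# Hypothetical: the only use case allowed to see raw government IDs.
RAW_ID_USE_CASES = {"identity_verification"}

# Simple pattern for US SSNs in free text (a stand-in for a PII-detection service).
SSN_IN_TEXT = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def tokenize(value: str, key: bytes) -> str:
    """Keyed, deterministic token (HMAC-SHA256): equal inputs give equal tokens,
    so the column can still be joined or counted, but the raw value is not exposed."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def protect_record(record: dict, use_case: str, key: bytes) -> dict:
    """Return a copy of `record` with controls chosen from each attribute's tags."""
    protected = {}
    for attr, value in record.items():
        tags = TAGS.get(attr, set())
        if "government_id" in tags and use_case not in RAW_ID_USE_CASES:
            protected[attr] = tokenize(str(value), key)
        else:
            protected[attr] = value
    return protected


def redact_text(text: str) -> str:
    """Mask SSN-shaped strings inside unstructured documents."""
    return SSN_IN_TEXT.sub("***-**-****", text)
```

HMAC tokens are one-way. A use case that genuinely needs the original value would read it from a separately controlled store or token vault rather than reverse the token; that part is outside this sketch.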

As the data collected and retained by enterprises has grown, ensuring that best practices like these are well understood and implemented correctly has become more and more important. Such best practices and the policies and tools to implement them are at the heart of data governance.

Improving Data Quality

Data governance is not just about security breaches. For data to be useful to an organization, it is necessary that the data be trustworthy. The quality of data matters, and much of data governance focuses on ensuring that the integrity of data can be trusted by downstream applications. This is especially hard when data is not owned by your organization and when that data is moving around.

A good example of data governance activities improving data quality comes from the US Coast Guard (USCG). The USCG focuses on maritime search and rescue, ocean spill cleanup, maritime safety, and law enforcement. Our colleague Dom Zippilli was part of the team that proved the data governance concepts and techniques behind what became known as the Authoritative Vessel Identification Service (AVIS). The following sidebar about AVIS is in his words.

The USCG program is a handy reminder that data quality is something to strive for and constantly be on the watch for. The cleaner the data, the more likely it is to be usable for more critical use cases. In the USCG case, we see this in the usability of the data for search and rescue tasks as well.

The Business Value of Data Governance

Data governance is not solely a control practice. When implemented cohesively, data governance addresses the strategic need to get knowledge workers the insights they require with a clear process to “shop for data.” This makes possible the extraction of insights from multiple sources that were previously siloed off within different business units.

In organizations where data governance is a strategic process, knowledge workers can expect to easily find all the data required to fulfill their mission, safely apply for access, and be granted access to the data under a simple process with clear timelines and a transparent approval process. Approvers and governors of data can expect to easily pull up a picture of what data is accessible to whom, and what data is “outside” the governance zone of control (and what to do about any discrepancies there). CIOs can expect to be able to review a high-level analysis of the data in the organization in order to holistically review quantifiable metrics such as “total amount of data” or “data out of compliance” and even understand (and mitigate) risks to the organization due to data leakage.
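As a hedged illustration of the views described above, the following sketch rolls a hypothetical list of catalog entries up into two of the quantities mentioned (total amount of data and data out of compliance), plus an inverted view of who can read what. The definition of “out of compliance” used here (unclassified, or without an accountable owner) is an assumption; each organization would set its own.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class CatalogEntry:
    name: str
    size_bytes: int
    classified: bool          # has a classification label been applied?
    owner: Optional[str]      # accountable owner, if one is assigned
    readers: frozenset        # principals currently able to read the data


def governance_summary(entries):
    """Roll catalog entries up into metrics a CIO might review.
    Hypothetical rule: an entry is out of compliance if unclassified or unowned."""
    flagged = [e for e in entries if not e.classified or e.owner is None]
    return {
        "total_bytes": sum(e.size_bytes for e in entries),
        "out_of_compliance": [e.name for e in flagged],
        "out_of_compliance_bytes": sum(e.size_bytes for e in flagged),
    }


def who_can_read(entries):
    """Invert the catalog into a map of principal -> set of readable datasets."""
    access = {}
    for e in entries:
        for principal in e.readers:
            access.setdefault(principal, set()).add(e.name)
    return access
```

The inverted view is what lets an approver answer “what data is accessible to whom” without walking every dataset's permissions by hand.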

Fostering Innovation

A good data governance strategy, when set in motion, combines several factors that allow a business to extract more value from the data. Whether the goal is to improve operations, find additional sources of revenue, or even monetize data directly, a data governance strategy is an enabler of various value drivers in enterprises.

A data governance strategy, if working well, is a combination of process (to make data available under governance), people (who manage policies and usher in data access across the organization, breaking silos where needed), and tools that facilitate the above by applying machine learning techniques to categorize data and indexing the data available for discovery.

Data governance ideally will allow all employees in the organization to access all data (subject to a governance process) under a set of governance rules (defined in greater detail below), while preserving the organization’s risk posture (i.e., no additional exposure or risks are introduced due to making data accessible under a governance strategy). Since the risk posture is maintained and possibly even improved with the additional controls data governance brings, one could argue there is only an upside to making data accessible. Giving all knowledge workers access to data, in a governed manner, can foster innovation by allowing individuals to rapidly prototype answers to questions based on the data that exists within the organization. This can lead to better decision making, better opportunity discovery, and a more productive organization overall.

The quality of the data available is another way to ascertain whether governance is well implemented in the organization. A part of data governance is a well-understood way to codify and inherit a “quality signal” on the data. This signal should tell potential data users and analysts whether the data was curated, whether it was normalized, whether values are missing, whether corrupt data was removed, and potentially how trustworthy the source for the data is. Quality signals are crucial when making decisions on potential uses of the data, for example, within machine learning training datasets.
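A quality signal can be modeled as a small record that travels with a dataset. The sketch below is one possible encoding, with hypothetical field names, and it assumes a conservative weakest-link rule for inheritance: data derived from two inputs is treated as no better than the worse of them on each dimension. The thresholds in `fit_for_training` are illustrative, not recommendations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QualitySignal:
    curated: bool
    normalized: bool
    missing_fraction: float      # share of missing values, 0.0 to 1.0
    corrupt_rows_removed: bool
    source_trust: float          # hypothetical 0.0 to 1.0 score for the source

    def inherit(self, other: "QualitySignal") -> "QualitySignal":
        """Signal for data derived from two inputs: the weaker value wins on every field."""
        return QualitySignal(
            curated=self.curated and other.curated,
            normalized=self.normalized and other.normalized,
            missing_fraction=max(self.missing_fraction, other.missing_fraction),
            corrupt_rows_removed=self.corrupt_rows_removed and other.corrupt_rows_removed,
            source_trust=min(self.source_trust, other.source_trust),
        )

    def fit_for_training(self, max_missing: float = 0.05, min_trust: float = 0.8) -> bool:
        """Illustrative gate for using the data in an ML training set."""
        return (self.curated and self.corrupt_rows_removed
                and self.missing_fraction <= max_missing
                and self.source_trust >= min_trust)
```

Taking the larger missing fraction is a heuristic rather than an exact figure, since a join or union can change the true fraction; a real pipeline would recompute it on the derived data.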

The Tension Between Data Governance and Democratizing Data Analysis

Very often, complete data democratization is thought of as conflicting with data governance. This conflict is not inevitable. Data democratization, in its most extreme interpretation, can mean that all analysts or knowledge workers can access all data, whatever class it may belong to. The access described here makes a modern organization uncomfortable when you consider specific examples, such as employee data (e.g., salaries) and customer data (e.g., customer names and addresses). Clearly, only specific people should be able to access data of the aforementioned types, and they should do so only within their specific job-related responsibilities.

Data governance is actually an enabler here, solving this tension. The key concept to keep in mind is that there are two layers to the data: the data itself (e.g., salaries) and the metadata (data about the data—e.g., “I have a table that contains salaries, but I won’t tell you anything further”).

With data governance, you can accomplish three things:

Access a metadata catalog, which includes an index of all the data managed (full democratization, in a way) and allows you to search for the existence of certain data. A good data catalog also includes certain access control rules that limit the bounds of the search (for example, I will be able to search “sales-related data,” but “HR” is out of my purview completely, and therefore even HR-metadata is inaccessible to me).

Govern access to the data, which includes an acquisition process (described above) and a way to adhere to the principle of least access: once access is requested, provide access limited to the boundaries of the specific resource; don’t overshare.

Independently of the other steps, maintain an “audit trail” of the data access request, the data access approval cycle, and the approver (data steward), as well as of all subsequent access operations. This audit trail is itself data and therefore must also comply with data governance.
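The three capabilities above can be sketched in a few lines of Python. This is a toy, in-memory model under stated assumptions: the `Catalog` class, its domain-based visibility rule, the 30-day grant, and all user and table names are hypothetical. A real deployment would rely on your catalog and identity and access management system rather than Python lists and dicts.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone


@dataclass
class CatalogEntry:
    name: str         # table name, e.g. "sales.orders"
    domain: str       # owning business domain, e.g. "sales" or "hr"
    description: str  # searchable metadata


@dataclass
class Catalog:
    entries: list
    audit: list = field(default_factory=list)   # audit trail: (timestamp, event) tuples
    grants: dict = field(default_factory=dict)  # (user, table) -> expiry time

    def search(self, user_domains: set, term: str) -> list:
        # Entries outside the user's domains are invisible, metadata included.
        return [e.name for e in self.entries
                if e.domain in user_domains and term.lower() in e.description.lower()]

    def request(self, user: str, table: str, reason: str) -> None:
        self._log(f"REQUEST {table} by {user}: {reason}")

    def grant(self, user: str, table: str, approver: str, days: int) -> None:
        # Least access: one table rather than the whole domain, for a limited time.
        expiry = datetime.now(timezone.utc) + timedelta(days=days)
        self.grants[(user, table)] = expiry
        self._log(f"GRANT {table} to {user} by {approver} until {expiry:%Y-%m-%d}")

    def read(self, user: str, table: str) -> bool:
        expiry = self.grants.get((user, table))
        allowed = expiry is not None and datetime.now(timezone.utc) < expiry
        self._log(f"READ {table} by {user}: {'allowed' if allowed else 'denied'}")
        return allowed

    def _log(self, event: str) -> None:
        self.audit.append((datetime.now(timezone.utc).isoformat(), event))


catalog = Catalog([
    CatalogEntry("sales.orders", "sales", "Sales orders by region"),
    CatalogEntry("hr.salaries", "hr", "Employee salaries"),
])
print(catalog.search({"sales"}, "sales"))     # ['sales.orders']
print(catalog.search({"sales"}, "salaries"))  # [] (HR metadata is out of scope)
catalog.request("ana", "sales.orders", reason="Q3 forecast")
catalog.grant("ana", "sales.orders", approver="steward-1", days=30)
print(catalog.read("ana", "sales.orders"))    # True
print(catalog.read("ana", "hr.salaries"))     # False
for ts, event in catalog.audit:
    print(ts, event)
```

Note that the request, the approval, and every read, including the denied one, land in the same audit trail.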

In a way, data governance becomes the facility that enables data democratization: it makes more of your data accessible to more knowledge workers and thereby accelerates the business by making the use of data easier and faster.

Business outcomes, such as visibility into all parts of a supply chain, understanding of customer behavior on every online asset, tracking the success of a multipronged campaign, and the resulting customer journeys, are becoming more and more possible. Under governance, different business units will be able to pull data together, analyze it to achieve deeper insight, and react quickly to both local and global changes.

Manage Risk (Theft, Misuse, Data Corruption)

The key concerns CIOs and responsible data stewards have long had (and these have not changed with the advent of big data analytics) are: What are my risk factors, what is my mitigation plan, and what is the potential damage?

CIOs have been using the answers to these questions to allocate resources. Data governance provides a set of tools, processes, and personnel roles to manage the risk to data, among other goals (for example, data efficiency, or getting value from data). Those risks include:

- Theft

Data theft is a concern in organizations where data is either the product or a key factor in generating value. Theft of data about parts, suppliers, or prices in an electronics manufacturer’s supply chain can deal a crippling blow to the business if competitors use that information to negotiate with those very same suppliers, or to derive a product roadmap from the supply-chain information. Theft of a customer list can be very damaging to any organization. Applying data governance to information that the organization considers sensitive can encourage confidence in sharing the surrounding data, aggregates, and so on, contributing to business efficiency and breaking down barriers to sharing and reusing data.

- Misuse

Misuse is often the unknowing use of data for a purpose different from the one it was collected for, sometimes to support the wrong conclusions. This is often a result of a lack of information about the data source, its quality, or even what it means. There is sometimes malicious misuse of data as well, meaning that information gathered with consent for benign purposes is used for other, unintended, and sometimes nefarious purposes. An example is AT&T’s payout to the FCC in 2015, after its call center employees were found to have disclosed consumers’ personal information to third parties for financial gain. Data governance can protect against misuse in several layers. First, establish trust before sharing data. Second, be declarative: declare the source of the data within the container, the way it was collected, and what it was intended for. Finally, limit the length of time for which data is accessible. This does not mean placing a lid on the data and making it inaccessible. Remember, the fact that the data exists should be shared alongside its purpose and description, which should make data democratization a reality.

- Data corruption

Data corruption is an insidious risk because it is hard to detect and hard to protect against. The risk materializes when operational business conclusions are derived from corrupt (and therefore incorrect) data. Data corruption often occurs outside of data governance control and can be caused by errors on data ingest or by joining “clean” data with corrupt data (creating a new, corrupt product). Partial data, autocorrected to include some default values, can be misinterpreted as, for example, curated data. Data governance can help here by recording, even at the level of individual columns in structured data, the processes and lineage of the data and the level of confidence, or quality, of the top-level source of the data.
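Recording lineage at the column level lets you trace any derived column back to its top-level sources. The following Python sketch assumes a hypothetical, hand-written lineage map and illustrative source confidence scores. It applies one conservative rule, which is an assumption rather than a standard: a derived column is only as trustworthy as its least trustworthy source.

```python
# Hypothetical column-level lineage: each derived column records the process
# that produced it and the upstream columns it was computed from.
LINEAGE = {
    "report.revenue_eur": {"process": "fx_convert",
                           "inputs": ["sales.revenue_usd", "fx.usd_eur_rate"]},
    "sales.revenue_usd": {"process": "ingest_pos", "inputs": ["pos_feed.amount"]},
    "fx.usd_eur_rate": {"process": "ingest_fx", "inputs": ["vendor_fx.rate"]},
}

# Confidence recorded for top-level (source) columns, 0.0 to 1.0. Illustrative values.
SOURCE_CONFIDENCE = {"pos_feed.amount": 0.99, "vendor_fx.rate": 0.7}


def upstream_sources(column: str) -> set:
    """Walk the lineage graph (assumed acyclic) back to top-level source columns."""
    node = LINEAGE.get(column)
    if node is None:  # no recorded parents: treat as a source column
        return {column}
    sources = set()
    for parent in node["inputs"]:
        sources |= upstream_sources(parent)
    return sources


def effective_confidence(column: str) -> float:
    """Weakest-source rule; sources with no recorded confidence count as 0.0."""
    return min(SOURCE_CONFIDENCE.get(s, 0.0) for s in upstream_sources(column))


print(sorted(upstream_sources("report.revenue_eur")))  # ['pos_feed.amount', 'vendor_fx.rate']
print(effective_confidence("report.revenue_eur"))      # 0.7
```

The weakest-source rule captures the point above: joining clean data with a less trustworthy input yields a product no better than that input.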

Regulatory Compliance

Data governance is often leveraged when a set of regulations is applicable to the business, and specifically to the data the business processes. Regulations are, in essence, policies that must be adhered to in order to operate within the organization’s business environment. GDPR is often cited as an example of a data regulation because, among other things, it mandates separating the personal data of European citizens from other data and treating that data differently, especially data that can be used to identify a person. This book does not go into the specifics of GDPR.

Regulation will usually refer to one or more of the following specifics:

- Fine-grained access control

- Data retention and data deletion

- Audit logging

- Sensitive data classes

Let’s discuss these one by one.

Regulation around fine-grained access control

Access control is already an established topic that relates to security most of all. Fine-grained access control adds the following considerations to access control:

- When providing access, are you providing access to the right size of container?

This means granting access to the smallest container of data (table, dataset, etc.) that includes the requested information. In structured storage this will most commonly be a single table, rather than the whole dataset or a project-wide permission.

- When providing access, are you providing the right level of access?

Different levels of access to data are possible. A common access pattern is being able to either read or write the data, but there are additional levels: you can allow a contributor to append (but possibly not change) the data, or allow an editor to modify or even delete data. In addition, consider protected systems in which some data is transformed on access. You could redact certain columns (e.g., US Social Security numbers, which serve as a national ID) to expose just the last four digits, or coarsen GPS coordinates to city and country. A useful way to share data without exposing too much is to tokenize it, for example with deterministic, reversible (symmetric) encryption, so that key data values (for example, a person’s ID) preserve uniqueness. You can then count how many distinct persons are in your dataset without exposing any specific person’s ID.

All the levels of access mentioned here should be considered (read/write/delete/update and redact/mask/tokenize).

- When providing access, for how long should access remain open?

Remember that access is usually requested for a reason (a specific project must be completed), and permissions granted should not “dangle” without appropriate justification. The regulator will be asking “who has access to what,” so limiting the number of personnel who have access to a given class of data, and the time for which they have it, makes that question much easier to answer.
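The transform-on-access levels described above (redact, coarsen, tokenize) can be sketched with Python's standard library. Note that this sketch swaps in a different tokenization technique from the encryption described in the text: it derives tokens with a keyed HMAC and keeps a lookup vault for reversal, which preserves uniqueness and reversibility without a third-party crypto package. The function names, the rounding precision (a stand-in for mapping to city and country, which would need a geocoder), and the token length are assumptions for illustration.

```python
import hashlib
import hmac
import secrets


def redact_ssn(ssn: str) -> str:
    """Expose only the last four digits of a US SSN such as '123-45-6789'."""
    digits = [c for c in ssn if c.isdigit()]
    return "***-**-" + "".join(digits[-4:])


def coarsen(lat: float, lon: float, decimals: int = 1) -> tuple:
    """Round coordinates; one decimal place is roughly 11 km of latitude."""
    return (round(lat, decimals), round(lon, decimals))


class TokenVault:
    """Deterministic, reversible tokenization via a lookup vault.

    The same input always yields the same token (so distinct counts survive),
    and only holders of the vault can map a token back to the original value.
    """

    def __init__(self, key: bytes):
        self._key = key
        self._reverse = {}

    def tokenize(self, value: str) -> str:
        token = hmac.new(self._key, value.encode(), hashlib.sha256).hexdigest()[:16]
        self._reverse[token] = value
        return token

    def detokenize(self, token: str) -> str:
        return self._reverse[token]


vault = TokenVault(secrets.token_bytes(32))
ids = ["P-001", "P-002", "P-001", "P-003"]
tokens = [vault.tokenize(i) for i in ids]
print(redact_ssn("123-45-6789"))        # ***-**-6789
print(coarsen(40.748817, -73.985428))   # (40.7, -74.0)
print(len(set(tokens)))                 # 3 distinct persons, no IDs exposed
print(vault.detokenize(tokens[0]))      # P-001
```

In practice the key and the vault would live in a separately governed service, so that analysts who receive tokens cannot reverse them.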

Data retention and data deletion

A significant body of regulation deals with the deletion and the preservation of data. A requirement to preserve data for a set period, and no less than that period, is common. For example, in the case of financial transaction regulations, it is not uncommon to find a requirement that all business transaction information be kept for a duration of as much as seven years to allow financial fraud investigators to backtrack.

Conversely, an organization may want to limit the time it retains certain information, allowing it to draw quick conclusions while limiting liability. For example, having constantly up-to-date information about the location of all delivery trucks is useful for making rapid decisions about “just-in-time” pickups and deliveries, but it becomes a liability if you maintain that information over a period of time and can, in theory, plot a picture of the location of a specific delivery driver over the course of several weeks.
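Both kinds of rule, a minimum retention period and a maximum one, can be expressed as a single policy check. In this hypothetical Python sketch, seven years is approximated as 7 * 365 days, and the seven-day limit on truck locations is an illustrative value, not a regulatory one.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class RetentionPolicy:
    min_days: int            # must keep at least this long
    max_days: Optional[int]  # must delete after this long; None means no upper limit


def retention_action(created: date, today: date, policy: RetentionPolicy) -> str:
    age = (today - created).days
    if age < policy.min_days:
        return "must_retain"  # deleting now would break the minimum retention rule
    if policy.max_days is not None and age > policy.max_days:
        return "must_delete"  # keeping it longer creates liability
    return "may_delete"       # inside the window where either choice is compliant


FINANCIAL_TXN = RetentionPolicy(min_days=7 * 365, max_days=None)  # roughly seven years
TRUCK_LOCATION = RetentionPolicy(min_days=0, max_days=7)          # illustrative limit

today = date(2024, 1, 15)
print(retention_action(date(2020, 1, 1), today, FINANCIAL_TXN))    # must_retain
print(retention_action(date(2024, 1, 1), today, TRUCK_LOCATION))   # must_delete
print(retention_action(date(2024, 1, 12), today, TRUCK_LOCATION))  # may_delete
```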

Audit logging

Being able to bring up audit logs for a regulator is useful as evidence that policies are complied with. You cannot present data that has been deleted, but you can show an audit trail of the means by which the data was created, manipulated, shared (and with whom), accessed (and by whom), and later expired or deleted. The auditor will be able to verify that policies are being adhered to. Audit logs can serve as a useful forensic tool as well.

To be useful for data governance purposes, audit logs need to be immutable and append-only (unchangeable by internal or external parties), and they must be retained for a lengthy period: at least as long as the most demanding data preservation policy, and beyond that, in order to show the data being deleted.

Audit logs need to include information not only about data and data operations by themselves but also about operations that happen around the data management facility. Policy changes need to be logged, and data schema changes need to be logged. Permission management and permission changes need to be logged, and the logging information should contain not only the subject of the change (be it a data container or a person to be granted permission) but also the originator of the action (the administrator or the service process that initiated the activity).
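One common way to make an audit log tamper-evident is hash chaining: each entry stores the hash of the previous one. The Python sketch below is a minimal illustration of that technique, with hypothetical actor and action names. Hash chaining only detects modification; preventing it still requires write-once storage and strict access controls.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only audit log; each entry embeds the hash of the previous entry,
    so editing or deleting any earlier entry breaks verification."""

    GENESIS = "0" * 64

    def __init__(self):
        self._entries = []

    def append(self, actor: str, action: str, subject: str) -> None:
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        record = {
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": actor,      # originator: administrator or service process
            "action": action,    # e.g. POLICY_CHANGE, SCHEMA_CHANGE, GRANT, READ
            "subject": subject,  # data container or person affected
            "prev": prev_hash,
        }
        record["hash"] = hashlib.sha256(json.dumps(record, sort_keys=True).encode()).hexdigest()
        self._entries.append(record)

    def verify(self) -> bool:
        prev_hash = self.GENESIS
        for entry in self._entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            expected = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("admin:alice", "POLICY_CHANGE", "retention/financial_txn")
log.append("svc:etl-runner", "SCHEMA_CHANGE", "sales.orders")
log.append("admin:alice", "GRANT", "user:bob -> sales.orders")
print(log.verify())  # True
log._entries[1]["subject"] = "sales.customers"  # simulate tampering
print(log.verify())  # False
```

Each entry records both the subject of the change and its originator, matching the requirements described above.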

Sensitive data classes

Very often, a regulator will determine that a class of data should be treated differently from other data. Such sensitive data classes are at the heart of most regulations, which are commonly concerned with a group of protected people or a kind of activity. The regulator will use legal language (e.g., “personally identifiable data about European Union residents,” or “financial transaction history”). It is up to the organization to correctly identify which portion of that data it actually processes, and where that data resides in its structured or unstructured storage. For structured data it is sometimes easier to bind a data class to a set of columns (PII is stored in these columns) and tag those columns so that certain policies, including access and retention, apply to them specifically. This supports the principles of fine-grained access control and ties compliance to the data itself (not to the data store or the personnel manipulating that data).
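Column-level tagging can be sketched as two lookup tables: one mapping columns to data-class tags, and one mapping tags to policies. All tag names, roles, and retention values below are hypothetical. The sketch shows the key property: policies attach to the data class, so every column carrying a tag is governed the same way.

```python
# Hypothetical schema: column name -> set of data-class tags.
SCHEMA_TAGS = {
    "customer_id": {"pseudonymous_id"},
    "full_name": {"pii"},
    "email": {"pii"},
    "country": set(),
    "order_total": set(),
}

# Hypothetical tag policies: roles allowed to read the tag, and maximum retention.
TAG_POLICIES = {
    "pii": {"readers": {"privacy_officer", "support_agent"}, "retention_days": 365},
    "pseudonymous_id": {"readers": {"privacy_officer", "support_agent", "analyst"},
                        "retention_days": 730},
}


def readable_columns(role: str) -> list:
    """Untagged columns, plus tagged columns where every tag lists the role as a reader."""
    return [column for column, tags in SCHEMA_TAGS.items()
            if all(role in TAG_POLICIES[t]["readers"] for t in tags)]


def column_retention_days(column: str):
    """The strictest (shortest) retention among the column's tags, or None if untagged."""
    limits = [TAG_POLICIES[t]["retention_days"] for t in SCHEMA_TAGS[column]]
    return min(limits) if limits else None


print(readable_columns("analyst"))        # ['customer_id', 'country', 'order_total']
print(readable_columns("support_agent"))  # all five columns
print(column_retention_days("email"))     # 365
```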

Considerations for Organizations as They Think About Data Governance

When an organization sits down and begins to define a data governance program and the goals of such a program, it should take into consideration the environment in which it operates. Specifically, it should consider what regulations are relevant and how often these change, whether or not a cloud deployment makes sense for the organization, and what expertise is required from IT and data analysts/owners. We discuss these factors next.

Changing regulations and compliance needs

In recent years, data governance regulations have garnered more attention. With GDPR and CCPA joining the ranks of HIPAA- and PCI-related regulations, affected organizations are reacting.

The changing regulatory environment means that organizations need to remain vigilant when it comes to governance. No organization wants to be in the news for being sued over failing to handle customer information as required by regulation. In a world where customer information is very precious, firms need to be careful how they handle customer data. Not only should firms know about existing regulations, but they also need to keep up with any changing mandates or stipulations, as well as any new regulations that might affect how they do business. Changes in technology have also created additional challenges. Machine learning and AI allow organizations to predict future outcomes and probabilities, and in the process these technologies create many new datasets. How should companies think about governing these predicted values? Should the new datasets assume the same policies and governance as the original datasets, or should they have their own policies? Who should have access to this data? How long should it be retained? These are all questions that need to be considered and answered.

Data accumulation and organization growth

With infrastructure cost rapidly decreasing, and organizations growing both organically and through acquisition of additional business units (with their own data stores), the topic of data accumulation, and how to properly react to quickly amassing large amounts of data, becomes important. With data accumulation, an organization is collecting more data from more sources and for more purposes.

Big data is a term you will keep hearing; it refers to the vast amounts of data (structured and unstructured) now collected from connected devices, sensors, social networks, clickstreams, and so on. The volume, variety, and velocity of data have changed and accelerated over the past decade. Efforts to manage and even consolidate this data have created data swamps (disorganized and inconsistent collections of data without clear curation) and even more silos: for example, customers consolidated on SAP, then on Hive Metastore, then in the cloud, and so on, each time creating another silo. Given these challenges, knowing what you have and applying governance to it is complicated, but it is a task that organizations need to undertake. Organizations thought that building a data lake would solve all their issues, but many of these data lakes are now becoming data swamps, holding so much data that it is impossible to understand and govern. In an environment in which IDC predicts that more than a quarter of the data generated by 2025 will be real-time in nature, how do organizations make sure that they are ready for this changing paradigm?

Moving data to the cloud

Traditionally, all data resided in infrastructure provided and maintained by the organization. This meant the organization had full control over access, and there was no dynamic sharing of resources. With the emergence of cloud computing—which in this context implies cheap but shared infrastructure—organizations need to think about their response and investment in on-premises versus cloud infrastructure.

Many large enterprises still say that they have no plans to move their core data, or governed data, to the cloud anytime soon. Even though the largest cloud companies have invested money and resources to protect customer data in the cloud, most customers still feel the need to keep this data on-premises. This is understandable, because data breaches in the cloud feel more consequential. The potential for damage, monetary as well as reputational, explains why enterprises want more transparency in how governance works to protect their data in the cloud. Under this pressure, cloud companies are putting more guardrails in place. They need to “show” and “open the hood” on how governance is implemented, as well as provide controls that not only engender trust among customers but also put some power into customers’ hands. We discuss these topics in Chapter 7.

Data infrastructure expertise

Another consideration for organizations is the sheer complexity of the infrastructure landscape. How do you think about governance in a hybrid and multicloud world? Hybrid computing allows organizations to run both on-premises and cloud infrastructure, while multicloud allows organizations to use more than one cloud provider. How do you implement governance across the organization when the data resides on-premises and in other clouds? This complexity means that governance cannot be solved by tooling alone. When organizations think about the people, the processes, and the tools, and define a framework that encompasses all three, it becomes easier to extend governance across on-premises and cloud environments.

Why Data Governance Is Easier in the Public Cloud

Data governance involves managing risk. The practitioner is always trading off the security inherent in never allowing access to the data against the agility that is possible if data is readily available within the organization to support different types of decisions and products. Regulatory compliance often dictates the minimal requirements for access control, lineage, and retention policies. As we discussed in the previous sections, the implementation of these can be challenging as a result of changing regulations and organic growth.

The public cloud has several features that make data governance easier to implement, monitor, and update. In many cases, these features are unavailable or cost-prohibitive in on-premises systems.

Location

Data locality is mostly relevant for global organizations that store and use data across the globe, but a deeper look into regulation reveals that the situation is not so simple. For example, if, for business reasons, you want to leverage a data center in a central location (say, in the US, next to your potential customers) but your company is a German company, regulation requires that data about employees remains on German soil; thus your data strategy just became more involved.

The need to store user data within sovereign boundaries is an increasingly common regulatory requirement. In 2016, the EU Parliament approved data sovereignty measures within GDPR, wherein the storage and processing of records about EU citizens and residents must be carried out in a manner that follows EU law. Specific classes of data (e.g., health records in Australia, telecommunications metadata in Germany, or payment data in India) may also be subject to data locality regulations; these go beyond mere sovereignty measures by requiring that all data processing and storage occur within the national boundaries. The major public cloud providers offer the ability to store your data in accordance with these regulations. It can be convenient to simply mark a dataset as being within the EU multi-region and know that you have both redundancy (because it’s a multi-region) and compliance (because data never leaves the EU). Implementing such a solution in your on-premises data center can be quite difficult, since it can be cost-prohibitive to build data centers in every sovereign location that you wish to do business in and that has locality regulations.
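A residency rule can be checked mechanically once each dataset records its data class and storage location. This Python sketch uses hypothetical data classes, location names, and rules (including a Germany-only rule for employee data, echoing the example above); it is not tied to any cloud provider's region API.

```python
# Hypothetical residency rules: data class -> jurisdictions where it may be stored.
RESIDENCY_RULES = {
    "eu_personal_data": {"EU"},
    "de_employee_data": {"DE"},
    "in_payment_data": {"IN"},
}

# Hypothetical mapping of storage locations to the jurisdictions they sit in.
LOCATION_JURISDICTIONS = {
    "eu-multiregion": {"EU"},  # replicas stay inside the EU, but not necessarily in Germany
    "frankfurt": {"EU", "DE"},
    "mumbai": {"IN"},
    "us-multiregion": {"US"},
}


def residency_violations(datasets: dict) -> list:
    """datasets maps name -> (data class, storage location).
    Returns the names of datasets stored outside their allowed jurisdictions."""
    bad = []
    for name, (data_class, location) in datasets.items():
        allowed = RESIDENCY_RULES.get(data_class)
        if allowed is None:
            continue  # no locality rule applies to this class
        if not allowed & LOCATION_JURISDICTIONS[location]:
            bad.append(name)
    return bad


datasets = {
    "customers_eu": ("eu_personal_data", "eu-multiregion"),
    "staff_records": ("de_employee_data", "eu-multiregion"),
    "card_payments": ("in_payment_data", "mumbai"),
    "web_logs": ("clickstream", "us-multiregion"),
}
print(residency_violations(datasets))  # ['staff_records']
```

The EU multi-region satisfies the EU-wide rule but not the stricter national one, which is exactly the kind of distinction that makes data strategy "more involved."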

Location also matters because customers need secure, transaction-aware global access. As your customers travel or expand their own operations, they will require you to provide access to data and applications wherever they are. This can be difficult if your regulatory compliance begins and ends with colocating applications and data in regional silos. You need the ability to seamlessly apply compliance rules based on users, not just on applications. Running your applications in a public cloud that runs its own private fiber and offers end-to-end physical network security and global time synchronization (not all clouds do this) simplifies the architecture of your applications.

Reduced Surface Area

In heavily regulated industries, there are huge advantages if there is a single “golden” source of truth for datasets, especially for data that requires auditability. Having your enterprise data warehouse (EDW) in a public cloud, particularly in a setting in which you can separate compute from storage and access the data from ephemeral clusters, provides you with the ability to create different data marts for different use cases. These data marts are provided data through views of the EDW that are created on the fly. There is no need to maintain copies, and examination of the views is enough to ensure auditability in terms of data correctness.

In turn, the lack of permanent storage in these data marts greatly simplifies their governance. Since there is no storage, complying with rules around data deletion is trivial at the data mart level. All such rules have to be enforced only at the EDW. Other rules around proper use and control of the data still have to be enforced, of course. That’s why we think of this as a reduced surface area, not zero governance.
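The reduced-surface-area pattern can be illustrated with Python's built-in `sqlite3` module standing in for a cloud EDW. The table, views, and columns are hypothetical, and the sketch does not model the separation of compute from storage; it shows only that views hold no copies, so a deletion enforced once at the EDW is reflected in every data mart.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# The EDW: the single "golden" copy of the data.
conn.execute("CREATE TABLE edw_orders (order_id INTEGER, region TEXT, "
             "customer_email TEXT, total REAL)")
conn.executemany("INSERT INTO edw_orders VALUES (?, ?, ?, ?)", [
    (1, "EMEA", "a@example.com", 120.0),
    (2, "AMER", "b@example.com", 80.0),
    (3, "EMEA", "c@example.com", 45.5),
])

# Data marts are views: no copied rows, and the email column is simply not projected.
conn.execute("""CREATE VIEW mart_emea_sales AS
                SELECT order_id, total FROM edw_orders WHERE region = 'EMEA'""")
conn.execute("""CREATE VIEW mart_revenue_by_region AS
                SELECT region, SUM(total) AS revenue FROM edw_orders GROUP BY region""")

print(conn.execute("SELECT * FROM mart_emea_sales ORDER BY order_id").fetchall())
# [(1, 120.0), (3, 45.5)]

# A deletion enforced once at the EDW is immediately reflected in every mart.
conn.execute("DELETE FROM edw_orders WHERE customer_email = 'c@example.com'")
print(conn.execute("SELECT * FROM mart_emea_sales ORDER BY order_id").fetchall())
# [(1, 120.0)]
print(conn.execute("SELECT * FROM mart_revenue_by_region ORDER BY region").fetchall())
# [('AMER', 80.0), ('EMEA', 120.0)]
```

Auditing the marts then reduces to reviewing the view definitions, since no data lives in them.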

Ephemeral Compute

In order to have a single source of data and still support enterprise applications, current and future, we need to make sure that the data is not stored within a compute cluster, or scaled in proportion to it. If our business is spiky, or if we need to support interactive or occasional workloads, we require virtually unlimited, readily burstable compute capability that is separate from the storage architecture. This is possible only if our data processing and analytics architecture is serverless and/or clearly separates compute and storage.

Why do we need both data processing and analytics to be serverless? Because the utility of data is often realized only after a series of preparation, cleanup, and intelligence tools are applied to it. All these tools need to support separation of compute and storage and autoscaling in order to realize the benefits of a serverless analytics platform. It is not sufficient just to have a serverless data warehouse or application architecture that is built around serverless functions. You need your tooling frameworks themselves to be serverless. This is available only in the cloud.

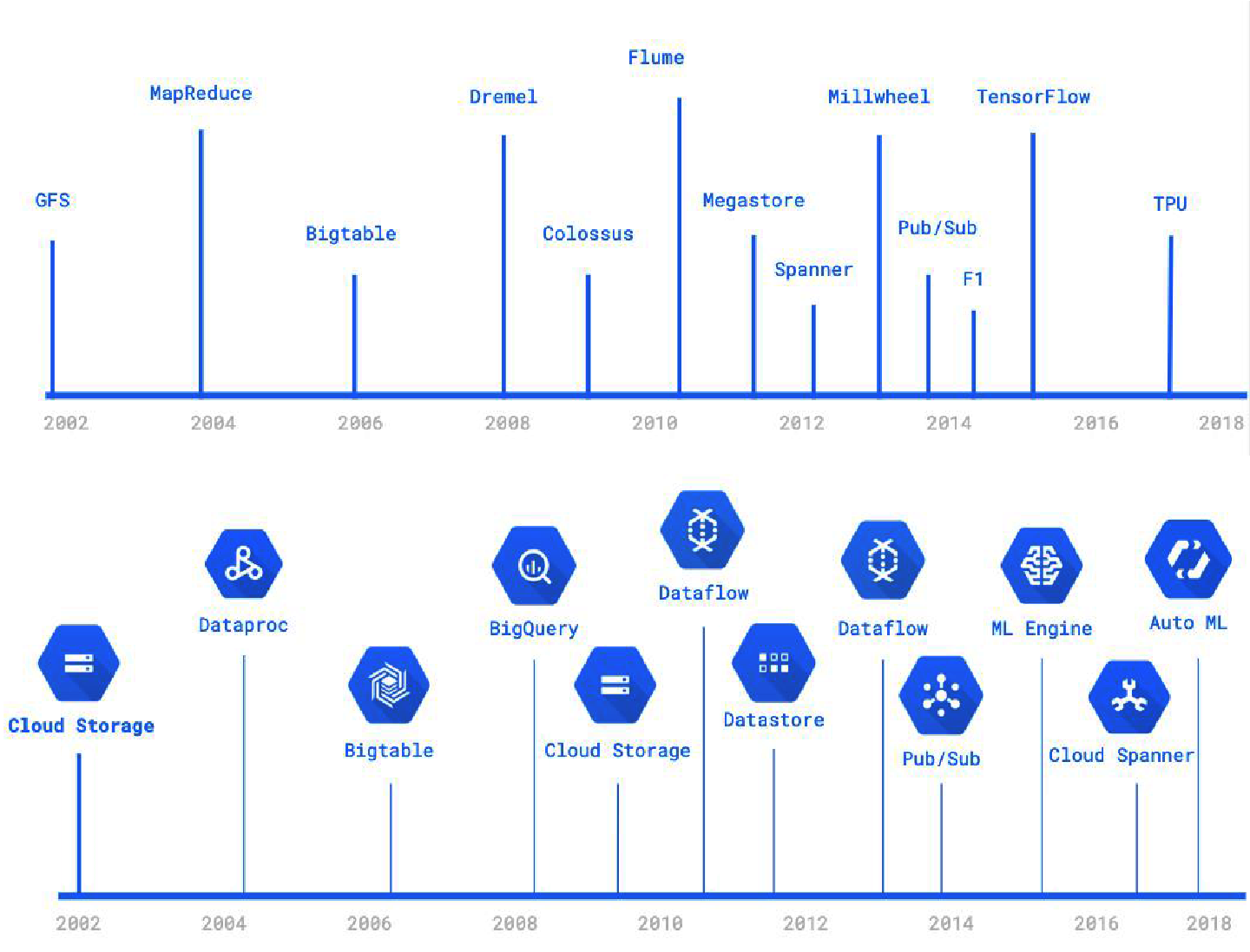

Serverless and Powerful

In many enterprises, lack of data is not the problem; the problem is the lack of tools to process data at scale. Google’s mission of organizing the world’s information has meant that Google needed to invent data processing methods, including methods to secure and govern the data being processed. Many of these research tools have been hardened through production use at Google and are available on Google Cloud as serverless tools (see Figure 1-14). Equivalents exist on other public clouds as well. For example, Aurora Serverless on Amazon Web Services (AWS) and Microsoft’s Azure Cosmos DB offer serverless databases; S3 on AWS and Azure Blob Storage are the equivalents of Google Cloud Storage. Similarly, Lambda on AWS and Azure Functions provide the ability to carry out stateless serverless data processing. Elastic MapReduce (EMR) on AWS and HDInsight on Azure are the equivalents of Google Cloud Dataproc. At the time of writing, serverless stateful processing (Dataflow on Google Cloud) is not yet available on other public clouds, but this will no doubt be remedied over time. These sorts of capabilities are cost-prohibitive to implement on-premises, because implementing serverless tools efficiently requires evening out load and traffic spikes across thousands of workloads.

Figure 1-14. Many of the data-processing techniques invented at Google (top panel; see also http://research.google.com/pubs/papers.html) exist as managed services on Google Cloud (bottom panel).

Labeled Resources

Public cloud providers offer granular resource labeling and tagging in order to support a variety of billing considerations. For example, the organization that owns the data in a data mart may not be the one carrying out (and therefore paying for) the compute. These same sophisticated labeling and tagging features give you a foundation on which to implement regulatory compliance.

These capabilities might include the ability to discover, label, and catalog items (ask your cloud provider whether this is the case). It is important to be able to label resources, not just in terms of identity and access management but also in terms of attributes, such as whether a specific column is considered PII in certain jurisdictions. Then it is possible to apply consistent policies to all such fields everywhere in your enterprise.

Security in a Hybrid World

The last point, having consistent policies that are easy to apply, is important. Consistency and a single pane of glass for security are key benefits of hosting your enterprise software infrastructure in the cloud. However, such an all-or-nothing approach is unrealistic for most enterprises. If your business operates equipment “on the edge” (handheld devices, video cameras, point-of-sale registers, etc.), it is often necessary to have some of your software infrastructure there as well. Sometimes, as with voting machines, regulatory compliance might require physical control of the equipment being used. Your legacy systems may not be ready to take advantage of the separation of compute and storage that the cloud offers. In these cases, you’d like to continue to operate on-premises. Systems whose components live in a public cloud and in one other place (a second public cloud, the edge, or on-premises) are termed hybrid cloud systems.

It is possible to greatly expand the purview of your cloud security posture and policies by employing solutions that allow you to control both on-premises and cloud infrastructure using the same tooling. For example, if you have audited an on-premises application and its use of data, it is easier to approve that identical application running in the cloud than it is to reaudit a rewritten application. The cost of entry to this capability is containerizing your applications, a cost that might be well worth paying for the governance benefits alone.

Summary

When discussing a successful data governance strategy, you must consider more than just the data architecture/data pipeline structure or the tools that perform “governance” tasks. A truly successful governance strategy must also address the actual humans behind the governance tools and the “people processes” put into place. In Chapters 2 and 3, we discuss these ingredients of data governance.

In Chapter 4, we take an example corpus of data and consider how data governance is carried out over the entire life cycle of that data: from ingest to preparation and storage, to incorporation into reports, dashboards, and machine learning models, and on to updates and eventual deletion. A key point here is that data quality is an ongoing concern: new data-processing methods are invented, and business rules change. Chapter 5 addresses how to handle the ongoing improvement of data quality.

By 2025, more than 25% of enterprise data is expected to be streaming data. In Chapter 6, we address the challenges of governing data that is on the move. Governing data in flight involves governing it at the source and at the destination, as well as any aggregations and manipulations carried out in flight. Data governance also has to address the challenges of late-arriving data and what it means for the correctness of calculations if storage systems are only eventually consistent.

In Chapter 7, we delve into data protection and the solutions available for authentication, security, backup, and so on. The best data governance is of no use if monitoring is not carried out and leaks, misuse, and accidents are not discovered early enough to be mitigated. Monitoring is covered in Chapter 8.

Finally, in Chapter 9, we bring together the topics in this book and cover best practices in building a data culture: a culture in which both the user and the opportunity are respected.

One question we often get asked is how Google does data governance internally. In Appendix A, we use Google as an example (one that we know well) of a data governance system, and point out the benefits and challenges of the approaches that Google takes and the ingredients that make it all possible.

1 Leandro DalleMule and Thomas H. Davenport, “What’s Your Data Strategy?” Harvard Business Review (May–June 2017): 112–121.

2 This application is the Meteorological Phenomena Identification Near the Ground (mPING) Project, developed through a partnership between NSSL, the University of Oklahoma, and the Cooperative Institute for Mesoscale Meteorological Studies.

3 It was on the radio, but you can read about it on NPR’s All Tech Considered blog.

4 David Reinsel, John Gantz, and John Rydning, “The Digitization of the World: From Edge to Core”, November 2018.

5 “The Best Jobs in the US: 2019”, Indeed, March 19, 2019.

6 Reinsel et al. “The Digitization of the World.”

8 Reinsel et al. “The Digitization of the World.”

9 Aarian Marshall and Alex Davies, “Uber’s Self-Driving Car Saw the Woman It Killed, Report Says”, Wired, May 24, 2018.

10 Jonathan Shaw, “Artificial Intelligence and Ethics”, Harvard Magazine, January–February 2019, 44-49, 74.

11 “Information on the Capital One Cyber Incident”, Capital One, updated September 23, 2019; Brian Krebs, “What We Can Learn from the Capital One Hack”, Krebs on Security (blog), August 2, 2019.

12 See, for example, the book Dark Data: Why What You Don’t Know Matters by David Hand (Princeton University Press).

13 David Winkler, “AIS Data Quality and the Authoritative Vessel Identification Service (AVIS)” (PowerPoint presentation, National GMDSS Implementation Task Force, Arlington, VA, January 10, 2012).
