Chapter 1. The Role of a Modern Data Warehouse in the Age of AI

Actors: Run Business, Collect Data

Applications might rule the world, but data gives them life. Nearly 7,000 new mobile applications are created every day, helping drive the world’s data growth and thirst for more efficient analysis techniques like machine learning (ML) and artificial intelligence (AI). According to IDC,1 AI spending will grow 55% over the next three years, reaching $47 billion by 2020.

Applications Producing Data

Application data is shaped by the interactions of users or actors, leaving fingerprints of insights that can be used to measure processes, identify new opportunities, or guide future decisions. Over time, each event, transaction, and log is collected into a corpus of data that represents the identity of the organization. The corpus is an organizational guide for operating procedures, and serves as the source for identifying optimizations or opportunities, resulting in saving money, making money, or managing risk.

Enterprise Applications

Most enterprise applications collect data in a structured format, embodied by the design of the application database schema. The schema is designed to efficiently deliver scalable, predictable transaction-processing performance. The transactional schema in a legacy database often limits the sophistication and performance of analytic queries. Actors have access to embedded views or reports of data within the application to support recurring or operational decisions. Traditionally, sophisticated insights that discover trends, predict events, or identify risk have required extracting application data to dedicated data warehouses for deeper analysis. The dedicated data warehouse approach offers rich analytics without affecting the performance of the application. Although modern data processing technology has, to some degree and in certain cases, undone the strict separation between transactions and analytics, data analytics at scale still requires an analytics-optimized database or data warehouse.

Operators: Analyze and Refine Operations

Actionable decisions derived from data can be the difference between a leading and a lagging organization. But identifying the right metrics to drive a cost-saving initiative or identify a new sales territory requires the data processing expertise of a data scientist or analyst. For the purposes of this book, we will periodically use the term operators to refer to the data scientists and engineers who are responsible for developing, deploying, and refining predictive models.

Targeting the Appropriate Metric

Identifying the appropriate performance metric typically requires an operator to work through a series of trial-and-error steps. The metric can be a distinct value or a range of values that supports a potential event. The analysis process follows the same general set of steps each time, including data selection, data preparation, and statistical queries. For predicting events, a model is defined and scored for accuracy. The analysis is performed offline, which avoids disrupting the business application and provides an environment in which to test and sample. Several tools can simplify and automate the process, but the process itself remains the same. Also, advances in database technology, algorithms, and hardware have reduced the time required to identify accurate metrics.
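The steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `records` data, the churn scenario, and the helper names (`select`, `prepare`, `fit_threshold`, `accuracy`) are hypothetical and do not come from any particular tool, and the "model" is a deliberately simple threshold rather than a real ML algorithm.

```python
from statistics import mean

# Hypothetical application records: (order_value, days_inactive, churned).
records = [
    (120.0, 5, False),
    (35.0, 40, True),
    (70.0, None, False),  # incomplete row, dropped during preparation
    (80.0, 9, False),
    (20.0, 55, True),
    (60.0, 30, True),
    (150.0, 3, False),
    (45.0, 48, True),
]

def select(rows):
    """Data selection: keep only the fields the metric needs."""
    return [(days, churned) for _value, days, churned in rows]

def prepare(rows):
    """Data preparation: drop rows with missing values."""
    return [row for row in rows if all(v is not None for v in row)]

def fit_threshold(rows):
    """Statistical query plus model definition: use the midpoint between
    the mean inactivity of churned and retained customers as a cutoff."""
    churned = mean(days for days, label in rows if label)
    retained = mean(days for days, label in rows if not label)
    return (churned + retained) / 2

def accuracy(threshold, rows):
    """Score the model: fraction of rows where the cutoff predicts the label."""
    hits = sum((days > threshold) == label for days, label in rows)
    return hits / len(rows)

clean = prepare(select(records))
train, test = clean[:4], clean[4:]   # hold out the later rows for scoring
threshold = fit_threshold(train)
score = accuracy(threshold, test)
print(f"threshold={threshold}, accuracy={score}")
```

In practice each step is repeated with different fields, samples, and model definitions until the score is acceptable, which is the trial-and-error cycle the text describes.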

Accelerating Predictions with ML

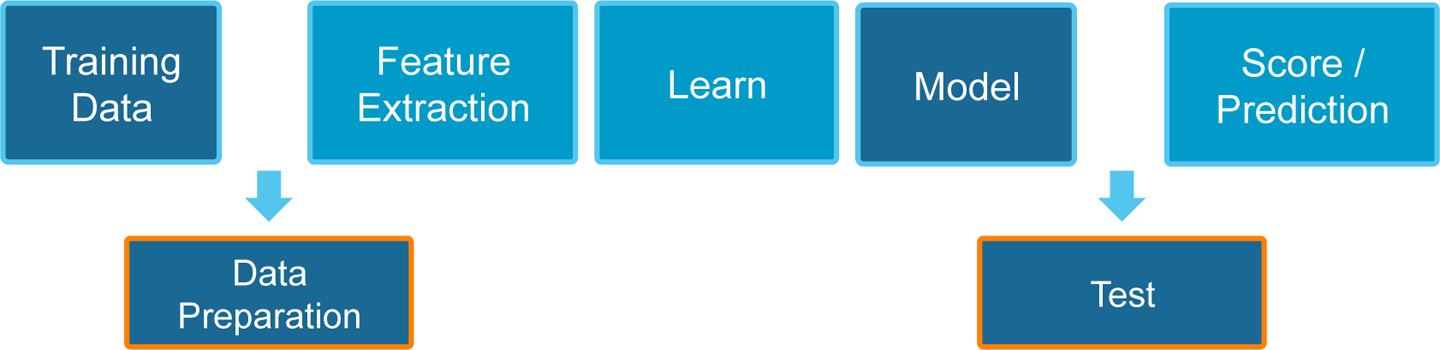

Even though operational measurements can optimize the performance of an organization, often the promise of predicting an outcome or identifying a new opportunity can be more valuable. Predictive metrics require training models to “learn” a process and gradually improve the accuracy of the metric. The ML process typically follows a workflow that roughly resembles the one shown in Figure 1-1.

Figure 1-1. ML process model

The iterative process of predictive analytics requires operators to work offline, typically using a sandbox or datamart environment. For analytics that are used for long-term planning or strategy decisions, the traditional ML cycle is appropriate. However, for operational or real-time decisions that might take place several times a week or day, predictive analytics has been difficult to implement. We can use modern data warehouse technologies to inject live predictive scores in real time by using a connected process between actors and operators called a machine learning feedback loop.

The Modern Data Warehouse for an ML Feedback Loop

Using historical data and a predictive model to inform an application is not a new approach. A challenge of this approach involves ongoing training of the model to ensure that predictions remain accurate as the underlying data changes. Data science operators mitigate this with ongoing data extractions, sampling, and testing in order to keep models in production up to date. The offline process can be time consuming. New approaches to accelerate this offline and manual process automate retraining and form an ML feedback loop. As database and hardware performance accelerate, model training and refinement can occur in parallel using the most recent live application data. This process is made possible with a modern data warehouse that reduces data movement between the application store and the analysis process. A modern data warehouse can support efficient query execution, along with delivering high-performance transactional functionality to keep the application and the analysis synchronized.
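To make the idea of reduced data movement concrete, here is a minimal sketch in which the application writes and the analysis reads go against the same store. It assumes an in-memory SQLite database purely as a stand-in (SQLite is not a data warehouse), and the `events` table, its columns, and the functions `record_event` and `current_threshold` are hypothetical names chosen for illustration.

```python
import sqlite3

# Stand-in for a store that serves both transactions and analytics.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE events ("
    "customer_id INTEGER, days_inactive INTEGER, churned INTEGER)"
)

def record_event(customer_id, days_inactive, churned):
    """Transactional path: the application writes one event."""
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO events VALUES (?, ?, ?)",
            (customer_id, days_inactive, int(churned)),
        )

def current_threshold():
    """Analytic path: recompute the model parameter from live rows,
    with no extract step in between."""
    churned_avg, retained_avg = conn.execute(
        "SELECT AVG(CASE WHEN churned = 1 THEN days_inactive END), "
        "       AVG(CASE WHEN churned = 0 THEN days_inactive END) "
        "FROM events"
    ).fetchone()
    if churned_avg is None or retained_avg is None:
        return None  # not enough data for both classes yet
    return (churned_avg + retained_avg) / 2

for event in [(1, 5, False), (2, 40, True), (3, 9, False), (4, 55, True)]:
    record_event(*event)
before = current_threshold()

record_event(5, 15, True)      # a new application event arrives
after = current_threshold()    # the analysis sees it immediately
print(before, after)
```

The point of the sketch is only that the newest event influences the next analytic query without a separate extraction; a production system would need a store built to run both workloads at scale.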

Dynamic Feedback Loop Between Actors and Operators

As application data flows into the database, subtle changes might occur, resulting in a discrepancy between the original model and the latest dataset. This change happens because the model was designed under conditions that might have existed several weeks, months, or even years before. As users and business processes evolve, the model requires retraining and updating. A dynamic feedback loop can orchestrate continuous model training and score refinement on live application data to ensure the analysis and the application remain up to date and accurate. An added advantage of an ML feedback loop is the ability to apply predictive models to events that were previously difficult to predict because of high data cardinality and the resources required to develop a model.
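A feedback loop of this kind can be sketched as a small class that scores each incoming event, tracks how often recent predictions were right, and retrains when accuracy falls. This is a simplified illustration, not the book's implementation: the `FeedbackLoop` class, its parameters (`window`, `min_accuracy`), the threshold model, and the sample stream are all assumptions made for the example.

```python
from collections import deque
from statistics import mean

class FeedbackLoop:
    """Hypothetical loop: score live events, retrain when accuracy drifts."""

    def __init__(self, threshold, window=6, min_accuracy=0.7):
        self.threshold = threshold
        self.min_accuracy = min_accuracy
        self.recent = deque(maxlen=window)  # latest (feature, label) pairs
        self.hits = deque(maxlen=window)    # whether each prediction was right
        self.retrain_count = 0

    def score(self, feature):
        """Predict the event (True) when the feature exceeds the cutoff."""
        return feature > self.threshold

    def observe(self, feature, label):
        """Record the actual outcome and retrain if the model has drifted."""
        self.hits.append(self.score(feature) == label)
        self.recent.append((feature, label))
        window_full = len(self.hits) == self.hits.maxlen
        if window_full and sum(self.hits) / len(self.hits) < self.min_accuracy:
            self.retrain()

    def retrain(self):
        """Refit the cutoff on the most recent live data only."""
        positives = [f for f, label in self.recent if label]
        negatives = [f for f, label in self.recent if not label]
        if positives and negatives:
            self.threshold = (mean(positives) + mean(negatives)) / 2
            self.hits.clear()
            self.retrain_count += 1

# The model was built when the event only happened above roughly 27 days.
loop = FeedbackLoop(threshold=27.25)

# Behavior has since shifted: the event now occurs after far fewer days.
stream = [(15, True), (20, True), (5, False), (18, True), (8, False), (22, True)]
for feature, label in stream:
    loop.observe(feature, label)

print(loop.threshold, loop.retrain_count)
```

With the original cutoff only two of the six recent predictions are correct, so the loop refits on the live window and the cutoff moves to match current behavior. A real deployment would use a proper model and guard against retraining on noisy or mislabeled data.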

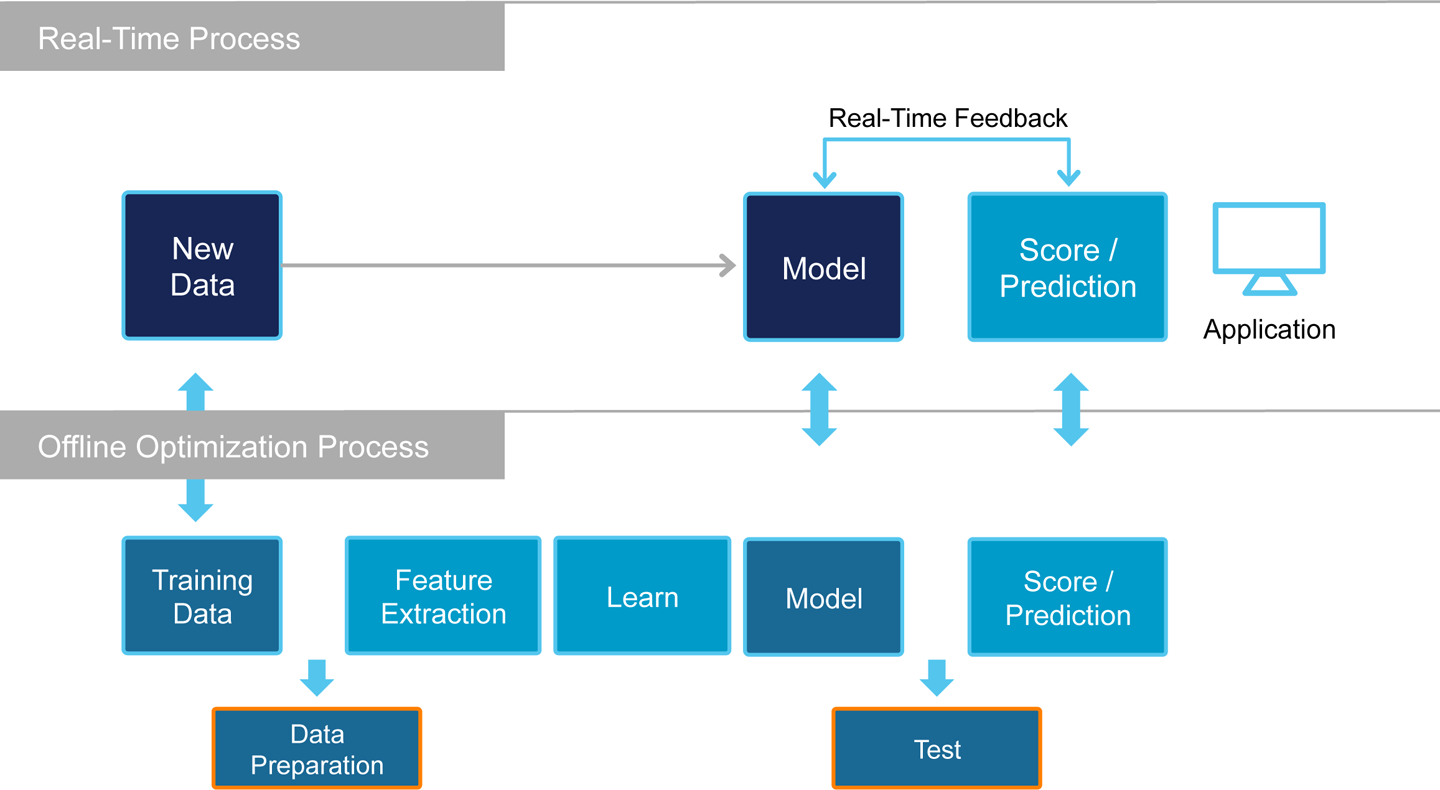

Figure 1-2 describes an operational ML process that is supervised in context with an application.

Figure 1-2. The operational ML process

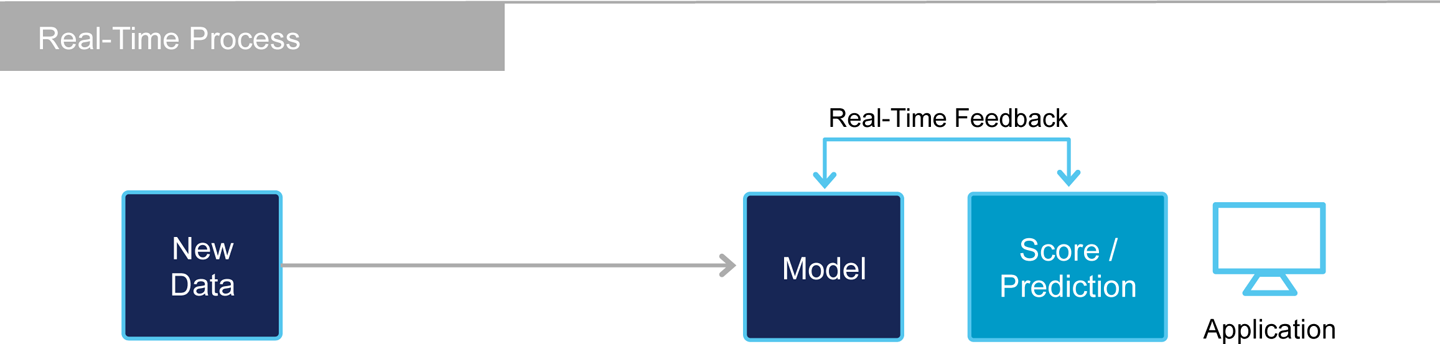

Figure 1-3 shows the use of a modern data warehouse that is capable of driving live data directly to a model for immediate scoring for the application to consume. The ML feedback loop requires specific operational conditions, as we will discuss in more depth in Chapter 2. When the operational conditions are met, the feedback loop can continuously process new data for model training, scoring, and refinement, all in real time. The feedback loop delivers accurate predictions on changing data.

Figure 1-3. An ML feedback loop

1 For more information, see the Worldwide Semiannual Cognitive/Artificial Intelligence Systems Spending Guide.
