Chapter 4. Operational Visibility

Visibility (often referred to as monitoring) is the cornerstone of the craft of database reliability engineering. Operational visibility means that we have awareness of the working characteristics of a database service due to the regular measuring and collection of data points about the various components. Why is this important? Why do we need operational visibility? Here are just some of the reasons:

- Break/fix and alerting

We need to know when things break, or are about to break, so that we can fix them to avoid violating our Service-Level Objectives (SLOs).

- Performance and behavior analysis

Itâs important to understand the latency distribution in our applications, including outliers, and we need to know the trends over time. This data is critical to understanding the impact of new features, experiments, and optimization.

- Capacity planning

Being able to correlate user behavior and application efficiency to real resources (CPU, network, storage, throughput, memory) is critical to ensuring that you never encounter a lack of capacity at a critical business moment.

- Debugging and postmortems

Moving fast means things do break. Good operational visibility gives you the ability to rapidly identify failure points and optimization points to mitigate future risk. Human error is never a root cause, but systems can always be improved upon and made to be more resilient.

- Business analysis

Understanding how your business functionality is being utilized can be a leading indicator of issues, but it is also critical for everyone to see how people are using your features and how much value versus cost is being driven.

- Correlation and causation

By having events in the infrastructure and application register themselves in your operational visibility stack, you can rapidly correlate changes in workload, behavior, and availability. Examples of these events are application deployments, infrastructure changes, and database schema changes.

Pretty much every facet of your organization requires true operational visibilityâOpViz. Our goal in this chapter is to help you to understand observability in the architectures with which you will be working. Although there is no one set of tools we espouse, there are principles, a general taxonomy, and usage patterns to learn. We present this via numerous case studies and example approaches. First, letâs consider the evolution of OpViz from traditional approaches to those utilized today.

Operational visibility is a big deal! We need some rules on how we design, build, and utilize this critical process.

The New Rules of Operational Visibility

Modern operational visibility assumes that data stores are distributed, often massively. It recognizes that collection, and even presentation of data, are not as crucial as the analysis. It always asksâand hopefully elicits rapid answering ofâtwo questions: âHow is this impacting my SLOs?â and âHow is this broken, and why?â In other words, rather than treating your OpViz stack as a set of utilities to be relegated to the Ops team, you must design, build, and maintain it as a business intelligence (BI) platform. This that you must treat it the same way you would a data warehouse or big data platform. The rules of the game have changed to reflect this.

Treat OpViz Systems Like BI Systems

When designing a BI system, you begin by thinking about the kinds of questions your users will be asking and building out from there. Consider your users needs for data latency (âHow quickly is data available?â), data resolution (âHow deep down can the user drill?â), and data availability. In other words, you are defining SLOs for your OpViz service. (Refer to Chapter 2.)

The hallmark of a mature OpViz platform is that it can provide not only the state of the infrastructure running the application, but also the behavior of the applications running on that infrastructure. Ultimately, this should also be able to show anyone how the business is doing and how that is being affected by the infrastructure and applications on which the business is relying. With that in mind, the OpViz platform must support operations and database engineers, software engineers, business analysts, and executives.

Distributed Ephemeral Environments Trending to the Norm

Weâve already discussed the fact that our database instance life cycles are trending down with the adoption of virtual infrastructures. Even though they are still much longer lived than other infrastructure components, we still must be able to gather metrics for services consisting of short-lived components that are aggregated rather than individual database hosts.

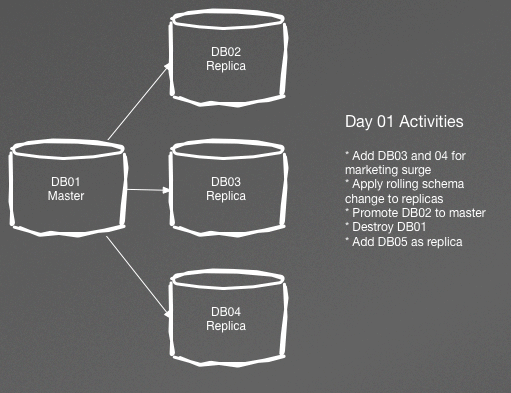

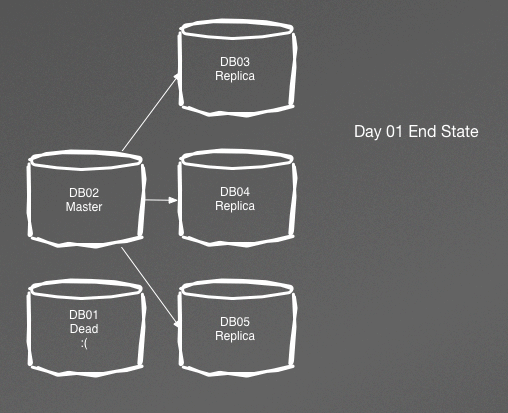

Figure 4-1 demonstrates a fairly stable master/replica setup for a relational datastore in which numerous activities can occur in one day. By the end of the day, we can see a completely new setup, as illustrated in Figure 4-2.

Figure 4-1. Typical master/replica setup

Figure 4-2. End of day 1

This kind of dynamic infrastructure requires us to store metrics based on roles rather than hostnames or IPs. So, instead of storing a set of metrics as DB01, we would add metrics to the âmasterâ role, allowing us to see all master behavior even after switching to a new master. Service discovery systems do a great job of maintaining abstraction above the dynamic portions of infrastructure to facilitate this.

Store at High Resolutions for Key Metrics

As reviewed in Chapter 2, high resolution is critical for understanding busy application workloads. At a minimum, anything related to your SLOs should be kept at one-second or lower sampling rates to ensure that you understand what is going on in the system. A good rule of thumb is to consider whether the metric has enough variability to affect your SLOs in the span of 1 to 10 seconds and to base granularity on that.

For instance, if you are monitoring a constrained resource, such as CPU, you would want to collect this data at a one-second or smaller sample given that CPU queues can build up and die down quite quickly. With latency SLOs in the milliseconds, this data must be good enough to see if CPU saturation is the reason your application latency is being affected. Database connection queues are another area that can be missed without very frequent sampling.

Conversely, for infrequently changing items such as disk space or service availability, you can measure these in the one-minute or higher sampling rates without losing data. High sample rates consume a lot of resources, and you should be judicious in using them. Similarly, you should probably keep less than five different sampling rates to maintain simplicity and structure in your OpViz platform.

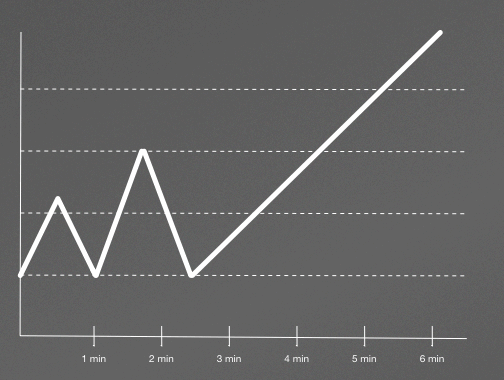

For an example of the impacts of a sampling rate that is too long, letâs consider the graph in Figure 4-3.

Figure 4-3. Real workload showing spikes

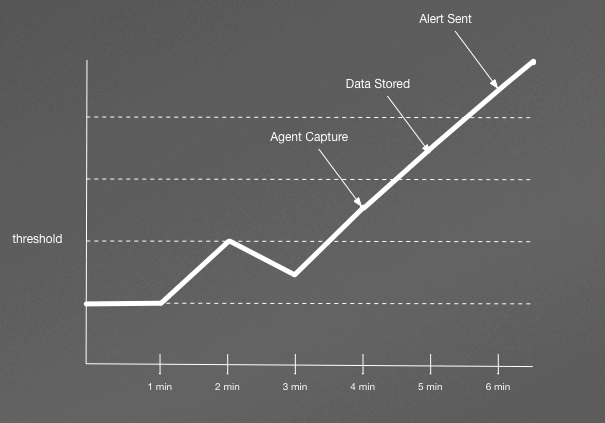

Figure 4-3 shows two spikes followed by a long ascension. Now, if we are sampling this metric at one-minute intervals, the graph would look like Figure 4-4.

Notice now that we donât see even a single spike, and the second graph looks much more benign. In fact, our alerting threshold is not even exceeded until minute three. Assuming a one-minute schedule for storage and for alert rules checking, we wouldnât even send an alert to an operator until 7.5 minutes after the rule was violated!

Figure 4-4. Workload visualized via one-minute sampling.

Keep Your Architecture Simple

It is not unusual for a growing platform to have 10,000 or more metrics being checked at various levels of granularity for any number of instances/servers that are going in and out of service in the infrastructure at any time. Your goal is to be able to rapidly answer the aforementioned questions, which means that you must continually push for reducing the signal-to-noise ratio. This means being ruthless in the amount of data you allow into your system, particularly at the human interaction points, such as presentation and alerting.

âMonitor everythingâ has been the watch-cry for quite a while and was a reaction to environments in which monitoring was sparse and ad hoc. The truth, though, is that distributed systems and multiservice applications create too many metrics. Early stage organizations have neither the money nor the time to manage this amount of monitoring data. Larger organizations should have the knowledge to focus on what is critical to their systems.

Simplicity and signal amplification also includes standardization. This means standardizing templates, resolutions, retentions, and any other knobs and features presented to engineers. By doing so, you ensure that your system is easy to understand and thus user friendly for answering questions and identifying issues.

Remembering these four rules will help keep you on track with designing and building incredibly valuable and useful monitoring systems. If you find yourself violating them, ask yourself why. Unless you have a really good answer, consider going back to the foundation.

An OpViz Framework

We could write an entire book on this stuff. As you begin to gather and prepare the appropriate data to go into the OpViz platform for you to do your job, you should be able to recognize a good platform and advocate for a better platform. This is our goal for this section.

Letâs think of our OpViz platform as a great big distributed I/O device. Data is sent in, routed, structured, and eventually comes out the other side in useful ways that help you to understand your systems better, to identify behaviors caused by broken or soon to be broken components, and to meet your SLOs. Letâs take a closer look at the process:

-

Data is generated by agents on clients and then sent to a central collector for that datatype (i.e., metrics to Sensu or CollectD, events to Graphite, logs to Logstash or Splunk):

Occasionally a centralized monitoring system (like Sensu or Nagios) will execute checks via a pull method in addition to the aforementioned push method.

A benefit of distributed checkingâthe app generates checks and forwards themâis that there is a significantly smaller amount of configuration management required than in tightly coupled systems like Nagios for which you must configure the agent and the monitoring server together.

The collectors store data (in systems like Graphite, InfluxDB, or ElasticSearch) or forward to event routers/processors (such as Riemann).

Event routers uses heuristics to send data to the right places.

Data output includes long-term storage, graphs, alerts, tickets, and external calls.

These outputs are where we get the true value of our OpViz stack.

Data In

To create outputs, we require good inputs. Wherever possible, use data already generated by your environments rather than artificial probes. When you simulate a user by sending a request into the system, it is called blackbox monitoring. Blackbox monitoring is sending âcanaryâ users, or watching the inputs and outputs from the internet edge. Blackbox monitoring can be effective if you have low traffic periods or items that just donât run frequently enough for you to monitor. But, if you are generating sufficient data, getting real metrics, aka whitebox monitoring, is infinitely more appealing. Whitebox testing involves knowing a lot about your application, and at its most specific, includes instrumenting the internals of the application. Great tools for this include AppDynamics, NewRelic, or Honeycomb. With tools such as this you can trace the flow of a single user through the application all the way to the database.

One benefit of this approach is that anything creating data becomes an agent. A centralized, monolithic solution that is generating checks and probes will have challenges scaling as you grow. But with whitebox testing, youâve distributed this job across your entire architecture. This kind of architecture also allows new services and components to easily register and deregister with your collection layer, which is a good thing based on our OpViz rules. That being said, there are still times when having a monitoring system that can perform remote executions as a pull can be valuable, such as checking to see whether a service is up, monitoring to see whether replication is running, or checking to see whether an important parameter is enabled on your database hosts.

OK, so we are looking to send all of this valuable data to our OpViz platform. What kind of data are we talking about anyway?

Telemetry/Metrics

Ah metrics! So diverse. So ubiquitous. A metric is the measurement of a property that an application or a component of your infrastructure possesses. Metrics are observed periodically, creating a time-series that contains the property or properties, the timestamp, and the value. Some properties that might apply include the host, service, or datacenter. The true value of this data comes in observing it over time through visualizations such as graphs.

Metrics are typically stored in four different ways:

- Counters

These are cumulative metrics that represent how many times a specific occurrence of something has occurred.

- Gauges

These are metrics that change in any direction, and indicate a current value, such as temperature, jobs in queue, or active locks.

- Histograms

A number of events broken up into configured buckets to show distribution.

- Summaries

This is similar to histogram but focused on proving counts over sliding windows of time.

Metrics often have mathematical functions applied to them to assist humans in deriving value from their visualizations. These functions create more value, but it is important to remember that they are derived data and that the raw data is just as critical. If youâre tracking means per minute but do not have the underlying data, you wonât be able to create means on larger windows, such as hours or days. The following are some of the functions:

Count

Sum

Average

Median

Percentiles

Standard Deviation

Rates of Change

Distributions

Visualizing Distributions

Visualizing a distribution is very valuable to look at the kind of data that is often generated in web architectures. This data is rarely distributed normally and often has long tails. It can be challenging to see this in normal graphs. But, with the ability to generate distribution maps over buckets of time, you enable new styles of visualization such as histograms over time and flame graphs that can really help a human operator visualize the workloads that are occurring in your systems.1

Metrics are the source for identifying symptoms of underlying issues, and thus are crucial to early identification and rapid resolution of any number of issues that might affect your SLOs.

Logs

A log is created for an event, so you can consider log events to be a subset of an event. Operating systems, databases, and applications all create logs during certain events. Unlike metrics, logs can provide additional data and context to something that has occurred. For instance, a database query log can tell you when a query was executed, important metrics about that query, and even the database user who executed it.

Data Out

So, data is flowing into our systems, which is nice and all but doesnât help us answer our questions or meet our SLOs, now does it? What should we be looking to create in this OpViz framework? Letâs examine this more closely:

- Alerts

An alert is an interrupt to a human that instructs him to drop what heâs doing and investigate a rules violation that caused the alert to be sent. This is an expensive operation and should be utilized only when SLOs are in imminent danger of violation.

- Tickets/tasks

Tickets and tasks are generated when work must be done, but there is not an imminent disaster. The output of monitoring should be tickets/tasks that go in engineer queues for work.

- Notifications

Sometimes you just want to record that an event has occurred to help create context for folks, such as when code deploy events are registered. Notifications will often go to a chat room, a wiki, or collaboration tool to make it visible without interrupting workflow.

- Automation

There are times when data, particularly utilization data, advises of the need for more or less capacity. Autoscaling groups can be called to modify resource pools in such cases. This is but one example of automation as an output of monitoring.

- Visualization

Graphs are one of the most common outputs of OpViz. These are collected into dashboards that suit the needs of a particular user community and are a key tool on which humans can perform pattern recognition.

Bootstrapping Your Monitoring

If you are like most rational people, you might be beginning to feel overwhelmed by all of these things that should be happening. That is normal! This is a good time to remind you that everything we build here is part of an iterative process. Start small, let things evolve, and add in more as you need it. Nowhere is this more true than in a startup environment.

As a brand new startup, you begin with zero. Zero metrics, zero alerting, zero visibilityâjust a bunch of engineers cranking out overly optimistic code. Many startups somehow end up with an instance somewhere in a public cloud that was a prototype or testbed and then it somehow turned into their master production database. Head? Meet desk!

Maybe you were just hired as the first Ops/database engineer at a young startup and youâre taking stock of what the software engineers have built around monitoring or visibility, and itâs effectivelyâ¦zero.

Sound familiar? If you have any experience with startups, it should. Itâs nothing to be ashamed of! This is how startup sausage gets made. A startup that began by building out an elaborate operational visibility ecosystem in advance of their actual needs would be a stupid startup. Startups succeed by focusing hard on their core product, iterating rapidly, aggressively seeking out customers, responding to customer feedback and production realities, and making difficult decisions about where to spend their precious engineering resources. Startups succeed by instrumenting elaborate performance visibility systems as soon as they need them, not before. Startups fail all the time but usually not because the engineers failed to anticipate and measure every conceivable storage metric in advance. What we need to begin with is a Minimum Viable Monitoring Set.

There are an infinite number of metrics that you can monitor between the database, system, storage, and various application layers. In the physiological needs state, you should be able to determine if your database is up or down. As you work toward fulfilling the âesteemâ state, you begin by monitoring other symptoms that you have identified that correlate with real problems, such as connection counts or lock percentages. One common progression looks like this:

Monitor if your databases are up or down (pull checks).

Monitor overall latency/error metrics and end-to-end health checks (push checks).

Instrument the application layer to measure latency/errors for every database call (push checks).

Gather as many metrics as possible about the system, storage, database, and app layers, regardless of whether you think they will be useful. Most operating systems, services, and databases will have plug-ins that are fairly comprehensive.

Create specific checks for known problems. For example, checks based on losing x percent of database nodes or a global lock percent that is too high (do this iteratively as well as proactively, see Chapter 3).

Sometimes you can take a shortcut to the âesteemâ level by plugging in third-party monitoring services like VividCortex, Circonus, HoneyComb, or NewRelic. But itâs kind of a hack if youâre storing these database metrics in a system separate from the rest of your monitoring. Storing in disparate systems makes it more challenging to correlate symptoms across multiple monitoring platforms. Weâre not saying this is bad or you shouldnât do this; elegant hacks can take you a really long way! But the âself-actualizationâ phase generally includes consolidating all monitoring feeds into a single source of truth.

Okay. Now that youâve safeguarded against your company going out of business when you lose a disk or an engineer makes a typo, you can begin asking yourself questions about the health of your service. As a startup, the key questions to ask yourself are: âIs my data safe?â âIs my service up?â and âAre my customers experiencing pain?â This is your minimum viable product monitoring set.

Is the Data Safe?

For any mission-critical data that you truly care about, you should avoid running with less than three live copies. Thatâs one primary and two-plus secondaries for leader-follower data stores like MySQL or MongoDB or a replication factor of three for distributed data stores like Cassandra or Hadoop. Because you never, ever want to find yourself in a situation in which you have a single copy of any data you care about, ever. This means that you need to be able to lose one instance while still maintaining redundancy, which is why three is a minimum number of copies, not two. Even when you are penny-pinching and worrying about your run rate every month as a baby startup, mission-critical data is not the appropriate place to cut those costs. (We discuss availability architecture in Chapter 5, Infrastructure Engineering.)

But not all data is equally precious! If you can afford to lose some data or if you could reconstruct the data from immutable logs if necessary, running with n + 1, (where n is the required number of nodes for normal activity) copies is perfectly ok. This is a judgment callâonly you can know how critical and how irreplaceable each dataset is for your company, and how tight your financial resources are. You also need backups, and you need to regularly validate that the backups are restorable and that the backup process is completing successfully. If you arenât monitoring that your backups are good, you cannot assume that your data is safe.

Is the Service Up?

End-to-end checks are the most powerful tool in your arsenal because they most closely reflect your customer experience. You should have a top-level health check that exercises not just the aliveness of the web tier or application tier, but all the database connections in the critical path. If your data is partitioned across multiple hosts, the check should fetch an object on each of the partitions, and it should automatically detect the full list of partitions or shards so that you do not need to manually add new checks any time you add more capacity.

Howeverâand this is importantâyou should have a simpler aliveness check for your load balancers to use that does not exercise all of your database connections. Otherwise, you can easily end up health-checking yourself to death.

Excessive Health Checking

Charity once worked on a system for which a haproxy health check endpoint did a simple SELECT LIMIT 1 from a mysql table. One day, they doubled the capacity of some stateless services, thus doubling the number of proxy servers running these health checks. Adding capacity to other systems accidentally took the entire site down by overloading the database servers with health checks. More than 95% of all database queries were those stupid health checks. Donât do that!

Speaking of lessons learned the hard way, you should always have some off-premises monitoringâif nothing else, an offsite health check for your monitoring service itself. It doesnât matter how amazing and robust your on-premises monitoring ecosystem is if your datacenter or cloud region goes down and takes your entire monitoring apparatus with it. Setting up an external check for each major product or service, as well as a health check on the monitoring service itself, is a good best practice.

Are the Consumers in Pain?

Okay, you are monitoring that your service is alive. The patient has a heartbeat. Good job!

But what if your latency subtly doubles or triples, or what if 10% of your requests are erroring in a way that cleverly avoids triggering your health check? What if your database is not writable but can be read from, or the replicas are lagging, which is causing your majority write concern to hang? What if your RAID array has lost a volume and is running in degraded mode, you have an index building, or you are experiencing hot spotting of updates to a single row?

Well, this is why systems engineering, and databases in particular, are so much fun. There are infinite ways your systems can fail, and you can probably only guess about five percent of them in advance. Yay!

This is why you should gradually develop a library of comprehensive high-level metrics about the health of the serviceâhealth checks, error rates, latency. Anything that materially affects and disrupts your customer experience. And then? Go work on something else for a while and see what breaks.

We are almost entirely serious. As discussed in Chapter 3, there is only so much to be gained by sitting around trying to guess how your service is going to break. You just donât have the data yet. You might as well go build more things, wait for things to break, and then pay a lot of attention when things actually begin failing.

Now that weâve provided a bootstrapping method and an evolution method, letâs breakdown what you should be measuring, with a focus on what you as the DBRE need.

Instrumenting the Application

Your application is the first place to begin. Although we can measure most things at the datastore layer, the first leading indicators of problems should be changes in user and application behavior. Between application instrumentation by your engineers and application performance management solutions (APM) such as New Relic and AppDynamics, you can get a tremendous amount of data for everyone in the organization:

You should already be measuring and logging all requests and responses to pages or API endpoints.

You should also be doing this to all external services, which includes databases, search indexes, and caches.

Any jobs or independent workflows that should be similarly monitored.

Any independent, reusable code like a method or function that interacts with databases, caches, and other datastores should be similarly instrumented.

Monitor how many database calls are executed by each endpoint, page, or function/method.

Tracking the data access code (such as SQL calls) called by each operation allows for rapid cross-referencing to more detailed query logs within the database. This can prove challenging with object-relational mapping systems (ORMs), for which SQL is dynamically generated.

SQL Comments

When doing SQL tuning, a big challenge is mapping SQL running in the database to the specific place in the codebase from which it is being called. In many database engines, you can add comments for information. These comments will show up in the database query logs. This is a great place to insert the codebase location.

Distributed Tracing

Tracing performance at all stages from the application to the datastore is critical for optimizing long-tail latency issues that can be difficult to capture. Systems like New Relic or Zipkin (open source) allow for distributed traces from application calls to the external services, such as your databases. A full transaction trace from the application to datastore should ideally give timing for all external service calls, not just the database query.

Tracing with full visibility through to the database can become a powerful arsenal in educating your software engineer (SWE) teams and creating autonomy and self-reliance. Rather than needing you to tell them where to focus, they are able to get the information themselves. As Aaron Morton at the Last Pickle says in his talk, âReplacing Cassandraâs Tracing with Zipkinâ:

Knowing in advance which tools create such positive cultural shifts is basically impossible to foretell, but Iâve seen it with Git and its practice of pull requests and stable master branches, and Iâve seen it with Grafana, Kibana, and Zipkin.

You can read more about this on The Last Pickleâs blog.

There are many components of an end-to-end call that can occur and be of interest to the DBRE. These include, but are not limited to, the following:

Establishing a connection to a database or a database proxy

Queuing for a connection in a database connection pool

Logging a metric or event to a queuing or message service

Creating a user ID from a centralized UUID service

Selecting a shard based on a variable (such as user ID)

Searching, invalidating, or caching at a cache tier

Compressing or encrypting data at the application layer

Querying a search layer

If a transaction has a performance âbudgetâ and the latency requirements are known, the staff responsible for every component are incentivized to work as a team to identify the most expensive aspects and make the appropriate investments and compromises to get there.

Events and Logs

It goes without saying that all application logs should be collected and stored. This includes stack traces. Additionally, there are numerous events that will occur that are incredibly useful to register with OpViz, such as the following:

Code deployments

Deployment time

Deployment errors

Application monitoring is a crucial first step, providing realistic looks at behavior from the userâs perspective, and is directly related to latency SLOs. These are the symptoms providing clues into faults and degradations within the environment. Now, letâs look at the supporting data that can help with root cause analysis and provisioning: host data.

Instrumenting the Server or Instance

Next is the individual host, real or virtual, on which the database instance resides. It is here that we can get all of the data regarding the operating system and physical resources devoted to running our databases. Even though this data is not specifically application/service related, it is valuable to use when youâve seen symptoms such as latency or errors in the application tier.

When using this data to identify causes for application anomalies, the goal is to find resources that are over or underutilized, saturated, or throwing errors. (USE, as Brendan Gregg defined in his methodology.) This data is also crucial for capacity planning for growth and performance optimization. Recognizing a bottleneck or constraint allows you to prioritize your optimization efforts to maximize value.

Distributed Systems Aggregation

Keep in mind that individual host data is not especially useful, other than for indicating that a host is unhealthy and should be culled from the herd. Rather, think about your utilization, saturation, and errors from an aggregate perspective for the pool of hosts performing the same function. In other words, if you have 20 Cassandra hosts, you are mostly interested in the overall utilization of the pool, the amount of waiting (saturation) that is going on, and any errors faults that are occurring. If errors are isolated to one host, it is time to remove that one from the ring and replace it with a new host.

On a Linux system, a good starting place for resources to monitoring in a linux environment includes the following:

CPU

Memory

Network interfaces

Storage I/O

Storage capacity

Storage controllers

Network controllers

CPU interconnect

Memory interconnect

Storage interconnect

Understanding Your Operating System

We cannot overemphasize just how much it is of value to dig deeply into the operating characteristics of your operating system. Although many database specialists leave this to system administrators, there is simply too tight of a relationship between database service levels and the operating system to not dive in. A perfect example of this is how Linux fills all of your memory with Page Cache, and thus the âFree Memoryâ gauge is virtually useless to monitor your memory usage. Pagescans per second becomes a much more useful metric in this case, which is not obvious without a deeper understanding of how Linux memory management works.

In addition to hardware resource monitoring, operating system software has a few items to track:

Kernel mutex

User mutex

Task capacity

File descriptors

If this is new to you, we suggest going to Brendan Greggâs USE page for Linux because it is incredibly detailed in regard to how to monitor this data. Its obvious that a significant amount of time and effort went into the data he presents.

Events and Logs

In addition to metrics, you should be sending all logs to an appropriate event processing system such as RSyslog or Logstash. This includes kernel, cron, authentication, mail, and general messages logs as well as process- or application-specific log to ingest, such as MySQL, or nginx.

Your configuration management and provisioning processes should also be registering critical events to your OpViz stack. Here is a decent starting point:

A host being brought into our out of service

Configuration changes

Host restarts

Service restarts

Host crashes

Service crashes

If you can get the preceding into your OpViz stack, you will be in great shape for understanding whatâs going on at the host- and operating-system levels of the stack. Now, letâs look at the databases themselves.

Instrumenting the Datastore

What do we monitor and track in our databases, and why? Some of this will depend on the kind of datastore. We focus here on areas that are generic enough to be universal, but specific enough to help you track to your own databases. We can break this down into four areas:

Datastore connection layer

Internal database visibility

Database objects

Database calls/queries

Each of these will get its own section, beginning with the datastore connection layer.

Datastore Connection Layer

We have discussed the importance of tracking the time it takes to connect to the backend datastore as part of the overall transaction. A tracing system should also be able to break out time talking to a proxy and time from the proxy to the backend as well. You can capture this via tcpdump and Tshark/Wireshark for ad hoc sampling if something like Zipkin is not available. You can automate this for occasional sampling or run it ad hoc.

If you are seeing latency and/or errors between the application and the database connection, you will require additional metrics to help identify causes. Taking the aforementioned USE method we recommended, letâs see what other metrics can assist us.

Utilization

Databases can support only a finite number of connections. The maximum number of connections is constrained in multiple locations. Database configuration parameters will direct the database to accept only a certain number of connections, setting an artificial top boundary to minimize overwhelming the host. Tracking this maximum as well as the actual number of connections is crucial because it might be set arbitrarily low by a default configuration.

Connections also open resources at the operating system level. For instance, PostgreSQL uses one Unix process per connection. MySQL, Cassandra, and MongoDB use a thread per connection. All of them use memory and file descriptors. So, there are multiple places we want to look at to understand connection behaviors:

Connection upper bound and connection count

Connection states (working, sleeping, aborted, and others)

Kernel-level Open file utilization

Kernel-level max processes utilization

Memory utilization

Thread pool metrics such as MySQL table cache or MongoDB thread pool utilization

Network throughput utilization

This should inform you as to whether you have a capacity or utilization bottleneck somewhere in the connection layer. If you are seeing 100% utilization and saturation is also high, this is a good indicator. But, low utilization combined by saturation is also an indicator of a bottleneck somewhere. High, but not full, utilization of resources is also often quite impactful to latency and could be causing latency as well.

Saturation

Saturation is often most useful when paired with utilization. If you are seeing a lot of waits for resources that are also showing 100% utilization, you are seeing a pretty clear capacity issue. However, if you are seeing waits/saturation without full utilization, there might be a bottleneck elsewhere that is causing the stack up. Saturation can be measured at these inflection points:

TCP connection backlog

Database-specific connection queuing, such as MySQL back_log

Connection timeout errors

Waiting on threads in the connection pools

Memory swapping

Database processes that are locked

Queue length and wait timeouts are crucial for understanding saturation. Any time you find connections or processes waiting, you have an indicator of a potential bottleneck.

Errors

With utilization and saturation, you can determine whether capacity constraints and bottlenecks are affecting the latency of your database connection layer. This is great information for deciding whether you need to increase resources, remove artificial configuration constraints, or make some architectural changes. Errors should also be monitored and used to help eliminate or identify faults and/or configuration problems. Errors can be captured as follows:

Database logs will provide error codes when database-level failures occur. Sometimes you have configurations with various degrees of verbosity. Make sure you have logging verbose enough to identify connection errors, but do be careful about overhead, particularly if your logs are sharing storage and IO resources with your database.

Application and proxy logs will also provide rich sources of errors.

Host errors discussed in the previous section should also be utilized here.

Errors will include network errors, connection timeouts, authentication errors, connection terminations, and much more. These can point to issues as varied as corrupt tables, reliance on DNS, deadlocks, auth changes, and so on.

By utilizing application latency/error metrics, tracing and appropriate telemetry on utilization, saturation, and specific error states, you should have the information you need to identify degraded and broken states at the database connection layer. Next, we will look at what to measure inside of the connections.

Internal Database Visibility

When we look inside of the database, we can see that there is a substantial increase in the number of moving parts, number of metrics, and overall complexity. In other words, this is where things start to get real! Again, letâs keep in mind USE. Our goal is to understand bottlenecks that might be affecting latency, constraining requests, or causing errors.

It is important to be able to look at this from an individual host perspective and in aggregate by role. Some databases, like MySQL, PostgreSQL, ElasticSearch, and MongoDB, have master and replica roles. Cassandra and Riak have no specific roles, but they are often distributed by region or zone. That too is important to aggregate by.

Throughput and Latency Metrics

How many and what kind of operations are occurring in the datastores? This data is a very good high-level view of database activity. As SWEs put in new features, these workloads will shift and provide good indicators of how the workload is shifting. Some examples of metrics to collect to understand these shifting workloads include the following:

Reads

Writes

Inserts

Updates

Deletes

Other Operations

Commits

Rollbacks

DDL statements

Other administrative tasks

When we discuss latency here, we are talking in the aggregate only, meaning averages. We will discuss granular and more informative query monitoring further in this section. Thus, you are getting no outliers in this kind of data, only very basic workload information.

Commits, Redo, and Journaling

Although the specific implementations will depend on the datastore, there are almost always a set of I/O operations involved in flushing data to disk. In MySQLâs InnoDB storage engine and in PostgreSQL, writes are changed in the buffer pool (memory) and operations are recorded in a redo log (or write-ahead log in PostgreSQL). Background processes will then flush this to disk while maintaining checkpoints for recovery. In Cassandra, data is stored in a memtable (memory), whereas a commit log is appended to. Memtables are flushed periodically to an SSTable. SSTables are periodically compacted, as well. Following are some metrics you might monitor:

Dirty buffers (MySQL)

Checkpoint age (MySQL)

Pending and completed compaction tasks (Cassandra)

Tracked dirty bytes (MongoDB)

(Un)Modified pages evicted (MongoDB)

log_checkpointsconfiguration (PostgreSQL)pg_stat_bgwriterview (PostgreSQL)

All checkpointing, flushing, and compaction are operations that have significant performance impacts on activity in the database. Sometimes, the impact is increased I/O, and sometimes it can be a full stop of all write operations while a major operation occurs. Gathering metrics here allows you to tune specific configurables to minimize the effects that will occur during such operations. So in this case, when we see latency increasing and see metrics related to flushing showing excessive background activity, we will be pointed toward tuning operations related to these processes.

Replication State

Replication is the copying of data across multiple nodes so that the data on one node is identical to another. It is a cornerstone of availability and read scaling as well as a part of disaster recovery and data safety. There are three replication states that can occur, however, that are not healthy and can lead to big problems if they are not monitored and caught. We discuss replication in detail in Chapter 10.

Replication latency is the first of the fault states. Sometimes, the application of changes to other nodes can slow down. This can be the result of network saturation, single-threaded applies that cannot keep up, or any number of other reasons. Occasionally, replication will never catch up during peak activity, causing the data to be hours old on the replicas. This is dangerous because stale data can be served, and if you are using this replica as a failover, you can lose data.

Most database systems have easily tracked replication latency metrics; you can see the difference between the timestamp on the master and the timestamp on the replica. In systems like Cassandra, with eventually consistent models, you are looking for backlogs of operations used to synchronize replicas after unavailability. For instance, in Cassandra, this is hinted handoffs.

Broken replication is the second of the fault states. In this case, the processes required to maintain data replication simply break due to any number of errors. Resolution requires rapid response facilitated by appropriate monitoring, followed by repair of the cause of the errors, and replication allowed to resume and catch up. In this case, you can monitor the state of replication threads.

The last error state is the most insidious: replication drift. In this case, data has lost synchronization, causing replication to be useless and potentially dangerous. Identifying replication drift for large datasets can be challenging and depends on the workloads and kind of data that you are storing.

For instance, if your data is relatively immutable and insert/read operations are the norm, you can run checksums on data ranges across replicas and then compare checksums to see if they are identical. You can do this in a rolling method behind replication, allowing for an easy safety check at the cost of extra CPU utilization on the database hosts. If you are doing a lot of mutations, however, this proves more challenging because you must either repeatedly run checksums on data that has already been reviewed or just do occasional samples.

Memory Structures

Data stores will maintain numerous memory structures in their regular operation. One of the most ubiquitous in databases is a data cache. Although it might have many names, the goal of this is to maintain frequently accessed data in memory rather than from disk. Other caches like this can exist, including caches for parsed SQL, connection caches, query result caches, and many more.

The typical metrics we use when monitoring these structures are as follows:

- Utilization

The overall amount of allocated space that is in use over time.

- Churn

The frequency that cached objects are removed to make room for other objects or because the underlying data has been invalidated.

- Hit ratios

The frequency with which cached data is used rather than uncached data. This can help with performance optimization exercises.

- Concurrency

Often these structures have their own serialization methods, such as mutexes, that can become bottlenecks. Understanding saturation of these components can help with optimization as well.

Some systems, like Cassandra, use Java Virtual Machines (JVMs) for managing memory, exposing whole new areas to monitor. Garbage collection and usage of the various object heap spaces are also critical in such environments.

Locking and Concurrency

Relational databases in particular utilize locks to maintain concurrent access between sessions. Locking allows mutations and reads to occur while guaranteeing that nothing might be changed by other processes. Even though this is incredibly useful, it can lead to latency issues as processes stack up waiting for their turn. In some cases, you can have processes timing-out due to deadlocks, for which there is simply no resolution for the locks that have been put in place but to roll back. The details of locking implementations are reviewed in Chapter 11.

Monitoring locks includes monitoring the amount of time spent waiting on locks in the datastore. This can be considered a saturation metric, and longer queues can indicate application and concurrency issues or underlying issues that affect latency, with sessions holding locks taking longer to complete. Monitoring rollbacks and deadlocks is also crucial because it is another indicator that applications are not releasing locks cleanly, causing waiting sessions to timeout and roll back. Rollbacks can be part of a normal, well-behaved transaction, but they often are a leading indicator that some underlying action is affecting transactions.

As discussed in the memory structures section earlier, there are also numerous points in the database that function as synchronization primitives designed to safely manage concurrency. These are generally either mutexes or semaphores. A mutex (Mutually Exclusive Lock) is a locking mechanism used to synchronize access to a resource such as a cache entry. Only one task can acquire the mutex. This means that there is ownership associated with mutexes, and only the owner can release the lock (mutex). This protects from corruption.

A semaphore restricts the number of simultaneous users of a shared resource up to a maximum number. Threads can request access to the resource (decrementing the semaphore) and can signal that they have finished using the resource (incrementing the semaphore). Examples of using mutexes/semaphores to monitor MySQLâs InnoDB storage engine are listed in Table 4-1.

| Name | Description |

|---|---|

| Mutex Os Waits (Delta) | The number of InnoDB semaphore/mutex waits yielded to the OS. |

| Mutex Rounds (Delta) | The number of InnoDB semaphore/mutex spin rounds for the internal sync array. |

| Mutex Spin Waits (Delta) | The number of InnoDB semaphore/mutex spin waits for the internal sync array. |

| Os Reservation Count (Delta) | The number of times an InnoDB semaphore/mutex wait was added to the internal sync array. |

| Os Signal Count (Delta) | The number of times an InnoDB thread was signaled using the internal sync array. |

| Rw Excl Os Waits (Delta) | The number of exclusive (write) semaphore waits yielded to the OS by InnoDB. |

| Rw Excl Rounds (Delta) | The number of exclusive (write) semaphore spin rounds within the InnoDB sync array. |

| Rw Excl Spins (Delta) | The number of exclusive (write) semaphore spin waits within the InnoDB sync array. |

| Rw Shared Os Waits (Delta) | The number of shared (read) semaphore waits yielded to the OS by InnoDB. |

| RW Shared Rounds (Delta) | The number of shared (read) semaphore spin rounds within the InnoDB sync array. |

| RW Shared Spins (Delta) | The number of shared (read) semaphore spin waits within the InnoDB sync array. |

| Spins Per Wait Mutex (Delta) | The ratio of InnoDB semaphore/mutex spin rounds to mutex spin waits for the internal sync array. |

| Spins Per Wait RW Excl (Delta) | The ratio of InnoDB exclusive (write) semaphore/mutex spin rounds to spin waits within the internal sync array. |

| Spins Per Wait RW Shared (Delta) | The ratio of InnoDB shared (read) semaphore/mutex spin rounds to spin waits within the internal sync array. |

Increasing values in these can indicate that your datastores are reaching concurrency limits on specific areas in the code base. You can resolve this via tuning configurables and/or by scaling out to maintain sustainable concurrency on a datastore to satisfy traffic requirements.

Locking and concurrency can truly kill even the most performant of queries once you start experiencing a tipping point in scale. By tracking and monitoring these metrics during load tests and in production environments, you can understand the limits of your database software and identify how your own applications must be optimized to scale up to large numbers of concurrent users.

Database Objects

It is crucial to understand what your database looks like and how it is stored. At the simplest level, this is an understanding of how much storage each database object and its associated keys/indexes takes. Just like filesystem storage, understanding the rate of growth and the time to reaching the upper boundary is as crucial, if not more, than the current storage usage.

In addition to understanding the storage and growth, monitoring the distribution of critical data is helpful. For instance, understanding the high and low bounds, means and cardinality of data is helpful to understanding index and scan performance. This is particularly important for integer datatypes and low cardinality character-based datatypes. Having this data at your SWE fingertips allows you and them to recognize optimizations on datatypes and indexing.

If you have sharded your dataset using key ranges or lists, understanding the distribution across shards can help ensure you are maximizing output on each node. These sharding methodologies allow for hot spots because they are not even distributions using a hash or modulus approach. Recognizing this will advise you and your team on needs to rebalance or reapproach your sharding models.

Database Queries

Depending on the database system you are working with, the actual data access and manipulation activity can prove to be highly instrumented or not at all. Trying to drink at the firehose of data that results in logging queries in a busy system can cause critical latency and availability issues to your system and users. Still, there is no more valuable data than this. Some solutions, such as Vivid Cortex and Circonus, have focused on TCP and wire protocols for getting the data they need, which dramatically reduces performance impact of query logging. Other methods include sampling on a less loaded replica, only turning logging on for fixed periods of time or only logging statements that execute slowly.

Regardless of all this, you want to store as much as possible about the performance and utilization of your database activity. This will include the consumption of CPU and IO, number of rows read or written, detailed execution times and wait times, and execution counts. Understanding optimizer paths, indexes used, and statistics around joining, sorting, and aggregating is also critical for optimization.

Database Asserts and Events

Database and client logs are a rich source of information, particularly for asserts and errors. These logs can give you crucial data that canât be monitored any other way, such as the following:

Connection attempts and failures

Corruption warnings and errors

Database restarts

Configuration changes

Deadlocks

Core dumps and stack traces

You can aggregate some of this data and push it to your metrics systems. You should treat others as events to be tracked and used for correlations.

Wrapping Up

Well, after all of that, I think we all need a break! Youâve come out of this chapter with a solid understanding of the importance of operational visibility, how to start an OpViz program, and how to build and evolve an OpViz architecture. You can never have enough information about the systems you are building and running. You can also quickly find the systems built to observe the services that have become a large part of your operational responsibilities! They deserve just as much attention as every other component of the infrastructure.

Get Database Reliability Engineering now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.