Chapter 1. The Evolution of Data Architectures

As a data engineer, you want to build large-scale data, machine learning, data science, and AI solutions that offer state-of-the-art performance. You build these solutions by ingesting large amounts of source data, then cleansing, normalizing, and combining the data, and ultimately presenting this data to the downstream applications through an easy-to-consume data model.

As the amount of data you need to ingest and process is ever increasing, you need the ability to scale your storage horizontally. Additionally, you need the ability to dynamically scale your compute resources to address processing and consumption spikes. Since you are combining your data sources into one data model, you not only need to append data to tables, but you often need to insert, update, or delete (i.e., MERGE or UPSERT) records based upon complex business logic. You want to be able to perform these operations with transactional guarantees, and without having to constantly rewrite large data files.

In the past, the preceding set of requirements was addressed by two distinct toolsets. The horizontal scalability and decoupling of storage and compute were offered by cloud-based data lakes, while relational data warehouses offered transactional guarantees. However, traditional data warehouses tightly coupled storage and compute into an on-premises appliance and did not have the degree of horizontal scalability associated with data lakes.

Delta Lake brings capabilities such as transactional reliability and support for UPSERTs and MERGEs to data lakes while maintaining the dynamic horizontal scalability and separation of storage and compute of data lakes. Delta Lake is one solution for building data lakehouses, an open data architecture combining the best of data warehouses and data lakes.

In this introduction, we will take a brief look at relational databases and how they evolved into data warehouses. Next, we will look at the key drivers behind the emergence of data lakes. We will address the benefits and drawbacks of each architecture, and finally show how the Delta Lake storage layer combines the benefits of each architecture, enabling the creation of data lakehouse solutions.

A Brief History of Relational Databases

In his historic 1970 paper,1 E.F. Codd introduced the concept of looking at data as logical relations, independent of physical data storage. This logical relation between data entities became known as a database model or schema. Codd’s writings led to the birth of the relational database. The first relational database systems were introduced in the mid-1970s by IBM and UBC.

Relational databases and their underlying SQL language became the standard storage technology for enterprise applications throughout the 1980s and 1990s. One of the main reasons behind this popularity was that relational databases offered a concept called transactions. A database transaction is a sequence of operations on a database that satisfies four properties: atomicity, consistency, isolation, and durability, commonly referred to by their acronym ACID.

Atomicity ensures that all changes made to the database are executed as a single operation. This means that the transaction succeeds only when all changes have been performed successfully. For example, when the online banking system is used to transfer money from savings to checking, the atomicity property will guarantee that the operation will only succeed when the money is deducted from my savings account and added to my checking account. The complete operation will either succeed or fail as a complete unit.

The consistency property guarantees that the database transitions from one consistent state at the beginning of the transaction to another consistent state at the end of the transaction. In our earlier example, the transfer of the money would only happen if the savings account had sufficient funds. If not, the transaction would fail, and the balances would stay in their original, consistent state.

Isolation ensures that concurrent operations happening within the database are not affecting each other. This property ensures that when multiple transactions are executed concurrently, their operations do not interfere with each other.

Durability refers to the persistence of committed transactions. It guarantees that once a transaction is completed successfully, it will result in a permanent state even in the event of a system failure. In our money transfer example, durability will ensure that updates made to both my savings and checking account are persistent and can survive a potential system failure.

Database systems continued to mature throughout the 1990s, and the advent of the internet in the mid-1990s led to an explosive growth of data and the need to store this data. Enterprise applications were using relational database management system (RDBMS) technology very effectively. Flagship products such as SAP and Salesforce would collect and maintain massive amounts of data.

However, this development was not without its drawbacks. Enterprise applications would store the data in their own, proprietary formats, leading to the rise of data silos. These data silos were owned and controlled by one department or business unit. Over time, organizations recognized the need to develop an enterprise view across these different data silos, leading to the rise of data warehouses.

Data Warehouses

While each enterprise application has some type of reporting built in, business opportunities were missed because of the lack of a comprehensive view across the organization. At the same time, organizations recognized the value of analyzing data over longer periods of time. Additionally, they wanted to be able to slice and dice the data over several cross-cutting subject matters, such as customers, products, and other business entities.

This led to the introduction of the data warehouse, a central relational repository of integrated, historical data from multiple data sources that presents a single integrated, historical view of the business with a unified schema, covering all perspectives of the enterprise.

Data Warehouse Architecture

A simple representation of a typical data warehouse architecture is shown in Figure 1-1.

Figure 1-1. Data warehouse architecture

When we look at the diagram in Figure 1-1, we start with the data source layer on the left. Organizations need to ingest data from a set of heterogeneous data sources. While the data from the organization’s enterprise resource planning (ERP) system(s) forms the backbone of the organizational model, we need to augment this data with the data from the operational systems running the day-to-day operations, such as human resources (HR) systems and workflow management software. Additionally, organizations might want to leverage the customer interaction data covered by their customer relationship management (CRM) and point of sale (POS) systems. In addition to the core data sources listed here, there is a need to ingest data from a wide array of external data sources, in a variety of formats, such as spreadsheets, CSV files, etc.

These different source systems each might have their own data format. Therefore, the data warehouse contains a staging area where the data from the different sources can be combined into one common format. To do this the system must ingest the data from the original data sources. The actual ingestion process varies by data source type. Some systems allow direct database access, and others allow data to be ingested through an API, while many data sources still rely on file extracts.

Next, the data warehouse needs to transform the data into a standardized format, allowing the downstream processes to access the data easily. Finally, the transformed data is loaded into the staging area. In relational data warehouses, this staging area is typically a set of flat relational staging tables without any primary or foreign keys or simple data types.

This process of extracting data, transforming it to a standard format, and loading it into the data warehouse is commonly referred to as extract, transform, and load (ETL). ETL tools can perform several other tasks on the ingested data before finally loading the data into the data warehouse. These tasks include the elimination of duplicate records. Since a data warehouse will be the one source of truth, we do not want it to contain multiple copies of the same data. Additionally, duplicate records prevent the generation of a unique key for each record.

ETL tools also allow us to combine data from multiple data sources. For example, one view of our customers might be captured in CRM systems, while other attributes are found in an ERP system. The organization needs to combine these different aspects into one comprehensive view of a customer. This is where we start to introduce a schema to the data warehouse. In our example of a customer, the schema will define the different columns for the customer table, which columns are required, the data type and constraints of each column, and so on.

Having canonical, standardized representations of columns, such as date and time, is important. ETL tools can ensure that all temporal columns are formatted using the same standard throughout the data warehouse.

Finally, organizations want to perform quality checks on the data in keeping with their data governance standards. This might include dropping low-quality data rows that do not meet this minimal standard.

Data warehouses are physically implemented on a monolithic physical architecture, made up of a single large node, combining memory, compute, and storage. This monolithic architecture forces organizations to scale their infrastructure vertically, resulting in expensive, often overdimensioned infrastructure, which was provisioned for peak user load, while being near idle at other times.

A data warehouse typically contains data that can be classified as follows:

- Metadata

Contextual information about the data. This data is often stored in a data catalog. It enables the data analysts to describe, classify, and easily locate the data stored in the data warehouse.

- Raw data

Maintained in its original format without any processing. Having access to the raw data enables the data warehouse system to reprocess data in case of load failures.

- Summary data

Automatically created by the underlying data management system. The summary data will automatically be updated as new data is loaded into the warehouse. It contains aggregations across several conformed dimensions. The main purpose of the summary data is to accelerate query performance.

The data in the warehouse is consumed in the presentation layer. This is where the consumers can interact with the data stored in the warehouse. We can broadly identify two large groups of consumers:

- Human consumers

These are the people within the organization who have a need to consume the data in the warehouse. These consumers can vary from knowledge workers, who need access to the data as an essential part of their job, to executives who typically consume highly summarized data, often in the form of dashboards and key performance indicators (KPIs).

- Internal or external systems

The data in a data warehouse can be consumed by a variety of internal or external systems. This can include machine learning and AI toolsets, or internal applications that need to consume warehouse data. Some systems might directly access the data, others might work with data extracts, while still others might directly consume the data in a pub-sub model.

Human consumers will leverage various analytical tools and technologies to create actionable insights into the data, including:

- Reporting tools

These tools enable the user to develop insights into the data through visualizations such as tabular reports and a wide array of graphical representations.

- Online analytical processing (OLAP) tools

Consumers need to slice and dice the data in a variety of ways. OLAP tools present the data in a multidimensional format, allowing it to be queried from multiple perspectives. They leverage pre-stored aggregations, often stored in memory, to serve up the data with fast performance.

- Data mining

These tools allow a data analyst to find patterns in the data through mathematical correlations and classifications. They assist the analysts in recognizing previously hidden relationships between different data sources. In a way, data mining tools can be seen as a precursor to modern data science tools.

Dimensional Modeling

Data warehouses introduced the need for a comprehensive data model that spans the different subject areas in a corporate enterprise. The technique used to create these models became known as dimensional modeling.

Driven by the writings and ideas of visionaries such as Bill Inmon and Ralph Kimball, dimensional modeling was first introduced in Kimball’s seminal book The Data Warehouse Toolkit: The Complete Guide to Dimensional Modeling.2 Kimball defines a methodology that focuses on a bottom-up approach, ensuring that the team delivers real value with the data warehouse as soon as possible.

A dimensional model is described by a star schema. A star schema organizes data for a given business process (e.g., sales) into a structure that facilitates easy analytics. It consists of two types of tables:

-

A fact table, which is the primary, or central table for the schema. The fact table captures the primary measurements, metrics, or “facts” of the business process. Staying with our sales business process example, a sales fact table would include units sold and sales amount.

-

Fact tables have a well-defined grain. Grain is determined by the combination of dimensions (columns) represented in the table. A sales fact table can be of low granularity if it is just an annual rollup of sales, or high granularity if it includes sales by date, store, and customer identifier.

-

-

Multiple dimension tables that are related to the fact table. A dimension provides the context surrounding the selected business process. In a sales scenario example, the list of dimensions could include product, customer, salesperson, and store.

The dimension tables “surround” the fact table, which is why these types of schemas are referred to as “star schemas.” A star schema consists of fact tables, linked to their associated dimensional tables through primary and foreign key relationships. A star schema for our sales subject area is shown in Figure 1-2.

Figure 1-2. Sales dimensional model

Data Warehouse Benefits and Challenges

Data warehouses have inherent strengths that have served the business community well. They serve up high-quality, cleansed, and normalized data from different data sources in a common format. Since data from the different departments is presented in a common format, each department will review results in line with the other departments. Having timely, accurate data is the basis for strong business decisions.

-

Since they store large amounts of historical data, they enable historical insights, allowing users to analyze different periods and trends.

-

Data warehouses tend to be very reliable, based on the underlying relational database technology, which executes ACID transactions.

-

Warehouses are modeled with standard star-schema modeling techniques, creating fact tables and dimensions. More and more prebuilt template models became available for various subject areas, such as sales and CRM, further accelerating the development of such models.

-

Data warehouses are ideally suited for business intelligence and reporting, basically addressing the “What happened?” question of the data maturity curve. A data warehouse combined with business intelligence (BI) tools can generate actionable insights for marketing, finance, operations, and sales.

The fast rise of the internet and social media and the availability of multimedia devices such as smartphones disrupted the traditional data landscape, giving rise to the term big data. Big data is defined as data that arrives in ever higher volumes, with more velocity, in a greater variety of formats with higher veracity. These are known as the four Vs of data:

- Volume

The volume of data created, captured, copied, and consumed globally is increasing rapidly. As described in Statista, over the next two years, global data creation is projected to grow to more than 200 zettabytes (a zettabyte is a 2 to the power 70 number of bytes).

- Velocity

In today’s modern business climate, timely decisions are critical. To make these decisions, organizations need their information to flow quickly, ideally as close to real time as possible. For example, stock trading applications need to have access to near-real-time data so advanced trading algorithms can make millisecond decisions, and need to communicate these decisions to their stakeholders. Access to timely data can give organizations a competitive advantage.

- Variety

Variety refers to the number of different “types” of data that are now available. The traditional data types were all structured and typically offered as relational databases, or extracts thereof. With the rise of big data, data now arrives in new unstructured types. Unstructured and semi-structured data types, such as Internet of Things (IoT) device messages, text, audio, and video, require additional preprocessing to derive business meaning. Variety is also expressed through the different types of ingestion. Some data sources are best ingested in batch mode, while others lend themselves to incremental ingestion, or real-time, event-based ingestion such as IoT data streams.

- Veracity

Veracity defines the trustworthiness of the data. Here, we want to make sure that the data is accurate and of high quality. Data can be ingested from several sources; it is important to understand the chain of custody of the data, ensure we have rich metadata, and understand the context under which the data was collected. Additionally, we want to ensure that our view of the data is complete, with no missing components or late-arriving facts.

Data warehouses have a hard time addressing these four Vs.

Traditional data warehouse architectures struggle to facilitate exponentially increasing data volumes. They suffer from both storage and scalability issues. With volumes reaching petabytes, it becomes challenging to scale storage capabilities without spending large amounts of money. Traditional data warehouse architectures do not use in-memory and parallel processing techniques, preventing them from scaling the data warehouse vertically.

Data warehouse architectures are also not a good fit to address the velocity of big data. Data warehouses do not support the types of streaming architecture required to support near-real-time data. ETL data load windows can only be shortened so much until the infrastructure starts to buckle.

While data warehouses are very good at storing structured data, they are not well suited to store and query the variety of semi-structured or unstructured data.

Data warehouses have no built-in support for tracking the trustworthiness of the data. Data warehouse metadata is mainly focused on schema, and less on lineage, data quality, and other veracity variables.

Further, data warehouses are based upon a closed, proprietary format and typically only support SQL-based query tools. Because of their proprietary format, data warehouses do not offer good support for data science and machine learning tools.

Because of these limitations, data warehouses are expensive to build. As a result, projects often fail before going live, and those that do go live have a hard time keeping up with the ever-changing requirements of the modern business climate and the four Vs.

The limitations of the traditional data warehouse architecture gave rise to a more modern architecture, based upon the concept of a data lake.

Introducing Data Lakes

A data lake is a cost-effective central repository to store structured, semi-structured, or unstructured data at any scale, in the form of files and blobs. The term “data lake” came from the analogy of a real river or lake, holding the water, or in this case data, with several tributaries that are flowing the water (aka “data”) into the lake in real time. A canonical representation of a typical data lake is shown in Figure 1-3.

Figure 1-3. Canonical data lake

The initial data lakes and big data solutions were built with on-premises clusters, based upon the Apache Hadoop open source set of frameworks. Hadoop was used to efficiently store and process large datasets ranging in size from gigabytes to petabytes of data. Instead of using one large computer to store and process the data, Hadoop leveraged the clustering of multiple commodity compute nodes to analyze large volumes of datasets in parallel more quickly.

Hadoop would leverage the MapReduce framework to parallelize compute tasks over multiple compute nodes. The Hadoop Distributed File System (HDFS) was a file system that was designed to run on standard or low-end hardware. HDFS was very fault-tolerant and supported large datasets.

Starting in 2015, cloud data lakes, such as Amazon Simple Storage Service (Amazon S3), Azure Data Lake Storage Gen 2 (ADLS), and Google Cloud Storage (GCS), started replacing HDFS. These cloud-based storage systems have superior service-level agreements (SLAs) (often greater than 10 nines), offer geo-replication, and, most importantly, offer extremely low cost with the option to utilize even lower-cost cold storage for archival purposes.

At the lowest level, the unit of storage in a data lake is a blob of data. Blobs are by nature unstructured, enabling the storage of semi-structured and unstructured data, such as large audio and video files. At a higher level, the cloud storage systems provide file semantics and file-level security on top of the blob storage, enabling the storage of highly structured data. Because of their high bandwidth ingress and egress channels, data lakes also enable streaming use cases, such as the continuous ingestion of large volumes of IoT data or streaming media.

Compute engines enable large volumes of data to be processed in an ETL-like fashion and delivered to consumers, such as traditional data warehouses and machine learning and AI toolsets. Streaming data can be stored in real-time databases, and reports can be created with traditional BI and reporting tools.

Data lakes are enabled through a variety of components:

- Storage

Data lakes require very large, scalable storage systems, like the ones typically offered in cloud environments. The storage needs to be durable and scalable and should offer interoperability with a variety of third-party tools, libraries, and drivers. Note that data lakes separate the concepts of storage and compute, allowing both to scale independently. Independent scaling of storage and compute allows for on-demand, elastic fine-tuning of resources, allowing our solution architectures to be more flexible. The ingress and egress channels to the storage systems should support high bandwidths, enabling the ingestion or consumption of large batch volumes, or the continuous flow of large volumes of streaming data, such as IoT and streaming media.

- Compute

High amounts of compute power are required to process the large amounts of data stored in the storage layer. Several compute engines are available on the different cloud platforms. The go-to compute engine for data lakes is Apache Spark. Spark is an open source unified analytics engine, which can be deployed through various solutions such as Databricks or other cloud providers’ developed solutions. Big data compute engines will leverage compute clusters. Compute clusters pool compute nodes to tackle complete data collection and processing tasks.

- Formats

The shape of the data on disk defines the formats. A wide array of storage formats are available. Data lakes use mostly standardized, open source formats, such as Parquet, Avro JSON, or CSV.

- Metadata

Modern, cloud-based storage systems maintain metadata (i.e., contextual information about the data). This includes various timestamps that describe when data was written or accessed, data schemas, and a variety of tags which contain information about the usage and owner of the data.

Data lakes have some very strong benefits. A data lake architecture enables the consolidation of an organization’s data assets into one central location. Data lakes are format agnostic and rely on open source formats, such as Parquet and Avro. These formats are well understood by a variety of tools, drivers, and libraries, enabling smooth interoperability.

Data lakes are deployed on mature cloud storage subsystems, allowing them to benefit from the scalability, monitoring, ease of deployment, and low storage costs associated with these systems. Automated DevOps tools, such as Terraform, have well-established drivers, enabling automated deployments and maintenance.

Unlike data warehouses, data lakes support all data types, including semi-structured and unstructured data, enabling workloads such as media processing. Because of their high throughput ingress channels, they are very well suited for streaming use cases, such as ingesting IoT sensor data, media streaming, or web clickstreams.

However, as data lakes become more popular and widely used, organizations started recognizing some challenges with traditional data lakes. While the underlying cloud storage is relatively inexpensive, building and maintaining an effective data lake requires expert skills, resulting in high-end staffing or increased consulting services costs.

While it is easy to ingest data in its raw form, transforming the data into a form that can deliver business values can be very expensive. Traditional data lakes have poor latency query performance, so they cannot be used for interactive queries. As a result, the organization’s data teams must still transform and load the data into something like a data warehouse, resulting in an extended time to value. This resulted in a data lake + warehouse architecture. This architecture continued to dominate the industry for quite a while (we have personally implemented dozens of those types of these systems), but is now declining because of the rise of lakehouses.

Data lakes typically use a “schema on read” strategy, where data can be ingested in any format without schema enforcement. Only when the data is read can some type of schema be applied. This lack of schema enforcement can result in data quality issues, allowing the pristine data lake to become a “data swamp.”

Data lakes do not offer any kind of transactional guarantees. Data files can only be appended to, leading to expensive rewrites of previously written data to make a simple update. This leads to an issue called the “small file problem,” where multiple small files are created for a single entity. If this issue is not managed well, these small files slow the read performance of the overall data lake, leading to stale data and wasted storage. Data lake administrators need to run repeated operations to consolidate these smaller files into larger files optimized for efficient read operations.

Now that we have discussed the strengths and weaknesses of both data warehouses and data lakes, we will introduce the data lakehouse, which combines the strengths and addresses the weaknesses of both technologies.

Data Lakehouse

Armbrust, Ghodsi, Xin, and Zaharia first introduced the concept of the data lakehouse in 2021. The authors define a lakehouse as “a data management system based upon low-cost and directly accessible storage that also provides analytics DBMS management and performance features such as ACID transactions, data versioning, auditing, indexing, caching and query optimization.”

When we unpack this statement, we can define a lakehouse as a system that merges the flexibility, low cost, and scale of a data lake with the data management and ACID transactions of data warehouses, addressing the limitations of both. Like data lakes, the lakehouse architecture leverages low-cost cloud storage systems with the inherent flexibility and horizontal scalability of those systems. The goal of a lakehouse is to use existing high-performance data formats, such as Parquet, while also enabling ACID transactions (and other features). To add these capabilities, lakehouses use an open-table format, which adds features like ACID transactions, record-level operations, indexing, and key metadata to those existing data formats. This enables data assets stored on low-cost storage systems to have the same reliability that used to be exclusive to the domain of an RDBMS. Delta Lake is an example of an open-table format that supports these types of capabilities.

Lakehouses are an especially good match for most, if not all, cloud environments with separate compute and storage resources. Different computing applications can run on demand on completely separate computing nodes, such as a Spark cluster, while directly accessing the same storage data. It is, however, conceivable that one could implement a lakehouse over an on-premises storage system such as the aforementioned HDFS.

Data Lakehouse Benefits

An overview of the lakehouse architecture is shown in Figure 1-4.

Figure 1-4. Lakehouse architecture overview

With the lakehouse architecture, we no longer need to have a copy of our data in the data lake, and another copy in some type of data warehouse storage. Indeed, we can source our data from the data lake through the Delta Lake storage format and protocol with comparable performance to a traditional data warehouse.

Since we can continue to leverage the low-cost cloud-based storage technologies and no longer need to copy data from the data lake to a data warehouse, we can realize significant cost savings, both in infrastructure and in staff and consulting overhead.

Since less data movement takes place and our ETL is simplified, opportunities for data quality issues are significantly reduced, and finally, because the lakehouse combines the ability to store large data volumes and refined dimensional models, development cycles are reduced, and the time to value is significantly reduced.

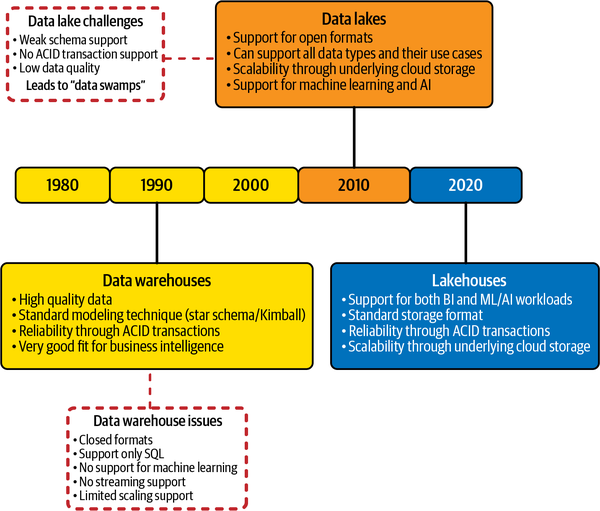

The evolution from data warehouses to data lakes to a lakehouse architecture is shown in Figure 1-5.

Figure 1-5. Evolution of data architectures

Implementing a Lakehouse

As we mentioned earlier, lakehouses leverage low-cost object stores, like Amazon S3, ADLS, or GCS, storing the data in an open source table format, such as Apache Parquet. However, since lakehouse implementations run ACID transactions against this data, we need a transactional metadata layer on top of the cloud storage, defining which objects are part of the table version.

This will allow a lakehouse to implement features such as ACID transactions and versioning within that metadata layer, while keeping the bulk of the data in the low-cost object storage. The lakehouse client is able to keep using data in an open source format that they are already familiar with.

Next, we need to address system performance. As we mentioned earlier, lakehouse implementations need to achieve great SQL performance to be effective. Data warehouses were very good at optimizing performance because they worked with a closed storage format and a well-known schema. This allowed them to maintain statistics, build clustered indexes, move hot data on fast SSD devices, etc.

In a lakehouse, which is based upon open source standard formats, we do not have that luxury, since we cannot change the storage format. However, lakehouses can leverage a plethora of other optimizations which leave the data files unchanged. This includes caching, auxiliary data structures such as indexes and statistics, and data layout optimizations.

The final tool that can speed up analytic workloads is the development of a standard DataFrame API. Most of the popular ML tools out there, such as TensorFlow and Spark MLlib, have support for DataFrames. DataFrames were first introduced by R and pandas and provide a simple table abstraction of the data with a multitude of transformation operations, most of which originate from relational algebra.

In Spark, the DataFrame API is declarative, and lazy evaluation is used to build an execution DAG (directed acyclic graph). This graph can then be optimized before any action consumes the underlying data in the DataFrame. This gives the lakehouse several new optimization features, such as caching and auxiliary data. Figure 1-6 shows how these requirements fit into an overall lakehouse system design.

Since Delta Lake is the focus of this book, we will illustrate how Delta Lake facilitates the requirements for implementing a lakehouse.

Figure 1-6. Lakehouse implementation

Delta Lake

As mentioned in the previous section, a possible lakehouse solution can be built on top of Delta Lake. Delta Lake is an open-table format that combines metadata, caching, and indexing with a data lake storage format. Together these provide an abstraction level to serve ACID transactions and other management features.

The Delta Lake open-table format, open source metadata layer ultimately enables lakehouse implementations. Delta Lake provides ACID transactions, scalable metadata handling, a unified process model that spans batch and streaming, full audit history, and support for SQL data manipulation language (DML) statements. It can run on existing data lakes and is fully compatible with several processing engines, including Apache Spark.

Delta Lake is an open source framework, the specification of which can be found at https://delta.io. The work of Armbrust et al. offers a detailed description of how Delta Lake provides ACID transactions.

Delta Lake provides the following features:

- Transactional ACID guarantees

Delta Lake will make sure that all data lake transactions using Spark, or any other processing engine, are committed for durability and exposed to other readers in an atomic fashion. This is made possible through the Delta transaction log. In Chapter 2, we will cover the transaction log in detail.

- Full DML support

Traditional data lakes do not support transactional, atomic updates to the data. Delta Lake fully supports all DML operations, including deletes and updates, and complex data merge or upsert scenarios. This support greatly simplifies the creation of dimensions and fact tables when building a modern data warehouse (MDW).

- Audit history

The Delta Lake transaction log records every change made to the data, in the order that these changes were made. Therefore, the transaction log becomes the full audit trail of any changes made to the data. This enables admins and developers to roll back to earlier versions of data after accidental deletions and edits. This feature is called time travel and is covered in detail in Chapter 6.

- Unification of batch and streaming into one processing model

Delta Lake can work with batch and streaming sinks or sources. It can perform MERGEs on a data stream, which is a common requirement when merging IoT data with device reference data. It also enables use cases where we receive CDC data from external data sources. We will cover streaming in detail in Chapter 8.

- Schema enforcement and evolution

Delta Lake enforces a schema when writing or reading data from the lake. However, when explicitly enabled for a data entity, it allows for a safe schema evolution, enabling use cases where the data needs to evolve. Schema enforcement and evolution are covered in Chapter 7.

- Rich metadata support and scaling

Having the ability to support large volumes of data is great, but if the metadata cannot scale accordingly, the solution will fall short. Delta Lake scales out all metadata processing operations by leveraging Spark or other compute engines, allowing it to efficiently process the metadata for petabytes of data.

A lakehouse architecture is made up of three layers, as shown in Figure 1-7. The lakehouse storage layer is built on standard cloud-storage technology, such as ADLS, GCS, or Amazon S3 storage. This provides the lakehouse with a highly scalable, low-cost storage layer.

Figure 1-7. Lakehouse layered architecture

The transactional layer of the lakehouse is provided by Delta Lake. This brings ACID guarantees to the lakehouse, enabling an efficient transformation of raw data into curated, actionable data. Besides the ACID support, Delta Lake offers a rich set of additional features, such as DML support, scalable metadata processing, streaming support, and a rich audit log. The top layer of the lakehouse stack is made up of high-performance query and processing engines, which leverage underlying cloud compute resources. Supported query engines include:

-

Apache Spark

-

Apache Hive

-

Presto

-

Trino

Please consult the Delta Lake website for a complete list of supported compute engines.

The Medallion Architecture

An example of a Delta Lake-based lakehouse architecture is provided in Figure 1-8. This architectural pattern with Bronze, Silver, and Gold layers is often referred to as the medallion architecture. While it is only one of many lakehouse architecture patterns, it is a great fit for modern data warehouses, data marts, and other analytical solutions.

Figure 1-8. Data lakehouse solution architecture

At the highest level, we have three components in this solution. To the left we have the different data sources. A data source can take on many forms; some examples are provided here:

-

A set of CSV or TXT files on an external data lake

-

An on-premises database, such as Oracle or SQL Server

-

Streaming data sources, such as Kafka or Azure Event Hubs

-

REST APIs from a SAS service, such as Salesforce or ADP

The central component implements the medallion architecture. A medallion architecture is a data design pattern used to logically organize data in a lakehouse, through Bronze, Silver, and Gold layers. The Bronze layer is where we land the data ingested from our source systems on the left. Data in the Bronze zone is typically landed “as is,” but can be augmented with additional metadata, such as the loading date and time, processing identifiers, etc.

In the Silver layer, the data from the Bronze layer is cleansed, normalized, merged, and conformed. This is where the enterprise view of the data across the different subject areas is gradually coming together.

The data in the Gold layer is “consumption-ready” data. This data can be in the format of a classic star schema, containing dimensions and fact tables, or it could be in any data model that is befitting to the consuming use case.

The goal of the medallion architecture is to improve the structure and quality of the data incrementally and progressively as it flows through each layer of the architecture, with each layer having an inherent purpose. This data design pattern will be covered in much greater depth in Chapter 10, but it is important to understand how a lakehouse, together with Delta Lake, can support reliable, performant data design patterns, or multihop architectures. Design patterns, like the medallion architecture, provide some of the architectural foundations for unifying your data pipelines in a lakehouse in order to support multiple use cases (e.g., batch data, streaming data, and machine learning).

The Delta Ecosystem

As we have laid out in this chapter, Delta Lake enables us to build data lakehouse architectures, which enable data warehousing and machine learning/AI applications to be hosted directly on a data lake. Today, Delta Lake is the most widely utilized lakehouse format, currently used by over 7,000 organizations, processing exabytes of data per day.

However, data warehouses and machine learning applications are not the only application target of Delta Lake. Beyond its core transactional ACID support, which brings reliability to data lakes, Delta Lake enables us to seamlessly ingest and consume both streaming and batch data with one solution architecture.

Another important component of Delta Lake is Delta Sharing, which enables companies to share data sets in a secure manner. Delta Lake 3.0 now supports standalone readers and writers, enabling any client (Python, Ruby, or Rust) to write data directly to Delta Lake without requiring any big data engine, such as Spark or Flink. Delta Lake ships with an extended set of open source connectors, including Presto, Flink, and Trino. The Delta Lake storage layer is now used extensively on many platforms, including ADLS, Amazon S3, and GCS. All components of Delta Lake 2.0 have been open sourced by Databricks.

The success of Delta Lake and lakehouses has spawned a completely new ecosystem, built around the Delta technology. This ecosystem is made up of a variety of individual components, including the Delta Lake storage format, Delta Sharing, and Delta Connectors.

Delta Lake Storage

The Delta Lake storage format is an open source storage layer that runs on top of cloud-based data lakes. It adds transactional capabilities to data lake files and tables, bringing data warehouse-like features to a standard data lake. Delta Lake storage is the core component of the ecosystem because all other components depend on this layer.

Delta Sharing

Data sharing is a common use case in business. For example, a mining company might want to securely share IoT information from its massive mining truck engines with the manufacturer for preventative maintenance and diagnostic purposes. A thermostat manufacturer might want to securely share HVAC data with a public utility to optimize the power grid load on high-usage days. However, in the past, implementing a secure, reliable data sharing solution was very challenging, and required expensive, custom development.

Delta Sharing is an open source protocol for securely sharing large datasets of Delta Lake data. It allows users to securely share data stored in Amazon S3, ADLS, or GCS. With Delta Sharing, users can directly connect to the shared data, using their favorite toolsets like Spark, Rust, Power BI, etc., without having to deploy any additional components. Notice that the data can be shared across cloud providers, without any custom development.

Delta Sharing enables use cases such as:

-

Data stored in ADLS can be processed by a Spark Engine on AWS.

-

Data stored in Amazon S3 can be processed by Rust on GCP.

Please refer to Chapter 9 for a detailed discussion of Delta Sharing.

Delta Connectors

The main goal of Delta Connectors3,4 is to bring Delta Lake to other big data engines outside of Apache Spark. Delta Connectors are open source connectors that directly connect to Delta Lake. The framework includes Delta Standalone, a Java native library that allows direct reading and writing of the Delta Lake tables without requiring an Apache Spark cluster. Consuming applications can use Delta Standalone to directly connect to Delta tables written by their big data infrastructure. This eliminates the need for data duplication into another format before it can be consumed.

Other native libraries are available for:

- Hive

The Hive Connector reads Delta tables directly from Apache Hive.

- Flink

The Flink/Delta Connector reads and writes Delta tables from the Apache Flink application. The connector includes a sink for writing to Delta tables from Apache Flink, and a source for reading Delta tables using Flink.

- sql-delta-import

This connector allows for importing data from a JDBC data source directly into a Delta table.

- Power BI

The Power BI connector is a custom Power Query function that allows Power BI to read a Delta table from any file-based data source supported by Microsoft Power BI.

Delta Connectors is a fast-growing ecosystem, with more connectors becoming available regularly. In fact, included in the recently announced Delta Lake 3.0 release is Delta Kernel. Delta Kernel and its simplified libraries remove the need to understand Delta protocol details, thus making it much easier to build and maintain connectors.

Conclusion

Given the volume, velocity, variety, and veracity of data, the limitations and challenges of both data warehouses and data lakes have driven a new paradigm of data architectures. The lakehouse architecture, set forth by advancements in open-table formats such as Delta Lake, provides a modern data architecture that harnesses the best elements of its predecessors to bring a unified approach to a data platform.

As mentioned in the Preface, this book will do more than just scratch the surface; it will dive into some of the core features of Delta Lake already touched on in this chapter. You will learn how to best set up Delta Lake, identify use cases for different features, learn about best practices and different things to consider, and much more. It will continually provide data practitioners with context and evidence of how this open-table format supports a modern data platform in the form of a lakehouse architecture. By the end of this book you will feel confident in getting up and running with Delta Lake and building a modern data lakehouse architecture.

Get Delta Lake: Up and Running now with the O’Reilly learning platform.

O’Reilly members experience books, live events, courses curated by job role, and more from O’Reilly and nearly 200 top publishers.