Chapter 1. Learning and Thinking with Things

Tangible Interfaces

The study of how humans learn is nothing new and not without many solid advances. And yet, in the rush to adopt personal computers, tablets, and similar devices, we’ve traded the benefits of hands-on learning and instruction for the scale, distribution, and easy data collection that are part and parcel of software programs. The computational benefits of computers have come at a price; we’ve had to learn how to interact with these machines in ways that would likely seem odd to our ancestors: mice, keyboards, awkward gestures, and many other devices and rituals that would be nothing if not foreign to our predecessors. But what does the future hold for learning and technology? Is there a way to reconcile the separation between all that is digital and the diverse range of interactions of which our bodies are capable? And how does the role of interaction designer change when we’re working with smart, potentially shape-shifting, objects? If we look at trends in technology, especially related to tangible computing (where physical objects are interfaced with computers), they point to a sci-fi future in which interactions with digital information come out from behind glass to become things we can literally grasp.

One such sign of this future comes from Vitamins, a multidisciplinary design and invention studio based in London. As Figure 1-1 shows, it has developed a rather novel system for scheduling time by using... what else... Lego bricks!

Figure 1-1. Vitamins Lego calendar1

Vitamins describes its Lego calendar as follows:

...a wall-mounted time planner, made entirely of Lego blocks, but if you take a photo of it with a smartphone, all of the events and timings will be magically synchronized to an online digital calendar.

Although the actual implementation (converting a photo of colored bricks into Google calendar information) isn’t in the same technical league as nanobots or mind-reading interfaces, this project is quite significant in that it hints at a future in which the distinctions between physical and digital are a relic of the past.

Imagine ordinary objects—even something as low-tech as Lego bricks—augmented with digital properties. These objects could identify themselves, trace their history, and react to different configurations. The possibilities are limitless. This is more than an “Internet of Things,” passively collecting data; this is about physical objects catching up to digital capabilities. Or, this is about digital computing getting out from behind glass. However you look at this, it’s taking all that’s great about being able to pick up, grasp, squeeze, play with, spin, push, feel, and do who-knows-what-else to a thing, while simultaneously enjoying all that comes with complex computing and sensing capabilities.

Consider two of the studio’s design principles (from the company’s website) that guided this project:

It had to be tactile: “We loved the idea of being able to hold a bit of time, and to see and feel the size of time”

It had to work both online and offline: “We travel a lot, and we want to be able to see what’s going on wherever we are.”

According to Vitamins, this project “makes the most of the tangibility of physical objects, and the ubiquity of digital platforms, and it also puts a smile on our faces when we use it!”2 Although this project and others I’ll mention hint at the merging of the physical and the digital, it’s important to look back and assess what has been good in the move from physical to digital modes of interaction—and perhaps what has been lost.

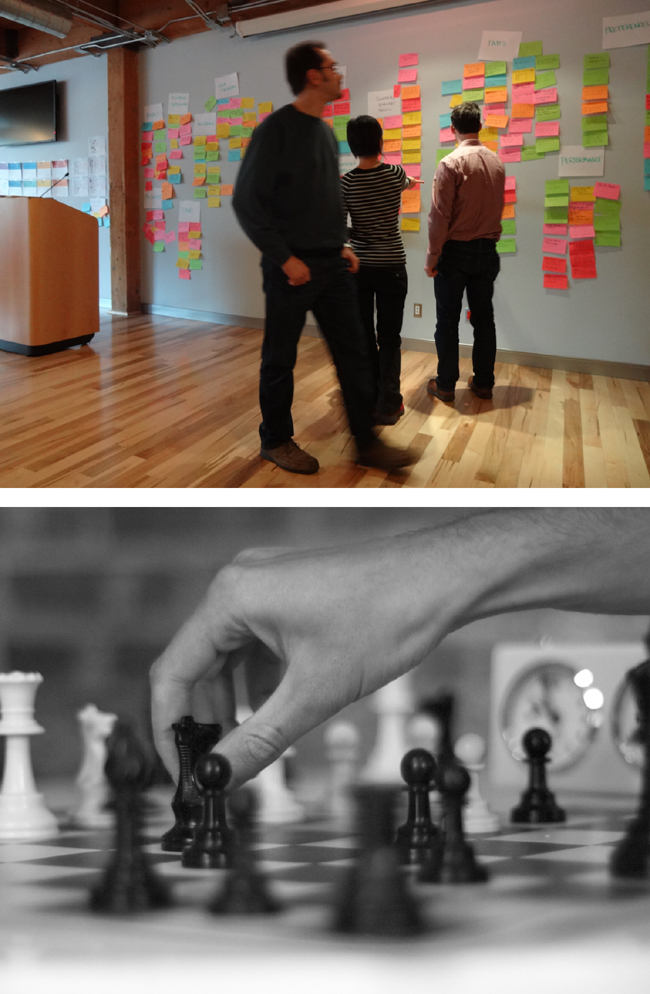

Kanban Walls, Chess, and Other Tangible Interactions

Oddly enough, it is the software teams (the folks most immersed in the world of virtual representations) who tend to favor tangibility when it comes to things such as project planning; it’s common for Agile or Scrum development teams to create Kanban walls, such as that shown in Figure 1-2. Imagine sticky notes arranged in columns, tracking the progress of features throughout the development cycle, from backlog through to release. Ask most teams and they will say there is something about the tangibility of these sticky notes that cannot be replicated by virtual representations.

There’s something about moving and arranging this sticky little square, feeling the limitations of different size marker tips with respect to how much can be written, being able to huddle around a wall of these sticky notes as a team—there’s something to the physical nature of working with sticky notes. But, is there any explanation as to “why” this tangible version might be advantageous, especially where understanding is a goal?

Before answering that question, first consider this question: where does thinking occur?

If your answer is along the lines of “in the brain,” you’re not alone. This view of a mind that controls the body has been the traditional view of cognition for the better part of human history. In this view, the brain is the thinking organ, and as such it takes input from external stimuli, processes those stimuli, and then directs the body as to how to respond.

Thinking; then doing.

But, a more recent and growing view of cognition rejects this notion of mind-body dualism. Rather than thinking and then doing, perhaps we think through doing.

Consider the game of chess. Have you ever lifted up a chess piece, hovered over several spots where you could move that piece, only to return that piece to the original space, still undecided on your move? What happened here? For all that movement, there was no pragmatic change to the game. If indeed we think and then do (as mind-body dualism argues), what was the effect of moving that chess piece, given that there was no change in the position? If there is no outward change in the environment, why do we instruct our bodies to do these things? The likely answer is that we were using our environment to extend our thinking skills. By hovering over different options, we are able to more clearly see possible outcomes. We are extending the thinking space to include the board in front of us.

Thinking through doing.

This is common in chess. It’s also common in Scrabble, in which a player frequently rearranges tiles in order to see new possibilities.

Let’s return to our Kanban example.

Even though many cognitive neuroscientists (as well as philosophers and linguists) would likely debate a precise explanation for the appeal of sticky notes as organizational tools, the general conversation would shift the focus away from the stickies themselves to the role of our bodies in this interaction, focusing on how organisms and the human mind organize themselves by interacting with their environment. This perspective, generally described as embodied cognition, postulates that thinking and doing are so closely linked as to not be serial processes. We don’t think and then do; we think through doing.

But there’s more to embodied cognition than simply extending our thinking space. When learning is embodied, it also engages more of our senses, creating stronger neural networks in the brain, likely to increase memory and recall.

Moreover, as we continue to learn about conditions such as autism, ADHD, or sensory processing disorders, we learn more about this mind-body connection. With autism, for example, I’ve heard from parents that learning with tangible objects has been much more effective for kids with certain types of autism.

Our brain is a perceptual organ that relies on the body for sensory input, be it tangible, auditory, visual, spatial, and so on. Nowhere is the value of working with physical objects more understood than in early childhood education, where it is common to use “manipulatives”—tangible learning objects—to aid in the transfer of new knowledge.

Manipulatives in Education

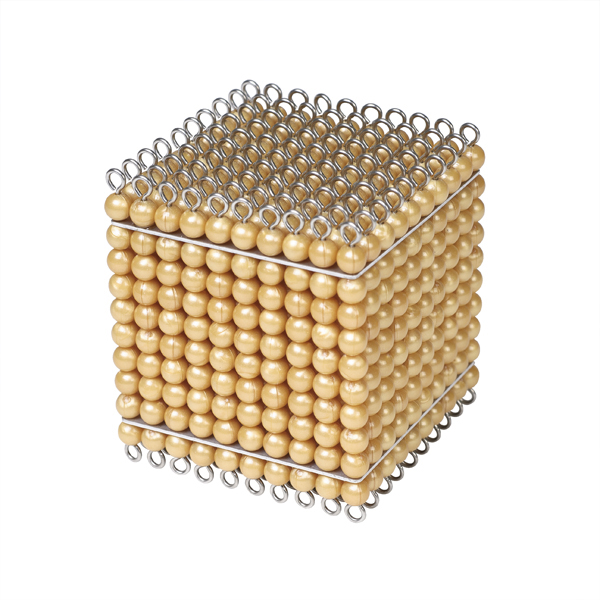

My mother loves to recall my first day at Merryhaven Montessori, the elementary school I attended through the sixth grade. I recall her asking, “What did you learn today?” I also remember noticing her curiosity at my response: “I didn’t learn anything—we just played!”

Of course “playing” consisted of tracing sandpaper letters, cutting a cheese slice into equal parts, and (my favorite) counting beads; I could count with single beads, rods consisting of 10 beads, the flat squares of 100 beads (or 10 rods, I suppose), and the mammoth of them all: a giant cube of 1000 beads! (See Figure 1-3.) These “manipulatives” are core to the Montessori method of education, and all are examples, dating back to the late 1800s, of learning through tangible interactions. Playing is learning, and these “technologies” (in the anthropological sense) make otherwise abstract concepts quite concrete.

But why is this so?

Jean Piaget, the influential Swiss developmental psychologist, talks about stages of development, and how learning is—at the earliest ages—physical (sensorimotor). As babies, we grasp for things and make sense of the world through our developing senses. At this stage, we learn through physical interactions with our environment. This psychological theory, first proposed in the 1960s, is supported by recent advances in cognitive neuroscience and theories about the mind and body.

Figure 1-3. Montessori beads5

Essentially, we all start off understanding the world only through physical (embodied) interactions. As infants, even before we can see, we are grasping at things and seeking tactile comforts. We learn through our physical interactions with our environment.

Contrast this with the workbooks and photocopied assignments common in most public schools. These pages represent “what” students should be learning, but ignore the cognitive aspects of “how” we learn, namely through interactions. Much of learning is cause and effect. Think of the young child who learns not to touch a hot stove either through her own painful experience or that of a sibling. It is through interactions and experimentation (or observing others) that we begin to recognize patterns and build internal representations of otherwise abstract ideas.

Learning is recognizing or adding to our collection of patterns.

In this regard, computers can be wonderful tools for exploring possibilities. This is true for everyone from young children playing with math concepts to geneticists looking for patterns in DNA strands. Interactive models and simulations are some of the most effective means of sensemaking. Video games also make for powerful learning tools because they create possibility spaces where players can explore potential outcomes. Stories such as Ender’s Game (in which young children use virtual games to explore military tactics) are a poignant testimony to the natural risk-taking built into simulations. “What happens if I push this?” “Can we mix it with...?” “Let’s change the perspective.” Computers make it possible for us to explore possibilities much more quickly in a playful, risk-free manner. In this regard, physical models are crude and limiting. Software, by nature of being virtual, is limited only by what can be conveyed on a screen.

But, what of the mind-body connection? What about the means by which we explore patterns through a mouse or through our fingertips sliding across glass? Could this be improved? What about wood splinters and silky sheets and hot burners and stinky socks and the way some objects want to float in water—could we introduce sensations like these into our interactions? For all the brilliance of virtual screens, they lack the rich sensory associations inherent in the physical world.

Virtual Manipulatives

For me, it was a simple two-word phrase that brought these ideas into collision: “Virtual Manipulatives”. During an interview with Bill Gates, Jessie Woolley-Wilson, CEO of DreamBox, shared a wonderful example of the adaptive learning built into their educational software. Her company’s online learning program will adapt which lesson is recommended next based not only on the correctness of an answer, but also on “capturing the strategies that students [use] to solve problems, not just that they get it right or wrong.” Let’s suppose we’re both challenged to count out rods and beads totaling 37. As Woolley-Wilson describes it:

You understand groupings and you recognize 10s, and you very quickly throw across three 10’s, and a 5 and two 1’s as one group. You don’t ask for help, you don’t hesitate, your mouse doesn’t hesitate over it. You do it immediately, ready for the next. I, on the other hand, am not as confident, and maybe I don’t understand grouping strategies. But I do know my 1’s. So I move over 37 single beads. Now, you have 37 and I have 37, and maybe in a traditional learning environment we will both go to the next lesson. But should we?

By observing how a student arrives at an answer, by monitoring movements of the mouse and what students “drag” over, the system can determine if someone has truly mastered the skill(s) needed to move on. This is certainly an inspiring example of adaptive learning, and a step forward toward the holy grail of personalized learning. But, it was the two words that followed that I found jarring: she described this online learning program, using a representation of the familiar counting beads, as virtual manipulatives. Isn’t the point of a manipulative that it is tangible? What is a virtual manipulative then, other than an oxymoron?
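To make the distinction between a right answer and a mastered strategy concrete, here is a minimal sketch in Python. It is not DreamBox's actual method, which the interview does not describe. The piece values (10, 5, and 1) come from the quoted example, and the function names and strategy labels are my own assumptions for illustration.

```python
# Hypothetical sketch: judge HOW a learner reached the target, not just whether
# the total is right. Piece values are assumed from the quoted example
# ("three 10's, and a 5 and two 1's").
PIECE_VALUES = (10, 5, 1)


def fewest_pieces(target):
    """Smallest number of pieces that sum to target (greedy is optimal for 10/5/1)."""
    count = 0
    for value in PIECE_VALUES:
        count += target // value
        target %= value
    return count


def classify_strategy(moves, target):
    """Label the strategy behind a list of dragged piece values."""
    if sum(moves) != target:
        return "incorrect"
    if len(moves) == fewest_pieces(target):
        return "grouping"
    if all(value == 1 for value in moves):
        return "counting by ones"
    return "partial grouping"


def ready_to_advance(moves, target):
    """Assumed rule: move on only when the answer is correct AND efficiently grouped."""
    return classify_strategy(moves, target) == "grouping"


if __name__ == "__main__":
    confident = [10, 10, 10, 5, 1, 1]   # three 10s, a 5, and two 1s
    hesitant = [1] * 37                 # 37 single beads
    print(classify_strategy(confident, 37), ready_to_advance(confident, 37))
    print(classify_strategy(hesitant, 37), ready_to_advance(hesitant, 37))
```

Both learners arrive at 37, but only the first would be sent on to the next lesson under this assumed rule. A real system would presumably also weigh hesitation and requests for help, which this sketch ignores.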

But this did spark an idea: what if we could take the tangible counting beads, the same kind kids have been playing with for decades, and endow them with the adaptive learning properties Woolley-Wilson describes? How much better might this be for facilitating understanding? And, with the increasing ubiquity of cheap technology (such as RFID tags and the like), is this concept really that far off? Imagine getting all the sensory (and cognitive) benefits of tangible objects, and all the intelligence that comes with “smart” objects.

Embodied Learning

You might wonder, “Why should we care about tangible computing?” Isn’t interacting with our fingers or through devices such as a mouse or touchscreens sufficient? In a world constrained by costs and resources, isn’t it preferable to ship interactive software (instead of interactive hardware), that can be easily replicated and doesn’t take up physical space? If you look at how media has shifted from vinyl records to cassette tapes to compact discs and finally digital files, isn’t this the direction in which everything is headed?

Where learning and understanding are required, I’d argue no. And, a definite no wherever young children are involved. Piaget established four stages of learning (sensorimotor, pre-operational, concrete operational, and formal operational), and argued that children “learn best from concrete [sensorimotor] activities.” This work was preceded by American psychologist and philosopher John Dewey, who emphasized firsthand learning experiences. Other child psychologists such as Bruner or Dienes have built on these “constructivist” ideas, creating materials used to facilitate learning. In a review of studies on the use of manipulatives in the classroom, researchers Marilyn Suydam and Jon Higgins concluded that “studies at every grade level support the importance and use of manipulative materials.” Taking things one step further, educator and artificial intelligence pioneer Seymour Papert introduced constructionism (not to be confused with constructivism), which holds that learning happens most effectively when people are also active in making tangible objects in the real world.

OK. But what of adults, who’ve had a chance to internalize most of these concepts? Using Piaget’s own model, some might argue that the body is great for lower-level cognitive problems, but not for more abstract or complex topics. This topic is one of some debate, with conversations returning to “enactivism” and the role of our bodies in constructing knowledge. The central question is this: if learning is truly embodied, why or how would that change with age? Various studies continue to reveal this mind-body connection. For example, one study found that saying words such as “lick, pick, and kick” activates the corresponding brain regions associated with the mouth, hand, and foot, respectively. I’d add that these thinking tools extend our thinking, the same way objects such as pen and paper, books, or the handheld calculator (abacus or digital variety—you choose) have allowed us to do things we couldn’t do before. Indeed, the more complex the topic, the more necessary it is to use our environment to externalize our thinking.

Moreover, there is indeed a strong and mysterious connection between the brain and the body. We tend to gesture when we’re speaking, even if on a phone when no one else can see us. I personally have observed different thinking patterns when standing versus sitting. In computer and retail environments, people talk about “leaning in” versus “leaning back” activities. In high school, I remember being told to look up, if I was unsure of how to answer a question—apparently looking up had, in some study, been shown to aid in the recall of information! Athletes, dancers, actors—all these professions talk about the yet unexplained connections between mind and body.

As magical as the personal computer and touchscreen devices are, there is something lost when we limit interactions to pressing on glass or clicking a button. Our bodies are capable of so much more. We have the capacity to grasp things, sense pressure (tactile or volumetric), identify textures, move our bodies, orient ourselves in space, sense changes in temperature, smell, listen, affect our own brain waves, control our breathing—so many human capabilities not recognized by most digital devices. In this respect, the most popular ways in which we now interact with technology, namely through the tips of our fingers, will someday seem like crude, one-dimensional methods.

Fortunately, the technology to sense these kinds of physical interactions already exists or is being worked on in research labs.

(Near) Future Technology

Let’s consider some of the ways that physical and digital technologies are becoming a reality, beginning with technologies and products that are already available to us:

In 2012, we saw the release of the Leap Motion Controller, a highly sensitive gestural interface, followed closely by Myo, an armband that accomplishes similar Minority Report–style interactions, but using changes in muscles rather than cameras.

When it comes to touchscreens, Senseg uses electrostatic impulses to create the sensation of different textures. Tactus Technology takes a different approach, and has “physical buttons that rise up from the touchscreen surface on demand.”

To demonstrate how sensors are weaving themselves into our daily lives, Lumo Back is a sensor band worn around the waist to help improve posture.

We’ve got the Ambient umbrella, which alerts you if it will be needed, based on available weather data.

A recent Kickstarter project aims to make DrumPants (the name says it all!) a reality.

In the wearables space, we have technologies such as conductive inks, muscle wire, thermochromic pigments, electrotextiles, and light diffusing acrylic (see Figure 1-4). Artists are experimenting with these new technologies, creating things like a quilt that doubles as a heat-map visualization of the stock market (or whatever dynamic data you link to it).

Figure 1-4. A collage of near-future tech (from left to right, top to bottom): Ambient umbrella, DrumPants, the Leap Motion Controller, Lumo Back, Myo armband, Senseg, and Tactus tablet

Sites such as Sparkfun, Parallax, or Seeed offer hundreds of different kinds of sensors (RFID, magnetic, thermal, and so on) and associated hardware with which hobbyists and businesses can tinker. Crowdfunding sites such as Kickstarter have turned many of these hobbyist projects into commercial products.

Smartphones have a dozen or more different sensors (GPS, accelerometer, and so on) built in to them, making them a lot more “aware” than most personal computers (and ready for the imaginative entrepreneur). And while most of us are focused on the apps we can build on top of these now-ubiquitous smartphone sensors, folks like Chris Harrison, a researcher at Disney Research Labs, have crafted a way to recognize the differences between various kinds of touch—fingertip, knuckle, nail, and pad—using acoustics and touch sensitivity; the existing sensors can be exploited to create new forms of interaction.

Indeed, places such as Disney Research Labs in Pittsburgh or the MIT Media Lab are hotspots for these tangible computing projects. Imagine turning a plant into a touch surface, or a surface that can sense different grips. Look further out, and projects like ZeroN show an object floating in midair, seemingly defying gravity; when moved, information is recorded and you can play back these movements!

How about a robotic glove covered with sensors and micro-ultrasound machines? Med Sensation is inventing just such a device that would allow the wearer to assess all kinds of vital information not detectable through normal human touch.

There is no shortage of exciting technologies primed to be the next big thing!

We live in a time full of opportunity for imaginative individuals. In our lifetime, we will witness the emergence of more and varied forms of human-computer interaction than ever before. And, if history is any indication (there’s generally a 20-year incubation period from invention in a laboratory to commercial product), these changes will happen inside of the next few decades.

I can’t help but wonder what happens when ordinary, physical objects, such as the sandpaper letters or counting beads of my youth, become endowed with digital properties. How far off is a future in which ordinary learning becomes endowed with digital capabilities?

Thinking with Things, Today!

Although much of this is conjecture, there are a handful of organizations exploring some basic ways to make learning both tangible and digital.

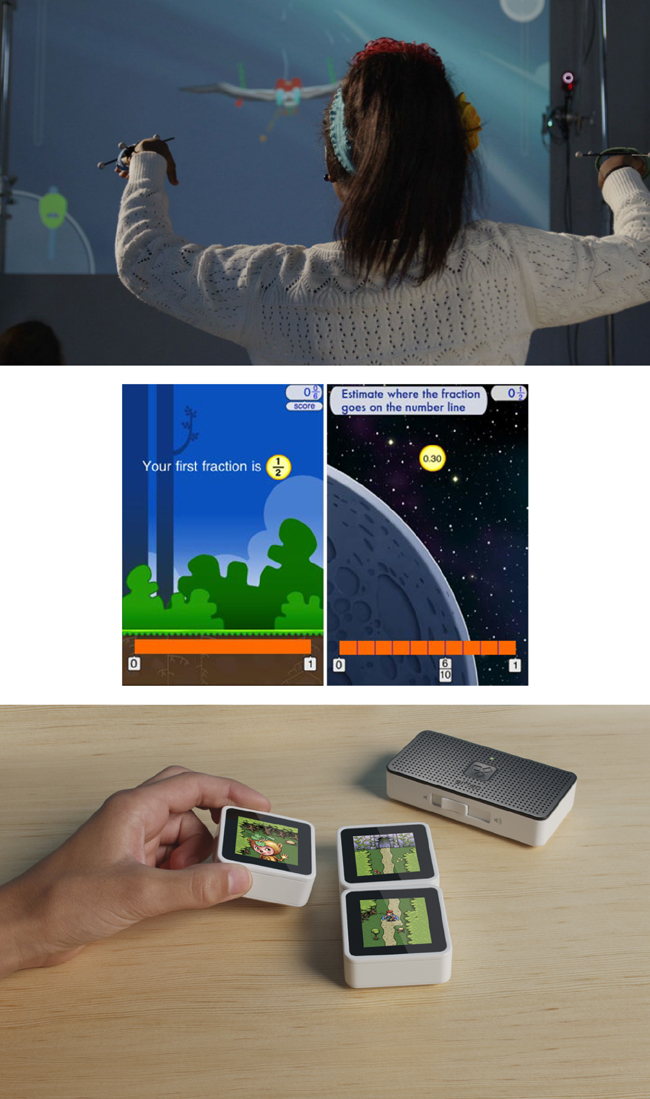

Sifteo Cubes

The most popular of these technologies is, of course, Sifteo Cubes (see Figure 1-5). Announced at the February 2009 TED conference, these “toy tiles that talk to each other” have opened the doors to new kinds of play and interaction. Each cube, aside from having a touchscreen, has the added ability to interact with other cubes based on its proximity to a neighboring cube, cube configurations, rotation, and even orientation and gesture. In various games, players essentially reposition blocks to create mazes, roll a (virtual) ball into the next block, and do any number of other things accomplished by interacting with these blocks the way you would dominoes. They’ve been aptly described as “alphabet blocks with an app store.” Commenting on what Sifteo Cubes represent, founder Dave Merrill has said, “What you can expect to see going forward are physical games that really push in the direction of social play.”

Motion Math

Similar to Sifteo Cubes, in that interaction comes through motion, is the fractions game Motion Math (Figure 1-5). This simple app for the iPhone and Android uses the accelerometer to teach fractions. Rather than tapping the correct answer or hitting a submit button, as you would with other math software, players tilt their devices left or right to direct a bouncing ball to the spot correctly matching the identified fraction; you learn fractions using hand-eye coordination and your body (or at least your forearm). And, rather than an “incorrect” response, the feedback loop of a bouncing ball allows you to playfully guide your ball to the correct spot.
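The tilt-to-steer mechanic can be sketched in a few lines of Python. This is only an illustration of the idea, not Motion Math's implementation. The 0-to-1 number line, the 30-degree tilt limit, the speed, and the landing tolerance are all assumed values, and the function names are hypothetical.

```python
# Hypothetical sketch of tilt-driven play on a number line running from 0 to 1.
# Every constant and function name here is an assumption for illustration.
MAX_TILT_DEGREES = 30.0   # tilts beyond this are treated as full tilt
MAX_SPEED = 0.5           # number-line units per second at full tilt


def tilt_to_velocity(roll_degrees):
    """Map device roll (negative = left, positive = right) to horizontal ball speed."""
    clamped = max(-MAX_TILT_DEGREES, min(MAX_TILT_DEGREES, roll_degrees))
    return clamped / MAX_TILT_DEGREES * MAX_SPEED


def step_ball(position, roll_degrees, dt):
    """Advance the ball for dt seconds, keeping it on the number line."""
    position += tilt_to_velocity(roll_degrees) * dt
    return max(0.0, min(1.0, position))


def landed_on_target(position, numerator, denominator, tolerance=0.05):
    """True when the ball sits close enough to the fraction's place on the line."""
    return abs(position - numerator / denominator) <= tolerance


if __name__ == "__main__":
    ball = 0.5                                      # start in the middle
    ball = step_ball(ball, roll_degrees=30, dt=0.5)  # hold a full right tilt
    print(ball, landed_on_target(ball, 3, 4))
```

Because the ball simply keeps responding to the tilt, a miss is never a dead end. The player just leans the other way and tries again, which is the playful feedback loop described above.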

Figure 1-5. Edu tech (from top to bottom): GameDesk’s Aero, the Motion Math app, and Sifteo Cubes

GameDesk

As exciting as Sifteo and Motion Math are, some of the best examples of whole body learning with technology would be the learning games developed by GameDesk. Take Aero, as an example. Codesigned with Bill Nye the Science Guy, Aero teaches sixth graders fundamental principles in physics and aerodynamics. How? According to GameDesk founder Lucien Vattel:

In this game, you outstretch your arms and you become a bird. It’s an accurate simulation of bird flight. And through that you get to understand the vectors: gravity, lift, drag, thrust. These concepts are not normally taught at the sixth grade level...

Vattel goes on to add that “a game can allow the concepts to be visualized, experienced...” And this is what is remarkable: that students are experiencing learning with their entire bodies, and having a blast while they’re at it. Who doesn’t want to transform into a bird and fly, if only in a simulation?

GameDesk also works with other organizations that are exploring similar approaches to learning. One of those organizations is SMALLab Learning, which has a specific focus on creating embodied learning environments. SMALLab uses motion-capture technology to track students’ movements and overlay this activity with graphs and equations that represent their motions in real time. In a lesson on centripetal force, students swing an object tethered to a rope while a digital projection on the ground explains the different forces at play. Students can “see” and experience scientific principles. “They feel it, they enact it,” says David Birchfield, co-founder of SMALLab Learning.

The technology in these examples is quite simple (for Aero, a Wiimote is hidden inside each of the wings), but the effect is dramatic. Various studies by SMALLab on the effectiveness of this kind of embodied learning show a sharp increase in learning, as evidenced by pre-, mid-, and post-test outcomes for two different control groups.

Timeless Design Principles?

Technology will change, which is why I’ve done little more here than catalog a handful of exciting advancements. What won’t change, and is needed, are principles for designing things with which to think. For this, I take an ethnographer’s definition of technology, focusing on the effect of these artifacts on a culture. Based on my work as an educator and designer, I propose the following principles for designing learning objects.

A good learning object:

- Encourages playful interactions

Aside from being fun or enjoyable, playfulness suggests you can play with it, that there is some interactivity. Learning happens through safe, nondestructive interactions, in which experimentation is encouraged. Telling me isn’t nearly as effective as letting me “figure it out on my own.” Themes of play, discovery, experimentation, and the like are common to all of the learning examples shared here. Sifteo founder Dave Merrill comments that “Like many games, [Sifteo] exercises a part of your brain, but it engages a fun play experience first and foremost.”

- Supports self-directed learning (SDL)

When learners are allowed to own their learning (determining what to learn, and how to go about filling that gap in their knowledge), they become active participants in the construction of new knowledge. This approach to learning encourages curiosity, helps to develop independent, intrinsically motivated learners, and allows for more engaged learning experiences. Contrary to what the name suggests, SDL can be highly social, but agency lies in the hands of the learner.

- Allows for self-correction

An incorrect choice, whether intended, unintended, or the result of playful interactions, should be revealed quickly (in real time if possible) so that learners can observe cause-and-effect relationships. This kind of repeated readjusting creates a tight feedback loop, ultimately leading to pattern recognition.

- Makes learning tangible

Nearly everything is experienced with and through our bodies. We learn through physical interactions with the world around us and via our various senses. Recognizing the physicality of learning, and that multimodal learning is certainly preferable, we should strive for manipulatives and environments that encourage embodied learning.

- Offers intelligent recommendations

The unique value of digital objects is their ability to record data and respond based on that data. Accordingly, these “endowed objects” should be intelligent, offering instruction or direction based on passively collected data.

Each of these principles is meant to describe a desired quality that is known or believed to bring about noticeable learning gains, compared to other learning materials. So, how might we use these principles? Let’s apply these to a few projects, old and new.

Cylinder Blocks: Good Learning Objects

In many ways, the manipulatives designed by Maria Montessori more than a century ago satisfy nearly all of these principles. Setting aside any kind of inherent intelligence, they are very capable objects.

Consider the cylinder blocks shown in Figure 1-6. You have several cylinders varying in height and/or diameter that fit perfectly into designated holes drilled into each block. One intent is to learn about volume and how the volume of a shallow disc can be the same as that of a narrow rod. Additionally, these cylinder block toys help develop a child’s visual discrimination of size and indirectly prepare a child for writing through the handling of the cylinders by their knobs.

Figure 1-6. Montessori cylinder blocks6

How do these blocks hold up?

As with nearly all of Maria Montessori’s manipulative materials, these objects are treated like toys, for children to get off the shelf and play with, satisfying our first principle, playful interactions. Because children are encouraged to discover these items for themselves, and pursue uninterrupted play (learning) time with the object, we can say it satisfies the second principle: self-directed learning. Attempting to place a cylinder into the wrong hole triggers the learning by either not fitting into the hole (too big), or standing too tall and not filling the space; students are able to quickly recognize this fact and move cylinders around until a fitting slot is found, allowing for self-correction, our third principle. Because children play with the wooden cylinders using their hands, we can safely say this satisfies our fourth principle: tangibility. Intelligence is the only missing piece.

With this kind of orientation in mind, I’d like to share a personal project I’m working on (along with a friend much more versed in the technical aspects).

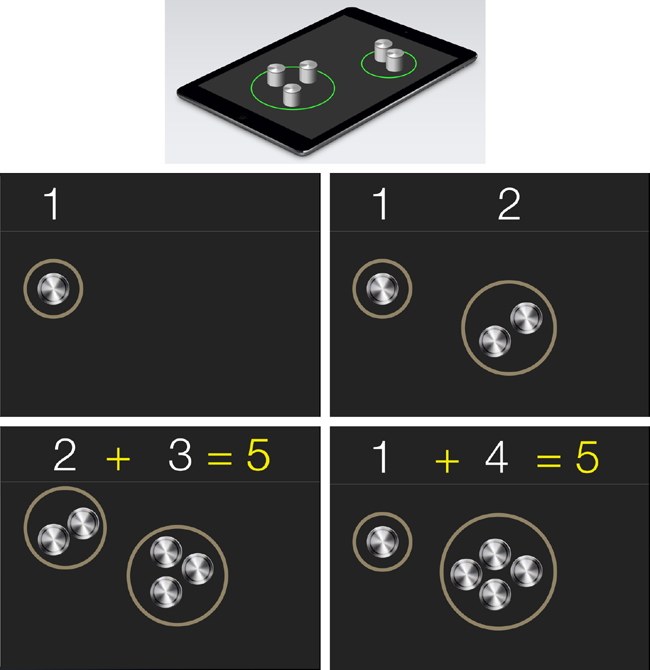

Case Study: An appcessory for early math concepts

When my kids were younger, I played a math game that never ceased to amuse them (or me, at least). The “game,” if you can call it that, consisted of grabbing a handful of Teddy Grahams snack crackers (usually off of their plate) and counting them out, one by one. I’d then do simple grouping exercises, moving crackers between two piles or counting by placing them into pairs. The real fun kicked in when we’d play subtraction. “You have seven Teddy Grahams. If Daddy eats one Teddy Graham, how many do you have left?” I think I enjoyed this more than my kids did (to be fair, I’d also make a few additional Teddy Grahams appear out of nowhere, to teach addition). All in all, this was a great way to explore early math concepts such as counting, grouping, subtraction, and addition.

So, how does this game stack up on the design principles? The learning is playful (if not downright mischievous). And the Teddy Grahams are tangible. On these two attributes my game is successful. However, the game doesn’t fare so well on the remaining principles: although my presence is not a bad thing, this doesn’t encourage self-directed learning, and the correction comes entirely from me and is not discovered. As for the intelligence, it’s dependent on my presence.

This left me wondering whether this simple game, not all that effective without my presence, could be translated into the kinds of experiences I’m describing here. Could it be improved to satisfy all five design principles?

Here’s my concept: what if we combined my pre-math Teddy Graham game with an iPad? As depicted in Figure 1-7, what if we exchanged the crackers for a set of short cylinders (like knobs on a stereo), and what if we figured out how to get these knobs talking to the iPad? Could that work? Is that possible? Even though this could be accomplished with a set of Sifteo blocks, the costs would be prohibitive for such a singular focus, especially when you’d want up to 10 knobs. I’m treating these as single-purpose objects, with the brains offloaded to the device on which they sit (in this case the iPad). Hence, the “appcessory” label.

Figure 1-7. Appcessory concept and walkthrough

Here’s a walkthrough of how the interactions might work:

Placing one of these knobs onto the surface of the iPad would produce a glowing ring and the number 1.

Adding a second knob in close proximity would make this ring larger, encircling both knobs (and changing the number to 2).

Let’s suppose you added a third knob farther away, which would create a new ring with the corresponding number 1.

Now you have two rings, one totaling 2, the other totaling 1. If you slide the lone knob close to the first two, you’d now end up with one ring, totaling 3. In this manner, and as you start to add more knobs (the iPad supports up to 10, double that of other platforms), you start to learn about grouping.

In this case, the learning is quite concrete, with the idea of numeric representations being the only abstract concept. You could then switch to an addition mode that would add up the total of however many groups of knobs are on the surface.
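The walkthrough above can be sketched in a few lines of code. What follows is a minimal illustration and not the project's actual implementation: it assumes each knob is reported as an (x, y) position on the screen, and that two knobs share a ring when they sit within some distance of each other, directly or through a chain of neighboring knobs. The names `RING_RADIUS`, `group_knobs`, `ring_counts`, and `addition_mode_total`, and the threshold value, are all hypothetical.

```python
from math import hypot

# Hypothetical proximity threshold in screen points; a real app would tune this.
RING_RADIUS = 120.0


def group_knobs(knobs, threshold=RING_RADIUS):
    """Return one list of knob positions per ring.

    Assumption: two knobs share a ring when they are within `threshold`
    of each other, directly or through a chain of intermediate knobs.
    """
    remaining = list(knobs)
    groups = []
    while remaining:
        # Start a new ring from any knob not yet assigned to one.
        group = [remaining.pop()]
        frontier = list(group)
        while frontier:
            x, y = frontier.pop()
            # Pull in every unassigned knob close enough to this one.
            near = [k for k in remaining if hypot(k[0] - x, k[1] - y) <= threshold]
            for k in near:
                remaining.remove(k)
            group.extend(near)
            frontier.extend(near)
        groups.append(group)
    return groups


def ring_counts(knobs, threshold=RING_RADIUS):
    """Number displayed inside each ring, largest ring first."""
    return sorted((len(g) for g in group_knobs(knobs, threshold)), reverse=True)


def addition_mode_total(knobs, threshold=RING_RADIUS):
    """Sum of all ring counts, as in the addition mode described above."""
    return sum(ring_counts(knobs, threshold))


if __name__ == "__main__":
    # Two knobs close together and a third farther away.
    knobs = [(100, 100), (160, 100), (600, 400)]
    print(ring_counts(knobs))          # [2, 1]
    print(addition_mode_total(knobs))  # 3
```

Sliding the lone knob to (220, 100) would merge everything into a single ring of 3, matching the walkthrough. Chained grouping is only one possible rule; a fixed ring radius around a group's center would behave differently when knobs are spread out in a line.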

I could go on, but you get the idea. By simply placing and moving knobs on a surface, the child begins to play with fundamental math concepts. As of this writing, we have proven out the functional technology, but have yet to test this with children. Although the app I’m describing could be built very quickly, my fundamental thesis is that by making these knobs something you can grasp, place, slide, move, remove, and so on, learning will be multimodal and superior to simply dragging flat circles behind glass.

How does this stack up on the five principles?

As with the earlier Teddy Grahams version, it is playful and tangible. Moving this game to a tablet device allows for self-directed learning, and for self-correction through feedback loops in the form of the rings and numerical values. As far as intelligence goes, there is no limit to the kinds of data one could program the iPad to monitor and act upon.

So where might this thinking lead, one day?

Farther Out, a Malleable Future

In the opening scenes of the Superman movie Man of Steel, among the many pieces of Kryptonian technology we see are communication devices whose form is constantly changing: a tangible, monochromatic hologram, if you will. Imagine thousands of tiny metal beads moving and reshaping as needed. Even though this makes for a nice bit of sci-fi eye candy, it’s also technology that MIT’s Tangible Media Group, led by Professor Hiroshi Ishii, is currently exploring. In their own words, this work “explores the ‘Tangible Bits’ vision to seamlessly couple the dual world of bits and atoms by giving physical form to digital information.” They are creating objects (the “tangible bits”) that can change shape!

Even though the team’s vision of “radical atoms” is still in the realm of the hypothetical, the steps they are taking to get there are no less inspiring. Their latest example of tangible bits is a table that can render 3D content physically, so users can interact with digital information in a tangible way. In their video demonstration, a remote participant in a video conference moves his hands, and in doing so reshapes the surface of a table, rolling a ball around. The technology is at once both awe-inspiring and crude; the wooden pegs moving up and down to define form aren’t that unlike the pin art toys we see marketed to children. Having said that, it’s easy to imagine something like this improving in fidelity over time, in the same way that the early days of monochromatic 8-bit pixels gave way to retina displays and photorealistic images.

I mention this example because it’s easy to diminish the value of tangible interactions when compared to the mutability of pixels behind glass; a single device such as a smartphone or tablet can become so many things, if only at the cost of tangibility. Our current thinking says, “Why create more ‘stuff’ that only serves a single purpose?” And this makes sense. I recall the first app for musicians that I downloaded to my iPhone: a simple metronome. For a few dollars, I was able to download the virtual equivalent of an otherwise very expensive piece of hardware. It dawned on me: if indeed the internal electronics are comparable to those contained in the hardware, there will be a lot of companies threatened by this disruption. This ability to download, for little or no cost, an app that as an object would have cost much more (not to mention added clutter) is a great shift for society.

What if physical objects could reshape themselves in the same way that pixels do? What if one device, or really a blob of beads, could reshape into a nearly infinite number of things? What if bits and atoms become nearly indistinguishable? Can we have physical interactions that can also dynamically change form to be 1,000 different things? Or, at a minimum, can the interface do more than resemble buttons; perhaps it could shape itself into the buttons and switches of last century and then flatten out again into some new form. How does the role of interaction designer change when your interface is a sculpted, changing thing? So long as we’re looking out into possible futures, this kind of thinking isn’t implausible, and should set some direction.

Nothing New Under the Sun

While much of this looks to a future in which physical and digital converge, there is one field that has been exploring this intersection for some time now: museums.

Museums are amazing incubators for what’s next in technology. These learning environments have to engage visitors through visuals, interactions, stories, and other means, which often leads to (at least in the modern museum) spaces that are both tangible and take advantage of digital interactions. The self-directed pace at which visitors move through an exhibit pressures all museum designers to create experiences that are both informative and entertaining. And many artists and technologists are eager, within the stated goals of an exhibit, to try new things.

Take, for example, the Te Papa Tongarewa museum in Wellington, New Zealand. Because New Zealand is an island nation formed from the collision of two tectonic plates, you can expect volcanoes, earthquakes, and all things geothermal to get some attention. As visitors move about the space, they are invited to learn about various topics in some amazing and inventive ways. When it comes to discussions of mass and density, there are three bowling ball–sized rocks ready for you to lift; they are all the same size, but the weight varies greatly. When learning about tectonic shifts, you turn a crank that then displaces two halves of a map (along with sound effects), effectively demonstrating what has happened to New Zealand over millions of years, and what is likely to happen in the future. Visitors are encouraged to step into a house in which they can experience the simulation of an earthquake. The common denominator between these and dozens more examples is that through a combination of technology and tangible interactions, visitors are encouraged to interact with and construct their own knowledge.

Closing

Novelist William Gibson once commented that future predictions are often guilty of selectively amplifying the observed present. Steam power. Robots. Many of us are being handed a future preoccupied with touchscreens and projections. In “A Brief Rant on the Future of Interaction Design,” designer and inventor Bret Victor offers a brilliant critique of this “future behind glass,” and reminds us that there are many more forms of interaction of which we have yet to take advantage. As he says, “Why aim for anything less than a dynamic medium that we can see, feel, and manipulate?”

To limit our best imaginings of the future, and the future of learning, to touching a flat surface ignores 1) a body of research into tangible computing, 2) signs of things to come, and 3) centuries of accumulated knowledge about how we—as human creatures—learn best. Whether it’s the formal learning of schools or the informal learning required of an information age, we need to actively think about how to best make sense of our world. And all that we know (and are learning) about our bodies and how we come to “know” as human beings cries out for more immersive, tangible forms of interaction. I look forward to a union of sorts, when bits versus atoms will cease to be a meaningful distinction. I look to a future when objects become endowed with digital properties, and digital objects get out from behind the screen. The future is in our grasp.

1 http://www.lego-calendar.com

2 http://www.special-projects-studio.com

3 Photo by Jennifer Morrow (https://www.flickr.com/photos/asadotzler/8447477253) CC-BY-2.0 (http://creativecommons.org/licenses/by/2.0)

4 Photo by Dean Strelau (https://www.flickr.com/photos/dstrelau/5859068224) CC-BY-2.0 (http://creativecommons.org/licenses/by/2.0)

5 As featured on Montessori Outlet (http://www.montessorioutlet.com)

6 As featured on Montessori Outlet (http://www.montessorioutlet.com)
