Chapter 1. Why Distributed?

Node.js is a self-contained runtime for running JavaScript code on the server. It provides a JavaScript language engine and dozens of APIs, many of which allow application code to interact with the underlying operating system and the world outside of it. But you probably already knew that.

This chapter takes a high-level look at Node.js, in particular how it relates to this book. It looks at the single-threaded nature of JavaScript, simultaneously one of its greatest strengths and greatest weaknesses, and part of the reason why it's so important to run Node.js in a distributed manner.

It also contains a small pair of sample applications that are used as a baseline, only to be upgraded numerous times throughout the book. The first iteration of these applications is likely simpler than anything you've previously shipped to production.

If you find that you already know the information in these first few sections, then feel free to skip directly to "Sample Applications".

The JavaScript language is transitioning from being a single-threaded language to being a multithreaded language. The Atomics object, for example, provides mechanisms to coordinate communication across different threads, while instances of SharedArrayBuffer can be written to and read from across threads. That said, as of this writing, multithreaded JavaScript still hasn't caught on within the community.

JavaScript today is multithreaded, but it's still the nature of the language, and of the ecosystem, to be single-threaded.

The Single-Threaded Nature of JavaScript

JavaScript, like most programming languages, makes heavy use of functions. Functions are a way to combine units of related work. Functions can call other functions as well. Each time one function calls another function, it adds frames to the call stack, which is a fancy way of saying the stack of currently run functions is getting taller. When you accidentally write a recursive function that would otherwise run forever, you're usually greeted with a RangeError: Maximum call stack size exceeded error. When this happens you've reached the maximum limit of frames in the call stack.

Note

The maximum call stack size is usually inconsequential and is chosen by the JavaScript engine. The V8 JavaScript engine used by Node.js v14 has a maximum call stack size of more than 15,000 frames.

However, JavaScript is different from some other languages in that it does not constrain itself to running within a single call stack throughout the lifetime of a JavaScript application. For example, when I wrote PHP several years ago, the entire lifetime of a PHP script (a lifetime tied directly to the time it takes to serve an HTTP request) correlated to a single stack, growing and shrinking and then disappearing once the request was finished.

JavaScript handles concurrency (performing multiple things at the same time) by way of an event loop. The event loop used by Node.js is covered more in "The Node.js Event Loop", but for now just think of it as an infinitely running loop that continuously checks to see if there is work to perform. When it finds something to do, it begins its task (in this case it executes a function with a new call stack), and once the function is complete, it waits until more work is ready to be performed.

The code sample in Example 1-1 is an example of this happening. First, it runs the a() function in the current stack. It also calls the setTimeout() function that will queue up the x() function. Once the current stack completes, the event loop checks for more work to do. The event loop gets to check for more work to do only once a stack is complete. It isn't, for example, checking after every instruction. Since there's not a lot going on in this simple program, the x() function will be the next thing that gets run after the first stack completes.

Example 1-1. Example of multiple JavaScript stacks

    function a() { b(); }
    function b() { c(); }
    function c() { /**/ }

    function x() { y(); }
    function y() { z(); }
    function z() { /**/ }

    setTimeout(x, 0);
    a();

Figure 1-1 is a visualization of the preceding code sample. Notice how there are two separate stacks and that each stack increases in depth as more functions are called. The horizontal axis represents time; code within each function naturally takes time to execute.

Figure 1-1. Visualization of multiple JavaScript stacks

The setTimeout() function is essentially saying, "Try to run the provided function 0ms from now." However, the x() function doesn't run immediately, as the a() call stack is still in progress. It doesn't even run immediately after the a() call stack is complete, either. The event loop takes a nonzero amount of time to check for more work to perform. It also takes time to prepare the new call stack. So, even though x() was scheduled to run in 0ms, in practice it may take a few milliseconds before the code runs, a discrepancy that increases as application load increases.

Another thing to keep in mind is that functions can take a long time to run. If the a() function took 100ms to run, then the earliest you should expect x() to run might be 101ms. Because of this, think of the time argument as the earliest time the function can be called. A function that takes a long time to run is said to block the event loop: since the application is stuck processing slow synchronous code, the event loop is temporarily unable to process further tasks.
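The delay caused by a blocking function can be observed directly. The following is a minimal sketch rather than one of the book's examples; the busyWait() and measureTimerDelay() helpers are hypothetical names introduced only for illustration, and the 100ms figure mirrors the scenario described above.

```javascript
// Hypothetical helper: synchronously burn CPU for roughly `ms` milliseconds
function busyWait(ms) {
  const end = Date.now() + ms;
  while (Date.now() < end) { /* block the event loop */ }
}

// Resolves with the measured delay before a 0ms timer callback actually runs
function measureTimerDelay(blockMs) {
  return new Promise((resolve) => {
    const scheduled = Date.now();
    setTimeout(() => resolve(Date.now() - scheduled), 0);
    busyWait(blockMs); // the timer cannot fire until this call returns
  });
}

measureTimerDelay(100).then((delay) => {
  console.log(`0ms timer fired after ${delay}ms`);
});
```

The reported delay should be at least as long as the blocking call, which is why the time argument is best read as a lower bound.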

Now that call stacks are out of the way, it's time for the interesting part of this section.

Since JavaScript applications are mostly run in a single-threaded manner, two call stacks won't exist at the same time, which is another way of saying that two functions cannot run in parallel.1 This implies that multiple copies of an application need to be run simultaneously by some means to allow the application to scale.

Several tools are available to make it easier to manage multiple copies of an application. "The Cluster Module" looks at using the built-in cluster module for routing incoming HTTP requests to different application instances. The built-in worker_threads module also helps run multiple JavaScript instances at once. The child_process module can be used to spawn and manage a full Node.js process as well.

However, with each of these approaches, JavaScript still can run only a single line of JavaScript at a time within an application. This means that with each solution, each JavaScript environment still has its own distinct global variables, and no object references can be shared between them.

Since objects cannot be directly shared with the three aforementioned approaches, some other method for communicating between the different isolated JavaScript contexts is needed. Such a feature does exist and is called message passing. Message passing works by sharing some sort of serialized representation of an object/data (such as JSON) between the separate isolates. This is necessary because directly sharing objects is impossible, not to mention that it would be a painful debugging experience if two separate isolates could modify the same object at the same time. These types of issues are referred to as deadlocks and race conditions.
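As a rough illustration of message passing, the sketch below sends an object to a worker thread and receives a modified copy back. It is an assumption-laden example, not code from the book: the roundTrip() helper and the inlined worker source are hypothetical, and the worker is created with the eval option purely to keep everything in one file. Note that worker_threads copies messages using the structured clone algorithm rather than JSON, but the consequence is the same: each side works with its own copy.

```javascript
const { Worker } = require('worker_threads');

// Worker source is inlined via `eval: true` only to keep the sketch in one file
const workerSource = `
  const { parentPort } = require('worker_threads');
  parentPort.on('message', (msg) => {
    msg.visited = true; // mutates the worker's own copy only
    parentPort.postMessage(msg);
  });
`;

// Hypothetical helper: send a payload to a fresh worker and await its reply
function roundTrip(payload) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true });
    worker.once('message', (reply) => {
      worker.terminate();
      resolve(reply);
    });
    worker.once('error', reject);
    worker.postMessage(payload); // the payload is cloned, not shared
  });
}

const original = { id: 42, visited: false };
roundTrip(original).then((reply) => {
  console.log(reply.visited);    // true: the worker changed its copy
  console.log(original.visited); // false: the caller's object is untouched
});
```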

Note

By using worker_threads it is possible to share memory between two different JavaScript instances. This can be done by creating an instance of SharedArrayBuffer and passing it from one thread to another using the same postMessage(value) method used for worker thread message passing. This results in an array of bytes that both threads can read and write to at the same time.
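The shared-memory approach can be sketched as follows. This is an illustrative example under stated assumptions: the addInWorker() helper and the inlined worker source are hypothetical, and Atomics is used so that the read and write are well defined across threads.

```javascript
const { Worker } = require('worker_threads');

// Inlined worker source; it writes into the memory it receives
const workerSource = `
  const { parentPort } = require('worker_threads');
  parentPort.on('message', (shared) => {
    const view = new Int32Array(shared);
    Atomics.add(view, 0, 5); // write directly into the shared memory
    parentPort.postMessage('done');
  });
`;

// Hypothetical helper: share a buffer with a worker and read the result
function addInWorker() {
  return new Promise((resolve, reject) => {
    const shared = new SharedArrayBuffer(4); // room for one 32-bit integer
    const view = new Int32Array(shared);
    view[0] = 37;
    const worker = new Worker(workerSource, { eval: true });
    worker.once('message', () => {
      worker.terminate();
      resolve(Atomics.load(view, 0));
    });
    worker.once('error', reject);
    worker.postMessage(shared); // the buffer is shared, not copied
  });
}

addInWorker().then((value) => console.log(value)); // 42
```

Unlike ordinary message passing, the main thread sees the worker's write without any value being sent back.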

Overhead is incurred with message passing when data is serialized and deserialized. Such overhead doesn't need to exist in languages that support proper multithreading, as objects can be shared directly.

This is one of the biggest factors that necessitates running Node.js applications in a distributed manner. In order to handle scale, enough instances need to run so that any single instance of a Node.js process doesn't completely saturate its available CPU.

Now that you've looked at JavaScript, the language that powers Node.js, it's time to look at Node.js itself.

The solution to the surprise interview question is provided in Table 1-1. The most important part is the order that the messages print, and the bonus is the time it takes them to print. Consider your bonus answer correct if you're within a few milliseconds of the timing.

| Log  | B   | E     | A     | D     | C     |
|------|-----|-------|-------|-------|-------|
| Time | 1ms | 501ms | 502ms | 502ms | 502ms |

The first thing that happens is the function to log A is scheduled with a timeout of 0ms. Recall that this doesn't mean the function will run in 0ms; instead it is scheduled to run as early as 0 milliseconds but after the current stack ends. Next, the log B method is called directly, so it's the first to print. Then, the log C function is scheduled to run as early as 100ms, and the log D is scheduled to happen as early as 0ms.

Then the application gets busy doing calculations with the while loop, which eats up half a second of CPU time. Once the loop concludes, the final call for log E is made directly and it is now the second to print. The current stack is now complete. At this point, only a single stack has executed.

Once that's done, the event loop looks for more work to do. It checks the queue and sees that there are three tasks scheduled to happen. The order of items in the queue is based on the provided timer value and the order that the setTimeout() calls were made. So, it first processes the log A function. At this point the script has been running for roughly half a second, and it sees that log A is roughly 500ms overdue, and so that function is executed. The next item in the queue is the log D function, which is also roughly 500ms overdue. Finally, the log C function is run and is roughly 400ms overdue.
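The listing that this walkthrough describes is not reproduced in this section. The sketch below is a reconstruction based only on the description above, so treat it as an assumption rather than the original code; the log() helper and the output array are additions that make the print order easy to inspect.

```javascript
const output = []; // collected only so the order can be inspected afterward
const log = (label) => { output.push(label); console.log(label); };

setTimeout(() => log('A'), 0);   // runs as early as 0ms, after this stack
log('B');                        // called directly
setTimeout(() => log('C'), 100); // runs as early as 100ms
setTimeout(() => log('D'), 0);   // runs as early as 0ms

// Busy loop that occupies the current stack for roughly half a second
const end = Date.now() + 500;
while (Date.now() < end) { /* spin */ }

log('E');                        // called directly, ends the first stack
```

Running it should print B, E, A, D, C, matching the order in Table 1-1; the exact timings will vary by machine.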

Quick Node.js Overview

Node.js fully embraces the Continuation-Passing Style (CPS) pattern throughout its internal modules by way of callbacks, which are functions that are passed around and invoked by the event loop once a task is complete. In Node.js parlance, functions that are invoked in the future with a new stack are said to be run asynchronously. Conversely, when one function calls another function in the same stack, that code is said to run synchronously.

The types of tasks that are long-running are typically I/O tasks. For example, imagine that your application wants to perform two tasks. Task A is to read a file from disk, and Task B is to send an HTTP request to a third-party service. If an operation depends on both of these tasks being performed (an operation such as responding to an incoming HTTP request), the application can perform the operations in parallel, as shown in Figure 1-2. If they couldn't be performed at the same time, and instead had to be run sequentially, then the overall time it takes to respond to the incoming HTTP request would be longer.

Figure 1-2. Visualization of sequential versus parallel I/O

At first this seems to violate the single-threaded nature of JavaScript. How can a Node.js application both read data from disk and make an HTTP request at the same time if JavaScript is single-threaded?

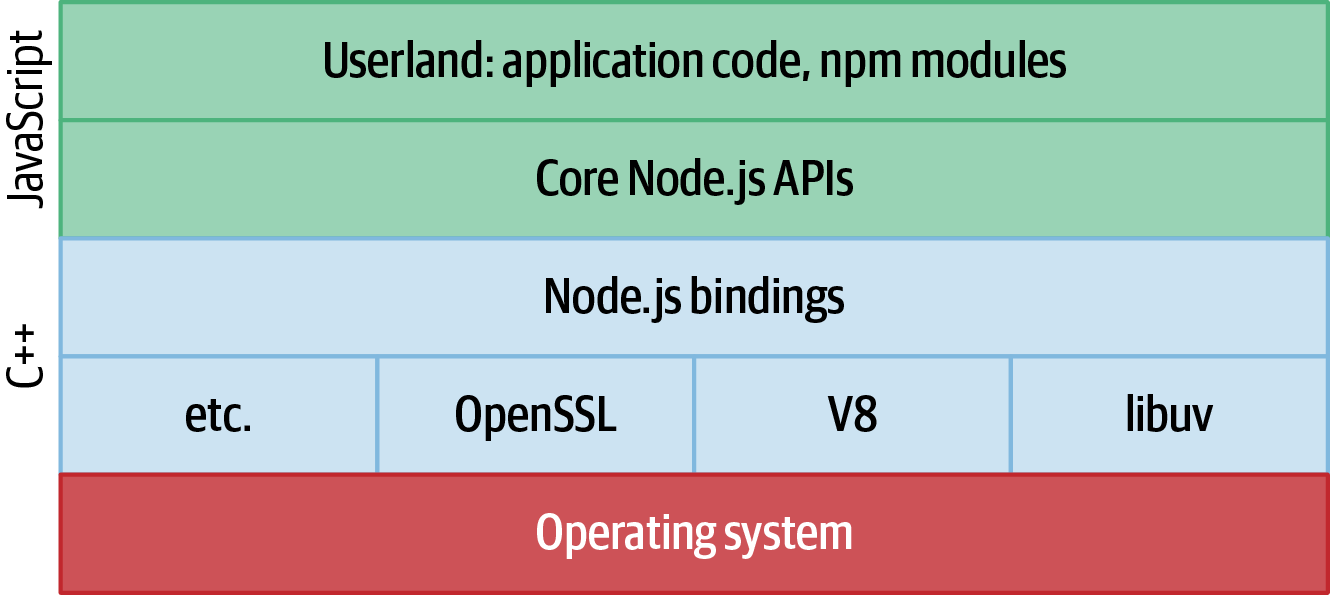

This is where things start to get interesting. Node.js itself is multithreaded. The lower levels of Node.js are written in C++. This includes third-party tools like libuv, which handles operating system abstractions and I/O, as well as V8 (the JavaScript engine) and other third-party modules. The layer above that, the Node.js binding layer, also contains a bit of C++. It's only the highest layers of Node.js that are written in JavaScript, such as parts of the Node.js APIs that deal directly with objects provided by userland.2 Figure 1-3 depicts the relationship between these different layers.

Figure 1-3. The layers of Node.js

Internally, libuv maintains a thread pool for managing I/O operations, as well as CPU-heavy operations like crypto and zlib. This is a pool of finite size where I/O operations are allowed to happen. If the pool only contains four threads, then only four files can be read at the same time. Consider Example 1-3 where the application attempts to read a file, does some other work, and then deals with the file content. Although the JavaScript code within the application is able to run, a thread within the bowels of Node.js is busy reading the content of the file from disk into memory.

Example 1-3. Node.js threads

    #!/usr/bin/env node

    const fs = require('fs');

    fs.readFile('/etc/passwd', (err, data) => {
      if (err) throw err;
      console.log(data);
    });

    setImmediate(() => {
      console.log('This runs while file is being read');
    });

Tip

The libuv thread pool size defaults to four, has a max of 1,024, and can be overridden by setting the UV_THREADPOOL_SIZE=<threads> environment variable. In practice it's not that common to modify it, and doing so should only happen after benchmarking the effects in a perfect replication of production. An app running locally on a macOS laptop will behave very differently than one in a container on a Linux server.

Internally, Node.js maintains a list of asynchronous tasks that still need to be completed. This list is used to keep the process running. When a stack completes and the event loop looks for more work to do, if there are no more operations left to keep the process alive, it will exit. That is why a very simple application that does nothing asynchronous is able to exit when the stack ends. Here's an example of such an application:

console.log('Print, then exit');

However, once an asynchronous task has been created, this is enough to keep a process alive, like in this example:

    setInterval(() => {
      console.log('Process will run forever');
    }, 1_000);

There are many Node.js API calls that result in the creation of objects that keep the process alive. As another example of this, when an HTTP server is created, it also keeps the process running forever. A process that closes immediately after an HTTP server is created wouldn't be very useful.

There is a common pattern in the Node.js APIs where such objects can be configured to no longer keep the process alive. Some of these are more obvious than others. For example, if a listening HTTP server port is closed, then the process may choose to end. Additionally, many of these objects have a pair of methods attached to them, .unref() and .ref(). The former method is used to tell the object to no longer keep the process alive, whereas the latter does the opposite.

Example 1-4 demonstrates this happening.

Example 1-4. The common .ref() and .unref() methods

    const t1 = setTimeout(() => {}, 1_000_000); // (1)
    const t2 = setTimeout(() => {}, 2_000_000); // (2)
    // ...
    t1.unref(); // (3)
    // ...
    clearTimeout(t2); // (4)

(1) There is now one asynchronous operation keeping Node.js alive. The process should end in 1,000 seconds.

(2) There are now two such operations. The process should now end in 2,000 seconds.

(3) The t1 timer has been unreferenced. Its callback can still run in 1,000 seconds, but it won't keep the process alive.

(4) The t2 timer has been cleared and will never run. A side effect of this is that it no longer keeps the process alive. With no remaining asynchronous operations keeping the process alive, the next iteration of the event loop ends the process.

This example also highlights another feature of Node.js: not all of the APIs that exist in browser JavaScript behave the same way in Node.js. The setTimeout() function, for example, returns an integer in web browsers. The Node.js implementation returns an object with several properties and methods.
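The difference is easy to confirm from a Node.js script. The following is a small sketch, not part of the book's examples; it relies on the hasRef() method that Node.js timer objects expose alongside .ref() and .unref().

```javascript
const timer = setTimeout(() => {}, 1_000);

console.log(typeof timer);   // 'object' in Node.js (a number in browsers)
console.log(timer.hasRef()); // true: the timer keeps the process alive

timer.unref();
console.log(timer.hasRef()); // false: the process may exit before it fires

clearTimeout(timer); // cancel the timer so the sketch exits right away
```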

The event loop has been mentioned a few times, but it really deserves to be looked at in much more detail.

The Node.js Event Loop

Both the JavaScript that runs in your browser and the JavaScript that runs in Node.js come with an implementation of an event loop. They're similar in that they both schedule and execute asynchronous tasks in separate stacks. But they're also different since the event loop used in a browser is optimized to power modern single page applications, while the one in Node.js has been tuned for use in a server. This section covers, at a high level, the event loop used in Node.js. Understanding the basics of the event loop is beneficial because it handles all the scheduling of your application code, and misconceptions can lead to poor performance.

As the name implies, the event loop runs in a loop. The elevator pitch is that it manages a queue of events that are used to trigger callbacks and move the application along. But, as you might expect, the implementation is much more nuanced than that. It executes callbacks when I/O events happen, like a message being received on a socket, a file changing on disk, a setTimeout() callback being ready to run, etc.

At a low level, the operating system notifies the program that something has happened. Then, libuv code inside the program springs to life and figures out what to do. If appropriate, the message then bubbles up to code in a Node.js API, and this can finally trigger a callback in application code. The event loop is a way to allow these events in lower level C++ land to cross the boundary and run code in JavaScript.

Event Loop Phases

The event loop has several different phases to it. Some of these phases don't deal with application code directly; for example, some might involve running JavaScript code that internal Node.js APIs are concerned about. An overview of the phases that handle the execution of userland code is provided in Figure 1-4.

Each one of these phases maintains a queue of callbacks that are to be executed. Callbacks are destined for different phases based on how they are used by the application. Here are some details about these phases:

- Poll: The poll phase executes I/O-related callbacks. This is the phase that application code is most likely to execute in. When your main application code starts running, it runs in this phase.

- Check: In this phase, callbacks that are triggered via setImmediate() are executed.

- Close: This phase executes callbacks that are triggered via EventEmitter close events. For example, when a net.Server TCP server closes, it emits a close event that runs a callback in this phase.

- Timers: Callbacks scheduled using setTimeout() and setInterval() are executed in this phase.

- Pending: Special system events are run in this phase, like when a net.Socket TCP socket throws an ECONNREFUSED error.

To make things a little more complicated, there are also two special microtask queues that can have callbacks added to them while a phase is running. The first microtask queue handles callbacks that have been registered using process.nextTick().3 The second microtask queue handles promises that reject or resolve. Callbacks in the microtask queues take priority over callbacks in the phase's normal queue, and callbacks in the next tick microtask queue run before callbacks in the promise microtask queue.

Figure 1-4. Notable phases of the Node.js event loop

When the application starts running, the event loop is also started and the phases are handled one at a time. Node.js adds callbacks to different queues as appropriate while the application runs. When the event loop gets to a phase, it will run all the callbacks in that phase's queue. Once all the callbacks in a given phase are exhausted, the event loop then moves on to the next phase. If the application runs out of things to do but is waiting for I/O operations to complete, it'll hang out in the poll phase.

Code Example

Theory is nice and all, but to truly understand how the event loop works, you're going to have to get your hands dirty. This example uses the poll, check, and timers phases. Create a file named event-loop-phases.js and add the content from Example 1-5 to it.

Example 1-5. event-loop-phases.js

    const fs = require('fs');

    setImmediate(() => console.log(1));
    Promise.resolve().then(() => console.log(2));
    process.nextTick(() => console.log(3));
    fs.readFile(__filename, () => {
      console.log(4);
      setTimeout(() => console.log(5));
      setImmediate(() => console.log(6));
      process.nextTick(() => console.log(7));
    });
    console.log(8);

If you feel inclined, try to guess the order of the output, but don't feel bad if your answer doesn't match up. This is a bit of a complex subject.

The script starts off executing line by line in the poll phase. First, the fs module is required, and a whole lot of magic happens behind the scenes. Next, the setImmediate() call is run, which adds the callback printing 1 to the check queue. Then, the promise resolves, adding callback 2 to the promise microtask queue. process.nextTick() runs next, adding callback 3 to the next tick microtask queue. Once that's done the fs.readFile() call tells the Node.js APIs to start reading a file, placing its callback in the poll queue once it's ready. Finally, log number 8 is called directly and is printed to the screen.

That's it for the current stack. Now the two microtask queues are consulted. The next tick microtask queue is always checked first, and callback 3 is called. Since there's only one callback in the next tick microtask queue, the promise microtask queue is checked next. Here callback 2 is executed. That finishes the two microtask queues, and the current poll phase is complete.

Now the event loop enters the check phase. This phase has callback 1 in it, which is then executed. Both the microtask queues are empty at this point, so the check phase ends. The close phase is checked next but is empty, so the loop continues. The same happens with the timers phase and the pending phase, and the event loop continues back around to the poll phase.

Once it's back in the poll phase, the application doesn't have much else going on, so it basically waits until the file has finished being read. Once that happens the fs.readFile() callback is run.

The number 4 is immediately printed since it's the first line in the callback. Next, the setTimeout() call is made and callback 5 is added to the timers queue. The setImmediate() call happens next, adding callback 6 to the check queue. Finally, the process.nextTick() call is made, adding callback 7 to the next tick microtask queue. The poll queue is now finished, and the microtask queues are again consulted. Callback 7 runs from the next tick queue, the promise queue is consulted and found empty, and the poll phase ends.

Again, the event loop switches to the check phase where callback 6 is encountered. The number is printed, the microtask queues are determined to be empty, and the phase ends. The close phase is checked again and found empty. Finally the timers phase is consulted wherein callback 5 is executed. Once that's done, the application doesn't have any more work to do and it exits.

The log statements have been printed in this order: 8, 3, 2, 1, 4, 7, 6, 5.

When it comes to async functions, and operations that use the await keyword, code still plays by the same event loop rules. The main difference ends up being the syntax.

Here is an example of some complex code that interleaves awaited statements with statements that schedule callbacks in a more straightforward manner. Go through it and write down the order in which you think the log statements will be printed:

    const sleep_st = (t) => new Promise((r) => setTimeout(r, t));
    const sleep_im = () => new Promise((r) => setImmediate(r));

    (async () => {
      setImmediate(() => console.log(1));
      console.log(2);
      await sleep_st(0);
      setImmediate(() => console.log(3));
      console.log(4);
      await sleep_im();
      setImmediate(() => console.log(5));
      console.log(6);
      await 1;
      setImmediate(() => console.log(7));
      console.log(8);
    })();

When it comes to async functions and statements preceded with await, you can almost think of them as being syntactic sugar for code that uses nested callbacks, or even as a chain of .then() calls. The following example is another way to think of the previous example. Again, look at the code and write down the order in which you think the log commands will print:

    setImmediate(() => console.log(1));
    console.log(2);
    Promise.resolve().then(() => setTimeout(() => {
      setImmediate(() => console.log(3));
      console.log(4);
      Promise.resolve().then(() => setImmediate(() => {
        setImmediate(() => console.log(5));
        console.log(6);
        Promise.resolve().then(() => {
          setImmediate(() => console.log(7));
          console.log(8);
        });
      }));
    }, 0));

Did you come up with a different solution when you read this second example? Did it seem easier to reason about? This time around, you can more easily apply the same rules about the event loop that have already been covered. In this example it's hopefully clearer that, even though the resolved promises make it look like the code that follows should be run much earlier, they still have to wait for the underlying setTimeout() or setImmediate() calls to fire before the program can continue.

The log statements have been printed in this order: 2, 1, 4, 3, 6, 8, 5, 7.

Event Loop Tips

When it comes to building a Node.js application, you don't necessarily need to know this level of detail about the event loop. In a lot of cases it "just works" and you usually don't need to worry about which callbacks are executed first. That said, there are a few important things to keep in mind when it comes to the event loop.

Don't starve the event loop. Running too much code in a single stack will stall the event loop and prevent other callbacks from firing. One way to fix this is to break CPU-heavy operations up across multiple stacks. For example, if you need to process 1,000 data records, you might consider breaking it up into 10 batches of 100 records, using setImmediate() at the end of each batch to continue processing the next batch. Depending on the situation, it might make more sense to offload processing to a child process.
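The batching idea can be sketched as follows. This is one possible shape rather than a prescribed implementation; the processInBatches() function and its handle and done parameters are hypothetical names introduced for illustration.

```javascript
// Process `records` in batches, yielding to the event loop between batches.
// `handle` is a hypothetical per-record function supplied by the caller.
function processInBatches(records, batchSize, handle, done) {
  let index = 0;
  function runBatch() {
    const stop = Math.min(index + batchSize, records.length);
    for (; index < stop; index++) {
      handle(records[index]);
    }
    if (index < records.length) {
      setImmediate(runBatch); // continue in a new stack, after pending I/O
    } else {
      setImmediate(done); // keep the completion callback asynchronous too
    }
  }
  setImmediate(runBatch);
}

// 1,000 records handled as 10 batches of 100
const records = Array.from({ length: 1000 }, (_, i) => i);
let sum = 0;
processInBatches(records, 100, (n) => { sum += n; }, () => {
  console.log(sum); // 499500
});
```

Because each batch ends its own stack, callbacks for timers and incoming I/O get a chance to run between batches.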

You should never break up such work using process.nextTick(). Doing so will lead to a microtask queue that never empties, and your application will be trapped in the same phase forever! Unlike an infinitely recursive function, the code won't throw a RangeError. Instead, it'll remain a zombie process that eats through CPU. Check out the following for an example of this:

    const nt_recursive = () => process.nextTick(nt_recursive);
    nt_recursive(); // setInterval will never run

    const si_recursive = () => setImmediate(si_recursive);
    si_recursive(); // setInterval will run

    setInterval(() => console.log('hi'), 10);

In this example, the setInterval() represents some asynchronous work that the application performs, such as responding to incoming HTTP requests. Once the nt_recursive() function is run, the application ends up with a microtask queue that never empties and the asynchronous work never gets processed. But the alternative version si_recursive() does not have the same side effect. Making setImmediate() calls within a check phase adds callbacks to the next event loop iteration's check phase queue, not the current phase's queue.

Don't introduce Zalgo. When exposing a method that takes a callback, that callback should always be run asynchronously. For example, it's far too easy to write something like this:

    // Antipattern
    function foo(count, callback) {
      if (count <= 0) {
        return callback(new TypeError('count > 0'));
      }
      myAsyncOperation(count, callback);
    }

The callback is sometimes called synchronously, like when count is set to zero, and sometimes asynchronously, like when count is set to one. Instead, ensure the callback is executed in a new stack, like in this example:

    function foo(count, callback) {
      if (count <= 0) {
        return process.nextTick(() => callback(new TypeError('count > 0')));
      }
      myAsyncOperation(count, callback);
    }

In this case, either using setImmediate() or process.nextTick() is okay; just make sure you don't accidentally introduce recursion. With this reworked example, the callback is always run asynchronously. Ensuring the callback is run consistently is important because of the following situation:

    let bar = false;
    foo(3, () => {
      assert(bar);
    });
    bar = true;

This might look a bit contrived, but essentially the problem is that when the callback is sometimes run synchronously and sometimes run asynchronously, the value of bar may or may not have been modified. In a real application this can be the difference between accessing a variable that may or may not have been properly initialized.
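To see the consistent behavior in action, the reworked foo() can be paired with a stand-in for the asynchronous operation. The sketch below is illustrative only: this myAsyncOperation() is a stub that assumes the real operation completes in a later stack, and probe() is a hypothetical helper that records whether the calling stack had finished when the callback ran.

```javascript
// Stand-in for real asynchronous work; assumed to complete in a later stack
function myAsyncOperation(count, callback) {
  setImmediate(() => callback(null, count));
}

function foo(count, callback) {
  if (count <= 0) {
    return process.nextTick(() => callback(new TypeError('count > 0')));
  }
  myAsyncOperation(count, callback);
}

// Reports whether the callback ran after the calling stack finished
function probe(count, report) {
  let stackFinished = false;
  foo(count, (err) => report(count, stackFinished, err));
  stackFinished = true;
}

probe(0, (count, wasAsync) => console.log(count, wasAsync)); // 0 true
probe(3, (count, wasAsync) => console.log(count, wasAsync)); // 3 true
```

With the antipattern version of foo(), the first probe would instead report false, since the callback would run before stackFinished is set.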

Now that you're a little more familiar with the inner workings of Node.js, it's time to build out some sample applications.

Sample Applications

In this section you'll build a pair of small sample Node.js applications. They are intentionally simple and lack features that real applications require. You'll then add to the complexity of these base applications throughout the remainder of the book.

I struggled with the decision to avoid using any third-party packages in these examples (for example, to stick with the internal http module), but using these packages reduces boilerplate and increases clarity. That said, feel free to choose whatever your preferred framework or request library is; it's not the intent of this book to ever prescribe a particular package.

By building two services instead of just one, you can combine them later in interesting ways, like choosing the protocol they communicate with or the manner in which they discover each other.

The first application, namely the recipe-api, represents an internal API that isn't accessed from the outside world; it'll only be accessed by other internal applications. Since you own both the service and any clients that access it, you're later free to make protocol decisions. This holds true for any internal service within an organization.

The second application represents an API that is accessed by third parties over the internet. It exposes an HTTP server so that web browsers can easily communicate with it. This application is called the web-api.

Service Relationship

The web-api service is downstream of the recipe-api and, conversely, the recipe-api is upstream of the web-api. Figure 1-5 is a visualization of the relationship between these two services.

Figure 1-5. The relationship between web-api and recipe-api

Both of these applications can be referred to as servers because they are both actively listening for incoming network requests. However, when describing the specific relationship between the two APIs (arrow B in Figure 1-5), the web-api can be referred to as the client/consumer and the recipe-api as the server/producer. Chapter 2 focuses on this relationship. When referring to the relationship between web browser and web-api (arrow A in Figure 1-5), the browser is called the client/consumer, and web-api is then called the server/producer.

Now it's time to examine the source code of the two services. Since these two services will evolve throughout the book, now would be a good time to create some sample projects for them. Create a distributed-node/ directory to hold all of the code samples you'll create for this book. Most of the commands you'll run require that you're inside of this directory, unless otherwise noted. Within this directory, create a web-api/, a recipe-api/, and a shared/ directory. The first two directories will contain different service representations. The shared/ directory will contain shared files to make it easier to apply the examples in this book.4

You'll also need to install the required dependencies. Within both project directories, run the following command:

    $ npm init -y

This creates basic package.json files for you. Once that's done, run the appropriate npm install commands from the top comment of the code examples. Code samples use this convention throughout the book to convey which packages need to be installed, so you'll need to run the init and install commands on your own after this. Note that each project will start to contain superfluous dependencies since the code samples are reusing directories. In a real-world project, only necessary packages should be listed as dependencies.

Producer Service

Now that the setup is complete, it's time to view the source code. Example 1-6 is an internal Recipe API service, an upstream service that provides data. For this example it will simply provide static data. A real-world application might instead retrieve data from a database.

Example 1-6. recipe-api/producer-http-basic.js

#!/usr/bin/env node

// npm install fastify@3.2
const server = require('fastify')();
const HOST = process.env.HOST || '127.0.0.1';
const PORT = process.env.PORT || 4000;

console.log(`worker pid=${process.pid}`);

server.get('/recipes/:id', async (req, reply) => {
  console.log(`worker request pid=${process.pid}`);
  const id = Number(req.params.id);
  if (id !== 42) {
    reply.statusCode = 404;
    return { error: 'not_found' };
  }
  return {
    producer_pid: process.pid,
    recipe: {
      id, name: "Chicken Tikka Masala",
      steps: "Throw it in a pot...",
      ingredients: [
        { id: 1, name: "Chicken", quantity: "1 lb", },
        { id: 2, name: "Sauce", quantity: "2 cups", }
      ]
    }
  };
});

server.listen(PORT, HOST, () => {
  console.log(`Producer running at http://${HOST}:${PORT}`);
});

Tip

The first line in these files is known as a shebang. When a file begins with this line and is made executable (by running chmod +x filename.js), it can be executed by running ./filename.js.

Once this service is ready, you can work with it in two different terminal windows.5 Execute the following commands; the first starts the recipe-api service, and the second tests that it’s running and can return data:

$ node recipe-api/producer-http-basic.js # terminal 1
$ curl http://127.0.0.1:4000/recipes/42 # terminal 2

You should then see JSON output like the following (whitespace added for clarity):

{
  "producer_pid": 25765,
  "recipe": {
    "id": 42,
    "name": "Chicken Tikka Masala",
    "steps": "Throw it in a pot...",
    "ingredients": [
      { "id": 1, "name": "Chicken", "quantity": "1 lb" },
      { "id": 2, "name": "Sauce", "quantity": "2 cups" }
    ]
  }
}
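The branching inside the route handler of Example 1-6 can be looked at in isolation. The following is a minimal sketch, not part of the service itself: lookupRecipe is a hypothetical helper name, the recipe is trimmed, and the returned statusCode field only stands in for what the handler sets on the reply. It shows that any id other than 42, including one that isn't numeric, ends up as a not_found response.

```javascript
// Hypothetical helper mirroring the branching in the '/recipes/:id' handler.
// It is not part of Fastify or of the book's code.
function lookupRecipe(rawId) {
  const id = Number(rawId); // route parameters arrive as strings
  if (id !== 42) {
    return { statusCode: 404, body: { error: 'not_found' } };
  }
  return {
    statusCode: 200,
    body: {
      producer_pid: process.pid,
      recipe: { id, name: 'Chicken Tikka Masala' } // trimmed for brevity
    }
  };
}

console.log(lookupRecipe('42').statusCode);  // 200
console.log(lookupRecipe('43').statusCode);  // 404
console.log(lookupRecipe('abc').statusCode); // 404, since Number('abc') is NaN
```

With the real service running, requesting a path such as /recipes/43 should therefore produce the {"error":"not_found"} body rather than a recipe.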

Consumer Service

The second service, a public-facing Web API service, doesn’t contain as much data but is more complex since it’s going to make an outbound request. Copy the source code from Example 1-7 to the file located at web-api/consumer-http-basic.js.

Example 1-7. web-api/consumer-http-basic.js

#!/usr/bin/env node

// npm install fastify@3.2 node-fetch@2.6
const server = require('fastify')();
const fetch = require('node-fetch');
const HOST = process.env.HOST || '127.0.0.1';
const PORT = process.env.PORT || 3000;
const TARGET = process.env.TARGET || 'localhost:4000';

server.get('/', async () => {
  const req = await fetch(`http://${TARGET}/recipes/42`);
  const producer_data = await req.json();

  return {
    consumer_pid: process.pid,
    producer_data
  };
});

server.listen(PORT, HOST, () => {
  console.log(`Consumer running at http://${HOST}:${PORT}/`);
});

Make sure that the recipe-api service is still running. Then, once you’ve created the file and have added the code, execute the new service and generate a request using the following commands:

$ node web-api/consumer-http-basic.js # terminal 1
$ curl http://127.0.0.1:3000/ # terminal 2

The result of this operation is a superset of the JSON provided from the previous request:

{"consumer_pid":25670,"producer_data":{"producer_pid":25765,"recipe":{...}}}
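To make the "superset" relationship concrete, here is a small sketch of how the consumer's response wraps the producer's payload. buildConsumerResponse is a hypothetical helper name, and the payload is an illustrative stand-in with a trimmed recipe; the real handler gets its data from the outbound request to recipe-api.

```javascript
// Hypothetical helper: wraps whatever the producer returned,
// the same way the '/' handler in Example 1-7 builds its reply.
function buildConsumerResponse(producerData) {
  return { consumer_pid: process.pid, producer_data: producerData };
}

// Illustrative payload only; the PID is the sample value from the output above.
const producerData = { producer_pid: 25765, recipe: { id: 42 } };
const response = buildConsumerResponse(producerData);

console.log(Object.keys(response));                   // [ 'consumer_pid', 'producer_data' ]
console.log(response.producer_data === producerData); // true
```

The producer's data is passed through untouched under the producer_data key; the consumer only adds its own consumer_pid alongside it.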

The pid values in the responses are the numeric process IDs of each service. These PID values are used by operating systems to differentiate running processes. They’re included in the responses to make it obvious that the data came from two separate processes. These values are unique within a particular running operating system, meaning no two processes running at the same time on the same machine share a PID, though the same PID may well appear on separate machines, virtual or otherwise.
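One way to see this for yourself is to have a Node.js process start a second one and compare the two PIDs. The following is a rough sketch using the built-in child_process module; it assumes the node binary that runs the script (process.execPath) can also be launched as a child process.

```javascript
const { spawnSync } = require('child_process');

// Start a second Node.js process that prints its own PID and then exits.
const child = spawnSync(process.execPath, ['-e', 'console.log(process.pid)'], {
  encoding: 'utf8'
});

const parentPid = process.pid;
const childPid = Number(child.stdout.trim());

console.log(`parent pid=${parentPid}`);
console.log(`child pid=${childPid}`);

// The parent is still alive while the child runs, so the PIDs cannot match.
console.log(parentPid !== childPid); // true
```

The actual numbers will differ on every run and on every machine, just as the PIDs in the sample responses above won't match the ones you see locally.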

1 Even a multithreaded application is constrained by the limitations of a single machine.

2 “Userland” is a term borrowed from operating systems, meaning the space outside of the kernel where a user’s applications can run. In the case of Node.js programs, it refers to application code and npm packages; basically, everything not built into Node.js.

3 A “tick” refers to a complete pass through the event loop. Confusingly, setImmediate() takes a tick to run, whereas process.nextTick() is more immediate, so the two functions deserve a name swap.

4 In a real-world scenario, any shared files should be checked in via source control or loaded as an outside dependency via an npm package.

5 Many of the examples in this book require you to run multiple processes, with some acting as clients and some as servers. For this reason, you’ll often need to run processes in separate terminal windows. In general, if you run a command and it doesn’t immediately exit, it probably requires a dedicated terminal.
