Chapter 6. Beyond MapReduce

Applications and Organizations

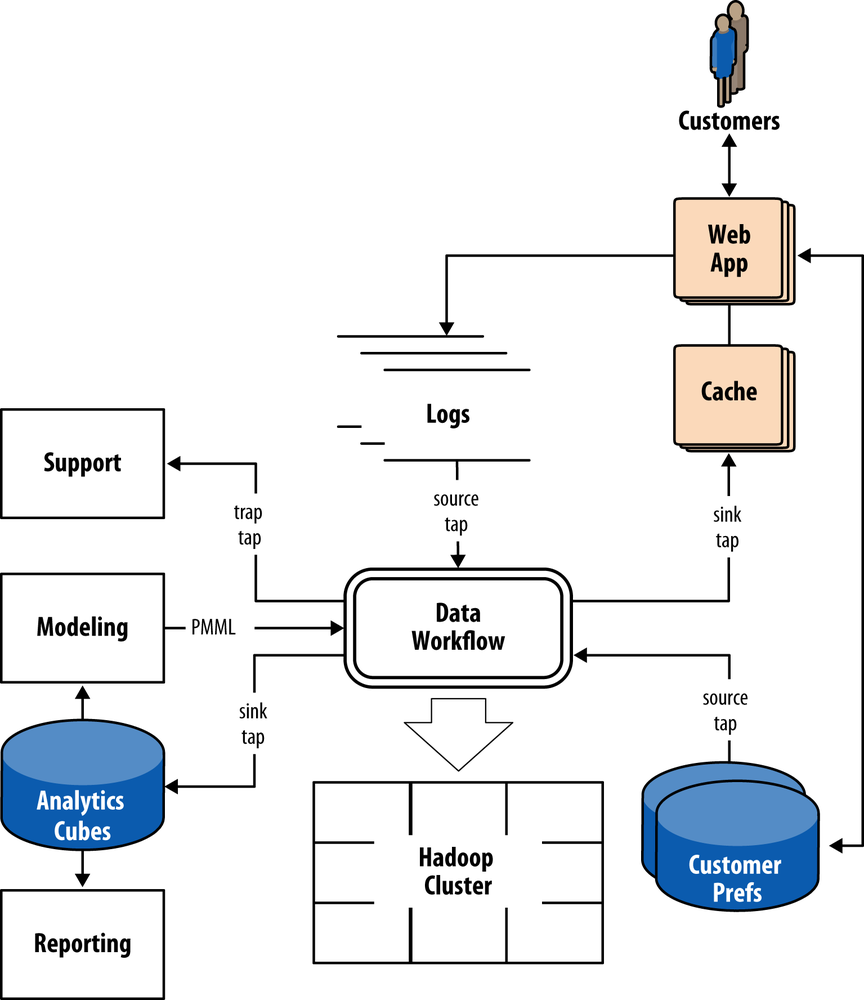

Overall, the notion of an Enterprise data workflow spans well beyond Hadoop, integrating many different kinds of frameworks and processes. Consider the architecture in Figure 6-1 as a strawman that shows where a typical Enterprise data workflow runs.

In the center, a workflow consumes unstructured data (most likely some kind of machine data, such as log files) plus other, more structured data from another framework, such as customer profiles. That workflow runs on an Apache Hadoop cluster, and possibly on other topologies, such as in-memory data grids (IMDGs).

Some of the results go directly to a frontend use case, such as getting pushed into Memcached, which backs a customer API. Line-of-business use cases drive most of the need for Big Data apps.

Some of the results also go to the back office. Enterprise organizations have almost always made substantial investments in data infrastructure for the back office, in the process used to integrate systems and coordinate different departments, and in the people trained in that process. Workflow results such as data cubes get pushed from the Hadoop cluster out to an analytics framework. In turn, those data cubes get consumed for reporting needs, data science work, customer support, and so on.
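To make the shape of that strawman concrete, here is a minimal sketch in plain Java. It is not Cascading code and does not touch Hadoop or Memcached. The class name `StrawmanWorkflow`, the tab-separated log format, the `region` field on customer profiles, and the `region|action` cube key are all assumptions invented for illustration. It shows only the data flow described above: unstructured log lines are joined with structured profile data, then the result fans out to a frontend key/value view and a back-office aggregate.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class StrawmanWorkflow {

    /** One log event enriched with profile data (hypothetical schema). */
    static final class Joined {
        final String customerId;
        final String region;
        final String action;

        Joined(String customerId, String region, String action) {
            this.customerId = customerId;
            this.region = region;
            this.action = action;
        }
    }

    /**
     * Joins raw log lines with customer profiles.
     * Assumes each valid line is "customerId<TAB>action"; malformed lines
     * and events for unknown customers are dropped (an inner join).
     */
    static List<Joined> join(List<String> rawLogLines, Map<String, String> regionByCustomer) {
        List<Joined> rows = new ArrayList<>();
        for (String line : rawLogLines) {
            String[] fields = line.split("\t");
            if (fields.length != 2) {
                continue; // skip lines that do not match the assumed format
            }
            String region = regionByCustomer.get(fields[0]);
            if (region == null) {
                continue; // no matching profile
            }
            rows.add(new Joined(fields[0], region, fields[1]));
        }
        return rows;
    }

    /** Frontend branch: latest action per customer, a stand-in for a key/value cache. */
    static Map<String, String> frontendView(List<Joined> rows) {
        Map<String, String> latest = new HashMap<>();
        for (Joined row : rows) {
            latest.put(row.customerId, row.action); // later events overwrite earlier ones
        }
        return latest;
    }

    /** Back-office branch: event counts per "region|action", a stand-in for a data cube. */
    static Map<String, Long> backOfficeCube(List<Joined> rows) {
        Map<String, Long> cube = new TreeMap<>();
        for (Joined row : rows) {
            cube.merge(row.region + "|" + row.action, 1L, Long::sum);
        }
        return cube;
    }

    public static void main(String[] args) {
        Map<String, String> profiles = new HashMap<>();
        profiles.put("c1", "EU");
        profiles.put("c2", "US");

        List<String> logs = Arrays.asList("c1\tview", "c2\tbuy", "not a log line", "c1\tbuy");

        List<Joined> rows = join(logs, profiles);
        System.out.println(frontendView(rows));
        System.out.println(backOfficeCube(rows));
    }
}
```

In a real deployment the join and both branches would be expressed in the workflow framework and run on the cluster, with the two maps replaced by actual sinks. The point of the sketch is only that one workflow feeds both the line-of-business side and the back office.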

Figure 6-1. Strawman workflow architecture

We can also view this ...

