Chapter 1. Designing Your Server Environment

Installation seems like such a benign thing, and traditionally, in the Mac OS world, it has been: sit down in front of the server, insert the install CD, format the drive, install, and repeat. Largely unchanged since the word CD replaced floppy, server installation is a process most administrators and technical staff are familiar with, and if nothing else it seems like a logical—if also a very boring—way to begin a book. Unix administrators, however, have long had a number of other options: possibly still boring, but in any case much more powerful and flexible from a systems management standpoint. With Mac OS X, and especially with Mac OS X Server, many of these options make their way to the Mac world, often with Apple’s characteristic ease of use.

A second and very important aspect of this process is planning. Technology vendors—particularly Apple—endeavor to remove complexity from the computing experience, in many cases very successfully. Integration into heterogeneous environments, though, is still a complex issue with a number of facets. Good planning can go a long way towards reducing the number of headaches and unexpected speed bumps that administrators experience. Unfortunately, planning is a little-documented and often-neglected part of deployment. This chapter examines that pre-installation process, starting with purchasing and policy decisions and traveling down several feasible installation and configuration routes.

Tip

Covering installation planning in the first chapter might seem a little awkward. You’ll be asked to take a lot of things into consideration, many of which you may not have any experience with yet, but most of which will be covered later in the book. With that in mind, this first chapter contains a lot of forward pointers to other material. Feel free to use it as a jumping-off point in order to explore concepts that are new to you.

Planning

The installation process actually begins well before a machine is booted for the first time. It can and should begin even before the machine arrives on site. Planning is an often-neglected aspect of server management, and probably the single most important factor in reducing support costs. This is especially true for a server product, since a server installation by its very nature affects more end users than a workstation install. While it’s important to keep from getting stuck in the quagmire of chronic bureaucratic deliberation and massive committee decision making, rushing into a server install is the last thing you want to do. Specific areas of planning focus should include the hardware platform, storage platform, volume architecture and management, and networking.

Hardware

Apple’s Xserve, a 1U (single standard rack-size) server product, has effectively ended most conversations about hardware choices. When the numbers are run, the Xserve, with its included unlimited client license version of Mac OS X Server, is almost always a better value than a Power Mac G5 with a separate Server license tacked on. The only real exceptions are very small deployments (particularly in education environments),[*] environments with existing hardware that can be put to work as a server, and cases where the purchase of new hardware can’t be justified.

That said, Mac OS X Server can run on virtually any hardware platform that Mac OS X can. In fact, much of the testing that went into this book was carried out on a set of iBooks I carry around while traveling. This isn’t to imply that Apple supports such a configuration; in fact, portables are specifically not supported by Mac OS X Server. Real deployments should always conform to Apple’s list of supported hardware, if for no reason other than that getting support for an unsupported hardware platform can be quite difficult.

Tip

According to Apple’s web site, Mac OS X Server requires an Xserve, Power Mac G5, G4, or G3, iMac, or eMac computer; a minimum of 128 MB RAM or at least 256 MB RAM for high-demand servers running multiple services; built-in USB; and 4 GB of available disk space. These specifications are probably underestimated for most server roles.

The choice of a supported platform, though, is really only the beginning of a good planning process. Hardware configuration is an entirely different matter. Mac OS X Server is a general-purpose server product with literally hundreds of features. This means that it’s very difficult to draw conclusions about hardware requirements without defining what the server will be used for. For instance, an iMac with 128 MB RAM could easily support thousands of static-content HTTP queries a day, while an Apple Filing Protocol (AFP) server supporting the same number of connections would need to be significantly more capable. The point is that different services—even at the same scale—can have very different requirements, and those requirements play an important role in your choice of hardware. Going into great depth regarding the performance bottlenecks of various services is well beyond the scope of this chapter, but is covered in some depth in chapters specific to each service. From this chapter you should take a framework for this planning; the actual details will come later.

One very important concept relates to system architecture: determining where bottlenecks actually exist in a system (be it a single machine or a very large network) in order to either work around those bottlenecks or more effectively optimize performance. A good example of this is the SCSI 320 standard (discussed later in this chapter). A SCSI 320 bus is theoretically capable of 320 megabytes per second (MBS) of data throughput. However, when installed in Macs prior to the G5, a SCSI 320 disk’s throughput would max out at a speed very similar to the much more affordable SCSI 160, mainly due to the constraints of the G4’s PCI bus. Luckily, Apple publishes fairly detailed hardware schematics in its hardware developer tech notes. See http://developer.apple.com/documentation/Hardware/Hardware.html for details.
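The bottleneck idea can be made concrete with a rough sketch: data has to cross every component in a storage path, so the slowest component sets the ceiling. The Python below is illustrative only. The function name is hypothetical, and the PCI figure (a 64-bit, 33 MHz bus, roughly 266 MBS in theory) is an assumption chosen for the example, not a measurement of any particular Mac.

```python
def effective_throughput(path):
    """Return (limiting component, throughput in MB/s) for a chain of components.

    `path` maps a component name to its theoretical maximum throughput in MB/s.
    Data must cross every component, so the slowest one sets the ceiling.
    """
    name = min(path, key=path.get)
    return name, path[name]


# Illustrative figures only (assumptions, not measurements).
PCI = "PCI bus (assumed 64-bit, 33 MHz)"
scsi_320_path = {"SCSI 320 bus": 320, PCI: 266}
scsi_160_path = {"SCSI 160 bus": 160, PCI: 266}

for label, path in (("SCSI 320", scsi_320_path), ("SCSI 160", scsi_160_path)):
    component, mbs = effective_throughput(path)
    print(f"{label}: limited to {mbs} MB/s by the {component}")
```

Under these assumed numbers, the SCSI 320 path is capped by the PCI bus rather than by the SCSI bus itself. Real-world ceilings are lower than theoretical ones, so the practical gap between the two SCSI generations can shrink further.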

Storage

Storage is one of the biggest variables in a server install, probably because the price of hard disk space has dropped enough for a wide variety of solutions to develop. The range of choices has only increased with Apple’s entry into the storage market. First, the Xserve was designed specifically to accommodate a large amount of internal storage. Later, Apple released the Xserve RAID, an IDE-based Fibre Channel storage array. More recently, the introduction of Xsan, Apple’s Storage Area Network (SAN) product, has added another piece to the puzzle.

Tip

Two trends should be noted. First, in both the Xserve and Xserve RAID, Apple has chosen the economy of various IDE flavors (specifically, ATA 100 and Serial ATA) over the well-established nature of SCSI connectivity. Time will tell whether or not this choice, of size and redundancy over performance and reliability, was a good one. In the meantime, it is yet another area in which Apple has taken a strong stand in defense of some technology in a new role. Second, on a related note, the choice of fibre and the adoption of SAN technology illustrate a common trend seen in Apple products: bringing enterprise technology into the workgroup and driving down the price of previously very expensive products. Xsan is not covered in this book.

Confusion regarding storage really arises on two different levels: choice of storage technology and storage configuration. We’ll look at both of these issues as they relate to the planning process, as well as examine some specific products and the architectural decisions that went into them. Our discussion focuses on the Xserve, since it is the most common Mac OS X Server platform. This focus doesn’t really narrow our conversation much, since the Xserve is mostly a Mac in a server enclosure. It does, though, give us some focus.

Storage technologies

At one time in the not-so-distant past, discussions of server storage technologies (outside of very massive high-end hardware) tended to be fairly simple. Server platforms have traditionally supported SCSI disks—that was that—and then you could move into discussions of volume management. Apple was the first vendor to change that, by using ATA disks in the Xserve in such a way that they became viable for server products. This feature has spurred considerable debate and (toward the overall good of the server industry) made our discussion more complex. This section examines various storage technologies and highlights their strengths, weaknesses, and relative cost. Discussion of generic storage technologies might seem to be beyond the scope of this book, given its stated goal of refraining from rehashing material that’s widely available elsewhere. However, the topic of storage technologies is particularly germane to Mac OS X Server system administration for a number of reasons:

As mentioned, Apple has really pioneered use of IDE/ATA drives in server products in both the Xserve and Xserve RAID. This design necessitates a number of discussions that previous system administration titles have had the luxury of glossing over.

The introduction of the Xserve RAID brings Fibre Channel to the Mac in a big way. Fibre is an exciting technology and, as something new to a large portion of this book’s audience, something worth talking about.

Most Unix or Windows administrators wouldn’t consider FireWire to be a viable storage platform—but due to its popularity in the Mac world, we must cover it.

The recent rise of Serial ATA is a huge development in storage technologies in general, not just on the Mac platform, and is therefore something that warrants coverage.

What it really boils down to is this: we can no longer say, “Real servers use SCSI.” It oversimplifies the issue, and it’s just not the case. If you’re already familiar with these technologies (ATA, SATA, Fibre Channel, SCSI, and RAID), you might want to skip the remainder of this section. Then again, maybe you want to revisit some of them and possibly gain a new perspective.

AT Attachment (ATA) and Integrated Drive Electronics (IDE)

ATA and IDE are generic terms for a set of technologies and standards that grew out of the Wintel architecture. Long considered a PC-class technology (rather than being applicable to the workstation or server markets), ATA and IDE have come a long way in recent years, enough that under the right circumstances, they can be considered appropriate for server deployments. Specific improvements include:

- Vastly improved throughput

Theoretical maximum throughput for IDE drives has risen steadily throughout the evolution of the protocol. Today’s IDE drives have a theoretical maximum throughput of 133 MBS. While far less than SCSI’s current 320 MBS maximum, this performance is sufficient for most applications, especially in the context of a well-engineered storage architecture, and especially given the far larger capacities and far lower prices of IDE drives.

Onboard cache also aids performance significantly. Current high-end IDE drives support up to 8 MB of onboard cache, vastly increasing read performance. Note, though, that theoretical throughput does not always equate with real-world performance, and more often than not, it’s IDE that benefits from this phenomenon (http://www.csl.cornell.edu/~bwhite/papers/ide_scsi.pdf).

- Advanced features

A long-held (and long-correct) assertion regarding IDE drives was that they lacked certain advanced features that SCSI supported; namely, tagged command queuing, scatter-gather I/O, and Direct Memory Access (DMA). The tremendous saturation of the low-end storage market, however, has forced vendors to innovate at a tremendous pace (in fact, all of these features are standard among recent high-end ATA-100 and -133 offerings). It would be presumptuous to assume that these features—in many cases first-generation capabilities—were as mature or stable as their SCSI counterparts; however, initial results have been very promising, and there’s no reason to assume that IDE won’t continue to evolve at a rapid pace.

- Quality and reliability

It is important to realize that there’s actually little difference among drive mechanisms inherent in the fact that the drive is SCSI or IDE. These terms refer to the protocol and hardware standards used to communicate with the host CPU, which implies only that the drive controller electronics will have certain characteristics. More often than not, actual quality differences are simply reflections of SCSI’s higher price and more selective target market. Vendors, using SCSI to differentiate their product lines, add larger caches and faster drive mechanisms to SCSI products. Faster mechanisms (SCSI drives regularly spin at 10,000–15,000 RPM, whereas even high-end mechanisms used in the IDE space spin at 7,200 RPM) place greater stress on mechanical systems in general and therefore require a higher standard of engineering. They are also primarily responsible for SCSI’s superior seek times. There is no technical reason why a drive vendor couldn’t drop an ATA-133 controller onto a 15,000 RPM mechanism, and indeed, as illustrated earlier, several vendors are moving to larger on-disk caches for IDE drives. This trend towards better-engineered, higher-quality ATA features has resulted in IDE drives that are more appropriate for server roles than ever before.

The SCSI protocol itself does maintain several architectural advantages, although they are not necessarily reflected by performance differences. SCSI is a general-purpose communications protocol, designed to be extensible to talk to a number of systems and devices. ATA is a disk communication protocol that has been extended in a sometimes ad hoc way to include other types of devices. SCSI also wins when it comes to physical interconnect (hardware) features. In any incarnation, but especially more recent ones, it supports much longer cable lengths and many more devices than ATA. In fact, ATA is still limited to two devices per bus, and an IDE device can sometimes monopolize the IDE bus in such a way that the other device on the bus has limited access to system resources. Thus, despite ATA’s growth, it should be deployed only very carefully in most servers. There is often a significant cost premium between the low end of ATA and the high end, where greater reliability and more advanced features are showing up. Drives destined for server deployment should, at a minimum, support tagged command queuing and scatter-gather I/O. Large caches (at least 8 MB) are also a must. Additionally, device management and conflict remain big issues in the IDE world, so in a server environment, each drive should have its own dedicated bus. Finally, due to decreased quality assurance and shorter warranties associated with IDE product lines, ATA drives should, whenever possible, be deployed redundantly.

Luckily, Apple has taken care of most of these requirements in its server products, showing that good overall system architecture can overcome many of IDE’s shortcomings. The bottom line is that IDE might go a long way towards meeting your storage needs, especially when managed appropriately. While SCSI might be architecturally superior and ostensibly a better performer, its performance and feature-to-price ratio is typically much lower than IDE’s, and IDE’s vastly lower cost makes for easy redundancy and affordable expansion.

Tip

As of this writing, current Xserves (which use the G5 processor) utilize serial ATA and support three (rather than four) drives. The Xserve RAID, however, still uses ATA 100.

Serial ATA

Serial ATA, or SATA (first included by Apple in the Power Mac G5), is a backwards-compatible evolution of the ATA protocol. The fundamental architectural difference between the two is that Serial ATA is, as the name implies, a serial rather than a parallel technology. This might at first seem counter-intuitive; after all, sending a bunch of data in parallel seems faster than sending the same data serially. However, serial technologies have proven their viability in recent years, notably in the form of USB, FireWire, and Fibre Channel. In general, serial technologies require fewer wires: instead of multiple parallel channels, they operate on the concept of having one channel each way, to and from the host to the device. This reduction in the number of wires in the cable means that the electronic noise associated with them is reduced, allowing for much longer wires and (theoretically) much faster operation.

SATA has all the benefits of ATA, along with several added bonuses:

Because it’s a Point-to-Point, rather than a multidevice bus, there are no device conflicts or master-slave issues.

SATA is also hot-pluggable (similar to FireWire), and requires a much thinner cable than the IDE ribbon.

It promises more widespread support for advanced drive features that are otherwise just making their way into the ATA space, like tagged command queuing and overlapping commands.

Right now SATA doesn’t really offer a significant performance increase over ATA, but as system bus performance improves, that difference will become more and more pronounced. While SATA isn’t revolutionary in any sense of the word, it is a very complete evolution of the ATA standard and it does go a long way towards legitimizing ATA in the server space. Nonetheless, the requirements placed on IDE should also be enforced before deploying SATA drives in a server role.

Fibre Channel

Fibre Channel is a serial storage protocol that is common in data center and enterprise environments. It is a high-performance, flexible technology that is especially notable due to Apple’s move towards it with the Xserve RAID and Xsan products. Fibre Channel was designed to address several of SCSI’s limitations; specifically, those related to architecture, cable length, and device support.

An easy way to understand this is to look at the name SCSI. It’s an acronym for Small Computer System Interface, and although it has turned out to be extremely extensible, its evolution from a system designed for small-scale computer systems is undeniable. SCSI is a single-master technology. Generally, a single CPU (by way of a SCSI adaptor) has sole control over multiple SCSI devices. This is a difficult architecture to maintain in today’s world of centralized Storage Area Networks (SANs). Similarly, even in its most capable iteration, SCSI is limited to a cable length of 25 meters, and cannot deal with more than 16 devices. Although this is a vast simplification, the primary advantages of Fibre Channel are as follows.

- Architecture

Fibre Channel supports three topologies: Point-to-Point, Arbitrated Loop, and Switched Fabric. The simplest is Point-to-Point, which connects a single host to a single device; for instance, an Xserve RAID to an Xserve. Point-to-point connections resemble SCSI or FireWire connections from a conceptual standpoint, and are usually not difficult to grasp. The next topology is a Fibre Channel Arbitrated Loop, or FC-AL. In this architecture, all nodes are connected in a loop. A device wishing to establish communications takes control of the loop, essentially establishing a Point-to-Point connection with its target using other hosts on the loop as repeaters. A Fabric, or switched topology, is a Switched Fibre Channel network (relying on one or more Fibre Channel switches). Like any switched network, any node is able to communicate directly with any other. Each topology has its own strengths and weaknesses. Apple’s products specifically tend towards Point-to-Point and Switched Fabric topologies, although any host should be able to participate in an Arbitrated Loop. It’s worth pointing out that a Switched Fabric topology, while the most flexible and efficient choice, is also the most costly, due to the high cost of Fibre Channel switches. Like any high-end technology, this is likely to change sooner than later, especially with Apple’s entry into the fibre market. Apple’s Xsan product requires a Switched Fabric topology.

- Length

A single channel (using optical interconnects) may be up to 10 kilometers (km) long. This feature poses a huge benefit to enterprise-wide SAN architectures, allowing for widely distributed systems. It does, though, prompt the question: “What is an optical interconnect?” Fibre Channel supports two kinds of media: copper and fiber optic. Copper media is used for shorter distances (up to 30 meters) and is relatively more affordable. Optical media is relatively more expensive, but can span up to 10 km.

- Devices

A single FC-AL loop supports up to 127 devices. A Switched Fabric may have as many as 15,663,104 devices.
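The Switched Fabric figure is not arbitrary, and a short sketch shows where it can come from. The Python below assumes the usual description of fabric addressing: a 24-bit address split into three 8-bit fields (domain, area, and port), with 239 of the 256 possible domain values usable and the rest reserved. The constant and function names are hypothetical, and the field breakdown is stated here as an assumption rather than something drawn from this chapter.

```python
# Assumed layout: 24-bit fabric address = 8-bit domain + 8-bit area + 8-bit port.
USABLE_DOMAINS = 239      # assumption: remaining domain values are reserved
AREAS_PER_DOMAIN = 256    # 2 ** 8
PORTS_PER_AREA = 256      # 2 ** 8


def fabric_address_count(domains=USABLE_DOMAINS):
    """Number of distinct device addresses available across `domains` domains."""
    return domains * AREAS_PER_DOMAIN * PORTS_PER_AREA


print(f"{fabric_address_count():,}")  # 15,663,104
```

If every domain value were usable, the same function would return the full 24-bit address space of 16,777,216.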

It’s important to note that Fibre Channel storage is still limited by the underlying drive technology. A single ATA-backed fibre array typically is able to supply approximately 200 MBS. The real strength of fibre is that a single 2 Gbps channel could theoretically support several arrays being accessed at their full capacity (possibly in a switched network with multiple hosts). This ability poses a huge benefit in even medium-sized enterprises by allowing IT architects to consolidate storage into a central array or group of arrays, allocating space on an as-needed basis to any number of hosts. Fibre is a high-bandwidth, high-performance standard, and there is no doubt that it makes a strong case for being the best storage choice in medium to large multiserver environments. The only real question is one of cost. Fibre, especially in a switched environment, is expensive, and the administrator will be forced to carefully weigh its benefits against its cost.

SCSI

Nobody ever got fired for deploying SCSI on a server (unless, of course, they exceeded their budget). SCSI is the stable, mature storage protocol against which all others are measured, and on which many server deployments are designed. Even referring to SCSI as a single standard, though, is a misstatement: SCSI is a continually evolving set of technologies loosely wrapped into three specifications: SCSI 1, SCSI 2, and SCSI 3. The biggest drawback to SCSI is its cost-per-density; storage vendors typically demand a 30% to 60% premium per megabyte for the privilege of running SCSI. It’s also been said that SCSI is unfriendly, although, like Unix in general, it’s actually just picky about who it makes friends with, so much so that its high priests are said to be versed in SCSI voodoo. Cross-talk issues due to its parallel nature, termination, and cable length make it somewhat complex to deploy in larger environments. Other drawbacks include a complete lack of HVD SCSI adaptors on the Mac platform, keeping SCSI’s very high-end hardware away from Mac OS X. When all is said and done, though, SCSI makes a lot of sense in a number of environments, especially high-performance, cost-insensitive ones. SCSI’s high cost-per-density should scare anyone else away.

SCSI still stays a half-step ahead of ATA in terms of performance, but only a half step. So, if you deploy SCSI, be sure to choose the fastest hardware that can be considered stable. Also be sure to deploy SCSI sparingly, applying your high-performance SCSI toward high-demand applications (such as QuickTime Streaming or AFP-based Home directories). Even in these environments, though, fibre solutions might be applicable.

FireWire

FireWire is the brand name for Apple’s implementation of the IEEE 1394 specification (http://developer.apple.com/firewire/ieee1394.html). Jointly developed by Apple and Sony, FireWire was designed specifically as a serial bus to support high-speed peripherals like digital video cameras and hard drives.

Each FireWire bus is capable of a theoretical throughput of 400 Mbps (50 MBS). FireWire 800, the standard’s second and newest version, doubles those capabilities. It is important once again to realize that those are performance numbers for the FireWire bus. When using FireWire as a storage mechanism, you are limited to the speed of the underlying drive (which is more often than not an ATA drive with a FireWire adaptor) and, to a lesser extent, the speed of the system bus. This means that testing, benchmarks, and public review are important components of the decision-making process when considering FireWire solutions.

Performance tends to vary widely. Even at its best, FireWire is not suited to heavy file serving duty. This tendency is due to the nature of FireWire, which was designed toward streaming data rather than random reads and writes (think digital video: both in terms of getting it off the DV camera and storing it on a filesystem). This is not to say that FireWire has no place in the system administrator’s toolkit; it’s simply that there are better solutions for file serving. Notably, FireWire is a particularly good backup medium. Tape backups, which have never been tremendously fast, are today having trouble keeping up with current, high-volume, high-density storage solutions. FireWire drives, which are high-volume and relatively cheap, can play a surprisingly important role in a good backup solution. Similarly, FireWire excels at being sort of an advanced sneaker-net, with the capacity to hold a complete bootable Mac OS X system in addition to several system utilities.

RAID

If you’re reading this, you probably already have a good idea of what RAID is, at least in its simplest terms. RAID is a method of combining several disks and making them look like one volume to the operating system. There are a number of reasons for pursuing this strategy; as the name implies, redundancy is a common one. Having data in more than one place is important, especially with the rise of (theoretically) less reliable IDE drives in server roles. Contrary to its naming, though, RAID doesn’t always imply redundancy. It’s just as common, actually, to use RAID to increase throughput and decrease latency for read and write operations; the theory being that two disks working at once will be able to write or read twice as much data as one. The goal in many cases, of course, is to maximize both factors (redundancy and performance) in a particular RAID installation. Toward those ends, RAID has several configurations, or levels, the most common of which are:

- RAID 0

Also known as striping, RAID 0 reads and writes data to all drives in the array simultaneously, so that each bit is written to only one drive. (The total size of a RAID 0 volume is equal to the combined sizes of its member disks.) This design is expected to increase filesystem performance, since instead of waiting for one drive to fulfill I/O requests, the requests can simply be sent to the next drive in the array.

While RAID 0 does not result in truly linear increases in performance as disks are added, it does have a substantial impact on the overall performance of read and write operations. The downside, of course, is that RAID 0 provides no redundancy. Since each bit (including the data that keeps track of the filesystem structure) is written to only one drive, the loss of any one drive means the loss of the entire RAID array. Effectively, RAID 0 introduces another point of failure with each new disk added into the array.

- RAID 1

Mirroring can be considered the opposite of RAID 0. In a RAID 1 array, each piece of data is written to at least two drives, meaning that all data is fully redundant. (The total size of a RAID 1 volume is generally equal to that of its smallest member disk.) If one drive fails, all data is still preserved; the RAID is simply marked for repair. When a new disk is added, the array can be resynchronized, so that the mirror is once again functioning properly. RAID 1, however, does nothing to aid performance. Depending on the implementation, RAID 1 can impose a slight penalty for write operations.

- RAID 0+1

This is a combination of RAID 0 and 1, wherein two striped arrays are set up to mirror each other, resulting in faster performance while maintaining redundancy. If a disk in either stripe is lost, that stripe must be rebuilt and the mirror is marked as degraded. However, since all data is mirrored to the second stripe, the OS will know how to rebuild the stripe, because it’s a component in the mirrored array. While this initially sounds like a very good idea, keep in mind that it’s not a very efficient use of disk space, and that a loss of a drive in each array, however unlikely that seems, would result in complete data loss.

Warning

Because disks employed in arrays are often identical and purchased in batches, they are somewhat vulnerable to a common trend in storage products—clustered failures. Disk reliability is typically measured in the average period between failures (MTBF, or mean time between failures). Averages, however, give no statistical indication of distribution.

- RAID 3

This is often referred to as n+1. It is similar to RAID 0, in that data is striped across n drives (where n is the number of drives in the stripe). It gains some amount of redundancy, though, from an additional disk (a parity disk), so that the total number of disks is n+1. The parity disk stores parity information calculated from the data on the other disks in the stripe, so that in the case of disk failure, the missing data can be regenerated. If the parity disk is lost, its data can be regenerated from the remaining stripe. RAID 3 is most effective when dealing with large files, since its high throughput is somewhat offset by the latency associated with each transaction.

- RAID 5

This is very similar to RAID 3, except that parity data, instead of being written to a single parity disk, is distributed among every disk in the array. RAID 5 is usually best deployed in environments where reads and writes are random and small. Due to the maintenance of parity data, write performance for both RAID 5 and RAID 3 tends to suffer. One method for overcoming this deficit is to create two RAID 5 or RAID 3 arrays, treating each like a member of a RAID 0 stripe. The result, known as RAID 50 or RAID 30, respectively, is a very high performance array.
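How parity allows a lost disk to be regenerated is easier to see with a small sketch. Parity in RAID 3 and RAID 5 is commonly computed with a bytewise XOR across the data blocks in a stripe; the Python below demonstrates that general technique on in-memory byte strings. It is a toy model under that assumption, not a description of any particular RAID controller, and the function and variable names are hypothetical.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR equal-length byte strings together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe spread across three hypothetical data disks.
data_blocks = [b"AAAA", b"BBBB", b"CCCC"]

# The parity block is the XOR of all data blocks in the stripe.
parity = xor_blocks(data_blocks)

# Simulate losing the second disk: XOR the survivors with the parity block.
survivors = [data_blocks[0], data_blocks[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data_blocks[1])  # True
```

The same arithmetic explains the write penalty mentioned above: every write to a data block also requires the matching parity block to be recalculated and rewritten.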

It is important to understand what RAID is and also what it isn’t. First and foremost, RAID is not backup. Backups preserve a history so that if you delete or otherwise lose a file, it can be recovered. RAID won’t do that for you. All RAID provides is redundancy and (in some cases) increased performance. Backup provides long-term protection against a variety of data-loss scenarios. RAID, in a redundant configuration, protects almost solely against hardware failure. Both are important components of a redundancy strategy, but it’s very important to not operate under the mistaken assumption that RAID frees you from thinking about backups.

Current Xserve models support three internal drive bay modules. Each module has its own dedicated SATA controller, resulting (as Apple is fond of pointing out) in nearly 300 MBS of total theoretical throughput. Although these results are theoretical, they do result in a very good deal, especially considering the total capacity of all three drive bays. The biggest drawback to these mammoth, yet fairly quick, storage capabilities is a lack of flexibility. Until recently, the Xserve itself, without the Xserve RAID, had no hardware RAID options for its built-in drive bays. Instead, Apple’s recommended solution is the software RAID capabilities of Mac OS X Server. While the recent (and long delayed) availability of a hardware RAID solution promises to change this fact of deployment, it is too new, as of this writing, to discuss definitively. Software RAID in Mac OS X comes with a choice: RAID 0 or RAID 1, performance or redundancy, but not both. Mac OS X (and Mac OS X Server) supports neither RAID 5 nor RAID 0+1. Apple has clearly put itself in the school of thought holding that software RAID 5, due to its processor-intensive nature, is not a viable solution. While this may be the case, it should be noted that several other operating systems, including most Linux distributions and FreeBSD, do support software RAID 5.

Tip

Since Apple’s kernel driver abstraction layer (IOKit) bears no relation to FreeBSD’s, FreeBSD’s software RAID capabilities don’t carry over to Mac OS X.

Be smart about your data. If it is very static, and very well backed up, or if it’s just not important in the long term, RAID 0 is a real option. In most circumstances this will not be the case. Perhaps your system files, which are easily replaced, do not need to be protected by redundancy, but in cases where data is sensitive or where a lot of downtime is unacceptable, important data (like user home directories or web server files) should be stored on an array that offers some kind of redundancy. When feasible, consider evaluating Apple’s hardware RAID card. What’s important here is understanding the capabilities of the storage platform and how those capabilities coincide with your data needs.

Apple’s next tier of storage support is the Xserve RAID, a 3U, 14-drive, 3.5 TB (3,520 GB) Fibre Channel storage array. It is a very capable product, supporting RAID 0, 1, 3, and 5 on each of two independent controllers (there are two banks of 7 drives each; each bank connects to a single controller). Both banks may also be striped together, resulting in RAID 0+1, 10, 30, or 50. A drive in each bank may also be configured as a hot spare, so that if some other drive in that bank fails, the RAID may be transparently repaired and brought back to a fully redundant state without any action on the part of the system administrator.
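When planning around these RAID levels, it helps to estimate how much of a bank's raw capacity is actually usable. The sketch below is a rough planning aid under stated assumptions: the per-drive size is simply the quoted 3,520 GB divided evenly across 14 drives, the function name is hypothetical, and only the striped and parity levels are covered.

```python
def usable_capacity_gb(level, drives, drive_gb, hot_spare=False):
    """Approximate usable capacity of one bank for a given RAID level."""
    members = drives - 1 if hot_spare else drives  # a hot spare holds no data
    if level == 0:
        return members * drive_gb          # pure striping, no redundancy
    if level in (3, 5):
        return (members - 1) * drive_gb    # one drive's worth of parity
    raise ValueError("RAID level not covered by this sketch")


DRIVE_GB = 3520 / 14  # assumption: capacity split evenly across 14 drives

# One seven-drive bank as RAID 5, with and without a hot spare.
for spare in (False, True):
    size = usable_capacity_gb(5, drives=7, drive_gb=DRIVE_GB, hot_spare=spare)
    print(f"RAID 5, hot spare={spare}: about {size:.0f} GB usable")
```

The point of running the numbers is that redundancy and hot spares are paid for in capacity, so they belong in the purchasing discussion rather than being discovered after deployment.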

A major component of the Xserve RAID’s architecture is its use of Fibre Channel—as touched on earlier, this feature adds a lot of depth and flexibility to Apple’s server product line, mostly by adding support for a Switched Fabric network. Beyond the strengths of fibre, though, the Xserve RAID gains a lot of value due to its tremendous density and flexibility. Frankly, it’s not like no one has ever thought of these things before—they’ve just never been common in the Mac space. What Apple has done has been to take a number of very desirable features and combine them into a product with a lot of finish. Successfully managing the Xserve RAID still requires forethought and knowledge of storage needs. It doesn’t, though, require a degree in computer science, and thus can be considered a step forward in the storage industry.

Warning

The one real drawback of the Xserve RAID is that its controllers, as of this writing, are truly independent, and are not redundant. If one controller fails, the second will not take over its arrays.

Obviously, Apple doesn’t have the RAID market (or especially the fibre market) cornered. There are other solutions from a variety of vendors. We’re focusing on the Xserve RAID as a specific option, however, for two reasons. First of all, it’s a very common storage product, having a lot of exposure in the Mac market. Pre-existing Mac-compatible Fibre products were largely niche items, generally very expensive and not widely deployed. Secondly, from a support perspective, it is a best practice to choose storage products either from or certified by your server vendor. This reduces the vendor blame-game when you need support.

Although much more affordable per density, the Xserve RAID, like a SCSI drive, is premium storage space next to the Xserve’s built-in drives, and should be deployed in conjunction with applications that place a premium on performance and data integrity. This is particularly relevant in a multiple-server environment. Server-attached storage (that is, storage attached directly to the server, rather than being available through a SAN) is fairly inflexible: the storage is where it is, and other servers aren’t going to have access to it unless it’s shared out over a network protocol of some sort—defeating the benefit of fibre’s throughput. A fibre SAN, however, allows for much more efficient allocation of storage resources, since specific portions of the array can be reserved or shared among specific hosts.

An important factor in storage planning is determining where in the filesystem various storage resources will be mounted. At a basic level, this discussion is much simpler on Mac OS X than it is on other Unix platforms, since non-root volumes are typically mounted in /Volumes at a mount point based on their name, or volume label, as shown in Example 1-1.

[home:~] admin% df

Filesystem 512-blocks Used Avail Capacity Mounted on

/dev/disk0s9 117219168 3578448 113128720 3% /

devfs 210 210 0 100% /dev

fdesc 2 2 0 100% /dev

<volfs> 1024 1024 0 100% /.vol

/dev/disk2s9 2147483647 2009392 2147483647 0% /Volumes/RAID

/dev/disk1s9 117219168 3322776 113896392 2% /Volumes/Backup HD

automount -fstab [417] 0 0 0 100% /Network/Servers

automount -static [417] 0 0 0 100% /automount

The df command shows you, among other things, where devices are currently mounted. The mount command has similar functionality:

[home:~] admin% mount

/dev/disk0s9 on / (local)

devfs on /dev (local)

fdesc on /dev (union)

<volfs> on /.vol (read-only)

/dev/disk2s9 on /Volumes/RAID (local, with quotas)

/dev/disk1s9 on /Volumes/Backup HD (local)

automount -fstab [417] on /Network/Servers (automounted)

automount -static [417] on /automount (automounted)

However, this approach is inflexible in more sophisticated environments. The first thing to stress is a concept that we’ve touched on several times already: reserve your high-bandwidth storage for high-bandwidth applications. There is no need for most of the OS or an archival share for older, static data to be stored on an external RAID. Performance and redundancy needs vary widely by application, and it would be quite a headache to reconfigure every such application to find its data files somewhere in /Volumes. This is especially the case with built-in services like Cyrus (the open source IMAP and POP server used by Mac OS X Server).
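If you want to inventory mount points across several servers, the mount output shown earlier is easier to process than df, because mount points such as /Volumes/Backup HD contain spaces. What follows is a minimal sketch, assuming the "device on mount point (options)" line format seen in the transcript; the pattern and function names are illustrative only.

```python
import re

# Assumed line shape: "<device> on <mount point> (<comma-separated options>)"
MOUNT_LINE = re.compile(
    r"^(?P<device>.+?) on (?P<mount_point>/.*?) \((?P<options>[^)]*)\)$"
)

SAMPLE = """\
/dev/disk0s9 on / (local)
/dev/disk2s9 on /Volumes/RAID (local, with quotas)
/dev/disk1s9 on /Volumes/Backup HD (local)
automount -fstab [417] on /Network/Servers (automounted)
"""


def parse_mount(output):
    """Turn mount output into a list of device/mount_point/options records."""
    entries = []
    for line in output.splitlines():
        match = MOUNT_LINE.match(line.strip())
        if match:
            entries.append({
                "device": match.group("device"),
                "mount_point": match.group("mount_point"),
                "options": [o.strip() for o in match.group("options").split(",")],
            })
    return entries


def data_volumes(entries):
    """Mount points that live under /Volumes, that is, non-root volumes."""
    return [e["mount_point"] for e in entries if e["mount_point"].startswith("/Volumes/")]


print(data_volumes(parse_mount(SAMPLE)))
```

On a live system the same function could be fed the text returned by running mount, for instance through the subprocess module.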

Traditionally, Unix OSes have devoted volumes (either partitions or disks) to specific portions of the OS. Note the output of the df command on a Solaris installation:

(sol:mb) 14:32:04 % df -k

Filesystem 1k-blocks Used Available Use% Mounted on

/dev/dsk/c0t0d0s0 492422 51257 391923 12% /

/dev/dsk/c0t0d0s6 1190430 797638 333271 71% /usr

/dev/dsk/c0t0d0s4 492422 231346 211834 52% /var

swap 1051928 24 1051904 0% /var/run

swap 1055280 3376 1051904 0% /tmp

/dev/dsk/c0t0d0s3 4837350 3754361 1034616 78% /mdx

/dev/dsk/c0t0d0s5 1190430 324556 806353 29% /usr/local

/vol/dev/dsk/c0t1d0/sl-5500

There are other, non-performance-related reasons for managing disks in this manner. For instance, it’s feasible under a number of circumstances for processes to write lots of data to the filesystem, due to misconfiguration, bugs, or normal, day-to-day operation.

Tip

Some good examples include systems where quotas are not in use or were misestimated (iLife applications commonly add a tremendous amount of data to a user’s home directory), large amounts of swap files or server logs—which, on a busy server (Apache and AppleFileServer are common culprits) may grow very large.

Since on an out-of-box install that filesystem is likely to also be the root filesystem, denial-of-service opportunities could be presented for local or remote users once the hard drive’s capacity is exceeded. Some administrators also maintain a hot spare boot volume that’s instantly available should the root partition experience filesystem corruption or some other malady. This approach is feasible, because the bits that actually boot the machine don’t change much and actually make up a small percentage of the disk space used in the filesystem. Obviously, though, it’s usually not feasible to maintain a hot-everything spare system, hence the need to keep the root filesystem separate from larger, more mutable data volumes. And finally, in very security sensitive circumstances (commonly high-volume public servers), it’s sometimes desirable to have a read-only boot partition. This technique makes several general types of malicious compromises more difficult to execute. Some of the filesystem still needs to have write capability enabled, though, which again underlines the need to configure system partitions in very flexible ways.

Tip

Note that not all of these scenarios are possible on Mac OS X. Our point is simply to illustrate several common disk management schemes.
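Whatever volume layout you settle on, a runaway log or home directory is easier to deal with when you hear about it before the disk is full. Here is a small sketch of that kind of check using Python's standard shutil.disk_usage call; the 90 percent threshold and the function names are assumptions chosen for illustration, not recommendations.

```python
import shutil


def percent_used(total, used):
    """Percentage of a volume's capacity currently in use."""
    return 100.0 * used / total if total else 0.0


def volumes_over_threshold(mount_points, threshold=90.0, usage=shutil.disk_usage):
    """Return (mount_point, percent) pairs at or above the threshold.

    The usage parameter defaults to shutil.disk_usage and exists only so
    the check can be exercised without touching real disks.
    """
    flagged = []
    for mount_point in mount_points:
        stats = usage(mount_point)
        pct = percent_used(stats.total, stats.used)
        if pct >= threshold:
            flagged.append((mount_point, pct))
    return flagged


if __name__ == "__main__":
    for mount_point, pct in volumes_over_threshold(["/"]):
        print(f"{mount_point} is {pct:.0f}% full")
```

Run periodically against the root volume and any data volumes, a check like this turns a potential denial of service into an ordinary maintenance task.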

What it really comes down to is that there are several valid reasons, established in the Unix community over the course of years, for keeping different portions of your filesystem on different volumes. We’ll look at the actual mechanics of configuring Mac OS X a little bit later in this chapter—for now, the point is to think about the deployment planning process, and to consider critically your volume and storage resource options in the context of your application and security needs.

One final point of discussion when considering volume planning and management is swap space. Swap space, a component of the kernel’s virtual memory system, is stored on its own partition in most Unix-like operating systems. In Mac OS X, however, swap space is managed in a series of files that are by default installed in /var/vm. A common first impulse among seasoned system administrators is to configure Mac OS X to store its swap files on their own partition. The simple answer to this is that there’s really no good way to do it, and even if there were, it wouldn’t affect performance much in most circumstances.

Most methods for moving swap space involve manually mounting volumes early in the /etc/rc script by adding their device files (for instance, /dev/disk5s0) to the /etc/fstab file. Unfortunately, as we’ll see later, device files are nondeterministic, meaning that disk2s2 might be disk3s2 the next time you boot your machine. Mac OS X uses a disk arbitration subsystem to mount disks other than the root volume, and it does not start up early enough to have those disks mounted before dynamic_pager (the process that manages swap files) starts.

More importantly, beyond being maintained on a filesystem that’s less fragmented, storing swap files on a dedicated partition doesn’t gain you much performance improvement. The server is still writing files to an HFS+ volume—not to a specialized swap filesystem. Those writes are typically competing with other disk operations, at a minimum, and usually with operations from other disks that happen to be on the same I/O channel.

Even if swap space is being written to its own disk on its own I/O channel, it doesn’t change the fact that hard disk—even the fastest hard disk—is thousands of times slower than RAM. There’s just no reason to spend a lot of time configuring swap in some unsupported configuration when current Macs are capable of using 4-16 GB of tremendously cheap RAM.

Network Infrastructure

By their nature, servers tax networks, both in the physical sense of bandwidth constraints and in the organizational sense of managing heterogeneous systems of hosts. Every operating system exacts different tolls on the administrator. In this section, I’ll highlight some issues that are worth avoiding.

Performance

A number of Mac OS X Server features are undeniably high-bandwidth. Unlike simpler, more traditional services, whose bandwidth tends to be quite manageable at a small scale, these applications are bandwidth-intensive at any volume. NetBoot and QuickTime Streaming Server come immediately to mind. Fortunately, these newer services have prompted the Mac community to think a little more about bandwidth and other networking issues prior to deployment. This is a huge change for the better, because these issues are traditionally neglected in any environment. Unfortunately, networking is made a more complex topic due to its political implications. Typically in all but the smallest environments, the organization managing the network (wires, routers, and access points) is not the same organization managing the servers, and one has little reason to placate the other. This book isn’t meant to be a fundamental examination of networking, so we’ll limit our discussion to three basic topics: performance, infrastructure, and services.

Tip

The phrase “political implications,” in one form or the other, is repeated throughout this book over and over and over again. Unfortunately, this behavior is typical of IT organizations. They are territorial, bureaucratic, and sometimes top-heavy. The right hand rarely knows what the left hand is doing. This is certainly a vast generalization, but it’s a common enough one that we feel comfortable illustrating the trend.

Network performance is one of those things that isn’t a problem until it is visible. Users rarely approach you with compliments on the server’s speed. As an administrator, more often than not, your primary goal is to ensure that your users never think to complain about it. Fortunately, good planning, and accompanying good budgeting, can help you stay under the radar.

Network performance seems fairly simple—after all, performance everywhere else tends to be straightforward. Big engines mean fast cars. Faster processors really seem to add zip to desktop computers. Professional athletes tend to run fast. Unfortunately, as we’ve mentioned earlier, in many cases, performance is much more about perception than quantitative analysis, and network performance doesn’t always mean buying a bigger switch or faster network interface. For our purposes here, we’ll examine two metrics to help you measure performance: latency and throughput. These are very common guidelines and they apply to more than just networking—so we will see them again later.

Latency is the delay in a network transmission. Latency is independent of bandwidth. The classic example is a semi truck full of DLT tapes (or hard drives or DVDs or any other high-capacity media). This is a very high-bandwidth link, but it could have a latency of hours. Lots of things cause latency, from data loss (TCP is an error-correcting protocol, so lost data is retransmitted transparently) to bad routing to outdated Ethernet protocols. What is important is recognizing the situation and recognizing latency-sensitive applications. You might be glad to wait all day for hundreds of terabytes to be delivered by a semi. Waiting for hundreds of very small graphics to load from a web page, or trying to watch streaming video, is a different story.

Throughput is the total amount of data (usually measured per second) that can flow between two given network nodes. Although high latency can contribute to reduced throughput, it is just one of the factors in a very complex ecosystem.
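A little arithmetic shows how the two metrics interact. The model below is deliberately simple and rests on assumptions: each transfer costs one fixed latency plus the payload size divided by the link's bandwidth, and the payload sizes, link speed, and delays are hypothetical figures chosen only to mirror the truck and web page examples.

```python
def transfer_time_s(payload_bytes, bandwidth_bytes_per_s, latency_s):
    """Time for one transfer: fixed delay plus time on the wire."""
    return latency_s + payload_bytes / bandwidth_bytes_per_s


def effective_throughput(payload_bytes, bandwidth_bytes_per_s, latency_s):
    """Bytes per second actually achieved once latency is included."""
    return payload_bytes / transfer_time_s(payload_bytes, bandwidth_bytes_per_s, latency_s)


# The truck: 200 TB arriving all at once after an 8-hour drive.
truck = effective_throughput(200e12, float("inf"), 8 * 3600)

# The web page: 100 images of 10 KB each, fetched one after another over
# a 100 Mbit/s link (12.5 MB/s) with a 50 ms delay per request.
page_seconds = 100 * transfer_time_s(10_000, 12_500_000, 0.05)

print(f"truck: {truck / 1e9:.1f} GB/s, but nothing arrives for 8 hours")
print(f"page: {page_seconds:.2f} s in total, almost all of it latency")
```

In this model the truck wins easily on throughput, yet the web page spends nearly all of its time waiting rather than transferring, which is why buying a faster link does not always fix a latency-sensitive application.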

Infrastructure

Certain infrastructure issues are particularly troublesome for the Mac administrator. This is especially a factor in environments that are mostly homogeneous, where the introduction of a new platform can negatively affect systems that were built on assumptions about other OSes. Since networks are rather ubiquitous (it’s hard to imagine participating in an enterprise without being exposed to them), they are a common forum for this kind of interaction. The overall pain and headache can be reduced, however, if these issues are accounted for in the planning process leading up to deployment.

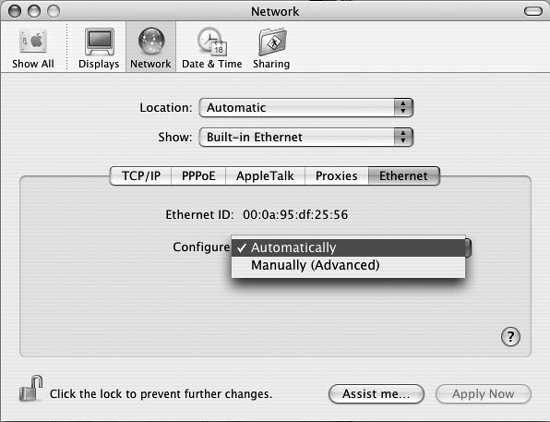

One of the most common manifestations of this sort of platform-specific infrastructure is the duplex setting on an organization’s network links. Ethernet interfaces for Apple hardware have always been of a fairly high quality, and have always been set to auto-negotiate. Unfortunately, in the commoditized market of x86 hardware, low-quality NICs are all too common. Windows administrators are commonly forced to turn off auto-negotiation on their network switches in order to compensate for these poorly implemented NICs. Matters are made worse by the fact that in Jaguar there’s no way to manually affect the duplex settings of an Ethernet interface through the included graphical management tools. Instead, the administrator must use the ifconfig command, which should otherwise be avoided when making destructive changes. If your network hardware is not set to auto-negotiate, you should be aware that you will most likely have to set the duplex manually on your Mac hardware. Since ifconfig’s modifications do not live through reboot, you’ll have to do this every time the machine restarts. Apple has a Knowledge Base article detailing this process. Fortunately, Panther presents a much deeper set of graphical configuration options, making speed and duplex options easily configurable in the Network pane of the System Preferences application (Figure 1-1).

Tip

In general, most networking settings in Mac OS X are managed by the System Configuration Framework. configd, the daemon that does the framework’s work, is not notified of changes made manually with ifconfig. Since configd also manages routing tables, DNS resolver settings, and several facets of directory service configuration, this setup can have unforeseen consequences. A good rule of thumb is that you should not use ifconfig to affect any settings that configd is responsible for unless you have a very thorough understanding of the implications. Since configd in Mac OS X 10.2 doesn’t have an interface for managing duplex settings, they are safe for management with ifconfig.
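Because a duplex setting applied with ifconfig has to be reapplied after every restart, it is worth capturing in a small script. The sketch below only assembles the command and, by default, prints it rather than running it. The interface name en0 and the 100baseTX full-duplex values are assumptions; substitute whatever matches your switch port, and treat Apple's Knowledge Base article as the authority on the exact syntax for your OS version.

```python
import subprocess


def duplex_command(interface="en0", media="100baseTX", duplex="full-duplex"):
    """Build the ifconfig invocation that pins an interface's media type."""
    return ["ifconfig", interface, "media", media, "mediaopt", duplex]


def set_duplex(dry_run=True, **settings):
    """Show the command, or run it when dry_run is False (requires root)."""
    command = duplex_command(**settings)
    if not dry_run:
        subprocess.run(command, check=True)
    return " ".join(command)


print(set_duplex())  # dry run: only shows what would be executed
```

Keeping the dry run as the default is a deliberate choice: forcing the wrong media type on a remote server is an easy way to lose your connection to it.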

Several other common Mac OS issues relate mostly to AppleTalk. Let’s get this straight up front: you should turn AppleTalk off. There’s no reason to rely on it. Prior to Mac OS X, this issue might have been debatable: the Network Browser application on Mac OS 8 and 9 ensured that non-AppleTalk service discovery (at that time mostly limited to the Service Location Protocol, or SLP) would be a horrible user experience, banishing most users to the comfortable Chooser to locate network resources. That was then, this is now. On a small network, AppleTalk is rather easy to support and fairly innocuous. Scaling up to large, subnetted infrastructures, though, can be extremely painful and difficult, especially now that network vendors, realizing that Apple is moving away from AppleTalk, have vastly reduced their support for it. It’s just not worth the pain, and it is totally contrary to our philosophy of reducing impact on existing infrastructures.

That said, it is common for AppleTalk to conflict with Spanning Tree, a common network protocol used to check for loops in Ethernet topologies. The issue is that Spanning Tree in essence forces a port on a switch or router to sit idle for 30 seconds while the hardware itself checks for loops. This is enough time for several legacy protocols including AppleTalk and NetBIOS to time out. TCP/IP tends to do fine, since most IP configuration mechanisms continue to send out DHCP broadcasts regularly if they fail to get a DHCP address on boot. In reality, there is very little reason to run Spanning Tree on ports that are connected to workstations, since it is almost impossible for a single workstation to cause a network loop.[*] Spanning Tree is quite popular among network engineers, though, so if it’s running at your site, it’s best to ensure that PortFast is also running on ports associated with workstations. PortFast bypasses several Spanning Tree tests but leaves the protocol active, providing the best of both worlds. Apple discusses the issue here: http://docs.info.apple.com/article.html?artnum=30922, and Cisco here: http://www.cisco.com/warp/public/473/12.html.

Services

We now introduce an interesting concept: services on which other services rely. This isn’t really anything new, since networks are built on a variety of protocols, some more fundamental than others. However, we’d like to take that a bit further and specifically state that a number of subsystems in Mac OS X rely on hostname resolution. All of your Mac OS X servers (and preferably your Mac OS X clients) should be able to perform both forward and reverse host resolution. Note that we didn’t say DNS: Mac OS X has a flexible resolver that’s capable of querying a variety of data sources. What is important is that when a process issues a gethostbyname() or gethostbyaddr() call, it needs to be answered. While DNS is the most standard and common method for ensuring this process, it is certainly not the only one. Several services (particularly directory service-related applications and Mac OS X’s graphical management utilities) depend on host resolution, or at least function more smoothly when it is available.
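A quick way to confirm that a host resolves in both directions is to make the same two calls yourself. The following sketch uses Python's socket.gethostbyname and socket.gethostbyaddr, which go through the system resolver rather than querying DNS directly; the function name, the report layout, and the hostname in the usage line are assumptions made for illustration.

```python
import socket


def check_resolution(hostname, forward=socket.gethostbyname,
                     reverse=socket.gethostbyaddr):
    """Look a name up, look the resulting address up, and compare the two.

    The forward and reverse parameters default to the real resolver calls
    and exist only so the check can be exercised with stand-ins.
    """
    report = {"hostname": hostname, "address": None,
              "reverse_names": [], "ok": False}
    try:
        report["address"] = forward(hostname)
        primary, aliases, _addresses = reverse(report["address"])
    except OSError as err:  # socket.gaierror and socket.herror both derive from OSError
        report["error"] = str(err)
        return report
    report["reverse_names"] = [primary, *aliases]
    wanted = hostname.rstrip(".").lower()
    report["ok"] = any(name.rstrip(".").lower() == wanted
                       for name in report["reverse_names"])
    return report


if __name__ == "__main__":
    print(check_resolution("server.example.com"))  # hypothetical hostname
```

A report whose address is filled in but whose ok flag is false usually means the forward record exists while the matching reverse record is missing or points at a different name.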

This reliance isn’t specific to Mac OS X. In particular, Microsoft Active Directory (AD) depends on DNS—especially forward DNS, dynamic DNS, and DNS service discovery. Since integrating Active Directory’s DNS requirements into existing infrastructures can be quite difficult, many organizations—usually on Microsoft’s recommendation—have opted to allow Active Directory’s DNS to be authoritative for the .local namespace. In other words, all AD hosts live in the .local search domain, and all of AD’s services (LDAP, Kerberos, and so on) are registered and queried there. There’s really nothing wrong with that: no one owns the .local namespace, a number of legitimate sources in the DNS world advocate its use, and this practice extends well beyond the Microsoft world.

Tip

These sources include D.J. Bernstein, author of the djbdns daemon (http://cr.yp.to/djbdns/dot-local.html) and the comp.protocols.tcp-ip.domains FAQ (http://www.intac.com/~cdp/cptd-faq/section5.html#split_DNS). Neither of these sources is at all authoritative for the top-level namespace, but both are well-respected in the world of network architects.

However, Apple has also chosen to use the .local namespace for its implementation of the multicast DNS portion of Rendezvous, Apple’s version of the proposed Zeroconf standard. What this means is that in Mac OS X, all queries for the .local namespace will be passed off to mDNSResponder, the Multicast DNS responder that ships as a part of Rendezvous, rather than being sent out to the DNS hierarchy. In enterprises that have standardized on the .local namespace for local DNS services, this approach is quite problematic, since their DNS servers will never be queried (mDNS is a peer-to-peer technology that allows for name service lookups without the use of a DNS server).

There is much discussion in several communities relating to Apple’s use of .local. To be fair, Apple has submitted an IETF draft proposing that the .local namespace be reserved for multicast DNS (mDNS).[*] In Rendezvous, they implemented that draft, primarily because waiting for the IETF to actually decide anything can be a very long process. Apple had originally planned on local.arpa, but for user experience and marketing reasons settled on .local. One has to wonder if the benefits (because most end users have absolutely no understanding of this issue, and because the .local TLD is mostly hidden in the graphical interface) were really worth the issues this choice has caused in enterprises already using the .local namespace. That discussion is mostly irrelevant, though, because a number of vendors aside from Apple (HP, TiVo, and several others) have implemented solutions based on Apple’s mDNS specification. The only relevant question now for those enterprises already utilizing the .local namespace is how to turn off mDNS resolution in Mac OS X.

If you want to disable mDNS entirely, you have two options. Most simply, you can remove the mDNS startup item: /System/Library/StartupItems/mDNSResponder. As a matter of consistency, you should also probably disable the Rendezvous service location—although all this accomplishes is to hide any Rendezvous-enabled services from applications that specifically use the Directory Service API for service location. In order to do this, uncheck the Rendezvous Plug-In in the Service pane of the Directory Access application.

Another option is to reconfigure .local queries to be forwarded to your local nameserver, rather than allowing them to be forwarded to multicast DNS. To do this, edit the /etc/resolver/local file, whose contents should look something like this out of the box:

nameserver 224.0.0.251
port 5353
timeout 1

Edit the nameserver and port entries to reflect your organization. For instance:

nameserver 192.168.2.5
port 53
timeout 1

After you restart the lookupd daemon (which, among other things, is responsible for host resolution), all queries for the .local namespace should be sent to the server you’ve specified.

In Panther, you have another option—the Mac OS X resolver libraries have been updated to reflect the feasibility that more than one DNS system might be responsible for the same namespace (we’ll ignore for a second the implications of that when one looks outside of the .local domain). Accordingly, you might have multiple entries in the /etc/resolver directory. Building on the previous case:

fury:~ mab9718$ ls -la /etc/resolver

total 32

drwxr-xr-x 6 root wheel 204 17 Aug 06:02 .

drwxr-xr-x 92 root wheel 3128 31 Dec 1969 ..

lrwxr-xr-x 1 root wheel 5 28 Aug 17:13 0.8.e.f.ip6.arpa -> local

lrwxr-xr-x 1 root wheel 5 28 Aug 17:13 0.8.e.f.ip6.int -> local

lrwxr-xr-x 1 root wheel 5 28 Aug 17:13 254.169.in-addr.arpa -> local

-rw-r--r-- 1 root wheel 43 17 Aug 06:02 local

There are four entries in the default install, three of which are symbolic links to /etc/resolver/local. These files are concerned with the default resolution of the .local domain, including the reverse resolution of the link-local IPv4 and IPv6 address spaces. In Panther, Mac OS X’s resolver is extensible, so specific domains might have different DNS configurations (confusingly enough, called clients). local is the only client installed by default, but others may be added. For instance, if I wanted to ensure all queries to the 4am-media.com domain were processed by my own nameserver, I could create a file in /etc/resolver called 4am-media. Its content would be similar to the modified /etc/resolver/local file mentioned earlier.

Generally, the system’s resolver determines which domain each configuration file is responsible for based on that file’s name; for instance, local is responsible for the .local namespace in the previous example. How, then, would you have more than one configuration per namespace? It’s actually as simple as adding a domain directive to the file in question. So if I wanted to specify a traditional name server in addition to using mDNS in the .local domain (in order to both make use of Rendezvous and meet my organization’s business goals), I could add a file called 4amlocal to /etc/resolver, as follows:

nameserver 192.168.2.5
port 53
timeout 1
domain local
search-order 1

In this case, for all queries regarding the .local namespace, my server will be queried initially, before mDNS. This approach, or something like it, is endorsed by Apple’s Knowledge Base article 107800. I’d also have to adjust /etc/resolver/local by adding a search order to it:

nameserver 224.0.0.251
port 5353
timeout 1
search-order 2

Changing the search order for Apple’s mDNS resolver ensures that your organization’s .local DNS namespace will be queried before Rendezvous.

Finally, a more granular (and my preferred) approach is to specify a particular domain in your .local override, so that rather than query your organization’s DNS for the entire .local TLD (top-level domain), you query only for your particular local domain (for instance, 4am.local). This approach, although effective in most circumstances, has not always been compatible with Apple’s Active Directory Plug-In, so as always, testing should be undertaken carefully. Example 1-2 shows what my .local resolver configuration looks like:

nameserver 192.168.1.1
port 53
timeout 1
domain 4am.local
search-order 1

Since it is specifically for the 4am.local domain, it does not affect Rendezvous queries. Consequently, I do not have to modify the search-order setting in the /etc/resolver/local file.
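To reason about which configuration answers a given query, it can help to model the selection logic. The sketch below is a simplified model and not Apple's resolver code: it assumes that a client applies to any name inside its domain, that the most specific matching domain is consulted first, and that lower search-order values come first among clients sharing a domain. The file name 4amlocal for the override and the default ordering value are also assumptions.

```python
DEFAULT_ORDER = 100  # assumption: clients without an explicit order sort last


def covers(domain, query):
    """True when the query name is the domain itself or lies inside it."""
    query = query.rstrip(".").lower()
    domain = domain.lower()
    return query == domain or query.endswith("." + domain)


def clients_for(query, clients):
    """Matching clients, most specific domain first, then by search order."""
    matching = [c for c in clients if covers(c["domain"], query)]
    return sorted(
        matching,
        key=lambda c: (-len(c["domain"].split(".")),
                       c.get("search_order", DEFAULT_ORDER)),
    )


CLIENTS = [
    {"file": "local", "domain": "local",
     "nameserver": "224.0.0.251", "port": 5353},
    {"file": "4amlocal", "domain": "4am.local",
     "nameserver": "192.168.1.1", "port": 53, "search_order": 1},
]

for name in ("printer.local", "www.4am.local"):
    print(name, "->", [c["file"] for c in clients_for(name, CLIENTS)])
```

Under these assumptions a Rendezvous name such as printer.local only ever reaches the multicast client, which matches the behavior described above for the 4am.local override.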

Whatever your approach, the point is to fit it most efficiently within your organization’s existing infrastructures. Asking your DNS administrator to change company-wide naming policy simply to use Mac OS X effectively is not a productive way of driving adoption of Apple technologies.

[*] In the education market, Mac OS X is sold for approximately half of its retail price.

[*] An exception is an environment where users might plug their own hubs or switches into multiple switches. This is an issue of policy, rather than a technical one.

[*] The draft is available at http://www.ietf.org/internet-drafts/draft-cheshire-dnsext-multicastdns-02.txt. As the draft progresses, though, the name will change by iteration of the 02.txt. For instance, www.ietf.org/internet-drafts/draft-cheshire-dnsext-multicastdns-01.txt is no longer available at the above URL.
