Previously, we described basic switch operation and features. Ethernet switches are building blocks of modern networking, and switches are used to build every kind of network imaginable. To meet these needs, vendors have created a wide range of Ethernet switch types and switch features. In this chapter we cover several special-purpose switches, many developed for specific network types. The Ethernet switch market is a big place, and here we can only provide an overview of some of the different kinds of switches that are available.

We begin with a look at various kinds of switches sold for specific markets. There are switches designed for enterprise and campus networks, data center networks, Internet Service Provider networks, industrial networks, and more. Within each category there are also multiple switch models.

Early bridges and routers were separate devices, each with a specific role to play in building networks. Ethernet switches typically provided high-performance bridging across a large number of ports, and routers specialized in providing high-level protocol forwarding (routing) across a smaller set of ports. As networks became more complex and switches evolved, the multilayer switch combined the roles of bridging and routing in a single device. This made it possible to purchase a single switch that could perform both kinds of packet forwarding: bridging at Layer 2 and routing at Layer 3.

As you might expect, a multilayer switch is more complex to configure. However, by providing both bridging and routing in the same device, a multilayer switch makes it easier to build large and complex networks that combine the advantages of both forms of packet forwarding. This makes it possible for vendors to provide high-performance operation of both bridging and routing across a large set of Ethernet ports at a competitive price point.

Large, multilayer switches are often used in the core of a network system to provide both Layer 2 switching and Layer 3 routing, depending on requirements. As networks grow, Layer 3 routing can provide isolation between Ethernet systems and help enable a network plan based on a hierarchical design. Multilayer switches are also used as distribution switches in a building, providing an aggregation point for access switches. Layer 3 routing in an aggregation switch can provide separate Layer 3 networks per VLAN in a building, improving isolation, resiliency, and performance.
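To make the idea of a separate Layer 3 network per VLAN concrete, here is a minimal sketch of an addressing plan built with Python's standard `ipaddress` module. The address block, the VLAN IDs, and the convention of using the first host address as the routed interface are all assumptions chosen for illustration; they are not taken from any particular switch or site.

```python
import ipaddress

# Hypothetical building address block and VLAN IDs (assumptions, not from the text).
building_block = ipaddress.ip_network("10.20.0.0/16")
vlan_ids = [110, 120, 130]

# Carve one /24 per VLAN. In this sketch the multilayer switch is assumed to
# own the first host address in each subnet as that VLAN's routed interface.
subnets = building_block.subnets(new_prefix=24)
plan = {}
for vlan_id, subnet in zip(vlan_ids, subnets):
    gateway = next(subnet.hosts())
    plan[vlan_id] = (subnet, gateway)

for vlan_id, (subnet, gateway) in plan.items():
    print(f"VLAN {vlan_id}: subnet {subnet}, gateway {gateway}")
```

Because each VLAN maps to its own subnet, traffic between VLANs has to be routed by the aggregation switch rather than bridged, which is where the isolation described above comes from.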

In a large enterprise network, the bulk of the network connections are on the edge, where access switches are used to connect to end nodes such as desktop computers. As a result, there is a large marketplace for access switches, with multiple vendors and a wide variety of features and price points.

When it comes to building large networks with dozens, or even hundreds or thousands of access switches, a major consideration is the set of features that can provide ease of monitoring and management. Other considerations may include whether the access switches support high speed uplinks, whether they include features like multicast packet management, and whether their internal switching speeds are capable of handling all ports running at maximum packet rates.
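One way to check whether a switch can handle all ports running at maximum packet rates is to work out what that rate is. The sketch below assumes minimum-size 64-byte frames plus the standard 8 bytes of preamble and 12-byte interframe gap, and uses a hypothetical port mix of forty-eight 1 Gb/s ports and four 10 Gb/s uplinks. The function names are ours, and the result is a rough sizing figure to compare against a vendor's data sheet, not a statement about any specific product.

```python
# Wire-level overhead per Ethernet frame: 8 bytes of preamble/SFD plus a
# 12-byte interframe gap. The smallest legal frame is 64 bytes.
PREAMBLE_BYTES = 8
INTERFRAME_GAP_BYTES = 12
MIN_FRAME_BYTES = 64


def max_frames_per_second(link_bps: int, frame_bytes: int = MIN_FRAME_BYTES) -> float:
    """Maximum frame rate on one link, in one direction."""
    bits_on_wire = (frame_bytes + PREAMBLE_BYTES + INTERFRAME_GAP_BYTES) * 8
    return link_bps / bits_on_wire


def required_switch_capacity(port_speeds_bps: list[int]) -> tuple[int, float]:
    """Return (bits/s, frames/s) arriving if every port receives at line rate."""
    total_bps = sum(port_speeds_bps)
    total_fps = sum(max_frames_per_second(speed) for speed in port_speeds_bps)
    return total_bps, total_fps


# Hypothetical access switch: 48 x 1 Gb/s access ports plus 4 x 10 Gb/s uplinks.
ports = [1_000_000_000] * 48 + [10_000_000_000] * 4
bps, fps = required_switch_capacity(ports)
print(f"Aggregate ingress: {bps / 1e9:.0f} Gb/s, {fps / 1e6:.1f} Mpps")
```

A single 1 Gb/s port tops out at roughly 1.49 million minimum-size frames per second, so the per-port figure scales quickly as port counts and speeds rise.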

Each vendor has a story to tell about their access switch capabilities and how they can help make it easier for you to build and manage a network. While it’s a significant task to compare and contrast the various access switches, you can learn a lot in the process and it will help you make an informed purchasing decision.

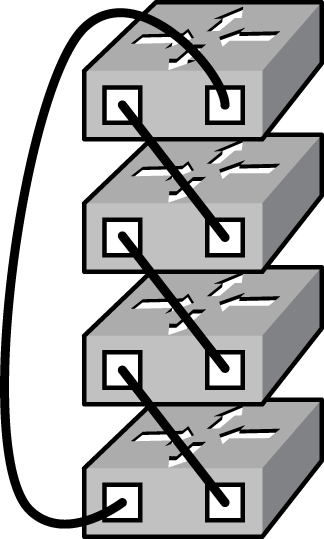

Some switches are designed to allow “stacking,” or combining a set of switches to operate as a single switch. Stacking makes it possible to combine multiple switches that support 24 or 48 ports each, for example, and manage the stacked set of switches and switch ports as a single, logical switch that supports the combined set of ports. Stacking also provides benefits when replacing a failed switch that is a member of the stack, since the replacement switch can be automatically re-configured with the software and configuration from the failed switch, making it possible to quickly and easily restore network service.

Stacking switches are linked together with special cables to create the physical and logical stack, as shown in Figure 4-1. These cables are typically kept as short as possible, and the switches are placed directly on top of each other, forming a compact stack of equipment that functions as a single switch. There is no IEEE standard for stacking; each vendor offering this feature has their own stacking cables and connectors.

The stacking cables create a backplane between the switches, but the packet switching speeds supported between switches in a stacking system vary, depending on the vendor. Some stacking switches are designed to use 10 Gigabit Ethernet cables connected between their uplink ports to create a stack. In this case, the stacking cable is a standard Ethernet patch cable, but the stacking software running on the switches is specific to the vendor. As a result, you cannot mix and match stacking switches from different vendors.

Industrial Ethernet switches are switches that have been “hardened” to make it possible for them to function in the harsh environments found in factories and other industrial settings. Industrial Ethernet switches are used to support industrial automation and control systems, as well as network connections to instrumentation for the control and monitoring of major infrastructures, such as the electrical power grid.

Industrial Ethernet switches may also feature special port connections that provide a seal around the Ethernet cable to keep moisture and dirt out of the switch ports. The switch itself may be sealed and fanless, to avoid exposing the internal electronics to a harsh environment. You may also find ratings for the G-forces and vibrations that industrial switches can handle. To make it possible to meet the stringent environmental specifications, industrial switches are often built as smaller units, with a limited set of ports.

The development of wireless Ethernet switches is a recent innovation. Wireless Ethernet is a marketing term for the IEEE 802.11 wireless LAN (WLAN) standard, which provides wireless data services, also known as “Wi-Fi.” Wireless Ethernet vendors provide a wide range of approaches to building and running a WLAN, with one widely adopted approach consisting of access points (APs) connected to WLAN controllers. A wireless LAN controller provides support for the APs, helping to maintain the operation of the wireless system through such functions as automatic wireless channel assignment and radio power management. Typically, the controller is located in the core of a network, and wireless AP data flows are connected to the controller either directly over a VLAN, or via packet tunneling over a Layer 3 network system.

Wireless vendors have extended the controller approach by combining the controller software with a standard Ethernet switch. Including wireless controller functions and wired Ethernet ports in a single device reduces cost and makes it possible for the wireless system to grow larger without overloading a central controller. Wireless switches typically include ports equipped with Power over Ethernet, powering the APs and managing their data and operation in a single device.

A typical wireless user in an enterprise or corporate network is required to authenticate themselves before being allowed to access the wireless system, which improves security and makes it possible to manage the system down to the individual user. Wireless Ethernet switches can also provide client authentication services on wired ports, extending the wireless user management capabilities to the wired Ethernet system.

In recent years, Ethernet has made inroads in the Internet service provider marketplace, providing switches that are used by carriers to build wide area networks that span entire continents and the globe. ISPs have specialized requirements based on their need to provide multiple kinds of networking. ISPs provide high-speed and high-performance links over long distances, but they may also provide metro-area links between businesses in a given city, as well as links to individual users to provide Internet services at homes.

None of the ISP and carrier networks are particularly “local” in the sense of the original “local area network” design for Ethernet. The development of full-duplex Ethernet links freed Ethernet from the timing constraints of the old half-duplex media system, and made it possible to use Ethernet technology to make network connections over much longer distances than envisioned in the original LAN standard. With the local area network distance limitations eliminated, carriers and ISPs were then able to exploit the high volume Ethernet market to help lower their costs by providing Ethernet links for long distances, metro areas, and home networks.

While the IEEE standardizes Ethernet technology and basic switch functions, there are sets of switch features that are used in the carrier and ISP marketplaces to meet the needs of those specific markets. To meet their requirements, businesses have formed forums and alliances to help identify the switch features that are most important to them, and to publish specifications that include specialized methods of configuring switches, which improve interoperability between equipment from different vendors.

One example of this is the Metro Ethernet Forum.[17] In January 2013, the MEF announced the certifications for equipment that can be configured to meet “Carrier Ethernet 2.0” specifications. The set of CE 2.0 specifications includes Ethernet switch features that provide a common platform for delivering services of use to carriers and ISPs. By carefully specifying these services, the MEF seeks to create a standard set of services and a network design that is distinguished from typical enterprise Ethernet systems by five “attributes.” The attributes include services of interest to carriers and ISPs, along with specifications to help achieve scalability, reliability, quality of service, and service management.

The Metro Ethernet Forum is an example of a business alliance developing a comprehensive set of specifications that help define Ethernet switch operation for metro, carrier, and ISP networks. These specifications rely on a set of switch features, some of them quite complex, that are needed to achieve their network design goals, but which typically don’t apply to an enterprise or campus network design.

Data center networks have a set of requirements that reflect the importance of data centers as sites that host hundreds or thousands of servers providing Internet and application services to clients. Corporate data centers hold critically important servers whose failure could affect the operations of major portions of the company, as well as the company’s customers. Because of the intense network performance requirements caused by placing many critically important servers in the same room, data center networks use some of the most advanced Ethernet switches and switch features available.

Some data center servers provide database access or storage access used by other high-performance servers in the data center, requiring 10 Gb/s ports to avoid bottlenecks. Major servers providing public services, such as access to World Wide Web pages, may also need 10 Gb/s interfaces, depending on how many clients they must serve simultaneously.

Data center servers also host virtual machines (VMs) in which a single server will run software that functions as multiple, virtual servers. There can be many VMs per server, and hundreds or thousands of virtual machines per data center. As each VM is a separate operating system running separate services, the large number of VMs also increases the network traffic generated by each physical server.

Data center switches typically feature non-blocking internal packet switching fabrics, to avoid any internal switch bottlenecks. These switches also feature high speed ports, since modern servers are equipped with Gigabit Ethernet interfaces and many of them come with interfaces that can run at both 1 and 10 Gb/s.

Data centers consist of equipment cabinets or racks aligned in rows, with servers mounted in dense stacks, using mounting hardware that screws into flanges. When you open the door to one of these cabinets, you are faced with a tightly-packed stack of servers, one on top of the other, filling the cabinet space. One method for providing switch ports in a cabinet filled with servers is to locate a “top of rack,” or TOR, switch at the top of the cabinet, and to connect the servers to ports on that switch.

The TOR switch connects, in turn, to larger and more powerful switches located either in the middle of a row, or in the end cabinet of a row. The row switches, in their turn, are connected to core switches located in a core row in the data center. This provides a “core, aggregation, edge” design. Each of the three types of switches has a different set of capabilities that reflect the role of the switch.

The TOR switch is designed to be as low cost as possible, as befits an edge switch that connects to all stations. However, data center networks require high performance, so the TOR switch must be able to handle the throughput. The row switches must also be high-performance, to allow them to aggregate the uplink connections from the TOR switches. Finally, the core switches must be very high-performance and provide enough high-speed uplink ports to connect to all row switches.

Oversubscription is common in engineering, and describes a system which is provisioned to meet the typical demand on the system rather than the absolute maximum demand. This reduces expense and avoids purchasing resources that would rarely, or never, be used. In network designs, oversubscription makes it possible to avoid purchasing excess switch and port performance.

When you’re dealing with the hundreds and thousands of high-performance ports that may be present in a modern data center, it can be quite difficult to provide enough bandwidth for the entire network to be “non-blocking,” meaning that all ports can simultaneously operate at their maximum performance. Rather than provide a non-blocking system, a network that is oversubscribed can serve all users economically, without a significant impact on their performance.

As an example, a non-blocking network design for one hundred 10 Gb/s ports located on a single row of a data center would have to provide 1 terabit per second (Tb/s) of bandwidth to the core switches. If all 8 rows of a data center each needed to support one hundred 10 Gb/s ports, that would require 8 Tb/s of uplink capacity to the core, and an equivalent amount of switching capability in the core switches. Providing that kind of performance is very expensive.
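The arithmetic behind this example is simple enough to check in a few lines. The sketch below uses the port counts and speeds from the example; the function name is ours, introduced only for illustration.

```python
GBPS = 10**9
TBPS = 10**12


def nonblocking_uplink_bps(ports_per_row: int, port_speed_bps: int, rows: int = 1) -> int:
    """Uplink capacity needed so every server port can run at full rate at once."""
    return ports_per_row * port_speed_bps * rows


one_row = nonblocking_uplink_bps(ports_per_row=100, port_speed_bps=10 * GBPS)
all_rows = nonblocking_uplink_bps(ports_per_row=100, port_speed_bps=10 * GBPS, rows=8)

print(f"One row:    {one_row / TBPS:g} Tb/s")   # 1 Tb/s
print(f"Eight rows: {all_rows / TBPS:g} Tb/s")  # 8 Tb/s
```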

But, even if you had the money and space to provide that many high-performance switches and ports, the large investment in network performance would be wasted, since the bandwidth would be mostly unused. Although a given server or set of servers may be high-performance, in the vast majority of data centers not all servers are running at maximum performance all of the time. In most network systems, the Ethernet links tend to run at low average bit rates, interspersed with higher rate bursts of traffic. Thus, you do not need to provide 100 percent simultaneous throughput for all ports in the majority of network designs, including most data centers. Instead, typical data center designs can include a significant level of oversubscription without affecting performance in any significant way.
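Oversubscription is usually expressed as a ratio of server-facing capacity to uplink capacity. The following sketch computes that ratio for the row of one hundred 10 Gb/s ports described earlier. The uplink configuration of ten 40 Gb/s links is a hypothetical choice made for illustration, not a recommendation, and the function name is ours.

```python
def oversubscription_ratio(downlink_bps: float, uplink_bps: float) -> float:
    """Ratio of server-facing capacity to uplink capacity (1.0 means non-blocking)."""
    if uplink_bps <= 0:
        raise ValueError("uplink capacity must be positive")
    return downlink_bps / uplink_bps


GBPS = 10**9

# From the example in the text: one hundred 10 Gb/s server ports in a row.
row_downlink = 100 * 10 * GBPS

# Hypothetical uplink choice, not from the text: ten 40 Gb/s links to the core.
row_uplink = 10 * 40 * GBPS

ratio = oversubscription_ratio(row_downlink, row_uplink)
print(f"Oversubscription: {ratio:g}:1")  # 2.5:1

# Average per-server load at which the uplinks would just become saturated.
saturation_load = 1 / ratio
print(f"Uplinks saturate when servers average {saturation_load:.0%} of line rate")  # 40%
```

Under these assumptions the row is oversubscribed 2.5:1, and the uplinks only become a bottleneck if the servers average more than 40 percent of line rate at the same time. Measured traffic on your own links is the best guide to how much oversubscription a given design can tolerate.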

Data centers continue to grow, and server connection speeds continue to increase, placing more pressure on data center networks to handle large amounts of traffic without excessive costs. To meet these needs, vendors are developing new switch designs, generally called “data center switch fabrics.” These fabrics combine switches in ways that increase performance and reduce oversubscription.

Each of the major vendors has a different approach to the problem, and there is no one definition for a data center fabric. There are both vendor proprietary approaches and standards-based approaches that are called “Ethernet fabrics,” and it is up to the marketplace to decide which approach or set of approaches will be widely adopted. Data center networks are evolving rapidly, and network designers must work especially hard to understand the options and their capabilities and costs.

A major goal of data centers is high availability, since any failure of access can affect large numbers of people. To achieve that goal, data centers implement resilient network designs based on the use of multiple switches supporting multiple connections to servers. As with other areas of network design, resilient approaches are evolving through the efforts of multiple vendors and the development of new standards.

One way to achieve resiliency for server connections in a data center is to provide two switches in a given row (call them Switch A and Switch B) and connect the server to both switches. To exploit this resiliency, some vendors provide “multi-chassis link aggregation” (MLAG), in which software running on both switches makes the two switches appear to the server as a single switch.

The server thinks that it is connected to two Ethernet links on a single switch using standard 802.1AX link aggregation (LAG) protocols. But in reality, there are two switches involved in providing the aggregated link. Should a port or an entire switch fail for any reason, the server still has an active connection to the data center network and will not be isolated from the network. Note that MLAG is currently a vendor-specific feature, as there is no IEEE standard for multi-chassis aggregation.
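The failure behavior described here can be illustrated with a toy model of the server's view of the aggregated link. This sketch does not implement link aggregation protocols or any vendor's MLAG software; the class and method names are hypothetical, and the model only tracks which member links are up.

```python
from dataclasses import dataclass, field


@dataclass
class Link:
    switch: str      # name of the physical switch at the far end
    up: bool = True


@dataclass
class AggregatedLink:
    """The server's view: one logical link made of several member links."""
    members: list[Link] = field(default_factory=list)

    def active_members(self) -> list[Link]:
        return [link for link in self.members if link.up]

    def is_connected(self) -> bool:
        return bool(self.active_members())

    def fail_switch(self, switch: str) -> None:
        # A switch failure takes down every member link that lands on it.
        for link in self.members:
            if link.switch == switch:
                link.up = False


# One server link to each of the two row switches named in the text.
lag = AggregatedLink([Link("Switch A"), Link("Switch B")])

lag.fail_switch("Switch A")
print(lag.is_connected(), [l.switch for l in lag.active_members()])  # True ['Switch B']

lag.fail_switch("Switch B")
print(lag.is_connected())  # False
```

The server loses connectivity only when both switches fail, which is the point of spreading the aggregated link across two chassis.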

[17] The MEF describes itself as: “… a global industry alliance comprising more than 200 organizations including telecommunications service providers, cable MSOs, network equipment/software manufacturers, semiconductors vendors and testing organizations. The MEF’s mission is to accelerate the worldwide adoption of Carrier-class Ethernet networks and services. The MEF develops Carrier Ethernet technical specifications and implementation agreements to promote interoperability and deployment of Carrier Ethernet worldwide.”
