Chapter 1. Introduction

Until recently, big data systems have been batch oriented: data is captured in distributed filesystems or databases and then processed in batches or studied interactively, as in data warehousing scenarios. Now, relying exclusively on batch-mode processing, where data arrives but valuable information isn’t extracted from it immediately, is a competitive disadvantage.

Hence, big data systems are evolving to be more stream oriented, where data is processed as it arrives, leading to so-called fast data systems that ingest and process continuous, potentially infinite data streams.

Ideally, such systems still support batch-mode and interactive processing, because traditional uses, such as data warehousing, haven’t gone away. In many cases, we can rework batch-mode analytics to use the same streaming infrastructure, where the streams are finite instead of infinite.
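To make the "finite versus infinite stream" idea concrete, here is a minimal Python sketch. It is not tied to any particular streaming engine; `running_average` and `sensor_stream` are hypothetical names invented for illustration. The point is only that the same processing logic can consume a finite collection (a batch) or an unbounded source (a stream).

```python
import itertools
import random
from typing import Iterable, Iterator


def running_average(readings: Iterable[float]) -> Iterator[float]:
    """Yield the average of all readings seen so far, one result per input."""
    total = 0.0
    count = 0
    for value in readings:
        total += value
        count += 1
        yield total / count


def sensor_stream(seed: int = 0) -> Iterator[float]:
    """Hypothetical unbounded source: emits readings forever."""
    rng = random.Random(seed)
    while True:
        yield rng.uniform(15.0, 25.0)


# Batch mode: the "stream" is finite, so the computation ends on its own.
batch = [10.0, 20.0, 30.0]
batch_results = list(running_average(batch))

# Streaming mode: the source never ends, so the caller decides when to stop.
stream_results = list(itertools.islice(running_average(sensor_stream()), 5))
```

Because `running_average` produces each result as soon as its input arrives, it never needs to know whether the input will end. That is the property that lets batch analytics be treated as a special case of stream processing.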

In this report I’ll begin with a quick review of the history of big data and batch processing, then discuss how the changing landscape has fueled the emergence of stream-oriented fast data architectures. Next, I’ll discuss hallmarks of these architectures and some specific tools available now, focusing on open source options. I’ll finish with a look at an example IoT (Internet of Things) application.

A Brief History of Big Data

The emergence of the Internet in the mid-1990s induced the creation of data sets of unprecedented size. Existing tools were neither scalable enough for these data sets nor cost effective, forcing the creation of new tools and techniques. The “always on” nature of the Internet also raised the bar for availability and reliability. The big data ecosystem emerged in response to these pressures.

At its core, a big data architecture requires three components:

- A scalable and available storage mechanism, such as a distributed filesystem or database
- A distributed compute engine, for processing and querying the data at scale
- Tools to manage the resources and services used to implement these systems

Other components layer on top of this core. Big data systems come in two general forms: so-called NoSQL databases that integrate these components into a database system, and more general environments like Hadoop.

In 2007, the now-famous Dynamo paper accelerated interest in NoSQL databases, leading to a “Cambrian explosion” of databases that offered a wide variety of persistence models, such as document storage (XML or JSON), key/value storage, and others, plus a variety of consistency guarantees. The CAP theorem emerged as a way of understanding the trade-offs between consistency and availability of service in distributed systems when a network partition occurs. For the always-on Internet, it often made sense to accept eventual consistency in exchange for greater availability. As in the original evolutionary Cambrian explosion, many of these NoSQL databases have fallen by the wayside, leaving behind a small number of databases in widespread use.

In recent years, SQL as a query language has made a comeback as people have reacquainted themselves with its benefits, including conciseness, widespread familiarity, and the performance of mature query optimization techniques.

But SQL can’t do everything. For many tasks, such as data cleansing during ETL (extract, transform, and load) processes and complex event processing, a more flexible model was needed. Hadoop emerged as the most popular open source suite of tools for general-purpose data processing at scale.

Why did we start with batch-mode systems instead of streaming systems? I think you’ll see as we go that streaming systems are much harder to build. When the Internet’s pioneers were struggling to gain control of their ballooning data sets, building batch-mode architectures was the easiest problem to solve, and it served us well for a long time.

Batch-Mode Architecture

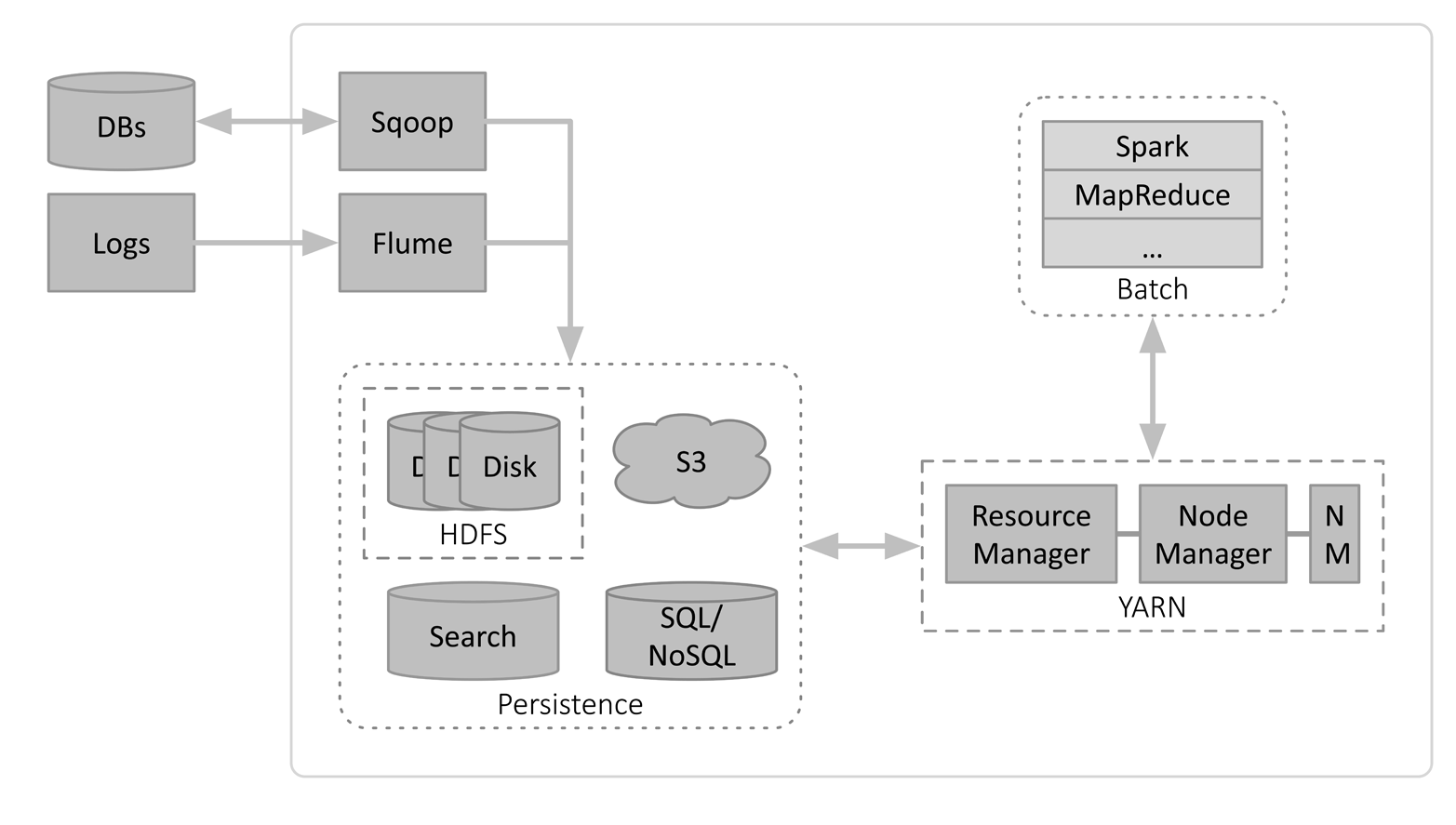

Figure 1-1 illustrates the “classic” Hadoop architecture for batch-mode analytics and data warehousing, focusing on the aspects that are important for our discussion.

Figure 1-1. Classic Hadoop architecture

In this figure, logical subsystem boundaries are indicated by dashed rectangles. These subsystems are clusters that span physical machines, although HDFS and YARN (Yet Another Resource Negotiator) services share the same machines to benefit from data locality when jobs run. Functional areas, such as persistence, are indicated by the rounded dotted rectangles.

Data is ingested into the persistence tier, into one or more of the following: HDFS (Hadoop Distributed File System), AWS S3, SQL and NoSQL databases, and search engines like Elasticsearch. Usually this is done using special-purpose services such as Flume for log aggregation and Sqoop for interoperating with databases.

Later, analysis jobs written in Hadoop MapReduce, Spark, or other tools are submitted to the Resource Manager for YARN, which decomposes each job into tasks that are run on the worker nodes, managed by Node Managers. Even for interactive tools like Hive and Spark SQL, the same job submission process is used when the actual queries are executed as jobs.
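The decomposition of a job into tasks can be sketched in a few lines of Python. This is a toy illustration only, not the YARN or MapReduce API: a local thread pool stands in for the worker nodes, and the helper names (`split_into_tasks`, `run_task`, `run_job`) are hypothetical. It also ignores data locality, scheduling, and fault tolerance, which the real systems handle.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from typing import List


def split_into_tasks(records: List[str], num_tasks: int) -> List[List[str]]:
    """Partition the job's input so each task gets its own slice of records."""
    return [records[i::num_tasks] for i in range(num_tasks)]


def run_task(partition: List[str]) -> Counter:
    """One task: count the words in its own partition only."""
    counts: Counter = Counter()
    for line in partition:
        counts.update(line.split())
    return counts


def run_job(records: List[str], num_workers: int = 3) -> Counter:
    """Run one task per partition on a pool of workers, then merge results."""
    tasks = split_into_tasks(records, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_counts = list(pool.map(run_task, tasks))
    total: Counter = Counter()
    for partial in partial_counts:
        total.update(partial)  # adds the per-task counts together
    return total


log_lines = ["error disk full", "warn disk slow", "error net down", "info ok"]
word_counts = run_job(log_lines)
```

Each task sees only its own partition, and the final answer exists only after every task has finished and the partial results are merged. That dependence on the whole job completing is one reason batch results arrive with the delays discussed next.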

Table 1-1 gives an idea of the capabilities of such batch-mode systems.

| Metric | Sizes and units |
|---|---|
| Data sizes per job | TB to PB |
| Time between data arrival and processing | Many minutes to hours |
| Job execution times | Minutes to hours |

So, the newly arrived data waits in the persistence tier until the next batch job starts to process it.
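A rough way to see where those delays come from is to add the time a record waits for the next job to the time the job takes to run. The sketch below assumes a simplified schedule in which jobs start at fixed multiples of a batch interval and a record is picked up by the first job that starts at or after its arrival. The function name and the numbers are illustrative assumptions, not measurements.

```python
import math


def batch_latency_minutes(arrival_minute: float,
                          batch_interval: float,
                          job_duration: float) -> float:
    """Minutes from a record's arrival until its batch job finishes.

    Assumes jobs start at 0, batch_interval, 2 * batch_interval, ... and that
    a record is processed by the first job starting at or after its arrival.
    """
    next_start = math.ceil(arrival_minute / batch_interval) * batch_interval
    wait = next_start - arrival_minute
    return wait + job_duration


# Illustrative numbers: hourly jobs that each take 20 minutes to run.
early_arrival = batch_latency_minutes(5.0, 60.0, 20.0)   # arrives just after a job started
late_arrival = batch_latency_minutes(59.0, 60.0, 20.0)   # arrives just before the next job
```

Under these assumptions, a record that arrives just after a job starts waits almost a full interval before processing even begins, so shortening the job alone does not remove the delay.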
