Chapter 4. Continuous Testing

Your fast feedback efforts are in limbo without continuous feedback!

In the previous chapter, we discussed how adding tests in the right layers of the application accelerates feedback cycles. To regulate the application's quality seamlessly throughout the development cycle, it is imperative to receive such fast feedback continuously, not just in random bursts. This chapter elaborates on this practice of continuous testing.

Continuous testing (CT) is the process of validating application quality using both manual and automated testing methods after every incremental change, and alerting the team when the change causes deviation from the intended quality outcomes. For example, when a piece of functionality deviates from the expected application performance numbers, the CT process immediately notifies the team by means of failing performance tests. This gives the team an opportunity to fix issues as early as possible, when they are still relatively small and manageable. A lack of such a continuous feedback loop might leave the issues unnoticed for an extended period, allowing them to cascade to deeper levels of the code over time and increasing the effort required to remedy them.

The CT process relies heavily on the practice of continuous integration (CI) to perform automated testing against every change. Adopting CI together with CT allows the team to do continuous delivery (CD). Ultimately, the trio of CI, CD, and CT makes the team a high-performing one, as measured by the four key metrics: lead time, deployment frequency, mean time to restore, and change fail percentage. These metrics, which we'll look at toward the end of this chapter, provide insights into the quality of the team's delivery practices.

This chapter will equip you with the skills required to establish a CT process for your team. You will learn about CI/CD/CT processes and strategies to achieve multiple feedback loops on various quality dimensions. A guided exercise to set up a CI server and integrate the automated tests is included too.

Building Blocks

As a foundation for the continuous testing skill, this section introduces you to the terminology and the overall CI/CD/CT process. You will also learn the fundamental principles and etiquette that must be carefully instilled in the team to make the process successful. Let's begin with an introduction to CI.

Introduction to Continuous Integration

Martin Fowler, author of a half dozen books including Refactoring: Improving the Design of Existing Code (Addison-Wesley) and Chief Scientist at Thoughtworks, describes continuous integration as "a software development practice where members of a team integrate their work frequently, usually each person integrates at least daily - leading to multiple integrations per day." Let's consider an example to illustrate the benefits of following such a practice.

Two teammates, Allie and Bob, independently started developing a login page and a home page, respectively. Work started in the morning, and by noon Allie had finished a basic login flow and Bob had completed a basic home page structure. They both tested their respective functionalities on their local machines and continued work. By the end of the day, Allie had completed the login functionality by making the application land on an empty home page after successful login, since the home page wasn't available to her yet. Similarly, Bob completed the home page functionality by hardcoding the username in the welcome message, since the user information from the login was not available to him.

The following day, they both reported their functionalities to be "done"! But are they really done? Which of the two developers is responsible for integrating the pages? Should they create a separate integration user story for every integration scenario across the application? If so, will they be ready for the expense of the duplicated testing efforts involved in testing the integration story? Or should they delay testing until the integration is done? These are the kinds of questions that get addressed implicitly with continuous integration.

When CI is followed, Allie and Bob will share their work progress throughout the day (after all, both had a basic skeleton of their functionalities ready by noon). Bob will be able to add the necessary integration code to extract the username after login (e.g., from a JSON response or a JWT), and Allie will be able to make the application land on the actual home page after successful login. The application will then be genuinely usable and testable!

In this example, integrating the two pages the next day may seem like a small additional cost. However, when code accrues and is integrated later in the development cycle, integration testing becomes costly and time-consuming. Furthermore, the longer testing is delayed, the more likely the team is to find entangled issues that are hard to fix, sometimes even warranting a rewrite of a major chunk of the software. This in turn breeds a general fear of integration among team members, an often unspoken accompaniment of delayed integration!

The practice of continuous integration essentially tries to reduce such integration risks and save the team from ad hoc rewrites and patches. It doesn't entirely eliminate integration defects, but it makes them easier to find and fix early, when they are just budding.

The CI/CT/CD Process

Let's start by looking at the continuous integration and testing processes in detail. Later, we'll see how they connect to form the continuous delivery process.

The CI/CT process relies on four individual components:

The version control system (VCS), which holds the entire application code base and serves as a central repository from which all team members can pull the latest version of the code and where they can integrate their work continuously

The automated functional and cross-functional tests that validate the application

The CI server, which automatically executes the automated tests against the latest version of the application code for every additional change

The infrastructure that hosts the CI server and the application

The continuous integration and testing workflow begins with the developer, who pushes their changes into a common version control system (e.g., Git, SVN) as soon as they finish a small portion of functionality. The VCS tracks every change submitted to it. The changes are then sent through the continuous testing process, where a CI server (e.g., Jenkins, GoCD) fully builds the application code and executes the automated tests against it. When all the tests pass, the new changes are considered fully integrated. When there are failures, the owner of the respective code fixes the issues as quickly as possible. Sometimes, changes are reverted in the VCS until the issues are resolved, mainly to prevent others from pulling the code with issues and integrating their work on top of it.
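Stripped of everything else, the CI server's job for each pushed commit is "build, run the tests, report the result." The sketch below illustrates only that core step in POSIX shell; it is not how Jenkins or GoCD work internally. The function name `ci_build_and_test` and the `<commit> PASSED|FAILED` report format are hypothetical, and the command you pass in (for example, `mvn test` for a Maven project) is an assumption about your build tool.

```shell
#!/bin/sh
# Minimal sketch of a CI server's build-and-test step for a single commit.
# ci_build_and_test is a hypothetical helper; real CI servers add
# workspaces, logs, notifications, and much more.
#
# Usage: ci_build_and_test <commit-id> <command> [args...]
ci_build_and_test() {
  commit="$1"
  shift
  # The exit status of the build-and-test command decides the result.
  if "$@" >/dev/null 2>&1; then
    echo "$commit PASSED"
    return 0
  else
    echo "$commit FAILED"
    return 1
  fi
}
```

For example, `ci_build_and_test Cn mvn test` would print `Cn PASSED` or `Cn FAILED` and return a matching exit status, which is what a notification step or a later stage could key off.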

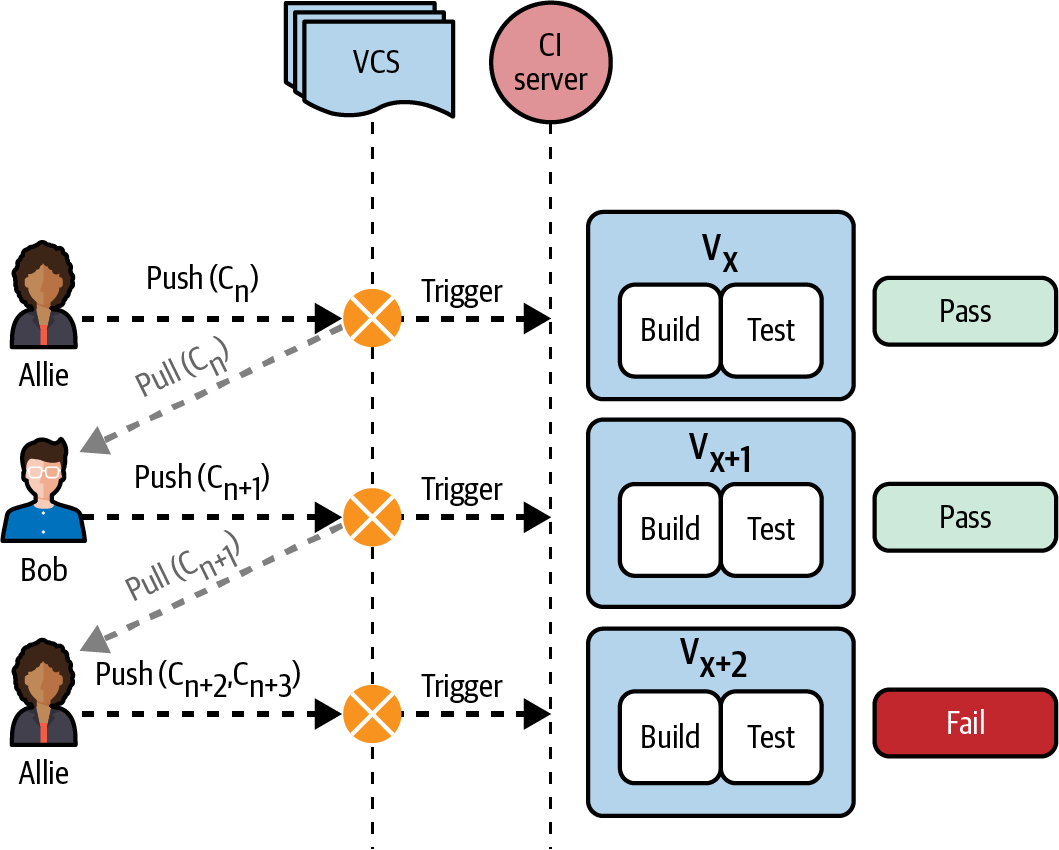

As Figure 4-1 shows, Allie pushes her code for the basic login functionality along with the login tests into the common version control system before noon, as part of commit Cn.

Figure 4-1. Components in a continuous integration and testing process

Note

In the Git VCS, a commit is a snapshot of the entire code base at a given point in time. When practicing continuous integration, it is recommended that small incremental changes are saved as independent commits on the local machine. When the functionality reaches a logical state, such as completing a basic login functionality, the commits should be pushed to the common VCS repository. Only on pushing the changes to the VCS do the CI and testing processes begin.

The new change, Cn, triggers a separate pipeline in the CI server. Each pipeline is composed of many sequential stages. The first is the build and test stage, which builds the application and runs automated tests against it. These include all the micro- and macro-level tests discussed in Chapter 3 and tests that assert on the application's quality dimensions (performance, security, etc.), which we will discuss in the upcoming chapters. Once this stage is complete, the test results are reported to Allie. In this case, Allie's code has been successfully integrated, and she proceeds with her login functionality.

Later in the day, Bob pushes commit Cn+1 for the home page feature after pulling the latest changes (Cn) from the common VCS. Cn+1 is thus a snapshot of the application code base including both Allie's and Bob's new changes. This triggers the build and test stage in the CI process. When run against Cn+1, the tests ensure that Bob's new changes haven't broken any of the previous functionalities, including Allie's latest commit, since she also added the login tests. Luckily, Bob's changes haven't broken anything. However, as Figure 4-1 shows, Allie's changes in commits Cn+2 and Cn+3 have broken the integration, and the tests have failed. Since she has introduced a bug into the common VCS, she needs to fix it before proceeding any further with her work. She can push her fix as another commit, and the process will continue.

Imagine the same workflow in a large distributed team, and you can understand how much easier CI makes it for all team members to share their progress and integrate their work seamlessly. Also, large-scale applications typically have several interdependent components that warrant exhaustive integration testing, and the continuous testing process provides much-needed confidence that those components integrate correctly!

With that kind of confidence gained from the fully automated integration and testing processes, the team is placed in a privileged spot to push their code to production whenever the business demands it. In other words, the team is equipped to do continuous delivery.

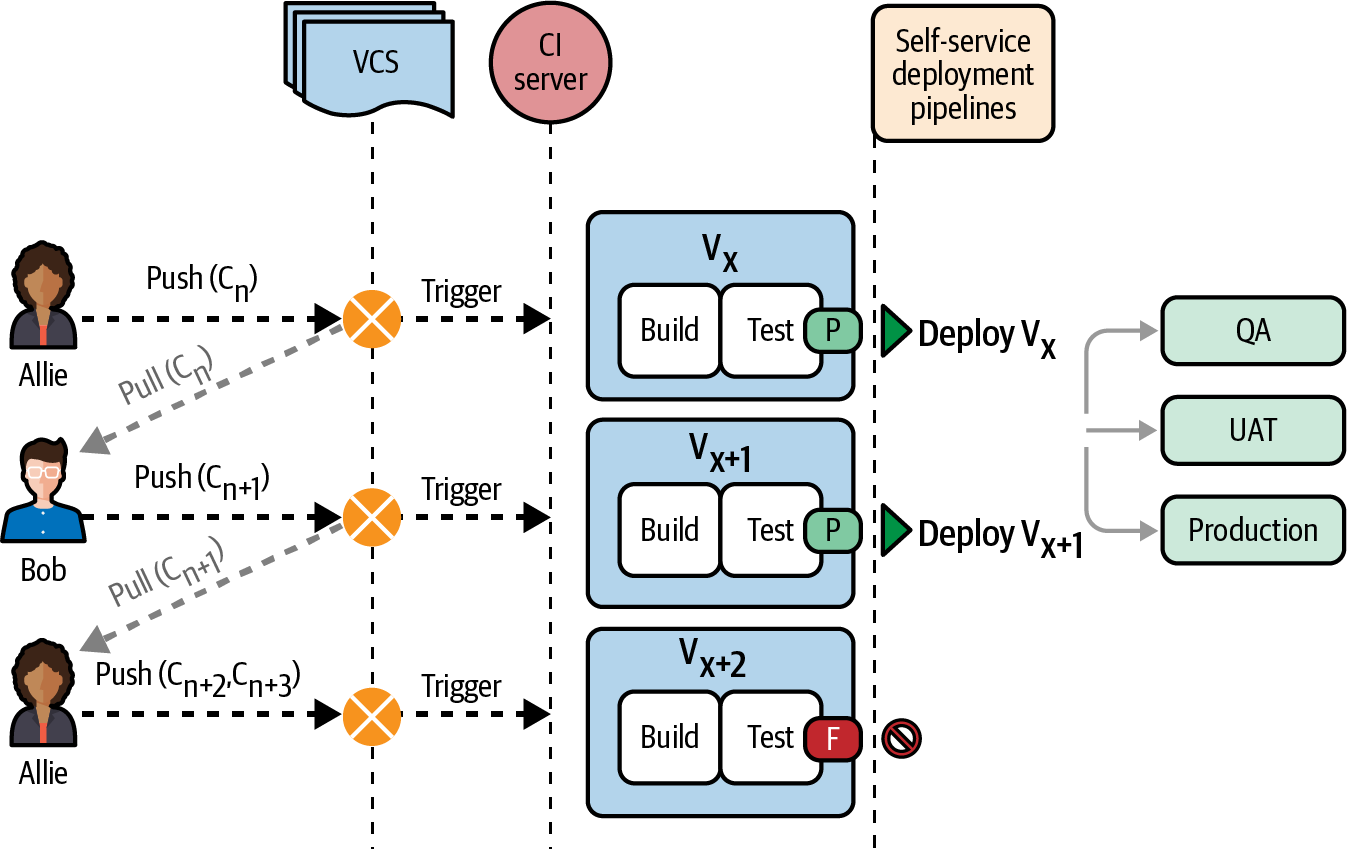

Continuous delivery depends upon following continuous integration and testing processes so that the application is production-ready at all times. Additionally, it dictates having an automated deployment mechanism that can be triggered with a single click to deploy to any environment, be it QA or production. Figure 4-2 shows the continuous delivery process.

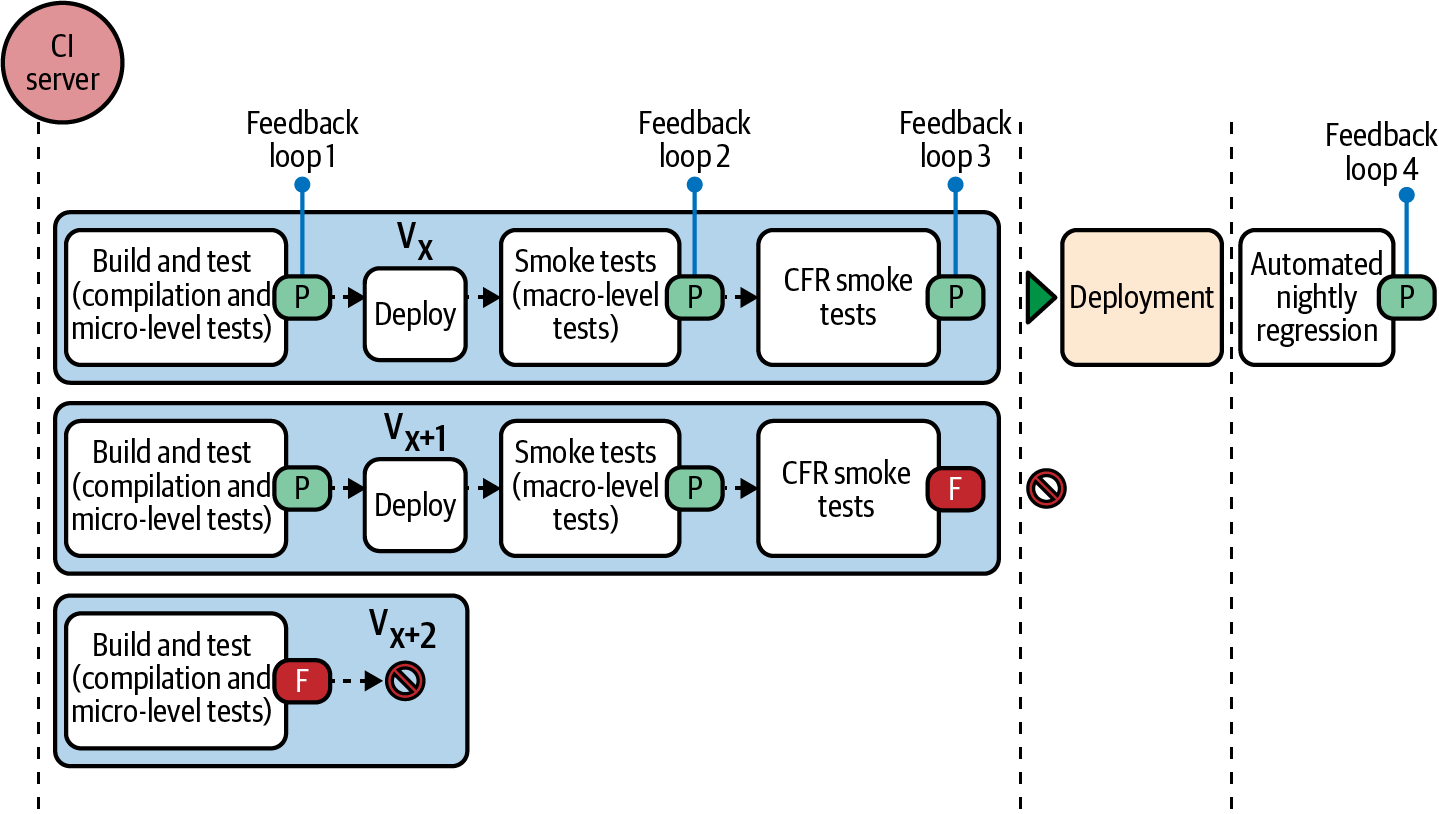

Figure 4-2. Continuous delivery process with CI, CT, and deployment pipelines

As you can see, the continuous delivery process encompasses the CI/CT processes along with the self-service deployment pipelines. These pipelines are stages configured in the CI server as well; they perform the task of deploying the "chosen" version of the application artifacts to the required environment.

The CI server lists all the commits with their test results status. Only if all the tests have passed for a commit (or a set of commits) does it offer the option to deploy that particular application version (V). For example, let's say Allie's team wants to receive feedback from the business on the basic login functionality pushed as part of commit Cn. They can click the Deploy Vx button, as seen in Figure 4-2, and choose the user acceptance testing (UAT) environment. This will deploy only the changes made up to that point to the UAT environment; that is, Bob's Cn+1 and later commits will not be deployed. Notice also that the commits Cn+2 and Cn+3 are not available for deployment, as their tests have failed.
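The rule that only green commits are offered for deployment can be sketched as a filter over the CI results. This assumes a hypothetical plain-text results format with one `<commit> <PASSED|FAILED>` line per commit; both function names are illustrative, and a real CI server keeps this state internally.

```shell
#!/bin/sh
# Minimal sketch: decide which commits may be offered for self-service
# deployment. Input (stdin) is one "<commit> <PASSED|FAILED>" line per
# commit, a hypothetical format used only for this illustration.

# Print every commit whose tests passed.
deployable_commits() {
  awk '$2 == "PASSED" { print $1 }'
}

# Succeed (exit 0) only if the given commit exists and its tests passed.
# Usage: can_deploy <commit> < results
can_deploy() {
  awk -v c="$1" '$1 == c && $2 == "PASSED" { found = 1 } END { exit !found }'
}
```

With the results from the figure (Cn and Cn+1 passed, Cn+2 and Cn+3 failed), `deployable_commits` lists only Cn and Cn+1, and `can_deploy Cn+2` returns a non-zero status, so a deployment script guarded by it would refuse to proceed.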

This kind of continuous delivery setup solves many critical issues, but one of the most important benefits it provides is the ability to launch product features to the market at the right time. Delays in feature releases often result in loss of revenue and loss of customers to competitors. Additionally, from the team's point of view, the deployment process becomes fully automated, reducing the dependency on certain individuals to do their magic on the day of deployment; anyone is free to make a hassle-free deployment to any environment at any time. Automating deployments also reduces the risk of incompatible libraries, missing or incorrect configurations, and insufficient documentation.

Principles and Etiquette

Now that we have discussed the CI/CD/CT processes, it is important to call out that these processes can reach fruition only if all the team members follow a set of well-defined principles and etiquette. After all, these processes are an automated way for the team to collaborate on their work, be it automated tests, application code, or infrastructure configurations. The team should establish these principles at the beginning of their delivery cycle and keep reinforcing them throughout. Here is a minimum set of principles and etiquette a team will have to respect to be successful:

- Do frequent code commits

Team members should make frequent code commits and push them to the VCS as soon as they finish each small piece of functionality, so that it is tested and made available for others to build on top of it.

- Always commit self-tested code

Whenever a new piece of code is committed, it should be accompanied by automated tests in the same commit. Martin Fowler calls this practice self-testing code. For example, as we saw earlier, Allie committed her login functionality along with login tests. This ensured that her commit was not broken when Bob committed his code next.

- Adhere to the Continuous Integration Certification Test

Each team member should ensure their commit passes the continuous testing process before moving on to the next set of tasks. If the tests fail, they need to fix them immediately. According to Martin Fowler's Continuous Integration Certification Test, a broken build and test stage should be repaired within 10 minutes. If this is not possible, the broken commit should be reverted, leaving the code stable (or green).

- Do not ignore/comment out the failing tests

In the rush to make the build and test stage pass, team members should not comment out or ignore the failing tests. Although the reasons not to do this are obvious, it is a surprisingly common practice.

- Do not push to a broken build

The team should not push their code when the build and test stage is broken (or red). Pushing work on top of an already broken code base will lead to tests failing again. This will further burden the team with the additional task of finding which changes originally broke the build.

- Take ownership of all failures

When tests fail in an area of code that someone didn't work on, but the failure is caused by their changes, the responsibility of fixing the build is still on them. If necessary, they can pair with someone who has the required knowledge to fix it, but ultimately getting it fixed before moving on to their next task is a fundamental requisite. This practice is essential because the responsibility of fixing the failed tests is often tossed around, delaying the resolution of the issues. Sometimes the tests are excluded from the CI run for days because the issue is not fixed. As a result, the continuous testing process gives incomplete or false feedback for the changes pushed during that open window.
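When a broken build can't be repaired within the 10-minute window, the commit can be backed out with `git revert`, which adds a new commit that undoes the broken one instead of rewriting shared history. The minimal sketch below runs in a throwaway repository; the file name, commit messages, and identity are made up for illustration.

```shell
#!/bin/sh
# Minimal sketch of backing out a broken commit with git revert.
# Everything happens in a throwaway repository; names are illustrative.
set -e
repo=$(mktemp -d)
cd "$repo"
git init -q
git config user.name "CI Demo"
git config user.email "ci-demo@example.com"

# A green commit: the basic login works.
echo "login works" > login.txt
git add login.txt
git commit -q -m "Cn: basic login with tests"

# A commit that breaks the build.
echo "login broken" > login.txt
git commit -q -am "Cn+2: change that breaks the build"

# No fix in sight: revert the broken commit. History keeps all three
# commits, so teammates who already pulled Cn+2 are not disrupted.
git revert --no-edit HEAD >/dev/null
```

Because the revert is an ordinary new commit, it can be pushed like any other change, and the original work can be reapplied once the fix is ready.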

Many teams also adopt stricter practices for their own benefit, such as mandating that all the micro- and macro-level tests pass on local machines before pushing the commit to the VCS, failing the build and test stage if a commit does not meet the code coverage threshold, publishing each commit's status (pass or fail) along with the name of its author to everyone on a communication channel such as Slack, and playing loud music from a dedicated CI monitor in the team area whenever the build breaks. Also, as a tester on the team, I keep an eye on the status of the tests in the CI and see to it that they get fixed on time. Fundamentally, all these measures are taken to streamline the team's practices around the CI/CT processes and thereby reap the right benefits, although the measure that always seems to work best is empowering the team with knowledge of not only the "how" but also the "why" behind the process!
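One way to enforce the "tests pass locally before pushing" rule is a Git pre-push hook: Git runs `.git/hooks/pre-push` before a push and aborts the push if the hook exits with a non-zero status. This is a minimal sketch; `install_pre_push_hook` and the `PRE_PUSH_TEST_CMD` override are hypothetical names, and the default `mvn -q test` assumes a Maven project like the Chapter 3 tests.

```shell
#!/bin/sh
# Minimal sketch: install a Git pre-push hook that runs the tests locally.
# Git aborts the push when .git/hooks/pre-push exits non-zero.
# PRE_PUSH_TEST_CMD is a hypothetical override; the default command
# assumes a Maven project.
#
# Usage: install_pre_push_hook /path/to/project
install_pre_push_hook() {
  hooks_dir="$1/.git/hooks"
  mkdir -p "$hooks_dir"
  cat > "$hooks_dir/pre-push" <<'EOF'
#!/bin/sh
# Run the test suite; any failure blocks the push.
${PRE_PUSH_TEST_CMD:-mvn -q test} || {
  echo "Tests failed: push aborted. Fix them before pushing." >&2
  exit 1
}
EOF
  chmod +x "$hooks_dir/pre-push"
}
```

Hooks live in `.git/hooks`, which Git does not version, so each team member has to install the hook in their own clone, and `git push --no-verify` skips it. Treat it as a convenience, not a replacement for the CI server's build and test stage.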

Continuous Testing Strategy

Now that you know the processes and principles, the next step is to create and apply strategies tailored to your project's needs.

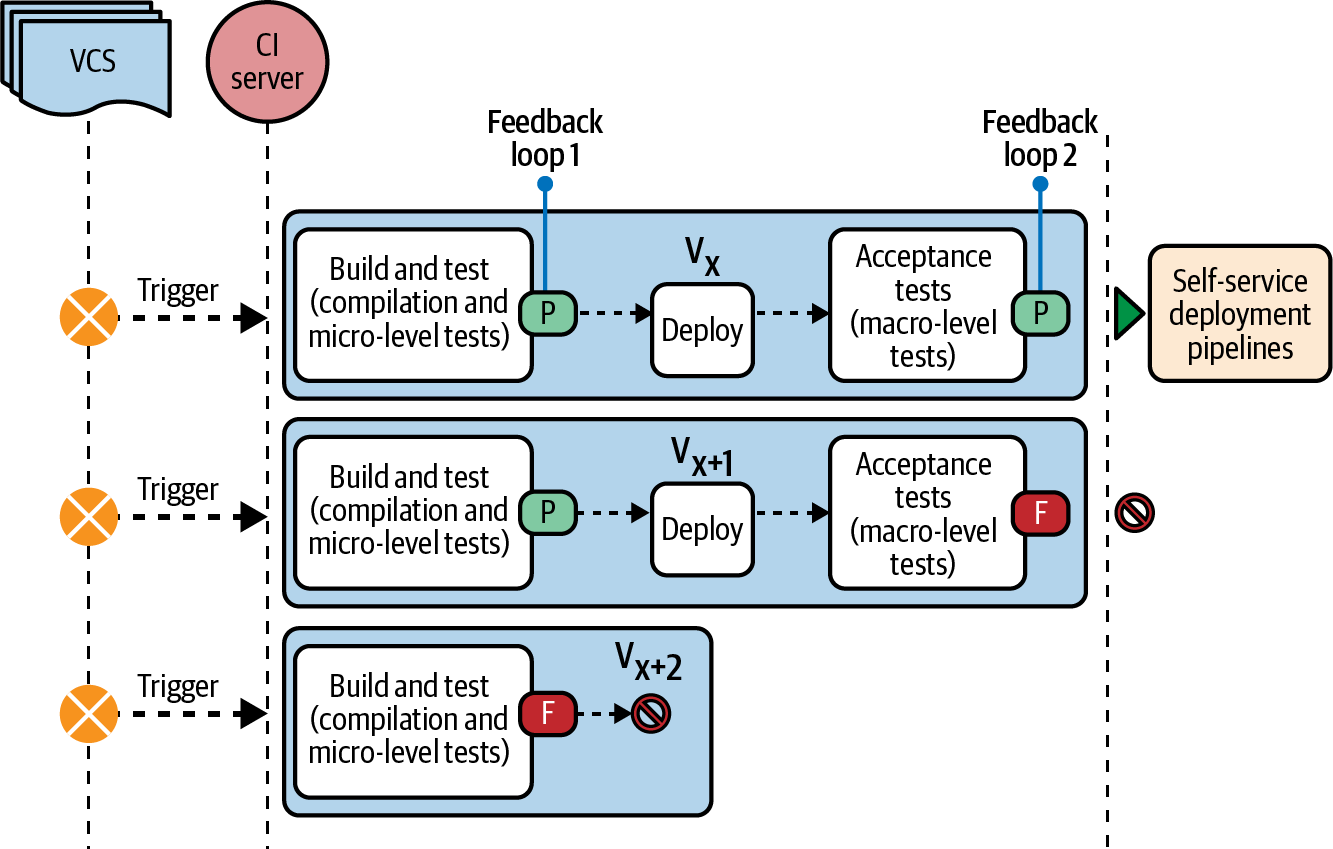

In the previous section, the continuous testing process was demonstrated with a single build and test stage that runs all the tests and gives feedback in a single loop. You can also accelerate the feedback cycle with two independent feedback loops: one that runs the tests against the static application code (e.g., all the micro-level tests), and another that runs the macro-level tests against the deployed application. This is, in a way, a slight shift left: we leverage the micro-level (unit, integration, contract) tests' ability to run faster than the macro-level (API, UI, end-to-end) tests to get faster feedback.

Figure 4-3 shows a CT process with two stages. As you can see here, a common practice is to combine the application compilation with the micro-level tests as a single stage in CI. This is traditionally called the build and test stage. When the team adheres to the test pyramid, as we discussed in Chapter 3, the micro-level tests end up validating a broad range of application functionalities. As a result, this stage gives the team extensive feedback on the commit quickly. The build and test stage should be swift enough to finish within a few minutes so that, per the recommended principles and etiquette, the team will wait for it to complete before moving on to the next task. If it takes longer, the team should find ways to improve it, for example, by parallelizing the build and test stage for each component instead of having a single stage for the entire code base.1

Figure 4-3. The continuous testing process with two feedback loops

Note

In their book Continuous Delivery (Addison-Wesley Professional), Jez Humble and David Farley suggest that the build and test stage should be short enough that it takes "around the amount of time you can devote to making a cup of tea, a quick chat, checking your email, or stretching your muscles."

As soon as the build and test stage passes, the deploy stage pushes the application artifacts to a CI environment (sometimes called the dev environment). The next stage, called the functional testing stage or acceptance testing stage, runs the macro-level tests against the deployed application in the CI environment. Only when this stage passes is the application ready for self-service deployments to other higher-level environments such as QA, UAT, and production.
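The chaining of stages in Figure 4-3 can be sketched in a few lines of shell: each stage runs only if the previous one passed, so a red build never reaches the CI environment. This is an illustration, not a CI server configuration; the function names are hypothetical, and the caller supplies the actual stage commands (for example, `mvn test`, a deployment script, and the macro-level test run).

```shell
#!/bin/sh
# Minimal sketch of chained CT stages: the first failure stops the chain.

# Run one stage and report its result.
# Usage: run_stage <name> <command> [args...]
run_stage() {
  name="$1"
  shift
  if "$@"; then
    echo "$name: PASSED"
  else
    echo "$name: FAILED"
    return 1
  fi
}

# Usage: run_pipeline "<build-and-test cmd>" "<deploy cmd>" "<acceptance cmd>"
run_pipeline() {
  # $1..$3 are deliberately unquoted so a string such as "mvn test"
  # is split into a command and its arguments.
  run_stage "build and test" $1 &&
    run_stage "deploy to CI environment" $2 &&
    run_stage "acceptance tests" $3 &&
    echo "ready for self-service deployment"
}
```

For instance, if the deployment command fails, the acceptance tests never run and the commit is never marked ready for self-service deployment, which mirrors the gating described above.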

The feedback from this stage might take longer, as the acceptance tests take longer to run and the stage is triggered only after the application deployment, which takes time too. But when teams properly implement the test pyramid, the two feedback loops should take less than an hour to complete. The example I gave in Chapter 3 corroborates this: when the team had ~200 macro-level tests, it took them 8 hours to get feedback, but when they reimplemented their testing structure to conform to the test pyramid, it took them only about 35 minutes from commit to being ready for self-service deployment with ~470 micro- and macro-level tests.

Another consideration is that when the feedback loop is short, team members can still prioritize fixing the issues found in the continuous testing process even if they've picked up a new task shortly after the build and test stage. If it takes several hours, they may be tempted to ignore the failing tests and track them as defect cards to fix later. This is harmful, as it means they are integrating their new code on top of defects, and the new code is not thoroughly tested either, as the failing tests are ignored. Hence, the team should continue to monitor and adopt ways to quicken the two feedback loops using techniques like parallelizing the test run, implementing the test pyramid, removing duplicate tests, and refactoring the tests to remove waits and abstract common functionalities.2
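Parallelizing the test run can be sketched as running each component's tests as a background job and failing the stage if any job fails. The `run_parallel` name and the per-component commands are hypothetical; for a multi-module Maven build, a command such as `mvn -q -pl <module> test` could serve as one component's run, assuming that module layout.

```shell
#!/bin/sh
# Minimal sketch: run one test command per component in parallel and
# fail if any of them fails.
# Usage: run_parallel "<component A cmd>" "<component B cmd>" ...
run_parallel() {
  pids=""
  for cmd in "$@"; do
    sh -c "$cmd" &          # start this component's tests in the background
    pids="$pids $!"
  done
  rc=0
  for pid in $pids; do
    wait "$pid" || rc=1     # remember any failure, but wait for every job
  done
  return "$rc"
}
```

Maven also offers a built-in `-T` option (for example, `mvn -T 4 test`) to build modules in parallel, which may be simpler when all components live in one Maven build.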

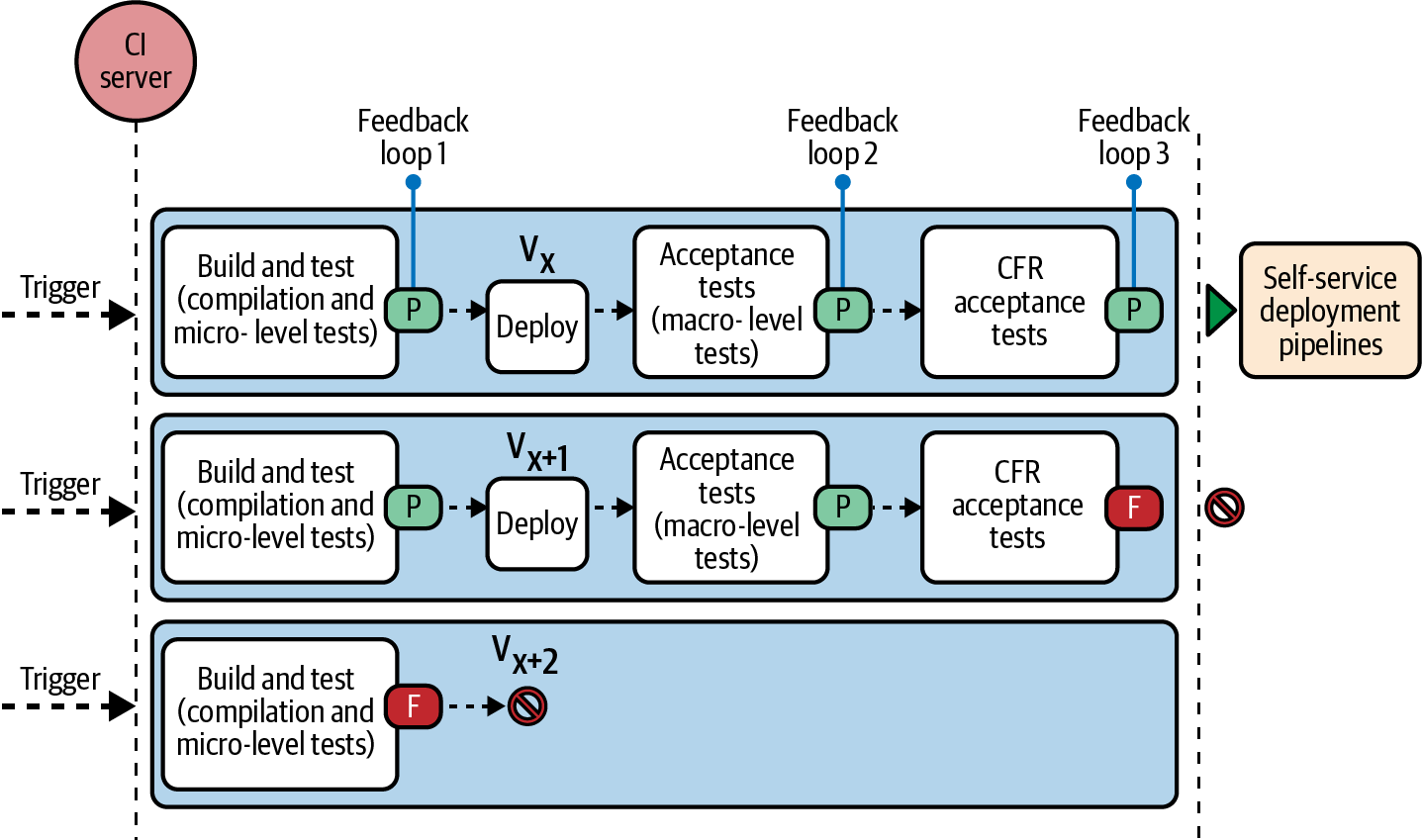

This continuous testing process can be further extended to receive cross-functional feedback, as depicted in Figure 4-4. Teams can run automated performance, security, and accessibility tests as part of the two existing feedback loops or configure separate stages subsequent to the acceptance testing stage in the CI server, achieving the goal of receiving continuous fast feedback on the application's quality holistically. You will learn shift-left strategies for cross-functional testing in the upcoming chapters.

Figure 4-4. The continuous testing process with three feedback loops

At this point, running all the tests as a chained pipeline may take considerable time and resources to finish all the stages. One way to strategize the CT process in this case is to split the tests into smoke tests and nightly regression tests, as seen in Figure 4-5.

Figure 4-5. The continuous testing process with four feedback loops

Smoke testing is a term borrowed from the electrical engineering world, where electricity is passed after the circuit is completed to assess the end-to-end flow. When there are issues in the circuit, there will be smoke (hence the name). Similarly, you can choose the tests that cover the end-to-end flow of every feature in the application to form the smoke test pack and only run them as part of the acceptance testing stage. This way, you can get a high-level signal on the status of every commit quickly. As seen in Figure 4-5, the commit is ready for self-service deployment after the smoke test stage.

When you choose to perform smoke testing, you have to complement it with nightly regression. The nightly regression stage is configured in the CI server to run the entire test suite once every day when the team is off work (e.g., it may be scheduled to run at 7 p.m. every day). The tests are run against the latest code base with all the day's commits. The team must make a habit of analyzing the nightly regression results first thing the next day and prioritize fixing defects and environment failures. Sometimes this may require test script changes, and those have to be prioritized for the day as well so that the continuous testing process gives the right feedback for the upcoming commits.

You can apply these two strategies to split both the functional and cross-functional tests. For example, you can choose to run the performance load test for a single critical endpoint as part of every commit and run the remaining performance tests as part of nightly regression (performance tests are discussed in Chapter 8). Similarly, you can run the static code security scanning tests as part of the build and test stage and run the functional security scanning tests (discussed in Chapter 7) as part of the nightly regression stage. As obvious as it may be, the caveat with such an approach is that the feedback is delayed by a day. Consequently, acting on the feedback is delayed as well; the issues are tracked as defects and fixed later. You should therefore choose carefully which tests run as part of the smoke test stage and which run in the nightly regression stage. Also, note that only macro-level and cross-functional tests should be categorized as smoke tests; all the micro-level tests should still be run as part of the build and test stage.
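The smoke/nightly split can be expressed as a choice of test command per trigger. This sketch assumes the macro-level tests are tagged with a `smoke` group (TestNG groups or JUnit 5 tags) and run through Maven Surefire, whose `-Dgroups` property filters tests by group; the `test_command` function and its mode names are hypothetical.

```shell
#!/bin/sh
# Minimal sketch: choose which macro-level tests to run for a trigger.
# Assumes tests tagged with a "smoke" group and Maven Surefire's
# -Dgroups filter. Mode names are hypothetical.
#
# A 7 p.m. nightly run could be scheduled with a crontab entry such as
#   0 19 * * * cd /path/to/project && mvn test
# (Jenkins' "Build periodically" trigger accepts similar cron syntax.)
test_command() {
  case "$1" in
    acceptance) echo "mvn test -Dgroups=smoke" ;;  # every commit: smoke pack
    nightly) echo "mvn test" ;;                    # once a day: entire suite
    *) echo "unknown mode: $1" >&2; return 1 ;;
  esac
}
```

A pipeline stage could then execute `$(test_command acceptance)` for every commit, while the nightly job runs `$(test_command nightly)`. The micro-level tests are deliberately left out of this choice; they keep running in full in the build and test stage.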

Most often, when the application is young, you can forgo these strategies and enjoy the privilege of running all the tests for every commit. Then, as the application grows (along with the number of tests), you can implement the various CI runtime optimization methods and eventually adopt the smoke test and nightly regression approach.

Benefits

If you're wondering whether all that effort to undertake a continuous testing process will turn out to bear worthwhile fruit, Figure 4-6 showcases some benefits to get you and your team motivated.

Figure 4-6. Benefits of the continuous testing process

Let's take a look at each of these in turn:

- Common quality goals

Following the continuous testing process ensures that all team members are aware of and working toward a common quality goal (in terms of both functional and cross-functional quality aspects), as their work is continuously evaluated against that goal. This is a concrete way to build quality in.

- Early defect detection

Every team member gets immediate feedback on their commits, both in terms of functional and cross-functional aspects. This gives them the opportunity to fix issues while they have the relevant context, as opposed to coming back to the code a few days or weeks later.

- Ready to deliver

Since the code is continuously tested, the application is always in a ready-to-deploy state for any environment.

- Enhanced collaboration

It is easier to collaborate with distributed team members who are sharing their work and to keep track of which commit caused which issues, thereby avoiding accusations and limiting animosity.

- Combined delivery ownership

Ownership for delivery is distributed among all team members instead of just the testing team or senior developers, as everyone is responsible for assuring their commits are ready for deployment.

If you've been working in the software industry for a while, you will surely know how hard it is to achieve some of these benefits otherwise!

Exercise

It's time to get hands-on. The guided exercise here will show you how to push the automated tests that you created as part of Chapter 3 into a VCS, set up a CI server, and integrate the automated tests with the CI server such that whenever you push a commit to the VCS, the automated tests will be executed. You will be learning to use Git and Jenkins as part of this exercise.

Git

Originally developed in 2005 by Linus Torvalds, creator of the Linux operating system kernel, Git is the most widely used open source version control system. According to the 2021 Stack Overflow survey, 90% of respondents use Git. It's a distributed version control system, which means every team member gets a copy of the entire code base along with the history of changes. This gives teams a lot of flexibility in terms of debugging and working independently.

Setup

To begin with, you'll need somewhere to host your code base. GitHub and Bitbucket are companies that provide cloud-based offerings to host Git repositories (a repository, in simple terms, is a storage location for your code base). GitHub allows hosting public repositories for free, which makes it popular, especially among the open source community. So for this exercise, if you don't have a GitHub account already, create one now.

In your GitHub account, navigate to Your Repositories → New to create a new repository for your automated Selenium tests. Provide a name for the repository, say FunctionalTests, and make it a public repository. You will land on the repository setup page on successful creation. Note the URL for your repository (https://github.com/<yourusername>/FunctionalTests.git). The page will also give you a set of instructions to push your code to the repository using Git commands. You'll have to set up and configure Git on your machine to run them.

To do this, follow these steps:

Download and install Git from your command prompt using the following commands:

# macOS
$ brew install git

# Linux (Debian/Ubuntu)
$ sudo apt-get install git

If you are on Windows, download the installer from the official Git for Windows site.

Verify the installation by running the following command:

$ git --version

Whenever you make a commit, it needs to be tied to a username and email address for tracking purposes. Provide yours to Git with these commands so that it automatically attaches them when you make a commit:

$ git config --global user.name "yourUsername"
$ git config --global user.email "yourEmail"

Verify the configuration by running this command:

$ git config --global --list

Workflow

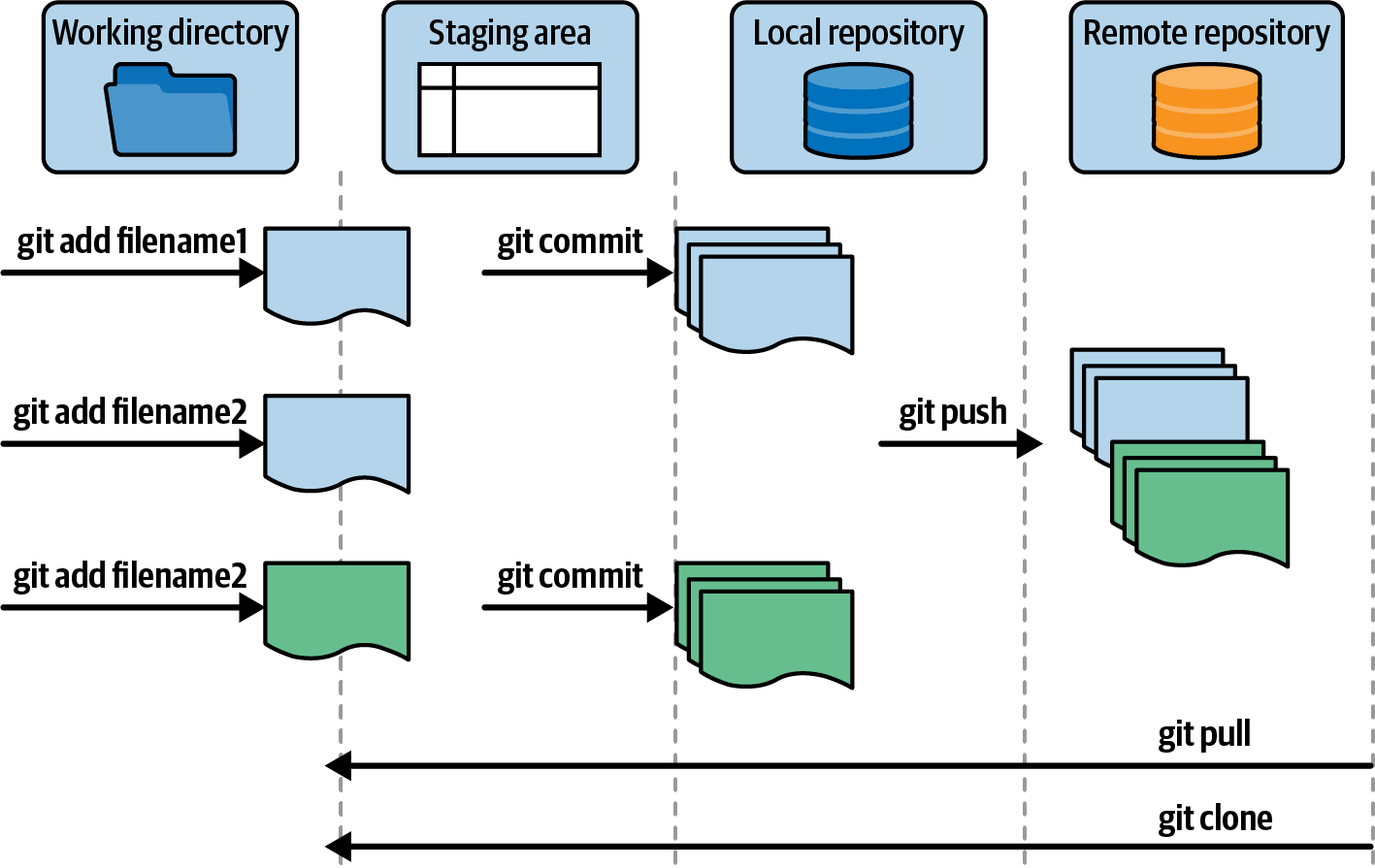

The workflow in Git has four stages that your code will move through, as seen in Figure 4-7. Each stage has a different purpose, as you will learn.

Figure 4-7. Git workflow with four stages

The first stage is your working directory, where you make changes to your test code (add new tests, fix test scripts, etc.). The second stage is the local staging area, to which you add each small chunk of work, such as a page class, as you finish it. This allows you to keep track of the changes you are making so you can review and reuse them later. The third stage is your local repository. As mentioned earlier, Git gives everyone a copy of the entire repository, along with its history, on their local machine. Once you have a working test structure, you can make a commit that moves everything you've added to the staging area into your local repository. This makes it easy to revert all the code as a single chunk when there are failures. Once you are finally done with all the required changes (in this case, when you have fully completed a test and want it to run as part of the CI pipeline), you can push them to the remote repository, where the new test becomes available to everyone on your team.

The Git commands to move the code through the different stages are shown in Figure 4-7. You can try them now step by step as follows:

In your terminal, go to the folder where you created your automated Selenium tests in Chapter 3. Run the following command to initialize the Git repository:

$ cd /path/to/project/
$ git init

This command will set up the .git folder in your current working directory.

Add your entire test suite to the staging area by running the following command:

$ git add .

You can instead add a specific file (or directory) with git add filename.

Commit your changes to the local repository with a readable message explaining the context of the commit by executing the following command with the appropriate message text:

$ git commit -m "Adding functional tests"

You can combine steps 2 and 3 for files that Git already tracks by adding the optional -a parameter; i.e., git commit -am "message". Note that -a does not pick up new, untracked files, so those still need a git add first.

To push your code to the public repository, you must first provide its location to your local Git. Do that by running the following command:

$ git remote add origin https://github.com/<yourusername>/FunctionalTests.git

The next step is to push it to the public repository. You need to authenticate by providing your GitHub username and a personal access token while pushing. For security reasons, GitHub stopped accepting account passwords for Git operations over HTTPS in August 2021; a personal access token, which you can scope and give an expiration date, takes the password's place. To get your personal access token, go to your GitHub account, navigate to Settings → Developer settings → "Personal access tokens," click "Generate new token," and fill in the required fields. Use the token when prompted after running the following command:

$ git push -u origin master

Tip

If you don't want to authenticate every time you interact with the public repository, you can choose to set up the SSH authentication mechanism.

Open your GitHub account and verify the repository.

When working with team members, you will have to pull their code to your machine from the public repository. You can do that by running the git pull command. If you already have a functional tests repository for your team, you can use git clone repoURL to get your local repository copy instead of git init.

Several other Git commands, such as git merge, git fetch, and git reset, make our lives easier. Explore them in the official documentation when needed.
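You can try the four stages end to end without a GitHub account. This sketch uses a local bare repository as a stand-in for the remote; the paths, file name, and identity are throwaway examples, and `git push -u origin HEAD` pushes whichever branch you are on (master or main, depending on your Git configuration) instead of hardcoding master.

```shell
#!/bin/sh
# Minimal sketch: move a change through the four Git stages of Figure 4-7.
# A local bare repository stands in for GitHub so this runs offline.
set -e
work=$(mktemp -d)
git init -q --bare "$work/remote.git"   # stand-in for the remote repository
git init -q "$work/FunctionalTests"     # your local repository
cd "$work/FunctionalTests"
git config user.name "yourUsername"
git config user.email "you@example.com"

# 1. Working directory: create or edit test code.
echo "public class LoginTest {}" > LoginTest.java
git status --short                      # ?? LoginTest.java  (untracked)

# 2. Staging area.
git add LoginTest.java
git status --short                      # A  LoginTest.java  (staged)

# 3. Local repository.
git commit -q -m "Adding functional tests"

# 4. Remote repository.
git remote add origin "$work/remote.git"
git push -q -u origin HEAD
```

After the push, `git status` reports a clean working directory, and the local branch tracks its counterpart in the stand-in remote, just as it would with the GitHub repository.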

Jenkins

The next step is to set up a Jenkins CI server on your local machine and integrate the automated tests from your Git repository.

Note

The intention of this part of the exercise is to give you an understanding of how continuous testing can be implemented in practice using CI/CD tools, not to teach you DevOps. Teams might engage developers with specialized DevOps skills or have a DevOps role to manage CI/CD/CT pipeline creation and maintenance work. However, it is essential for both developers and testers to be familiar with the CI/CD/CT process and its workings, as they will be interacting with this process and debugging failures firsthand. Also, from a testing perspective, it is critical to learn to adapt the CT process to the specific project needs and ensure the test stages are properly chained, as per the team's CT strategy.

Setup

Jenkins is an open source CI server. To use it, download the installation package for your OS and follow the standard installation procedure. Once installed, start the Jenkins service. On macOS, you can install and start the Jenkins service using brew commands as follows:

$ brew install jenkins-lts
$ brew services start jenkins-lts

After the service has started successfully, open the Jenkins web UI at http://localhost:8080/. The site will lead you through the following configuration activities:

Unlock Jenkins with a unique administrator password that was generated as part of the installation process. The web page will show you the path to the location of this password on your local machine.

Download and install the commonly used Jenkins plug-ins.

Create an administrator account. You will log in to Jenkins every time with this account.

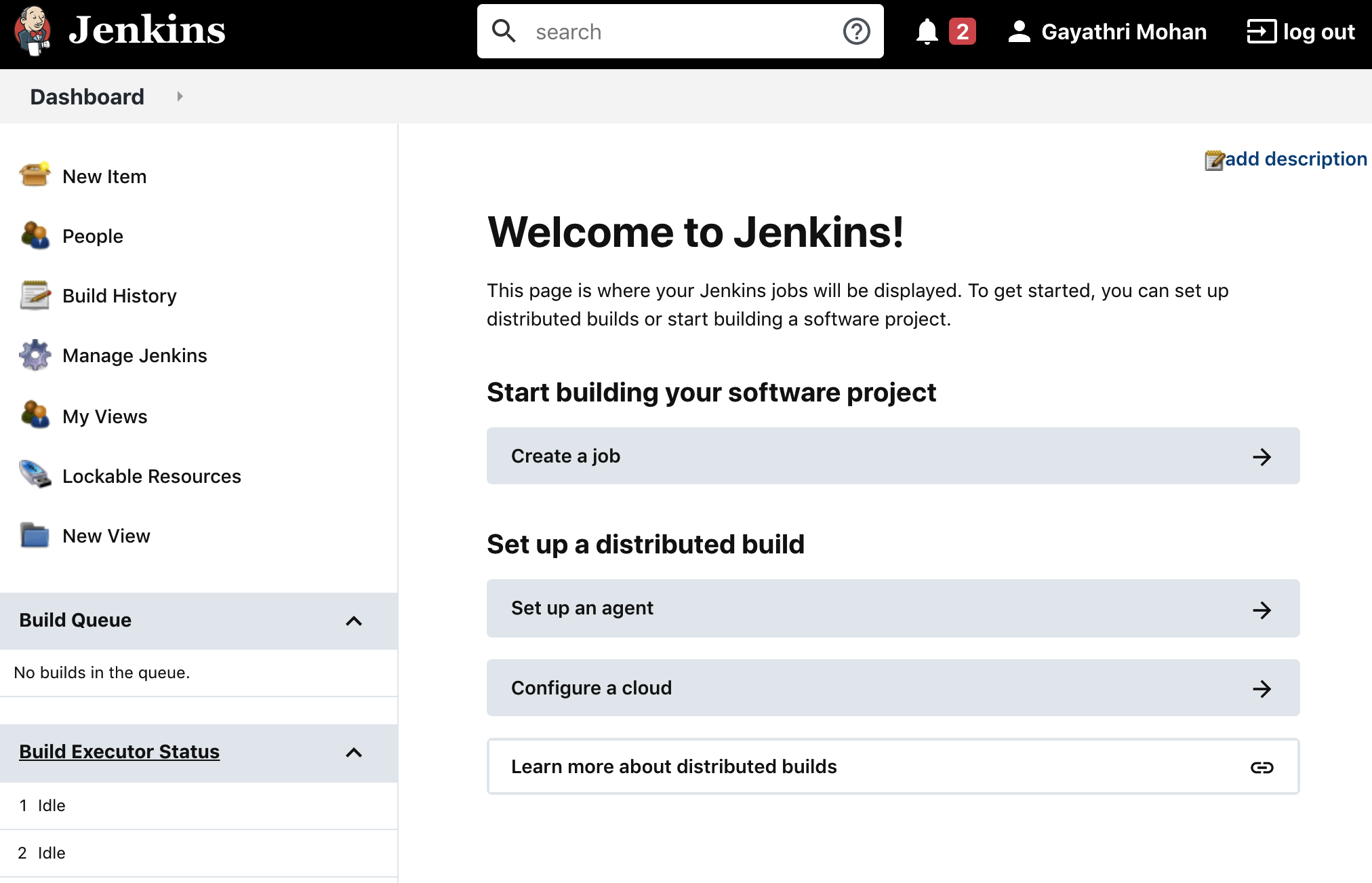

After the initial configuration, you will be taken to the Jenkins Dashboard page, as seen in Figure 4-8.

Figure 4-8. Jenkins Dashboard view

Workflow

Now, follow these steps to set up a new pipeline for your automated tests:

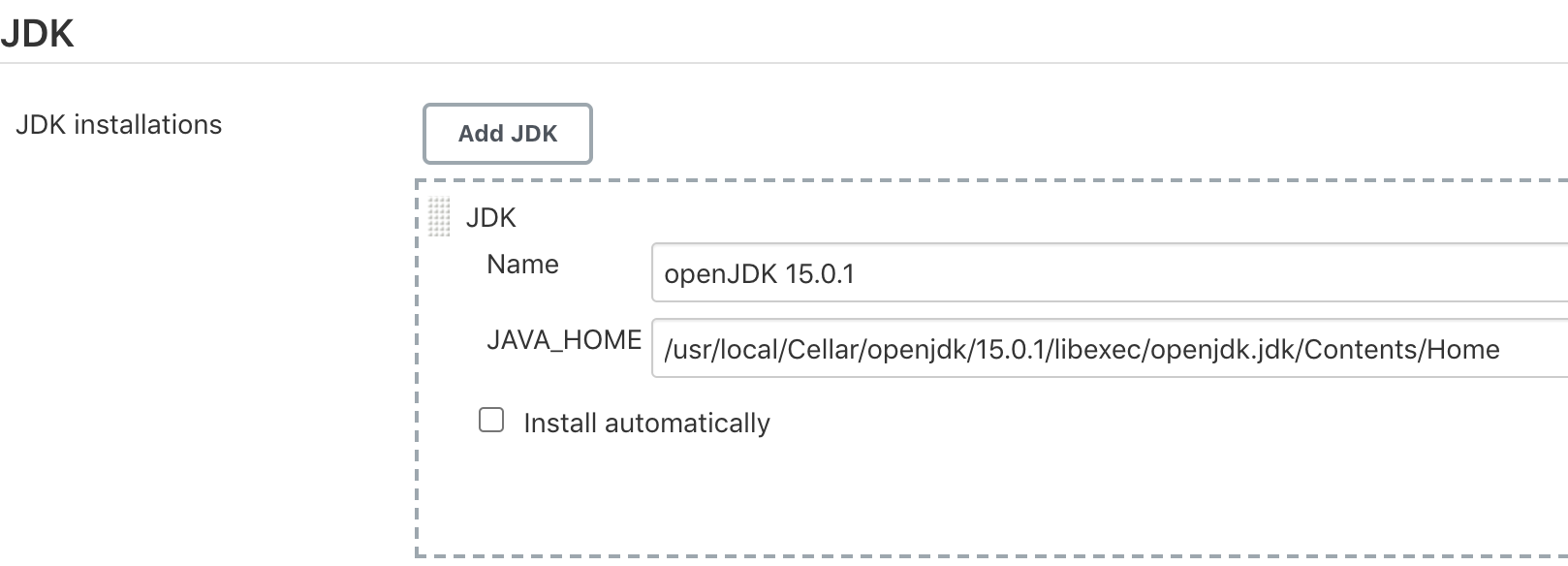

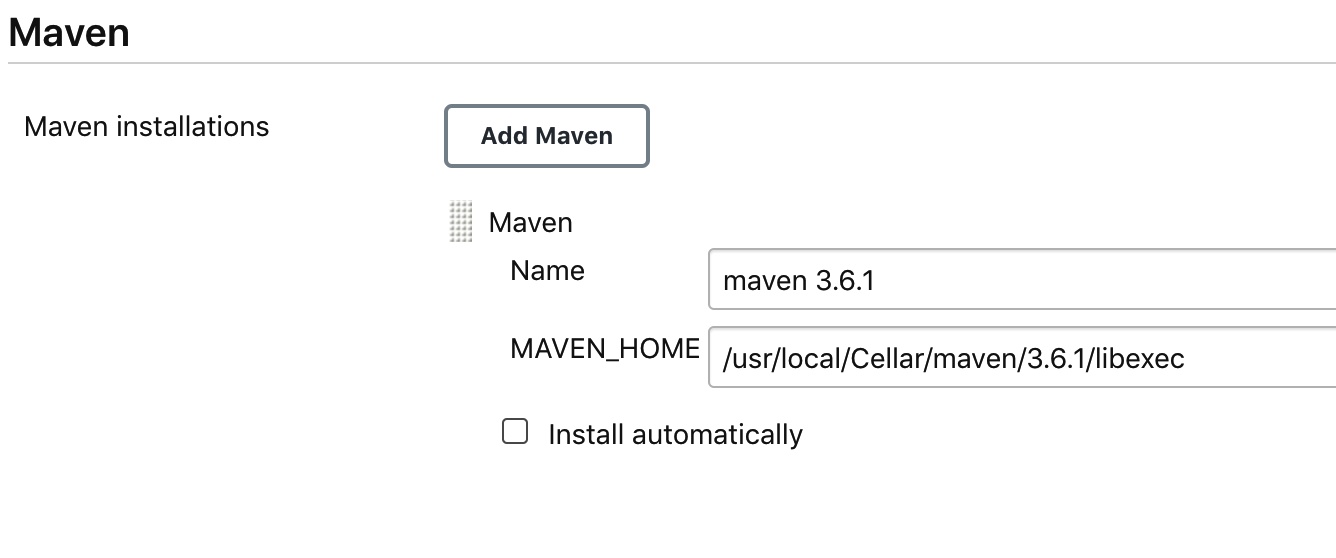

From the Jenkins Dashboard, go to Manage Jenkins → Global Tool Configuration to configure the JAVA_HOME and MAVEN_HOME environment variables, as seen in Figures 4-9 and 4-10. You can run the mvn -v command in your terminal to get both locations.

Figure 4-9. Configuring JAVA_HOME in Jenkins

Figure 4-10. Configuring

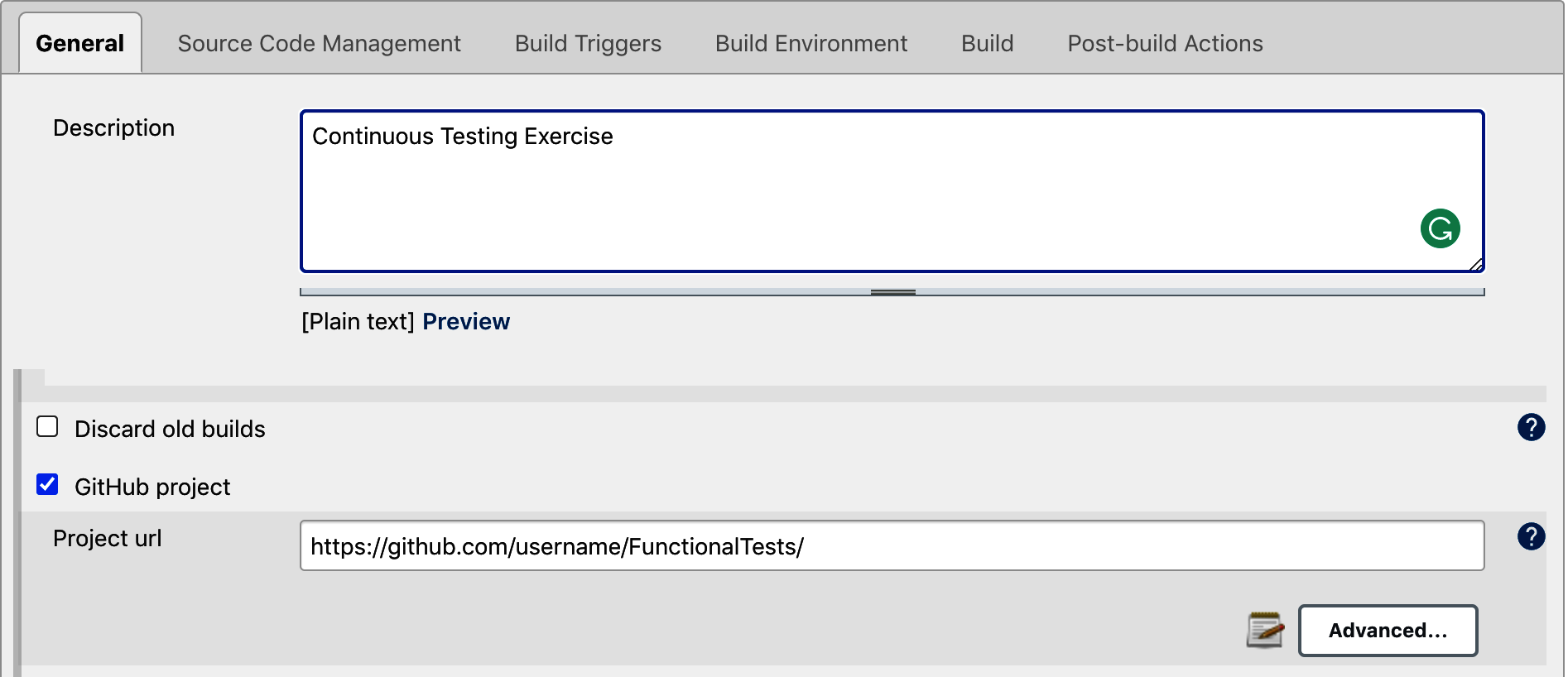

MAVEN_HOMEin JenkinsComing back to the Dashboard view, select the New Item option in the lefthand panel to create a new pipeline. Enter a name for the pipeline, say âFunctional Tests,â and choose the âFreestyle projectâ option. This will take you to the pipeline configuration page, as seen in Figure 4-11.

Figure 4-11. The Jenkins pipeline configuration page

Enter the following details to configure your pipeline:

On the General tab, add a pipeline description. Select “GitHub project” and enter your repository URL (without the .git extension).

On the Source Code Management tab, select Git and enter your repository URL (with the .git extension this time). Jenkins will use this URL to run git clone.

The Build Triggers tab provides a few options to configure when and how to kick off the pipeline automatically. For example, the Poll SCM option can be used to poll the Git repository at a fixed interval, such as every two minutes, to check for new changes and, if there are any, start the test run. The Build Periodically option can be used to schedule the test run at fixed intervals even if there are no new code changes; this is how you can configure nightly regression runs. Similarly, the “GitHub hook trigger for GITScm polling” option lets Jenkins start the pipeline when GitHub notifies it of new changes through a webhook. To keep it simple, choose Poll SCM and enter this value to poll the functional tests repository every two minutes:

H/2 * * * *

Since your Selenium WebDriver functional test framework uses Maven, select the “Invoke top-level Maven targets” option on the Build tab. Choose your local Maven, which you configured in Chapter 3. In the Goals field, enter the Maven lifecycle phase that the pipeline needs to run:

test

This will execute the mvn test command from the project directory.

The Post-build Actions tab is where you can chain multiple pipelines: for example, you can trigger the CFR tests pipeline after the functional tests pipeline has passed, and so create a complete CD pipeline.4
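To make the H/2 * * * * schedule used for Poll SCM more concrete: in Jenkins’s cron syntax, H stands for a value derived from a hash of the job name rather than a random number, so the offset stays stable for a given job while different jobs are spread across different minutes. The Java sketch below is a simplified illustration under a loud assumption: it uses String.hashCode() as a stand-in for Jenkins’s internal hash, so the exact minutes it prints will not match the ones Jenkins chooses. It only shows the shape of the expansion: a fixed offset below the step, then every step minutes.

```java
import java.util.ArrayList;
import java.util.List;

public class HashedCronIllustration {

    // Simplified stand-in for how Jenkins expands "H/step" in the minute field.
    // ASSUMPTION: String.hashCode() replaces Jenkins's internal hash, so the
    // offsets computed here will not match the minutes Jenkins actually picks.
    static List<Integer> pollingMinutes(String jobName, int step) {
        // A stable offset in [0, step), fixed for a given job name
        int offset = Math.floorMod(jobName.hashCode(), step);
        List<Integer> minutes = new ArrayList<>();
        for (int minute = offset; minute < 60; minute += step) {
            minutes.add(minute);
        }
        return minutes;
    }

    public static void main(String[] args) {
        // "H/2 * * * *": every two minutes, every hour, every day
        List<Integer> minutes = pollingMinutes("Functional Tests", 2);
        System.out.println(minutes.size() + " polls per hour, starting at minute " + minutes.get(0));
    }
}
```

Whatever offset the hash produces, a step of 2 yields 30 polls per hour, which is the behavior the Poll SCM setting relies on.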

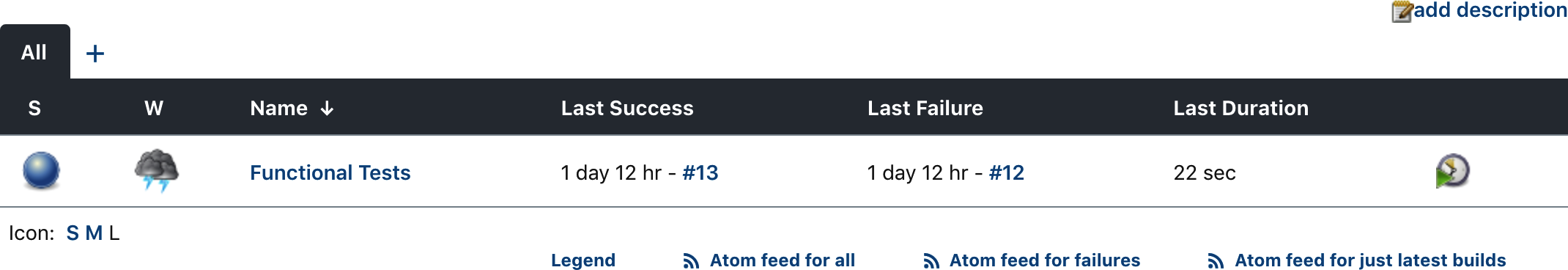

Save and navigate to the Dashboard view. You will see the pipeline created, as seen in Figure 4-12.

Figure 4-12. Your pipeline in the Jenkins Dashboard

Click the pipeline name in the Dashboard view, and on the landing page, select the Build Now option in the left panel. The pipeline will clone the repository on your local machine and execute the mvn test command. You can see the Chrome browser open and close as part of test execution.

Locate the Workspace folder on the same page. You’ll find the local cloned copy of the code from the repository and the reports generated after the tests are run in this folder; you can use these for debugging purposes.

In the Build History section at the bottom of the lefthand panel on the same page, select the number of the current build. On the build’s landing page, select Console Output in the left panel to view the live execution log, which is useful for debugging.

Congratulations, that completes your CI setup!

Along the same lines, you will have to add the stages of the respective tests (static code, acceptance, smoke, CFR) as per your continuous testing strategy to complete the end-to-end CD setup for the project. Ensure that the stages get triggered not only after the application code changes but also after configuration, infrastructure, and test code changes!
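To illustrate how such stages chain together, with each one running only if the previous stage passed, here is a minimal Java model. It is a conceptual sketch, not Jenkins’s API: the Stage record, the stage names, and the hard-coded pass/fail results are hypothetical stand-ins for real test runs, and the record syntax assumes Java 16 or later.

```java
import java.util.List;
import java.util.function.BooleanSupplier;

public class ChainedStages {

    // Hypothetical model of a CT pipeline stage: a name plus a check that
    // returns true when the stage's tests pass.
    record Stage(String name, BooleanSupplier run) {}

    // Runs the stages in order and stops at the first failure, so later
    // (typically slower) stages run only once earlier feedback is green.
    // Returns the name of the failed stage, or null if all stages passed.
    static String runPipeline(List<Stage> stages) {
        for (Stage stage : stages) {
            System.out.println("Running stage: " + stage.name());
            if (!stage.run().getAsBoolean()) {
                System.out.println("Stage failed: " + stage.name() + "; stopping the pipeline");
                return stage.name();
            }
        }
        System.out.println("All stages passed; the change is ready for deployment");
        return null;
    }

    public static void main(String[] args) {
        // Hypothetical results; in practice each supplier would invoke a test suite
        List<Stage> stages = List.of(
                new Stage("static code", () -> true),
                new Stage("acceptance", () -> true),
                new Stage("smoke", () -> false),
                new Stage("CFR", () -> true));
        runPipeline(stages);
    }
}
```

Stopping at the first failure is what keeps feedback fast: a broken smoke test is reported immediately instead of after a long CFR run that would be wasted anyway.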

The Four Key Metrics

The ultimate outcome from all this effort spent on setting up your CI/CD/CT processes (and adhering to the principles and etiquette laid out earlier) is the team qualifying as an elite or high-performing team according to the four key metrics (4KM) identified by Google’s DevOps Research and Assessment (DORA) team. DORA formulated the 4KM based on extensive research, and outlined how to use these metrics to quantify a software team’s performance level as either elite, high, medium, or low. The book Accelerate by Jez Humble, Gene Kim, and Nicole Forsgren is an excellent read to learn the details of the research.

In short, the four key metrics give us a handle on measuring a team’s delivery tempo and the stability of their releases. They are:

- Lead time

The time taken from code being committed to it being ready for production deployment

- Deployment frequency

The frequency at which the software is deployed to production or an app store

- Mean time to restore

The time taken to restore any service outages or recover from failures

- Change fail percentage

The percentage of changes released to production that require subsequent remediation, such as rollbacks to a previous version or hot fixes, or that cause a degradation in service quality

The first two metrics, lead time and deployment frequency, expose the delivery tempo of the team. They measure how quickly a team can deliver value to end users and how frequently it does so. However, in the rush to deliver value to customers, the team should not compromise on the stability of the software. The last two metrics, mean time to restore and change fail percentage, validate this by indicating the stability of the software being released. In today’s world, software failures are inevitable, and these metrics measure how quickly the team can recover from failures and how often new releases cause them. Together, the 4KM give a clear picture of a software team’s performance by measuring its speed, reactivity, and ability to deliver with quality and stability.
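As a rough illustration of how the four metrics can be derived from delivery data, the following Java sketch computes them from a list of deployment records. The Deployment record, its fields, and the sample data are hypothetical; real teams would pull this information from their CI/CD server, version control, and incident tracker. The sketch uses simple averages (a median is a common alternative that is less sensitive to outliers), uses the deployment timestamp as a proxy for “ready for production deployment,” and assumes Java 16 or later for records and Stream.toList().

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;

public class FourKeyMetrics {

    // Hypothetical record of one production deployment. timeToRestore is only
    // meaningful when failed is true (use Duration.ZERO otherwise).
    record Deployment(Instant committedAt, Instant deployedAt,
                      boolean failed, Duration timeToRestore) {}

    // Lead time: average time from commit to production deployment
    static Duration averageLeadTime(List<Deployment> deployments) {
        if (deployments.isEmpty()) {
            return Duration.ZERO;
        }
        Duration total = Duration.ZERO;
        for (Deployment d : deployments) {
            total = total.plus(Duration.between(d.committedAt(), d.deployedAt()));
        }
        return total.dividedBy(deployments.size());
    }

    // Deployment frequency: deployments per day over the observed period
    static double deploymentsPerDay(List<Deployment> deployments, int periodDays) {
        return (double) deployments.size() / periodDays;
    }

    // Mean time to restore: average restore time of the failed deployments
    static Duration meanTimeToRestore(List<Deployment> deployments) {
        List<Deployment> failures = deployments.stream().filter(Deployment::failed).toList();
        if (failures.isEmpty()) {
            return Duration.ZERO;
        }
        Duration total = Duration.ZERO;
        for (Deployment d : failures) {
            total = total.plus(d.timeToRestore());
        }
        return total.dividedBy(failures.size());
    }

    // Change fail percentage: share of deployments that needed remediation
    static double changeFailPercentage(List<Deployment> deployments) {
        if (deployments.isEmpty()) {
            return 0.0;
        }
        long failed = deployments.stream().filter(Deployment::failed).count();
        return 100.0 * failed / deployments.size();
    }

    public static void main(String[] args) {
        Instant t0 = Instant.parse("2024-01-01T09:00:00Z");
        // Hypothetical sample: four deployments over a two-day period, one of which failed
        List<Deployment> deployments = List.of(
                new Deployment(t0, t0.plus(Duration.ofHours(2)), false, Duration.ZERO),
                new Deployment(t0.plus(Duration.ofHours(3)), t0.plus(Duration.ofHours(7)), true, Duration.ofMinutes(30)),
                new Deployment(t0.plus(Duration.ofHours(24)), t0.plus(Duration.ofHours(27)), false, Duration.ZERO),
                new Deployment(t0.plus(Duration.ofHours(28)), t0.plus(Duration.ofHours(31)), false, Duration.ZERO));

        System.out.println("Lead time: " + averageLeadTime(deployments));              // PT3H
        System.out.println("Deploys per day: " + deploymentsPerDay(deployments, 2));   // 2.0
        System.out.println("Mean time to restore: " + meanTimeToRestore(deployments)); // PT30M
        System.out.println("Change fail %: " + changeFailPercentage(deployments));     // 25.0
    }
}
```

The sample data gives lead times of 2, 4, 3, and 3 hours, hence the 3-hour average; one failed deployment out of four gives the 25% change fail percentage.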

The targets for an elite team, per DORA research, are represented in Table 4-1.

| Metric | Target |
|---|---|
| Deployment frequency | On-demand (multiple deploys per day) |
| Lead time | Less than a day |
| Mean time to restore | Less than an hour |
| Change fail percentage | 0–15% |
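The targets in Table 4-1 can be turned into a simple automated check, for example as a rule on a team dashboard. The Java sketch below hard-codes the thresholds from the table; the class and method names and the way measurements are passed in are hypothetical. One interpretation is an explicit assumption: “on-demand (multiple deploys per day)” is approximated as more than one deployment per day.

```java
import java.time.Duration;

public class EliteTargets {

    // Thresholds taken from Table 4-1
    static final Duration MAX_LEAD_TIME = Duration.ofDays(1);
    static final Duration MAX_TIME_TO_RESTORE = Duration.ofHours(1);
    static final double MAX_CHANGE_FAIL_PERCENTAGE = 15.0;
    // ASSUMPTION: "on-demand (multiple deploys per day)" approximated as > 1 per day
    static final double MIN_DEPLOYS_PER_DAY = 1.0;

    // Returns true only if all four metrics fall within the elite targets
    static boolean meetsEliteTargets(double deploysPerDay, Duration leadTime,
                                     Duration timeToRestore, double changeFailPercentage) {
        return deploysPerDay > MIN_DEPLOYS_PER_DAY
                && leadTime.compareTo(MAX_LEAD_TIME) < 0
                && timeToRestore.compareTo(MAX_TIME_TO_RESTORE) < 0
                && changeFailPercentage <= MAX_CHANGE_FAIL_PERCENTAGE;
    }

    public static void main(String[] args) {
        // Hypothetical measurements for a team
        boolean elite = meetsEliteTargets(3.0, Duration.ofHours(5), Duration.ofMinutes(40), 10.0);
        System.out.println("Meets elite targets: " + elite); // true
    }
}
```

Requiring all four thresholds at once reflects the point made above: speed without stability, or stability without speed, does not qualify as elite performance.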

As discussed earlier, one of the main benefits of having a rigorous CI/CD/CT process is that your team will be able to deliver value to customers on demand. Similarly, as you’ve seen, when you place automated tests in the right application layers, your code can be tested as part of the continuous testing process and made ready for deployment within hours (i.e., your lead time will be less than a day). Also, with your functional and cross-functional requirements tests automated and run as part of the CT process, it becomes much easier to keep your change fail percentage well within the recommended range of 0–15%. Thus, the effort you put in on this front will help your team earn “elite” status, per the DORA definition. DORA research also shows that elite teams contribute to an organization’s success in terms of profit, share price, customer retention, and other criteria. And when the organization does well, it takes good care of its employees, right?

Key Takeaways

Here are the key takeaways from this chapter:

The continuous testing process validates the application quality in terms of both functional and cross-functional aspects in an automated fashion for every incremental change.

Continuous testing relies heavily on the continuous integration process. Continuous integration and testing, in turn, enable continuous delivery of software to customers on demand.

Continuous integration and testing processes require teams to follow strict principles and etiquette for them to be fruitful.

Plan your continuous testing process such that you get fast feedback in multiple loops continuously.

The benefits of continuous testing are numerous, and many of them (including establishing common quality goals across roles and teams, shared delivery ownership, and improved collaboration across distributed teams) are difficult to achieve otherwise.

Although DevOps engineers might be responsible for CI/CD setup and maintenance, it is vital for testers on the team to devise the continuous testing strategy and ensure the feedback loops are triggered correctly. Most importantly, they should keep a keen watch over the team’s CT practices to ensure the effort spent on creating and maintaining tests reaps the right benefits.

Following rigorous CI/CD/CT processes will lead your team to become an elite team, as defined by DORA research. And an elite team contributes to the success of the entire organization!

1 For more on this and other commonly prescribed CI/CD industry principles, see The DevOps Handbook (IT Revolution Press), by Gene Kim, Jez Humble, Patrick Debois, and John Willis.

2 Jez Humble and David Farley discuss such optimization techniques at greater length in Continuous Delivery (Addison-Wesley).

3 For further details, see Jez Humble, Gene Kim, and Nicole Forsgren’s book Accelerate (IT Revolution Press).

4 For more information on working with pipelines, see the Jenkins documentation.
