Chapter 4. Understanding Data Science: An Emerging Discipline for Data-Intensive Discovery

Over the past two decades, Data-Intensive Analysis (DIA)—also referred to as Big Data Analytics—has emerged not only as a basis for the Fourth Paradigm of engineering and scientific discovery, but as a basis for discovery in most human endeavors for which data is available. Though the idea originated in the 1960s, widespread deployment has occurred only recently, thanks to the emergence of big data and massive computing power. Data-Intensive Analysis is still in its infancy in its application and our understanding of it, and likewise in its development. Given the potential risks and rewards of DIA, and its breadth of application, it is imperative that we get it right.

The objective of this new Fourth Paradigm is more than simply acquiring data and extracting knowledge. Like its predecessor, the scientific method, the objective of the Fourth Paradigm is to investigate phenomena by acquiring new knowledge, and to integrate it with and use it to correct previous knowledge. It is now time to identify and understand the fundamentals. In my research, I have analyzed more than 30 large-scale use cases to understand current practical aspects, to gain insight into the fundamentals, and to address the fourth “V” of big data—veracity, or the accuracy of the data and the resulting analytics.

Data Science: A New Discovery Paradigm That Will Transform Our World

Big data has opened the door to profound change—to new ways of reasoning, problem solving, and processing that in turn bring new opportunities and challenges. But as was the case with its predecessor discovery paradigms, establishing this emerging Fourth Paradigm and the underlying principles and techniques of data science may take decades.

To better understand DIA and its opportunities and challenges, my research has focused on DIA use cases that are at very large scale—in the range where theory and practice may break. This chapter summarizes some key results of this research, related to understanding and defining data science as a body of principles and techniques with which to measure and improve the correctness, completeness, and efficiency of Data-Intensive Analysis.

Significance of DIA and Data Science

Data science is transforming discovery in many human endeavors, including healthcare, manufacturing, education, financial modeling, policing, and marketing [1][2]. It has been used to produce significant results in areas from particle physics (e.g., the Higgs boson), to identifying and resolving sleep disorders using Fitbit data, to recommenders for literature, theatre, and shopping. More than 50 national governments have established data-driven strategies as an official policy, in science and engineering [3] as well as in healthcare (e.g., the US National Institutes of Health and President Obama’s Precision Medicine Initiative for “delivering the right treatments, at the right time, every time to the right person”). The hope, supported by early results, is that data-driven techniques will accelerate the discovery of treatments to manage and prevent chronic diseases, and that these treatments will be more precise, tailored to specific individuals, and dramatically lower in cost.

Data science is being used to radically transform entire domains, such as medicine and biomedical research—as is stated as the purpose of the newly created Center for Biomedical Informatics at Harvard Medical School. It is also making an impact in economics [4], drug discovery [5], and many other domains. As a result of its successes and potential, data science is rapidly becoming a subdiscipline of most academic areas. These developments suggest a strong belief in the potential value of data science—but can it deliver?

The early successes and clearly stated expectations of data science are truly remarkable; however, its actual deployment, like that of many hot trends, is far less extensive than it might appear. According to Gartner’s 2015 survey of Big Data Management and Analytics, 60% of the Fortune 500 companies claim to have deployed data science, yet less than 20% have implemented consequent significant changes, and less than 1% have optimized its benefits. Gartner concludes that 85% of these companies will be unable to exploit big data in 2015. The vast majority of deployments address tactical aspects of existing processes and static business intelligence, rather than realizing the power of data science by discovering previously unforeseen value and identifying strategic advantages.

Illustrious Histories: The Origins of Data Science

Data science is in its infancy. Few individuals or organizations understand the potential of and the paradigm shift associated with data science, let alone understand it conceptually. The high rewards and equally high risks, and its pervasive application, make it imperative that we better understand data science—its models, methods, processes, and results.

Data science is inherently multidisciplinary. Its principal components include mathematics, statistics, and computer science—especially areas of artificial intelligence such as machine learning, data management, and high-performance computing. While these disciplines need to be evaluated in the new paradigm, they have long, illustrious histories.

Data analysis developed over 4,000 years ago, with origins in Babylon (17th–12th c. BCE) and India (12th c. BCE). Mathematical analysis originated in the 17th c. around the time of the Scientific Revolution. While statistics has its roots in the 5th c. BCE and the 18th c. CE, its application in data science originated in 1962 with John W. Tukey [6] and George Box [7]. These long, illustrious histories suggest that data science draws on well-established results that took decades or centuries to develop. To what extent do they (e.g., statistical significance) apply in this new context?

Data science constitutes a new paradigm in the sense of Thomas S. Kuhn’s scientific revolutions [8]. Data science’s predecessor paradigm, the scientific method, was approximately 2,000 years in the development, starting with Aristotle (384–322 BCE) and continuing through Ptolemy (1st c. CE) and the Bacons (13th, 16th c. CE). Today, data science is emerging following the ~1,000-year development of its three predecessor paradigms of scientific and engineering discovery: theory, experimentation, and simulation [9]. Data science has been developing over the course of the last 50 years, but it changed qualitatively in the late 20th century with the emergence of big data—data whose volumes, velocities, and variety current technologies, let alone humans, cannot handle efficiently. This chapter addresses another characteristic that current technologies and theories do not handle well—veracity.

What Could Possibly Go Wrong?

Do we understand the risks of recommending the wrong product, let alone the wrong medical diagnoses, treatments, or drugs? The risks of a result that fails to achieve its objectives may include losses in time, resources, customer satisfaction, customers, and potentially business collapse. The vast majority of data science applications face such small risks, however, that veracity has received little attention.

Far greater risks could be incurred if incorrect data science results are acted upon in critical contexts, such as drug discovery [10] and personalized medicine. Most scientists in these contexts are well aware of the risks of errors, and go to extremes to estimate and minimize them. The announcement of the “discovery” of the Higgs boson at CERN’s Large Hadron Collider (LHC) on July 4, 2012 might have suggested that the results were achieved overnight—they were not. The results took 40 years to achieve and included data science techniques developed over a decade and applied over big data by two independent projects, ATLAS and CMS, each of which was subsequently peer-reviewed and published [11][12] with a further year-long verification. To what extent do the vast majority of data science applications concern themselves with verification and error bounds, let alone understand the verification methods applied at CERN? Informal surveys of data scientists conducted in my research at data science conferences suggest that 80% of customers never ask for error bounds.

The existential risks of applying data science have been called out by world-leading authorities in institutions such as the Organisation for Economic Co-operation and Development and in the artificial intelligence (AI) [13][14][15][16] and legal [17] communities. The most extreme concerns have been stated by the Future of Life Institute, which has the objective of safeguarding life, developing optimistic visions of the future, and mitigating “existential risks facing humanity” from AI.

Given the potential risks and rewards of DIA and its breadth of application across conventional, empirical scientific and engineering domains, as well as across most human endeavors, we had better get this right! The scientific and engineering communities place high trust in their existing discovery paradigms, with well-defined measures of likelihood and confidence within relatively precise error estimates. Can we say the same for modern data science as a discovery paradigm, and for its results? A simple observation of the formal development of the processes and methods of its predecessors suggests that we cannot. Indeed, we do not know if, or under what conditions, the constituent disciplines—like statistics—may break down.

Do we understand DIA to the extent that we can assign probabilistic measures of likelihood to its results? With the scale and emerging nature of DIA-based discovery, how do we estimate the correctness and completeness of analytical results relative to a hypothesized discovery question? The underlying principles and techniques may not apply in this new context.

In summary, we do not yet understand DIA adequately to quantify the probability or likelihood that a projected outcome will occur within estimated error bounds. While the researchers at CERN used data science and big data to identify results, verification was ultimately empirical, as it must be in drug discovery [10] and other critical areas until analytical techniques are developed and proven robust.

Do We Understand Data Science?

Do we even understand what data science methods compute or how they work? Human thought is limited by the human mind. According to Miller’s Law [4], the human mind (short-term working memory) is capable of holding fewer than 10 (7 ± 2) concepts at one time. Hence, humans have difficulty understanding complex models involving more than 10 variables. The conventional process is to imagine a small number of variables, and then abstract or encapsulate that knowledge into a model that can subsequently be augmented with more variables. Thus, most scientific theories develop slowly over time into complex models. For example, Newton’s model of physics was extended over the course of three centuries, through Bohr, Einstein, Heisenberg, and more, up to Higgs—to form the Standard Model of particle physics. Scientific discovery in particle physics is wonderful, but it has taken over 300 years. Due to its complexity, no physicist has claimed to understand the entire Standard Model.

When humans analyze a problem, they do so with models with a limited number of variables. As the number of variables increases, it becomes increasingly difficult to understand the model and the potential combinations and correlations. Hence, humans limit the scale of their models and analyses—which are typically theory-driven—to a level of complexity that they can comprehend.

But what if the phenomenon is arbitrarily complex or beyond immediate human conception? I suspect that this is addressed iteratively, with one model (theory) being abstracted as the base for another more complex theory, and so on (standing on the shoulders of those who have gone before), as in the development of quantum physics from the discovery of elementary particles. That is, once the human mind understands a model, it can form the basis of a more complex model. This development under the scientific method scales at a rate limited by human conception, thus limiting the number of variables and the complexity. This is error-prone, since phenomena may not manifest at a certain level of complexity. Models correct at one scale may be wrong at a larger scale, or vice versa—a model wrong at one scale (and hence discarded) may become correct at a higher scale (a more complex model).

Machine learning algorithms can identify correlations between thousands, millions, or even billions of variables. This suggests that it is difficult or even impossible for humans to understand what (or how) these algorithms discover. Imagine trying to understand such a model that results from selecting some subset of the correlations on the assumption that they may be causal, and thus constitute a model of the phenomenon with high confidence of being correct with respect to some hypotheses, with or without error bars.
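One reason such correlation-driven models are hard to trust can be illustrated with a small experiment. The following is a minimal sketch, not part of the research described here: it generates purely random, unrelated variables and counts how many pairs still look strongly correlated by chance. The function names, the seed, and the sizes are illustrative assumptions, and the threshold of 0.444 is taken as roughly the conventional 5% two-sided cutoff for 20 samples.

```python
import itertools
import math
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def count_chance_correlations(n_vars, n_samples, threshold, seed=0):
    """Count variable pairs whose |r| exceeds threshold in pure noise."""
    rng = random.Random(seed)
    # Every column is independent Gaussian noise: no real relationship exists.
    columns = [[rng.gauss(0.0, 1.0) for _ in range(n_samples)]
               for _ in range(n_vars)]
    pairs = list(itertools.combinations(range(n_vars), 2))
    strong = sum(1 for i, j in pairs
                 if abs(pearson(columns[i], columns[j])) > threshold)
    return len(pairs), strong


# Illustrative sizes only: 40 variables already yield 780 pairs to examine.
n_pairs, n_strong = count_chance_correlations(n_vars=40, n_samples=20,
                                              threshold=0.444)
print(f"{n_strong} of {n_pairs} pairs exceed the threshold by chance alone")
```

Because the number of pairs grows quadratically with the number of variables, the count of chance correlations grows with it, which is one way to see why selecting correlations as candidate causes calls for explicit error estimates.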

Cornerstone of a New Discovery Paradigm

The Fourth Paradigm—eScience supported by data science—is paradigmatically different from its predecessor discovery paradigms. It provides revolutionary new ways [8] of thinking, reasoning, and processing; new modes of inquiry, problem solving, and decision making. It is not the Third Paradigm augmented by big data, but something profoundly different. Losing sight of this difference forfeits its power and benefits, and loses the perspective that it is “a revolution that will transform how we live, work, and think” [2].

Paradigm shifts are difficult to notice as they emerge. There are several ways to describe the trend. There is a shift of resources, from (empirically) discovering causality (why the phenomenon occurs)—the heart of the scientific method—to discovering interesting correlations (what might have occurred). This shift involves moving from a strategic perspective driven by human-generated hypotheses (theory-driven, top-down) to a tactical perspective driven by observations (data-driven, bottom-up).

Seen at their extremes, the scientific method involves testing hypotheses (theories) posed by scientists, while data science can be used to generate hypotheses to be tested based on significant correlations among variables that are identified algorithmically in the data. In principle, vast amounts of data and computing resources can be used to accelerate discovery simply by outpacing human thinking in both power and complexity.

Data science is rapidly gaining momentum due to the development of ever more powerful computing resources and algorithms, such as deep learning. Rather than optimizing existing processes, data science can be used to identify patterns that suggest unforeseen solutions.

However, even more compelling is the idea that goes one step beyond the simple version of this shift—namely, a symbiosis of both paradigms. For example, data science can be used to offer several highly probable hypotheses or correlations, from which we select those with acceptable error estimates that are worthy of subsequent empirical analysis. In turn, empiricism can be used to pursue these hypotheses until some converge and some diverge, at which point data science can be applied to refine or confirm the converging hypotheses, and the cycle starts again. Ideally, one would optimize the combination of theory-driven empirical analysis with data-driven analysis to accelerate discovery to a rate neither on its own could achieve.

While data science is a cornerstone of a new discovery paradigm, it may be conceptually and methodologically more challenging than its predecessors, since it involves everything included in its predecessor paradigms—modeling, methods, processes; measures of correctness, completeness, and efficiency—in a much more complex context, namely that of big data. Following well-established developments, we should try to find the fundamentals of data science—its principles and techniques—to help manage the complexity and guide its understanding and application.

Data Science: A Perspective

Since data science is in its infancy and is inherently multidisciplinary, there are naturally many definitions that emerge and evolve with the discipline. As definitions serve many purposes, it is reasonable to have multiple definitions, each serving different purposes. Most definitions of data science attempt to define why (its purpose), what (constituent disciplines), and how (constituent actions of discovery workflows).

A common definition of data science is the activity of extracting knowledge from data. While simple, this does not convey the larger goal of data science or its consequent challenges. A DIA activity is far more than a collection of actions, or the mechanical processes of acquiring and analyzing data. Like its predecessor paradigm, the scientific method, the purpose of data science and DIA activities is to investigate phenomena by acquiring new knowledge, and correcting and integrating it with previous knowledge—continually evolving our understanding of the phenomena, based on newly available data. We seldom start from scratch. Hence, discovering, understanding, and integrating data must precede extracting knowledge, and all at massive scale—i.e., largely by automated means.

The scientific method that underlies the Third Paradigm is a body of principles and techniques that provide the formal and practical bases of scientific and engineering discovery. The principles and techniques have been developed over hundreds of years, originating with Plato, and are still evolving today, with significant unresolved issues such as statistical significance (i.e., P values) and reproducibility.

While data science had its origins 50 years ago with Tukey [6] and Box [7], it started to change qualitatively less than two decades ago, with the emergence of big data and the consequent paradigm shift we’ve explored. The focus of this research into modern data science is on veracity—the ability to estimate the correctness, completeness, and efficiency of an end-to-end DIA activity and of its results. Therefore, we will use the following definition, in the spirit of Provost and Fawcett [5]:

Data science is a body of principles and techniques for applying data-intensive analysis to investigate phenomena, acquire new knowledge, and correct and integrate previous knowledge with measures of correctness, completeness, and efficiency of the derived results, with respect to some predefined (top-down) or emergent (bottom-up) specification (scope, question, hypothesis).

Understanding Data Science from Practice

Methodology to Better Understand DIA

Driven by a passion for understanding data science in practice, my year-long and ongoing research study has investigated over 30 very large-scale big data applications—most of which have produced or are daily producing significant value. The use cases include particle physics; astrophysics and satellite imagery; oceanography; economics; information services; several life sciences applications in pharmaceuticals, drug discovery, and genetics; and various areas of medicine including precision medicine, hospital studies, clinical trials, and intensive care unit and emergency room medicine.

The aim of this study is to investigate relatively well understood, successful use cases where correctness is critical and the big data context is at massive scale; such use cases constitute less than 5% of all deployed big data analytics projects. The focus is on these use cases, as we do not know where errors (outside normal scientific and analytical errors) may arise. There is a greater likelihood that established disciplines such as statistics and data management might break at very large scale, where errors due to failed fundamentals may be more obvious.

The breadth and depth of the use cases revealed strong, significant emerging trends, some of which are listed below. For some use case owners, these trends confirmed solutions and directions that they were already pursuing; for others, they suggested directions that they could not have seen without the perspective of 30+ use cases.

DIA Processes

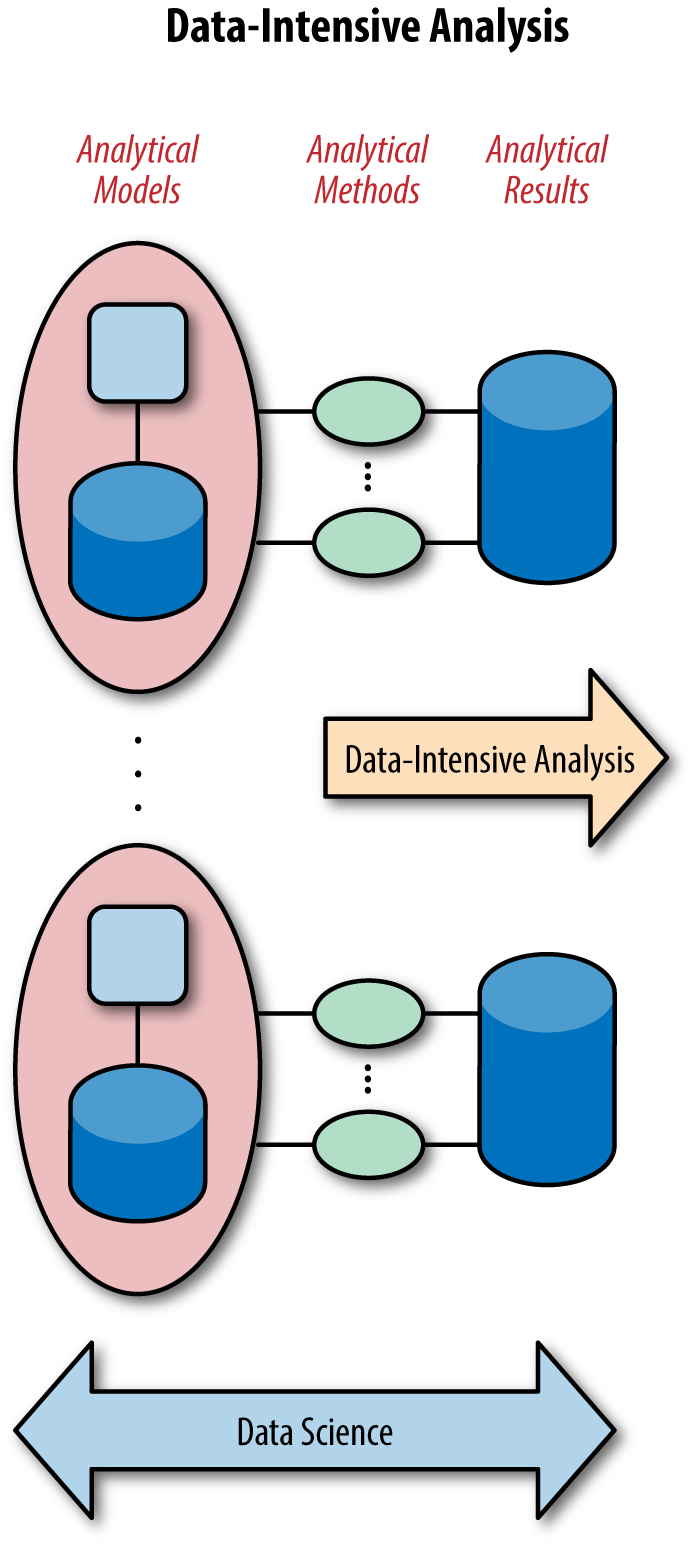

A Data-Intensive-Activity is an analytical process that consists of applying sophisticated analytical methods to large data sets that are stored under some analytical models (Figure 4-1). While this is the typical view of data science projects or DIA use cases, this analytical component of the DIA activity constitutes ∼20% of an end-to-end DIA pipeline or workflow. Thus, currently it consumes ∼20% of the resources required to complete a DIA analysis.

Figure 4-1. Conventional view of Data-Intensive Analysis

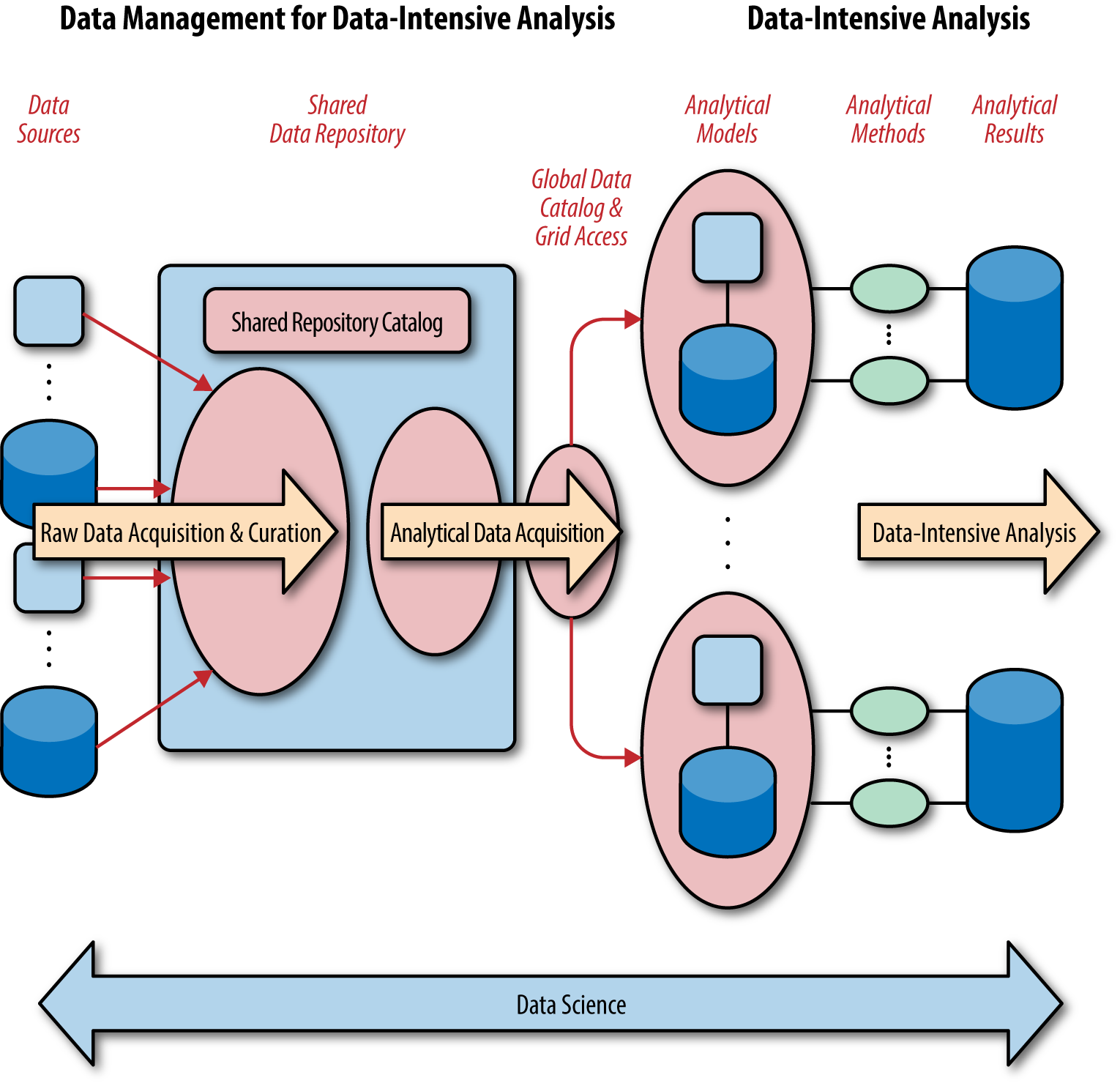

An end-to-end DIA activity (Figure 4-2) involves two data management processes that precede the DIA process, namely raw data acquisition and curation and analytical data acquisition. Raw data acquisition and curation starts with discovering and understanding data in data sources and ends with integrating and storing in a repository curated data that represents entities in the domain of interest, and metadata about those entities. Analytical data acquisition starts with discovering and understanding data within the shared repository and ends with storing the resulting information—specific entities and interpretations—into an analytical model to be used by the subsequent DIA process.

Figure 4-2. End-to-end Data-Intensive Analysis workflow

Sophisticated algorithms, such as machine learning algorithms, largely automate DIA processes, which have to be automated to process such large volumes of data using complex algorithms. Currently, raw data acquisition and curation and analytical data acquisition processes are far less automated, typically requiring 80% or more of the total resources to complete.

This understanding leads us to the following definitions:

- Data-Intensive Discovery (DID): The activity of using big data to investigate phenomena, to acquire new knowledge, and to correct and integrate previous knowledge. The “-Intensive” is added when the data is “at scale.” Theory-driven DID is the application of human-generated scientific, engineering, or other hypotheses to big data. Data-driven DID employs automatic hypothesis generation.

- Data-Intensive Analysis (DIA): The process of analyzing big data with analytical methods and models.

DID goes beyond the Third Paradigm of scientific or engineering discovery by investigating scientific or engineering hypotheses using DIA. A DIA activity is an experiment over data, thus requiring all aspects of a scientific experiment—e.g., experimental design, expressed over data (a.k.a. data-based empiricism).

- DIA process (workflow or pipeline): A sequence of operations that constitute an end-to-end DIA activity, from the acquisition of the source data to the quantified, qualified result.

Currently, ~80% of the effort and resources required for the entire DIA activity are dedicated to the two data management processes—areas where scientists/analysts are not experts. Emerging technologies, such as those for data curation at scale, aim to flip that ratio from 80:20 to 20:80, to let scientists do science and analysts do analysis. This requires an understanding of the data management processes and their correctness, completeness, and efficiency, in addition to those of the DIA process. Another obvious consequence of the present imbalance is that proportionally, 80% of the errors that could arise in DIA may arise in the data management processes, prior to DIA even starting.
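The end-to-end view can be made concrete with a small model of the three processes. This is a minimal sketch under stated assumptions: the split of the ~80% between the two data management processes, the per-stage chance of introducing no error, and the independence of stages are all hypothetical values chosen for illustration, not figures from the study.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    kind: str            # "data_management" or "analysis"
    effort_share: float  # fraction of total effort (illustrative)
    p_correct: float     # assumed chance the stage adds no error (illustrative)


# Hypothetical numbers; only the 80:20 split comes from the text.
PIPELINE = [
    Stage("raw data acquisition and curation", "data_management", 0.50, 0.95),
    Stage("analytical data acquisition", "data_management", 0.30, 0.97),
    Stage("DIA process", "analysis", 0.20, 0.99),
]


def effort_by_kind(stages, kind):
    """Total effort share of all stages of one kind."""
    return sum(s.effort_share for s in stages if s.kind == kind)


def end_to_end_correctness(stages):
    """Chance that no stage introduces an error, assuming independent stages."""
    return math.prod(s.p_correct for s in stages)


data_management_effort = effort_by_kind(PIPELINE, "data_management")
overall = end_to_end_correctness(PIPELINE)
print(f"data management effort: {data_management_effort:.0%}")
print(f"end-to-end correctness: {overall:.3f}")
```

Under these assumptions the end-to-end figure is lower than that of any single stage, which is why estimating veracity for the DIA process alone is not enough.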

Characteristics of Large-Scale DIA Use Cases

The focus of my research is successful, very large-scale, multiyear projects with hundreds to thousands of ongoing DIA activities. These activities are supported by a DIA ecosystem, consisting of a community of users (e.g., over 5,000 scientists in the ATLAS and CMS projects at CERN and similar numbers of scientists using the worldwide Cancer Genome Atlas) and technology (e.g., science gateways, collectively referred to in some branches of science as networked science). Some significant trends that have emerged from the analysis of these use cases are listed, briefly, below.

The typical view of data science appears to be based on the vast majority (~95%) of DIA use cases. While they share some characteristics with those in this study, there are fundamental differences, such as the concern for and due diligence associated with veracity.

Based on this study, data analysis appears to fall into three classes. Conventional data analysis over “small data” accounts for at least 95% of all data analysis, often using Microsoft Excel. DIA over big data has two subclasses: simple DIA, including the vast majority of DIA use cases mentioned above, and complex DIA, such as the use cases analyzed in this study, which are characterized by complex analytical models and a corresponding plethora of analytical methods. The models and methods are as complex as the phenomena being analyzed.

The most widely used DIA tools for simple cases profess to support analyst self-service in point-and-click environments, with some claiming “point us at the data and we will find the patterns of interest for you.” This model is infeasible in the use cases analyzed, which are characterized not only by being machine-driven and human-guided, but by extensive attempts to optimize this man–machine symbiosis for scale, cost, and precision (too much human-in-the-loop leads to errors; too little leads to nonsense).

DIA ecosystems are inherently multidisciplinary (ideally interdisciplinary), collaborative, and iterative. Not only does DIA (Big Data Analytics) require multiple disciplines—e.g., genetics, statistics and machine learning—but so too do the data management processes—e.g., data management, domain and machine-learning experts for data curation, statisticians for sampling, and so on.

In large-scale DIA ecosystems, a DIA is a virtual experiment [18]. Far from claims of simplicity and point-and-click self-service, most large-scale DIA activities reflect the complexity of the analysis at hand and are the result of long-term (months to years) experimental designs. These designs necessarily involve greater complexity than their empirical counterparts, to deal with scale, significance, hypotheses, null hypotheses, and deeper challenges, such as determining causality from correlations and identifying and dealing with biases, and often irrational human intervention.

Finally, veracity is one of the most significant challenges and critical requirements of all DIA ecosystems studied. While there are many complex methods in conventional data science to estimate veracity, most owners of the use cases studied expressed concern for adequately estimating veracity in modern data science. Most assume that all data is imprecise, and hence require probabilistic measures, error bars, and likelihood estimates for all results. More basically, most DIA ecosystem experts recognize that errors can arise across an end-to-end DIA activity and are investing substantially in addressing these issues in both the DIA process and the data management processes, which currently require significant human guidance.

An objective of this research is to discover the extent to which the characteristics of very large-scale, complex DIAs described here also apply to simple DIAs. There is a strong likelihood that they apply directly, but are difficult to detect—that is, that the principles and techniques of DIA apply equally to simple and complex DIA.

Looking Into a Use Case

Due to the detail involved, there is not space in this chapter (or this report) to fully describe a single use case considered in this study. However, let’s look into a single step of a use case, involving a virtual experiment conducted at CERN in the ATLAS project.

The heart of empirical science is experimental design. It starts by identifying, formulating, and verifying a worthy hypothesis to pursue. This first complex step typically involves a multidisciplinary team, called the collaborators for this virtual experiment, often from around the world and for more than a year. We will consider the second step, the construction of the control or background model (executable software and data) that creates the background (e.g., an executable or testable model and a given data set) required as the basis within which to search (analyze) for “signals” that would represent the phenomenon being investigated in the hypothesis. This control completely excludes the data of interest. That is, the data of interest (the signal region) is “blinded” completely so as not to bias the experiment. The background (control) is designed using software that simulates relevant parts of the Standard Model of particle physics, plus data from ATLAS selected with the appropriate signatures with the data of interest blinded.
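The idea of blinding the signal region can be sketched in a few lines. This is an illustration only and does not use ATLAS software: the `Event` type, the `mass_gev` field, and the window boundaries are hypothetical names and values introduced for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    event_id: int
    mass_gev: float  # hypothetical reconstructed quantity used for selection


def blind(events, signal_low, signal_high):
    """Split events into (visible, blinded) around the signal region.

    Events inside [signal_low, signal_high] are withheld so that they
    cannot influence the design of the background model.
    """
    visible = []
    blinded = []
    for event in events:
        if signal_low <= event.mass_gev <= signal_high:
            blinded.append(event)
        else:
            visible.append(event)
    return visible, blinded


# Hypothetical events and window, chosen only to show the mechanics.
events = [Event(1, 98.2), Event(2, 124.7), Event(3, 126.1), Event(4, 151.0)]
visible, blinded = blind(events, signal_low=120.0, signal_high=130.0)
print([e.event_id for e in visible])  # events usable for the background
print(len(blinded))                   # events withheld until unblinding
```

The design choice worth noting is that the withheld events are kept separate rather than discarded, since they are the data the verified model is eventually run against.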

Over time, ATLAS contributors have developed simulations of many parts of the Standard Model. Hence, constructing the model required for the background involves selecting and combining relevant simulations. If there is no simulation for some aspect that is required, then it must be requested or built by hand. Similarly, if there is no relevant data of interest in the experimental data repository, it must be requested from a subsequent capture from the detectors when the LHC is next fired up at the appropriate energy levels. This comes from a completely separate team running the (non-virtual) experiment.

The development of the background is approximately a one-person-year activity, as it involves the experimental design, the design and refinement of the model (software simulations), the selection of methods and tuning to achieve the correct signature (i.e., get the right data), the verification of the model (observing expected outcomes when tested), and dealing with errors (statistical and systematic) that arise from the hardware or process. The result of the background phase is a model approved by the collaborative to represent the background required by the experiment with the signal region blinded. The model is an “application” that runs on the ATLAS “platform” using ATLAS resources—libraries, software, simulations, and data, drawing on the ROOT framework, CERN’s core modeling and analysis infrastructure. It is verified by being executed under various testing conditions.

This is an incremental or iterative process, each step of which is reviewed. The resulting design document for the Top Quark experiment was approximately 200 pages of design choices, parameter settings, and results—both positive and negative! All experimental data and analytical results are probabilistic. All results have error bars; in particle physics they must be at least 5 sigma to be accepted. This explains the year of iteration in which analytical models are adjusted, analytical methods are selected and tuned, and results are reviewed by the collaborative. The next step is the actual virtual experiment. This too takes months. Surprisingly, once the data is unblinded (i.e., synthetic data is replaced in the region of interest with experimental data), the experimenter—often a PhD candidate—gets one and only one execution of the “verified” model over the experimental data.
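To give a sense of how demanding the 5 sigma criterion is, the threshold can be converted into a probability. The sketch below assumes that fluctuations follow a standard normal distribution and uses the one-sided tail, which is a common convention for this kind of statement; the function name is an illustrative choice.

```python
import math


def one_sided_tail_probability(n_sigma):
    """P(Z >= n_sigma) for a standard normal variable Z."""
    return 0.5 * math.erfc(n_sigma / math.sqrt(2.0))


p5 = one_sided_tail_probability(5.0)
print(f"5 sigma: p = {p5:.3e}, about 1 in {1.0 / p5:,.0f}")
```

Under these assumptions, 5 sigma corresponds to a probability of about 2.9 × 10⁻⁷, or roughly 1 in 3.5 million, that a fluctuation at least this large arises by chance.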

Hopefully, this portion of a use case illustrates that Data-Intensive Analysis is a complex but critical tool in scientific discovery, used with a well-defined understanding of veracity. It must stand up to scrutiny that evaluates whether the experiment—consisting of all models, methods, and data with probabilistic results and error bounds better than 5 sigma—is adequate to be accepted by Science or Nature as demonstrating that the hypothesized correlation is causal.

Research for an Emerging Discipline

The next step in this research follows from the definition of data science. The research aims to better understand the theory and practice of this emerging discipline, to understand and address its opportunities and challenges, and to guide its development. Modern data science builds on conventional data science and on all of its constituent disciplines required to design, verify, and operate end-to-end DIA activities, including both data management and DIA processes, in a DIA ecosystem for a shared community of users. Each discipline must be considered with respect to what it contributes to investigating phenomena, acquiring new knowledge, and correcting and integrating new with previous knowledge. Each operation must be understood with respect to the level of correctness, completeness, and efficiency that can be estimated.

This research involves identifying relevant principles and techniques. Principles concern the theories that are established formally—e.g., mathematically—and possibly demonstrated empirically. Techniques involve the application of wisdom [19]; i.e., domain knowledge, art, experience, methodologies, and practice—often called best practices. The principles and techniques, especially those established for conventional data science, must be verified and, if required, extended, augmented, or replaced for the new context of the Fourth Paradigm—especially its volumes, velocities, and variety. For example, new departments at MIT, Stanford, and the University of California, Berkeley, are conducting such research under what some are calling 21st-century statistics.

A final, stimulating challenge is what is called metamodeling or metatheory. This area emerged in the physical sciences in the 1980s and subsequently in statistics and machine learning and is now being applied in other areas. Metamodeling arises when using multiple analytical models and multiple analytical methods to analyze different perspectives or characteristics of the same phenomenon. This extremely natural and useful methodology, called ensemble modeling, is required in many physical sciences, statistics, and AI, and should be explored as a fundamental modeling methodology.
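As a minimal sketch of the ensemble idea, the following Python example combines several analytical models of the same phenomenon by averaging their predictions and reporting their disagreement. The three models and their coefficients are invented for illustration, and simple averaging is only one of many ways to combine models; it is an assumption of this sketch, not a method prescribed by the text.

```python
from statistics import mean, stdev


# Three hypothetical models of the same phenomenon, each a different view of it.
def linear_model(x):
    return 2.0 * x + 1.0


def quadratic_model(x):
    return 0.1 * x * x + 1.5 * x + 1.2


def offset_model(x):
    return 2.1 * x + 0.8


def ensemble_predict(models, x):
    """Return the mean prediction and the spread across the models."""
    predictions = [model(x) for model in models]
    return mean(predictions), stdev(predictions)


models = [linear_model, quadratic_model, offset_model]
estimate, spread = ensemble_predict(models, 3.0)
print(f"ensemble estimate: {estimate:.2f}, spread: {spread:.2f}")
```

The spread gives a rough indication of how much the individual models disagree, which is one reason ensembles are useful when no single model is known to be correct.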

Acknowledgment

I gratefully acknowledge the brilliant insights and improvements proposed by Prof. Jennie Duggan of Northwestern University and Prof. Thilo Stadelmann of the Zurich University of Applied Sciences.

1 M.I. Jordan and T.M. Mitchell. 2015. Machine learning: Trends, perspectives, and prospects. Science 349(6245), 255–260.

2 V. Mayer-Schönberger and K. Cukier. 2013. Big Data: A Revolution That Will Transform How We Live, Work, and Think. New York: Houghton Mifflin Harcourt.

3 National Science Foundation. 2008. Accelerating discovery in science and engineering through petascale simulations and analysis (PetaApps). Posted July 28, 2008.

4 G.A. Miller. 1956. The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychological Review 63(2), 81–97.

5 F. Provost and T. Fawcett. 2013. Data science and its relationship to big data and data-driven decision making. Big Data 1(1), 51–59.

6 J.W. Tukey. 1962. The future of data analysis. Annals of Mathematical Statistics 33(1), 1–67.

7 G.E.P. Box. 1976. Science and statistics. Journal of the American Statistical Association 71(356), 791–799.

8 T.S. Kuhn. 1996. The Structure of Scientific Revolutions. 3rd ed. Chicago, IL: University of Chicago Press.

9 J. Gray. 2009. Jim Gray on eScience: A transformed scientific method. In A.J.G. Hey, S. Tansley, and K.M. Tolle (Eds.), The Fourth Paradigm: Data-Intensive Scientific Discovery. Microsoft Research.

10 S. Spangler et al. 2014. Automated hypothesis generation based on mining scientific literature. In Proceedings of the 20th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’14). New York: ACM.

11 G. Aad et al. 2012. Observation of a new particle in the search for the Standard Model Higgs boson with the ATLAS detector at the LHC. Physics Letters B 716(1), 1–29.

12 S. Chatrchyan et al. 2012. Observation of a new boson at a mass of 125 GeV with the CMS experiment at the LHC. Physics Letters B 716(1), 30–61.

13 J. Bohannon. 2015. Fears of an AI pioneer. Science 349(6245), 252.

14 S.J. Gershman, E.J. Horvitz, and J.B. Tenenbaum. 2015. Computational rationality: A converging paradigm for intelligence in brains, minds, and machines. Science 349(6245), 273–278.

15 E. Horvitz and D. Mulligan. 2015. Data, privacy, and the greater good. Science 349(6245), 253–255.

16 J. Stajic, R. Stone, G. Chin, and B. Wible. 2015. Rise of the machines. Science 349(6245), 248–249.

17 N. Diakopoulos. 2014. Algorithmic accountability reporting: On the investigation of black boxes. Tow Center.

18 J. Duggan and M. Brodie. 2015. Hephaestus: Data reuse for accelerating scientific discovery. In CIDR 2015.

19 B. Yu. 2015. Data wisdom for data science. ODBMS.org.
