Chapter 4. Eventing

So far we’ve only sent basic HTTP requests to our applications, and that’s a perfectly valid way to consume functions on Knative. However, the loosely coupled nature of serverless also fits an event-driven architecture. Perhaps we want to invoke our function when a file is uploaded to an FTP server. Or maybe any time we make a sale, we need to invoke a function to process the payment and update our inventory. Rather than having our applications and functions handle the logic of watching for these events, we can instead express interest in certain events and let Knative notify us when they occur.

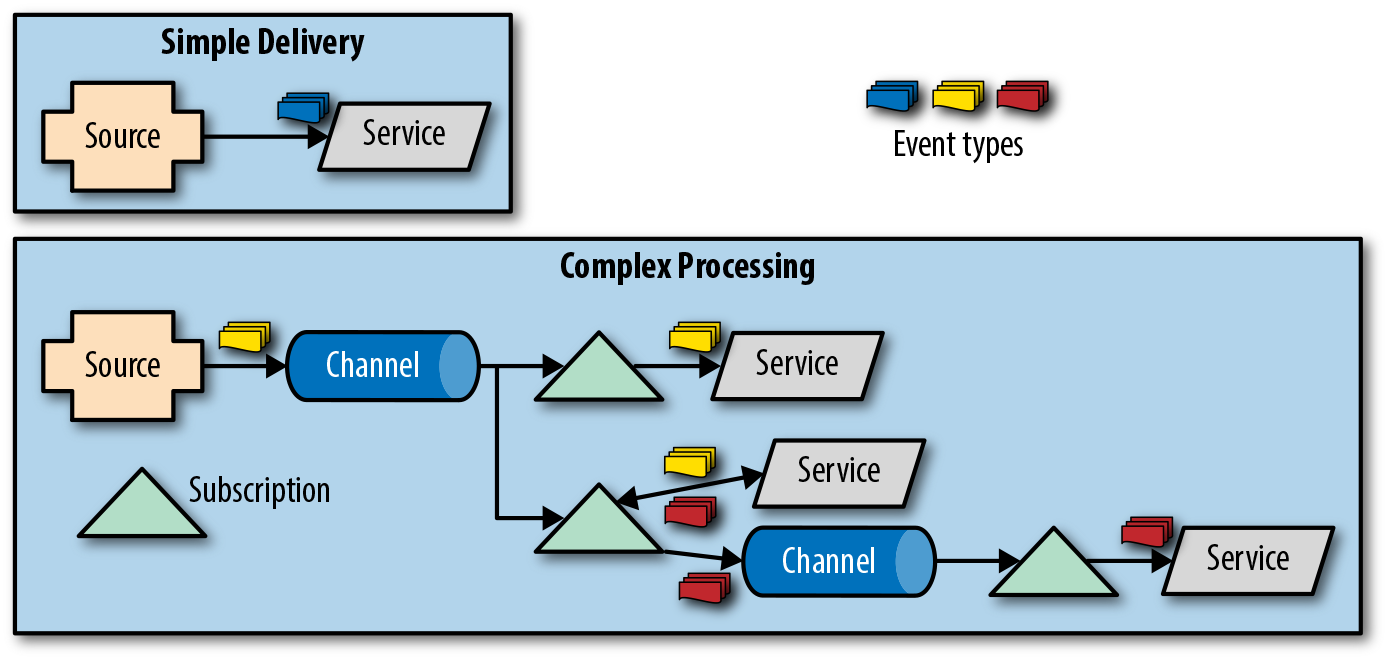

Doing this on your own would be quite a bit of work and implementation-specific coding. Luckily, Knative provides a layer of abstraction that makes it easy to consume events. Instead of requiring you to write code specific to your message broker of choice, Knative simply delivers an “event.” Your application doesn’t have to care where it came from or how it got there, only that it happened. To accomplish this, Knative introduces three new concepts: Sources, Channels, and Subscriptions.

Sources

Sources are, as you may have guessed, the source of the events. They’re how we define where events are generated and how they’re delivered to those interested in them. The Knative teams have developed a number of Sources that are provided right out of the box. To name just a few:

- GCP PubSub: Subscribes to a topic in Google’s PubSub service and listens for messages.
- Kubernetes Events: Provides a feed of all events happening in the Kubernetes cluster.
- GitHub: Watches for events in a GitHub repository, such as pull requests, pushes, and creation of releases.
- Container Source: In case you need to create your own Event Source, Knative has a further abstraction, the Container Source. This allows you to easily create your own Event Source, packaged as a container. See “Building a Custom Event Source”.

This is just a subset of the available Event Sources, and the list is growing quickly. You can find a current list of Event Sources in the Knative ecosystem in the Knative Eventing documentation.

Let’s take a look at a simple demo that uses the Kubernetes Events Source and logs the events to STDOUT. We’ll deploy a function that listens for POST requests on port 8080 and echoes each request body back in the response and to the log, as shown in Example 4-1.

Example 4-1. knative-eventing-demo/app.go

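```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
)

// handlePost echoes the request body back to the caller and logs it,
// so every event delivered to this Service shows up in its logs.
func handlePost(rw http.ResponseWriter, req *http.Request) {
	defer req.Body.Close()
	body, err := io.ReadAll(req.Body)
	if err != nil {
		http.Error(rw, "failed to read request body", http.StatusBadRequest)
		return
	}
	fmt.Fprintf(rw, "%s", body)
	log.Printf("%s", body)
}

func main() {
	log.Print("Starting server on port 8080...")
	http.HandleFunc("/", handlePost)
	log.Fatal(http.ListenAndServe(":8080", nil))
}
```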
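Before building and deploying the image, it can be handy to exercise the handler locally. The sketch below assumes you are happy to copy the Example 4-1 handler (reading the body with `io.ReadAll`) into a standalone program and drive it with the standard library's `net/http/httptest` package; the `echo` helper is a hypothetical name used only for illustration.

```go
package main

import (
	"fmt"
	"io"
	"log"
	"net/http"
	"net/http/httptest"
	"strings"
)

// handlePost is a copy of the Example 4-1 handler: echo the body and log it.
func handlePost(rw http.ResponseWriter, req *http.Request) {
	defer req.Body.Close()
	body, err := io.ReadAll(req.Body)
	if err != nil {
		http.Error(rw, "failed to read request body", http.StatusBadRequest)
		return
	}
	fmt.Fprintf(rw, "%s", body)
	log.Printf("%s", body)
}

// echo (hypothetical helper) sends payload to handlePost in-process,
// without opening a network port, and returns the response body.
func echo(payload string) string {
	req := httptest.NewRequest(http.MethodPost, "/", strings.NewReader(payload))
	rec := httptest.NewRecorder()
	handlePost(rec, req)
	return rec.Body.String()
}

func main() {
	fmt.Println(echo("Hello, Eventing")) // prints: Hello, Eventing
}
```

Because the handler only sees an `*http.Request`, the same check works whether the body later comes from curl or from an event.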

We’ll deploy this Service just as we would any other, shown in Example 4-2.

Example 4-2. knative-eventing-demo/service.yaml

```yaml
apiVersion: serving.knative.dev/v1alpha1
kind: Service
metadata:
  name: knative-eventing-demo
spec:
  runLatest:
    configuration:
      revisionTemplate:
        spec:
          container:
            image: docker.io/gswk/knative-eventing-demo:latest
```

```shell
$ kubectl apply -f service.yaml
```

So far, no surprises. We can even send requests to this Service like we have done in the previous two chapters:

```shell
$ curl $SERVICE_IP -H "Host: knative-eventing-demo.default.example.com" -XPOST -d "Hello, Eventing"
Hello, Eventing
```
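The important detail in the curl call is the `Host` header: the ingress gateway uses it to route the request to `knative-eventing-demo`. As a rough Go equivalent (a sketch, assuming `SERVICE_IP` is set in the environment exactly as for curl; `newEventingRequest` is a hypothetical helper), note that Go sets this header through `req.Host` rather than `req.Header`.

```go
package main

import (
	"fmt"
	"io"
	"net/http"
	"os"
	"strings"
)

// newEventingRequest (hypothetical helper) builds a POST to the ingress IP
// with the Host header overridden so the gateway can route it.
func newEventingRequest(serviceIP, host, payload string) (*http.Request, error) {
	req, err := http.NewRequest(http.MethodPost, "http://"+serviceIP, strings.NewReader(payload))
	if err != nil {
		return nil, err
	}
	// Go ignores req.Header["Host"]; the outgoing Host header comes from req.Host.
	req.Host = host
	return req, nil
}

func main() {
	req, err := newEventingRequest(os.Getenv("SERVICE_IP"),
		"knative-eventing-demo.default.example.com", "Hello, Eventing")
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		fmt.Fprintln(os.Stderr, err)
		os.Exit(1)
	}
	fmt.Println(string(body))
}
```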

Next, we can set up the Kubernetes Event Source. Different Event Sources will have different requirements when it comes to configuration and authentication. The GCP PubSub source, for example, requires information to authenticate to GCP. For the Kubernetes Event Source, we’ll need to create a Service Account that has permission to read the events happening inside of our Kubernetes cluster. Like we did in Chapter 3, we define this Service Account in YAML and apply it to our cluster, shown in Example 4-3.

Example 4-3. knative-eventing-demo/serviceaccount.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: events-sa
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  creationTimestamp: null
  name: event-watcher
rules:
- apiGroups:
  - ""
  resources:
  - events
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  creationTimestamp: null
  name: k8s-ra-event-watcher
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: event-watcher
subjects:
- kind: ServiceAccount
  name: events-sa
  namespace: default
```

```shell
$ kubectl apply -f serviceaccount.yaml
```
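To see why the `event-watcher` Role is enough for the Source, and no more, it helps to spell out how an RBAC rule is matched. The sketch below is a simplified model, not the Kubernetes authorizer: a request is allowed if some rule lists its API group, resource, and verb (or `"*"`), and it ignores details such as `resourceNames` and subresources. `PolicyRule` here is a pared-down, hypothetical type.

```go
package main

import "fmt"

// PolicyRule is a pared-down, hypothetical mirror of one RBAC rule.
type PolicyRule struct {
	APIGroups []string
	Resources []string
	Verbs     []string
}

// contains reports whether v appears in list, treating "*" as a wildcard.
func contains(list []string, v string) bool {
	for _, item := range list {
		if item == v || item == "*" {
			return true
		}
	}
	return false
}

// allows reports whether any rule grants verb on resource in apiGroup.
func allows(rules []PolicyRule, apiGroup, resource, verb string) bool {
	for _, r := range rules {
		if contains(r.APIGroups, apiGroup) && contains(r.Resources, resource) && contains(r.Verbs, verb) {
			return true
		}
	}
	return false
}

func main() {
	// The event-watcher Role from Example 4-3; "" is the core API group.
	eventWatcher := []PolicyRule{{
		APIGroups: []string{""},
		Resources: []string{"events"},
		Verbs:     []string{"get", "list", "watch"},
	}}
	fmt.Println(allows(eventWatcher, "", "events", "watch"))  // true
	fmt.Println(allows(eventWatcher, "", "events", "delete")) // false
	fmt.Println(allows(eventWatcher, "", "pods", "list"))     // false
}
```

The Source only needs to read and watch events, so granting `get`, `list`, and `watch` on `events` keeps `events-sa` narrowly scoped.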

With our “events-sa” Service Account in place, all that’s left is to define our actual source, which in our case is an instance of the Kubernetes Event Source. An instance of an Event Source runs with specific configuration; here, that includes the Service Account we just created. We can see what our configuration looks like in Example 4-4.

Example 4-4. knative-eventing-demo/source.yaml

```yaml
apiVersion: sources.eventing.knative.dev/v1alpha1
kind: KubernetesEventSource
metadata:
  name: k8sevents
spec:
  namespace: default
  serviceAccountName: events-sa
  sink:
    apiVersion: eventing.knative.dev/v1alpha1
    kind: Channel
    name: knative-eventing-demo-channel
```

Most of this is fairly straightforward. We define the kind of object we’re creating as a KubernetesEventSource, give it the name k8sevents, and pass along some instance-specific configuration, such as the namespace we should run in and the Service Account we should use. There is one new thing you may have noticed, though: the sink configuration.

Sinks define where we want events to be sent. A sink is a Kubernetes ObjectReference, or more simply, a way of addressing another predefined object in Kubernetes. When working with Event Sources in Knative, this will generally be either a Service (if we want to send events directly to an application running on Knative) or a yet-to-be-introduced component, a Channel.
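An ObjectReference names an object by its API version, kind, namespace, and name, so a sink only works if an object with exactly that identity exists. The toy sketch below models that lookup with a map keyed by the reference; the `ObjectReference` struct is a trimmed-down illustration, `resolveSink` is hypothetical, and the address string is a placeholder, not how Knative actually computes a destination. The namespace is assumed to be the Source's own (`default`).

```go
package main

import "fmt"

// ObjectReference is a trimmed-down, illustrative version of the fields
// used to address another Kubernetes object.
type ObjectReference struct {
	APIVersion string
	Kind       string
	Namespace  string
	Name       string
}

// resolveSink (hypothetical) looks up the referenced object; a reference
// that doesn't match an existing object cannot receive events.
func resolveSink(existing map[ObjectReference]string, ref ObjectReference) (string, error) {
	addr, ok := existing[ref]
	if !ok {
		return "", fmt.Errorf("sink %s %s %q not found in namespace %q",
			ref.APIVersion, ref.Kind, ref.Name, ref.Namespace)
	}
	return addr, nil
}

func main() {
	channel := ObjectReference{
		APIVersion: "eventing.knative.dev/v1alpha1",
		Kind:       "Channel",
		Namespace:  "default",
		Name:       "knative-eventing-demo-channel",
	}
	// Placeholder value only; not a real endpoint.
	existing := map[ObjectReference]string{channel: "<address of knative-eventing-demo-channel>"}

	addr, err := resolveSink(existing, channel)
	fmt.Println(addr, err)

	typo := channel
	typo.Name = "knative-eventing-demo-chanel"
	_, err = resolveSink(existing, typo)
	fmt.Println(err)
}
```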

Channels

Now that we’ve defined a source for our events, we need somewhere to send them. While you can send events straight to a Service, this means it’s up to you to handle retry logic and queuing. And what happens when an event is sent to your Service and it happens to be down? What if you want to send the same events to multiple Services? To answer all of these questions, Knative introduces the concept of Channels.

Channels handle buffering and persistence, helping ensure that events are delivered to their intended Services even if a Service is down. Channels are also an abstraction between our code and the underlying messaging solution. This means we could swap the backing system between something like Kafka and RabbitMQ without writing code specific to either one. Continuing with our demo, we’ll set up a Channel to which we’ll send all of our events, as shown in Example 4-5. Notice that this Channel matches the sink we defined in our Event Source in Example 4-4.
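To make the buffering and fan-out behavior concrete, here is a toy, in-process model of what a Channel provides. It is not Knative's implementation: it assumes events are plain strings, keeps one queue per subscriber so every subscriber gets its own copy, and retries failed deliveries a fixed number of times (no backoff) while leaving undelivered events buffered. `Channel`, `Subscriber`, and `ErrUnavailable` are hypothetical names.

```go
package main

import (
	"errors"
	"fmt"
)

// ErrUnavailable is what a toy subscriber returns while it is "down".
var ErrUnavailable = errors.New("subscriber unavailable")

// Subscriber receives one event and reports whether delivery succeeded.
type Subscriber func(event string) error

// subscription holds the events still waiting to reach one subscriber.
type subscription struct {
	deliver Subscriber
	pending []string
}

// Channel buffers each event once per subscriber (fan-out) and retries.
type Channel struct {
	subs        []*subscription
	maxAttempts int
}

func (c *Channel) Subscribe(s Subscriber) {
	c.subs = append(c.subs, &subscription{deliver: s})
}

// Publish queues a copy of the event for every current subscriber.
func (c *Channel) Publish(event string) {
	for _, sub := range c.subs {
		sub.pending = append(sub.pending, event)
	}
}

// Dispatch tries each buffered event; failures stay queued for next time.
func (c *Channel) Dispatch() {
	for _, sub := range c.subs {
		var stillPending []string
		for _, event := range sub.pending {
			if !deliverWithRetry(sub.deliver, event, c.maxAttempts) {
				stillPending = append(stillPending, event)
			}
		}
		sub.pending = stillPending
	}
}

func deliverWithRetry(s Subscriber, event string, maxAttempts int) bool {
	for attempt := 0; attempt < maxAttempts; attempt++ {
		if s(event) == nil {
			return true
		}
	}
	return false
}

func main() {
	ch := &Channel{maxAttempts: 3}
	var gotA, gotB []string
	serviceBUp := false
	ch.Subscribe(func(e string) error { gotA = append(gotA, e); return nil })
	ch.Subscribe(func(e string) error {
		if !serviceBUp {
			return ErrUnavailable
		}
		gotB = append(gotB, e)
		return nil
	})
	ch.Publish("pod created")
	ch.Dispatch() // A gets the event; B is down, so its copy stays buffered
	serviceBUp = true
	ch.Dispatch()           // B is back and receives the buffered event
	fmt.Println(gotA, gotB) // [pod created] [pod created]
}
```

The sender only calls `Publish`; whether zero, one, or many Services are listening, and whether they are up, is the Channel's problem rather than the sender's.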

Example 4-5. knative-eventing-demo/channel.yaml

```yaml
apiVersion: eventing.knative.dev/v1alpha1
kind: Channel
metadata:
  name: knative-eventing-demo-channel
spec:
  provisioner:
    apiVersion: eventing.knative.dev/v1alpha1
    kind: ClusterChannelProvisioner
    name: in-memory-channel
```

```shell
$ kubectl apply -f channel.yaml
```

Here we create a Channel named knative-eventing-demo-channel and define the type of Channel we’d like to create, in this case an in-memory-channel. As mentioned before, a major goal of eventing in Knative is that it’s completely abstracted away from the underlying infrastructure, which means making the messaging service backing our Channels pluggable. This is done through implementations of the ClusterChannelProvisioner, a pattern for defining how Knative should communicate with our messaging services. Our demo uses the in-memory-channel provisioner, but Knative ships with several options for the services backing our Channels:

- in-memory-channel: Handled completely in memory inside our Kubernetes cluster, without relying on a separate running service to deliver events. Great for development, but not recommended for production.
- GCP PubSub: Uses Google’s hosted PubSub service to deliver messages and only needs access to a GCP account.
- Kafka: Sends events to a running Apache Kafka cluster, an open source distributed streaming platform with great message queue capabilities.
- NATS: Sends events to a running NATS cluster, an open source messaging system that can deliver and consume messages in a wide variety of patterns and configurations.
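The pluggability described above is the familiar "program against an interface" pattern. As a sketch of the idea only (not the ClusterChannelProvisioner API), the hypothetical `MessageBus` interface below stands in for whichever backing service a Channel uses; the in-memory implementation loosely mirrors in-memory-channel, and code such as `forwardEvent` depends only on the interface. No Kafka, NATS, or PubSub implementation is shown.

```go
package main

import "fmt"

// MessageBus is a hypothetical stand-in for a Channel's backing service.
type MessageBus interface {
	Publish(topic string, msg []byte) error
	Drain(topic string) [][]byte
}

// inMemoryBus keeps messages in a map: fine for development, and
// everything is lost when the process exits.
type inMemoryBus struct {
	topics map[string][][]byte
}

func newInMemoryBus() *inMemoryBus {
	return &inMemoryBus{topics: make(map[string][][]byte)}
}

func (b *inMemoryBus) Publish(topic string, msg []byte) error {
	b.topics[topic] = append(b.topics[topic], msg)
	return nil
}

// Drain returns and removes all messages currently queued on topic.
func (b *inMemoryBus) Drain(topic string) [][]byte {
	msgs := b.topics[topic]
	delete(b.topics, topic)
	return msgs
}

// forwardEvent depends only on MessageBus, so swapping the backing
// implementation requires no change here.
func forwardEvent(bus MessageBus, channel string, event []byte) error {
	return bus.Publish(channel, event)
}

func main() {
	var bus MessageBus = newInMemoryBus()
	if err := forwardEvent(bus, "knative-eventing-demo-channel", []byte("hello")); err != nil {
		fmt.Println(err)
		return
	}
	for _, msg := range bus.Drain("knative-eventing-demo-channel") {
		fmt.Println(string(msg)) // hello
	}
}
```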

With these pieces in place, one question remains: How do we get our events from our Channel to our Service?

Subscriptions

We have our Event Source sending events to a Channel and a Service ready to process them, but we don’t yet have a way to get our events from the Channel to the Service. Knative allows us to define a Subscription for just this scenario. Subscriptions are the glue between Channels and Services, instructing Knative how our events should be piped through the entire system. Figure 4-1 shows an example of how Subscriptions can be used to route events to multiple applications.

Figure 4-1. Event Sources can send events to a Channel so multiple Services can receive them simultaneously, or they can instead be sent straight to one Service

Services in Knative don’t know or care how events and requests are getting to them. It could be an HTTP request from the ingress gateway or it could be an event sent from a Channel. Either way, our Service simply receives an HTTP request. This is an important decoupling in Knative that ensures that we’re writing our code to our architecture, not our infrastructure. Let’s create the Subscription that will deliver events from our Channel to our Service. As you can see in Example 4-6, the definition takes just two references, one to a Channel and one to a Service.

Example 4-6. knative-eventing-demo/subscription.yaml

```yaml
apiVersion: eventing.knative.dev/v1alpha1
kind: Subscription
metadata:
  name: knative-eventing-demo-subscription
spec:
  channel:
    apiVersion: eventing.knative.dev/v1alpha1
    kind: Channel
    name: knative-eventing-demo-channel
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1alpha1
      kind: Service
      name: knative-eventing-demo
```

With this final piece, we now have all the plumbing in place to deliver events to our application. Kubernetes records events occurring in the cluster; our Event Source picks them up and sends them to our Channel, and the Subscription we defined forwards them to our Service. If we check the logs of our Service, we’ll see these events start coming across right away, as shown in Example 4-7.

Example 4-7. Retrieving the logs from our Service

```shell
$ kubectl get pods -l app=knative-eventing-demo-00001 -o name
pod/knative-eventing-demo-00001-deployment-764c8ccdf8-8w782

$ kubectl logs knative-eventing-demo-00001-deployment-764c8ccdf8-8w782 -c user-container
2019/01/04 22:46:41 Starting server on port 8080...
2019/01/04 22:46:44 {"metadata":{"name":"knative-eventing-demo-00001.15761326c1edda18","namespace":"default"...[Truncated for brevity]
```
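The logged body appears to be the JSON of a Kubernetes Event object, starting with its `metadata`. If you wanted the function to do more than echo, a first step might be decoding the fields you care about. This sketch assumes that payload shape; `reason` and `message` are standard Kubernetes Event fields, but the sample values below are made up, and `k8sEvent` and `summarize` are hypothetical names.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// k8sEvent declares only the fields we want; encoding/json ignores the rest.
type k8sEvent struct {
	Metadata struct {
		Name      string `json:"name"`
		Namespace string `json:"namespace"`
	} `json:"metadata"`
	Reason  string `json:"reason"`
	Message string `json:"message"`
}

// summarize turns a raw event body into a one-line description.
func summarize(body []byte) (string, error) {
	var ev k8sEvent
	if err := json.Unmarshal(body, &ev); err != nil {
		return "", err
	}
	return fmt.Sprintf("%s/%s: %s %s",
		ev.Metadata.Namespace, ev.Metadata.Name, ev.Reason, ev.Message), nil
}

func main() {
	// Hypothetical sample payload; the values are illustrative only.
	body := []byte(`{"metadata":{"name":"example.1","namespace":"default"},"reason":"Created","message":"Created container"}`)
	line, err := summarize(body)
	if err != nil {
		fmt.Println("decode error:", err)
		return
	}
	fmt.Println(line) // default/example.1: Created Created container
}
```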

Conclusion

These building blocks lay the groundwork for a rich, robust event-driven architecture, but this is just the beginning. We’ll look at creating a custom source using the ContainerSource in “Building a Custom Event Source”, and we’ll show Eventing in action in Chapter 7.
