Chapter 4. Client API: Advanced Features

Now that you understand the basic client API, we will discuss the advanced features that HBase offers to clients.

Filters

HBase filters are a powerful feature that can greatly enhance your effectiveness when working with data stored in tables. You will find predefined filters, already provided by HBase for your use, as well as a framework you can use to implement your own. You will now be introduced to both.

Introduction to Filters

The two prominent read functions for HBase are get() and scan(), supporting direct access to data and the use of a start and end key, respectively. You can limit the data retrieved by progressively adding more limiting selectors to the query. These include column families, column qualifiers, timestamps or time ranges, and the number of versions.

While this gives you control over what is included, it lacks more fine-grained features, such as selecting keys or values based on regular expressions. Both the Get and Scan classes support filters for exactly this reason: what cannot be solved with the provided API functionality for selecting row keys, column keys, or values can be achieved with filters. The base interface is aptly named Filter, and HBase supplies a list of concrete classes that you can use without doing any programming.

You can, on the other hand, extend the Filter classes to implement your own requirements. All filters are applied on the server side, an approach also called predicate pushdown. This ensures the most efficient selection of the data that needs to be transported back to the client. You could implement most of the filter functionality in your client code as well, but you would have to transfer much more data, something you need to avoid at scale.

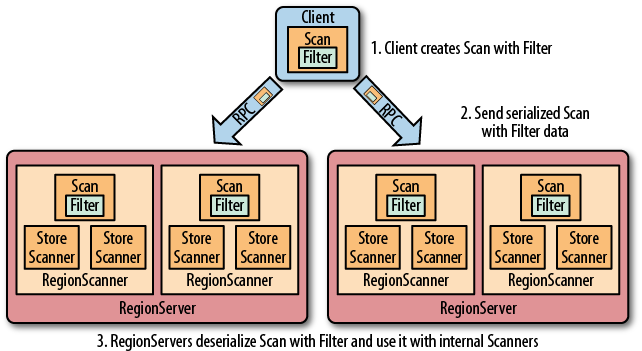

Figure 4-1 shows how the filters are configured on the client, then serialized over the network, and then applied on the server.

The filter hierarchy

The lowest level in the filter hierarchy is the Filter interface, together with the abstract FilterBase class, which implements an empty shell, or skeleton, that the actual filter classes use to avoid repeating the same boilerplate code in each of them.

Most concrete filter classes are direct descendants of FilterBase, but a few use another, intermediate ancestor class. They all work the same way: you define a new instance of the filter you want to apply and hand it to the Get or Scan instance, using:

    setFilter(filter)

When you initialize the filter instance itself, you often have to supply parameters for whatever the filter is designed for. There is a special subset of filters, based on CompareFilter, that ask you for at least two specific parameters, since the base class uses them to perform its task. You will learn about these two parameter types next so that you can use them in context.

Note

Filters have access to the entire row they are applied to. This means that they can decide the fate of a row based on any available information. This includes the row key, column qualifiers, actual value of a column, timestamps, and so on.

When referring to values, or comparisons, as we will discuss shortly, this can be applied to any of these details. Specific filter implementations are available that consider only one of those criteria each.

Comparison operators

Because CompareFilter-based filters add one more feature to the base FilterBase class, namely the compare() operation, they need a user-supplied operator type that defines how the result of the comparison is interpreted. The values are listed in Table 4-1.

The comparison operators define what is included, or excluded, when the filter is applied. This allows you to select the data that you want as either a range, subset, or exact and single match.

Comparators

The second type that you need to provide to CompareFilter-related classes is a comparator, which is needed to compare various values and keys in different ways. Comparators are derived from WritableByteArrayComparable, which implements Writable and Comparable. You do not have to go into the details if you just want to use an implementation provided by HBase and listed in Table 4-2. The constructors usually take the control value, that is, the one to compare each table value against.

Note

The last three comparators listed in Table 4-2 (BitComparator, RegexStringComparator, and SubstringComparator) only work with the EQUAL and NOT_EQUAL operators, as the compareTo() of these comparators returns 0 for a match or 1 when there is no match. Using them in a LESS or GREATER comparison will yield erroneous results.
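To see why match-only comparators do not combine well with ordering operators, consider the following JDK-only sketch. It does not use the HBase classes: the Op enum, substringCompare(), and accepts() are hypothetical stand-ins, and the sign convention in accepts() is an assumption made for illustration only.

```java
/** JDK-only illustration; none of these types are the HBase classes. */
class MatchOnlyComparatorSketch {

    // Hypothetical stand-in for the comparison operator type.
    enum Op { LESS, LESS_OR_EQUAL, EQUAL, NOT_EQUAL, GREATER_OR_EQUAL, GREATER }

    // Mimics a match-only comparator: 0 when the value contains the needle, 1 otherwise.
    static int substringCompare(String needle, String value) {
        return value.contains(needle) ? 0 : 1;
    }

    // Interprets a compareTo()-style result (negative, zero, positive) under an operator.
    static boolean accepts(Op op, int cmp) {
        switch (op) {
            case LESS:             return cmp < 0;
            case LESS_OR_EQUAL:    return cmp <= 0;
            case EQUAL:            return cmp == 0;
            case NOT_EQUAL:        return cmp != 0;
            case GREATER_OR_EQUAL: return cmp >= 0;
            default:               return cmp > 0; // GREATER
        }
    }

    public static void main(String[] args) {
        int match = substringCompare("-5", "row-5");
        int noMatch = substringCompare("-5", "row-7");
        // Print how each operator treats a matching and a non-matching value.
        for (Op op : Op.values()) {
            System.out.println(op + ": match=" + accepts(op, match)
                + ", noMatch=" + accepts(op, noMatch));
        }
    }
}
```

Because the comparator never returns a negative number, LESS accepts nothing and GREATER_OR_EQUAL accepts everything in this sketch, regardless of the data. Only EQUAL and NOT_EQUAL separate matches from non-matches in a meaningful way.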

Each of the comparators usually has a constructor that takes the comparison value. In other words, you need to define a value you compare each cell against. Some of these constructors take a byte[], a byte array, to do a binary comparison, for example, while others take a String parameter, since the data point compared against is assumed to be some sort of readable text. Example 4-1 shows some of these in action.

Comparison Filters

The first type of supplied filter implementations are the comparison filters. They take the comparison operator and comparator instance as described earlier. The constructor of each of them has the same signature, inherited from CompareFilter:

    CompareFilter(CompareOp valueCompareOp, WritableByteArrayComparable valueComparator)

You need to supply this comparison operator and comparison class for the filters to do their work. Next you will see the actual filters implementing a specific comparison.

Note

Please keep in mind that the general contract of the HBase filter API means you are filtering out information—filtered data is omitted from the results returned to the client. The filter is not specifying what you want to have, but rather what you do not want to have returned when reading data.

In contrast, all filters based on CompareFilter do the opposite, in that they include the matching values. In other words, be careful when choosing the comparison operator, as it determines what the server returns. For example, instead of using LESS to skip some information, you may need to use GREATER_OR_EQUAL to include the desired data points.

RowFilter

This filter gives you the ability to filter data based on row keys.

Example 4-1 shows how the filter can use different comparator instances to get the desired results. It also uses various operators to include the row keys, while omitting others. Feel free to modify the code, changing the operators to see the possible results.

    Scan scan = new Scan();
    scan.addColumn(Bytes.toBytes("colfam1"), Bytes.toBytes("col-0"));

    // Scan #1: rows whose key sorts at or before "row-22".
    Filter filter1 = new RowFilter(CompareFilter.CompareOp.LESS_OR_EQUAL,
      new BinaryComparator(Bytes.toBytes("row-22")));
    scan.setFilter(filter1);
    ResultScanner scanner1 = table.getScanner(scan);
    for (Result res : scanner1) {
      System.out.println(res);
    }
    scanner1.close();

    // Scan #2: rows whose key matches a regular expression.
    Filter filter2 = new RowFilter(CompareFilter.CompareOp.EQUAL,
      new RegexStringComparator(".*-.5"));
    scan.setFilter(filter2);
    ResultScanner scanner2 = table.getScanner(scan);
    for (Result res : scanner2) {
      System.out.println(res);
    }
    scanner2.close();

    // Scan #3: rows whose key contains a substring.
    Filter filter3 = new RowFilter(CompareFilter.CompareOp.EQUAL,
      new SubstringComparator("-5"));
    scan.setFilter(filter3);
    ResultScanner scanner3 = table.getScanner(scan);
    for (Result res : scanner3) {
      System.out.println(res);
    }
    scanner3.close();

Here is the full printout of the example on the console:

Adding rows to table...

Scanning table #1...

keyvalues={row-1/colfam1:col-0/1301043190260/Put/vlen=7}

keyvalues={row-10/colfam1:col-0/1301043190908/Put/vlen=8}

keyvalues={row-100/colfam1:col-0/1301043195275/Put/vlen=9}

keyvalues={row-11/colfam1:col-0/1301043190982/Put/vlen=8}

keyvalues={row-12/colfam1:col-0/1301043191040/Put/vlen=8}

keyvalues={row-13/colfam1:col-0/1301043191172/Put/vlen=8}

keyvalues={row-14/colfam1:col-0/1301043191318/Put/vlen=8}

keyvalues={row-15/colfam1:col-0/1301043191429/Put/vlen=8}

keyvalues={row-16/colfam1:col-0/1301043191509/Put/vlen=8}

keyvalues={row-17/colfam1:col-0/1301043191593/Put/vlen=8}

keyvalues={row-18/colfam1:col-0/1301043191673/Put/vlen=8}

keyvalues={row-19/colfam1:col-0/1301043191771/Put/vlen=8}

keyvalues={row-2/colfam1:col-0/1301043190346/Put/vlen=7}

keyvalues={row-20/colfam1:col-0/1301043191841/Put/vlen=8}

keyvalues={row-21/colfam1:col-0/1301043191933/Put/vlen=8}

keyvalues={row-22/colfam1:col-0/1301043191998/Put/vlen=8}

Scanning table #2...

keyvalues={row-15/colfam1:col-0/1301043191429/Put/vlen=8}

keyvalues={row-25/colfam1:col-0/1301043192140/Put/vlen=8}

keyvalues={row-35/colfam1:col-0/1301043192665/Put/vlen=8}

keyvalues={row-45/colfam1:col-0/1301043193138/Put/vlen=8}

keyvalues={row-55/colfam1:col-0/1301043193729/Put/vlen=8}

keyvalues={row-65/colfam1:col-0/1301043194092/Put/vlen=8}

keyvalues={row-75/colfam1:col-0/1301043194457/Put/vlen=8}

keyvalues={row-85/colfam1:col-0/1301043194806/Put/vlen=8}

keyvalues={row-95/colfam1:col-0/1301043195121/Put/vlen=8}

Scanning table #3...

keyvalues={row-5/colfam1:col-0/1301043190562/Put/vlen=7}

keyvalues={row-50/colfam1:col-0/1301043193332/Put/vlen=8}

keyvalues={row-51/colfam1:col-0/1301043193514/Put/vlen=8}

keyvalues={row-52/colfam1:col-0/1301043193603/Put/vlen=8}

keyvalues={row-53/colfam1:col-0/1301043193654/Put/vlen=8}

keyvalues={row-54/colfam1:col-0/1301043193696/Put/vlen=8}

keyvalues={row-55/colfam1:col-0/1301043193729/Put/vlen=8}

keyvalues={row-56/colfam1:col-0/1301043193766/Put/vlen=8}

keyvalues={row-57/colfam1:col-0/1301043193802/Put/vlen=8}

keyvalues={row-58/colfam1:col-0/1301043193842/Put/vlen=8}

keyvalues={row-59/colfam1:col-0/1301043193889/Put/vlen=8}

You can see how the first filter compared the row keys byte by byte, including all of those rows that have a key equal to or less than the given one. Note once again the lexicographical sorting and comparison, and how it filters the row keys.

The second filter does a regular expression match, while the third uses a substring match approach. The results show that the filters work as advertised.
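The three selections can be reproduced without a cluster. The following JDK-only sketch is an illustration, not the HBase implementation: it assumes row keys row-1 through row-100 made of plain ASCII characters, for which Java's String ordering agrees with byte-wise lexicographical ordering, and it assumes the regular expression only needs to match somewhere in the key. All class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;
import java.util.regex.Pattern;

/** JDK-only illustration of the three row key selections; not HBase code. */
class RowKeySelectionSketch {

    // Builds row-1 .. row-100, kept in lexicographical order by the TreeSet.
    static TreeSet<String> sampleKeys() {
        TreeSet<String> keys = new TreeSet<>();
        for (int i = 1; i <= 100; i++) {
            keys.add("row-" + i);
        }
        return keys;
    }

    // Keys that sort at or before the bound (the LESS_OR_EQUAL case).
    static List<String> lessOrEqual(TreeSet<String> keys, String bound) {
        return new ArrayList<>(keys.headSet(bound, true));
    }

    // Keys in which the regular expression matches somewhere.
    static List<String> regexMatches(TreeSet<String> keys, String regex) {
        Pattern pattern = Pattern.compile(regex);
        List<String> out = new ArrayList<>();
        for (String key : keys) {
            if (pattern.matcher(key).find()) out.add(key);
        }
        return out;
    }

    // Keys that contain the given substring.
    static List<String> substringMatches(TreeSet<String> keys, String part) {
        List<String> out = new ArrayList<>();
        for (String key : keys) {
            if (key.contains(part)) out.add(key);
        }
        return out;
    }

    public static void main(String[] args) {
        TreeSet<String> keys = sampleKeys();
        System.out.println(lessOrEqual(keys, "row-22"));
        System.out.println(regexMatches(keys, ".*-.5"));
        System.out.println(substringMatches(keys, "-5"));
    }
}
```

Note how row-100 sorts before row-11 in the first list: the keys are compared character by character, not as numbers.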

FamilyFilter

This filter works very similarly to the RowFilter, but applies the comparison to the column families available in a row, as opposed to the row key. Using the available combinations of operators and comparators, you can filter what is included in the retrieved data on a column family level. Example 4-2 shows how to use this.

    // Include only families that sort before "colfam3".
    Filter filter1 = new FamilyFilter(CompareFilter.CompareOp.LESS,
      new BinaryComparator(Bytes.toBytes("colfam3")));

    Scan scan = new Scan();
    scan.setFilter(filter1);
    ResultScanner scanner = table.getScanner(scan);
    for (Result result : scanner) {
      System.out.println(result);
    }
    scanner.close();

    Get get1 = new Get(Bytes.toBytes("row-5"));
    get1.setFilter(filter1);
    Result result1 = table.get(get1);
    System.out.println("Result of get(): " + result1);

    // The filter asks for colfam3 while the Get selects colfam1.
    Filter filter2 = new FamilyFilter(CompareFilter.CompareOp.EQUAL,
      new BinaryComparator(Bytes.toBytes("colfam3")));
    Get get2 = new Get(Bytes.toBytes("row-5"));
    get2.addFamily(Bytes.toBytes("colfam1"));
    get2.setFilter(filter2);
    Result result2 = table.get(get2);
    System.out.println("Result of get(): " + result2);

The output—reformatted and abbreviated for the sake of readability—shows the filter in action. The input data has four column families, with two columns each, and 10 rows in total.

Adding rows to table...

Scanning table...

keyvalues={row-1/colfam1:col-0/1303721790522/Put/vlen=7,

row-1/colfam1:col-1/1303721790574/Put/vlen=7,

row-1/colfam2:col-0/1303721790522/Put/vlen=7,

row-1/colfam2:col-1/1303721790574/Put/vlen=7}

keyvalues={row-10/colfam1:col-0/1303721790785/Put/vlen=8,

row-10/colfam1:col-1/1303721790792/Put/vlen=8,

row-10/colfam2:col-0/1303721790785/Put/vlen=8,

row-10/colfam2:col-1/1303721790792/Put/vlen=8}

...

keyvalues={row-9/colfam1:col-0/1303721790778/Put/vlen=7,

row-9/colfam1:col-1/1303721790781/Put/vlen=7,

row-9/colfam2:col-0/1303721790778/Put/vlen=7,

row-9/colfam2:col-1/1303721790781/Put/vlen=7}

Result of get(): keyvalues={row-5/colfam1:col-0/1303721790652/Put/vlen=7,

row-5/colfam1:col-1/1303721790664/Put/vlen=7,

row-5/colfam2:col-0/1303721790652/Put/vlen=7,

row-5/colfam2:col-1/1303721790664/Put/vlen=7}

Result of get(): keyvalues=NONE

The last get() shows that you can (inadvertently) create an empty set by applying a filter for exactly one column family, while specifying a different column family selector using addFamily().

QualifierFilter

Example 4-3 shows how the same logic is applied on the column qualifier level. This allows you to filter specific columns from the table.

    Filter filter = new QualifierFilter(CompareFilter.CompareOp.LESS_OR_EQUAL,
      new BinaryComparator(Bytes.toBytes("col-2")));

    Scan scan = new Scan();
    scan.setFilter(filter);
    ResultScanner scanner = table.getScanner(scan);
    for (Result result : scanner) {
      System.out.println(result);
    }
    scanner.close();

    Get get = new Get(Bytes.toBytes("row-5"));
    get.setFilter(filter);
    Result result = table.get(get);
    System.out.println("Result of get(): " + result);

ValueFilter

This filter makes it possible to include only columns that have a specific value. Combined with the RegexStringComparator, for example, this can filter using powerful expression syntax. Example 4-4 showcases this feature. Note, though, that with certain comparators, as explained earlier, you can only employ a subset of the operators. Here a substring match is performed, and this must be combined with an EQUAL or NOT_EQUAL operator.

    Filter filter = new ValueFilter(CompareFilter.CompareOp.EQUAL,
      new SubstringComparator(".4"));

    Scan scan = new Scan();
    scan.setFilter(filter);
    ResultScanner scanner = table.getScanner(scan);
    for (Result result : scanner) {
      for (KeyValue kv : result.raw()) {
        System.out.println("KV: " + kv + ", Value: " +
          Bytes.toString(kv.getValue()));
      }
    }
    scanner.close();

    Get get = new Get(Bytes.toBytes("row-5"));
    get.setFilter(filter);
    Result result = table.get(get);
    for (KeyValue kv : result.raw()) {
      System.out.println("KV: " + kv + ", Value: " +
        Bytes.toString(kv.getValue()));
    }

DependentColumnFilter

Here you have a more complex filter that does not simply filter out data based on directly available information. Rather, it lets you specify a dependent column—or reference column—that controls how other columns are filtered. It uses the timestamp of the reference column and includes all other columns that have the same timestamp. Here are the constructors provided:

    DependentColumnFilter(byte[] family, byte[] qualifier)
    DependentColumnFilter(byte[] family, byte[] qualifier,
      boolean dropDependentColumn)
    DependentColumnFilter(byte[] family, byte[] qualifier,
      boolean dropDependentColumn, CompareOp valueCompareOp,
      WritableByteArrayComparable valueComparator)

Since it is based on CompareFilter, it also lets you further select columns, but for this filter it does so based on their values. Think of it as a combination of a ValueFilter and a filter selecting on a reference timestamp. You can optionally hand in your own operator and comparator pair to enable this feature. The class also provides constructors that let you omit the operator and comparator, which disables the value filtering and includes all columns by default, that is, performs the timestamp filter based on the reference column only.

Example 4-5 shows the filter in use. You can see how the optional values can be handed in as well. The dropDependentColumn parameter gives you additional control over how the reference column is handled: it is either included or dropped by the filter, by setting this parameter to false or true, respectively.

    private static void filter(boolean drop,
        CompareFilter.CompareOp operator,
        WritableByteArrayComparable comparator)
    throws IOException {
      Filter filter;
      if (comparator != null) {
        // Timestamp filter plus the optional value filter.
        filter = new DependentColumnFilter(Bytes.toBytes("colfam1"),
          Bytes.toBytes("col-5"), drop, operator, comparator);
      } else {
        // Timestamp filter based on the reference column only.
        filter = new DependentColumnFilter(Bytes.toBytes("colfam1"),
          Bytes.toBytes("col-5"), drop);
      }

      Scan scan = new Scan();
      scan.setFilter(filter);
      ResultScanner scanner = table.getScanner(scan);
      for (Result result : scanner) {
        for (KeyValue kv : result.raw()) {
          System.out.println("KV: " + kv + ", Value: " +
            Bytes.toString(kv.getValue()));
        }
      }
      scanner.close();

      Get get = new Get(Bytes.toBytes("row-5"));
      get.setFilter(filter);
      Result result = table.get(get);
      for (KeyValue kv : result.raw()) {
        System.out.println("KV: " + kv + ", Value: " +
          Bytes.toString(kv.getValue()));
      }
    }

    public static void main(String[] args) throws IOException {
      filter(true, CompareFilter.CompareOp.NO_OP, null);
      filter(false, CompareFilter.CompareOp.NO_OP, null);
      filter(true, CompareFilter.CompareOp.EQUAL,
        new BinaryPrefixComparator(Bytes.toBytes("val-5")));
      filter(false, CompareFilter.CompareOp.EQUAL,
        new BinaryPrefixComparator(Bytes.toBytes("val-5")));
      filter(true, CompareFilter.CompareOp.EQUAL,
        new RegexStringComparator(".*\\.5"));
      filter(false, CompareFilter.CompareOp.EQUAL,
        new RegexStringComparator(".*\\.5"));
    }

Warning

This filter is not compatible with the batch feature of the scan operations, that is, setting Scan.setBatch() to a number larger than zero. The filter needs to see the entire row to do its work, and batching would not carry the reference column timestamp over, producing erroneous results.

If you try to enable the batch mode nevertheless, you will get an error:

Exception org.apache.hadoop.hbase.filter.IncompatibleFilterException: Cannot set batch on a scan using a filter that returns true for filter.hasFilterRow

The example also proceeds slightly differently compared to the earlier filters, as it sets the version to the column number for a more reproducible result. The implicit timestamps that the servers use as the version could result in fluctuating results as you cannot guarantee them using the exact time, down to the millisecond.

The filter() method is called with different parameter combinations, showing how the built-in value filter and the drop flag affect the returned data set.
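The timestamp matching and the drop flag can be illustrated with a JDK-only model of a single row. This is a sketch of the behavior described in this section, not the HBase implementation: the Cell class, the column naming, and the sample data are hypothetical, and the optional value comparison is deliberately left out.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

/** JDK-only model of reference-timestamp filtering within one row; not HBase code. */
class DependentColumnSketch {

    // Hypothetical cell: a "family:qualifier" name plus a timestamp.
    static final class Cell {
        final String column;
        final long timestamp;

        Cell(String column, long timestamp) {
            this.column = column;
            this.timestamp = timestamp;
        }
    }

    // Hypothetical sample row: two cells share timestamp 5, one does not.
    static List<Cell> sampleRow() {
        return Arrays.asList(
            new Cell("colfam1:col-5", 5L),
            new Cell("colfam1:col-6", 6L),
            new Cell("colfam2:col-5", 5L));
    }

    // Keeps cells whose timestamp equals one of the reference column's timestamps.
    static List<String> apply(List<Cell> row, String referenceColumn,
                              boolean dropReference) {
        Set<Long> stamps = new HashSet<>();
        for (Cell cell : row) {
            if (cell.column.equals(referenceColumn)) stamps.add(cell.timestamp);
        }
        List<String> kept = new ArrayList<>();
        for (Cell cell : row) {
            if (!stamps.contains(cell.timestamp)) continue;
            // The drop flag removes the reference column itself from the result.
            if (dropReference && cell.column.equals(referenceColumn)) continue;
            kept.add(cell.column + "@" + cell.timestamp);
        }
        return kept;
    }

    public static void main(String[] args) {
        System.out.println(apply(sampleRow(), "colfam1:col-5", false));
        System.out.println(apply(sampleRow(), "colfam1:col-5", true));
    }
}
```

The sketch needs two passes over the row: one to collect the reference timestamps and one to select the cells. This mirrors why the filter has to see the entire row and cannot work on partial batches.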

Dedicated Filters

The second type of supplied filters are based directly on FilterBase and implement more specific use cases. Many of these filters are only really applicable when performing scan operations, since they filter out entire rows. For get() calls, this is often too restrictive and would result in a very harsh filter approach: include the whole row or nothing at all.

SingleColumnValueFilter

You can use this filter when you have exactly one column that decides if an entire row should be returned or not. You need to first specify the column you want to track, and then some value to check against. The constructors offered are:

    SingleColumnValueFilter(byte[] family, byte[] qualifier,
      CompareOp compareOp, byte[] value)
    SingleColumnValueFilter(byte[] family, byte[] qualifier,
      CompareOp compareOp, WritableByteArrayComparable comparator)

The first one is a convenience constructor, as it simply creates a BinaryComparator instance internally on your behalf. The second takes the same parameters we used for the CompareFilter-based classes. Although the SingleColumnValueFilter does not inherit from CompareFilter directly, it still uses the same parameter types.

The filter class also exposes a few auxiliary methods you can use to fine-tune its behavior:

    boolean getFilterIfMissing()
    void setFilterIfMissing(boolean filterIfMissing)
    boolean getLatestVersionOnly()
    void setLatestVersionOnly(boolean latestVersionOnly)

The first pair of methods controls what happens to rows that do not have the column at all. By default, they are included in the result, but you can use setFilterIfMissing(true) to reverse that behavior, that is, all rows that do not have the reference column are dropped from the result.
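The row-level decision can be summarized in a few lines of plain Java. This is a JDK-only sketch of the rule as described here, not the HBase implementation: the row is modeled as a hypothetical map from column name to value, and includeRow() is an illustrative helper.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Predicate;

/** JDK-only model of a single-column row decision; not HBase code. */
class SingleColumnValueSketch {

    // Returns true when the whole row should be returned to the client.
    static boolean includeRow(Map<String, String> row, String referenceColumn,
                              Predicate<String> valueMatches,
                              boolean filterIfMissing) {
        String value = row.get(referenceColumn);
        if (value == null) {
            // Rows without the reference column pass unless filterIfMissing is set.
            return !filterIfMissing;
        }
        return valueMatches.test(value);
    }

    public static void main(String[] args) {
        Map<String, String> row = new HashMap<>();
        row.put("colfam1:col-1", "val-1");
        Predicate<String> notVal5 = v -> !v.contains("val-5");
        System.out.println(includeRow(row, "colfam1:col-5", notVal5, false));
        System.out.println(includeRow(row, "colfam1:col-5", notVal5, true));
    }
}
```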

Note

You must include the column you want to filter by, in other words, the reference column, in the families you query for, using addColumn(), for example. If you fail to do so, the column is considered missing and the result is either empty or contains all rows, based on the getFilterIfMissing() result.

By using setLatestVersionOnly(false) (the default is true) you can change the default behavior of the filter, which is to check only the newest version of the reference column, to instead include previous versions in the check as well. Example 4-6 combines these features to select a specific set of rows only.

    SingleColumnValueFilter filter = new SingleColumnValueFilter(
      Bytes.toBytes("colfam1"),
      Bytes.toBytes("col-5"),
      CompareFilter.CompareOp.NOT_EQUAL,
      new SubstringComparator("val-5"));
    // Drop rows that do not have the reference column at all.
    filter.setFilterIfMissing(true);

    Scan scan = new Scan();
    scan.setFilter(filter);
    ResultScanner scanner = table.getScanner(scan);
    for (Result result : scanner) {
      for (KeyValue kv : result.raw()) {
        System.out.println("KV: " + kv + ", Value: " +
          Bytes.toString(kv.getValue()));
      }
    }
    scanner.close();

    Get get = new Get(Bytes.toBytes("row-6"));
    get.setFilter(filter);
    Result result = table.get(get);
    System.out.println("Result of get: ");
    for (KeyValue kv : result.raw()) {
      System.out.println("KV: " + kv + ", Value: " +
        Bytes.toString(kv.getValue()));
    }

SingleColumnValueExcludeFilter

The SingleColumnValueFilter we just discussed is extended in this class to provide slightly different semantics: the reference column, as handed into the constructor, is omitted from the result. In other words, you have the same features, constructors, and methods to control how this filter works. The only difference is that you will never get the column you are checking against as part of the Result instance(s) on the client side.

PrefixFilter

Given a prefix, specified when you instantiate the filter instance, all rows that match this prefix are returned to the client. The constructor is:

public PrefixFilter(byte[] prefix)

Example 4-7 has this applied to the usual test data set.

    Filter filter = new PrefixFilter(Bytes.toBytes("row-1"));

    Scan scan = new Scan();
    scan.setFilter(filter);
    ResultScanner scanner = table.getScanner(scan);
    for (Result result : scanner) {
      for (KeyValue kv : result.raw()) {
        System.out.println("KV: " + kv + ", Value: " +
          Bytes.toString(kv.getValue()));
      }
    }
    scanner.close();

    // row-5 does not start with the prefix, so this returns nothing.
    Get get = new Get(Bytes.toBytes("row-5"));
    get.setFilter(filter);
    Result result = table.get(get);
    for (KeyValue kv : result.raw()) {
      System.out.println("KV: " + kv + ", Value: " +
        Bytes.toString(kv.getValue()));
    }

It is interesting to see how the get() call fails to return anything, because it is asking for a row that does not match the filter prefix. This filter does not make much sense when doing get() calls but is highly useful for scan operations.

The scan also is actively ended when the filter encounters a row key that is larger than the prefix. In this way, and combining this with a start row, for example, the filter is improving the overall performance of the scan as it has knowledge of when to skip the rest of the rows altogether.
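The early termination can be pictured with a JDK-only sketch over a sorted set of keys. It is an illustration of the idea rather than the HBase implementation: the sample keys row-1 through row-100, the Outcome class, and the scan() helper are all hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;

/** JDK-only model of a prefix scan that stops early; not HBase code. */
class PrefixScanSketch {

    // Collects the matching rows and counts how many keys were looked at.
    static final class Outcome {
        final List<String> rows = new ArrayList<>();
        int examined;
    }

    // Builds row-1 .. row-100 in lexicographical order.
    static TreeSet<String> sampleKeys() {
        TreeSet<String> keys = new TreeSet<>();
        for (int i = 1; i <= 100; i++) {
            keys.add("row-" + i);
        }
        return keys;
    }

    static Outcome scan(TreeSet<String> sortedKeys, String prefix) {
        Outcome out = new Outcome();
        for (String key : sortedKeys) {
            out.examined++;
            if (key.startsWith(prefix)) {
                out.rows.add(key);
            } else if (key.compareTo(prefix) > 0) {
                // The key sorts after the prefix range: no later key can match.
                break;
            }
        }
        return out;
    }

    public static void main(String[] args) {
        Outcome out = scan(sampleKeys(), "row-1");
        System.out.println(out.rows.size() + " rows, " + out.examined + " keys examined");
    }
}
```

Keys that sort before the prefix are skipped one by one in this sketch, which is why combining the filter with a start row, as mentioned above, saves additional work.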

PageFilter

You paginate through rows by employing this filter. When you create the instance, you specify a pageSize parameter, which controls how many rows per page should be returned.

Note

There is a fundamental issue with filtering on physically separate servers. Filters run on different region servers in parallel and cannot retain or communicate their current state across those boundaries. Thus, each filter is required to scan at least up to pageSize rows before ending the scan. This makes the PageFilter slightly inefficient, as more rows are reported to the client than necessary. The final consolidation on the client obviously has visibility into all results and can reduce what is accessible through the API accordingly.

The client code would need to remember the last row that was returned, and then, when another iteration is about to start, set the start row of the scan accordingly, while retaining the same filter properties.

Because pagination is setting a strict limit on the number of rows to be returned, it is possible for the filter to early out the entire scan, once the limit is reached or exceeded. Filters have a facility to indicate that fact and the region servers make use of this hint to stop any further processing.

Example 4-8 puts this together, showing how a client can reset the scan to a new start row on the subsequent iterations.

    // POSTFIX is a single zero byte, for example: new byte[] { 0x00 }
    Filter filter = new PageFilter(15);

    int totalRows = 0;
    byte[] lastRow = null;
    while (true) {
      Scan scan = new Scan();
      scan.setFilter(filter);
      if (lastRow != null) {
        // Start just after the last row seen on the previous page.
        byte[] startRow = Bytes.add(lastRow, POSTFIX);
        System.out.println("start row: " +
          Bytes.toStringBinary(startRow));
        scan.setStartRow(startRow);
      }
      ResultScanner scanner = table.getScanner(scan);
      int localRows = 0;
      Result result;
      while ((result = scanner.next()) != null) {
        System.out.println(localRows++ + ": " + result);
        totalRows++;
        lastRow = result.getRow();
      }
      scanner.close();
      if (localRows == 0) break;
    }
    System.out.println("total rows: " + totalRows);

Because HBase sorts row keys lexicographically, and because the start key of a scan is always inclusive, you need to add an extra zero byte to the previous key. This ensures that the last seen row key is skipped and the next one, in sorting order, is found. The zero byte is the smallest increment, and therefore is safe to use when resetting the scan boundaries. Even if there were a row that matched the previous key plus the extra zero byte, the scan would still handle the next iteration correctly, because the start key is inclusive.
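The start-key arithmetic can be tried out without a cluster. The following JDK-only sketch models the table as a sorted set of ASCII string keys and appends the character \u0000 in place of the zero byte. It is an illustration under those assumptions, not HBase code, and readPage() and pageSizes() are hypothetical helpers.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.TreeSet;

/** JDK-only model of paging with an inclusive start key; not HBase code. */
class PagingSketch {

    // Builds row-1 .. row-100 in lexicographical order.
    static TreeSet<String> sampleKeys() {
        TreeSet<String> keys = new TreeSet<>();
        for (int i = 1; i <= 100; i++) {
            keys.add("row-" + i);
        }
        return keys;
    }

    // Reads up to pageSize keys, starting at startKey (inclusive) or at the beginning.
    static List<String> readPage(TreeSet<String> table, String startKey, int pageSize) {
        Iterable<String> source =
            (startKey == null) ? table : table.tailSet(startKey, true);
        List<String> page = new ArrayList<>();
        for (String key : source) {
            if (page.size() == pageSize) break;
            page.add(key);
        }
        return page;
    }

    // Pages through the whole table and reports the size of each page.
    static List<Integer> pageSizes(TreeSet<String> table, int pageSize) {
        List<Integer> sizes = new ArrayList<>();
        String lastRow = null;
        while (true) {
            // Appending the smallest possible character skips exactly the last row seen.
            String start = (lastRow == null) ? null : lastRow + '\u0000';
            List<String> page = readPage(table, start, pageSize);
            if (page.isEmpty()) break;
            sizes.add(page.size());
            lastRow = page.get(page.size() - 1);
        }
        return sizes;
    }

    public static void main(String[] args) {
        System.out.println(pageSizes(sampleKeys(), 15));
    }
}
```

Without the appended character, each page would begin with the last row of the previous page, because the start key is inclusive.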

KeyOnlyFilter

Some applications need to access just the keys of each KeyValue, while omitting the actual data. The KeyOnlyFilter provides this functionality by applying the filter's ability to modify the processed columns and cells as they pass through. It does so by applying the KeyValue.convertToKeyOnly(boolean) call, which strips out the data part.

The constructor of this filter has a boolean parameter, named lenAsVal. It is handed to the convertToKeyOnly() call as-is, controlling what happens to the value part of each KeyValue instance processed. The default false simply sets the value to zero length, while the opposite true sets the value to the number representing the length of the original value.
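The effect of the flag on a single value can be sketched with plain Java. This is a JDK-only illustration, not the HBase code path: keyOnlyValue() is a hypothetical helper, and encoding the length as a 4-byte big-endian integer is an assumption made for the sketch.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

/** JDK-only model of replacing a value with nothing or with its length; not HBase code. */
class KeyOnlySketch {

    // Returns the replacement for the original value, depending on lenAsVal.
    static byte[] keyOnlyValue(byte[] original, boolean lenAsVal) {
        if (!lenAsVal) {
            return new byte[0]; // zero-length value
        }
        // Assumption for this sketch: the length is stored as a 4-byte integer.
        return ByteBuffer.allocate(Integer.BYTES).putInt(original.length).array();
    }

    public static void main(String[] args) {
        byte[] value = "val-5".getBytes(StandardCharsets.UTF_8);
        System.out.println(keyOnlyValue(value, false).length);
        System.out.println(ByteBuffer.wrap(keyOnlyValue(value, true)).getInt());
    }
}
```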

The latter may be useful to your application when quickly iterating over columns, where the keys already convey meaning and the length can be used to perform a secondary sort, for example. Client API: Best Practices has an example.

FirstKeyOnlyFilter

If you need to access the first column—as sorted implicitly by HBase—in each row, this filter will provide this feature. Typically this is used by row counter type applications that only need to check if a row exists. Recall that in column-oriented databases a row really is composed of columns, and if there are none, the row ceases to exist.

Another possible use case is relying on the column sorting in lexicographical order, and setting the column qualifier to an epoch value. This would sort the column with the oldest timestamp name as the first to be retrieved. Combined with this filter, it is possible to retrieve the oldest column from every row using a single scan.

This class makes use of another optimization feature provided by the filter framework: it indicates to the region server applying the filter that the current row is done and that it should skip to the next one. This improves the overall performance of the scan, compared to a full table scan.

InclusiveStopFilter

The row boundaries of a scan are inclusive for the start row, yet exclusive for the stop row. You can overcome the stop row semantics using this filter, which includes the specified stop row. Example 4-9 uses the filter to start at row-3, and stop at row-5 inclusively.

The output on the console, when running the example code, confirms that the filter works as advertised:

Adding rows to table...

Results of scan:

keyvalues={row-3/colfam1:col-0/1301337961569/Put/vlen=7}

keyvalues={row-30/colfam1:col-0/1301337961610/Put/vlen=8}

keyvalues={row-31/colfam1:col-0/1301337961612/Put/vlen=8}

keyvalues={row-32/colfam1:col-0/1301337961613/Put/vlen=8}

keyvalues={row-33/colfam1:col-0/1301337961614/Put/vlen=8}

keyvalues={row-34/colfam1:col-0/1301337961615/Put/vlen=8}

keyvalues={row-35/colfam1:col-0/1301337961616/Put/vlen=8}

keyvalues={row-36/colfam1:col-0/1301337961617/Put/vlen=8}

keyvalues={row-37/colfam1:col-0/1301337961618/Put/vlen=8}

keyvalues={row-38/colfam1:col-0/1301337961619/Put/vlen=8}

keyvalues={row-39/colfam1:col-0/1301337961620/Put/vlen=8}

keyvalues={row-4/colfam1:col-0/1301337961571/Put/vlen=7}

keyvalues={row-40/colfam1:col-0/1301337961621/Put/vlen=8}

keyvalues={row-41/colfam1:col-0/1301337961622/Put/vlen=8}

keyvalues={row-42/colfam1:col-0/1301337961623/Put/vlen=8}

keyvalues={row-43/colfam1:col-0/1301337961624/Put/vlen=8}

keyvalues={row-44/colfam1:col-0/1301337961625/Put/vlen=8}

keyvalues={row-45/colfam1:col-0/1301337961626/Put/vlen=8}

keyvalues={row-46/colfam1:col-0/1301337961627/Put/vlen=8}

keyvalues={row-47/colfam1:col-0/1301337961628/Put/vlen=8}

keyvalues={row-48/colfam1:col-0/1301337961629/Put/vlen=8}

keyvalues={row-49/colfam1:col-0/1301337961630/Put/vlen=8}

keyvalues={row-5/colfam1:col-0/1301337961573/Put/vlen=7}

TimestampsFilter

When you need fine-grained control over what versions are included in the scan result, this filter provides the means. You have to hand in a List of timestamps:

    TimestampsFilter(List<Long> timestamps)

Note

As you have seen throughout the book so far, a version is a specific value of a column at a unique point in time, denoted with a timestamp. When the filter is asking for a list of timestamps, it will attempt to retrieve the column versions with the matching timestamps.

Example 4-10 sets up a filter with three timestamps and adds a time range to the second scan.

List<Long> ts = new ArrayList<Long>();
// Add the three timestamps the filter should match.
ts.add(new Long(5));
ts.add(new Long(10));
ts.add(new Long(15));
Filter filter = new TimestampsFilter(ts);

// First scan: only the filter restricts the versions.
Scan scan1 = new Scan();
scan1.setFilter(filter);
ResultScanner scanner1 = table.getScanner(scan1);
for (Result result : scanner1) {
  System.out.println(result);
}
scanner1.close();

// Second scan: the same filter, plus a time range on the scan itself.
Scan scan2 = new Scan();
scan2.setFilter(filter);
scan2.setTimeRange(8, 12);
ResultScanner scanner2 = table.getScanner(scan2);
for (Result result : scanner2) {
  System.out.println(result);
}
scanner2.close();

Here is the output on the console in an abbreviated form:

Adding rows to table...

Results of scan #1:

keyvalues={row-1/colfam1:col-10/10/Put/vlen=8,

row-1/colfam1:col-15/15/Put/vlen=8,

row-1/colfam1:col-5/5/Put/vlen=7}

keyvalues={row-10/colfam1:col-10/10/Put/vlen=9,

row-10/colfam1:col-15/15/Put/vlen=9,

row-10/colfam1:col-5/5/Put/vlen=8}

keyvalues={row-100/colfam1:col-10/10/Put/vlen=10,

row-100/colfam1:col-15/15/Put/vlen=10,

row-100/colfam1:col-5/5/Put/vlen=9}

...

Results of scan #2:

keyvalues={row-1/colfam1:col-10/10/Put/vlen=8}

keyvalues={row-10/colfam1:col-10/10/Put/vlen=9}

keyvalues={row-100/colfam1:col-10/10/Put/vlen=10}

keyvalues={row-11/colfam1:col-10/10/Put/vlen=9}

...

The first scan, only using the filter, is outputting the column values for all three specified timestamps as expected. The second scan only returns the timestamp that fell into the time range specified when the scan was set up. Both time-based restrictions, the filter and the scanner time range, are doing their job and the result is a combination of both.
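The way the two restrictions combine can be modeled in a few lines of plain Java. This is only a sketch, not HBase code: the class and method names are hypothetical, and it assumes that the scan time range includes the minimum and excludes the maximum timestamp.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

// Plain-Java model of the selection logic; no HBase classes are used.
final class TimestampSelectionSketch {

  // Keeps the cell timestamps that are in the wanted set AND inside the
  // half-open range [minStamp, maxStamp) (assumed range semantics).
  static List<Long> select(List<Long> cellTimestamps, Set<Long> wanted,
      long minStamp, long maxStamp) {
    List<Long> selected = new ArrayList<Long>();
    for (long ts : cellTimestamps) {
      boolean passesFilter = wanted.contains(ts);
      boolean passesRange = ts >= minStamp && ts < maxStamp;
      if (passesFilter && passesRange) {
        selected.add(ts);
      }
    }
    return selected;
  }

  public static void main(String[] args) {
    // Hypothetical cells with timestamps 1 through 20.
    List<Long> cells = new ArrayList<Long>();
    for (long ts = 1; ts <= 20; ts++) {
      cells.add(ts);
    }
    Set<Long> wanted = new TreeSet<Long>(Arrays.asList(5L, 10L, 15L));
    // Filter only, with an unrestricted range.
    System.out.println(select(cells, wanted, 0L, Long.MAX_VALUE));
    // Filter plus the time range used by the second scan.
    System.out.println(select(cells, wanted, 8L, 12L));
  }
}
```

With the range from 8 to 12, only timestamp 10 survives both checks, which mirrors the second scan of the example.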

ColumnCountGetFilter

You can use this filter to only retrieve a specific maximum number of columns per row. You can set the number using the constructor of the filter:

ColumnCountGetFilter(int n)

Since this filter stops the entire scan once a row has been found that matches the maximum number of columns configured, it is not useful for scan operations, and in fact, it was written to test filters in get() calls.

ColumnPaginationFilter

Similar to the PageFilter, this one can be used to page through columns in a row. Its constructor has two parameters:

ColumnPaginationFilter(int limit, int offset)

It skips all columns up to the number given as offset, and then includes limit columns afterward. Example 4-11 has this applied to a normal scan.

Running this example should render the following output:

Adding rows to table...

Results of scan:

keyvalues={row-01/colfam1:col-15/15/Put/vlen=9,

row-01/colfam1:col-16/16/Put/vlen=9,

row-01/colfam1:col-17/17/Put/vlen=9,

row-01/colfam1:col-18/18/Put/vlen=9,

row-01/colfam1:col-19/19/Put/vlen=9}

keyvalues={row-02/colfam1:col-15/15/Put/vlen=9,

row-02/colfam1:col-16/16/Put/vlen=9,

row-02/colfam1:col-17/17/Put/vlen=9,

row-02/colfam1:col-18/18/Put/vlen=9,

row-02/colfam1:col-19/19/Put/vlen=9}

...

Note

This example slightly changes the way the rows and columns are numbered by adding padding to the numeric counters. For example, the first row is padded to be row-01. This also shows how padding can be used to get a more human-readable sort order, such as the one known from a dictionary or telephone book.
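The effect of padding on the sort order can be reproduced without a cluster. The following sketch uses plain Java strings and assumes that their lexicographic order matches the byte-wise order of the keys, which holds for ASCII keys like these; the class and method names are made up for illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

final class PaddedKeySketch {

  // Builds row keys 1..count, optionally zero-padded to two digits,
  // and returns them in lexicographic (String) order.
  static List<String> sortedKeys(int count, boolean padded) {
    List<String> keys = new ArrayList<String>();
    for (int i = 1; i <= count; i++) {
      keys.add(padded ? String.format("row-%02d", i) : "row-" + i);
    }
    Collections.sort(keys);
    return keys;
  }

  public static void main(String[] args) {
    // Unpadded: row-10 sorts directly after row-1.
    System.out.println(sortedKeys(10, false));
    // Padded: the keys sort in numeric order.
    System.out.println(sortedKeys(10, true));
  }
}
```

Two digits of padding are enough here because there are fewer than 100 rows; larger key spaces need wider padding.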

The result includes all 10 rows, starting each row at column 15 (offset = 15) and printing five columns (limit = 5).
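The offset and limit arithmetic can be sketched on an ordinary sorted list. This is a model of the windowing only, not the filter's implementation; the helper names and the assumption of 30 columns per row are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

final class ColumnPageSketch {

  // Hypothetical helper: builds padded column names col-00, col-01, ...
  static List<String> columns(int count) {
    List<String> columns = new ArrayList<String>();
    for (int i = 0; i < count; i++) {
      columns.add(String.format("col-%02d", i));
    }
    return columns;
  }

  // Skips the first `offset` columns of one row, then returns at most
  // `limit` of the columns that follow.
  static List<String> page(List<String> sortedColumns, int limit, int offset) {
    List<String> page = new ArrayList<String>();
    int start = Math.max(0, offset);
    for (int i = start; i < sortedColumns.size() && page.size() < limit; i++) {
      page.add(sortedColumns.get(i));
    }
    return page;
  }

  public static void main(String[] args) {
    // Same parameters as in the text: limit = 5, offset = 15.
    System.out.println(page(columns(30), 5, 15));
  }
}
```

A page that starts near the end of a row simply comes back shorter than the limit.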

ColumnPrefixFilter

Analogous to the PrefixFilter, which worked by filtering on row key prefixes, this filter does the same for columns. You specify a prefix when creating the filter:

ColumnPrefixFilter(byte[] prefix)

All columns that have the given prefix are then included in the result.
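What "having the given prefix" means for byte arrays can be shown with a small, self-contained check. The sketch below is an illustration only and does not claim to be how the filter is implemented; all names in it are hypothetical.

```java
import java.nio.charset.StandardCharsets;

final class ColumnPrefixSketch {

  // True when `qualifier` starts with every byte of `prefix`.
  static boolean hasPrefix(byte[] qualifier, byte[] prefix) {
    if (qualifier.length < prefix.length) {
      return false;
    }
    for (int i = 0; i < prefix.length; i++) {
      if (qualifier[i] != prefix[i]) {
        return false;
      }
    }
    return true;
  }

  // Convenience conversion for the demo strings.
  static byte[] bytes(String s) {
    return s.getBytes(StandardCharsets.UTF_8);
  }

  public static void main(String[] args) {
    System.out.println(hasPrefix(bytes("col-01"), bytes("col-0")));
    System.out.println(hasPrefix(bytes("col-10"), bytes("col-0")));
  }
}
```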

RandomRowFilter

Finally, there is a filter that shows what else is possible using the API: including random rows in the result. The constructor is given a parameter named chance, which represents a value between 0.0 and 1.0:

RandomRowFilter(float chance)

Internally, this class uses a Java Random.nextFloat() call to randomize the row inclusion, and then compares the value with the chance given. Giving it a negative chance value will make the filter exclude all rows, while a value larger than 1.0 will make it include all rows.
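The comparison against chance explains the two edge cases. The following sketch assumes the row is included whenever the random value is smaller than chance; the exact comparison inside the filter class is an assumption, and the names are hypothetical.

```java
import java.util.Random;

final class RandomRowSketch {

  // Counts how many of `rows` rows would be included for the given chance.
  static int countIncluded(int rows, float chance, Random random) {
    int included = 0;
    for (int i = 0; i < rows; i++) {
      // nextFloat() returns a value in [0.0, 1.0), so a negative chance
      // never matches and a chance above 1.0 always matches.
      if (random.nextFloat() < chance) {
        included++;
      }
    }
    return included;
  }

  public static void main(String[] args) {
    Random random = new Random();
    System.out.println("chance -1.0: " + countIncluded(1000, -1.0f, random));
    System.out.println("chance  0.5: " + countIncluded(1000, 0.5f, random));
    System.out.println("chance  1.5: " + countIncluded(1000, 1.5f, random));
  }
}
```

The middle line varies from run to run, while the first and last always report 0 and 1000 rows.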

Decorating Filters

While the provided filters are already very powerful, sometimes it can be useful to modify, or extend, the behavior of a filter to gain additional control over the returned data. Some of this additional control is not dependent on the filter itself, but can be applied to any of them. This is what the decorating filter group of classes is about.

SkipFilter

This filter wraps a given filter and extends it to exclude an entire row when the wrapped filter hints for a KeyValue to be skipped. In other words, as soon as a filter indicates that a column in a row is omitted, the entire row is omitted.

Note

The wrapped filter must implement the filterKeyValue() method, or the SkipFilter will not work as expected.[58] This is because the SkipFilter is only checking the results of that method to decide how to handle the current row. See Table 4-5 for an overview of compatible filters.

Example 4-12 combines the SkipFilter with a ValueFilter to first select all columns that are not zero valued, and subsequently drop all partial rows, that is, rows in which at least one column was filtered out.

// Only let values pass that are not equal to "val-0".
Filter filter1 = new ValueFilter(CompareFilter.CompareOp.NOT_EQUAL,
  new BinaryComparator(Bytes.toBytes("val-0")));

Scan scan = new Scan();
scan.setFilter(filter1);
ResultScanner scanner1 = table.getScanner(scan);
for (Result result : scanner1) {
  for (KeyValue kv : result.raw()) {
    System.out.println("KV: " + kv + ", Value: " +
      Bytes.toString(kv.getValue()));
  }
}
scanner1.close();

// Decorate the first filter so that partial rows are dropped entirely.
Filter filter2 = new SkipFilter(filter1);

scan.setFilter(filter2);
ResultScanner scanner2 = table.getScanner(scan);
for (Result result : scanner2) {
  for (KeyValue kv : result.raw()) {
    System.out.println("KV: " + kv + ", Value: " +
      Bytes.toString(kv.getValue()));
  }
}
scanner2.close();

The example code should print roughly the following results when you execute it. Note, though, that the values are randomized, so you should get a slightly different result for every invocation:

Adding rows to table...
Results of scan #1:
KV: row-01/colfam1:col-00/0/Put/vlen=5, Value: val-4
KV: row-01/colfam1:col-01/1/Put/vlen=5, Value: val-2
KV: row-01/colfam1:col-02/2/Put/vlen=5, Value: val-4
KV: row-01/colfam1:col-03/3/Put/vlen=5, Value: val-3
KV: row-01/colfam1:col-04/4/Put/vlen=5, Value: val-1
KV: row-02/colfam1:col-00/0/Put/vlen=5, Value: val-3
KV: row-02/colfam1:col-01/1/Put/vlen=5, Value: val-1
KV: row-02/colfam1:col-03/3/Put/vlen=5, Value: val-4
KV: row-02/colfam1:col-04/4/Put/vlen=5, Value: val-1
...
Total KeyValue count for scan #1: 122
Results of scan #2:
KV: row-01/colfam1:col-00/0/Put/vlen=5, Value: val-4
KV: row-01/colfam1:col-01/1/Put/vlen=5, Value: val-2
KV: row-01/colfam1:col-02/2/Put/vlen=5, Value: val-4
KV: row-01/colfam1:col-03/3/Put/vlen=5, Value: val-3
KV: row-01/colfam1:col-04/4/Put/vlen=5, Value: val-1
KV: row-07/colfam1:col-00/0/Put/vlen=5, Value: val-4
KV: row-07/colfam1:col-01/1/Put/vlen=5, Value: val-1
KV: row-07/colfam1:col-02/2/Put/vlen=5, Value: val-1
KV: row-07/colfam1:col-03/3/Put/vlen=5, Value: val-2
KV: row-07/colfam1:col-04/4/Put/vlen=5, Value: val-4
...
Total KeyValue count for scan #2: 50

The first scan returns all columns that are not zero valued. Since the value is assigned at random, there is a high probability that you will get at least one or more columns of each possible row. Some rows will miss a column—these are the omitted zero-valued ones.

The second scan, on the other hand, wraps the first filter and forces all partial rows to be dropped. You can see from the console output how only complete rows are emitted, that is, those with all five columns the example code creates initially. The total KeyValue count for each scan confirms the more restrictive behavior of the SkipFilter variant.
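The difference between the plain and the decorated filter comes down to one rule: a row survives only if none of its values was filtered. A minimal model of that rule in plain Java, with hypothetical names and without any HBase classes, might look like this:

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

final class SkipSemanticsSketch {

  // Plain value filter: keeps every value that differs from `unwanted`.
  static List<String> keepMatching(List<String> rowValues, String unwanted) {
    List<String> kept = new ArrayList<String>();
    for (String value : rowValues) {
      if (!value.equals(unwanted)) {
        kept.add(value);
      }
    }
    return kept;
  }

  // Skip-style decoration: if any value was dropped, drop the whole row.
  static List<String> keepWholeRowOrNothing(List<String> rowValues,
      String unwanted) {
    List<String> kept = keepMatching(rowValues, unwanted);
    return kept.size() == rowValues.size() ? kept : new ArrayList<String>();
  }

  public static void main(String[] args) {
    List<String> partialRow = Arrays.asList("val-3", "val-1", "val-0", "val-4");
    List<String> completeRow = Arrays.asList("val-4", "val-2", "val-4", "val-3");
    System.out.println(keepMatching(partialRow, "val-0"));
    System.out.println(keepWholeRowOrNothing(partialRow, "val-0"));
    System.out.println(keepWholeRowOrNothing(completeRow, "val-0"));
  }
}
```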

WhileMatchFilter

This second decorating filter type works somewhat similarly to the previous one, but aborts the entire scan once a piece of information is filtered. This works by checking the wrapped filter and seeing if it skips a row by its key, or a column of a row because of a KeyValue check.[59]

Example 4-13 is a slight variation of the previous example, using different filters to show how the decorating class works.

Filter filter1 = new RowFilter(CompareFilter.CompareOp.NOT_EQUAL,
  new BinaryComparator(Bytes.toBytes("row-05")));

Scan scan = new Scan();
scan.setFilter(filter1);
ResultScanner scanner1 = table.getScanner(scan);
for (Result result : scanner1) {
  for (KeyValue kv : result.raw()) {
    System.out.println("KV: " + kv + ", Value: " +
      Bytes.toString(kv.getValue()));
  }
}
scanner1.close();

Filter filter2 = new WhileMatchFilter(filter1);

scan.setFilter(filter2);
ResultScanner scanner2 = table.getScanner(scan);
for (Result result : scanner2) {
  for (KeyValue kv : result.raw()) {
    System.out.println("KV: " + kv + ", Value: " +
      Bytes.toString(kv.getValue()));
  }
}
scanner2.close();

Once you run the example code, you should get this output on the console:

Adding rows to table...
Results of scan #1:
KV: row-01/colfam1:col-00/0/Put/vlen=9, Value: val-01.00
KV: row-02/colfam1:col-00/0/Put/vlen=9, Value: val-02.00
KV: row-03/colfam1:col-00/0/Put/vlen=9, Value: val-03.00
KV: row-04/colfam1:col-00/0/Put/vlen=9, Value: val-04.00
KV: row-06/colfam1:col-00/0/Put/vlen=9, Value: val-06.00
KV: row-07/colfam1:col-00/0/Put/vlen=9, Value: val-07.00
KV: row-08/colfam1:col-00/0/Put/vlen=9, Value: val-08.00
KV: row-09/colfam1:col-00/0/Put/vlen=9, Value: val-09.00
KV: row-10/colfam1:col-00/0/Put/vlen=9, Value: val-10.00
Total KeyValue count for scan #1: 9
Results of scan #2:
KV: row-01/colfam1:col-00/0/Put/vlen=9, Value: val-01.00
KV: row-02/colfam1:col-00/0/Put/vlen=9, Value: val-02.00
KV: row-03/colfam1:col-00/0/Put/vlen=9, Value: val-03.00
KV: row-04/colfam1:col-00/0/Put/vlen=9, Value: val-04.00
Total KeyValue count for scan #2: 4

The first scan used just the RowFilter to skip one out of 10 rows; the rest is returned to the client. Adding the WhileMatchFilter for the second scan shows its behavior to stop the entire scan operation, once the wrapped filter omits a row or column. In the example this is row-05, triggering the end of the scan.
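The contrast between skipping a single row and ending the scan can be modeled with a plain predicate over sorted row keys. This is a sketch of the semantics only; the class, the helper methods, and the use of java.util.function.Predicate are illustrative choices, not part of the HBase API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Predicate;

final class WhileMatchSketch {

  // Hypothetical helper: builds padded row keys row-01, row-02, ...
  static List<String> rows(int count) {
    List<String> rows = new ArrayList<String>();
    for (int i = 1; i <= count; i++) {
      rows.add(String.format("row-%02d", i));
    }
    return rows;
  }

  // Plain filtering: a non-matching row is skipped, the scan continues.
  static List<String> filterOnly(List<String> rows, Predicate<String> keep) {
    List<String> result = new ArrayList<String>();
    for (String row : rows) {
      if (keep.test(row)) {
        result.add(row);
      }
    }
    return result;
  }

  // While-match decoration: the first non-matching row ends the scan.
  static List<String> whileMatch(List<String> rows, Predicate<String> keep) {
    List<String> result = new ArrayList<String>();
    for (String row : rows) {
      if (!keep.test(row)) {
        break;
      }
      result.add(row);
    }
    return result;
  }

  public static void main(String[] args) {
    Predicate<String> notRow05 = row -> !row.equals("row-05");
    System.out.println(filterOnly(rows(10), notRow05));
    System.out.println(whileMatch(rows(10), notRow05));
  }
}
```

The first call returns nine rows and the second call returns four, matching the KeyValue counts in the console output above.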

FilterList

So far you have seen how filters, on their own or decorated, are doing the work of filtering out various dimensions of a table, ranging from rows, to columns, and all the way to versions of values within a column. In practice, though, you may want to have more than one filter being applied to reduce the data returned to your client application. This is what the FilterList is for.

Note

The FilterList class implements the same Filter interface, just like any other single-purpose filter. In doing so, it can be used as a drop-in replacement for those filters, while combining the effects of each included instance.

You can create an instance of FilterList while providing various parameters at instantiation time, using one of these constructors:

FilterList(List<Filter> rowFilters)
FilterList(Operator operator)
FilterList(Operator operator, List<Filter> rowFilters)

The rowFilters parameter specifies the list of filters that are assessed together, using an operator to combine their results. Table 4-3 lists the possible choices of operators.

The default is MUST_PASS_ALL, and can therefore be omitted from the constructor when you do not need a different one.

Adding filters, after the FilterList instance has been created, can be done with:

void addFilter(Filter filter)

You can only specify one operator per FilterList, but you are free to add other FilterList instances to an existing FilterList, thus creating a hierarchy of filters, combined with the operators you need.

You can further control the execution order of the included filters by carefully choosing the List implementation you require. For example, using ArrayList would guarantee that the filters are applied in the order they were added to the list. This is shown in Example 4-14.

List<Filter> filters = new ArrayList<Filter>();

Filter filter1 = new RowFilter(CompareFilter.CompareOp.GREATER_OR_EQUAL,
  new BinaryComparator(Bytes.toBytes("row-03")));
filters.add(filter1);

Filter filter2 = new RowFilter(CompareFilter.CompareOp.LESS_OR_EQUAL,
  new BinaryComparator(Bytes.toBytes("row-06")));
filters.add(filter2);

Filter filter3 = new QualifierFilter(CompareFilter.CompareOp.EQUAL,
  new RegexStringComparator("col-0[03]"));
filters.add(filter3);

FilterList filterList1 = new FilterList(filters);

Scan scan = new Scan();
scan.setFilter(filterList1);
ResultScanner scanner1 = table.getScanner(scan);
for (Result result : scanner1) {
  for (KeyValue kv : result.raw()) {
    System.out.println("KV: " + kv + ", Value: " +
      Bytes.toString(kv.getValue()));
  }
}
scanner1.close();

FilterList filterList2 = new FilterList(
  FilterList.Operator.MUST_PASS_ONE, filters);

scan.setFilter(filterList2);
ResultScanner scanner2 = table.getScanner(scan);
for (Result result : scanner2) {
  for (KeyValue kv : result.raw()) {
    System.out.println("KV: " + kv + ", Value: " +
      Bytes.toString(kv.getValue()));
  }
}
scanner2.close();

The first scan filters out a lot of details, as at least one of the filters in the list excludes some information. Only where they all let the information pass is it returned to the client.

In contrast, the second scan includes all rows and columns in the result. This is caused by setting the FilterList operator to MUST_PASS_ONE, which includes all the information as soon as a single filter lets it pass. And in this scenario, all values are passed by at least one of them, including everything.
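Why MUST_PASS_ONE lets everything through here can be checked with a small model of the three conditions. The sketch below is plain Java with hypothetical names; it assumes a table of 10 rows (row-01 to row-10) with 10 columns each (col-00 to col-09), and it assumes the regular expression is matched anywhere in the qualifier.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiPredicate;
import java.util.regex.Pattern;

final class FilterListSketch {

  enum Operator { MUST_PASS_ALL, MUST_PASS_ONE }

  // Decides whether one cell, identified by row key and qualifier, passes.
  static boolean passes(Operator op, List<BiPredicate<String, String>> filters,
      String row, String qualifier) {
    for (BiPredicate<String, String> filter : filters) {
      boolean ok = filter.test(row, qualifier);
      if (op == Operator.MUST_PASS_ALL && !ok) {
        return false; // one failing filter is enough to exclude the cell
      }
      if (op == Operator.MUST_PASS_ONE && ok) {
        return true; // one passing filter is enough to include the cell
      }
    }
    return op == Operator.MUST_PASS_ALL;
  }

  // The three conditions from the example, modeled as predicates.
  static List<BiPredicate<String, String>> exampleFilters() {
    Pattern pattern = Pattern.compile("col-0[03]");
    List<BiPredicate<String, String>> filters =
      new ArrayList<BiPredicate<String, String>>();
    filters.add((row, qualifier) -> row.compareTo("row-03") >= 0);
    filters.add((row, qualifier) -> row.compareTo("row-06") <= 0);
    filters.add((row, qualifier) -> pattern.matcher(qualifier).find());
    return filters;
  }

  // Counts the passing cells in an assumed grid of rows and columns.
  static int countPassing(Operator op, int rows, int cols) {
    List<BiPredicate<String, String>> filters = exampleFilters();
    int count = 0;
    for (int r = 1; r <= rows; r++) {
      for (int c = 0; c < cols; c++) {
        String row = String.format("row-%02d", r);
        String qualifier = String.format("col-%02d", c);
        if (passes(op, filters, row, qualifier)) {
          count++;
        }
      }
    }
    return count;
  }

  public static void main(String[] args) {
    System.out.println(countPassing(Operator.MUST_PASS_ALL, 10, 10));
    System.out.println(countPassing(Operator.MUST_PASS_ONE, 10, 10));
  }
}
```

Every row key is either at least row-03 or at most row-06, so one of the two row conditions always holds and the second operator includes every cell of the assumed grid.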

Custom Filters

Eventually, you may exhaust the list of supplied filter types and need to implement your own. This can be done by either implementing the Filter interface, or extending the provided FilterBase class. The latter provides default implementations for all methods that are members of the interface.

The Filter interface has the following structure:

public interface Filter extends Writable {
  public enum ReturnCode {
    INCLUDE, SKIP, NEXT_COL, NEXT_ROW, SEEK_NEXT_USING_HINT
  }
  public void reset();
  public boolean filterRowKey(byte[] buffer, int offset, int length);
  public boolean filterAllRemaining();
  public ReturnCode filterKeyValue(KeyValue v);
  public void filterRow(List<KeyValue> kvs);
  public boolean hasFilterRow();
  public boolean filterRow();
  public KeyValue getNextKeyHint(KeyValue currentKV);
}

The interface provides a public enumeration type, named ReturnCode, that is used by the filterKeyValue() method to indicate what the execution framework should do next. Instead of blindly iterating over all values, the filter has the ability to skip a value, the remainder of a column, or the rest of the entire row. This helps tremendously in terms of improving performance while retrieving data.

Note

The servers may still need to scan the entire row to find matching data, but the optimizations provided by the filterKeyValue() return code can reduce the work required to do so.

Table 4-4 lists the possible values and their meaning.

| Return code | Description |
| --- | --- |
| INCLUDE | Include the given KeyValue instance in the result. |
| SKIP | Skip the current KeyValue and proceed to the next. |
| NEXT_COL | Skip the remainder of the current column, proceeding to the next. This is used by the TimestampsFilter, for example. |
| NEXT_ROW | Similar to the previous, but skips the remainder of the current row, moving to the next. The RowFilter makes use of this return code, for example. |
| SEEK_NEXT_USING_HINT | Some filters want to skip a variable number of values and use this return code to indicate that the framework should use the getNextKeyHint() method to determine where to skip to. The ColumnPrefixFilter, for example, uses this feature. |

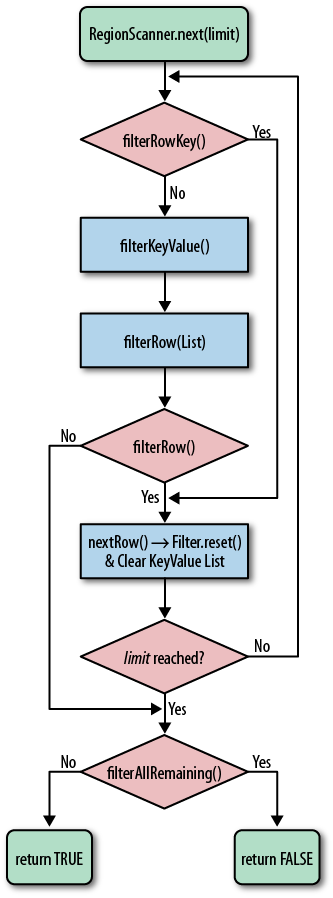

Most of the provided methods are called at various stages in the process of retrieving a row for a client—for example, during a scan operation. Putting them in call order, you can expect them to be executed in the following sequence:

filterRowKey(byte[] buffer, int offset, int length)

The next check is against the row key, using this method of the Filter implementation. You can use it to skip an entire row from being further processed. The RowFilter uses it to suppress entire rows being returned to the client.

filterKeyValue(KeyValue v)

When a row is not filtered (yet), the framework proceeds to invoke this method for every KeyValue that is part of the current row. The ReturnCode indicates what should happen with the current value.

filterRow(List<KeyValue> kvs)

Once all row and value checks have been performed, this method of the filter is called, giving you access to the list of KeyValue instances that have been included by the previous filter methods. The DependentColumnFilter uses it to drop those columns that do not match the reference column.

filterRow()

After everything else was checked and invoked, the final inspection is performed using filterRow(). A filter that uses this functionality is the PageFilter, checking if the number of rows to be returned for one iteration in the pagination process is reached, returning true afterward. The default false would include the current row in the result.

reset()

This resets the filter for every new row the scan is iterating over. It is called by the server, after a row is read, implicitly. This applies to get and scan operations, although obviously it has no effect for the former, as gets only read a single row.

filterAllRemaining()

This method can be used to stop the scan, by returning true. It is used by filters to provide the early out optimizations mentioned earlier. If a filter returns false, the scan is continued, and the aforementioned methods are called. Obviously, this also implies that for get operations this call is not useful.

Figure 4-2 shows the logical flow of the filter methods for a single row. There is a more fine-grained process to apply the filters on a column level, which is not relevant in this context.
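The per-row call sequence can be imitated with a tiny driver loop. The sketch below is a deliberately simplified stand-in, not the server-side code: the interface, its String-based signatures, and the sample data are hypothetical, and the list-based filterRow(List) step is left out.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

final class FilterFlowSketch {

  // Hypothetical, simplified stand-in for the row-level filter methods.
  interface RowFilterSketch {
    boolean filterRowKey(String rowKey); // true = skip the whole row
    boolean filterValue(String value);   // true = skip this value
    boolean filterRow();                 // true = drop the row at the end
    void reset();                        // called after every row
    boolean filterAllRemaining();        // true = stop the scan
  }

  // Includes a row only if one of its values equals `wanted`.
  static final class ValueMatch implements RowFilterSketch {
    private final String wanted;
    private boolean dropRow = true;

    ValueMatch(String wanted) { this.wanted = wanted; }

    public boolean filterRowKey(String rowKey) { return false; }

    public boolean filterValue(String value) {
      if (value.equals(wanted)) {
        dropRow = false; // remember the match until the row is finished
      }
      return false;
    }

    public boolean filterRow() { return dropRow; }

    public void reset() { dropRow = true; }

    public boolean filterAllRemaining() { return false; }
  }

  // Drives the filter in the order described in the text and returns the
  // keys of the rows that were included.
  static List<String> scan(Map<String, List<String>> table,
      RowFilterSketch filter) {
    List<String> includedRows = new ArrayList<String>();
    for (Map.Entry<String, List<String>> row : table.entrySet()) {
      if (!filter.filterRowKey(row.getKey())) {
        List<String> kept = new ArrayList<String>();
        for (String value : row.getValue()) {
          if (!filter.filterValue(value)) {
            kept.add(value);
          }
        }
        if (!kept.isEmpty() && !filter.filterRow()) {
          includedRows.add(row.getKey());
        }
      }
      filter.reset();
      if (filter.filterAllRemaining()) {
        break;
      }
    }
    return includedRows;
  }

  // Hypothetical sample data, kept in insertion order.
  static Map<String, List<String>> sampleTable() {
    Map<String, List<String>> table = new LinkedHashMap<String, List<String>>();
    table.put("row-01", Arrays.asList("val-01.00", "val-01.01"));
    table.put("row-02", Arrays.asList("val-02.00", "val-02.07"));
    table.put("row-03", Arrays.asList("val-03.00", "val-03.01"));
    return table;
  }

  public static void main(String[] args) {
    System.out.println(scan(sampleTable(), new ValueMatch("val-02.07")));
  }
}
```

Without the reset() call after each row, the match found in row-02 would leak into row-03, which is why per-row state has to be cleared there.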

Example 4-15 implements a custom filter, using the methods provided by FilterBase, overriding only those methods that need to be changed.

The filter first assumes all rows should be filtered, that is, removed from the result. Only when there is a value in any column that matches the given reference does it include the row, so that it is sent back to the client.

public class CustomFilter extends FilterBase {
  private byte[] value = null;
  private boolean filterRow = true;

  public CustomFilter() {
    super();
  }

  // Set the value to compare against.
  public CustomFilter(byte[] value) {
    this.value = value;
  }

  // Reset the filter flag for each new row being tested.
  @Override
  public void reset() {
    this.filterRow = true;
  }

  // When there is a matching value, let the row pass.
  @Override
  public ReturnCode filterKeyValue(KeyValue kv) {
    if (Bytes.compareTo(value, kv.getValue()) == 0) {
      filterRow = false;
    }
    // Always include the KeyValue; the row-level decision is made later.
    return ReturnCode.INCLUDE;
  }

  // Return the flag that decides whether the row is dropped.
  @Override
  public boolean filterRow() {
    return filterRow;
  }

  // Write the reference value so that it can be sent to the servers.
  @Override
  public void write(DataOutput dataOutput) throws IOException {
    Bytes.writeByteArray(dataOutput, this.value);
  }

  // Read the reference value when the filter is instantiated on the server.
  @Override
  public void readFields(DataInput dataInput) throws IOException {
    this.value = Bytes.readByteArray(dataInput);
  }
}

Example 4-16 uses the new custom filter to find rows with specific values in it, also using a FilterList.

List<Filter> filters = new ArrayList<Filter>();

Filter filter1 = new CustomFilter(Bytes.toBytes("val-05.05"));
filters.add(filter1);

Filter filter2 = new CustomFilter(Bytes.toBytes("val-02.07"));
filters.add(filter2);

Filter filter3 = new CustomFilter(Bytes.toBytes("val-09.00"));
filters.add(filter3);

FilterList filterList = new FilterList(
  FilterList.Operator.MUST_PASS_ONE, filters);

Scan scan = new Scan();
scan.setFilter(filterList);
ResultScanner scanner = table.getScanner(scan);
for (Result result : scanner) {
  for (KeyValue kv : result.raw()) {
    System.out.println("KV: " + kv + ", Value: " +
      Bytes.toString(kv.getValue()));
  }
}
scanner.close();

Just as with the earlier examples, here is what should appear as output on the console when executing this example:

Adding rows to table...
Results of scan:
KV: row-02/colfam1:col-00/1301507323088/Put/vlen=9, Value: val-02.00
KV: row-02/colfam1:col-01/1301507323090/Put/vlen=9, Value: val-02.01
KV: row-02/colfam1:col-02/1301507323092/Put/vlen=9, Value: val-02.02
KV: row-02/colfam1:col-03/1301507323093/Put/vlen=9, Value: val-02.03
KV: row-02/colfam1:col-04/1301507323096/Put/vlen=9, Value: val-02.04
KV: row-02/colfam1:col-05/1301507323104/Put/vlen=9, Value: val-02.05
KV: row-02/colfam1:col-06/1301507323108/Put/vlen=9, Value: val-02.06
KV: row-02/colfam1:col-07/1301507323110/Put/vlen=9, Value: val-02.07
KV: row-02/colfam1:col-08/1301507323112/Put/vlen=9, Value: val-02.08
KV: row-02/colfam1:col-09/1301507323113/Put/vlen=9, Value: val-02.09
KV: row-05/colfam1:col-00/1301507323148/Put/vlen=9, Value: val-05.00
KV: row-05/colfam1:col-01/1301507323150/Put/vlen=9, Value: val-05.01
KV: row-05/colfam1:col-02/1301507323152/Put/vlen=9, Value: val-05.02
KV: row-05/colfam1:col-03/1301507323153/Put/vlen=9, Value: val-05.03
KV: row-05/colfam1:col-04/1301507323154/Put/vlen=9, Value: val-05.04
KV: row-05/colfam1:col-05/1301507323155/Put/vlen=9, Value: val-05.05
KV: row-05/colfam1:col-06/1301507323157/Put/vlen=9, Value: val-05.06
KV: row-05/colfam1:col-07/1301507323158/Put/vlen=9, Value: val-05.07
KV: row-05/colfam1:col-08/1301507323158/Put/vlen=9, Value: val-05.08
KV: row-05/colfam1:col-09/1301507323159/Put/vlen=9, Value: val-05.09
KV: row-09/colfam1:col-00/1301507323192/Put/vlen=9, Value: val-09.00
KV: row-09/colfam1:col-01/1301507323194/Put/vlen=9, Value: val-09.01
KV: row-09/colfam1:col-02/1301507323196/Put/vlen=9, Value: val-09.02
KV: row-09/colfam1:col-03/1301507323199/Put/vlen=9, Value: val-09.03
KV: row-09/colfam1:col-04/1301507323201/Put/vlen=9, Value: val-09.04
KV: row-09/colfam1:col-05/1301507323202/Put/vlen=9, Value: val-09.05
KV: row-09/colfam1:col-06/1301507323203/Put/vlen=9, Value: val-09.06
KV: row-09/colfam1:col-07/1301507323204/Put/vlen=9, Value: val-09.07
KV: row-09/colfam1:col-08/1301507323205/Put/vlen=9, Value: val-09.08
KV: row-09/colfam1:col-09/1301507323206/Put/vlen=9, Value: val-09.09

As expected, the entire row that has a column with the value matching one of the references is included in the result.

Filters Summary

Table 4-5 summarizes some of the features and compatibilities related to the provided filter implementations. The ✓ symbol means the feature is available, while ✗ indicates it is missing.

| Filter | Batch[a] | Skip[b] | While-Match[c] | List[d] | Early Out[e] | Gets[f] | Scans[g] |
| --- | --- | --- | --- | --- | --- | --- | --- |
| RowFilter | ✓ | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ |
| FamilyFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| QualifierFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| ValueFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| DependentColumnFilter | ✗ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| SingleColumnValueFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ | ✓ |
| SingleColumnValueExcludeFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ | ✓ |
| PrefixFilter | ✓ | ✗ | ✓ | ✓ | ✓ | ✗ | ✓ |
| PageFilter | ✓ | ✗ | ✓ | ✓ | ✓ | ✗ | ✓ |
| KeyOnlyFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| FirstKeyOnlyFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| InclusiveStopFilter | ✓ | ✗ | ✓ | ✓ | ✓ | ✗ | ✓ |
| TimestampsFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| ColumnCountGetFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✗ |
| ColumnPaginationFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| ColumnPrefixFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✓ | ✓ |
| RandomRowFilter | ✓ | ✓ | ✓ | ✓ | ✗ | ✗ | ✓ |
| SkipFilter | ✓ | ✓/✗[h] | ✓/✗[h] | ✓ | ✗ | ✗ | ✓ |
| WhileMatchFilter | ✓ | ✓/✗[h] | ✓/✗[h] | ✓ | ✓ | ✗ | ✓ |
| FilterList | ✓/✗[h] | ✓/✗[h] | ✓/✗[h] | ✓ | ✓/✗[h] | ✓ | ✓ |

[a] Filter supports
[b] Filter can be used with the decorating
[c] Filter can be used with the decorating
[d] Filter can be used with the combining
[e] Filter has optimizations to stop a scan early, once there are no more matching rows ahead.
[f] Filter can be usefully applied to
[g] Filter can be usefully applied to
[h] Depends on the included filters.

Counters

In addition to the functionality we already discussed, HBase offers another advanced feature: counters. Many applications that collect statistics, such as clicks or views in online advertising, used to collect the data in logfiles that would subsequently be analyzed. Using counters offers the potential of switching to live accounting, forgoing the delayed batch processing step completely.

Introduction to Counters

In addition to the check-and-modify operations you saw earlier, HBase also has a mechanism to treat columns as counters. Otherwise, you would have to lock a row, read the value, increment it, write it back, and eventually unlock the row for other writers to be able to access it subsequently. This can cause a lot of contention, and in the event of a client process crashing, it could leave the row locked until the lease recovery kicks in, which could be disastrous in a heavily loaded system.

The client API provides specialized methods to do the read-and-modify operation atomically in a single client-side call. Earlier versions of HBase only had calls that would involve an RPC for every counter update, while newer versions started to add the same mechanisms used by the CRUD operations—as explained in CRUD Operations—which can bundle multiple counter updates in a single RPC.

Note

While you can update multiple counters, you are still limited to single rows. Updating counters in multiple rows would require separate API calls, and therefore separate RPC calls. The batch() calls currently do not support the Increment instance, though this should change in the near future.

Before we discuss each type separately, you need to have a few more details regarding how counters work on the column level. Here is an example using the shell that creates a table, increments a counter twice, and then queries the current value:

hbase(main):001:0> create 'counters', 'daily', 'weekly', 'monthly'
0 row(s) in 1.1930 seconds

hbase(main):002:0> incr 'counters', '20110101', 'daily:hits', 1
COUNTER VALUE = 1

hbase(main):003:0> incr 'counters', '20110101', 'daily:hits', 1
COUNTER VALUE = 2

hbase(main):004:0> get_counter 'counters', '20110101', 'daily:hits'
COUNTER VALUE = 2

Every call to incr returns the new value of the counter. The final check using get_counter shows the current value as expected.

Note

The format of the shell’s incr command is as follows:

incr '<table>', '<row>', '<column>', [<increment-value>]

You can also access the counter with a get call, giving you this result:

hbase(main):005:0> get 'counters', '20110101'
COLUMN                CELL
 daily:hits           timestamp=1301570823471, value=\x00\x00\x00\x00\x00\x00\x00\x02
1 row(s) in 0.0600 seconds

This is obviously not very readable, but it shows that a counter is simply a column, like any other. You can also specify a larger increment value:

hbase(main):006:0> incr 'counters', '20110101', 'daily:hits', 20
COUNTER VALUE = 22

hbase(main):007:0> get 'counters', '20110101'
COLUMN                CELL
 daily:hits           timestamp=1301574412848, value=\x00\x00\x00\x00\x00\x00\x00\x16
1 row(s) in 0.0400 seconds

hbase(main):008:0> get_counter 'counters', '20110101', 'daily:hits'
COUNTER VALUE = 22

Accessing the counter directly gives you the byte array representation, with the shell printing the separate bytes as hexadecimal values. Using get_counter once again shows the current value in a more human-readable format, and confirms that variable increments are possible and work as expected.
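The byte array shown by the shell is consistent with an 8-byte, big-endian encoding of a long: the last byte \x16 is hexadecimal for 22. The following sketch reproduces that encoding with java.nio.ByteBuffer; it is an illustration with hypothetical names, not the code HBase uses, and it prints every byte in hexadecimal, which is a simplification of the shell's formatting.

```java
import java.nio.ByteBuffer;

final class CounterBytesSketch {

  // Encodes a long as 8 bytes, most significant byte first
  // (big-endian is the ByteBuffer default).
  static byte[] encode(long value) {
    return ByteBuffer.allocate(8).putLong(value).array();
  }

  // Decodes 8 big-endian bytes back into a long; throws a
  // BufferUnderflowException if fewer than 8 bytes are given.
  static long decode(byte[] bytes) {
    return ByteBuffer.wrap(bytes).getLong();
  }

  // Renders every byte as \xNN, similar to the shell output above.
  static String shellStyle(byte[] bytes) {
    StringBuilder sb = new StringBuilder();
    for (byte b : bytes) {
      sb.append(String.format("\\x%02X", b & 0xFF));
    }
    return sb.toString();
  }

  public static void main(String[] args) {
    System.out.println(shellStyle(encode(22L)));
    System.out.println(decode(new byte[] {0, 0, 0, 0, 0, 0, 0, 0x16}));
  }
}
```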

Finally, you can use the increment value of the incr call to not only increase the counter, but also retrieve the current value, and decrease it as well. In fact, you can omit it completely and the default of 1 is assumed:

hbase(main):004:0> incr 'counters', '20110101', 'daily:hits'
COUNTER VALUE = 3

hbase(main):005:0> incr 'counters', '20110101', 'daily:hits'
COUNTER VALUE = 4

hbase(main):006:0> incr 'counters', '20110101', 'daily:hits', 0
COUNTER VALUE = 4

hbase(main):007:0> incr 'counters', '20110101', 'daily:hits', -1
COUNTER VALUE = 3

hbase(main):008:0> incr 'counters', '20110101', 'daily:hits', -1
COUNTER VALUE = 2

Using the increment value (the last parameter of the incr command), you can achieve the behavior shown in Table 4-6.

| Value | Effect |
| --- | --- |
| greater than zero | Increase the counter by the given value. |
| zero | Retrieve the current value of the counter. Same as using the get_counter shell command. |
| less than zero | Decrease the counter by the given value. |

Obviously, using the shell’s incr command only allows you to increase a single counter. You can do the same using the client API, described next.

Single Counters

The first type of increment call is for single counters only: you need to specify the exact column you want to use. The methods, provided by HTable, are as follows:

long incrementColumnValue(byte[] row, byte[] family, byte[] qualifier,
  long amount) throws IOException
long incrementColumnValue(byte[] row, byte[] family, byte[] qualifier,
  long amount, boolean writeToWAL) throws IOException

Given the coordinates of a column, and the increment amount, these methods only differ by the optional writeToWAL parameter, which works the same way as the Put.setWriteToWAL() method.

Omitting writeToWAL uses the default value of true, meaning the write-ahead log is active.

Apart from that, you can use them easily, as shown in Example 4-17.

HTable table = new HTable(conf, "counters");

// Increase the counter by one.
long cnt1 = table.incrementColumnValue(Bytes.toBytes("20110101"),
  Bytes.toBytes("daily"), Bytes.toBytes("hits"), 1);
// Increase the counter by one a second time.
long cnt2 = table.incrementColumnValue(Bytes.toBytes("20110101"),
  Bytes.toBytes("daily"), Bytes.toBytes("hits"), 1);

// Get the current value of the counter without increasing it.
long current = table.incrementColumnValue(Bytes.toBytes("20110101"),
  Bytes.toBytes("daily"), Bytes.toBytes("hits"), 0);

// Decrease the counter by one.
long cnt3 = table.incrementColumnValue(Bytes.toBytes("20110101"),
  Bytes.toBytes("daily"), Bytes.toBytes("hits"), -1);

The output on the console is:

cnt1: 1, cnt2: 2, current: 2, cnt3: 1

Just as with the shell commands used earlier, the API calls have the same effect: they increment the counter when given a positive value, retrieve the current value when given zero, and decrease the counter when given a negative value.
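These semantics can be tried out without a running cluster. The following is a minimal in-memory sketch, not HBase code: the class CounterModel is a hypothetical stand-in for a counter table, its method takes plain strings instead of byte arrays, and a ConcurrentHashMap replaces the region server. It only illustrates how positive, zero, and negative amounts behave.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

/** Hypothetical in-memory stand-in for a counter table; not part of HBase. */
public class CounterModel {
  // Maps "row/family:qualifier" to the current counter value.
  private final ConcurrentMap<String, Long> cells = new ConcurrentHashMap<>();

  /**
   * Atomically adds amount to the addressed counter and returns the new value.
   * A missing counter is treated as zero, so an amount of 0 simply reads it.
   */
  public long incrementColumnValue(String row, String family,
      String qualifier, long amount) {
    String key = row + "/" + family + ":" + qualifier;
    return cells.merge(key, amount, Long::sum);
  }

  public static void main(String[] args) {
    CounterModel table = new CounterModel();
    long cnt1 = table.incrementColumnValue("20110101", "daily", "hits", 1);
    long cnt2 = table.incrementColumnValue("20110101", "daily", "hits", 1);
    long current = table.incrementColumnValue("20110101", "daily", "hits", 0);
    long cnt3 = table.incrementColumnValue("20110101", "daily", "hits", -1);
    System.out.println("cnt1: " + cnt1 + ", cnt2: " + cnt2
        + ", current: " + current + ", cnt3: " + cnt3);
  }
}
```

Running the sketch prints the same four values as the HBase example: cnt1: 1, cnt2: 2, current: 2, cnt3: 1.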

Multiple Counters

Another way to increment counters is provided by the increment() call of HTable. It works similarly to the CRUD-type

operations discussed earlier, using the following method to do the

increment:

Result increment(Increment increment) throws IOException

You must create an instance of the Increment class and fill it with the

appropriate details—for example,

the counter coordinates. The constructors provided by this class

are:

Increment()

Increment(byte[] row)

Increment(byte[] row, RowLock rowLock)

You must provide a row key when instantiating an Increment, which sets the row containing all the counters that the subsequent call to increment() should modify.

The optional parameter rowLock specifies a custom row lock instance,

allowing you to run the entire operation under your exclusive

control—for example, when you want to modify the same row a few times

while protecting it against updates from other writers.

Warning

While you can guard the increment operation against other writers, you currently cannot do this for readers. In fact, there is no atomicity guarantee made for readers.

Since readers are not taking out locks on rows that are incremented, it may happen that they have access to some counters—within one row—that are already updated, and some that are not! This applies to scan and get operations equally.

Once you have decided which row to update and created the

Increment instance, you need to add

the actual counters—meaning columns—you want to increment, using this

method:

Increment addColumn(byte[] family, byte[] qualifier, long amount)

The difference here, as compared to the

Put methods, is that there is no

option to specify a version—or timestamp—when dealing with increments:

versions are handled implicitly. Furthermore, there is no addFamily() equivalent, because counters are

specific columns, and they need to be specified as such. It therefore

makes no sense to add a column family alone.

A special feature of the Increment class is the ability to take an

optional time range:

Increment setTimeRange(long minStamp, long maxStamp) throws IOException

Setting a time range for a set of counter

increments seems odd in light of the fact that versions are handled

implicitly. The time range is actually passed on to the servers to

restrict the internal get operation from retrieving the current counter

values. You can use it to expire counters, for

example, to partition them by time: when you set the time range to be

restrictive enough, you can mask out older counters from the internal

get, making them look like they are nonexistent. An increment would

assume they are unset and start at 1

again.
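The masking effect of a time range can be illustrated with a small model. This is a sketch under stated assumptions, not the server implementation: TimeRangedCounter is a hypothetical class, the current time is passed in explicitly so the behavior is deterministic, and the range is treated as inclusive of minStamp and exclusive of maxStamp.

```java
/** Hypothetical model of one counter cell with a timestamp; not HBase code. */
public class TimeRangedCounter {
  private long value;
  private long timestamp = -1; // -1 means nothing has been written yet

  /**
   * Increments the counter, but only "sees" the stored value when its
   * timestamp lies in [minStamp, maxStamp). A value outside that range is
   * masked, so the counter looks unset and restarts from the given amount.
   */
  public long increment(long amount, long minStamp, long maxStamp, long now) {
    boolean visible = timestamp >= minStamp && timestamp < maxStamp;
    long base = visible ? value : 0L;
    value = base + amount;
    timestamp = now;
    return value;
  }

  public static void main(String[] args) {
    TimeRangedCounter hits = new TimeRangedCounter();
    // Wide range: earlier values stay visible and keep accumulating.
    System.out.println(hits.increment(1, 0, Long.MAX_VALUE, 100));
    System.out.println(hits.increment(1, 0, Long.MAX_VALUE, 200));
    // Restrictive range: the value written at time 200 is masked out.
    System.out.println(hits.increment(1, 1000, Long.MAX_VALUE, 1100));
  }
}
```

The sketch prints 1, 2, and then 1 again, because the third call cannot see the value stored at timestamp 200 and therefore starts over.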

The Increment class provides additional methods,

which are summarized in Table 4-7.

Similar to the shell example shown earlier, Example 4-18 uses various increment values to increment, retrieve, and decrement the given counters.

Increment increment1 = new Increment(Bytes.toBytes("20110101"));

increment1.addColumn(Bytes.toBytes("daily"), Bytes.toBytes("clicks"), 1);
increment1.addColumn(Bytes.toBytes("daily"), Bytes.toBytes("hits"), 1);
increment1.addColumn(Bytes.toBytes("weekly"), Bytes.toBytes("clicks"), 10);
increment1.addColumn(Bytes.toBytes("weekly"), Bytes.toBytes("hits"), 10);

Result result1 = table.increment(increment1);

for (KeyValue kv : result1.raw()) {
  System.out.println("KV: " + kv +
    " Value: " + Bytes.toLong(kv.getValue()));
}

Increment increment2 = new Increment(Bytes.toBytes("20110101"));

increment2.addColumn(Bytes.toBytes("daily"), Bytes.toBytes("clicks"), 5);
increment2.addColumn(Bytes.toBytes("daily"), Bytes.toBytes("hits"), 1);
increment2.addColumn(Bytes.toBytes("weekly"), Bytes.toBytes("clicks"), 0);
increment2.addColumn(Bytes.toBytes("weekly"), Bytes.toBytes("hits"), -5);

Result result2 = table.increment(increment2);

for (KeyValue kv : result2.raw()) {
  System.out.println("KV: " + kv +
    " Value: " + Bytes.toLong(kv.getValue()));
}

When you run the example, the following is output on the console:

KV: 20110101/daily:clicks/1301948275827/Put/vlen=8 Value: 1
KV: 20110101/daily:hits/1301948275827/Put/vlen=8 Value: 1
KV: 20110101/weekly:clicks/1301948275827/Put/vlen=8 Value: 10
KV: 20110101/weekly:hits/1301948275827/Put/vlen=8 Value: 10
KV: 20110101/daily:clicks/1301948275829/Put/vlen=8 Value: 6
KV: 20110101/daily:hits/1301948275829/Put/vlen=8 Value: 2
KV: 20110101/weekly:clicks/1301948275829/Put/vlen=8 Value: 10
KV: 20110101/weekly:hits/1301948275829/Put/vlen=8 Value: 5

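When you compare the two sets of increment results, you will notice that this works as expected: daily:clicks rises from 1 to 6, daily:hits from 1 to 2, weekly:clicks stays at 10 because its increment is zero, and weekly:hits drops from 10 to 5.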
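The arithmetic behind the console output can be reproduced with a small model of a single row. This is only a sketch: RowCounters and its helper amounts() are hypothetical names, column names are plain strings, and a synchronized method stands in for the row-level protection against concurrent writers.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical model of one row holding several counters; not HBase code. */
public class RowCounters {
  // Sorted by column name, like the KeyValue instances in the output above.
  private final Map<String, Long> columns = new TreeMap<>();

  /** Applies all increments under one lock and returns the new values. */
  public synchronized Map<String, Long> increment(Map<String, Long> amounts) {
    Map<String, Long> updated = new TreeMap<>();
    for (Map.Entry<String, Long> e : amounts.entrySet()) {
      updated.put(e.getKey(),
          columns.merge(e.getKey(), e.getValue(), Long::sum));
    }
    return updated;
  }

  /** Builds the four increments used in the example. */
  public static Map<String, Long> amounts(long dailyClicks, long dailyHits,
      long weeklyClicks, long weeklyHits) {
    Map<String, Long> m = new LinkedHashMap<>();
    m.put("daily:clicks", dailyClicks);
    m.put("daily:hits", dailyHits);
    m.put("weekly:clicks", weeklyClicks);
    m.put("weekly:hits", weeklyHits);
    return m;
  }

  public static void main(String[] args) {
    RowCounters row = new RowCounters();
    System.out.println(row.increment(amounts(1, 1, 10, 10)));
    System.out.println(row.increment(amounts(5, 1, 0, -5)));
  }
}
```

The second call returns 6, 2, 10, and 5 for the four columns, matching the values printed by the HBase example.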

Coprocessors

Earlier we discussed how you can use filters to reduce the amount of data being sent over the network from the servers to the client. With the coprocessor feature in HBase, you can even move part of the computation to where the data lives.

Introduction to Coprocessors

Using the client API, combined with specific selector mechanisms, such as filters, or column family scoping, it is possible to limit what data is transferred to the client. It would be good, though, to take this further and, for example, perform certain operations directly on the server side while only returning a small result set. Think of this as a small MapReduce framework that distributes work across the entire cluster.

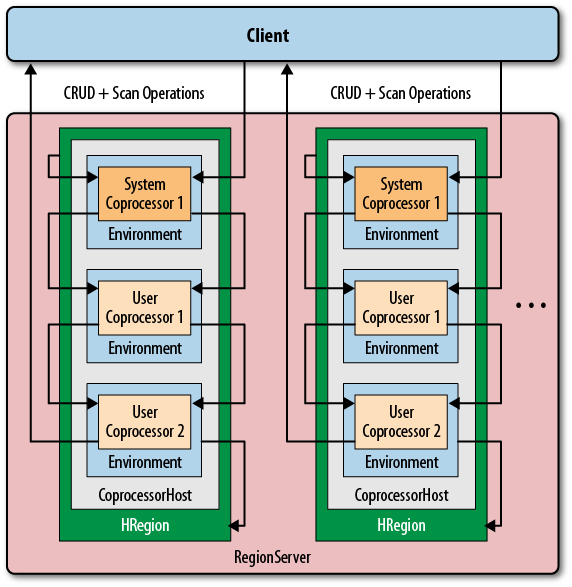

A coprocessor enables you to run arbitrary code directly on each region server. More precisely, it executes the code on a per-region basis, giving you trigger-like functionality—similar to stored procedures in the RDBMS world. From the client side, you do not have to take specific actions, as the framework handles the distributed nature transparently.

There is a set of implicit events that you can use to hook into, performing auxiliary tasks. If this is not enough, you can also extend the RPC protocol to introduce your own set of calls, which are invoked from your client and executed on the server on your behalf.

Just as with the custom filters (see Custom Filters), you need to create special Java classes that implement specific interfaces. Once they are compiled, you make these classes available to the servers in the form of a JAR file. The region server process can instantiate these classes and execute them in the correct environment. In contrast to the filters, though, coprocessors can be loaded dynamically as well. This allows you to extend the functionality of a running HBase cluster.

Use cases for coprocessors include hooking into row mutation operations to maintain secondary indexes, or implementing some kind of referential integrity. Filters could be enhanced to become stateful, and therefore make decisions across row boundaries. Aggregate functions, such as sum() or avg(), known from RDBMSes and SQL, could be moved to the servers to scan the data locally and return only a single number across the network.
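The idea of a server-side aggregate can be sketched in plain Java. Nothing here uses the HBase API: RegionSumSketch, Region, localSum(), and clusterSum() are hypothetical names, and each Region object merely stands in for the data hosted by one region. The point is that each region returns a single number, and the client only combines those partial results.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical illustration of a per-region aggregate; not HBase code. */
public class RegionSumSketch {

  /** Stands in for one region and the values it hosts. */
  public static final class Region {
    private final long[] values;

    public Region(long... values) {
      this.values = values;
    }

    /** Runs "on the server": scans local data and returns one number. */
    public long localSum() {
      long sum = 0;
      for (long v : values) {
        sum += v;
      }
      return sum;
    }
  }

  /** Runs "on the client": combines one partial result per region. */
  public static long clusterSum(List<Region> regions) {
    long total = 0;
    for (Region r : regions) {
      total += r.localSum();
    }
    return total;
  }

  public static void main(String[] args) {
    List<Region> regions = Arrays.asList(
        new Region(1, 2, 3), new Region(10, 20), new Region());
    System.out.println(clusterSum(regions));
  }
}
```

With the three sample regions, only three partial sums would cross the network instead of five individual values, and the program prints 36.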

Note

Another good use case for coprocessors is access control. The authentication, authorization, and auditing features added in HBase version 0.92 are based on coprocessors. They are loaded at system startup and use the provided trigger-like hooks to check if a user is authenticated, and authorized to access specific values stored in tables.

The framework already provides classes, based on the coprocessor framework, which you can extend when implementing your own functionality. They fall into two main groups: observer and endpoint. Here is a brief overview of their purpose:

- Observer

This type of coprocessor is comparable to triggers: callback functions (also referred to here as hooks) are executed when certain events occur. This includes user-generated, but also server-internal, automated events.

The interfaces provided by the coprocessor framework are:

Observers provide you with well-defined event callbacks, for every operation a cluster server may handle.

- Endpoint

Next to event handling there is also a need to add custom operations to a cluster. User code can be deployed to the servers hosting the data to, for example, perform server-local computations.

Endpoints are dynamic extensions to the RPC protocol, adding callable remote procedures. Think of them as stored procedures, as known from RDBMSes. They may be combined with observer implementations to directly interact with the server-side state.

All of these interfaces are based on the Coprocessor interface to gain common features,

but then implement their own specific functionality.

Finally, coprocessors can be chained, very similar to what the Java Servlet API does with request filters. The following section discusses the various types available in the coprocessor framework.
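To make the notion of chained, trigger-like hooks more concrete, here is a deliberately simplified sketch in plain Java. All names (ObserverChainSketch, PutObserver, prePut(), Region) are hypothetical and chosen for illustration only, and the boolean veto is a simplification rather than the mechanism the coprocessor framework uses. It shows two observers called in order before a write: one acting as an access check, the other maintaining a secondary index.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical illustration of chained hooks; not the HBase API. */
public class ObserverChainSketch {

  /** Hypothetical hook interface; returning false vetoes the write. */
  public interface PutObserver {
    boolean prePut(String row, String value);
  }

  /** Stands in for a region that calls every registered observer in order. */
  public static final class Region {
    private final List<PutObserver> observers = new ArrayList<>();
    private final Map<String, String> data = new HashMap<>();

    public void register(PutObserver observer) {
      observers.add(observer);
    }

    public boolean put(String row, String value) {
      for (PutObserver o : observers) {
        if (!o.prePut(row, value)) {
          return false; // the chain stops at the first veto
        }
      }
      data.put(row, value);
      return true;
    }

    public String get(String row) {
      return data.get(row);
    }
  }

  public static void main(String[] args) {
    Region region = new Region();
    Map<String, String> index = new HashMap<>();
    // First observer: a toy access check that rejects "secret" rows.
    region.register((row, value) -> !row.startsWith("secret"));
    // Second observer: maintains a value-to-row secondary index.
    region.register((row, value) -> {
      index.put(value, row);
      return true;
    });
    System.out.println(region.put("row1", "alice"));
    System.out.println(region.put("secret1", "bob"));
    System.out.println(index);
  }
}
```

Because the access check runs first and vetoes the second write, the index observer never sees it, so the index ends up with a single entry for alice.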

The Coprocessor Class

All coprocessor classes

must be based on this interface. It defines the

basic contract of a coprocessor and facilitates the management by the