Chapter 4. Multi-Touch Events and Geometry

In Chapter 3, you were introduced to some of the iPhone’s basic user interface elements. Many objects support high-level events to notify the application of certain actions taken by the user. These actions rely on lower-level touch events provided by the UIView class and a base class underneath it: UIResponder. The UIResponder class provides methods to recognize and handle the touch events that occur when the user taps or drags on the iPhone’s screen. Higher-level objects, such as tables and action sheets, take these low-level events and wrap them into even higher-level ones to handle button clicks, swipes, row selections, and other types of behavior. Apple has provided a multi-touch API capable of intercepting finger gestures so that you can make the same use of finger movements in your own UI. The touches API tells the application exactly what has occurred on the screen and provides the information the application needs to interact with the user.

Introduction to Geometric Structures

Before diving into events management, you’ll need a basic understanding of some geometric structures commonly used on the iPhone. You’ve already seen some of these in Chapter 3. The Core Graphics framework provides many general structures to handle graphics-related functions. Among these structures are points, window sizes, and window regions. Core Graphics also provides many C-based functions for creating and comparing these structures.

CGPoint

A CGPoint is the simplest Core Graphics structure, and contains two floating-point values corresponding to horizontal (x) and vertical (y) coordinates on a display. To create a CGPoint, use the CGPointMake function:

CGPoint point = CGPointMake (320.0, 480.0);

The first value represents x, the horizontal pixel value, and the second represents y, the vertical pixel value. You can also access these values directly:

float x = point.x;
float y = point.y;

The iPhone’s display resolution is 320×480 pixels. The upper-left corner of the screen is referenced at 0×0, and the lower-right at 319×479 (pixel offsets are zero-indexed).

Being a general-purpose structure, a CGPoint can refer equally well to a coordinate on the screen or within a window. For example, if a window is drawn at 0×240 (halfway down the screen), a CGPoint with values (0, 0) could address either the upper-left corner of the screen or the upper-left corner of the window (0×240). Which one it means is determined by the context where the structure is being used in the program.

You can compare two CGPoint structures using the CGPointEqualToPoint function:

BOOL isEqual = CGPointEqualToPoint(point1, point2);

CGSize

A CGSize structure represents the size of a rectangle. It encapsulates the width and height of an object and is primarily found in the iPhone APIs to dictate the size of screen objects, namely windows. To create a CGSize structure, use CGSizeMake:

CGSize size = CGSizeMake(320.0, 480.0);

The values provided to CGSizeMake indicate the width and height of the element being described. You can directly access these values through the structure’s width and height fields:

float width = size.width;
float height = size.height;

You can compare two CGSize structures using the CGSizeEqualToSize function:

BOOL isEqual = CGSizeEqualToSize(size1, size2);

CGRect

The CGRect structure combines both a CGPoint and CGSize structure to describe the frame of a window on the screen. The frame includes an origin, which represents the location of the upper-left corner of the window, and the size of the window. To create a CGRect, use the CGRectMake function:

CGRect rect = CGRectMake(0.0, 200.0, 320.0, 240.0);

This example describes a 320×240 window whose upper-left corner is located at coordinates 0×200. As with the CGPoint structure, these coordinates could reference a point on the screen itself or offsets within an existing window; it depends on where and how the CGRect structure is used.

You can also access the components of the CGRect structure directly:

CGPoint windowOrigin = rect.origin;
float x = rect.origin.x;
float y = rect.origin.y;
CGSize windowSize = rect.size;
float width = rect.size.width;
float height = rect.size.height;

Containment and intersection

Two CGRect structures can be compared using the CGRectEqualToRect function:

BOOL isEqual = CGRectEqualToRect(rect1, rect2);

To determine whether a given point is contained inside a CGRect, use the CGRectContainsPoint function. This is particularly useful when determining whether a user has tapped inside a particular region. The point is represented as a CGPoint structure:

BOOL containsPoint = CGRectContainsPoint(rect, point);

You can use a similar function to determine whether one CGRect structure contains another CGRect structure. This is useful when testing whether one object lies entirely inside another:

BOOL containsRect = CGRectContainsRect(rect1, rect2);

For one structure to contain another, all the pixels in one structure must also be in the other. In contrast, two structures intersect as long as they share at least one pixel. To determine whether two CGRect structures intersect, use the CGRectIntersectsRect function:

BOOL doesIntersect = CGRectIntersectsRect(rect1, rect2);
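The difference between containment and intersection can be sketched in plain C. This is only an illustration of the two tests, not Apple's implementation: DemoRect and both function names are hypothetical stand-ins for CGRect and the Core Graphics functions, and the sketch assumes rectangles with non-negative width and height.

```c
#include <stdbool.h>

/* Hypothetical stand-in for CGRect so the sketch compiles without the SDK. */
typedef struct { double x, y, width, height; } DemoRect;

/* True when every point of inner also lies inside outer. */
bool demo_rect_contains_rect(DemoRect outer, DemoRect inner)
{
    return inner.x >= outer.x &&
           inner.y >= outer.y &&
           inner.x + inner.width  <= outer.x + outer.width &&
           inner.y + inner.height <= outer.y + outer.height;
}

/* True when the two rectangles share some area
   (assumption: rectangles that only touch along an edge do not count). */
bool demo_rect_intersects_rect(DemoRect a, DemoRect b)
{
    return a.x < b.x + b.width  && b.x < a.x + a.width &&
           a.y < b.y + b.height && b.y < a.y + a.height;
}
```

With a 320×480 screen rectangle, the 320×240 window at 0×200 from the earlier example is contained, while a 100×100 rectangle at 300×400 hangs off the right edge: it intersects the screen but is not contained by it.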

Edge and center detection

The following functions can be used to determine the various edges of a rectangle and calculate the coordinates of the rectangle’s center. All of these functions accept a CGRect structure as their only argument and return a float value:

CGRectGetMinX

CGRectGetMinY

CGRectGetMidX

CGRectGetMidY

CGRectGetMaxX

CGRectGetMaxY
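As a rough guide to what these six functions report, the arithmetic can be sketched in plain C. DemoRect and the demo_ functions are hypothetical stand-ins rather than the Core Graphics functions themselves, and the sketch assumes a rectangle with non-negative width and height.

```c
/* Hypothetical stand-in for CGRect so the sketch compiles without the SDK. */
typedef struct { double x, y, width, height; } DemoRect;

/* Left edge, horizontal center, and right edge. */
double demo_min_x(DemoRect r) { return r.x; }
double demo_mid_x(DemoRect r) { return r.x + r.width / 2.0; }
double demo_max_x(DemoRect r) { return r.x + r.width; }

/* Top edge, vertical center, and bottom edge. */
double demo_min_y(DemoRect r) { return r.y; }
double demo_mid_y(DemoRect r) { return r.y + r.height / 2.0; }
double demo_max_y(DemoRect r) { return r.y + r.height; }
```

For the 320×240 rectangle at 0×200 used earlier, the center works out to 160×320 and the lower-right corner to 320×440.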

Multi-Touch Events Handling

The multi-touch support on the iPhone provides a series of touch events consisting of smaller, individual parts of a single multi-touch gesture. For example, placing your finger on the screen generates one event, placing a second finger on the screen generates another, and moving either finger generates yet another. All of these are handled through the UITouch and UIEvent APIs. These objects provide information about which gesture events have been made and the screen coordinates where they occur. The location of the touch is relative to a particular window and view, so you’ll also learn how to tell in which object a touch occurred.

Because events are relative to the object in which they occur, the coordinates returned will not actually be screen coordinates, but rather relative coordinates. For example, if a view window is drawn halfway down the screen, at 0×240, and your user touches the upper-left corner of that view, the coordinates reported to your application will be 0×0, not 0×240. The 0×0 coordinates represent the upper-left corner of the view object that the user tapped.
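The relationship between view-relative and screen coordinates described above can be sketched in plain C. DemoPoint and both function names are hypothetical stand-ins, not part of the SDK, and the sketch assumes the view is neither scaled nor rotated, so the conversion is a simple offset by the view's origin.

```c
/* Hypothetical stand-in for CGPoint so the sketch compiles without the SDK. */
typedef struct { double x, y; } DemoPoint;

/* Convert a point reported relative to a view into screen coordinates,
   given the screen position of the view's upper-left corner. */
DemoPoint demo_view_to_screen(DemoPoint viewOrigin, DemoPoint p)
{
    DemoPoint s = { viewOrigin.x + p.x, viewOrigin.y + p.y };
    return s;
}

/* The inverse: convert a screen coordinate into view-relative coordinates. */
DemoPoint demo_screen_to_view(DemoPoint viewOrigin, DemoPoint s)
{
    DemoPoint p = { s.x - viewOrigin.x, s.y - viewOrigin.y };
    return p;
}
```

For a view whose origin is at 0×240, a touch reported at 0×0 corresponds to the screen coordinate 0×240, which is the case the paragraph above describes.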

UITouch Notifications

UITouch is the class used to convey a single action within a series of gestures. A UITouch object might include notifications for one or more fingers down, a finger that has moved, or the termination of a gesture. Several UITouch notifications can occur over the period of a single gesture.

The UITouch object includes various properties that identify an event:

timestamp

Provides a timestamp for the touch, expressed as a Foundation NSTimeInterval value (in seconds). Comparing the timestamps of two touches gives the time elapsed between them.

phase

The type of touch event occurring. This informs the application about what the user is actually doing with a finger, and can be one of the following enumerated values:

UITouchPhaseBegan

Sent when a finger first touches the screen.

UITouchPhaseMoved

Sent when a finger is moved along the screen. If the user is making a gesture or dragging an item, this type of notification will be sent several times, updating the application with the coordinates of the move.

UITouchPhaseEnded

Sent when a finger is lifted from the screen.

UITouchPhaseStationary

Sent when a finger is touching the screen, but hasn’t moved since the previous notification.

UITouchPhaseCancelled

Sent when the system cancels the gesture. This can happen during events that would cause your application to be suspended, such as an incoming phone call, or if there is so little contact on the screen surface that the iPhone no longer believes a finger gesture is being made. Apple humorously gives the example of sticking the iPhone on your face to generate this notification, which may be useful if you’re writing a “stick an iPhone on your face” application to complement your flashlight application.

tapCount

Identifies the number of taps (including the current tap). For example, the second tap of a double tap would have this property set to 2.

window

A pointer to the UIWindow object in which this touch event occurred.

view

A pointer to the UIView object in which this event occurred.

In addition to its properties, the UITouch class contains the following methods that you can use to identify the screen coordinates at which the event took place. Remember, these coordinates are going to be relative to the view objects in which they occurred, and do not represent actual screen coordinates:

locationInView

- (CGPoint) locationInView: (UIView *) view;

Returns a CGPoint structure containing the coordinates (relative to the UIView object) at which this event occurred.

previousLocationInView

- (CGPoint) previousLocationInView: (UIView *) view;

Returns a CGPoint structure containing the coordinates (relative to the UIView object) from which this event originated. For example, if this event described a UITouchPhaseMoved event, it will return the coordinates from which the finger was moved.

UIEvent

A UIEvent object aggregates a series of UITouch objects into a single, portable object that you can manage easily. A UIEvent provides methods to look up the touches that have occurred in a single window, view, or even across the entire application.

Event objects are sent as a gesture is made, containing all of the events for that gesture. The foundation class NSSet is used to deliver the collection of subsequent UITouch events. Each of the UITouch events included in the set includes the specific timestamp, phase, and location of each event, while the UIEvent object includes its own timestamp property as well.

The UIEvent object supports the following methods:

allTouches

- (NSSet *) allTouches;

Returns a set of all touches occurring within the application.

touchesForWindow

- (NSSet *) touchesForWindow: (UIWindow *) window;

Returns a set of all touches occurring only within the specified UIWindow object.

touchesForView

- (NSSet *) touchesForView: (UIView *) view;

Returns a set of all touches occurring only within the specified UIView object.

Events Handling

When the user makes a gesture, the application notifies the key window and supplies the UIEvent object containing the events that are occurring. The key window will relay this information to the first responder for the window. This is typically the view in which the actual event occurred. Once a gesture has been associated with a given view, all subsequent events related to the gesture will be reported to that view. The UIApplication and UIWindow objects contain a method named sendEvent:

- (void) sendEvent: (UIEvent *)event;

In both classes, this method is called as part of the event dispatch process and is responsible for receiving events and dispatching them to their correct destinations. In general, you won’t need to override these methods unless you want to monitor all incoming events.

When the key window receives an event, it polls each of the view classes it presides over to determine in which one the event originated, and then dispatches the event to it. The object’s responder will then dispatch the event to its view controller, if the view is assigned to one, and then to the view’s own super class. It will then make its way back up the responder chain to its window, and finally to the application.

To receive multi-touch events, you must override one or more of the event-handler methods below in your UIView-derived object. Your UIWindow and UIApplication classes may also override these methods to receive events, but there is rarely a need to do so. Apple has specified the following prototypes to receive multi-touch events. Each of the methods used is associated with one of the touch phases described in the previous section:

- (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event;
- (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event;
- (void)touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event;
- (void)touchesCancelled:(NSSet *)touches withEvent:(UIEvent *)event;

Two arguments are provided for each method. The NSSet provided contains a set consisting only of new touches that have occurred since the last event. A UIEvent is also provided, serving two purposes: it allows you to filter the individual touch events for only a particular view or window, and it provides a means of accessing previous touches as they pertain to the current gesture.

You’ll only need to implement the notification methods for events you’re interested in. If you’re interested only in receiving taps as “mouse clicks,” you’ll need to use only the touchesBegan and touchesEnded methods. If you’re using dragging, such as in a slider control, you’ll need the touchesMoved method. The touchesCancelled method is optional, but Apple recommends using it to clean up objects in persistent classes.

Example: Tap Counter

In this example, you’ll override the touchesBegan method to detect single, double, and triple taps. For our purposes here, we’ll send output to the console only, but in your own application, you’ll relay this information to your UI components.

To build this example, create a sample view-based application in Xcode named TouchDemo, and add the code below to your TouchDemoViewController.m class:

- (void) touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event
{
    UITouch *touch = [ touches anyObject ];
    CGPoint location = [ touch locationInView: self.view ];
    NSUInteger taps = [ touch tapCount ];

    /* tapCount is an NSUInteger, so cast it to match the %lu specifier */
    NSLog(@"%s tap at %f, %f tap count: %lu",
        (taps == 1) ? "Single" :
        (taps == 2) ? "Double" : "Triple+",
        location.x, location.y, (unsigned long) taps);
}

Compile the application and run it in the simulator. In Xcode, choose Console from the Run menu to open the display console. Now experiment with single, double, and triple taps on the simulator’s screen. You should see output similar to the output below:

Untitled[4229:20b] Single tap at 161.000000, 113.953613 taps count: 1
Untitled[4229:20b] Double tap at 161.000000, 113.953613 taps count: 2
Untitled[4229:20b] Triple+ tap at 161.000000, 113.953613 taps count: 3
Untitled[4229:20b] Triple+ tap at 161.000000, 113.953613 taps count: 4

Note

The tap count will continue to increase indefinitely, meaning you could technically track quadruple taps and even higher tap counts.

Example: Tap and Drag

To track objects being dragged, you’ll need to override three methods: touchesBegan, touchesMoved, and touchesEnded. The touchesMoved method will be called frequently as the finger is dragged across the screen. When the user lifts the finger, the touchesEnded method will notify you of this.

To build this example, create a sample view-based application in Xcode named DragDemo, and add the code below to your DragDemoViewController.m class:

- (void) touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event
{
    UITouch *touch = [ touches anyObject ];
    CGPoint location = [ touch locationInView: self.view ];
    NSUInteger taps = [ touch tapCount ];

    [ super touchesBegan: touches withEvent: event ];

    /* tapCount is an NSUInteger, so cast it to match the %lu specifier */
    NSLog(@"Tap BEGIN at %f, %f Tap count: %lu",
        location.x, location.y, (unsigned long) taps);
}

- (void) touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event
{
    UITouch *touch = [ touches anyObject ];
    CGPoint oldLocation = [ touch previousLocationInView: self.view ];
    CGPoint location = [ touch locationInView: self.view ];

    [ super touchesMoved: touches withEvent: event ];

    NSLog(@"Finger MOVED from %f, %f to %f, %f",
        oldLocation.x, oldLocation.y, location.x, location.y);
}

- (void) touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event
{
    UITouch *touch = [ touches anyObject ];
    CGPoint location = [ touch locationInView: self.view ];

    [ super touchesEnded: touches withEvent: event ];

    NSLog(@"Tap ENDED at %f, %f", location.x, location.y);
}

When you run this application, you’ll get notifications whenever the user places a finger down or lifts it up, and you’ll also receive many more as the user moves the finger across the screen:

BEGIN at 101.000000, 117.953613 Tap count: 1
Finger MOVED from 101.000000, 117.953613 to 102.000000, 117.953613
Finger MOVED from 102.000000, 117.953613 to 104.000000, 117.953613
Finger MOVED from 104.000000, 117.953613 to 105.000000, 117.953613
Finger MOVED from 105.000000, 117.953613 to 107.000000, 117.953613
Finger MOVED from 107.000000, 117.953613 to 109.000000, 116.953613
Finger MOVED from 109.000000, 116.953613 to 113.000000, 115.953613
Finger MOVED from 113.000000, 115.953613 to 116.000000, 115.953613
Finger MOVED from 116.000000, 115.953613 to 120.000000, 114.953613
Finger MOVED from 120.000000, 114.953613 to 122.000000, 114.953613
...
Tap ENDED at 126.000000, 144.953613

Because you’ll receive several different notifications when the user’s finger moves, it’s important to make sure your application doesn’t perform any resource-intensive functions until after the user’s finger is released, or at externally timed intervals. For example, if your application is a game, the touchesMoved method may not be the ideal place to write character movements. Instead, use this method to queue up the movement, and use a separate mechanism, such as an NSTimer, to execute the actual movements. Otherwise, larger finger movements will slow your application down because it will be trying to handle so many events in a short period of time.
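One way to picture the queue-then-apply approach is the plain C sketch below. It is a minimal illustration under stated assumptions, not code from the SDK: DemoPoint, DemoMover, demo_queue_move, and demo_tick are all hypothetical names, and the timer that would call demo_tick at a fixed interval is left out.

```c
/* Hypothetical stand-in for CGPoint so the sketch compiles without the SDK. */
typedef struct { double x, y; } DemoPoint;

typedef struct {
    DemoPoint pending;   /* movement queued since the last tick */
    DemoPoint position;  /* where the character is currently drawn */
} DemoMover;

/* Cheap enough to call for every move notification:
   it only accumulates the delta and does no drawing. */
void demo_queue_move(DemoMover *m, DemoPoint from, DemoPoint to)
{
    m->pending.x += to.x - from.x;
    m->pending.y += to.y - from.y;
}

/* Meant to be called at a fixed interval (for example, from a timer).
   Applies all queued movement in one step.
   Returns 1 if the position changed, or 0 if nothing was queued. */
int demo_tick(DemoMover *m)
{
    if (m->pending.x == 0.0 && m->pending.y == 0.0)
        return 0;
    m->position.x += m->pending.x;
    m->position.y += m->pending.y;
    m->pending.x = 0.0;
    m->pending.y = 0.0;
    return 1;
}
```

In this arrangement, a touchesMoved handler would pass the previous and current locations to demo_queue_move, and the expensive redraw would happen only when demo_tick reports a change, however many move notifications arrived in between.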

Processing Multi-Touch

By default, a view is configured only to receive single-finger events. To enable a view to process multi-touch gestures, set its multipleTouchEnabled property to YES. In the previous example, overriding the view controller’s viewDidLoad method, as shown below, would do this:

- (void)viewDidLoad {
    [ super viewDidLoad ];
    self.view.multipleTouchEnabled = YES;
}

Once enabled, both single-finger events and multiple-finger gestures will be sent to the touch notification methods. You can determine the number of touches on the screen by looking at the number of UITouch events provided in the NSSet from the first argument.

During a two-finger gesture, the touch methods can be provided with up to two UITouch events, one for each finger. You can determine this by performing a simple count on the NSSet object provided:

NSUInteger fingerCount = [ touches count ];

To determine the number of fingers used in the gesture as a whole, query the UIEvent. This allows you to determine when the last finger in a gesture has been lifted:

NSUInteger fingersInGesture = [ [ event touchesForView: self.view ] count ];
if (fingerCount == fingersInGesture) {
    NSLog(@"All fingers present in the event");
}

PinchMe: Pinch Tracking

This example is similar to the previous examples in this chapter, but instead of tracking a single finger movement, both fingers are tracked to determine a scaling factor for a pinch operation. You can then easily apply the scaling factor to resize an image or perform other similar operations using a pinch. You’ll override three methods in your view controller, as before: touchesBegan, touchesMoved, and touchesEnded. You’ll also override the viewDidLoad method to enable multi-touch gestures. The touchesMoved method will be called frequently as the fingers move across the screen, and this is where the scaling factor will be calculated. When the user lifts a finger, the touchesEnded method will notify you of this.

To build this example, create a sample view-based application in Xcode named PinchMe, and add the code from Example 4-1 to your PinchMeViewController.m class.

- (void)viewDidLoad {
    [ super viewDidLoad ];
    [ self.view setMultipleTouchEnabled: YES ];
}

- (void) touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event
{
    UITouch *touch = [ touches anyObject ];
    CGPoint location = [ touch locationInView: self.view ];
    NSUInteger taps = [ touch tapCount ];

    [ super touchesBegan: touches withEvent: event ];

    /* tapCount is an NSUInteger, so cast it to match the %lu specifier */
    NSLog(@"Tap BEGIN at %f, %f Tap count: %lu",
        location.x, location.y, (unsigned long) taps);
}

- (void) touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event
{
    int finger = 0;
    NSEnumerator *enumerator = [ touches objectEnumerator ];
    UITouch *touch;
    CGPoint location[2];

    /* Record the location of up to two fingers */
    while ((touch = [ enumerator nextObject ]) && finger < 2)
    {
        location[finger] = [ touch locationInView: self.view ];
        NSLog(@"Finger %d moved: %fx%f",
            finger+1, location[finger].x, location[finger].y);
        finger++;
    }

    /* With both fingers present, report the x, y delta between them */
    if (finger == 2) {
        CGPoint scale;
        scale.x = fabs(location[0].x - location[1].x);
        scale.y = fabs(location[0].y - location[1].y);
        NSLog(@"Scaling: %.0f x %.0f", scale.x, scale.y);
    }

    [ super touchesMoved: touches withEvent: event ];
}

- (void) touchesEnded:(NSSet *)touches withEvent:(UIEvent *)event
{
    UITouch *touch = [ touches anyObject ];
    CGPoint location = [ touch locationInView: self.view ];

    [ super touchesEnded: touches withEvent: event ];

    NSLog(@"Tap ENDED at %f, %f", location.x, location.y);
}

Open up a console while you run the application. Holding down the Option key, you’ll be able to simulate multi-touch pinch gestures in the iPhone Simulator. As you move the mouse around, you’ll see reports of both finger coordinates and of the x, y delta between the two:

Untitled[5039:20b] Finger 1 moved: 110.000000x293.046387
Untitled[5039:20b] Finger 2 moved: 210.000000x146.953613
Untitled[5039:20b] Scaling: 100 × 146
Untitled[5039:20b] Finger 1 moved: 111.000000x289.046387
Untitled[5039:20b] Finger 2 moved: 209.000000x150.953613
Untitled[5039:20b] Scaling: 98 × 138
Untitled[5039:20b] Finger 1 moved: 112.000000x285.046387
Untitled[5039:20b] Finger 2 moved: 208.000000x154.953613
Untitled[5039:20b] Scaling: 96 × 130
Untitled[5039:20b] Finger 1 moved: 113.000000x282.046387
Untitled[5039:20b] Finger 2 moved: 207.000000x157.953613
Untitled[5039:20b] Scaling: 94 × 124
Untitled[5039:20b] Finger 1 moved: 113.000000x281.046387
Untitled[5039:20b] Finger 2 moved: 207.000000x158.953613
Untitled[5039:20b] Scaling: 94 × 122
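The deltas logged above are raw distances between the two fingers. One possible way to turn them into a scaling factor, sketched here in plain C, is to divide the current separation by the separation recorded when the gesture began. This is an assumption about how you might use the values, not something the PinchMe example does: DemoPoint, demo_separation, and demo_pinch_scale are hypothetical names.

```c
/* Hypothetical stand-in for CGPoint so the sketch compiles without the SDK. */
typedef struct { double x, y; } DemoPoint;

/* Local absolute value helper, so the sketch needs no math library. */
double demo_abs(double v) { return v < 0.0 ? -v : v; }

/* Per-axis separation between two fingers,
   the same quantity the touchesMoved example logs. */
DemoPoint demo_separation(DemoPoint a, DemoPoint b)
{
    DemoPoint d = { demo_abs(a.x - b.x), demo_abs(a.y - b.y) };
    return d;
}

/* Ratio of the current separation to the starting separation.
   Values below 1.0 mean the fingers moved together (pinch);
   values above 1.0 mean they moved apart.
   An axis with no starting separation is left unscaled (1.0). */
DemoPoint demo_pinch_scale(DemoPoint start, DemoPoint now)
{
    DemoPoint s;
    s.x = (start.x > 0.0) ? now.x / start.x : 1.0;
    s.y = (start.y > 0.0) ? now.y / start.y : 1.0;
    return s;
}
```

For instance, if the fingers start 100×200 apart and end 50×100 apart, the sketch yields a factor of 0.5 on both axes, which could then be applied to an image's width and height.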

TouchDemo: Multi-Touch Icon Tracking

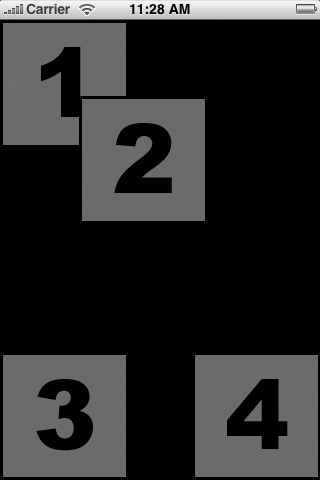

For a fuller example of the touches API, TouchDemo illustrates how you can use the touches API to track individual finger movements on the screen. Four demo images are downloaded automatically from a website and displayed on the screen. The user may use one or more fingers to simultaneously drag the icons to a new position. The example tracks each touch individually; it repositions each icon as the user drags. See Figure 4-1.

You can compile this application, shown in Examples 4-2 through 4-6, with the SDK by creating a view-based application project named TouchDemo. Be sure to pull out the Interface Builder code so you can see how these objects are created from scratch.

#import <UIKit/UIKit.h>

@class TouchDemoViewController;

@interface TouchDemoAppDelegate : NSObject <UIApplicationDelegate> {
    UIWindow *window;
    TouchDemoViewController *viewController;
}

@property (nonatomic, retain) IBOutlet UIWindow *window;
@property (nonatomic, retain) IBOutlet TouchDemoViewController *viewController;

@end

#import "TouchDemoAppDelegate.h"

#import "TouchDemoViewController.h"

@implementation TouchDemoAppDelegate

@synthesize window;
@synthesize viewController;

- (void)applicationDidFinishLaunching:(UIApplication *)application {
    CGRect screenBounds = [ [ UIScreen mainScreen ] applicationFrame ];

    self.window = [ [ [ UIWindow alloc ] initWithFrame: screenBounds ]
        autorelease
    ];

    viewController = [ [ TouchDemoViewController alloc ] init ];

    [ window addSubview: viewController.view ];
    [ window makeKeyAndVisible ];
}

- (void)dealloc {
    [ viewController release ];
    [ window release ];
    [ super dealloc ];
}

@end

#import <UIKit/UIKit.h>

@interface TouchView : UIView {
    UIImage *images[4];
    CGPoint offsets[4];
    int tracking[4];
}
@end

@interface TouchDemoViewController : UIViewController {
    TouchView *touchView;
}
@end

#import "TouchDemoViewController.h"

@implementation TouchView

- (id)initWithFrame:(CGRect)frame {
    frame.origin.y = 0.0;
    self = [ super initWithFrame: frame ];
    if (self != nil) {
        self.multipleTouchEnabled = YES;

        /* Download the four demo images */
        for(int i=0; i<4; i++) {
            NSURL *url = [ NSURL URLWithString: [
                NSString stringWithFormat:
                    @"http://www.zdziarski.com/demo/%d.png", i+1 ]
            ];
            images[i] = [ [ UIImage alloc ]
                initWithData: [ NSData dataWithContentsOfURL: url ]
            ];
            offsets[i] = CGPointMake(0.0, 0.0);
        }

        /* Place one image in each corner of the view */
        offsets[0] = CGPointMake(0.0, 0.0);
        offsets[1] = CGPointMake(self.frame.size.width
            - images[1].size.width, 0.0);
        offsets[2] = CGPointMake(0.0, self.frame.size.height
            - images[2].size.height);
        offsets[3] = CGPointMake(self.frame.size.width
            - images[3].size.width, self.frame.size.height
            - images[3].size.height);
    }
    return self;
}

- (void)drawRect:(CGRect)rect {
    for(int i=0; i<4; i++ ) {
        [ images[i] drawInRect: CGRectMake(offsets[i].x, offsets[i].y,
            images[i].size.width, images[i].size.height)
        ];
    }
}

- (void)touchesBegan:(NSSet *)touches withEvent:(UIEvent *)event {
    UITouch *touch;
    int touchId = 0;

    NSEnumerator *enumerator = [ touches objectEnumerator ];
    while ((touch = (UITouch *) [ enumerator nextObject ])) {
        tracking[touchId] = -1;
        CGPoint location = [ touch locationInView: self ];

        /* Search from the topmost image down for the one under the touch */
        for(int i=3;i>=0;i--) {
            CGRect rect = CGRectMake(offsets[i].x, offsets[i].y,
                images[i].size.width, images[i].size.height);
            if (CGRectContainsPoint(rect, location)) {
                NSLog(@"Begin Touch ID %d Tracking with image %d\n", touchId, i);
                tracking[touchId] = i;
                break;
            }
        }
        touchId++;
    }
}

- (void)touchesMoved:(NSSet *)touches withEvent:(UIEvent *)event {
    UITouch *touch;
    int touchId = 0;

    NSEnumerator *enumerator = [ touches objectEnumerator ];
    while ((touch = (UITouch *) [ enumerator nextObject ])) {
        if (tracking[touchId] != -1) {
            NSLog(@"Touch ID %d Tracking with image %d\n",
                touchId, tracking[touchId]);
            CGPoint location = [ touch locationInView: self ];
            CGPoint oldLocation = [ touch previousLocationInView: self ];

            /* Move the image by the distance the finger traveled */
            offsets[tracking[touchId]].x += (location.x - oldLocation.x);
            offsets[tracking[touchId]].y += (location.y - oldLocation.y);

            /* Keep the image inside the view horizontally */
            if (offsets[tracking[touchId]].x < 0.0)
                offsets[tracking[touchId]].x = 0.0;
            if (offsets[tracking[touchId]].x
                + images[tracking[touchId]].size.width > self.frame.size.width)
            {
                offsets[tracking[touchId]].x = self.frame.size.width
                    - images[tracking[touchId]].size.width;
            }

            /* Keep the image inside the view vertically */
            if (offsets[tracking[touchId]].y < 0.0)
                offsets[tracking[touchId]].y = 0.0;
            if (offsets[tracking[touchId]].y
                + images[tracking[touchId]].size.height > self.frame.size.height)
            {
                offsets[tracking[touchId]].y = self.frame.size.height
                    - images[tracking[touchId]].size.height;
            }
        }
        touchId++;
    }
    [ self setNeedsDisplay ];
}

@end

@implementation TouchDemoViewController

- (void)loadView {
    [ super loadView ];

    touchView = [ [ TouchView alloc ] initWithFrame: [
        [ UIScreen mainScreen ] applicationFrame ]
    ];
    self.view = touchView;
}

- (void)didReceiveMemoryWarning {
    [ super didReceiveMemoryWarning ];
}

- (void)dealloc {
    /* Balance the alloc in loadView */
    [ touchView release ];
    [ super dealloc ];
}

@end

What’s Going On

The TouchDemo example works as follows:

1. When the application starts, it instantiates a new window and view controller. A subclass of UIView, named TouchView, is created and attached to the view controller.

2. When the user presses one or more fingers, the touchesBegan method is notified. It compares the location of each touch with each image’s offsets and size to determine which images the user touched. It records the index in the tracking array.

3. When the user moves a finger, the touchesMoved method is notified. This determines which images the user originally touched and adjusts their offsets on the screen by the correct amount. It then calls the setNeedsDisplay method, which invokes the drawRect method for redrawing the view’s contents.
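The two pieces of logic at the heart of TouchDemo, finding the topmost image under a touch and keeping a dragged image inside the view, can be isolated in a plain C sketch. DemoPoint, DemoSize, demo_hit_test, and demo_clamp_offset are hypothetical names used only for illustration, and the hit test assumes that a point on an image's right or bottom edge counts as outside it.

```c
/* Hypothetical stand-ins for CGPoint and CGSize. */
typedef struct { double x, y; } DemoPoint;
typedef struct { double width, height; } DemoSize;

/* Return the index of the topmost image containing p, or -1 if none does.
   Later images are drawn on top, so the search runs from the end. */
int demo_hit_test(const DemoPoint *offsets, const DemoSize *sizes,
                  int count, DemoPoint p)
{
    for (int i = count - 1; i >= 0; i--) {
        if (p.x >= offsets[i].x && p.x < offsets[i].x + sizes[i].width &&
            p.y >= offsets[i].y && p.y < offsets[i].y + sizes[i].height)
            return i;
    }
    return -1;
}

/* Keep an image of the given size fully inside a view of the given size. */
DemoPoint demo_clamp_offset(DemoPoint offset, DemoSize image, DemoSize view)
{
    if (offset.x + image.width > view.width)
        offset.x = view.width - image.width;
    if (offset.x < 0.0)
        offset.x = 0.0;
    if (offset.y + image.height > view.height)
        offset.y = view.height - image.height;
    if (offset.y < 0.0)
        offset.y = 0.0;
    return offset;
}
```

Searching from the last image down mirrors the loop in touchesBegan, so when two images overlap, the one drawn last is the one that gets picked up.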
