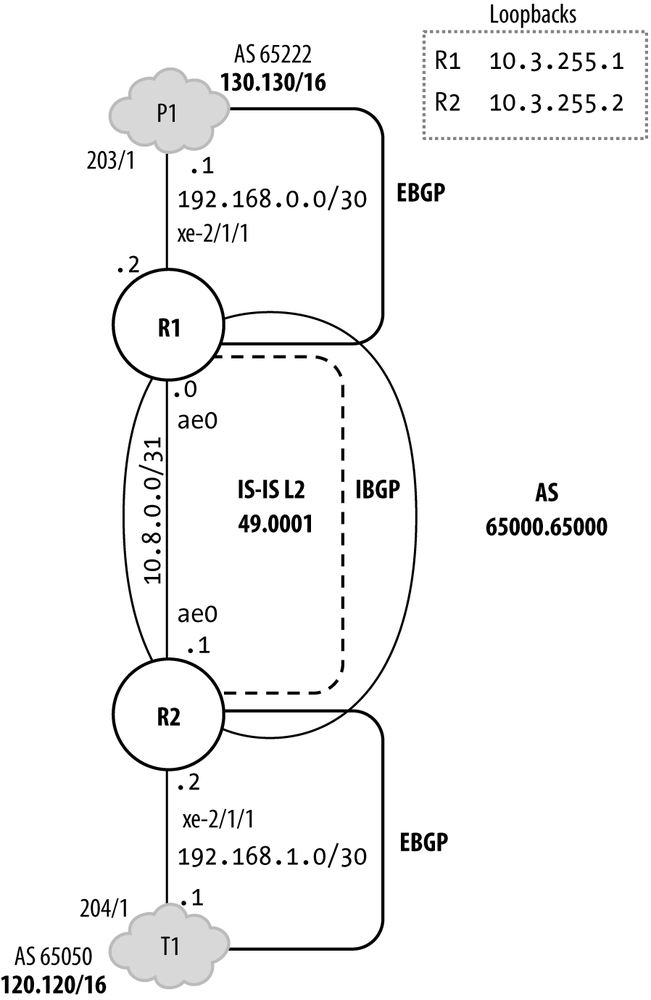

This section provides a sample use case for the BGP flow-spec feature. The network topology is shown in Figure 4-4.

Routers R1 and R2 have the best-practice IPv4 RE protection filter discussed previously in effect on their loopback interfaces. The DDoS protection feature is enabled, and the only change from the defaults is a scaled FPC bandwidth for the ICMP aggregate. The routers peer with each other using loopback-based MP-IBGP, and with the external peers P1 and T1 using EBGP. The P1 network is the source of routes from the 130.130/16 block, whereas T1 is the source of 120.120/16 routes. IS-IS Level 2 is used as the IGP. It runs passively on the external links to ensure that the EBGP next hops can be resolved, and it also distributes the loopback addresses used for the IBGP peering. The protocols stanza on R1 is shown here:

{master}[edit]
jnpr@R1-RE0# show protocols
bgp {
    log-updown;
    group t1_v4 {
        type external;
        export bgp_export;
        peer-as 65050;
        neighbor 192.168.1.1;
    }
    group int_v4 {
        type internal;
        local-address 10.3.255.2;
        family inet {
            unicast;
            flow;
        }
        bfd-liveness-detection {
            minimum-interval 150;
            multiplier 3;
        }
        neighbor 10.3.255.1;
    }
}
isis {
    reference-bandwidth 100g;
    level 1 disable;
    interface xe-2/1/1.0 {
        passive;
    }
    interface ae0.1 {
        point-to-point;
        bfd-liveness-detection {
            minimum-interval 150;
            multiplier 3;
        }
    }
    interface lo0.0 {
        passive;
    }
}
lacp {
    traceoptions {
        file lacp_trace size 10m;
        flag process;
        flag startup;
    }
}
lldp {
    interface all;
}
layer2-control {
    nonstop-bridging;
}
vstp {
    interface xe-0/0/6;
    interface ae0;
    interface ae1;
    interface ae2;
    vlan 100 {
        bridge-priority 4k;
        interface xe-0/0/6;
        interface ae0;
        interface ae1;
        interface ae2;
    }
    vlan 200 {
        bridge-priority 8k;
        interface ae0;
        interface ae1;
        interface ae2;
    }
}

Note that the flow NLRI has been enabled for the inet family on the internal peering session. Recall that BGP flow-spec is also supported for EBGP peers. This means it can operate across AS boundaries when both networks have bilaterally agreed to support the functionality. The DDoS stanza is displayed here:

{master}[edit]
jnpr@R2-RE0# show system ddos-protection
protocols {
    icmp {
        aggregate {
            fpc 2 {
                bandwidth-scale 30;
                burst-scale 30;
            }
        }
    }
}

The DDoS settings scale FPC slot 2 to permit 30% of the system aggregate for ICMP. Recall from the previous DDoS section that, by default, every FPC inherits 100% of the system aggregate. This means any one FPC can send at the full maximum load with no drops. It also means a DDoS attack on any one FPC can cause contention at aggregation points for other FPCs with normal loads. Here, FPC 2 is expected to permit some 6,000 PPS before it begins enforcing DDoS actions. That figure is 30% of the system aggregate, which by default is 20,000 PPS in this release. The burst-scale statement scales the permitted burst by the same 30%.
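The scaling arithmetic is easy to check by hand, but a tiny Python sketch makes the relationship explicit. It takes two values that `show ddos-protection protocols icmp` reports later in this section: the 20,000 PPS bandwidth and the 20,000-packet burst for the ICMP aggregate. The use of integer (floor) division is an assumption; how Junos rounds non-integer results isn't shown here.

```python
# Values reported for the ICMP aggregate by "show ddos-protection protocols icmp".
AGGREGATE_PPS = 20_000     # system-wide aggregate bandwidth (pps)
AGGREGATE_BURST = 20_000   # system-wide aggregate burst (packets)


def scaled_limit(system_value: int, scale_percent: int) -> int:
    """Per-FPC share of a system-wide DDoS policer value (floor division assumed)."""
    return system_value * scale_percent // 100


fpc2_bandwidth = scaled_limit(AGGREGATE_PPS, 30)   # bandwidth-scale 30
fpc2_burst = scaled_limit(AGGREGATE_BURST, 30)     # burst-scale 30
print(fpc2_bandwidth, fpc2_burst)                  # 6000 6000
```

Leaving an FPC at the default corresponds to `scaled_limit(AGGREGATE_PPS, 100)`, which lets that single FPC admit the entire system aggregate.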

Next, you verify that the filter chain is applied to the lo0 interface. Although only R1 is shown, R2 also has the best-practice IPv4 RE protection filters in place. The operation of the RE protection filter was described in the earlier RE protection case study.

{master}[edit]
jnpr@R1-RE0# show interfaces lo0
unit 0 {
    family inet {
        filter {
            input-list [ discard-frags accept-common-services accept-sh-bfd accept-bgp accept-ldp accept-rsvp accept-telnet accept-vrrp discard-all ];
        }
        address 10.3.255.1/32;
    }
    family iso {
        address 49.0001.0100.0325.5001.00;
    }
    family inet6 {
        filter {
            input-list [ discard-extension-headers accept-MLD-hop-by-hop_v6 deny-icmp6-undefined accept-common-services-v6 accept-sh-bfd-v6 accept-bgp-v6 accept-telnet-v6 accept-ospf3 accept-radius-v6 discard-all-v6 ];
        }
        address 2001:db8:1::ff:1/128;
    }
}

The IBGP and EBGP session status is confirmed next. Though not shown, R2 also has both of its neighbors in the Established state:

{master}[edit]
jnpr@R1-RE0# run show bgp summary
Groups: 2 Peers: 2 Down peers: 0
Table          Tot Paths  Act Paths Suppressed    History Damp State    Pending
inet.0               200        200          0          0          0          0
inetflow.0             0          0          0          0          0          0
inet6.0                0          0          0          0          0          0
Peer                     AS      InPkt     OutPkt    OutQ   Flaps Last Up/Dwn State|#Active/Received/Accepted/Damped...
10.3.255.2      65000.65000         12         12       0       0        3:14 Establ
  inet.0: 100/100/100/0
  inetflow.0: 0/0/0/0
192.168.0.1           65222          7         16       0       0        3:18 Establ

  inet.0: 100/100/100/0

Successful negotiation of the flow NLRI during the BGP capabilities exchange is confirmed by displaying the IBGP neighbor and checking that the inet-flow NLRI is in effect:

{master}[edit]
jnpr@R1-RE0# run show bgp neighbor 10.3.255.2 | match nlri
  NLRI for restart configured on peer: inet-unicast inet-flow
  NLRI advertised by peer: inet-unicast inet-flow
  NLRI for this session: inet-unicast inet-flow
  NLRI that restart is negotiated for: inet-unicast inet-flow
  NLRI of received end-of-rib markers: inet-unicast inet-flow
  NLRI of all end-of-rib markers sent: inet-unicast inet-flow

And lastly, a quick confirmation of routing to both loopback and EBGP prefixes, from the perspective of R2:

{master}[edit]
jnpr@R2-RE0# run show route 10.3.255.1
inet.0: 219 destinations, 219 routes (219 active, 0 holddown, 0 hidden)
+ = Active Route, - = Last Active, * = Both

10.3.255.1/32      *[IS-IS/18] 00:11:21, metric 5
                    > to 10.8.0.0 via ae0.1

{master}[edit]
jnpr@R2-RE0# run show route 130.130.1.0/24
inet.0: 219 destinations, 219 routes (219 active, 0 holddown, 0 hidden)
+ = Active Route, - = Last Active, * = Both

130.130.1.0/24     *[BGP/170] 00:01:22, localpref 100, from 10.3.255.1
                      AS path: 65222 ?
                    > to 10.8.0.0 via ae0.1

The output confirms that IS-IS is supporting the IBGP session by providing a route to the remote router’s loopback address. It also confirms that R2 is learning the 130.130/16 prefixes from R1, which in turn learned them via its EBGP peering to P1.

With the stage set, things begin with a DDoS log alert at R2:

{master}[edit]
jnpr@R2-RE0# run show log messages | match ddos
Mar 18 17:43:47 R2-RE0 jddosd[75147]: DDOS_PROTOCOL_VIOLATION_SET: Protocol ICMP:aggregate is violated at fpc 2 for 4 times, started at 2012-03-18 17:43:47 PDT, last seen at 2012-03-18 17:43:47 PDT

Meanwhile, back at R1, no violations are reported, making it clear that R2 is the sole victim of the current DDoS bombardment:

{master}[edit]
jnpr@R1-RE0# run show ddos-protection protocols violations
Number of packet types that are being violated: 0

The syslog entry at R2 warns of excessive ICMP traffic at FPC 2. Details on the current violation are obtained with the show ddos-protection protocols commands:

jnpr@R2-RE0# run show ddos-protection protocols violations
Number of packet types that are being violated: 1
Protocol    Packet      Bandwidth  Arrival    Peak       Policer bandwidth
group       type        (pps)      rate(pps)  rate(pps)  violation detected at
icmp        aggregate   20000      13587      13610      2012-03-18 17:43:47 PDT
          Detected on: FPC-2

{master}[edit]
jnpr@R2-RE0# run show ddos-protection protocols icmp
Protocol Group: ICMP
  Packet type: aggregate (Aggregate for all ICMP traffic)
    Aggregate policer configuration:
      Bandwidth:        20000 pps
      Burst:            20000 packets
      Priority:         high
      Recover time:     300 seconds
      Enabled:          Yes
    System-wide information:
      Aggregate bandwidth is being violated!
        No. of FPCs currently receiving excess traffic: 1
        No. of FPCs that have received excess traffic:  1
        Violation first detected at: 2012-03-18 17:43:47 PDT
        Violation last seen at:      2012-03-18 17:58:26 PDT
        Duration of violation: 00:14:39 Number of violations: 4
      Received:  22079830            Arrival rate:     13607 pps
      Dropped:   566100              Max arrival rate: 13610 pps
    Routing Engine information:
      Aggregate policer is never violated
      Received:  10260083            Arrival rate:     6001 pps
      Dropped:   0                   Max arrival rate: 6683 pps
        Dropped by individual policers: 0
    FPC slot 2 information:
      Bandwidth: 30% (6000 pps), Burst: 30% (6000 packets), enabled
      Aggregate policer is currently being violated!
        Violation first detected at: 2012-03-18 17:43:47 PDT
        Violation last seen at:      2012-03-18 17:58:26 PDT
        Duration of violation: 00:14:39 Number of violations: 4
      Received:  22079830            Arrival rate:     13607 pps
      Dropped:   566100              Max arrival rate: 13610 pps
        Dropped by individual policers: 0
        Dropped by aggregate policer:   566100

The output confirms ICMP aggregate-level discards at the FPC level. The peak load is roughly 13,600 PPS, well in excess of the 6,000 PPS currently permitted. The Routing Engine, meanwhile, sees an arrival rate of about 6,000 PPS, which matches the FPC 2 limit. Much to your satisfaction, R2 remains responsive, showing that the DDoS first line of defense is doing its job. However, aside from knowing that a lot of ICMP is arriving at FPC 2 on this router, there is not much to go on yet for tracking the attack back toward its source, flow-spec style or otherwise. You do know that this ICMP traffic must be destined for R2, whether as unicast or broadcast, because only host-bound traffic is subject to DDoS policing.

The loopback filter counters and policer statistics are displayed at R2:

{master}[edit]
jnpr@R2-RE0# run show firewall filter lo0.0-i

Filter: lo0.0-i
Counters:
Name                                                   Bytes     Packets
accept-bfd-lo0.0-i                                     25948         499
accept-bgp-lo0.0-i                                      1744          29
accept-dns-lo0.0-i                                         0           0
accept-icmp-lo0.0-i                                 42252794      918539
accept-ldp-discover-lo0.0-i                                0           0
accept-ldp-igmp-lo0.0-i                                    0           0
accept-ldp-unicast-lo0.0-i                                 0           0
accept-ntp-lo0.0-i                                         0           0
accept-ntp-server-lo0.0-i                                  0           0
accept-rsvp-lo0.0-i                                        0           0
accept-ssh-lo0.0-i                                         0           0
accept-telnet-lo0.0-i                                   7474         180
accept-tldp-discover-lo0.0-i                               0           0
accept-traceroute-icmp-lo0.0-i                             0           0
accept-traceroute-tcp-lo0.0-i                              0           0
accept-traceroute-udp-lo0.0-i                              0           0
accept-vrrp-lo0.0-i                                     3120          78
accept-web-lo0.0-i                                         0           0
discard-all-TTL_1-unknown-lo0.0-i                          0           0
discard-icmp-lo0.0-i                                       0           0
discard-ip-options-lo0.0-i                                32           1
discard-netbios-lo0.0-i                                    0           0
discard-tcp-lo0.0-i                                        0           0
discard-udp-lo0.0-i                                        0           0
discard-unknown-lo0.0-i                                    0           0
no-icmp-fragments-lo0.0-i                                  0           0
Policers:
Name                                                   Bytes     Packets
management-1m-accept-dns-lo0.0-i                           0           0
management-1m-accept-ntp-lo0.0-i                           0           0
management-1m-accept-ntp-server-lo0.0-i                    0           0
management-1m-accept-telnet-lo0.0-i                        0           0
management-1m-accept-traceroute-icmp-lo0.0-i               0           0
management-1m-accept-traceroute-tcp-lo0.0-i                0           0
management-1m-accept-traceroute-udp-lo0.0-i                0           0
management-5m-accept-icmp-lo0.0-i                21870200808   475439148
management-5m-accept-ssh-lo0.0-i                           0           0
management-5m-accept-web-lo0.0-i                           0           0

The prefix-specific accept-icmp-lo0.0-i counter and management-5m-accept-icmp-lo0.0-i policer statistics make it clear that a large amount of ICMP traffic is hitting the loopback filter and being policed by the related 5 Mbps policer. The loopback policer is executed before DDoS processing, right as host-bound traffic arrives at the Trio PFE. It follows that the 5 Mbps of ICMP permitted by the policer amounts to more than 6,000 PPS; otherwise, there would be no current DDoS alert or DDoS discard actions in the FPC.
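The counters shown above also allow a rough check of that conclusion. The Python sketch below divides the ICMP policer's byte count by its packet count to get an average packet size. It then converts the policer rate into packets per second. Two assumptions are involved and are not facts from the output: that management-5m means exactly 5,000,000 bps, and that the policer measures the same per-packet byte count the filter counters report.

```python
# Figures copied from the "show firewall filter lo0.0-i" output.
POLICER_BYTES = 21_870_200_808
POLICER_PACKETS = 475_439_148
# Assumption: the management-5m policer limits traffic to exactly 5,000,000 bps.
POLICER_BPS = 5_000_000
DDOS_FPC2_PPS = 6_000  # 30% of the 20,000 pps ICMP aggregate


def avg_packet_bytes(byte_count: int, packet_count: int) -> float:
    """Average packet size as seen by the filter counters."""
    return byte_count / packet_count


def admitted_pps(rate_bps: float, packet_bytes: float) -> float:
    """Packets per second that fit within a bits-per-second policer."""
    return rate_bps / (packet_bytes * 8)


size = avg_packet_bytes(POLICER_BYTES, POLICER_PACKETS)
pps = admitted_pps(POLICER_BPS, size)
print(f"average packet size: {size:.1f} bytes")
print(f"5 Mbps admits about {pps:,.0f} pps; the FPC 2 DDoS limit is {DDOS_FPC2_PPS:,} pps")
```

With 46-byte packets the estimate comes to about 13,587 PPS. That closely matches the 13,587 PPS arrival rate in the earlier violations output, which suggests the loopback policer is what caps the ICMP load offered to the FPC 2 DDoS policer.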

Knowing that a policer invoked through a loopback filter is executed before any DDoS processing should help you dimension your DDoS and loopback policers so that they work well together. A filter-invoked policer measures bandwidth in bits per second, while the DDoS policers operate on a packets-per-second basis, and the relationship between the two depends on packet size. Trying to match them is therefore difficult at best, and really isn’t necessary anyway.
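The following Python sketch shows why the two policer types resist exact matching. It computes how many packets per second the same bits-per-second policer admits at different packet sizes, and which limit a sustained flood would hit first. It reuses the assumed 5,000,000 bps value for management-5m and the 6,000 PPS FPC 2 limit from this case study. The packet sizes are arbitrary examples.

```python
POLICER_BPS = 5_000_000  # assumed rate of the management-5m loopback policer
DDOS_FPC_PPS = 6_000     # FPC 2 ICMP limit in this case study


def admitted_pps(rate_bps: int, packet_bytes: int) -> float:
    """Packets per second that fit within a bits-per-second policer."""
    return rate_bps / (packet_bytes * 8)


def binding_limit(packet_bytes: int) -> str:
    """Name the limit a sustained flood of this packet size reaches first."""
    if admitted_pps(POLICER_BPS, packet_bytes) > DDOS_FPC_PPS:
        return "DDoS FPC policer"
    return "loopback policer"


for size in (64, 512, 1500):  # example sizes, not taken from the case study
    pps = admitted_pps(POLICER_BPS, size)
    print(f"{size:>5} bytes: {pps:>8,.0f} pps admitted, {binding_limit(size)} binds first")
```

With these numbers the crossover is at 5,000,000 / (6,000 × 8), or about 104 bytes. Smaller packets exhaust the DDoS limit first, and larger ones hit the loopback policer first.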

Because a loopback policer represents a system-level aggregate, it makes some sense to set it higher than the limit in any individual FPC. If the full expected aggregate arrives on a single FPC, the lowered DDoS settings in that FPC kick in to ensure that no one FPC can consume the system’s aggregate bandwidth. That leaves plenty of capacity for other FPCs that have normal traffic loads. The downside to such a setting is that you can now expect FPC drops even when only one FPC is active and its load is below the aggregate system limit.
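The trade-off described above can be modeled very roughly in Python. This is a simplified steady-state sketch, not a description of how Trio actually schedules or drops traffic. Each FPC clamps its host-bound rate to its own limit, and the clamped rates are then summed against the system aggregate. The FPC 0 load, the 25,000 PPS attack rate, and the 8,000 PPS legitimate load are hypothetical values chosen for illustration.

```python
from typing import Dict, Tuple

AGGREGATE_PPS = 20_000  # ICMP system aggregate in this case study


def host_bound_load(offered: Dict[int, int],
                    fpc_limits: Dict[int, int]) -> Tuple[Dict[int, int], int]:
    """Clamp each FPC to its own limit, then total what reaches the aggregate."""
    per_fpc = {fpc: min(rate, fpc_limits[fpc]) for fpc, rate in offered.items()}
    return per_fpc, sum(per_fpc.values())


# Hypothetical load: a 25,000 pps attack on FPC 2 plus 500 pps of normal ICMP on FPC 0.
offered = {0: 500, 2: 25_000}
default_limits = {0: AGGREGATE_PPS, 2: AGGREGATE_PPS}  # every FPC inherits 100%
scaled_limits = {0: AGGREGATE_PPS, 2: 6_000}           # FPC 2 scaled to 30%

for name, limits in (("default", default_limits), ("scaled", scaled_limits)):
    per_fpc, total = host_bound_load(offered, limits)
    status = "contention at aggregate" if total > AGGREGATE_PPS else "within aggregate"
    print(f"{name:>7}: per-FPC {per_fpc}, total {total} pps -> {status}")

# The downside: a legitimate 8,000 pps load on FPC 2 alone is still clipped to 6,000.
print(host_bound_load({2: 8_000}, scaled_limits))
```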

Obtaining the detail needed to describe the attack flow is where sampling or filter-based logging often comes into play. In fact, the current RE protection filter has a provision for logging:

{master}[edit]
jnpr@R2-RE0# show firewall family inet filter accept-icmp
apply-flags omit;
term no-icmp-fragments {
    from {
        is-fragment;
        protocol icmp;
    }
    then {
        count no-icmp-fragments;
        log;
        discard;
    }
}
term accept-icmp {
    from {
        protocol icmp;
        ttl-except 1;
        icmp-type [ echo-reply echo-request time-exceeded unreachable source-quench router-advertisement parameter-problem ];
    }
    then {
        policer management-5m;
        count accept-icmp;
        log;
        accept;
    }
}

Because the accept-icmp filter includes the log action modifier, you simply need to display the firewall log to obtain the details needed to characterize the attack flow:

jnpr@R2-RE0# run show firewall log
Log :
Time      Filter  Action Interface  Protocol  Src Addr        Dest Addr
18:47:47  pfe     A      ae0.1      ICMP      130.130.33.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.60.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.48.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.31.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.57.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.51.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.50.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.3.1     10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.88.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.94.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.22.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.13.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.74.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.77.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.46.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.94.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.38.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.36.1    10.3.255.2
18:47:47  pfe     A      ae0.1      ICMP      130.130.47.1    10.3.255.2
. . .

The contents of the firewall log confirm that the attack is ICMP based, as already known. You can now also confirm that the destination address matches R2’s loopback, and that the sources appear to span a range of 130.130.x/24 subnets within P1’s 130.130/16 block. Armed with this information, you can contact the administrator of the P1 network and ask them to address the issue. That can wait, though, until you have this traffic filtered at ingress to your network, so it no longer consumes resources across your network and at R2 specifically.
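Summarizing a firewall log by hand gets tedious as the log grows. The Python sketch below uses only the standard library and a few lines copied from the log above. It assumes the whitespace-separated column layout shown there (Time, Filter, Action, Interface, Protocol, Src Addr, Dest Addr). The sketch tallies protocol/destination pairs and computes the smallest prefix that covers every logged source. It is a quick aid for scoping a filter, not a substitute for knowing P1’s allocated block.

```python
import ipaddress
from collections import Counter

# A few data lines copied from the "show firewall log" output above.
LOG = """\
18:47:47 pfe A ae0.1 ICMP 130.130.33.1 10.3.255.2
18:47:47 pfe A ae0.1 ICMP 130.130.60.1 10.3.255.2
18:47:47 pfe A ae0.1 ICMP 130.130.48.1 10.3.255.2
18:47:47 pfe A ae0.1 ICMP 130.130.3.1 10.3.255.2
18:47:47 pfe A ae0.1 ICMP 130.130.88.1 10.3.255.2
18:47:47 pfe A ae0.1 ICMP 130.130.94.1 10.3.255.2
"""


def parse(log_text):
    """Yield (protocol, source, destination) from each seven-column data line."""
    for line in log_text.splitlines():
        fields = line.split()
        if len(fields) == 7:
            yield fields[4], fields[5], fields[6]


def covering_prefix(addresses):
    """Smallest IPv4 prefix containing every address."""
    ips = [ipaddress.ip_address(a) for a in addresses]
    net = ipaddress.ip_network(f"{ips[0]}/32")
    while not all(ip in net for ip in ips):
        net = net.supernet()
    return net


entries = list(parse(LOG))
print(Counter((proto, dst) for proto, _, dst in entries))
print(covering_prefix(src for _, src, _ in entries))  # 130.130.0.0/17
```

On these lines the covering prefix comes out as 130.130.0.0/17, which sits inside P1’s 130.130/16 block. A log is only a sample of the attack, so filtering on P1’s full /16 is the more conservative choice.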

A flow route is defined on R2:

{master}[edit]
jnpr@R2-RE0# show routing-options flow
route block_icmp_p1 {
    match {
        destination 10.3.255.2/32;
        source 130.130.0.0/16;
        protocol icmp;
    }
    then discard;
}

The flow matches all ICMP traffic sent to R2’s loopback address from any source in the 130.130/16 space, with a discard action. Once locally defined, the flow-spec is placed into effect. There is no validation for a local flow-spec, much as there is no need to validate a locally defined firewall filter. The current DDoS statistics confirm this, reporting a 0 PPS arrival rate:

{master}[edit]
jnpr@R2-RE0# run show ddos-protection protocols icmp
Protocol Group: ICMP
  Packet type: aggregate (Aggregate for all ICMP traffic)
    Aggregate policer configuration:
      Bandwidth:        20000 pps
      Burst:            20000 packets
      Priority:         high
      Recover time:     300 seconds
      Enabled:          Yes
    System-wide information:
      Aggregate bandwidth is no longer being violated
        No. of FPCs that have received excess traffic: 1
        Last violation started at: 2012-03-18 18:47:28 PDT
        Last violation ended at:   2012-03-18 18:52:59 PDT
        Duration of last violation: 00:05:31 Number of violations: 5
      Received:  58236794            Arrival rate:     0 pps
      Dropped:   2300036             Max arrival rate: 13620 pps
    Routing Engine information:
      Aggregate policer is never violated
      Received:  26237723            Arrival rate:     0 pps
      Dropped:   0                   Max arrival rate: 6683 pps
        Dropped by individual policers: 0
    FPC slot 2 information:
      Bandwidth: 30% (6000 pps), Burst: 30% (6000 packets), enabled
      Aggregate policer is no longer being violated
        Last violation started at: 2012-03-18 18:47:28 PDT
        Last violation ended at:   2012-03-18 18:52:59 PDT
        Duration of last violation: 00:05:31 Number of violations: 5
      Received:  58236794            Arrival rate:     0 pps
      Dropped:   2300036             Max arrival rate: 13620 pps
        Dropped by individual policers: 0
        Dropped by aggregate policer:   2300036

The presence of a flow-spec filter is confirmed with a show firewall command:

{master}[edit]
jnpr@R2-RE0# run show firewall | find flow
Filter: __flowspec_default_inet__
Counters:
Name                                     Bytes     Packets
10.3.255.2,130.130/16,proto=1     127072020948  2762435238

The presence of the flow-spec filter is good, but the non-zero counters confirm that it’s still matching a boatload of traffic to 10.3.255.2 from 130.130/16 sources for protocol 1 (ICMP), as per its definition. This is odd, because in theory R1 should now also be filtering this traffic, which clearly is not the case; more on that later.
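The counter name 10.3.255.2,130.130/16,proto=1 mirrors the three match conditions of block_icmp_p1. A packet matches a flow route only if it satisfies every component, as RFC 5575 specifies. As a rough illustration of that matching, here is a Python sketch. The FlowRoute class is hypothetical and models only the destination, source, and protocol components used in this case study.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class FlowRoute:
    """Hypothetical model of a flow route with three match components."""
    destination: ipaddress.IPv4Network
    source: ipaddress.IPv4Network
    protocol: int  # IP protocol number; 1 = ICMP

    def matches(self, src: str, dst: str, proto: int) -> bool:
        # Every component must match (logical AND), like the from conditions of a filter term.
        return (ipaddress.ip_address(dst) in self.destination
                and ipaddress.ip_address(src) in self.source
                and proto == self.protocol)


block_icmp_p1 = FlowRoute(
    destination=ipaddress.ip_network("10.3.255.2/32"),
    source=ipaddress.ip_network("130.130.0.0/16"),
    protocol=1,
)

print(block_icmp_p1.matches("130.130.33.1", "10.3.255.2", 1))  # True: ICMP from P1 to R2
print(block_icmp_p1.matches("130.130.33.1", "10.3.255.2", 6))  # False: TCP, not ICMP
print(block_icmp_p1.matches("120.120.1.1", "10.3.255.2", 1))   # False: source in T1's block
```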

It’s also possible to display the inetflow.0 table directly to see both local and remote entries. The table on R2 currently has only its one locally defined flow-spec:

{master}[edit]
jnpr@R2-RE0# run show route table inetflow.0 detail
inetflow.0: 1 destinations, 1 routes (1 active, 0 holddown, 0 hidden)
10.3.255.2,130.130/16,proto=1/term:1 (1 entry, 1 announced)
        *Flow   Preference: 5
                Next hop type: Fictitious
                Address: 0x8df4664
                Next-hop reference count: 1
                State: <Active>
                Local AS: 4259905000
                Age: 8:34
                Task: RT Flow
                Announcement bits (2): 0-Flow 1-BGP_RT_Background
                AS path: I
                Communities: traffic-rate:0:0

Don’t be alarmed by the fictitious next hop. It’s an artifact of using BGP to carry the flow-spec: BGP expects routes to have next hops, while a flow-spec has no such need. Note also how the discard action is conveyed via a community that encodes an action of rate-limiting the matching traffic to zero.
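RFC 5575 defines traffic-rate as a BGP extended community. It has type 0x80 and sub-type 0x06, followed by a 2-octet AS number and a 4-octet IEEE 754 floating-point rate in bytes per second. A rate of 0 means the matching traffic is discarded. The traffic-rate:0:0 shown above appears to correspond to AS 0 and a rate of 0. The Python sketch below packs and unpacks that 8-octet value with the standard struct module. The function names are hypothetical.

```python
import struct
from typing import Tuple

# Layout per RFC 5575: type, sub-type, 2-octet AS, 4-octet float; network byte order, no padding.
TRAFFIC_RATE_FMT = "!BBHf"


def encode_traffic_rate(asn: int, rate_bytes_per_sec: float) -> bytes:
    """Build a traffic-rate extended community (type 0x80, sub-type 0x06)."""
    return struct.pack(TRAFFIC_RATE_FMT, 0x80, 0x06, asn, rate_bytes_per_sec)


def decode_traffic_rate(community: bytes) -> Tuple[int, float]:
    """Return (AS, rate in bytes per second) from an 8-octet traffic-rate community."""
    ctype, subtype, asn, rate = struct.unpack(TRAFFIC_RATE_FMT, community)
    if (ctype, subtype) != (0x80, 0x06):
        raise ValueError("not a traffic-rate extended community")
    return asn, rate


discard = encode_traffic_rate(0, 0.0)  # traffic-rate:0:0, i.e. discard
print(discard.hex())                   # 8006000000000000
print(decode_traffic_rate(discard))    # (0, 0.0)
```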

With R2 looking good, let’s move on to determine why R1 is apparently not yet filtering this flow. Things begin with a confirmation that the flow route is advertised to R1:

{master}[edit]
jnpr@R2-RE0# run show route advertising-protocol bgp 10.3.255.1 table inetflow.0
inetflow.0: 1 destinations, 1 routes (1 active, 0 holddown, 0 hidden)
  Prefix                  Nexthop              MED     Lclpref    AS path
  10.3.255.2,130.130/16,proto=1/term:1
*                         Self                         100        I

As expected, the output confirms that R2 is sending the flow-spec to R1. You would therefore expect to find a matching entry in R1’s inetflow.0 table. You would also expect a dynamically created filter discarding the attack traffic at ingress from P1 as it arrives on the xe-2/1/1 interface. But recall that R2’s local flow route is showing a high packet count and discard rate, which clearly indicates that R1 is still letting this traffic through.

Your curiosity piqued, you move to R1 and find the flow route is hidden:

{master}[edit]
jnpr@R1-RE0# run show route table inetflow.0 hidden detail
inetflow.0: 1 destinations, 1 routes (0 active, 0 holddown, 1 hidden)
10.3.255.2,130.130/16,proto=1/term:N/A (1 entry, 0 announced)
         BGP                 /-101
                Next hop type: Fictitious
                Address: 0x8df4664
                Next-hop reference count: 1
                State: <Hidden Int Ext>
                Local AS: 65000.65000 Peer AS: 65000.65000
                Age: 16:19
                Task: BGP_65000.65000.10.3.255.2+179
                AS path: I
                Communities: traffic-rate:0:0
                Accepted
                Validation state: Reject, Originator: 10.3.255.2
                Via: 10.3.255.2/32, Active
                Localpref: 100
                Router ID: 10.3.255.2

Given that the flow route is hidden, no filter has been created at R1:

{master}[edit]
jnpr@R1-RE0# run show firewall | find flow
Pattern not found

{master}[edit]

As a result, the attack data is confirmed to be leaving R1’s ae0 interface on its way to R2:

Interface: ae0, Enabled, Link is Up
Encapsulation: Ethernet, Speed: 20000mbps
Traffic statistics:                                      Current delta
  Input bytes:               158387545 (6624 bps)        [0]
  Output bytes:          4967292549831 (2519104392 bps)  [0]
  Input packets:               2335568 (12 pps)          [0]
  Output packets:          52813462522 (6845389 pps)     [0]
Error statistics:
  Input errors:                      0                   [0]
  Input drops:                       0                   [0]
  Input framing errors:              0                   [0]
  Carrier transitions:               0                   [0]
  Output errors:                     0                   [0]
  Output drops:                      0                   [0]
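The Reject validation state in the hidden route points to flow-route validation. As a rough model of the default check, the Python sketch below implements only the first feasibility rule from RFC 5575. Under that rule, a flow route is usable only when its originator matches the originator of the best-match unicast route for the flow’s destination prefix. Several things are left out or assumed:

- The RFC’s second rule, about more-specific routes from a different neighboring AS, is omitted.
- Junos’s implementation may differ in detail.
- The UnicastRoute class and the contents of r1_rib are hypothetical, modeled on this topology.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class UnicastRoute:
    prefix: str
    protocol: str              # e.g. "IS-IS" or "BGP"
    originator: Optional[str]  # BGP originator; None for non-BGP routes


def best_match(rib: List[UnicastRoute], destination: str) -> Optional[UnicastRoute]:
    """Longest-prefix match for the flow's destination prefix in the unicast RIB."""
    dest = ipaddress.ip_network(destination)
    covering = [r for r in rib if dest.subnet_of(ipaddress.ip_network(r.prefix))]
    return max(covering, key=lambda r: ipaddress.ip_network(r.prefix).prefixlen, default=None)


def flow_route_feasible(destination: str, flow_originator: str,
                        rib: List[UnicastRoute]) -> bool:
    """Simplified RFC 5575 rule (a): originators of flow and best unicast route must match."""
    best = best_match(rib, destination)
    return best is not None and best.protocol == "BGP" and best.originator == flow_originator


# Hypothetical view of R1's inet.0: R2's loopback via IS-IS, one entry standing in for P1's routes.
r1_rib = [
    UnicastRoute("10.3.255.2/32", "IS-IS", None),
    UnicastRoute("130.130.0.0/16", "BGP", "192.168.0.1"),
]

print(flow_route_feasible("10.3.255.2/32", "10.3.255.2", r1_rib))  # False
```

In this model the check would pass only if R1’s best route to 10.3.255.2/32 were a BGP route originated by R2.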

Considering the hidden flow-spec and its Reject validation state, the answer becomes clear: this is a validation failure. By default, a received flow-spec route is accepted only if its originator is also the originator of the best-match unicast route for the destination prefix embedded in the flow-spec. Here, the flow-spec’s destination is R2’s loopback address, 10.3.255.2/32. R1 learns its best route to that prefix through IS-IS rather than from R2 through BGP; note the Via: 10.3.255.2/32 entry in the validation output. As a result, the flow-spec does not pass the default validation procedure. You can work around this issue by using the no-validate option along with a policy at R1 that tells it to accept the route. First, the policy:

{master}[edit]
jnpr@R1-RE0# show policy-options policy-statement accept_icmp_flow_route
term 1 {
    from {
        route-filter 10.3.255.2/32 exact;
    }
    then accept;
}

The policy is applied under the flow family using the no-validate keyword:

{master}[edit]
jnpr@R1-RE0# show protocols bgp group int
type internal;
local-address 10.3.255.1;
family inet {
    unicast;
    flow {
        no-validate accept_icmp_flow_route;
    }
}
bfd-liveness-detection {
    minimum-interval 2500;
    multiplier 3;
}
neighbor 10.3.255.2;

After the change is committed, the resulting flow-spec filter is confirmed at R1:

{master}[edit]
jnpr@R1-RE0# run show firewall | find flow
Filter: __flowspec_default_inet__
Counters:
Name                                     Bytes     Packets
10.3.255.2,130.130/16,proto=1       9066309970   197093695

The 10.3.255.2,130.130/16,proto=1 flow-spec filter has been activated at R1, a good indication that the flow-spec route is no longer hidden due to a validation failure. The net result that you have worked so hard for is that the attack data is no longer transported across your network only to be discarded at R2. The near-idle output counters on R1’s ae0 confirm this:

{master}[edit]
jnpr@R1-RE0# run monitor interface ae0
Next='n', Quit='q' or ESC, Freeze='f', Thaw='t', Clear='c', Interface='i'
R1-RE0                      Seconds: 0                   Time: 19:18:22
                                                         Delay: 16/16/16
Interface: ae0, Enabled, Link is Up
Encapsulation: Ethernet, Speed: 20000mbps
Traffic statistics:                                      Current delta
  Input bytes:               158643821 (6480 bps)        [0]
  Output bytes:          5052133735948 (5496 bps)        [0]
  Input packets:               2339427 (12 pps)          [0]
  Output packets:          54657835299 (10 pps)          [0]
Error statistics:
  Input errors:                      0                   [0]
  Input drops:                       0                   [0]
  Input framing errors:              0                   [0]
  Carrier transitions:               0                   [0]
  Output errors:                     0                   [0]
  Output drops:                      0                   [0]

Junos OS, combined with Trio-based PFEs, offers rich stateless firewall filtering, a wide range of policing options, and some really cool built-in DDoS protection capabilities. All are performed in hardware, so you can enable them in a scaled production environment without appreciable impact on forwarding rates.

Even if you deploy your MX in the core, where edge-related traffic conditioning and contract enforcement are typically not required, you still need stateless filters, policers, and/or DDoS protection. They protect your router’s control plane from unsupported services and guard against excessive traffic, whether good or bad. Together they ensure that the router remains secure and continues to operate as intended, even during periods of abnormally high control plane traffic, whether legitimate or attack based.

This chapter provided current best-practice templates for strong RE protection filters for both the IPv4 and IPv6 control planes. All readers should compare their current RE protection filters to the examples provided to decide whether any modifications are needed to maintain current best practice in this complex, but all too important, subject. The new DDoS feature, supported on Trio line cards only, works symbiotically with RE protection filters or can function standalone. It acts as a robust primary, secondary, and tertiary line of defense, protecting the control plane from resource exhaustion caused by excessive traffic. Such traffic could otherwise impact service or, worse, render the device inoperable and effectively unreachable during the very times you need access to it most!
