Chapter 4. One Box, Many Options

It isn’t often that you can accurately predict all of the business demands and requirements when working in IT; it seems as if the target is always moving, requiring that you always adjust. Adapting to change is required when working with technology and supporting constantly changing business requirements. To react quickly, you must have the flexibility to choose the best tool for the job. Having multiple options is always a winning strategy against any opponent.

The Juniper QFX5100 is a powerful series of switches because it puts many different options at hand. You are not forced to use a particular option; instead, you decide which technology makes the most sense in a particular situation. The Juniper QFX5100 family can support the following technology options:

- Standalone
- Virtual Chassis Fabric (VCF)
- QFabric Node
- Virtual Chassis
- Multi-Chassis Link Aggregation (MC-LAG)
- Clos Fabric

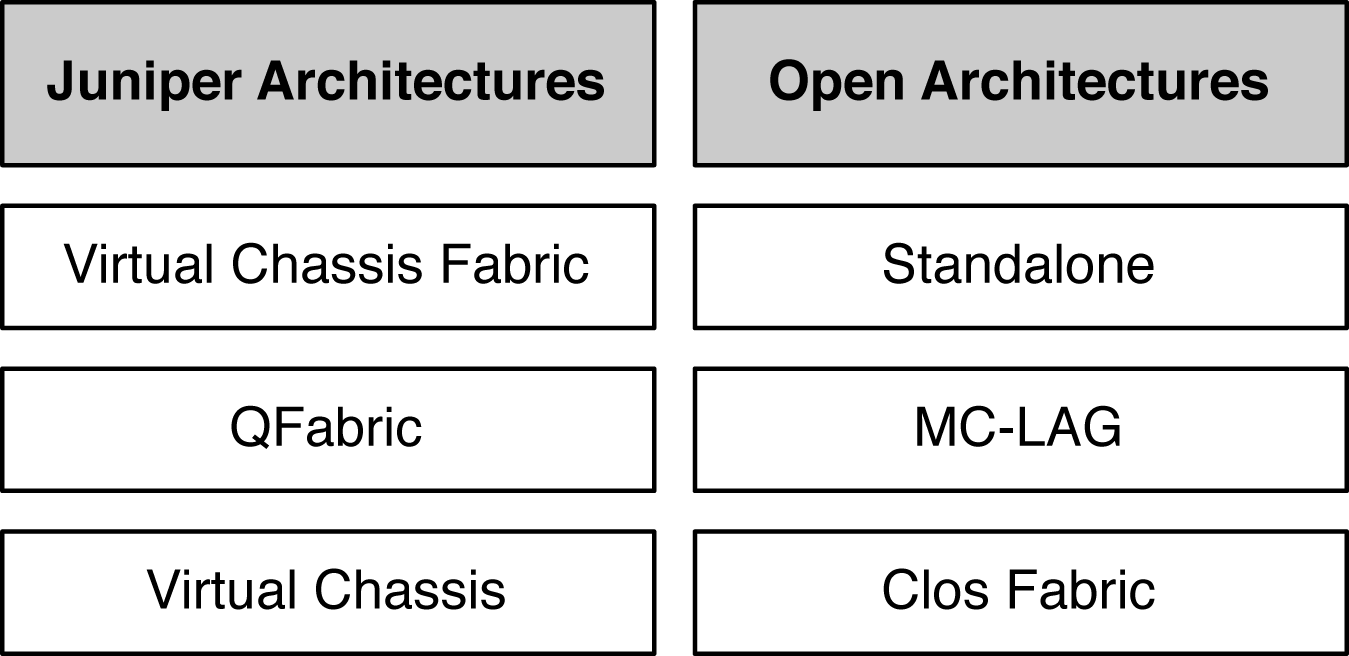

Not only do you have multiple options, but you can also choose to deploy a Juniper architecture or an open architecture (see Figure 4-1). You have the ability to take advantage of turnkey Ethernet fabrics or simply create your own and integrate products from other vendors as you go along.

Figure 4-1. Juniper architectures and open architectures options

This chapter is intended to introduce you to the many different options the Juniper QFX5100 offers. We’ll investigate each option, one by one, and get a better idea about what each technology can do for you and where it can be used in your network.

Standalone

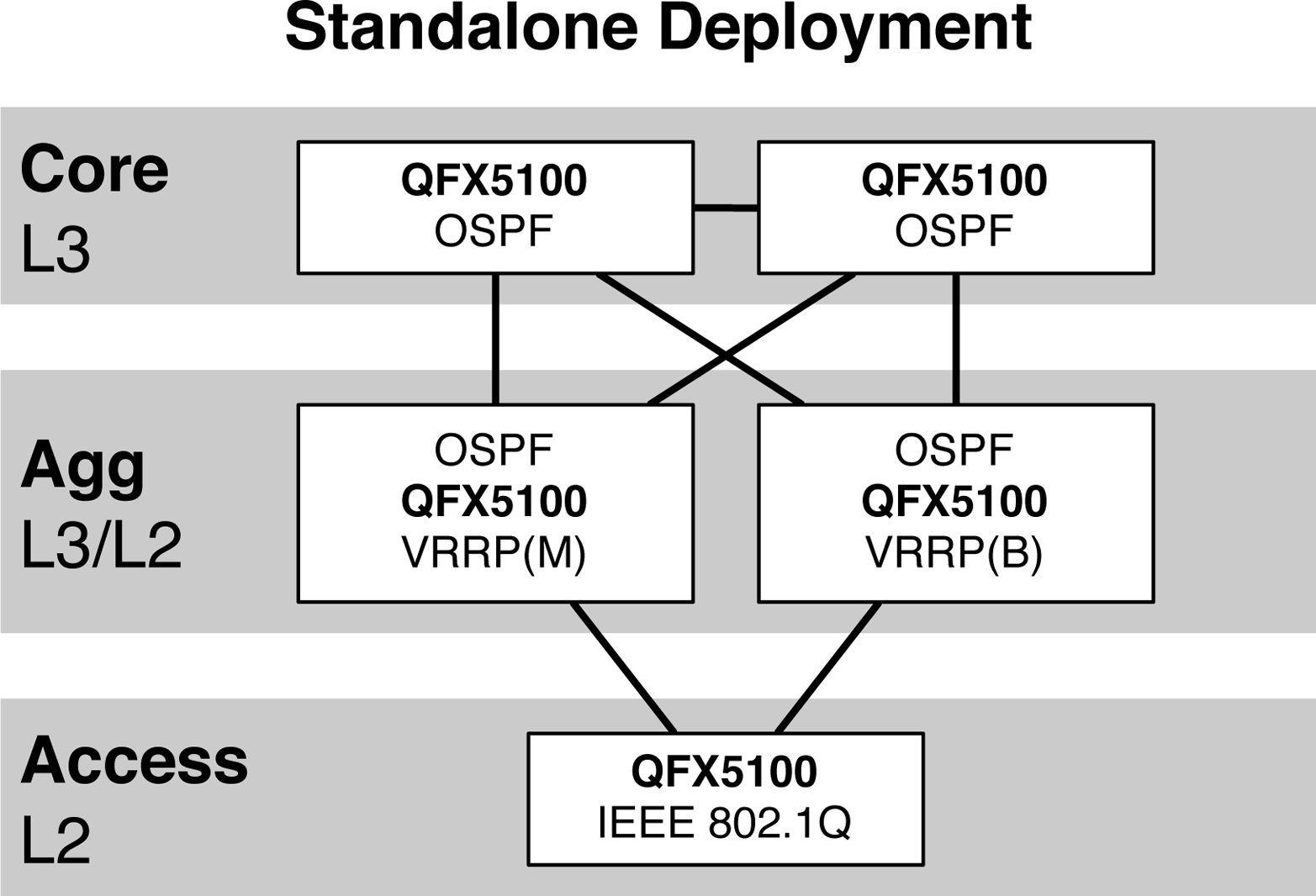

The most obvious way to implement a Juniper QFX5100 switch is in standalone mode, just a simple core, aggregation, or access switch. Each Juniper QFX5100 switch operates independently and uses standard routing and switching protocols to forward traffic in the network, as illustrated in Figure 4-2.

The Juniper QFX5100 switches in the core layer in Figure 4-2 are running only Open Shortest Path First (OSPF) to provide Layer 3 connectivity. The switches in the aggregation layer are running both OSPF and Virtual Router Redundancy Protocol (VRRP); this is to provide Layer 3 connectivity to both the core and access layers. The links from the aggregation switches to the access switch are simple Layer 2 interfaces running IEEE 802.1Q. The aggregation switch on the left is the VRRP master. It provides Layer 3 gateway services to the access switch.

Figure 4-2. Standalone deployment

Note

In Figure 4-2, notice that the two aggregation switches do not have a connection between them. This is intentional. VRRP requires a Layer 2 connection between the master and backup switches; otherwise, the election process wouldn’t work. In this example, the two switches have a Layer 2 connection through the access switch, so VRRP is able to elect a master. Another design benefit of removing the Layer 2 link between the aggregation switches is that it physically eliminates the possibility of a Layer 2 loop in the network.

The benefit of a standalone deployment is that you can easily implement the Juniper QFX5100 switch into an existing network using standards-based protocols. Easy peasy!
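To make the VRRP behavior in Figure 4-2 a little more concrete, here is a minimal Python sketch of the election itself. It assumes the standard rule that the highest priority wins and that a tie is broken by the higher primary IP address. The switch names, priorities, and addresses are hypothetical and do not come from the figure.

```python
from dataclasses import dataclass
from ipaddress import IPv4Address


@dataclass(frozen=True)
class VrrpRouter:
    name: str             # hypothetical label for the switch
    priority: int         # VRRP priority; a higher value is preferred
    address: IPv4Address  # primary IP address on the shared Layer 2 segment


def elect_master(routers):
    """Return the router that wins the election: highest priority,
    with the higher primary IP address breaking a tie."""
    if not routers:
        raise ValueError("at least one reachable VRRP router is required")
    return max(routers, key=lambda r: (r.priority, int(r.address)))


# Hypothetical values for the two aggregation switches.
agg_left = VrrpRouter("agg-left", 200, IPv4Address("192.0.2.2"))
agg_right = VrrpRouter("agg-right", 100, IPv4Address("192.0.2.3"))

# Both switches hear each other through the access switch, so one master emerges.
print(elect_master([agg_left, agg_right]).name)  # agg-left

# If agg-left becomes unreachable, the backup takes over the gateway role.
print(elect_master([agg_right]).name)  # agg-right
```

The sketch models only the outcome of the election; timers, advertisements, and preemption are left out.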

Virtual Chassis

When Juniper released its first switch, the EX4200, one of the innovations was Virtual Chassis, which took traditional “stacking” to the next level. By virtualizing all of the functions of a physical chassis, this technology made it possible for a set of physical switches to form a virtualized chassis, complete with master and backup routing engines, line cards, and a true single point of management.

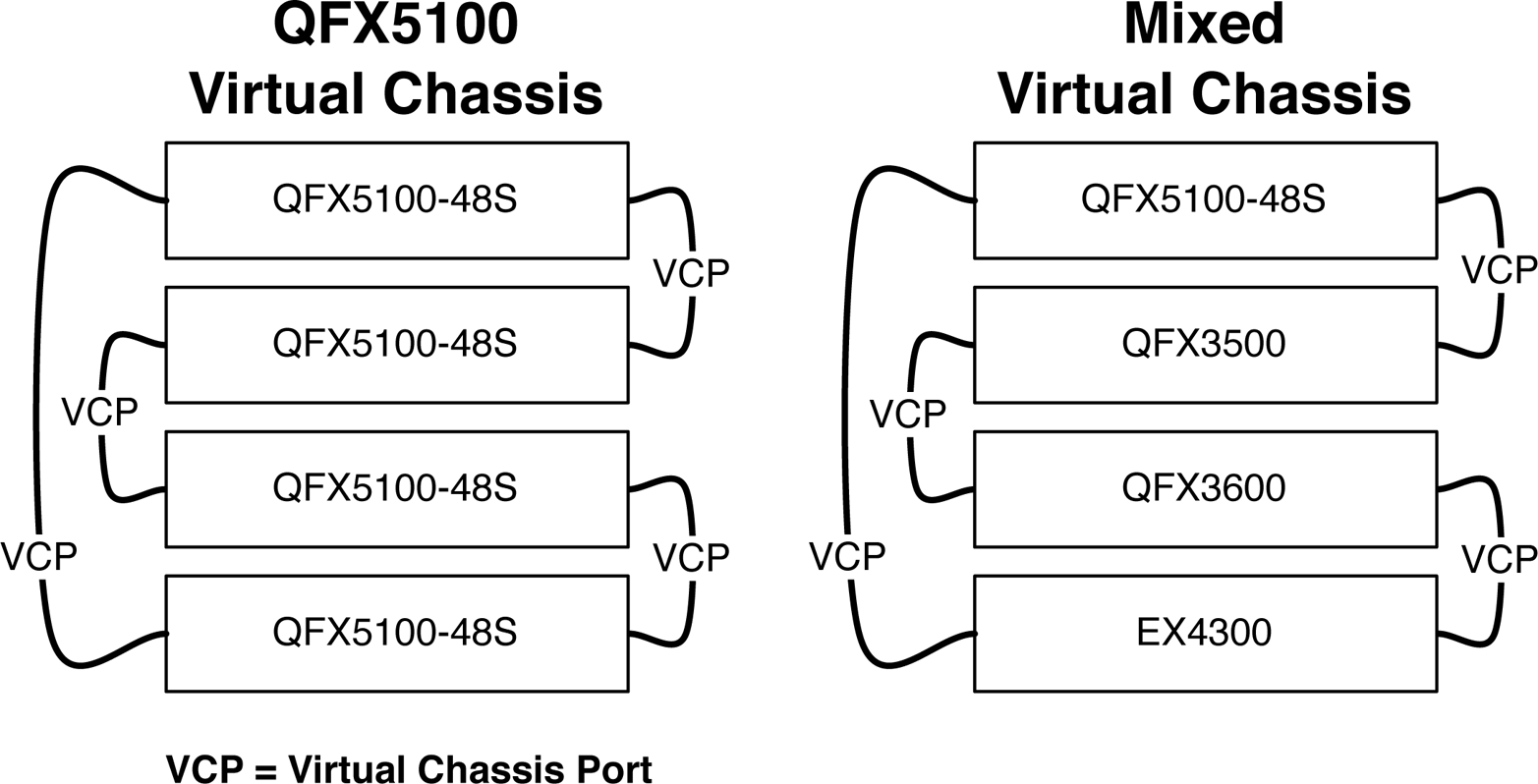

The Juniper QFX5100 family continues to support Virtual Chassis. You can form a Virtual Chassis between a set of QFX5100 switches or create a mixed Virtual Chassis by using the QFX3500, QFX3600, or EX4300, as demonstrated in Figure 4-3.

Figure 4-3. QFX5100 Virtual Chassis and mixed Virtual Chassis

Warning

Figure 4-3 doesn’t specify the best current practice on how to cable VCPs between switches; instead, it simply illustrates that the Juniper QFX5100 series supports regular Virtual Chassis and a mixed Virtual Chassis with other devices.

Virtual Chassis is a great technology to reduce the number of devices to manage in the access tier of a data center or campus. In the example in Figure 4-3, the Juniper QFX5100 Virtual Chassis has four physical devices, but only a single point of management.

One drawback of Virtual Chassis is the scale and topology. Virtual Chassis allows a maximum of 10 switches and is generally deployed in a ring topology. Traffic going from one switch to another in a ring topology is subject to nondeterministic latency and over-subscription, depending on how many transit switches are between the source and destination. To be able to take innovation to the next level, a new technology is required to increase the scale and provide deterministic latency and over-subscription.
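The effect of the ring on latency can be sketched with a few lines of Python. This is an illustration only: it assumes traffic takes the shorter way around the ring and simply counts the transit switches between two members. The function name is hypothetical.

```python
def ring_transit_hops(src, dst, members):
    """Number of transit switches between src and dst on the shorter
    path around a ring of `members` switches (positions 0..members-1)."""
    if not (0 <= src < members and 0 <= dst < members):
        raise ValueError("switch position out of range")
    distance = abs(src - dst)
    links = min(distance, members - distance)  # go whichever way is shorter
    return max(links - 1, 0)                   # switches strictly in between


MEMBERS = 10  # Virtual Chassis maximum cited in the text

for dst in range(1, MEMBERS):
    hops = ring_transit_hops(0, dst, MEMBERS)
    print(f"member 0 -> member {dst}: {hops} transit switches")
```

With 10 members, a neighbor is reached with no transit switches, whereas the member on the opposite side of the ring sits behind four of them. That spread is the nondeterminism described above.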

QFabric

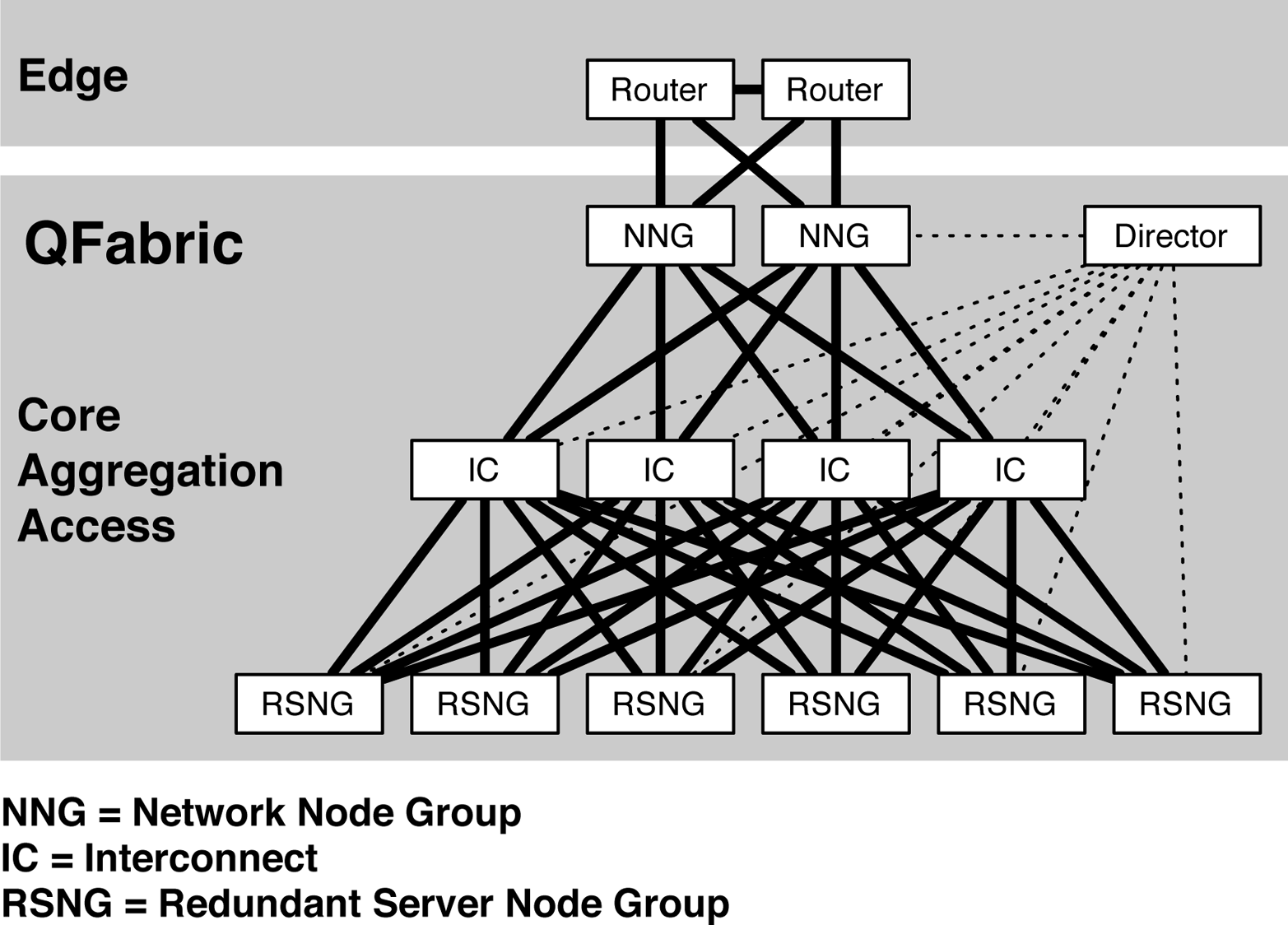

QFabric is the next step up from Virtual Chassis. It’s able to scale up to 128 switches and uses an internal 3-stage Clos topology to provide deterministic latency and over-subscription. With higher scale and performance, QFabric has the ability to collapse the core, aggregation, and access into a single data center tier, as shown in Figure 4-4.

Figure 4-4. QFabric architecture and roles

All of the components in the core, aggregation, and access tier (the large gray box in Figure 4-4) make up the QFabric architecture. The components and function of a QFabric architecture are listed in Table 4-1.

| Component | Tier | Function |
|---|---|---|
| IC | Core and aggregation | All traffic flows through the Interconnect (IC) switches; they act as the middle stage in a 3-stage Clos fabric. |
| RSNG | Access | All servers, storage, and other end points connect into the Redundant Server Node Group (RSNG) top-of-rack (ToR) switches for connectivity into the fabric. |
| NNG | Routing | Any other devices that need to peer to QFabric through a standard routing protocol such as OSPF or Border Gateway Protocol (BGP) are required to peer into a Network Node Group (NNG). |
| Director | Control plane | Although QFabric is a set of many physical devices, it’s managed as a single switch. The control plane has been virtualized and placed outside of the fabric. Each component in QFabric has a connection to the Director. All configuration and management is performed from a pair of Directors. |

Managing an entire data center network through a single, logical switch has tremendous operational benefits. You no longer need to worry about routing and switching protocols between the core, aggregation, and access tiers in the network. The QFabric architecture handles all of the routing and switching logic for you; it simply provides you a turnkey Ethernet fabric that can scale up to 128 ToR switches.

The Juniper QFX5100 series is able to participate in the QFabric architecture as a ToR switch or RSNG. An important benefit of using a Juniper QFX5100 switch as an RSNG in a QFabric architecture is that it increases the logical scale of QFabric as compared to using the QFX3500 or QFX3600 as an RSNG. A QFabric data center that uses only QFX5100 RSNGs can reach the higher logical scale described in Chapter 3.

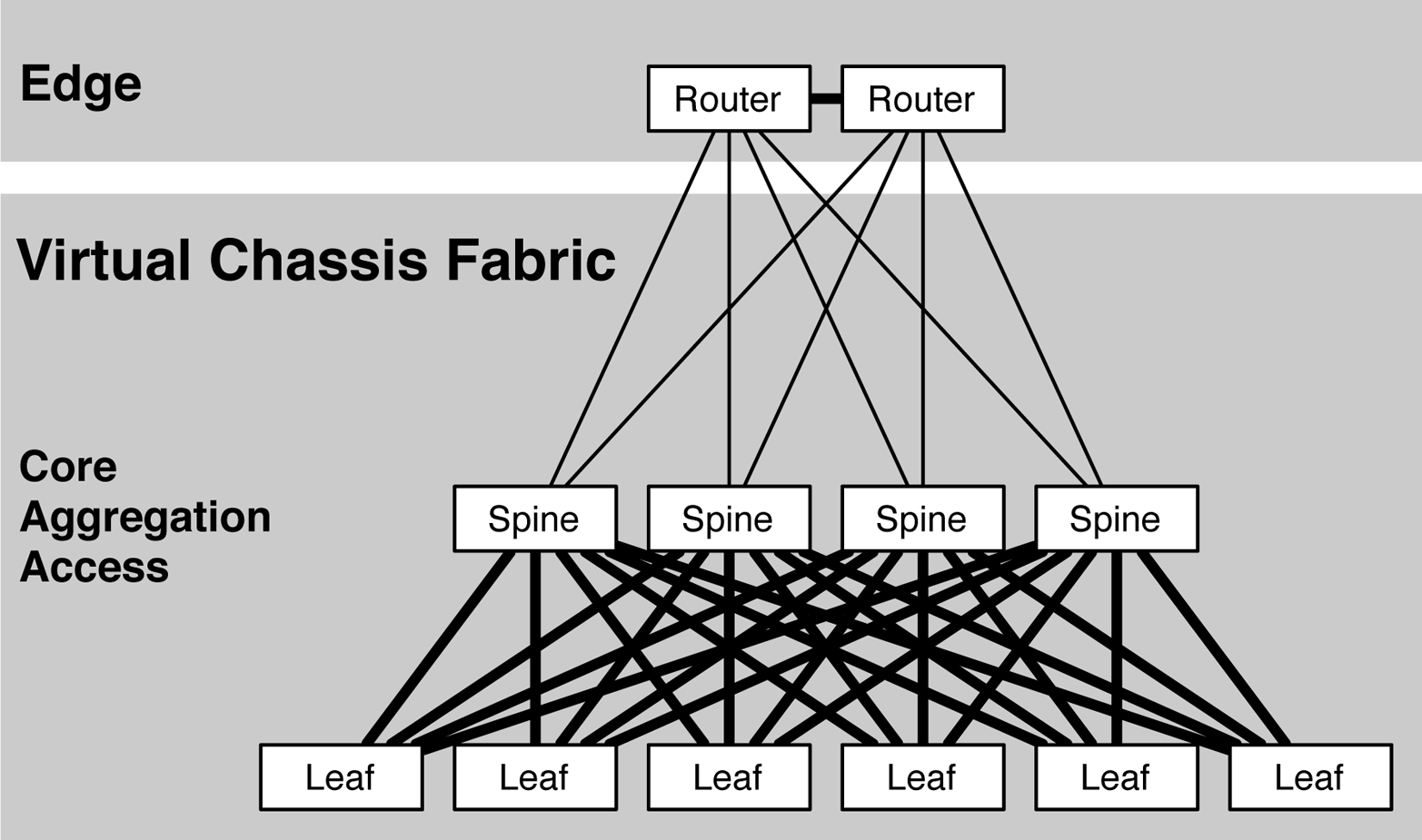

Virtual Chassis Fabric

If the scale of Virtual Chassis is a bit too small and the QFabric a bit too big, Juniper’s next innovation is VCF; it’s a perfect fit between traditional Virtual Chassis and QFabric. By adopting the best attributes of Virtual Chassis and QFabric, Juniper has created a new technology with which you can build a plug-and-play Ethernet fabric that scales up to 32 members and provides deterministic latency and over-subscription with an internal 3-stage Clos topology, as depicted in Figure 4-5.

Figure 4-5. VCF architecture

At first glance, VCF and QFabric look very similar. A common question is, “What’s different?” Table 4-2 looks at what the technologies have in common and what separates them.

VCF offers features and capabilities that are above and beyond QFabric and is a great technology to collapse multiple tiers in a data center network. As of this writing, the only limitation is that VCF allows a maximum of 32 members. One of the main differences is the introduction of a concept called Universal Server Ports. This makes it possible for a server to plug in anywhere in the topology. For example, a server can plug into either a leaf or spine switch in VCF. With QFabric, on the other hand, you can plug servers only into QFabric Nodes, because the IC switches are reserved for QFabric Nodes.

The Juniper QFX5100 family can be used in both the spine and leaf roles of VCF. You can use the EX4300 series in VCF, too, but only as a leaf. Table 4-3 presents device compatibility in a VCF as of this writing.

| Switch | Spine | Leaf |
|---|---|---|
| QFX5100-24Q | Yes | Yes |
| QFX5100-96S | Yes | Yes |
| QFX5100-48S | Yes | Yes |
| QFX5100-48T | No | Yes |
| QFX3500 | No | Yes |
| QFX3600 | No | Yes |
| EX4300 | No | Yes |

In summary, the spine in a VCF must be a Juniper QFX5100-24Q, QFX5100-96S, or QFX5100-48S, but you can use any QFX5100 model, as well as QFX3500, QFX3600, and EX4300 series switches, as a leaf.
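The compatibility rules in Table 4-3 are easy to encode as a quick sanity check for a planned VCF. The following Python sketch transcribes the table and the 32-member limit from the text; the function name and the shape of the plan are assumptions made for illustration.

```python
# Roles each model may take in a VCF, transcribed from Table 4-3.
VCF_ROLES = {
    "QFX5100-24Q": {"spine", "leaf"},
    "QFX5100-96S": {"spine", "leaf"},
    "QFX5100-48S": {"spine", "leaf"},
    "QFX5100-48T": {"leaf"},
    "QFX3500": {"leaf"},
    "QFX3600": {"leaf"},
    "EX4300": {"leaf"},
}
MAX_MEMBERS = 32  # VCF member limit cited in the text


def validate_vcf(plan):
    """Return a list of problems found in `plan`, a list of (model, role) pairs."""
    problems = []
    if len(plan) > MAX_MEMBERS:
        problems.append(f"{len(plan)} members exceeds the limit of {MAX_MEMBERS}")
    for model, role in plan:
        allowed = VCF_ROLES.get(model)
        if allowed is None:
            problems.append(f"{model} is not listed in Table 4-3")
        elif role not in allowed:
            problems.append(f"{model} cannot be a {role}")
    return problems


plan = [("QFX5100-24Q", "spine"), ("QFX5100-48T", "spine"), ("EX4300", "leaf")]
print(validate_vcf(plan))  # ['QFX5100-48T cannot be a spine']
```

Because the table reflects support as of the book's writing, treat the dictionary as a snapshot to update rather than a permanent rule.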

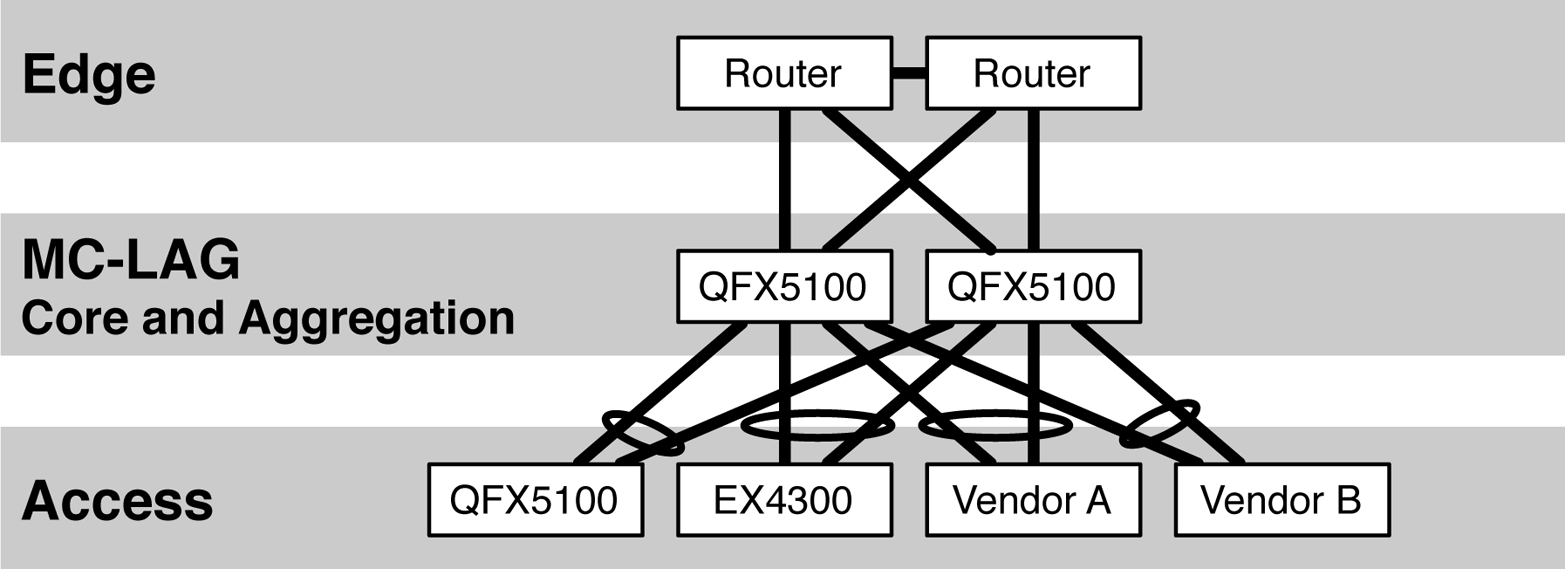

MC-LAG

Virtual Chassis, QFabric, and VCF are all Juniper architectures. Let’s move back into the realm of open architectures and take a look at MC-LAG. In a network with multiple vendors, it’s desirable to choose protocols that support different vendors, as shown in Figure 4-6.

Figure 4-6. MC-LAG architecture

The figure shows that the Juniper QFX5100 family supports the MC-LAG protocol between two switches. All switches in the access tier simply speak IEEE 802.1AX/LACP to the pair of QFX5100 switches in the core and aggregation tier. Each access switch is unaware of MC-LAG and speaks only IEEE 802.1AX/LACP. Although there are two physical QFX5100 switches running MC-LAG, each access switch sees only two physical interfaces and combines them into a single logical aggregated Ethernet interface.

All of the Juniper QFX5100 platforms support MC-LAG, and you can use any switch in the access layer that supports IEEE 802.1AX (formerly IEEE 802.3ad)/LACP.
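Why does an access switch treat two separate QFX5100 switches as one LACP partner? A rough Python sketch of the idea follows. It assumes, as is common in MC-LAG designs, that both peers advertise the same LACP system ID and key, so the access switch has no reason to place its two uplinks in different bundles. The interface names and identifiers are hypothetical.

```python
from collections import defaultdict


def bundle_links(links):
    """Group local ports by the LACP partner identity learned on each one.

    `links` maps a local port name to the (system_id, key) pair advertised
    by the device at the other end. Ports whose partners advertise the same
    pair are eligible to join the same aggregated Ethernet bundle.
    """
    bundles = defaultdict(list)
    for port, partner in links.items():
        bundles[partner].append(port)
    return {partner: sorted(ports) for partner, ports in bundles.items()}


# Hypothetical values: both MC-LAG peers advertise one shared system ID and key.
SHARED = ("00:01:02:03:04:05", 1)
access_switch_links = {
    "xe-0/0/0": SHARED,  # uplink to the first QFX5100
    "xe-0/0/1": SHARED,  # uplink to the second QFX5100
}
print(bundle_links(access_switch_links))
# {('00:01:02:03:04:05', 1): ['xe-0/0/0', 'xe-0/0/1']}
```

If the two peers advertised different identities, the same function would return two single-port groups, which is how the uplinks would look without MC-LAG.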

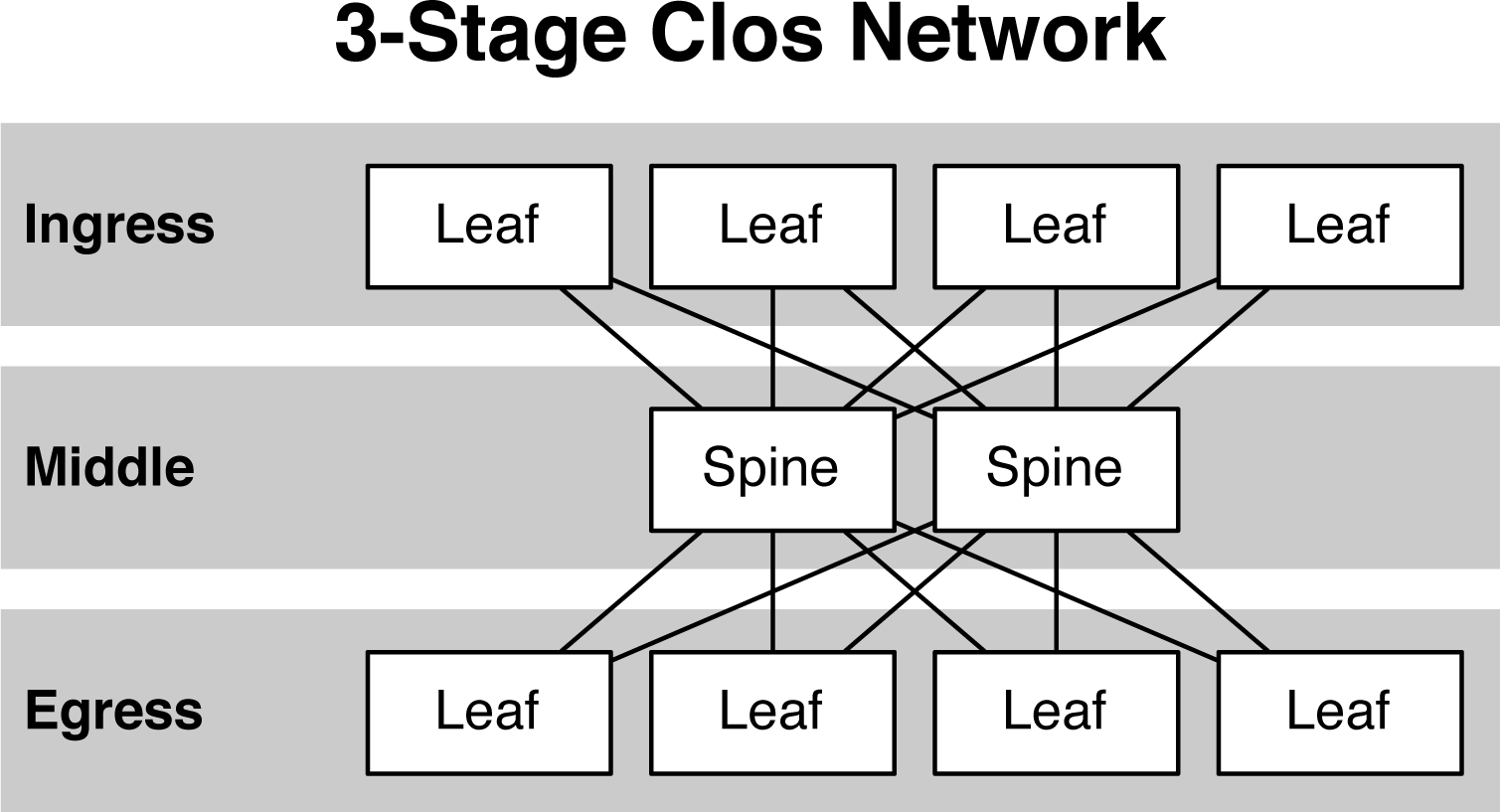

Clos Fabric

When scale is a large factor in building a data center, many engineers turn toward building a Clos fabric with which they can easily scale to hundreds of thousands of ports. The most common Clos network is a 3-stage topology, as illustrated in Figure 4-7.

Figure 4-7. Architecture of Clos network

Depending on the port density of the switches used in a Clos network, the number of leaves can easily exceed 500 devices. Due to the large scale of Clos networks, it’s a bad idea to use traditional Layer 2 protocols such as spanning tree or MC-LAG because they create large broadcast domains and excessive flooding. Clos fabrics are Layer 3 in nature because routing protocols scale in an orderly fashion and reduce the amount of flooding. If Layer 2 connectivity is required, a higher-level architecture such as overlay networking goes hand-in-hand with a Clos network. There are many options when it comes to routing protocols, but traditionally, BGP is used, primarily for four reasons:

- Support for multiple protocol families (inet, inet6, evpn)
- Multivendor stability
- Scale
- Traffic engineering and tagging

The Juniper QFX5100 series works exceedingly well at any tier in a Clos network. The Juniper QFX5100-24Q works well in the spine because of its high density of 40GbE interfaces. Other models such as the Juniper QFX5100-48S or QFX5100-96S work very well in the leaf because most hosts require 10GbE access, and the spine operates at 40GbE.
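A little arithmetic shows how port density drives the size and over-subscription of a 3-stage Clos. The Python sketch below is only an illustration: the port counts are assumed values for a 10GbE leaf with 40GbE uplinks and a 40GbE spine, not figures taken from this chapter, so check them against the data sheets for the models you plan to use.

```python
def oversubscription(access_ports, access_gbps, uplinks, uplink_gbps):
    """Ratio of host-facing bandwidth to spine-facing bandwidth on one leaf."""
    return (access_ports * access_gbps) / (uplinks * uplink_gbps)


def max_leaves(spine_ports):
    """In a 3-stage Clos, each leaf uses one port on every spine,
    so the spine port count caps the number of leaves."""
    return spine_ports


# Assumed, illustrative port counts; check them against the data sheet.
LEAF_ACCESS_PORTS = 48  # 10GbE host ports on a leaf
LEAF_UPLINKS = 6        # 40GbE uplinks, one per spine
SPINE_PORTS = 24        # 40GbE ports on a spine

ratio = oversubscription(LEAF_ACCESS_PORTS, 10, LEAF_UPLINKS, 40)
leaves = max_leaves(SPINE_PORTS)
print(f"oversubscription {ratio:.0f}:1")           # oversubscription 2:1
print(f"host ports {leaves * LEAF_ACCESS_PORTS}")  # host ports 1152
```

Raising the spine port count raises the leaf ceiling proportionally, which is why denser spines translate directly into larger fabrics.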

Clos fabrics are covered in much more detail in Chapter 7.

Transport Gymnastics

The Juniper QFX5100 series handles a large variety of different data plane encapsulations and technologies. The end result is that a single platform can solve many types of problems in the data center, campus, and WAN. There are five major types of transport that Juniper QFX5100 platforms support:

- MPLS
- VXLAN
- Ethernet
- FCoE
- HiGig2

The Juniper QFX5100 is unusual in the world of merchant silicon switches because of the number of transport encapsulations enabled on the switch. Typically, other vendors don’t support MPLS, Fibre Channel over Ethernet (FCoE), or HiGig2. Now that you have access to all of these major encapsulations, what can you do with them?

MPLS

Right out of the box, MPLS is one of the key differentiators of Juniper QFX5100 switches. Typically, such technology is reserved only for big service provider routers such as the Juniper MX. As of this writing, the QFX5100 family supports the following MPLS features:

- LDP
- RSVP
- LDP tunneling over RSVP
- L3VPN
- MPLS automatic bandwidth allocation
- Policer actions
- Traffic engineering extensions for OSPF and IS-IS
- MPLS Ping

One thing to note is that Juniper QFX5100 platforms don’t support as many MPLS features as the Juniper MX, but all of the basic functionality is there. The Juniper QFX5100 family also supports MPLS within the scale of the underlying Broadcom chipset, as outlined in Chapter 3.
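For readers who want to see what MPLS adds to a packet, the following Python sketch packs and unpacks a standard 32-bit label stack entry: a 20-bit label, a 3-bit traffic class, a bottom-of-stack bit, and an 8-bit TTL. The function names and label values are arbitrary examples and have nothing to do with how the QFX5100 programs its hardware.

```python
import struct


def encode_label(label, tc=0, bottom=True, ttl=64):
    """Pack one 32-bit MPLS label stack entry:
    20-bit label, 3-bit traffic class, 1-bit bottom-of-stack, 8-bit TTL."""
    if not 0 <= label < 2**20:
        raise ValueError("label must fit in 20 bits")
    if not 0 <= tc < 8 or not 0 <= ttl < 256:
        raise ValueError("tc must fit in 3 bits and ttl in 8 bits")
    word = (label << 12) | (tc << 9) | (int(bottom) << 8) | ttl
    return struct.pack("!I", word)


def decode_label(data):
    """Unpack the first label stack entry found in `data`."""
    (word,) = struct.unpack("!I", data[:4])
    return {
        "label": word >> 12,
        "tc": (word >> 9) & 0x7,
        "bottom": bool((word >> 8) & 0x1),
        "ttl": word & 0xFF,
    }


# A two-label stack such as an L3VPN might use: transport label, then VPN label.
# The label values are arbitrary examples.
stack = encode_label(299776, bottom=False) + encode_label(16, bottom=True)
print(decode_label(stack[:4]))
print(decode_label(stack[4:]))
```

Only the last entry in a stack carries the bottom-of-stack bit, which is how a receiver knows where the labels end and the payload begins.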

Virtual Extensible LAN

The cool kid on the block when it comes to data center overlays is Virtual Extensible LAN (VXLAN). By encapsulating Layer 2 traffic with VXLAN, you can transport it over a Layer 3 IP Fabric, which has better scaling and high availability metrics than a traditional Layer 2 network. Some of the VXLAN features that Juniper QFX5100 switches support are:

- OVSDB and VMware NSX control plane support
- DMI and Juniper Contrail control plane support
- VXLAN Layer 2 Gateway for bare-metal server support

Chapter 8 contains more in-depth content about VXLAN.
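As a small preview of the encapsulation itself, here is a Python sketch that builds the standard 8-byte VXLAN header and places it in front of an inner Ethernet frame. It is a simplification: the outer Ethernet, IP, and UDP headers are left out, and the function names and the VNI value are assumptions for illustration.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # "I" flag: the VNI field is valid


def vxlan_header(vni):
    """Build the 8-byte VXLAN header for a 24-bit VXLAN network identifier."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 1: flags (8 bits) + reserved (24 bits).
    # Word 2: VNI (24 bits) + reserved (8 bits).
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(inner_frame, vni):
    """Prefix an inner Ethernet frame with a VXLAN header. The outer
    Ethernet, IP, and UDP headers are omitted from this sketch."""
    return vxlan_header(vni) + inner_frame


def parse_vni(packet):
    """Read the VNI back out of a VXLAN-prefixed payload."""
    flags_word, vni_word = struct.unpack("!II", packet[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI flag not set")
    return vni_word >> 8


payload = encapsulate(b"\x00" * 64, vni=5001)  # placeholder inner frame
print(vxlan_header(5001).hex())  # 0800000000138900
print(parse_vni(payload))        # 5001
```

The 24-bit VNI is what lets an overlay carry far more Layer 2 segments than the 12-bit VLAN ID allows.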

Ethernet

One of the most fundamental data center protocols is Ethernet. When a piece of data is transferred between end points, it’s going to use Ethernet as the vehicle. The Juniper QFX5100 family supports all of the typical Ethernet protocols:

- IEEE 802.3
- IEEE 802.1Q
- IEEE 802.1ad (Q-in-Q)

Pretty straightforward, eh?
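If you have never looked at the tags on the wire, the following Python sketch builds a single 802.1Q tag and a stacked Q-in-Q pair. It assumes the standard TPID values (0x8100 for the customer tag and 0x88A8 for the service tag); many platforms let you configure the outer TPID, so treat these as defaults rather than fixed values. The function name and VLAN IDs are illustrative.

```python
import struct

TPID_8021Q = 0x8100   # customer VLAN tag
TPID_8021AD = 0x88A8  # service (outer) VLAN tag used for Q-in-Q


def vlan_tag(vid, pcp=0, dei=0, tpid=TPID_8021Q):
    """Build a 4-byte VLAN tag: 16-bit TPID followed by the 16-bit TCI
    (3-bit priority, 1-bit drop eligible indicator, 12-bit VLAN ID)."""
    if not 0 <= vid < 4096:
        raise ValueError("VLAN ID must fit in 12 bits")
    if not 0 <= pcp < 8 or dei not in (0, 1):
        raise ValueError("pcp must fit in 3 bits and dei must be 0 or 1")
    tci = (pcp << 13) | (dei << 12) | vid
    return struct.pack("!HH", tpid, tci)


single = vlan_tag(100)
stacked = vlan_tag(200, tpid=TPID_8021AD) + vlan_tag(100)
print(single.hex())   # 81000064
print(stacked.hex())  # 88a800c881000064
```

In the stacked case, the outer tag (VLAN 200) is what a provider network switches on, and the inner tag (VLAN 100) rides along untouched.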

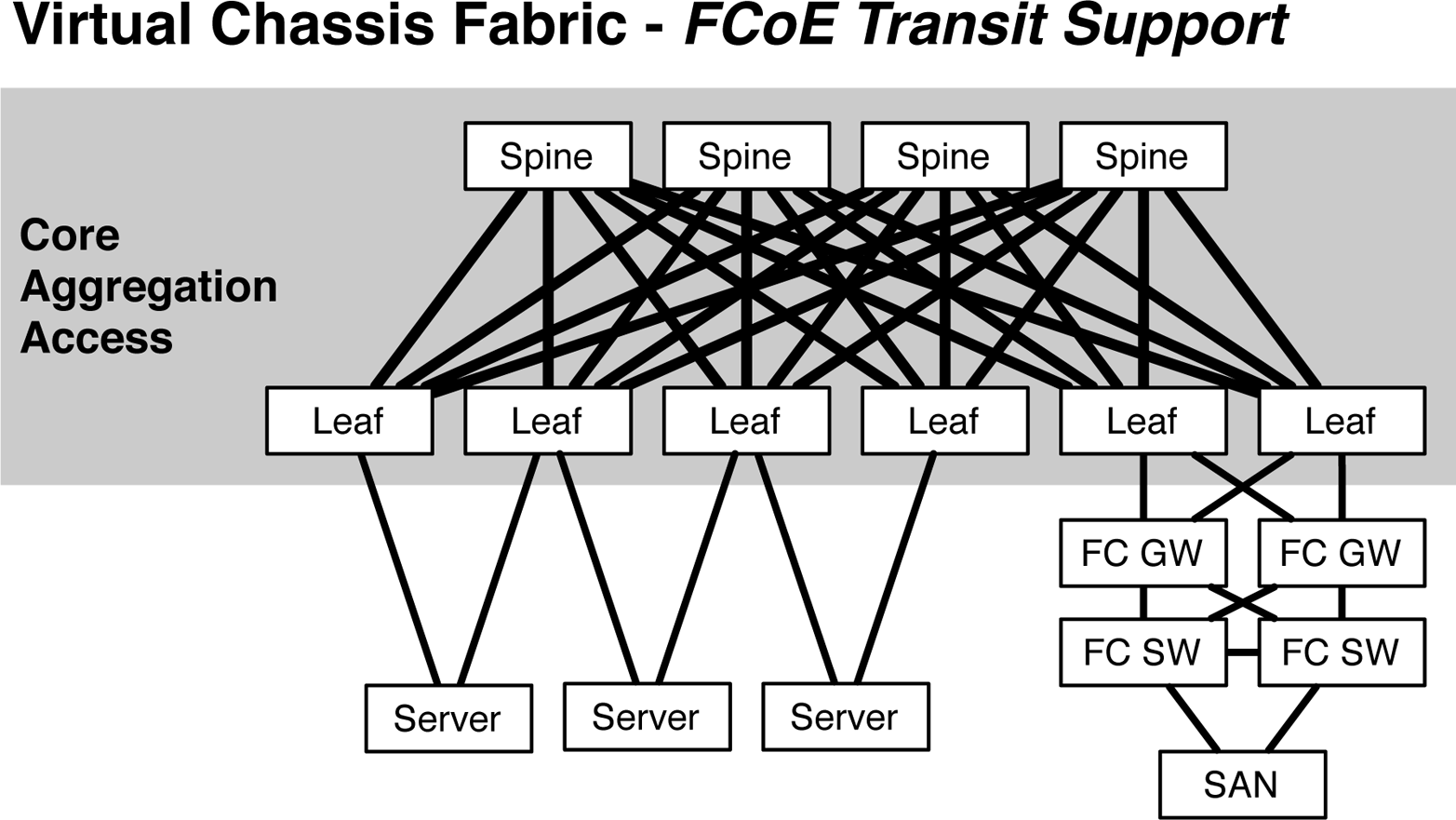

FCoE

One of the biggest advantages of the Juniper QFX5100 series is the ability to support converged storage via FCoE. The two Juniper architectures that enable FCoE are QFabric and VCF. Figure 4-8 looks at how FCoE would work with VCF.

The servers would use standard Converged Network Adapters (CNAs) and can be dual-homed into the VCF. Both data and storage would flow across these links using FCoE. The Storage Area Network (SAN) storage device would need to speak native Fibre Channel (FC) and use a pair of FC switches for redundancy. The FC switches would terminate into a pair of FC gateways that would convert FC into FCoE, and vice versa. In this scenario, VCF simply acts as an FCoE transit device. The FC gateway and switch functions need to be provided by other devices.

Figure 4-8. FCoE transit with VCF

HiGig2

One of the more interesting encapsulations is Broadcom HiGig2; this encapsulation can be used only between switches using a Broadcom chipset. The Broadcom HiGig2 is just another transport encapsulation, but the advantage is that it contains more fields and meta information that vendors can use to create custom architectures. For example, VCF uses the Broadcom HiGig2 encapsulation.

One of the distinct advantages of HiGig2 over standard Ethernet is that there’s only a single ingress lookup. The architecture only needs to know the egress Broadcom chipset when transmitting data; any intermediate switches simply forward the HiGig2 frames to the egress chipset without having to waste time looking at other headers. Because the intermediate switches are so efficient, the end-to-end latency using HiGig2 is lower than with standard Ethernet.

The HiGig2 encapsulation isn’t user-configurable. Instead, this special Broadcom encapsulation is used in the following Juniper architectures: QFabric, Virtual Chassis, and VCF. This allows Juniper to offer better performance and ease of use when building a data center. Juniper gives you the option to “do it yourself” with all the standard networking protocols, and the “plug and play” option for customers who want simplified network operations.

Summary

This chapter covered the six different technology options of the Juniper QFX5100 series. There are three Juniper architecture options:

- Virtual Chassis
- QFabric
- VCF

There are also three open architecture options:

- Standalone
- MC-LAG
- Clos Fabric

In addition to the six architectures supported by the Juniper QFX5100, there are five major transport encapsulations, as well:

- MPLS
- VXLAN
- Ethernet
- FCoE
- HiGig2

The Juniper QFX5100 family of switches is a great platform on which to standardize because each offers so much in a small package. You can build efficient Ethernet fabrics with QFabric or VCF; large IP Fabrics using a Clos architecture; and small WAN deployments using MPLS. Using a single platform has both operational and capital benefits. Being able to use the same platform across various architectures creates a great use case for sparing. And, keeping a common set of power supplies, modules, and switches for failures lowers the cost of ownership.
