Chapter 1. Introduction to the SRX

Firewalls are a staple of almost every network in the world. The firewall protects nearly every network-based transaction that occurs, and even the end user understands its metaphoric name, meant to imply keeping out the bad stuff. But firewalls have had to change. Whether it’s the growth of networks or the growth of network usage, they have had to move beyond being simple devices that only provide protection from inbound connections. A firewall now has to transcend its own title, the one end users are so familiar with, and become a whole new type of device and service. This new class of device is a services gateway. And it needs to provide much more than just a firewall: it needs to look deeper into the packet and use the contained data in new ways that are advantageous to the network in which it is deployed. Can you tell if an egg is good or not by just looking at its shell? And once you break it open, isn’t it best to use all of its contents? Deep inspection from a services gateway is the firewall of the future.

Deep inspection isn’t a new concept, nor is it something that Juniper Networks invented. What Juniper did do, however, is start from the ground up to solve the technical problems of peering deeply. With the Juniper Networks SRX Series Services Gateways, Juniper built a new platform to answer today’s problems while scaling the platform’s features to solve the anticipated problems of tomorrow. It’s a huge challenge, especially with the rapid growth of enterprise networks. How do you not only solve the needs of your network today, but also anticipate the needs for tomorrow?

Juniper spent an enormous amount of effort to create a platform that can grow over time. The scalability is built into the features, performance, and multifunction capability of the SRX Series. This chapter introduces what solutions the SRX Series can provide for your organization today, while detailing its architecture to help you anticipate and solve your problems of tomorrow.

Evolving into the SRX

The predecessors to the SRX Series products are the legacy ScreenOS products. They really raised the bar when they were introduced to the market, first by NetScreen and then by Juniper Networks. Many features might be remembered as notable, but the most important was the migration away from a split firewall software and operating system (OS) model. Firewalls at the time of their introduction consisted of a base OS and then firewall software loaded on top. This was flexible for the organization, since it could choose the underlying OS it was comfortable with, but when any sort of troubleshooting occurred, it led to all sorts of finger-pointing among vendors. ScreenOS provided an appliance-based approach by combining the underlying OS and the features it provided.

The integrated approach of ScreenOS transformed the market. Today, most vendors have migrated to an appliance-based firewall model, but it has been more than 10 years since the founding of NetScreen Technologies and its ScreenOS approach. So, when Juniper began to plan for a totally new approach to firewall products, it did not have to look far to see its next-generation choice for an operating system: Junos became the base for the new product line called the SRX Series.

ScreenOS to Junos

Juniper Networks’ flagship operating system is Junos. The Junos operating system has been a mainstay of Juniper and it runs on the majority of its products. Junos was created in the mid-1990s as an offshoot of the FreeBSD Unix-like operating system. The goal was to provide a robust core OS that could control the underlying chassis hardware. At that time, FreeBSD was a great choice on which to base Junos, because it provided all of the important components, including storage support, a memory controller, a kernel, and a task scheduler. The BSD license also allowed anyone to modify the source code without having to return the new code. This allowed Juniper to modify the code as it saw fit.

Note

Junos has evolved greatly from its initial days as a spin-off of BSD. It contains millions of lines of code and an extremely strong feature set. You can learn more details about Junos in Chapter 2.

The ScreenOS operating system aged gracefully over time, but it hit some important limits that prevented it from being the choice for the next-generation SRX Series products. First, ScreenOS cannot separate the running of tasks from the kernel. All processes effectively run with the same privileges. Because of this, if any part of ScreenOS were to crash or fail, the entire OS would end up crashing or failing. Second, the modular architecture of Junos allows for the addition of new services, since this was the initial intention of Junos and the history of its release train. ScreenOS could not compare.

Finally, there’s a concept called One Junos. Junos is one system, designed to completely rethink the way the network works. Its operating system helps to reduce the amount of time and effort required to plan, deploy, and operate network infrastructure. The one release train provides stable delivery of new functionality in a time-tested cadence. And its one modular software architecture provides highly available and scalable software that keeps up with changing needs. As you will see in this book, Junos opened up enormous possibilities and network functionality from one device.

Inherited ScreenOS features

Although the next-generation SRX Series devices were destined to use the well-developed and long-running Junos operating system, that didn’t mean the familiar features of ScreenOS were going away. For example, ScreenOS introduced the concept of zones to the firewall world. A zone is a logical entity that interfaces are bound to, and zones are used in security policy creation, allowing the specification of an ingress and egress zone in the security policy. Creating ingress and egress zones means the specified traffic can only pass in a specific direction. It also increases the overall speed of policy lookup, and since multiple zones are always used in a firewall, it separates the overall firewall rule base into many subsets of zone groupings. We cover zones further in Chapter 4.
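The way zones split the rule base into subsets can be illustrated with a small sketch. This is not how the SRX implements policy lookup; the zone names, policy names, addresses, exact-match address comparison, and the default-deny fallback are all assumptions made for illustration. The point is only that a packet's ingress and egress zones select one small list of policies, so the remaining rules never need to be evaluated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    name: str
    source: str        # "any" or one exact source address (simplified matching)
    destination: str   # "any" or one exact destination address (simplified matching)
    action: str        # "permit" or "deny"


# Hypothetical rule base, partitioned by (ingress zone, egress zone).
RULE_BASE = {
    ("trust", "untrust"): [
        Policy("block-guest", "10.0.0.99", "any", "deny"),
        Policy("allow-outbound", "any", "any", "permit"),
    ],
    ("untrust", "dmz"): [
        Policy("allow-web", "any", "192.0.2.10", "permit"),
    ],
}


def lookup(from_zone: str, to_zone: str, src: str, dst: str) -> str:
    """Return the action of the first matching policy for this zone pair."""
    # Only the policies for this zone pair are searched, in order.
    for policy in RULE_BASE.get((from_zone, to_zone), []):
        if policy.source in ("any", src) and policy.destination in ("any", dst):
            return policy.action
    # Assumption for this sketch: traffic with no matching policy is denied.
    return "deny"
```

Because there is no ("untrust", "trust") entry in this example, traffic in that direction falls through to the deny, which mirrors the idea that a policy only permits traffic in the direction its zone pair names.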

The virtual router (VR) is an example of another important feature developed in ScreenOS and embraced by the new generation of SRX Series products. A VR allows for the creation of multiple routing tables inside the same device, providing the administrator with the ability to segregate traffic and virtualize the firewall.
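To make the idea of multiple routing tables in one device concrete, here is a minimal sketch. The instance names, prefixes, and interface names are hypothetical, and the linear longest-prefix search is a simplification chosen for readability rather than a description of how Junos performs route lookups.

```python
import ipaddress

# Hypothetical routing instances: each has its own, fully independent table.
ROUTING_INSTANCES = {
    "customer-a": {"10.0.0.0/8": "ge-0/0/1", "0.0.0.0/0": "ge-0/0/0"},
    "customer-b": {"10.0.0.0/8": "ge-0/0/3", "10.1.0.0/16": "ge-0/0/4"},
}


def next_interface(instance: str, destination: str):
    """Longest-prefix match performed inside a single routing instance."""
    dest = ipaddress.ip_address(destination)
    best = None  # (network, interface) of the most specific match so far
    for prefix, interface in ROUTING_INSTANCES[instance].items():
        network = ipaddress.ip_network(prefix)
        if dest in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, interface)
    return best[1] if best else None
```

The same destination address can resolve to different interfaces depending on the instance, and overlapping address space in one instance does not affect the other, which is the segregation the text describes.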

Table 1-1 elaborates on the list of popular ScreenOS features that were added to Junos for the SRX Series. Although some of the features do not have a one-to-one naming parity, the functionality of these features is generally replicated on the Junos platform.

| Feature | ScreenOS | Junos |
| --- | --- | --- |
| Zones | Yes | Yes |
| Virtual routers (VRs) | VRs | Yes as routing instances |
| Screens | Yes | Yes |
| Deep packet inspection | Yes | Yes as full intrusion prevention |
| Network Address Translation (NAT) | Yes as NAT objects | Yes as NAT policies |
| Unified Threat Management (UTM) | Yes | Yes |
| IPsec virtual private network (VPN) | Yes | Yes |
| Dynamic routing | Yes | Yes |
| High availability (HA) | NetScreen Redundancy Protocol (NSRP) | Chassis cluster |

Device management

Junos has evolved since it was first deployed in service provider networks. Over the years, many lessons were learned regarding how to best use the device running the OS. These practices have been integrated into the SRX Series and are shared throughout this book, specifically in how to use the command-line interface (CLI).

For the most part, Junos users traditionally tend to utilize the CLI for managing the platform. As strange as it may sound, even very large organizations use the CLI to manage their devices. The CLI was designed to be easy to utilize and navigate through, and once you are familiar with it, even large configurations are completely manageable through a simple terminal window. Throughout this book, we will show you various ways to navigate and configure the SRX Series products using the CLI.

Note

In Junos, the CLI extends beyond just a simple set of commands. The CLI is actually implemented as an Extensible Markup Language (XML) interface to the operating system. This XML interface is called Junoscript, and it is also available through NETCONF, an open standard. Third-party applications can integrate with Junoscript, or a user can even use it directly on the device. Juniper Networks provides extensive training and documentation covering this feature; an example is its Day One Automation Series (see http://www.juniper.net/dayone).
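As a rough illustration of what an XML request to the device looks like, the sketch below builds a bare request document with Python's standard library. It is a simplification under stated assumptions: the helper name `build_rpc` is invented for this example, the operation name is used purely as an illustration, and a real NETCONF session also involves a transport, XML namespaces, and session setup that are not shown here.

```python
import xml.etree.ElementTree as ET


def build_rpc(operation: str, message_id: str = "1") -> str:
    """Wrap a single operation element in an <rpc> request element."""
    rpc = ET.Element("rpc", {"message-id": message_id})
    ET.SubElement(rpc, operation)  # the operation is an empty child element
    return ET.tostring(rpc, encoding="unicode")


# Illustrative operation name; consult the Junos XML API documentation
# for the operations a given release actually supports.
request = build_rpc("get-interface-information")
```

The structured request, and the structured reply that comes back, are what make this interface attractive to third-party tools compared with parsing CLI text.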

Sometimes, getting started with such a rich platform is a daunting task, if only because thousands of commands can be used in the Junos operating system. To ease this task and get started quickly, the SRX Series of products provides a web interface called J-Web. The J-Web tool is automatically installed on the SRX Series (on some other Junos platforms it is an optional package), and it is enabled by default. The interface is intuitive and covers most of the important tasks for configuring a device. We will cover both J-Web and the CLI in more depth in Chapter 2.

For large networks with many devices, efficiency at scale is required. It may be feasible to use the CLI, but it’s hard to beat a policy-driven management system. Juniper provides two tools to accomplish efficient management. The first tool is called Network and Security Manager (NSM). This is the legacy tool that you can use to manage networks. It was originally designed to manage ScreenOS products, and over time, it evolved to manage most of Juniper’s products. However, the architecture of the product is getting old, and it’s becoming difficult to implement new features. Although it is still a viable platform for management, just like the evolution of ScreenOS to Junos, a newly architected platform is available.

This new platform is called Junos Space, and it is designed from the ground up to be a modular platform that can integrate easily with a multitude of devices, and even other management systems. The goal for Junos Space is to allow for the simplified provisioning of a network.

To provide this simplified provisioning, three important things must be accomplished:

Integrate with a heterogeneous network environment.

Integrate with many different types of management platforms.

Provide this within an easy-to-use web interface.

By accomplishing these tasks, Junos Space will take network management to a new level of productivity and efficiency for an organization.

At the time of this writing, Junos Space was still being finalized. Nonetheless, readers of this book will learn about the capabilities of the SRX Series using the Junos CLI from the ground up, and will be ready to apply it within Junos Space anytime they deem appropriate.

The SRX Series Platform

The SRX Series hardware platform is a next-generation departure from the previous ScreenOS platforms, built from the ground up to provide scalable services. Now, the question that begs to be answered is: what exactly is a service?

A service is an action or actions that are applied to the network traffic passing through the SRX Series of products. Two examples of services are stateful firewalling and intrusion prevention.

The ScreenOS products were designed primarily to provide three services: stateful firewalling, NAT, and VPN. When ScreenOS was originally designed, these were the core value propositions for a firewall in a network. In today’s network, these services are still important, but they need to be provided on a larger scale since the number of Internet Protocol (IP) devices in a network has grown significantly, and each of them relies on the Internet for access to information they need in order to run. Since the SRX is going to be processing this traffic, it is critical that it provides as many services as possible on the traffic in one single pass.

Built for Services

So, the SRX provides services on the passing traffic, but it must also provide scalable services. This is an important concept to review. Scale is the ability to provide the appropriate level of processing based on the required workload, and it’s a concept that is often lost when judging firewalls because you have to think about the actual processing capability of a device and how it works. Although all devices have a maximum compute capability, or the maximum level at which they can process information, it’s very important to understand how a firewall processes this load. This allows the administrator to better judge how the device scales under such load.

Scaling under load is based on the services a device is attempting to provide and the scale it needs to achieve. The traditional device required to do all this is either a branch device, or the new, high-end data center firewall. A branch firewall needs to provide a plethora of services at a performance level typical of the available WAN speeds. These services include the traditional stateful firewall, VPN, and NAT, as well as more security-focused services such as UTM and intrusion prevention.

A data center firewall, on the other hand, needs to provide highly scalable performance. When a firewall is placed in the core of a data center it cannot impede the performance of the entire network. Each transaction in the data center contains a considerable amount of value to the organization, and any packet loss or delay can cause financial implications. A data center firewall requires extreme stateful firewall speeds, a high session capacity, and very fast new sessions per second.

In response to these varied requirements, Juniper Networks created two product lines: the branch SRX Series and the data center SRX Series. Each is targeted at its specific market segment and the network needs of the devices in that segment.

Deployment Solutions

Networking products are created to solve problems and increase efficiencies. Before diving into the products that comprise the SRX Series, let’s look at some of the problems these products solve in the two central locations in which they are deployed:

The branch SRX Series products are designed for small to large office locations consisting of anywhere from a few individuals to hundreds of employees, representing either a small, single device requirement or a reasonably sized infrastructure. In these locations, the firewall is typically deployed at the edge of the network, separating the users from the Internet.

The data center SRX Series products are Juniper’s flagship high-end firewalls. These products are targeted at the data center and the service provider. They are designed to provide services to scale. Data center and service provider deployments are as differentiated as branch locations.

Let’s look at examples of various deployments and what type of services the SRX Series products provide. We will look at the small branch first, then larger branches, data centers, service providers, and mobile carriers, and finally all the way up (literally) to cloud networks.

Small Branch

A small branch location is defined as a network with no more than a dozen hosts. Typically, a small branch has a few servers or, most often, connects to a larger office. The requirements for a firewall device are to provide not only connectivity to an Internet source, or larger office connection, but also connectivity to all of the devices in the office. The branch firewall also needs to provide switching, and in some cases, wireless connectivity, to the network.

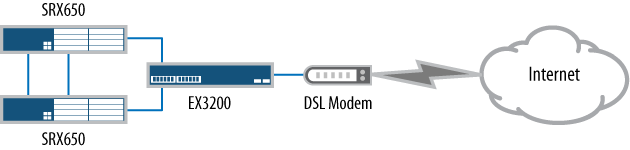

Figure 1-1 depicts a small branch location. Here a Juniper Networks SRX210 Services Gateway is utilized. Several hosts connect to the SRX210, which in turn connects to an upstream device that provides Internet connectivity. In this deployment, the device consolidates a firewall, switch, and DSL router.

The small-location deployment keeps the footprint to one small device, and keeps branch management to one device. If the device were to fail, it’s simple to replace and get the branch up and running using a backup of the current configuration. Finally, you should note that all of the network hosts are directly connected to the branch device.

Medium Branch

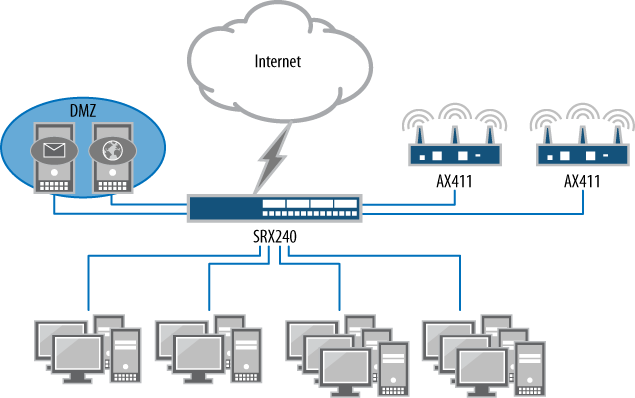

In medium to large branch offices, the network has to provide more to the location because there are 20 or more users (our network example contains about 50 client devices), so here the solution is the Juniper Networks SRX240 Services Gateway branch device. Figure 1-2 shows the deployment of the SRX240 placed at the Internet edge. It utilizes a WAN port to connect directly to the Internet service provider (ISP). This medium branch contains several servers and Internet-accessible services.

Note that the servers are connected directly to the SRX240 to provide maximum performance and security. Since this branch provides email and web-hosting services to the Internet, security must be provided. Not only can the SRX240 provide stateful firewalling, but it can also offer intrusion prevention services (IPS) for the web and email services, including antivirus services for the email. The branch can be supported by a mix of both wired and wireless connections.

The SRX240 has sixteen 1-gigabit Ethernet ports, which can accommodate the four branch office servers and the client’s two Juniper Networks AX411 Wireless LAN Access Points, which adequately cover the large office area. The AX411 access points are easy to deploy since they connect directly to the Power over Ethernet (PoE) network ports on the SRX240. This leaves ten 1-gigabit Ethernet ports that can be used to accommodate any other client systems that need high-speed access to the servers.
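The port budget for this example branch is simple arithmetic, sketched here so the numbers are easy to rerun for a different mix of devices. The function name is hypothetical; the figures come from the example itself (sixteen ports, four servers, two access points).

```python
def remaining_ports(total: int, *consumers: int) -> int:
    """Return how many ports are left after each group of devices is attached."""
    used = sum(consumers)
    if used > total:
        raise ValueError(f"{used} ports needed but only {total} available")
    return total - used


# Figures from the medium branch example.
spare = remaining_ports(16, 4, 2)  # 16 ports, 4 servers, 2 access points
```

With the example's figures, `spare` comes out to 10, matching the ten ports left over for client systems.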

Large Branch

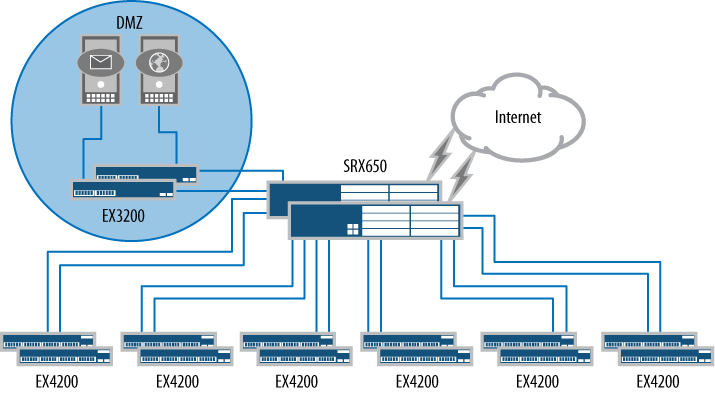

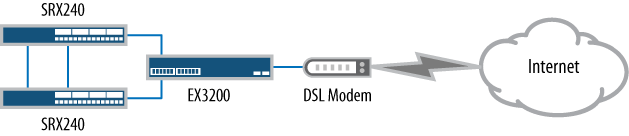

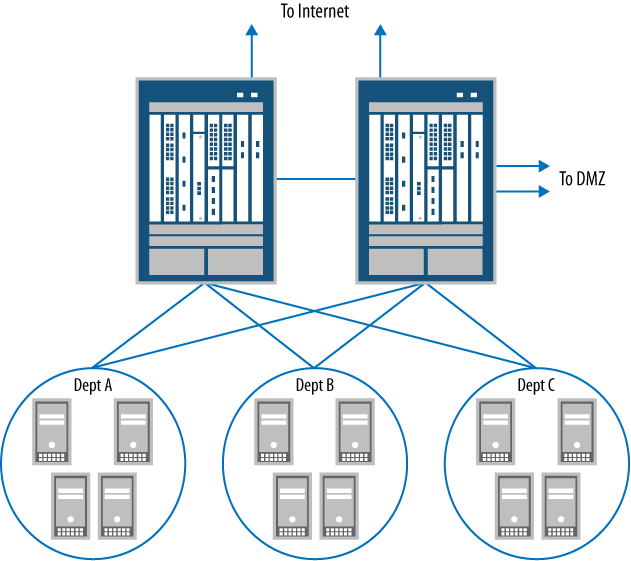

The last branch deployment to review is the large branch. For our example, the large branch has 250 clients. This network requires significantly more equipment than was used in the preceding branch examples. Note that for this network, the Juniper Networks EX Series Ethernet Switches are used to provide client access to the network. Figure 1-3 depicts our large branch topology.

Our example branch network needs to provide Ethernet access for 250 clients, so to realistically depict this, six groupings of two EX4200 switches are deployed. Each switch provides 48 tri-speed Ethernet ports. To simplify management, all of the switches are connected using Juniper’s virtual chassis technology.

Note

For more details on how the EX Series switches and the virtual chassis technology operate, as well as how the EX switches can be deployed and serve various enterprise networks, see Junos Enterprise Switching by Harry Reynolds and Doug Marschke (O’Reilly).

The SRX Series platform of choice for the large branch is the Juniper Networks SRX650 Services Gateway. The SRX650 is the largest of the branch SRX Series products, and its performance capabilities actually exceed the needs of this branch, allowing for future adoption of features in the branch. Just as in the previous deployment, the local servers sit off of an arm of the SRX650, but note that in this deployment HA is utilized, so the servers must sit off of their own switch (here the Juniper Networks EX3200 switch).

The HA deployment of the SRX650 products means two devices are used, allowing the second SRX650 to take over in the event of a failure on the primary device. The SRX650 HA model provides an extreme amount of flexibility for deploying a firewall, and we detail its capabilities in Chapter 9.

Data Center

What truly constitutes a data center has blurred in recent times. The traditional concept of a data center is a physical location that contains servers that provide services to clients. The data center does not contain client hosts (a few machines here and there to administer the servers don’t count), and it has clear bounds of ingress and egress to the network. Ingress points may be Internet or WAN connections, but each type of ingress point requires different levels of security.

The new data center of today seems to be any network that contains services, and these networks may even span multiple physical locations. In the past, a data center and its tiers were limited to a single physical location because there were some underlying technologies that were hard to stretch. But today it’s much easier to provide the same Layer 2 network across two or more physical locations, thus expanding the possibilities of creating a data center. With the popularization of MPLS and virtual private LAN service (VPLS) technologies, data centers can be built in new and creative ways.

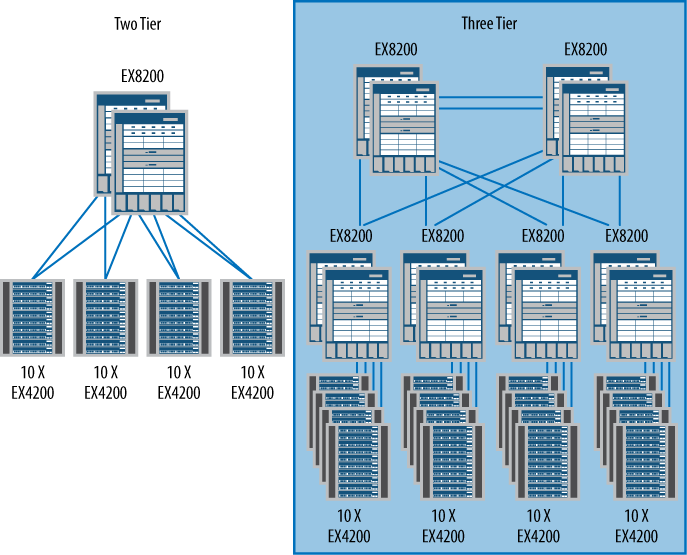

The traditional data center design consists of a two- or three-tier switching model. Figure 1-4 shows both a two-tier and a three-tier switching design. Both are fundamentally the same; the difference between the two is the addition of the aggregation switching tier in the three-tier design. The aggregation tier compensates for the lack of port density at the core (only in the largest switched networks should an aggregation tier be required).

Note that the edge tier is unchanged in both models. This is where the servers connect into the network, and the number of edge switches (and their configuration) is driven by the density of the servers. Most progressively designed data centers are using virtualization technologies that allow multiple servers to run on the same piece of hardware, reducing the overall footprint, energy consumption, and rack space.

Neither this book nor this chapter is designed to be a comprehensive primer on data centers. Design considerations for a data center are enormous and can easily comprise several volumes of text. The point here is to give a little familiarity to the next few deployment scenarios and to show how the various SRX Series platforms scale to the needs of those deployments.

Data Center Edge

As discussed in the previous section, a data center needs to have an ingress point to allow clients to access the data center’s services. The most common form of ingress is Internet traffic, and as you can imagine, the ingress point is a very important area to secure. This area needs to allow access to the servers, yet in a limited and secure fashion, and because the data center services are typically high-profile, they may be the target of denial-of-service (DoS), distributed denial-of-service (DDoS), and botnet attacks. It is a fact of network life that must be taken into consideration when building a data center network.

An SRX Series product deployed at the edge of the network must handle all of these tasks, as well as handle the transactional load of the servers. Most connections into applications for a data center are quick to be created and torn down, and during the connection, only a small amount of data is sent. An example of this is accessing a web application. Many small components are actually delivered to the web browser on the client, and most of them are delivered asynchronously, so the components may not be returned in the order they were accessed. This leads to many small data exchanges or transactions, which differs greatly from the model of large continual streams of data transfer.

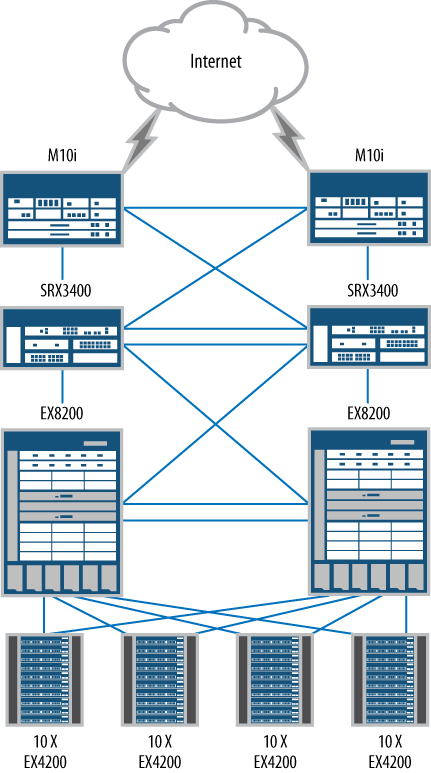

Figure 1-5 illustrates where the SRX Series would be deployed in our example topology. The products of choice are the Juniper Networks SRX3000 line, because they can meet the needs identified in the preceding paragraph. Figure 1-5 might look familiar to you as it is part of what we discussed regarding the data center tier in Figure 1-4. The data center is modeled after that two-tier design, with the edge being placed at the top of the diagram. The SRX3000 line of products does not have WAN interfaces, so upstream routers are used. The WAN routers consolidate the various network connections and then connect to the SRX3000 products. For connecting into the data center itself, the SRX3000 line uses its 10-gigabit Ethernet interfaces to connect to the data center core and WAN routers.

A data center relies on availability—all systems must be deployed to ensure that there is no single point of failure. This includes the SRX Series. The SRX3000 line provides a robust set of HA features. In Figure 1-5, both SRX3000 line products are deployed in what is traditionally called an active/active deployment. This means both firewalls can pass traffic simultaneously. When a product in the SRX3000 line operates in a cluster, the two boxes operate as though they are one unit. This simplifies HA deployment because management operations are reduced. Also, traffic can enter and exit any port on either chassis. This model is flexible compared to the traditional model of forcing traffic to only go through an active member.

Data Center Services Tier

The data center core is the network’s epicenter for all server communications, and most connections in a data center flow through it. A firewall at the data center core needs to maintain many concurrent sessions. Although servers may maintain long-lived connections, they are more likely to have connectivity bursts that last a short period of time. This, coupled with the density of running systems, increases not only the required number of concurrent connections but also the rate of new connections per second. If a firewall fails to create sessions quickly enough, or falls behind in allowing the creation of new sessions, transactions are lost.

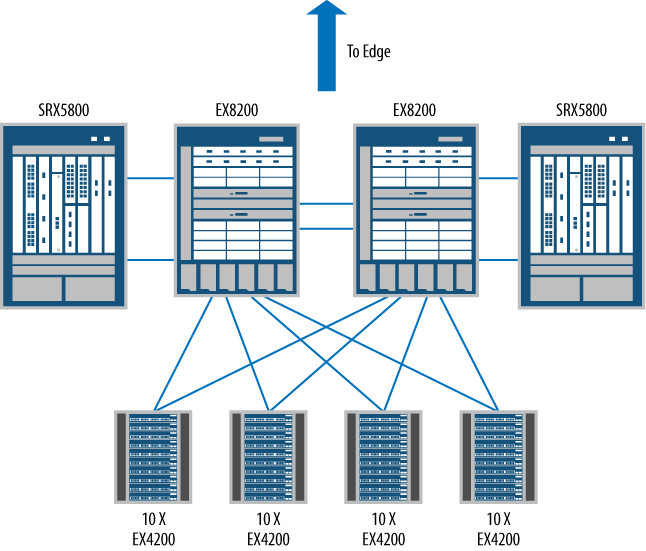

For this example, the Juniper Networks SRX5800 Services Gateway is a platform that can meet these needs. The SRX5800 is the largest member of the SRX5000 line, and is well suited for the data center environment. It can meet the scaling needs of today as well as those of tomorrow. Placing a firewall inside the data center core is always challenging, and typically the overall needs of the data center dictate the placement of the firewall. However, there is a perfect location for the deployment of our SRX5800, as shown in Figure 1-6, which builds upon the example shown as part of the two-tier data center in Figure 1-4.

This location in the data center network is called the services tier, and it is where services are applied to the network traffic going to and from the data center servers. This includes services provided by the SRX5800, such as stateful firewalling, IPS, Application Denial of Service (AppDoS) prevention, and server load balancing. This allows the creation of a pool of resources that can be shared among the various servers. It is also possible to deploy multiple firewalls and distribute the load across all of them, but that increases complexity and management costs. The trend over the past five years has been to move toward consolidation for all the financial and managerial reasons you can imagine.

In the data center core, AppDoS and IPS are two key services to include in the services tier design. The AppDoS feature allows the SRX5800 to look for attack patterns in a way that other security products do not. AppDoS looks at three things: DoS and DDoS patterns against a server, the application context (such as the URL), and connection rates from individual clients. By combining and triangulating the knowledge of these three items, the newer style of botnet attacks can finally be stopped.

A separate SRX Series specialty is IPS. The IPS feature differs from AppDoS in that it looks for specific attacks within the streams of data. When an attack is identified, it’s possible to block, log, or ignore the threat. Since all of the connections to the critical servers will pass through the SRX5800, adding the protection of the IPS technology provides a great deal of value, not to mention additional security for the services tier.
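The idea of combining three separate signals before acting can be sketched in a few lines. This is only an illustration of the triangulation concept, not the AppDoS algorithm: the field names, the threshold values, and the rule that all three signals must be elevated are assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    server_conn_rate: int   # connections per second arriving at the protected server
    context_hit_rate: int   # requests per second for one application context (e.g. a URL)
    client_conn_rate: int   # connections per second from one individual client


# Hypothetical thresholds, chosen only to make the example concrete.
SERVER_LIMIT = 10_000
CONTEXT_LIMIT = 2_000
CLIENT_LIMIT = 50


def is_suspect(obs: Observation) -> bool:
    """Flag a client only when all three signals are elevated together."""
    return (
        obs.server_conn_rate > SERVER_LIMIT
        and obs.context_hit_rate > CONTEXT_LIMIT
        and obs.client_conn_rate > CLIENT_LIMIT
    )
```

Requiring agreement among the signals is one way to avoid penalizing a busy but legitimate client when the server is not under stress, or a quiet client that happens to connect during an attack.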

Service Provider

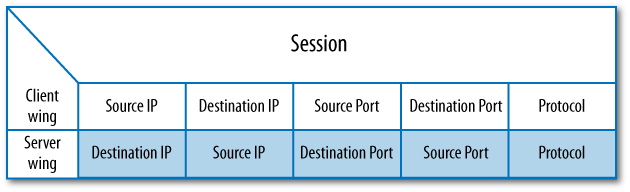

Although most administrators are more likely to use the services of a service provider than they are to run one, looking at the use case of a service provider can be quite interesting. Providing connectivity to millions of hosts in a highly available and scalable method is an extremely tough proposition. Accomplishing this task requires a Herculean effort of thousands of people. Extending a service provider network to include stateful security is just as difficult. Traditionally, a service provider processes traffic in a stateless manner, meaning that each packet is treated independently of any other. Although scaling stateless packet processing isn’t inexpensive, or simple by any means, it does require less computing power than stateful processing.

In a stateful processing device, each packet is matched as part of a new or existing flow. Each packet must be processed to ensure that it is part of an existing session, or a new session must be created. All of the fields of each packet must be validated to ensure that they correctly match the values of the existing flow. For example, in TCP, this would include TCP sequence numbers and TCP session state. Scaling a device to do this is, well, extremely challenging.
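A heavily simplified sketch shows why stateful processing costs more than stateless forwarding: every packet triggers a table lookup and a validation step. The class and field names are hypothetical, only the initiator's direction is tracked, and an exact sequence-number match stands in for the window-based checks a real firewall would perform.

```python
from dataclasses import dataclass


@dataclass
class Session:
    state: str      # simplified TCP state: "SYN_SENT" or "ESTABLISHED"
    next_seq: int   # next sequence number expected from the initiator


class SessionTable:
    def __init__(self):
        # Keyed by 5-tuple: (src address, src port, dst address, dst port, protocol)
        self.sessions = {}

    def process(self, five_tuple, flags: str, seq: int, payload_len: int = 0) -> str:
        session = self.sessions.get(five_tuple)
        if session is None:
            # No existing flow: only a SYN may create a new session.
            if flags == "SYN":
                self.sessions[five_tuple] = Session("SYN_SENT", seq + 1)
                return "new-session"
            return "drop"
        # Existing flow: the packet must carry the expected sequence number.
        if seq != session.next_seq:
            return "drop"
        if flags == "ACK" and session.state == "SYN_SENT":
            session.state = "ESTABLISHED"
        session.next_seq = seq + payload_len
        return "existing-session"
```

A stateless device would skip both the lookup and the validation, which is why it needs less computing power per packet.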

A firewall can be placed in many locations in a service provider’s network. Here we’ll discuss two specific examples: in the first the firewall provides a managed service, and in the second the service provider protects its own services.

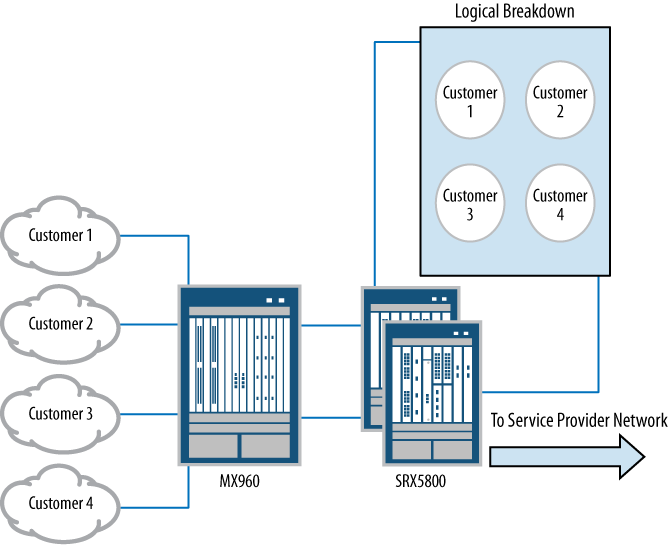

Starting with the managed service provider (MSP) environment, Figure 1-7 shows a common MSP deployment. On the left, several customers are shown, and depending on the service provider environment, this may be several dozen to several thousand (for the purposes of explanation only a handful are needed). The connections from these customers are aggregated to a Layer 2 and Layer 3 routing switch, in this case a Juniper Networks MX960 3D Universal Edge Router. Then the MX Series router connects to an SRX5800. The SRX5800 is logically broken down into smaller firewalls for each customer so that each customer gains the services of a firewall while the provider consolidates all of these “devices” into a single hardware unit. The service provider can minimize its operational costs and maximize the density of customers on a single device.

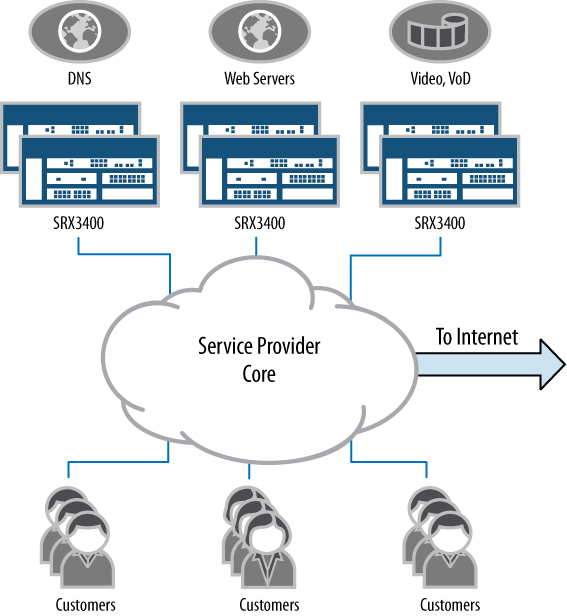

Our second scenario for service providers involves protecting the services that they provide. Although a service provider provides access to other networks, such as the Internet, it also has its own hosted services. These include, but are not limited to, Domain Name System (DNS), email, and web hosting. Because these services are public, it’s important for the service provider to ensure their availability, as any lack of availability can become a front-page story or at least cause a flurry of angry customers. For these services, firewalls are typically deployed, as shown in our example topology in Figure 1-8.

Several attack vectors can be used against service providers’ public services, including DoS, DDoS, and service exploits. These are all critical types of attacks that the provider needs to be aware of and defend against. The data center SRX products can protect against both DDoS and the traditional DoS attack. In the case of a traditional DoS attack, the screen feature can be utilized.

A screen is a mechanism that is used to stop more simplistic attacks such as SYN and UDP floods (note that although these types of attacks are “simple” in nature, they can quickly overrun a server or even a firewall). Screens allow the administrator of an SRX Series product to set up specific thresholds for TCP and UDP sessions. Once these thresholds have been exceeded, protection mechanisms are enacted to minimize the threat of these attacks. We will discuss the screen feature in detail in Chapter 6.
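The threshold behavior described here can be sketched as a per-destination counter. This is an illustration of the general idea rather than the SRX screen implementation: the class name, the one-second counting window, and the choice to simply reject packets over the threshold are assumptions for this example.

```python
from collections import defaultdict


class SynFloodScreen:
    def __init__(self, threshold: int):
        self.threshold = threshold          # SYNs allowed per destination per second
        self.counts = defaultdict(int)      # (destination, whole second) -> SYNs seen

    def allow(self, destination: str, timestamp: float) -> bool:
        """Count this SYN and report whether it is still within the threshold."""
        window = (destination, int(timestamp))
        self.counts[window] += 1
        return self.counts[window] <= self.threshold
```

For brevity, old counters are never removed in this sketch; a long-running version would need to expire windows that have passed.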

Mobile Carriers

The phones of today are more than the computers of yesterday; they are fully fledged modern computers in a hand-held format, and almost all of a person’s daily tasks can be performed through them. Although a small screen doesn’t lend itself to managing 1,000-line spreadsheets, the devices can easily handle the job of sharing information through email or web browsing. More and more people who would typically not use the Internet are now accessing the Internet through these mobile devices, which means that access to the public network is advancing in staggering demographic numbers.

This explosion of usage has brought a new challenge to mobile operators: how to provide a resilient data network to every person in the world. Such a mobile network, when broken down into smaller, easy-to-manage areas, provides a perfect example of how an SRX Series firewall can be utilized to secure such a network.

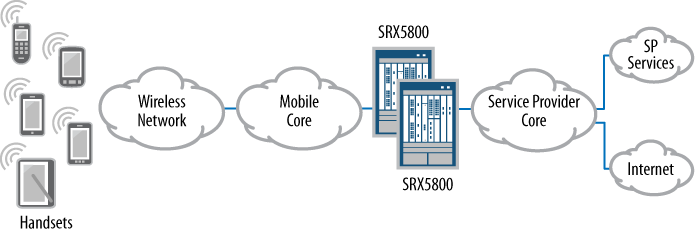

For mobile carrier networks, an SRX5800 is the right choice, for a few specific reasons: its high session capacity and its high connections-per-second rate. In the network locations where this device is placed, connection rates can quickly vary from a few thousand to several hundred thousand. A quick flood of new emails or everyone scrambling to see a breaking news event can strain any well-designed network. And as mentioned in the preceding service provider example, it’s difficult to provide firewall services in a carrier network.

Figure 1-9 shows a simplified example of a mobile operator network. It’s simplified in order to focus more on the firewalls and less on the many layers of the wireless carrier’s network. For the purposes of this discussion, the way in which IP traffic is tunneled to the firewalls isn’t relevant.

In Figure 1-9, the handsets are depicted on the far left, and their radio connections, or cell connections, are terminated into the provider’s network. Then, at the edge of the provider’s network, when the actual data requests are terminated, the IP-based packet is ready for transport to the Internet, or to the provider’s services.

An SRX5800 at the location depicted in Figure 1-9 is designed to protect the carrier’s network, ensuring that its infrastructure is secure. Protecting the network ensures that its availability, and the service that customers spend money on each month, continues. The protection of the handsets is the responsibility of the handset provider in conjunction with the carrier, and the same goes for the cellular or 3G Internet services that consumers use through cellular or 3G modems. These modems allow users to access the Internet directly from anywhere in a carrier’s wireless coverage network, so the computers that use them need to employ personal firewalls for the best possible protection.

For any service provider, mobile carriers included, the provided services need to be available to the consumers. As shown in Figure 1-9, the SRX5800 devices are deployed in a highly available design. If one SRX5800 experiences a hardware failure, the second SRX5800 can completely take over for the primary. This failover is transparent to the end user, providing uninterrupted service and network uptime that reaches five, six, or even seven 9s (that is, 99.999% to 99.99999% of the time). As competitive as the mobile market is these days, the mobile carrier’s network needs to be a competitive advantage.
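To put the "nines" in perspective, the following Python sketch converts an availability target into the downtime it permits per year. The function name and the use of a 365-day year are assumptions made for illustration.

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # assumes a non-leap year


def downtime_seconds_per_year(nines: int) -> float:
    """Downtime allowed per year for an availability written with `nines` nines."""
    unavailability = 10.0 ** (-nines)  # five 9s -> 0.00001
    return SECONDS_PER_YEAR * unavailability


if __name__ == "__main__":
    for nines in (5, 6, 7):
        print(f"{nines} nines: {downtime_seconds_per_year(nines):.2f} seconds per year")
```

Five 9s leaves a little over five minutes of downtime per year, and seven 9s leaves only about three seconds, which is why a transparent failover between the two devices matters.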

Cloud Networks

It seems like cloud computing is on everyone’s mind today. The idea of providing any service to anyone at any time to any scale with complete resilience is a dream that is becoming a reality for many organizations. Both cloud computing vendors and large enterprises are providing their own private clouds.

Although each cloud network has its own specific design needs, the SRX Series can and should play an important role.

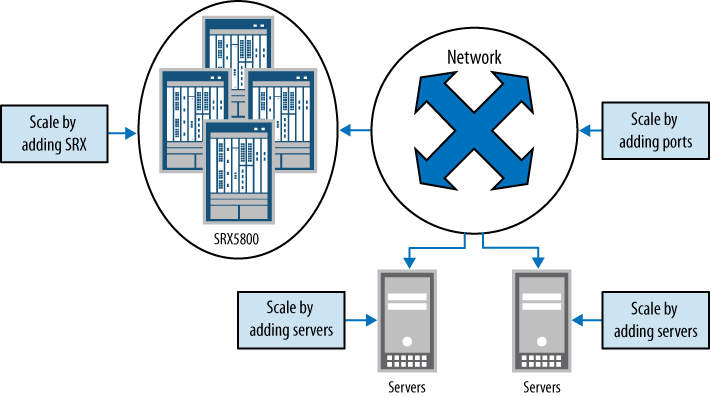

That’s because a cloud network must scale in many directions to really be a cloud. It must scale in the number of running operating systems it can provide. It must scale in the number of physical servers that can run these operating systems. And it must scale in the available number of networking ports that the network provides to the servers. The SRX Series must be able to scale to secure all of this traffic, and in some cases, it must be able to be bypassed for other services. Figure 1-10 depicts this scale in a sample cloud network that is meant to merely show the various components and how they might scale.

The logical items are easier to scale than the physical items, meaning it’s easy to make 10 copies of an operating system run concurrently, since they are easily instantiated, but the challenge is in ensuring that enough processing power can be provided by the servers since they are a physical entity and it takes time to get more of them installed. The same goes for the network. A network in a cloud environment will be divided into many virtual LANs (VLANs) and many routing domains. It is simple to provide more VLANs in the network, but it is hard to ensure that the network has the capacity to handle the needs of the servers. The same goes for the SRX Series firewalls.

For the SRX Series in particular, the needs of the cloud computing environment must be well planned. As we discussed in regard to service providers, the demands of a stateful device are enormous when processing large amounts of traffic. Since the SRX Series device is one of the few stateful devices in the cloud network, it needs to be deployed to scale. As Figure 1-10 shows, the SRX5800 is chosen for this environment because it can be deployed in many different configurations based on the needs of the deployment. (The scaling capabilities of the SRX5800 are discussed in detail in the section “SRX5000.”)

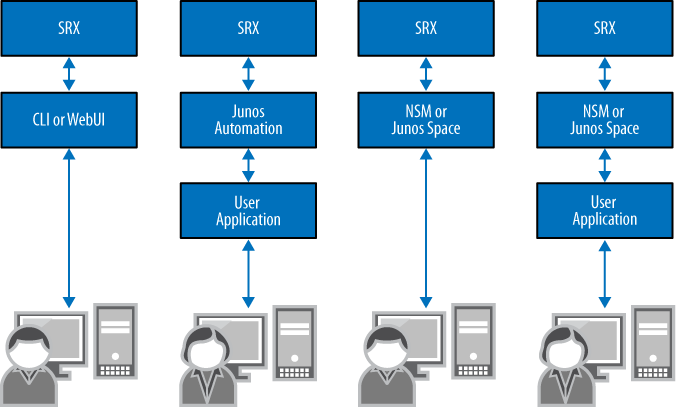

Because of the dynamic nature of cloud computing, infrastructure provisioning of services must be done seamlessly. This goes for every component in the network, including the servers, the network, and the firewalls. Juniper Networks provides several options for managing all of its devices, as shown in Figure 1-11, which illustrates the management paradigm for the devices.

Just as the provisioning model scales for the needs of any organization, so does the cloud computing model. On the far left, direct hands-on or user device management is shown. This is the device management done by an administrator through the CLI or web management system (J-Web). The next example is the command of the device by way of its native API (either Junos automation or NETCONF, both of which we will discuss in Chapter 2), where either a client or a script would need to act as the controller that would use the API to provision the device.

The remaining management examples are similar to the first two examples of the provisioning model, except they utilize a central management console provided by Juniper Networks. Model three shows a user interacting with the default client provided by the Juniper Networks Network and Security Manager (NSM) or Junos Space. In this case, the NSM uses the native API to talk to the devices.

Lastly, management option six is the most layered and scalable approach. It shows a custom-written application controlling the NSM through the NSM’s own API, with the NSM then controlling the devices through their native API.

Although this approach seems highly layered, it provides many advantages in an environment where scaling is required. First, it allows for the creation of a custom application to provide network-wide provisioning in a case where a single management product is not available to manage all of the devices on the network. Second, the native Juniper application is developed specifically around the Juniper devices, thus taking advantage of the inherent health checks and services without having to integrate them.

The Junos Enterprise Services Reference Network

To simplify the SRX Series learning process, this book consistently uses a single topology which contains a number of SRX Series devices and covers all of the scenarios, many of the tutorials, and all of the case studies in the book. A single reference network allows the reader to follow along and only have to reference one network map.

Note

This book’s reference network is primarily focused on branch topologies since the majority of readers have access to those units. For readers who are interested in or are using the data center SRX products, these are discussed as well, but the larger devices are not the focus for most of the scenarios. Where differences exist, they will be noted.

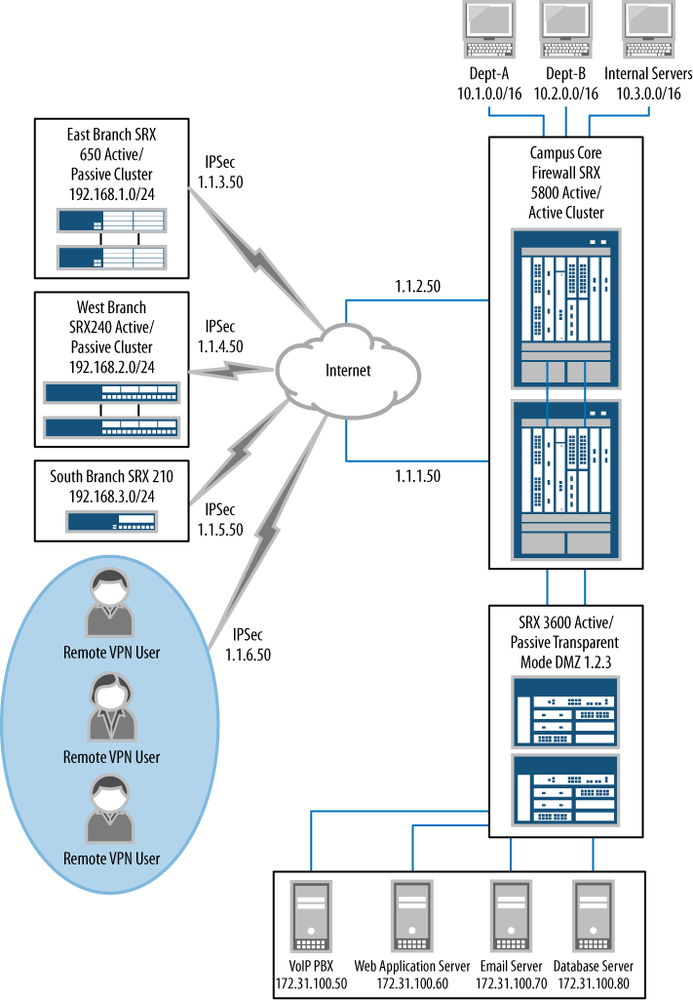

Figure 1-12 shows this book’s reference network. The network consists of three branch deployments, two data center firewall deployments, and remote VPN users. Five of the topologies represent HA clusters with only a single location that specifies a non-HA deployment. The Internet is the network that provides connectivity between all of the SRX Series deployments. Although the reference network is not the perfect “real-world” network, it does provide the perfect topology to cover all of the features in the SRX Series.

Note

Although three of the locations are called branches, they could also represent standalone offices without a relationship to any other location.

The first location to review is the South Branch location. The South Branch location is a typical small branch, utilizing a single SRX210 device. This device is a small, low-cost appliance that can provide a wide range of features for a location with 2 to 10 users and perhaps a wireless access point, as shown in the close-up view in Figure 1-13. The remote users at this location can access both the Internet and other locations over an IPsec VPN connection. Security is provided by using a combination of stateful firewalling, IPS, and UTM. The hosts on the branch network can talk to each other over the local switch on the SRX210 or over the optional wireless AX411 access point.

The West Branch, shown in Figure 1-14, is a larger remote branch location. The West Branch location utilizes two SRX240 firewalls. These firewalls are larger in capacity than the SRX210 devices in terms of ports, throughput, and concurrent sessions. They are designed for a network with more than 10 users or where greater throughputs are needed. Because this branch has more local users, HA is required to prevent loss of productivity due to loss of access to the Internet or the corporate network.

The East Branch location uses the largest branch firewall, the SRX650. This deployment represents both a large branch and a typical office environment where support for hundreds of users and several gigabits per second of throughput is needed. The detailed view of the East Branch is shown in Figure 1-15. This deployment, much like that of the West Branch, utilizes HA. Just as with the other branch SRX Series devices, the SRX650 devices can also use IPS, UTM, stateful firewalling, NAT, and many other security features. The SRX650 provides the highest possible throughput for these features compared to any other branch product line.

Deployment of the campus core firewalls of our reference network will be our first exploration into the high-end or data center SRX Series devices. These are the largest firewalls of the Juniper Networks firewall product line (at the time of this book’s publication). The deployment uses SRX5800 products, and more than 98% of the data center SRX Series firewalls sold are deployed in a highly available deployment, as represented here. These firewalls secure the largest network in the reference design, and Figure 1-16 illustrates a detailed view of the campus core.

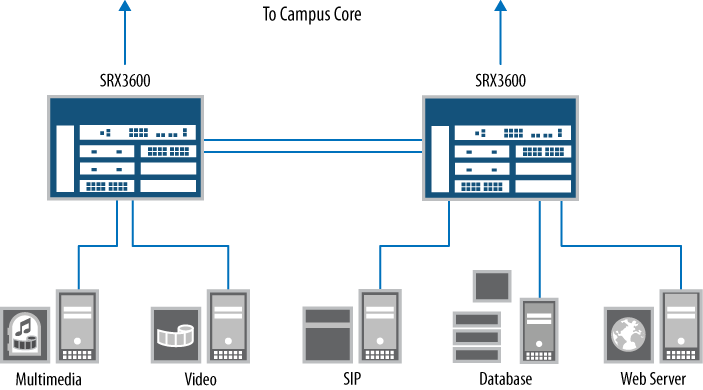

Our campus core example network shows three networks; in a “real-world” deployment this could be hundreds or thousands of networks, but to show the fundamentals of the design and to fit on the printed page, only three are used: Department-A, Department-B, and the Internal Servers networks. These are separated by the SRX5800 HA cluster. Each network has a simple switch to allow multiple hosts to talk to each other. Off the campus core firewalls is a DMZ or demilitarized zone SRX Series firewall cluster, as shown in Figure 1-17.

The DMZ SRX Series devices’ firewall deployment uses an SRX3600 firewall cluster. The SRX3600 firewalls are perfect for providing interface density with high capacity and performance. In the DMZ network, several important servers are deployed. These servers provide critical services to the network and need to be secured to ensure service continuity.

This DMZ deployment is unique compared to the other network deployments because it is the only one that highlights transparent mode deployment, which allows the firewall to act as a bridge. Instead of routing packets like a Layer 3 firewall would, it forwards frames to a destination host using the host’s Media Access Control (MAC) address. This allows the firewall to act as a transparent device, hence the term.
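The bridging behavior can be sketched in Python as a MAC learning table. This is a simplified illustration, not the SRX implementation: the class name, port names, and MAC strings are hypothetical, and a real firewall in transparent mode would also apply its security policy before forwarding.

```python
from typing import Dict, List


class TransparentBridge:
    """Toy MAC-learning bridge (hypothetical names; security policy checks omitted)."""

    def __init__(self, ports: List[str]) -> None:
        self.ports = ports
        self.mac_table: Dict[str, str] = {}  # MAC address -> port where it was last seen

    def forward(self, in_port: str, src_mac: str, dst_mac: str) -> List[str]:
        """Return the list of ports a frame is sent out of."""
        # Learn which port the sender lives on.
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress port.
            return [p for p in self.ports if p != in_port]
        if out_port == in_port:
            return []  # destination is on the same segment; nothing to forward
        return [out_port]


if __name__ == "__main__":
    bridge = TransparentBridge(["port0", "port1", "port2"])
    print(bridge.forward("port0", "mac-a", "mac-b"))  # destination unknown, so flooded
    print(bridge.forward("port1", "mac-b", "mac-a"))  # mac-a was learned on port0
    print(bridge.forward("port0", "mac-a", "mac-b"))  # mac-b was learned on port1
```

No IP routing decision appears anywhere in the sketch, which is what makes the device invisible to the hosts on either side.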

Finally, you might note that the remote VPN users are an example use case of two different types of IPsec access to the SRX Series firewalls. The first is the dynamic VPN client, which is a dynamically downloaded client that allows client VPN access into the branch networks. The second client type highlighted is a third-party client, which is not provided by Juniper but is recommended when a customer wants to utilize a standalone software client. We will cover both use cases in Chapter 5.

The reference network contains the most common deployments for the SRX Series products, allowing you to see the full breadth of topologies within which the SRX Series is deployed. The depicted topologies show all the features of the SRX Series in ways in which actual customers use the products. The authors of this book intend for real administrators to sit down and understand how the SRX Series is used and learn how to configure it. We have seen the majority of SRX Series deployments in the world and boiled them down to our reference network.

SRX Series Product Lines

So far, this chapter has focused on SRX Series examples and concepts more than anything, and hopefully this approach has allowed you to readily identify the SRX Series products and their typical uses. For the remainder of the chapter, we will take a deep dive into the products so that you can link the specific features of each to a realistic view of its capabilities. We will begin with what is common to the entire SRX Series, and then, as before, we’ll divide the product line into branch and data center categories.

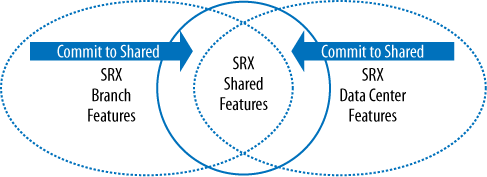

Before the deep dive into each SRX Series product, we must note that each SRX Series platform has a core set of features that are shared across the other platforms. And some of the platforms have different features that are not shared. This might lead to some confusion, because feature parity is not the same across all of the platforms, but the two product lines were designed with different purposes and the underlying architectures vary between the branch and the data center.

The branch SRX Series was designed for small and wide needs, meaning that the devices offer a wide set of features that can solve a variety of problems. This does not mean performance is poor, but rather that the products provide a lot of features.

The data center SRX Series was designed for scale and speed. This means these firewalls can scale from a smaller deployment up to huge performance numbers, while performance continues to scale linearly. So, when configuring the modular data center SRX Series device, the designer is able to easily determine how much hardware is required. Over time, product-line-specific features are likely to merge between the two platforms, as shown in Figure 1-18, which is a diagram of that merging model.

Branch SRX Series

The majority of SRX Series firewalls sold and deployed are from within the branch SRX Series, designed primarily for average firewall deployment. A branch SRX Series product can be identified by its three-digit product number. The first digit represents the series and the last two digits specify the specific model number. The number is used simply to identify the product, and doesn’t represent performance or the number of ports, or have any other special meaning.
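The numbering scheme is simple enough to express as a short Python sketch. The function name is hypothetical, and the pattern only accepts the three-digit branch form described above.

```python
import re
from typing import Tuple


def parse_branch_model(name: str) -> Tuple[int, int]:
    """Split a branch SRX product name into (series digit, two-digit model number)."""
    match = re.fullmatch(r"SRX(\d)(\d{2})", name)
    if match is None:
        raise ValueError(f"{name!r} is not a three-digit branch SRX product name")
    return int(match.group(1)), int(match.group(2))


if __name__ == "__main__":
    for product in ("SRX100", "SRX210", "SRX240", "SRX650"):
        series, model = parse_branch_model(product)
        print(f"{product}: series {series}, model {model:02d}")
```

A four-digit name such as SRX5800 is rejected by this sketch, which matches the rule that only branch products carry three-digit numbers.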

When a branch product is deployed in a small office, as either a remote office location or a company’s main firewall, it needs to provide many different features to secure the network and its users. This means it has to be a jack-of-all-trades, and in many cases, it is an organization’s sole source of security.

Branch-Specific Features

Minimizing the number of pieces of network equipment is important in a remote or small office location, as that reduces the need to maintain several different types of equipment, their troubleshooting, and of course, their cost. One key to all of this consolidation is the network switch, and all of the branch SRX Series products provide full switching support. This includes support for spanning tree and line rate blind switching. Table 1-2 is a matrix of the possible number of supported interfaces per platform.

| Interface type | SRX100 | SRX210 | SRX240 | SRX650 |
| --- | --- | --- | --- | --- |
| 10/100 | 8 | 6 | 0 | 0 |
| 10/100/1000 | 0 | 2 | 20 | 52 |
| 10/100/1000 PoE | 0 | 4 | 16 | 48 |

Note

As of Junos 10.2, the data center SRX Series firewalls do not support blind switching. Although Juniper may add this feature to its data center SRX Series products in the future, it is more cost-effective to utilize a Juniper Networks EX Series Ethernet Switch to provide line rate switching and then create an aggregate link back to a data center SRX Series product to provide secure routing between VLANs.

In most branch locations, SRX Series products are deployed as the only source of security. Because of this, some of the services that are typically distributed can be consolidated into the SRX, such as antivirus. Antivirus is a feature that the branch SRX Series can offer to its local network when applied to the following protocols: Simple Mail Transfer Protocol (SMTP), Post Office Protocol 3 (POP3), Internet Message Access Protocol (IMAP), Hyper Text Transfer Protocol (HTTP), and File Transfer Protocol (FTP). The SRX Series scans for viruses silently as the data is passed through the network, allowing it to stop viruses on the protocols where viruses are most commonly found.

Note

The data center SRX Series does not support the antivirus feature as of Junos 10.2. In organizations that deploy a data center SRX Series product, the antivirus feature set is typically decentralized for increased security as well as enabling antivirus scanning while maintaining the required performance for a data center. A bigger focus for security is utilizing IPS to secure connections into servers in a data center. This is a more common requirement than antivirus. The IPS feature is supported on both the high-end and branch SRX Series product lines.

Antispam is another UTM feature set that aids in consolidation of services on the branch SRX Series. Today it’s reported that almost 95% of the email in the world is spam. And this affects productivity. In addition, although some messages are harmless, offering general-use products, others contain vulgar images, sexual overtures, or illicit offers. These messages can be offensive, a general nuisance, and a distraction.

The antispam technology included on the SRX Series can prevent such spam from being received, and it removes the need to use antispam software on another server.

Note

Much like antivirus, the data center SRX Series does not provide antispam services. In data center locations where mail services are intended for thousands of users, a larger solution is needed, one that is distributed on mail proxies or on the mail servers.

Controlling access to what a user can or can’t see on the Internet is called uniform resource locator (URL) filtering. URL filtering allows the administrator to limit what categories of websites can be accessed. Sites that contain pornographic material may seem like the most logical to block, but other types of sites are commonly blocked too, such as social networking sites that can be time sinks for employees. There is also a class of sites that company policy blocks or temporarily allows access to (for instance, during lunch hour). In any case, all of this is possible on the branch SRX Series products.

Note

For the data center SRX Series product line, URL filtering is not currently integrated. In many large data centers where servers are protected, URL filtering is not needed or is delegated to other products.
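The category-based decisions described for URL filtering on the branch SRX Series, including the lunch-hour exception, can be sketched in Python. The category names, the lunch window, and the function name are hypothetical; this models the policy logic only and is not how the SRX is configured.

```python
from datetime import time

# Hypothetical policy: category names and the lunch window are assumptions.
ALWAYS_BLOCKED = {"adult"}
LUNCH_ONLY = {"social-networking"}
LUNCH_START = time(12, 0)
LUNCH_END = time(13, 0)


def is_allowed(category: str, now: time) -> bool:
    """Decide whether a site in `category` may be accessed at local time `now`."""
    if category in ALWAYS_BLOCKED:
        return False
    if category in LUNCH_ONLY:
        # Temporarily allowed, but only during the lunch window.
        return LUNCH_START <= now < LUNCH_END
    return True


if __name__ == "__main__":
    print(is_allowed("social-networking", time(12, 30)))
    print(is_allowed("social-networking", time(15, 0)))
    print(is_allowed("news", time(15, 0)))
```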

Because branch tends to mean small locations all over the world, these branches typically require access to the local LAN for desktop maintenance or to securely access other resources. To provide a low-cost and effective solution, Juniper has introduced the dynamic VPN client. This IPsec client allows for dynamic access to the branch without any preinstalled software on the client station, a very helpful feature to have in the branch so that remote access is simple to set up and requires very little maintenance.

Note

Dynamic VPN is not available on the data center SRX devices. Juniper Networks recommends the use of its SA Series SSL VPN Appliances, allowing for the scaling of tens of thousands of users while providing a rich set of features that go beyond just network access.

When the need for cost-saving consolidation is strong in certain branch scenarios, adding wireless, both cellular and WiFi, can provide interesting challenges. Part of the challenge concerns consolidating these capabilities into a device while not providing radio frequency (RF) interference; the other part concerns providing a device that can be centrally placed and still receive or send enough wireless power to provide value.

All electronic devices give off some sort of RF interference, and all electronic devices state this clearly on their packaging and/or labels. Although this may be minor interference in the big scheme of things, it can also be extremely detrimental to wireless technologies such as cellular Internet access or WiFi—therefore, extreme care is required when integrating these features into any product. Some of the branch SRX Series products have the capability to attach a cellular Internet card or USB dongle directly to them, which can make sense in some small branch locations because typically, cellular signals are fairly strong throughout most buildings.

But what if the device is placed in the basement where it’s not very effective at receiving these cellular signals? Because of this and other office scenarios, Juniper Networks provides a product that can be placed anywhere and is both powered and managed by the SRX Series: the Juniper Networks CX111 Cellular Broadband Data Bridge and CX411 Cellular Broadband Data Bridge.

The same challenge carries over for WiFi. If an SRX Series product is placed in a back room or basement, an integrated WiFi access point may not be very relevant, so Juniper took the same approach and provides an external access point (AP) called the AX411 Wireless LAN Access Point. This AP is managed and powered by any of the branch SRX Series products.

Note

As you might guess, although the wireless features are very compelling for the branch, they aren’t very useful in a data center. Juniper has abstained from bringing wireless features to the data center SRX Series products, but because the two products contain the same codebase, it’s easy to port the feature to the data center SRX Series if the relevance for the feature makes its case.

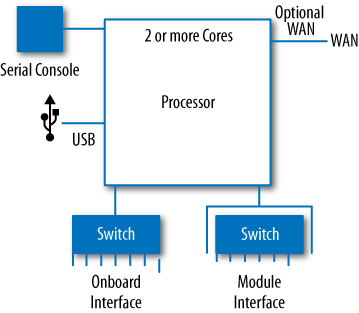

The first Junos products for the enterprise market were the Juniper Networks J Series Services Routers, and the first iteration of the J Series was a packet-based device. This means the device acts on each packet individually without any concern for the next packet, which is typical of how a traditional router operates. Over time, Juniper moved the J Series products toward the capabilities of a flow-based device, and this is where the SRX Series devices evolved from.

Although a flow-based device has many merits, it’s unwise to move away from being able to provide packet services, so the SRX Series can run in packet mode as well as flow. It’s even possible to run both modes simultaneously! This allows the SRX Series to act as traditional packet-based routers and to run advanced services such as MPLS.
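The difference between the two modes can be sketched in Python. This is a conceptual model under stated assumptions: the `FlowKey` fields, class names, and return strings are illustrative, and the sketch ignores return traffic, session timeouts, and policy lookups.

```python
from typing import Dict, NamedTuple


class FlowKey(NamedTuple):
    """Fields that identify a flow in this sketch (an assumed 5-tuple)."""
    src_ip: str
    dst_ip: str
    protocol: str
    src_port: int
    dst_port: int


def packet_mode_process(key: FlowKey) -> str:
    """Packet mode: every packet is handled on its own, with no memory of earlier ones."""
    return "evaluated independently"


class FlowDevice:
    """Flow mode: the first packet creates a session; later packets match it."""

    def __init__(self) -> None:
        self.sessions: Dict[FlowKey, int] = {}  # flow -> packets seen so far

    def process(self, key: FlowKey) -> str:
        if key in self.sessions:
            self.sessions[key] += 1
            return "matched existing session"
        self.sessions[key] = 1
        return "created new session"


if __name__ == "__main__":
    key = FlowKey("10.0.0.1", "192.0.2.10", "tcp", 40000, 80)
    device = FlowDevice()
    print(device.process(key))
    print(device.process(key))
    print(packet_mode_process(key))
```

The session table is the state that a flow-based device carries and a packet-based device does not.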

MPLS as a technology is not new—carrier networks have been using it for years. Many enterprise networks have used MPLS, but typically it has been done transparently to the enterprise. Now, with the SRX Series, the enterprise has a low-cost solution, so it can create its own MPLS network, bringing the power back to the enterprise from the service providers, and saving money on MPLS as a managed service. On the flip side, it allows the service providers to offer a low-cost service that can provide security and MPLS in a single platform.

The last feature common to the branch SRX Series products is their ability to utilize many types of WAN interfaces. We will detail these interface types as we drill down into each SRX Series platform.

Note

The data center SRX Series products, as of Junos 10.2, only utilize Ethernet interfaces. These are the most common interfaces used in the locations where these products are deployed. Where a data center SRX Series product is deployed, it is typically paired with a Juniper Networks MX Series 3D Universal Edge Router, which can provide WAN interfaces.

SRX100

The SRX100, as of Junos 10.2, is the only product in the SRX100 line (if you remember from the SRX numbering scheme, the 1 is the series number and the 00 is the product number inside that series). The SRX100 Services Gateway is shown in Figure 1-19, and it is a fixed form factor, meaning no additional modules or changes can be made to the product after it is purchased. As you can see in the figure, the SRX100 has a total of eight 10/100 Ethernet ports, and perhaps more difficult to see, but clearly onboard, are a serial console port and a USB port.

The eight Ethernet ports can be configured in many different ways. They may be configured in the traditional manner, in which each port has a single IP address, or they can be configured in any combination as an Ethernet switch. The same switching capabilities of the EX Series switches have been combined into the SRX100 so that the SRX100 not only supports line rate blind switching but also supports several variants of the spanning tree protocols; therefore, if the network is expanded in the future, an errant configuration won’t lead to a network loop. The SRX100 can also provide a default gateway on its local switch by using a VLAN interface, as well as a Dynamic Host Configuration Protocol (DHCP) server.

Although the SRX100 is a small, desktop-sized device, it’s also a high-performing platform. It certainly stands out by providing up to 650 Mbps of throughput. This may seem like an exorbitant amount of throughput for a branch platform, but it’s warranted where security is needed between two local network devices. For a WAN connection, 650 Mbps is far more than what would be needed in a location that would use this type of device, but small offices have a way of growing.

Speaking of performance, the SRX100 also supports high rates of VPN, IPS, and antivirus if the need to use these features arises in locations where the SRX100 is deployed. The SRX100 supports a session ramp-up rate of 2,000 new connections per second (CPS), which is the number of new TCP-based sessions that can be created per second. UDP sessions are also supported, but the new-sessions-per-second metric is rated with TCP because setting up a TCP session takes three times as many packets as setting up a UDP session (see Table 1-3).

| Type | Capacity |
| --- | --- |
| CPS | 2,000 |
| Maximum firewall throughput | 650 Mbps |
| Maximum IPS throughput | 60 Mbps |
| Maximum VPN throughput | 65 Mbps |
| Maximum antivirus throughput | 25 Mbps |
| Maximum concurrent sessions | 16K (512 MB of RAM); 32K (1 GB of RAM) |
| Maximum firewall policies | 384 |
| Maximum concurrent users | Unlimited |

Although 2,000 new connections per second seems like overkill, it isn’t. Many applications today are written in such a way that they may attempt to grab 100 or more data streams simultaneously. If the local firewall device is unable to handle this rate of new connections, these applications may fail to complete their transactions, leading to user complaints and, ultimately, the cost or loss of time in troubleshooting the network.
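A little arithmetic shows how quickly the connection rate is consumed. The Python sketch below assumes three packets to set up a TCP session (the three-way handshake) and one for UDP; the user counts are made-up examples and the function names are hypothetical.

```python
TCP_SETUP_PACKETS = 3  # SYN, SYN-ACK, ACK
UDP_SETUP_PACKETS = 1  # a single packet starts a UDP session


def setup_packets_per_second(cps: int, packets_per_session: int) -> int:
    """Packets per second spent purely on session setup at a given CPS rate."""
    return cps * packets_per_session


def seconds_to_open(total_sessions: int, cps: int) -> float:
    """Time needed to establish `total_sessions` new sessions at `cps`."""
    return total_sessions / cps


if __name__ == "__main__":
    cps = 2000  # SRX100 rating from Table 1-3
    print(setup_packets_per_second(cps, TCP_SETUP_PACKETS))  # TCP setup load
    print(setup_packets_per_second(cps, UDP_SETUP_PACKETS))  # UDP setup load
    # Example assumption: 20 users each launch an application opening 100 streams.
    print(seconds_to_open(20 * 100, cps))
```

Under these assumptions, just 20 users starting such an application at the same moment use the full 2,000 CPS for an entire second.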

Also, because users may require many concurrent sessions, the SRX100 can support up to 32,000 sessions. A session is a current connection that is monitored between two peers, and can be of the more common protocols of TCP and UDP, or of other protocols such as Encapsulating Security Payload (ESP) or Generic Route Encapsulation (GRE).

The SRX100 has two separate memory options: low-memory and high-memory versions. They don’t require a change of hardware, but simply the addition of a license key to activate access to the additional memory. The base memory version uses 512 MB of memory and the high-memory version uses 1 GB of memory. When the license key is added, and after a reboot, the new SRX Series flow daemon is brought online. The new flow daemon is designed to access the entire 1 GB of memory.

Activating the 1 GB of memory does more than just enable twice the number of sessions; it is required to utilize UTM. If any of the UTM features are activated, the total number of sessions is cut back to the number of low-memory sessions. Reducing the number of sessions allows the UTM processes to run. The administrator can choose whether sessions or the UTM features are the more important option.
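The trade-off between sessions and UTM can be written down as a small Python sketch. The session counts come from Table 1-3, reading 16K and 32K as 16,000 and 32,000 (an assumption based on the "32,000 sessions" figure in the text); the function name is hypothetical.

```python
LOW_MEMORY_SESSIONS = 16_000   # 512 MB of RAM (Table 1-3)
HIGH_MEMORY_SESSIONS = 32_000  # 1 GB of RAM (Table 1-3)


def max_sessions(high_memory: bool, utm_enabled: bool) -> int:
    """Maximum concurrent sessions on an SRX100 for a given memory and UTM choice."""
    if utm_enabled and not high_memory:
        # UTM requires the 1 GB memory option to be activated.
        raise ValueError("UTM requires the high-memory option")
    if utm_enabled:
        # With UTM active, the session count falls back to the low-memory number.
        return LOW_MEMORY_SESSIONS
    return HIGH_MEMORY_SESSIONS if high_memory else LOW_MEMORY_SESSIONS


if __name__ == "__main__":
    print(max_sessions(high_memory=False, utm_enabled=False))
    print(max_sessions(high_memory=True, utm_enabled=False))
    print(max_sessions(high_memory=True, utm_enabled=True))
```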

The SRX100 can be mounted in one of four ways. The default placement is on any flat surface. The other three require additional hardware to be ordered: vertically on a desktop, in a network equipment rack, or mounted on a wall. The wall mount kit can accommodate a single SRX100, and the rack mount kit can accommodate up to two SRX100 units in a single rack unit.

SRX200

The SRX200 line is the next step up in the branch SRX Series. The goal of the SRX200 line is to provide modular solutions to branch environments. This modularity comes through the use of various interface modules that allow the SRX200 line to connect to a variety of media types such as T1. Furthermore, the modules can be shared among all of the devices in the line.

The first device in the line is the SRX210. It is similar to the SRX100, except that it has additional expansion capabilities and extended throughput. The SRX210 has eight Ethernet ports, like the SRX100 does, but two of them are 10/100/1000 tri-speed Ethernet ports, allowing high-speed devices such as switches or servers to be connected. In addition, the SRX210 can be optionally ordered with built-in PoE ports. If this option is selected, the first four ports on the device can provide up to 15.4W of power to devices, be they VoIP phones or Juniper’s AX and CX wireless devices.

Figure 1-20 shows the SRX210. Note in the top right the large slot where the mini-PIM is inserted. The front panel includes the eight Ethernet ports. Similar to the SRX100, the SRX210 includes a serial console port and, in this case, two USB ports. The eight Ethernet ports can be used (just like the SRX100) to provide line rate blind switching and/or a traditional Layer 3 interface.

The rear of the box contains a surprise. In the rear left, as depicted in Figure 1-21, an ExpressCard slot is shown. This ExpressCard slot can utilize 3G or cellular modem cards to provide access to the Internet, which is useful for dial backup or the new concept of a zero-day branch. In the past, when an organization wanted to roll out branches rapidly, it required the provisioning of a private circuit or a form of Internet access, and it could take weeks or months to get this service installed. With the use of a 3G card, a branch can be installed the same day, allowing organizations and operations to move quickly to reach new markets or emergency locations. Once a permanent circuit is deployed, the 3G card can be used for dial backup or moved to a new location.

The SRX210 outperforms the SRX100 across all of its various capabilities, although it remains in the same general performance range. As you can see in Table 1-4, the overall throughput increased from 650 Mbps on the SRX100 to 750 Mbps on the SRX210. The same goes for the IPS, VPN, and antivirus throughputs, which each increased by roughly 15% to 33% over the SRX100. A significant change is the fact that the total number of sessions doubles, for both the low-memory and high-memory versions. That is a significant advantage in addition to the modularity of the platform.

| Type | Capacity |
| --- | --- |
| CPS | 2,000 |
| Maximum firewall throughput | 750 Mbps |
| Maximum IPS throughput | 80 Mbps |
| Maximum VPN throughput | 75 Mbps |
| Maximum antivirus throughput | 30 Mbps |
| Maximum concurrent sessions | 32K (512 MB of RAM); 64K (1 GB of RAM) |
| Maximum firewall policies | 512 |
| Maximum concurrent users | Unlimited |

The SRX210 is available in three hardware models: the base memory model, the high-memory model, and the PoE with high-memory model (it isn’t possible to purchase a base memory model with PoE). Unlike the SRX100, the memory models are fixed and cannot be upgraded with a license key, so when planning a rollout with the SRX210, it’s best to decide up front what you think the device will need. The SRX210 also has a few hardware accessories: it can be ordered with a desktop stand, a rack mount kit, or a wall mount kit. The rack mount kit can accommodate one SRX210 in a single rack unit.

The SRX240 is the first departure from the small desktop form factor, as it is designed to be mounted in a single rack unit. It also can be placed on the top of a desk and is about the size of a pizza box. The SRX240, unlike the other members of the SRX200 line, includes sixteen 10/100/1000 Ethernet ports, but like the other two platforms, line rate switching can be achieved between all of the ports that are configured in the same VLAN. It’s also possible to configure interfaces as a standard Layer 3 interface, and each interface can also contain multiple subinterfaces. Each subinterface is on its own separate VLAN. This is a capability that is shared across all of the SRX product lines, but it’s typically used on the SRX240 since the SRX240 is deployed on larger networks.

Figure 1-22 shows the SRX240, and you should be able to see the sixteen 10/100/1000 Ethernet ports across the bottom front of the device. There’s the standard fare of one serial console port and two USB ports, and on the top of the front panel of the SRX240 are the four mini-PIM slots. These slots can be used for any combination of supported mini-PIM cards.

The performance of the SRX240 is double that of the other platforms. It’s designed for mid-range to large branch locations and can handle more than four times the connections per second, for up to 9,000 CPS. Not only is this good for outbound traffic, but it is also great for hosting small to medium-size services behind the device, including web, DNS, and email services, which are typical services for a branch network. The throughput for the device is enough for a small network, as it can secure more than 1 gigabit per second of traffic. This allows several servers to sit behind it, with traffic to them from both the internal and external networks secured. The device also provides high IPS throughput, which is great for inspecting traffic from untrusted hosts as it passes through the device.

Again, Table 1-5 shows that the total number of sessions on the device has doubled from the lower models. The maximum of 128,000 concurrent sessions is considerable for most networks. Just as you saw on the SRX210, the SRX240 comes in three different hardware models: the base memory model that includes 512 MB of memory (it’s unable to run UTM and supports half the number of sessions); the high-memory version, which has twice the amount of memory (it’s able to run UTM with an additional license); and the high-memory with PoE model that can provide PoE to all 16 of its built-in Ethernet ports.

| Type | Capacity |
| --- | --- |
| CPS | 9,000 |
| Maximum firewall throughput | 1.5 Gbps |
| Maximum IPS throughput | 250 Mbps |
| Maximum VPN throughput | 250 Mbps |
| Maximum antivirus throughput | 85 Mbps |
| Maximum concurrent sessions | 64K (512 MB of RAM); 128K (1 GB of RAM) |
| Maximum firewall policies | 4,096 |
| Maximum concurrent users | Unlimited |
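
The step up from the SRX210 to the SRX240 can be worked out directly from the figures in Tables 1-4 and 1-5. The following is a minimal Python sketch of that arithmetic. The dictionary keys and the `capacity_ratios` helper are illustrative names, not anything from Junos, and the session counts are for the high-memory models with "K" assumed to mean thousands.

```python
# Figures copied from Tables 1-4 and 1-5 (throughput in Mbps).
# Session counts are for the high-memory models; "K" is assumed to mean thousands.
SRX210 = {
    "cps": 2_000,
    "firewall_mbps": 750,
    "ips_mbps": 80,
    "vpn_mbps": 75,
    "antivirus_mbps": 30,
    "sessions": 64_000,
}
SRX240 = {
    "cps": 9_000,
    "firewall_mbps": 1_500,
    "ips_mbps": 250,
    "vpn_mbps": 250,
    "antivirus_mbps": 85,
    "sessions": 128_000,
}


def capacity_ratios(smaller, larger):
    """Return how many times larger each metric is on the bigger platform."""
    return {metric: larger[metric] / smaller[metric] for metric in smaller}


ratios = capacity_ratios(SRX210, SRX240)
for metric, ratio in ratios.items():
    print(f"{metric}: {ratio:.2f}x")
```

With these inputs, firewall throughput and session count come out at 2x and connections per second at 4.5x, while the IPS, VPN, and antivirus ratios land in between.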

Interface modules for the SRX200 line

The SRX200 Series Services Gateways currently support six different types of mini-PIMs, as shown in Table 1-6. On the SRX240 these can be mixed and matched to support any combination that the administrator chooses, offering great flexibility if there is a need to have several different types of WAN interfaces. The administrator can also add up to a total of four SFP mini-PIM modules on the SRX240, giving it a total of 20 gigabit Ethernet ports. The SFP ports can be either a fiber optic connection or a copper twisted pair link. The SRX210 can only accept one card at a time, so there isn’t a capability to mix and match cards, although as stated, the SRX210 can accept any of the cards. Though the SRX210 is not capable of inspecting gigabit speeds of traffic, a fiber connection may be required in the event that a long haul fiber is used to connect the SRX210 to the network.

| Type | Description |
| --- | --- |
| ADSL | 1-port ADSL2+ mini-PIM supporting ADSL/ADSL2/ADSL2+ Annex A |
| ADSL | 1-port ADSL2+ mini-PIM supporting ADSL/ADSL2/ADSL2+ Annex B |
| G.SHDSL | 8-wire (4-pair) G.SHDSL mini-PIM |
| Serial | 1-port Sync Serial mini-PIM |
| SFP | 1-port SFP mini-PIM |
| T1/E1 | 1-port T1/E1 mini-PIM |
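
The slot rules described above (one mini-PIM on the SRX210, up to four in any mix on the SRX240, and one extra gigabit port per SFP card) are easy to check when planning an order. The sketch below is one hypothetical way to do that in Python. The card labels and function names are made up for illustration and are not Junos identifiers.

```python
# Card types from Table 1-6; the string labels are illustrative only.
MINI_PIM_TYPES = {"ADSL Annex A", "ADSL Annex B", "G.SHDSL", "Serial", "SFP", "T1/E1"}

# Mini-PIM slots per platform, as described in the text.
MINI_PIM_SLOTS = {"SRX210": 1, "SRX240": 4}

SRX240_ONBOARD_GIGABIT_PORTS = 16


def check_mini_pims(platform, cards):
    """Raise ValueError if the list of cards does not fit the platform."""
    unknown = set(cards) - MINI_PIM_TYPES
    if unknown:
        raise ValueError(f"unsupported mini-PIM type(s): {sorted(unknown)}")
    if len(cards) > MINI_PIM_SLOTS[platform]:
        raise ValueError(
            f"{platform} has {MINI_PIM_SLOTS[platform]} mini-PIM slot(s), "
            f"but {len(cards)} cards were requested"
        )


def srx240_gigabit_ports(cards):
    """Count the onboard ports plus one gigabit port per SFP mini-PIM."""
    check_mini_pims("SRX240", cards)
    return SRX240_ONBOARD_GIGABIT_PORTS + list(cards).count("SFP")
```

For example, `srx240_gigabit_ports(["SFP"] * 4)` gives the 20 gigabit ports mentioned in the text, while `check_mini_pims("SRX210", ["SFP", "T1/E1"])` raises an error because the SRX210 accepts only one card.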

The ADSL cards support all of the modern standards for DSL and work with most major carriers. The G.SHDSL standard is much newer than the older ADSL, and it is a higher-speed version of DSL that is provided over traditional twisted pair lines. Among the three types of cards, all common forms of DSL are available to the SRX200 line.

The SRX200 line also supports the use of the tried-and-true serial port connection. This allows for connection to an external serial port and is the least commonly used interface card. A more commonly used interface card is the T1/E1 card, which is typical for WAN connection to the SRX200 line. Although a T1/E1 connection may be slow by today’s standards, compared to the average home broadband connection, it is still commonly used in remote branch offices.

SRX600

The SRX600 line is the most different from the others in the branch SRX Series. This line is extremely modular and offers very high performance for a device that is categorized as a branch solution.

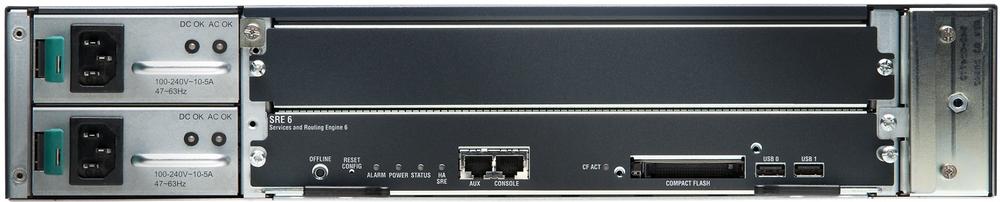

The only model in the SRX600 line (at the time of this writing) is the SRX650. The SRX650 comes with four onboard 10/100/1000 ports. All the remaining components are modules. The base system comes with the chassis and a component called the Services and Routing Engine or SRE. The SRE provides the processing and management capabilities for the platform. It has the same architecture as the other branch platforms, but this time the component for processing is modular.

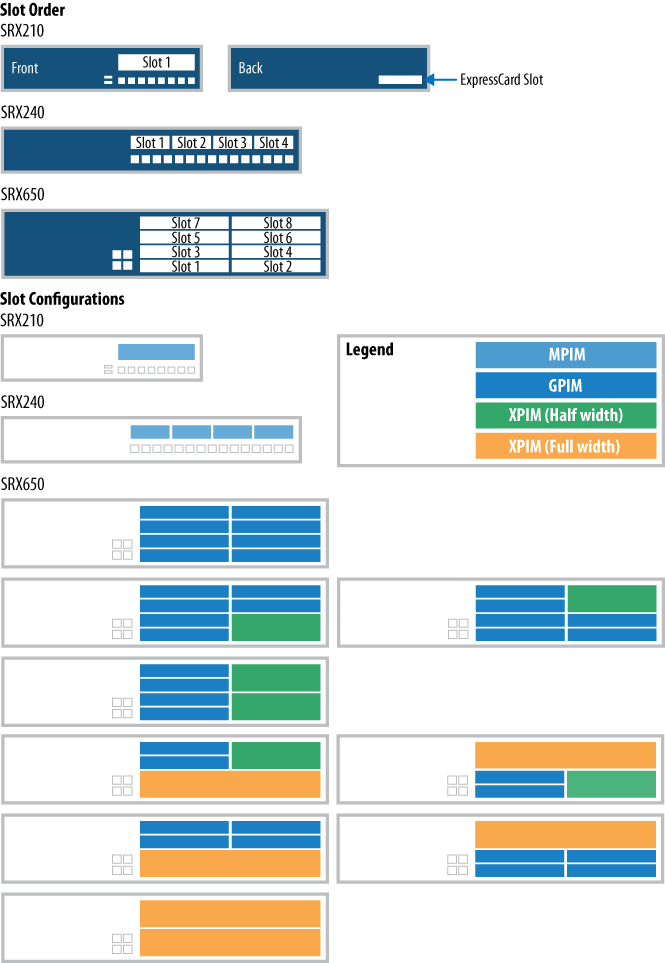

Figure 1-23 shows the front of the SRX650 chassis, with the four onboard 10/100/1000 ports on the front left. The other items to notice are the eight modular slots, which differ from those in the other SRX platforms. Here the eight slots are called G-PIM slots, but it is also possible to use another card type, called an X-PIM, that spans multiple G-PIM slots.

On the back of the SRX650 is where the SRE is placed. There are two slots that fit the SRE into the chassis, but note that as of the Junos 10.2 release, only the bottom slot can be used. In the future, the SRX650 may support a new double-height SRE, or even multiple SREs. On the SRE there are several ports: first the standard serial console port and then a secondary serial auxiliary port, shown in the product illustration in Figure 1-24. Also, the SRE has two USB ports.

New to this model is the inclusion of a secondary compact flash port. This port allows for expanded storage for logs or software images. The SRX650 also supports up to two power supplies for redundancy.

The crown jewel of the SRX650 is its performance. The SRX650 is more than enough for most branch office locations, leaving room for the branch office to grow. As shown in Table 1-7, it can provide up to 30,000 new connections per second, which is ample for a fair number of servers hosted behind the firewall. It also accommodates a large number of users behind the SRX. The total number of concurrent sessions is four times higher than on the SRX240, with a maximum of 512K sessions. Only about half of those (250,000 sessions) are available when UTM is enabled; the rest of the available memory is shifted to the UTM features.

| Type | Capacity |
| --- | --- |
| CPS | 30,000 |
| Maximum firewall throughput | 7 Gbps |
| Maximum IPS throughput | 1.5 Gbps |
| Maximum VPN throughput | 1.5 Gbps |
| Maximum antivirus throughput | 350 Mbps |
| Maximum concurrent sessions | 512K (2 GB of RAM) |
| Maximum firewall policies | 8,192 |
| Maximum concurrent users | Unlimited |
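
The capacity tables for the SRX210, SRX240, and SRX650 lend themselves to a rough first-pass sizing check. The following Python sketch picks the smallest of the three platforms whose published maximums cover a given load. It is only an illustration: the `smallest_platform` function is a made-up helper, the session figures are for the largest memory option with "K" assumed to mean thousands, and it ignores IPS, VPN, and UTM requirements as well as any headroom you would want in practice.

```python
# (name, max CPS, max firewall throughput in Mbps, max concurrent sessions),
# taken from Tables 1-4, 1-5, and 1-7 and listed from smallest to largest.
PLATFORMS = [
    ("SRX210", 2_000, 750, 64_000),
    ("SRX240", 9_000, 1_500, 128_000),
    ("SRX650", 30_000, 7_000, 512_000),
]


def smallest_platform(cps, firewall_mbps, sessions):
    """Return the first platform whose maximums cover the load, or None."""
    for name, max_cps, max_mbps, max_sessions in PLATFORMS:
        if cps <= max_cps and firewall_mbps <= max_mbps and sessions <= max_sessions:
            return name
    return None


print(smallest_platform(cps=5_000, firewall_mbps=1_000, sessions=100_000))
```

The example call prints `SRX240`, since the load exceeds the SRX210 on every metric but fits within the SRX240 figures. Published maximums are best-case numbers, so a real sizing exercise would leave a margin below them.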

The SRX650 can provide more than enough throughput, and it can provide local switching as well. The maximum total throughput is 7 gigabits per second, which represents a fair bit of secure inspection of traffic for this platform. The available UTM services are also extremely fast. Both IPS and VPN performance exceed 1 gigabit per second. The lowest performing value is the inline antivirus, and although 350 Mbps is far lower than the maximum throughput, it is very fast considering the amount of inspection that is needed to scan files for viruses.

Interface modules for the SRX600 line

The SRX650 has many interface options that are not available on any other platform today, which sets it apart from the rest of the branch SRX Series. The SRX650 can use two different types of modules: the G-PIM and the X-PIM. A G-PIM occupies only one of the eight slots, whereas an X-PIM takes either two or four slots, depending on the card. Table 1-8 lists the different interface cards.

| Type | Description | Slots |
| --- | --- | --- |
| Dual T1/E1 | Dual T1/E1, two ports with integrated CSU/DSU – G-PIM. Single G-PIM slot. | 1 |
| Quad T1/E1 | Quad T1/E1, four ports with integrated CSU/DSU – G-PIM. Single G-PIM slot. | 1 |
| 16-port 10/100/1000 | Ethernet switch 16-port 10/100/1000-baseT X-PIM. | 2 |
| 16-port 10/100/1000 PoE | Ethernet switch 16-port 10/100/1000-baseT X-PIM with PoE. | 2 |
| 24-port 10/100/1000 plus four SFP ports | Ethernet switch 24-port 10/100/1000-baseT X-PIM. Includes four SFP slots. | 4 |
| 24-port 10/100/1000 PoE plus four SFP ports | PoE Ethernet switch 24-port 10/100/1000-baseT X-PIM. Includes four SFP slots. | 4 |

Two different types of G-PIM cards provide T1/E1 ports. One provides two T1/E1 ports and the other provides a total of four ports. These cards can go in any of the slots on the SRX650 chassis, up to the maximum of eight slots.

The next type of card is the dual-slot X-PIM. These cards provide sixteen 10/100/1000 ports and come in the PoE or non-PoE variety. Using this card takes up two of the eight slots. They can only be installed in the right side of the chassis, with a maximum of two cards in the chassis.

The third type of card is the quad-slot X-PIM. This card has twenty-four 10/100/1000 ports and four SFP ports and comes in a PoE and non-PoE version. The SFP ports can use either fiber or twisted-pair SFP transceivers. Figure 1-25 shows the possible locations of each type of card.
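
The slot accounting for the three card types can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the card labels and the `free_slots` helper are hypothetical, and the check covers only the rules given in the text (eight slots in total, and no more than two dual-slot X-PIMs). It does not model which physical positions each card may occupy, which is what Figure 1-25 describes.

```python
# Slots consumed by each card in Table 1-8; the labels are illustrative only.
SLOTS_PER_CARD = {
    "dual-t1e1": 1,
    "quad-t1e1": 1,
    "xpim-16": 2,
    "xpim-16-poe": 2,
    "xpim-24-sfp": 4,
    "xpim-24-sfp-poe": 4,
}
TOTAL_SLOTS = 8
MAX_DUAL_SLOT_XPIMS = 2


def free_slots(cards):
    """Return the slots left over, or raise ValueError if the cards do not fit."""
    dual_slot_cards = sum(1 for card in cards if SLOTS_PER_CARD[card] == 2)
    if dual_slot_cards > MAX_DUAL_SLOT_XPIMS:
        raise ValueError("at most two dual-slot X-PIMs fit in the chassis")
    used = sum(SLOTS_PER_CARD[card] for card in cards)
    if used > TOTAL_SLOTS:
        raise ValueError(f"cards need {used} slots, but only {TOTAL_SLOTS} exist")
    return TOTAL_SLOTS - used
```

For instance, `free_slots(["xpim-24-sfp", "xpim-16", "dual-t1e1"])` returns 1, because the three cards use four, two, and one slot respectively.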

Local switching can be achieved at line rate only between ports on the same card. It is not possible to configure switching across cards. All traffic that passes between cards must be inspected by the firewall, so its throughput is limited to the firewall’s maximum inspection rate. Administrators who deploy the SRX should be aware of this limitation.
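
When laying out which devices plug into which card, it can help to make this rule explicit. The Python sketch below classifies the path between two ports. The `Port` type and `forwarding_path` function are hypothetical names used only for illustration, and the sketch assumes both ports are configured for switching in the same VLAN.

```python
from typing import NamedTuple

FIREWALL_MAX_GBPS = 7  # maximum firewall throughput from Table 1-7


class Port(NamedTuple):
    card: int  # hypothetical index of the card in the chassis
    port: int  # port number on that card


def forwarding_path(src: Port, dst: Port) -> str:
    """Describe how traffic between two switched ports is forwarded."""
    if src.card == dst.card:
        # Same card: traffic stays on the card's local switch.
        return "switched locally at line rate"
    # Different cards: traffic must pass through the firewall.
    return f"inspected by the firewall (up to {FIREWALL_MAX_GBPS} Gbps in total)"


print(forwarding_path(Port(card=1, port=0), Port(card=1, port=5)))
print(forwarding_path(Port(card=1, port=0), Port(card=2, port=0)))
```

The practical takeaway is to keep hosts that exchange heavy traffic on the same card wherever that traffic does not need inspection.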

AX411

The AX411 Wireless LAN Access Point is not an SRX device, but more of an accessory to the branch SRX Series product line. The AX411 cannot operate on its own without an SRX Series appliance. To use the AX411 device, simply plug it into an SRX device that has DHCP enabled and an AX411 license installed. The access point will get an IP address from the SRX and register with the device, and the configuration for the AX411 will be pushed down from the SRX to the AX411. Then queries can be sent from the SRX to the AX411 to get status on the device and its associated clients. Firmware updates and remote reboots are also handled by the SRX product.

The AX411 is designed to be placed wherever it’s needed: on a desktop, mounted on a wall, or inside a drop ceiling. As shown in Figure 1-26, the AX411 has three antennas and one Ethernet port. It also has a console port, which is not user-accessible.

The AX411 has impressive wireless capabilities, as it supports 802.11a/b/g/n wireless networking. The three antennas provide multiple-input multiple-output (MIMO) for maximum throughput. The device features two separate radios, one in the 2.4 GHz range and the other in the 5 GHz range. For the small branch, it meets all of the requirements of an access point. The AX411 is not meant to provide wireless access for a large campus network, so administrators should not expect to deploy dozens of AX411 products together.

Each SRX device in the branch SRX Series is only capable of managing a limited number of AX411 appliances, and Table 1-9 shows the number of access points per platform that can be managed. The SRX100 can manage up to two AX411 devices. From there, each platform doubles the total number of access points that can be managed, going all the way up to 16 access points on the SRX650.
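
The doubling pattern described above can be written out explicitly. This short Python sketch derives the per-platform limits from the two facts given in the text: the SRX100 manages two access points, and each larger platform doubles that number. The platform list is an assumption about which models Table 1-9 covers, and `ax411_limits` is an illustrative helper name.

```python
# Branch platforms from smallest to largest; assumed to match Table 1-9.
BRANCH_PLATFORMS = ["SRX100", "SRX210", "SRX240", "SRX650"]
SRX100_MAX_ACCESS_POINTS = 2


def ax411_limits():
    """Return the maximum number of managed AX411 devices per platform."""
    limits = {}
    count = SRX100_MAX_ACCESS_POINTS
    for platform in BRANCH_PLATFORMS:
        limits[platform] = count
        count *= 2  # each larger platform doubles the previous limit
    return limits


for platform, limit in ax411_limits().items():
    print(f"{platform}: up to {limit} AX411 access points")
```

Starting from two and doubling three times ends at 16 on the SRX650, which matches the figure quoted in the text.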

CX111

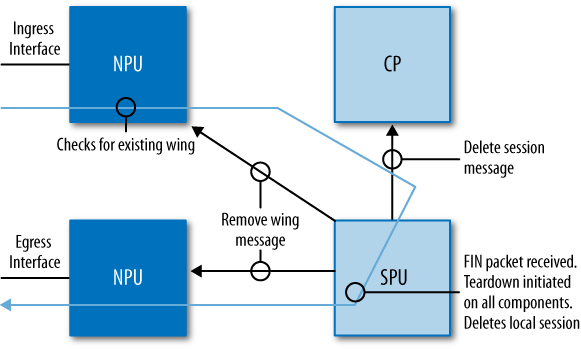

The CX111 Cellular Broadband Data Bridge (see Figure 1-27) can be used in conjunction with the branch SRX Series products. The CX111 is designed to accept a 3G (or cellular) modem and then provide access to the Internet via a wireless carrier. It supports wireless cards from about 40 different manufacturers and can hold up to three USB wireless cards and one express card. Access to the various wireless providers can be always-on or dial-on-demand.